How To Run Ollama On F5 AppStack With An NVIDIA GPU In AWS

If you're just getting started with AI, you'll want to watch this one, as Michael Coleman shows Aubrey King, from DevCentral, how to run Ollama on F5 AppStack on an AWS instance with an NVIDIA Tesla T4 GPU. You'll get to see the install, what it looks like when a WAF finds a suspicious conversation and even a quick peek at how Mistral handles a challenge differently than Gemma.
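For readers who want to try the Mistral-versus-Gemma comparison themselves, here is a minimal sketch (not taken from the video) that sends one prompt to an Ollama server over its REST API. It assumes Ollama is listening on its default local address and that models tagged `mistral` and `gemma` have already been pulled. The helper names `build_generate_request` and `ask` are hypothetical.

```python
import json
import urllib.request

# Assumption: Ollama's default local endpoint; adjust host/port for your deployment.
OLLAMA_URL = "http://localhost:11434/api/generate"


def build_generate_request(model, prompt):
    """Build (but do not send) a non-streaming generate request for one model."""
    payload = {"model": model, "prompt": prompt, "stream": False}
    return urllib.request.Request(
        OLLAMA_URL,
        data=json.dumps(payload).encode("utf-8"),
        headers={"Content-Type": "application/json"},
        method="POST",
    )


def ask(model, prompt, timeout=120):
    """Send the prompt and return the model's full text answer."""
    request = build_generate_request(model, prompt)
    with urllib.request.urlopen(request, timeout=timeout) as response:
        return json.loads(response.read())["response"]
```

Calling `ask("mistral", prompt)` and `ask("gemma", prompt)` with the same prompt gives two answers to compare side by side. If a WAF or other proxy sits in front of Ollama, point `OLLAMA_URL` at the protected address instead.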

Action Items in OMB Memorandum M-22-09 “Moving the U.S. Government Toward Zero Trust...”

Purpose

On January 26, 2022, OMB issued Memorandum M-22-09 for “Moving the U.S. Government Toward Zero Trust Cybersecurity Principles” listing a series of action items. This blog provides an overview of F5 capabilities and where they fit within those action items.

Milestone Dates

| Date | Milestone |
|---|---|
| January 26, 2022 | Issuance of M-22-09 “Moving the U.S. Government Toward Zero Trust Cybersecurity Principles” |
| February 25, 2022 | Agencies to designate and identify a zero trust strategy implementation lead for their organization |
| March 27, 2022 | Submit to OMB and CISA an implementation plan for FY22-FY24 |
| May 27, 2022 | Chief Data Officers to develop a set of initial categorizations for sensitive electronic documents within their enterprise |
| January 26, 2023 | Public-facing agency systems that support MFA must give users the option of using phishing-resistant authentication |
| January 26, 2023 | Each agency must select at least one FISMA Moderate system that requires authentication and make it Internet accessible |
| August 27, 2022 | Agencies must reach the first event logging maturity level (EL-1) as described in Memorandum M-21-31 |
| End of FY2024 | Agencies to achieve specific zero trust security goals |

Requirements to F5 Capability Mapping

| Page | Requirements | F5 Capabilities | F5 Products |
|---|---|---|---|
| 6 | Section A.1: “Enterprise identity management must be compatible with common applications and platforms. As a general matter, users should be able to sign in once and then directly access other applications and platforms within their agency’s IT infrastructure. Beyond compatibility with common applications, an agency identity management program should facilitate integration among agencies and with externally operated cloud services; the use of modern, open standards often promotes such integration.” | Proxies and transforms the client-side authentication method to adapt to the application’s native authentication method. Modern authentication can now be applied to legacy web applications without any changes. | BIG-IP APM, NGINX |
| 7 | Section A.2: Agencies must integrate and enforce MFA across applications involving authenticated access to Federal systems by agency staff, contractors, and partners. | MFA, including PIV, can be applied to any applications, whether legacy or modern, without changes. | BIG-IP APM |
| 7 | Section A.2: MFA should be integrated at the application layer | MFA, including PIV, can be applied to any applications, whether legacy or modern, without changes. | BIG-IP APM |
| 7 | Section A.2: guessing weak passwords or reusing passwords obtained from a data breach | Finds compromised credentials in real-time, identifies botnets, and blocks simulation software. | F5 Distributed Cloud Services |
| 7 | Section A.2: many approaches to multi-factor authentication will not protect against sophisticated phishing attacks, which can convincingly spoof official applications and involve dynamic interaction with users. Users can be fooled into providing a one-time code or responding to a security prompt that grants the attacker account access. These attacks can be fully automated and operate cheaply at significant scale. | Finds compromised credentials in real-time, identifies botnets, and blocks simulation software. | F5 Distributed Cloud Services |
| 9 | Section A.3: every request for access should be evaluated to determine whether it is appropriate, which requires the ability to continuously evaluate any active session | After a session starts, a per-request policy runs each time the client makes an HTTP or HTTPS request. A per-request policy must provide the logic for determining how to process web-bound traffic. It must determine whether to allow or reject a URL request and control whether or not to bypass SSL traffic. | BIG-IP APM, NGINX |
| 10 | Section A.3: Agency authorization systems should work to incorporate at least one device-level signal alongside identity information about the authenticated user when regulating access to enterprise resources | High-efficacy digital fingerprinting identifies returning web client patterns from new edge devices. | F5 Distributed Cloud Services |
| 12 | Section C.1: Agencies should make heavy internal use of recent versions of standard encryption protocols, such as TLS 1.3 | Regardless of your TLS version, you need to have visibility into encrypted threats to protect your business. SSL/TLS-based decryption devices that allow for packet inspection can intercept encrypted traffic, then decrypt, inspect, and re-encrypt untrusted TLS traffic entering or leaving the network. | BIG-IP LTM, BIG-IP SSLO, NGINX |
| 13 | Section C.1: agencies should plan for cryptographic agility in their network architectures, in anticipation of continuing to adopt newer versions of TLS | Organizations don’t want to reconfigure hundreds of servers just to offer these new protocols. This is where transformational services become cipher agility. Cipher agility is the ability of an SSL device to offer multiple cryptographic protocols such as ECC, RSA2048, and DSA at the same time, even on the same virtual server. | BIG-IP SSLO |
| 13 | Section C.2: agency DNS resolvers must support standard encrypted DNS protocols (DNS-over-HTTPS or DNS-over-TLS). NOTE: DNSSEC does not encrypt DNS data in transit. DNSSEC can be used to verify the integrity of a resolved DNS query, but does not provide confidentiality. | DoH proxy: A passthrough proxy that proxies the client’s DoH request to a backend DoH server and the backend DoH server’s response back to the DoH client. DoH server: The server translates the client’s DoH request into a standard DNS request and forwards the DNS request using TCP or User Datagram Protocol (UDP) to the configured DNS server, such as the BIG-IP named process and the BIG-IP DNS cache feature. When the BIG-IP system receives a response from the configured DNS server, it translates the DNS response into a DoH response before sending it to the DoH client. | BIG-IP DNS |
| 14 | Section C.3: Zero trust architectures, and this strategy, require agencies to encrypt all HTTP traffic, including within their environments. | Handle SSL traffic in load balancing scenarios and meet most of the security requirements effectively. The 3 common SSL configurations that can be set up on an LTM device are: SSL Offloading; SSL Passthrough; Full SSL Proxy / SSL Re-Encryption / SSL Bridging / SSL Terminations. | BIG-IP LTM, BIG-IP SSLO, NGINX |
| 18 | Section D.4: Making applications internet-accessible in a safe manner, without relying on a virtual private network (VPN) or other network tunnel | Proxy-based access controls deliver a zero-trust platform for internal and external application access. That means applications are protected while extending trusted access to users, devices, and APIs. | BIG-IP LTM, BIG-IP APM |
| 18 | Section D.4: require agencies to put in place minimum viable monitoring infrastructure, denial of service protections, and an enforced access-control policy | Integrate with SIEM for agency-wide monitoring or use the F5 management platform for greater visibility. On-prem DDoS works in conjunction with the cloud service to protect from various attack strategies. | BIG-IQ, BIG-IP AFM, BIG-IP ASM, F5 Distributed Cloud DDOS |
| 19 | Section D.6: Automated, immutable deployments support agency zero trust goals | Built-in support for automation and orchestration to work with technologies like Ansible, Terraform, Kubernetes and public clouds. | BIG-IP, NGINX |
| 20 | Section D.6: Agencies should work toward employing immutable workloads when deploying services, | Built-in support for automation and orchestration to work with technologies like Ansible, Terraform, Kubernetes and public clouds. | BIG-IP, NGINX |
| 24 | Section F.1: Agencies are undergoing a transition to IPv6, as described in OMB Memorandum M-21-07, while at the same time migrating to a zero trust architecture | The BIG-IP device is situated between the clients and the servers to provide the applications the clients use. In this position (the strategic point of control) the BIG-IP device provides virtualization and high availability for all application services, making several physical servers look like a single entity behind the BIG-IP device. This virtualization capability provides an opportunity to start migrating either clients or servers, or both simultaneously, to IPv6 networks without having to change clients, application services, and both sides of the network all at once. | BIG-IP LTM, NGINX |
| 24 | Section F.2: OMB Memorandum M-19-17 requires agencies to use PIV credentials as the “primary” means of authentication used for Federal information systems | PIV authentication can be applied to any applications, whether legacy or modern, without changes. | BIG-IP APM |
| 25 | Section F.3: Current OMB policies neither require nor prohibit inline decryption of enterprise network traffic | SSL Orchestrator is designed and purpose-built to enhance SSL/TLS infrastructure, provide security solutions with visibility into SSL/TLS encrypted traffic, and optimize and maximize your existing security investments. | BIG-IP SSLO |
| 25 | Section F.4: This memorandum expands the scope of M-15-13 to encompass these internal connections. NOTE: M-15-13 specifically exempts internal connections, stating, “[T]he use of HTTPS is encouraged on intranets, but not explicitly required.” | SSL Orchestrator delivers dynamic service chaining and policy-based traffic steering, applying context-based intelligence to encrypted traffic handling to allow you to intelligently manage the flow of encrypted traffic across your entire security stack, ensuring optimal availability. | BIG-IP LTM, BIG-IP SSLO, NGINX |

Your F5 Account Team Can Help

Every US Federal agency has a dedicated F5 account team to support the mission. They are ready to discuss F5 capabilities and help provide further information for your Zero Trust implementation plan. Please contact your F5 account team directly or use this contact form.

Q/A with itacs GmbH's Kai Wilke - DevCentral's Featured Member for February

Kai Wilke is a Principal Consultant for IT Security at itacs GmbH – a German consulting company located in Berlin specializing in Microsoft security solutions, SharePoint deployments, and customizations as well as classical IT consulting. He is also a 2017 DevCentral MVP and DevCentral’s Featured Member for February!

For almost 20 years in IT, he’s constantly explored the evens and odds of various technologies, including different operating systems, SSO and authentication services, RBAC models, PKI and cryptography components, HTTP-based services, proxy servers, firewalls, and core networking components. His focus in these areas has always been security related and included the design, implementation and review of secure and high availability/high performance datacenters.

DevCentral got a chance to talk with Kai about his work, life and mastery of iRules.

DevCentral: You’ve been a very active contributor to the DevCentral community, and we wondered what keeps you involved?

Kai: Working with online communities has always been an important thing for me, and it began a long time ago within the good old Usenet and the predecessor of the Darknet. Before joining the F5 community, I was also once an honored member of the Microsoft Online Community and was awarded five times as a Microsoft MVP for Enterprise Security and Microsoft-related firewall/proxy server technologies. My opinion is that if you want to become an expert in a certain technology or product, you should not just learn THE-ONE straightforward method fetched from manuals, guides or even exams. Instead, you have to dive deeply into all of those edge scenarios and learn all the uncountable ways to mess things up. And dealing with the questions and problems of other peers is probably the best catalyst to gain that kind of experience. Besides that, the quality of the DevCentral content and the knowledge of other community members are absolutely astonishing. It is simply a lot of fun for me to work within the DevCentral community and to learn a little bit more every day…

DC: Tell us a little about the areas of BIG-IP expertise you have.

KW: Over the years, I successfully implemented BIG-IP LTM, APM, ASM, and DNS Service deployments for our customers. Technologically, I internalized TMOS and its architecture very well, and I pretty much learned how to write simple but also somewhat complex iRules to control the delivery of arbitrary data on their way from A to B in any possible fashion.

DC: You are a Principal Consultant for IT Security at itacs GmbH - a German consulting company. Can you describe your typical workday?

KW: Because of my history with Microsoft-related infrastructures, my current workload is pretty versatile. Many of my current projects are still settled in the Microsoft / Windows system environment and cover the design and review of security-related areas. Right now, I’m working with several DAX companies and also IaaS, PaaS and SaaS service providers to analyze their Active Directory and System Management infrastructures and to design and implement a very unique, fundamental and comprehensive security concept to counter those dreaded PtH (Pass-the-Hash) and APT (Advanced Persistent Threat) attacks we are facing these days.

Over the last years, my F5 customer base has steadily grown, so I would say my work is a 50:50 mix right now. I do F5 workshops, designs, implementations, second and third level support as well as configuration reviews and optimization of existing environments. I work with some big web 2.0 customers that have the demand to pretty much exhaust all the capabilities of an F5. This challenges me as a network architect and as an ADC developer. I realize every day that working with F5 products is so much more fun than any Microsoft product I have ever dealt with. So in the future, I will put even more of my focus on F5!

DC: Describe one of your biggest BIG-IP challenges and how DevCentral helped in that situation.

KW: In my opinion, the F5 products themselves are not that challenging – but sometimes the underlying technologies and the detailed project requirements are. But as long as those requirements can be drawn and explained on a sheet of paper, I am somewhat confident that the BIG-IP platform is able to support the requirements – thanks to the F5 developers who have created a platform which is not purely scenario driven but rather supports a comprehensive list of RFC standards which can be combined as needed.

For example, one of my largest customers operates an affiliate resource tracking system with three billion web requests per day and a pretty aggressive session setup rate during peak hours. I have designed and implemented their BIG-IP LTM platform to offload SSL encryption and the TCP connection handling for various backend systems using well selected and performance optimized settings.

Other scenarios require slightly more complex content switching, the selective use of pre-authentication and/or combination with IDS/IPS systems. To support those requirements, I developed a very granular and scalable iRule administration framework which is able to simplify the configuration by using rather easy-to-use iRule configuration files (operated by non-TCL developers) which will then trigger the much more complex iRule code (written and tested by TCL developers) as needed.

The latest version of my iRule administration framework (which is currently under testing/development) will be able to support a couple thousand websites on a single Virtual Server, where each website can trigger handcrafted TCL code blocks as needed, but without adding linear or even exponential overhead to the system as the regular iRule approaches would do. The core and the configuration files of the latest version are heavily based on TCL procedures to create a very flexible code base and also conditional control structures, but completely without calling any TCL procedures during runtime, to boost the performance dramatically. Sounds interesting? Then stay tuned, I am sure I will publish this framework to the CodeShare once it’s stable enough… 😉

DC: Lastly, if you weren’t an IT admin – what would be your dream job? Or better, when you were a kid – what did you want to be when you grew up?

KW: I was typing my first assembler code out of a C64 magazine at the age of 10, so I have really wanted to be a developer and/or IT admin since then. But besides my current job, I can also imagine being a racecar driver. I really have petrol in my blood and pretty much enjoy driving on the German Autobahn. As an alternative, I could also imagine being a cook. I really love cooking and enjoy awesome food!

DC: Thanks Kai! Just don't fire up that sterno while shifting gears!!

Check out all of Kai’s DevCentral contributions and check out their blog websites: ops365.de, flow365.de and brandmysharepoint.de.

What is BIG-IP Next?

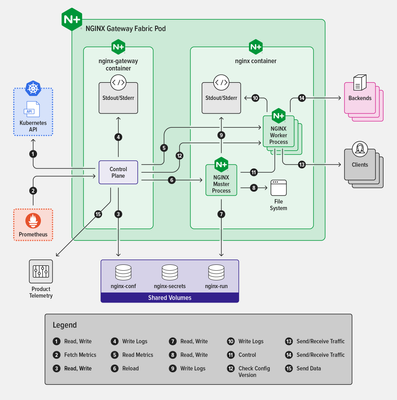

BIG-IP Next LTM and BIG-IP Next WAF hit general availability back in October, and we hit the road for a tour around North America for its arrival party! Those who attended one of our F5 Academy sessions got a deep-dive presentation into BIG-IP Next conceptually, and then a lab session to work through migrating workloads and deploying them. I got to attend four of the events and discuss with so many fantastic community members what's old, what's new, what's borrowed, what's blue...no wait--this is no wedding! But for those of us who've been around the block with BIG-IP for a while, if not married to the tech, we definitely have a relationship with it, for better and worse, right? And that's earned. So any time something new, or in our case "Next" comes around, there's risk and fear involved personally. But don't fret. Seriously. It's going to be different in a lot of ways, but it's going to be great. And there are a crap-ton (thank you Mark Rober!) of improvements that, once we all make it through the early stages, we'll embrace and wonder why we were even scared in the first place.

So with all that said, will you come on the journey with me? In this first of many articles to come from me this year, I'll cover the high-level basics of what is so next about BIG-IP Next, and in future entries we'll be digging into the tech and learning together.

BIG-IP and BIG-IP Next Conceptually - A Comparison

BIG-IP has been around since before the turn of the century (which is almost old enough to rent a car here in the United States) and this year marks the 20-year anniversary of TMOS. That the traffic management microkernel (TMM) is still grokking like a boss all these years later is a testament to that early innovation! So whereas TMOS as a system is winding down, its heart, TMM, will go on (cue sappy Celine Dion ditty in 3, 2, 1...)

Let's take a look at what was and what is. With TMOS, the data plane and control plane compete for resources as it's one big system. With BIG-IP Next, the separation of duties is more explicit and intentionally designed to scale on the control plane. Also, the product modules are no longer either completely integrated in TMM or plugins to TMM, but rather, isolated to their own container structures. The image above might convey the idea that LTM or WAF or any of the other modules are single containers, but that's just shown that way for brevity. Each module is an array of containers. But don't let that scare you. The underlying Kubernetes architecture is an abstraction that you may--but certainly are not required to--care about. TMM continues to be its awesome TMM self.

The significant change operationally is how you interact with BIG-IP. With TMOS, historically you engage directly with each device, even if you have some other tools like BIG-IQ or third-party administration/automation platforms. With BIG-IP Next, everything is centralized on Central Manager, and the BIG-IP Next instances, whether they are running on rSeries, VELOS, or Virtual Edition, are just destinations for your workloads. In fact, outside of sidecar proxies for troubleshooting, instance logins won't even be supported! Yes, this is a paradigm shift. With BIG-IP Next, you will no longer be configuration-object focused. You will be application-focused. You'll still have the nerd-knobs to tweak and turn, but they'll be done within the context of an application declaration.

If you haven't started your automation journey yet, you might not be familiar with AS3. It's been out now for years and works with BIG-IP to deploy applications declaratively. Instead of following a long pre-flight checklist with 87 steps to go from nothing to a working application, you simply define the parameters of your application in a blob of JSON data and click the easy button. For BIG-IP Next, this is the way.
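To make "a blob of JSON data" concrete, here is a minimal sketch of what a simple HTTP application declaration can look like, built in Python so it can be inspected before it is sent anywhere. The class names (`AS3`, `ADC`, `Tenant`, `Application`, `Service_HTTP`, `Pool`) follow the classic BIG-IP AS3 schema as I understand it. The tenant name, application name, and addresses are made-up placeholders, and the helper `build_as3_declaration` is hypothetical. Check the result against the AS3 schema reference for your version, particularly on BIG-IP Next.

```python
import json


def build_as3_declaration(tenant, app, virtual_address, pool_members):
    """Return a minimal AS3 request body describing one HTTP application."""
    return {
        "class": "AS3",
        "action": "deploy",
        "declaration": {
            "class": "ADC",
            "schemaVersion": "3.0.0",
            "id": f"{tenant}-{app}",
            tenant: {
                "class": "Tenant",
                app: {
                    "class": "Application",
                    "template": "generic",
                    # The virtual server: listens on the given address, sends to the pool.
                    "service": {
                        "class": "Service_HTTP",
                        "virtualAddresses": [virtual_address],
                        "pool": "web_pool",
                    },
                    # The pool: backend servers plus a basic HTTP health monitor.
                    "web_pool": {
                        "class": "Pool",
                        "monitors": ["http"],
                        "members": [
                            {"servicePort": 80, "serverAddresses": list(pool_members)}
                        ],
                    },
                },
            },
        },
    }


# Placeholder values from the documentation address ranges.
declaration = build_as3_declaration(
    tenant="Example_Tenant",
    app="Example_App",
    virtual_address="192.0.2.10",
    pool_members=["198.51.100.11", "198.51.100.12"],
)
print(json.dumps(declaration, indent=2))
```

The point of the shape is that the whole application (virtual server, pool, monitor) lives in one document scoped by tenant and application, rather than being a sequence of individual object-creation steps.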
Now, in the Central Manager GUI, you might interact with FAST templates that deliver a more traditional view into configuring applications, but the underlying configuration engine is all AS3. For more, I hosted a series of streams in December to introduce AS3 Foundations; I highly recommend you take the time to digest the basics.

Benefits I'm Excited About

There are many and you can read about them on the product page on F5.com. But here's my short list:

- API-first. Period. BIG-IP had APIs with iControl from the era before APIs were even cool, but they were not first-class citizens. The resulting performance at scale requires effort to manage effectively. Not only performance, but feature parity among iControl REST, iControl SOAP, tmsh, and the GUI has been a challenge because of the way development occurred over time. Not so with BIG-IP Next. Everything is API-first, so all tooling is able to consume everything. This is huge!
- Migration assistance. Central Manager has the JOURNEYS tool on steroids built in to the experience. Upload your UCS, evaluate your applications to see what can be migrated without updates, and deploy! It really is that easy. Sure, there's work to be done for applications that aren't fully compatible yet, but it's a great start. You can do this piece (and I recommend that you do) before you even think about deploying a single instance, just to learn what work you have ahead of you and what solutions you might need to adapt to be ready.
- Simplified patch/upgrade process. If you know, you know...patches are upgrades with BIG-IP, and not in place at that. This is drastically improved with BIG-IP Next! Because of the containerized nature of the system, individual containers can be targeted for patching, and depending on the container, may not even require a downtime consideration.
- Release cycle. A more frequent release cadence might terrify the customers among us that like to space out their upgrades to once every three years or so, but for the rest of us, feature delivery to the tune of weeks instead of twice per year is an exciting development (pun intended!)

Features I'm Excited About

- Versioning for iRules and policies. For those of us who write/manage these things, this is huge! Typically I'd version by including it in the title, and I know some who set release tags in repos. With Central Manager, it's built-in and you can deploy iRules and policies by version and do diffs in place. I'm super excited about this!
- Did I mention the API? On the API front...it's one API, for all functionality. No digging and scraping through the GUI, tmsh, iControl REST, iControl SOAP, or building out a node.js app to deploy a custom API endpoint with iControl LX, if even possible with some of the modules like APM or ASM. Nope, it's all there in one API. Glorious.
- Centralized dashboards. This one is for the Ops teams! Who among us has spent many a day building custom dashboards to consume stats from BIG-IPs across your org to have a single pane of glass to manage? I for one, and I'm thrilled to see system, application, and security data centralized for analysis and alerting.
- Log/metric streaming. And finally, logs and metrics! Telemetry Streaming from the F5 Automation Toolchain doesn't come forward in BIG-IP Next, but the ideas behind it do. If you need your data elsewhere from Central Manager, you can set up remote logging with OpenTelemetry (see the link in the resources listed below for a first published example of this.)

There are some great features coming with DNS, Access, and all the other modules when they are released as well. I'll cover those when they hit general availability.

Let's Go!

In the coming weeks, I'll be releasing articles on installation and licensing walk-throughs for Central Manager and the instances, and content from our awesome group of authors is already starting to flow as well. Here are a few entries you can feast your eyes on, including an instance Proxmox installation:

- For the Kubernetes crowd, BIG-IP Next CNF Solutions for Red Hat OpenShift
- Installing BIG-IP Next Instance on Proxmox
- Remote Logging with BIG-IP Next and OpenTelemetry

Are you ready? Grab a trial license from your MyF5 dashboard and get going! And make sure to join us in the BIG-IP Next Academy group here on DevCentral. The launch team is actively engaged there for Next-related questions/issues, so that's the place to be in your early journey! Also...if you want the ultimate jump-start for all things BIG-IP Next, join us at AppWorld 2024 in San Jose next month!

FastNetMon and F5 Distributed Cloud (XC): An enhanced DDoS strategy.

In the world of DDoS, the more help the better when trying to thwart attacks. F5 recently partnered with FastNetMon to provide that extra help for those who want to use their own detection mechanisms but use cloud scrubbing for mitigation.

So who IS FastNetMon? Founded in 2016, they focus on developing scalable DDoS detection and mitigation software that can run on any network. FastNetMon has a presence in 134 countries, which is impressive given their humble beginnings in the open-source community. They believe every business needs DDoS protection and, like F5, pride themselves on helping protect businesses against the inevitable (not if, but when) DDoS attack. FastNetMon offers a variety of ways to deploy at the customer edge, from containers to virtual machines.

Using the same scripting model that was previously used for legacy Silverline, F5 has recently enlisted FastNetMon again, but this time for Distributed Cloud (XC).

What is the partnership all about? In a nutshell, FastNetMon created an integration that, upon detecting a DDoS attack, can announce the targeted /24 prefix to the Distributed Cloud scrubbing centers and can later withdraw that same route, using an F5 XC API. The benefits of this integration are many, including completely automated logic to rapidly detect, announce, and withdraw the attacked prefix.

For this to work, there are two things to note: an API service credential needs to be created, and permissions need to be set within the tenant for FastNetMon to operate.

Links:

- Deployment model documentation
- Integration documentation

Security Automation with F5 BIG-IP and Event Driven Ansible

Updated (September 19th 2023)

INTRODUCTION TO EVENT DRIVEN SECURITY:

Event Driven Security is one of the projects I have been working on for the last year or so. The idea of creating automated security that can react similarly to how I would react in situations is fascinating to me, and then comes the BIG question.... "Can I code it?"

Originally, our solution utilized ELK (Elasticsearch, Logstash, Kibana), where Elasticsearch was my logging and monitoring tool, Kibana was the frontend GUI for helping me visualize and set up my watchers for my webhook triggers, and Logstash was an intermediary that received my webhooks to help me execute Ansible-related code. While using Logstash, if the Ansible code was simple it had no issues; however, when things got more complex (i.e., taking payloads from Elastic and feeding them through Logstash to my playbooks), I would sometimes get intermittent results. Some of this could be my lack of knowledge of the software, but for me it needed to be simple!

As I wanted to become more complex with my Event Driven Security, I needed a product that would follow those needs. And luckily, in October 2022, that product was announced: "Event Driven Ansible". It meant I didn’t need Logstash anymore; I could call Ansible-related code directly. It even took in webhooks (JSON based) to trigger the code, so I was already halfway there!

CODE FOR EVENT DRIVEN SECURITY:

Now that I have set up the preface, let’s get down to the good stuff! I have set up a GitHub repository for the code I have been testing with, https://github.com/f5devcentral/f5-bd-ansible-eda-demo, which is free for all to use, so please feel free to take/fork/expand!!!

There are some cool things worth noting in the code, specifically the transformation of the watch code into something usable in playbooks. This code takes every hit the watcher finds for its filter, copies the source IP from each hit into a comma-separated list, and then sends the list as a variable within the webhook, along with the message to execute the code.

Here is the code mentioned above for transforming and sending the payloads in an Elastic watcher. See the full code in the GitHub repo. (GitHub Repo --> elastic --> watch_blocked_ips.json)

```
"actions": {
  "logstash_exec": {
    "transform": {
      "script": {
        "source": """
          def hits = ctx.payload.hits.hits;
          def transform = '';
          for (hit in hits) {
            transform += hit._source.src_ip;
            transform += ', '
          }
          return transform;
        """,
        "lang": "painless"
      }
    },
    "webhook": {
      "scheme": "http",
      "host": "10.1.1.12",
      "port": 5000,
      "method": "post",
      "path": "/endpoint",
      "params": {},
      "headers": {},
      "body": """{
        "message": "Ansible Please Block Some IPs",
        "payload": "{{ctx.payload._value}}"
      }"""
    }
  }
}
}
```

In the Ansible Rulebook, the big thing to note is that from the Pre-GA code (which was all CLI ansible-rulebook based) to the GA version (EDA GUI), rulebooks are now set up to call Ansible Automation Platform (AAP) templates. In the code below you can see that it's looking for an existing template "Block IPs" in the organization "Default" to be able to run correctly. (GitHub Repo --> rulebooks --> webhook-block-ips.yaml)

```yaml
---
- name: Listen for events on a webhook
  hosts: all

  ## Define our source for events
  sources:
    - ansible.eda.webhook:
        host: 0.0.0.0
        port: 5000

  ## Define the conditions we are looking for
  rules:
    - name: Block IPs
      condition: event.payload.message == "Ansible Please Block Some IPs"
      action:
        run_job_template:
          name: "Block IPs"
          organization: "Default"
```

For my template setup in Ansible Automation Platform 2.4.x, there is one CRITICAL piece of information I want to share about using EDA GA with AAP 2.4: within the template you MUST tick the "Prompt on launch" checkbox in the "Variables" section. This allows the payload from EDA (given to it from Elastic) to pass on to the playbook.

In the playbook you can see how we extract the payload from the event using the ansible_eda variable. This allows us to pull in the event that was sent from Elastic to Event Driven Ansible and then on to the Ansible Automation Platform template, and to narrow it down to the specific fields we need (message and payload). From there we create an array from that payload so we can pass it along to our F5 code to start adding blocked IPs to the WAF policy. (GitHub Repo --> playbooks --> block-ips.yaml)

```yaml
---
- name: ASM Policy Update with Blocked IPs
  hosts: lb
  connection: local
  gather_facts: false

  vars:
    Blocked_IPs_Events: "{{ ansible_eda.event.payload }}"
    F5_VIP_Name: VS_WEB
    F5_VIP_Port: "80"
    F5_Admin_Port: "443"
    ASM_Policy_Name: "WAF-POLICY"
    ASM_Policy_Directory: "/tmp/f5/"
    ASM_Policy_File: "WAF-POLICY.xml"

  tasks:
    - name: Setup provider
      ansible.builtin.set_fact:
        provider:
          server: "{{ ansible_host }}"
          user: "{{ ansible_user }}"
          password: "{{ ansible_password }}"
          server_port: "{{ F5_Admin_Port }}"
          validate_certs: "no"

    - name: Blocked IP Events From EDA
      debug:
        msg: "{{ Blocked_IPs_Events.payload }}"

    # The watcher joins IPs with ', ' and leaves a trailing separator
    - name: Create Array from BlockedIPs
      ansible.builtin.set_fact:
        Blocked_IPs: "{{ Blocked_IPs_Events.payload.split(', ') }}"
      when: Blocked_IPs_Events is defined

    - name: Remove Last Object from Array which is empty array object
      ansible.builtin.set_fact:
        Blocked_IPs: "{{ Blocked_IPs[:-1] }}"
      when: Blocked_IPs_Events is defined

...
```

All of this combined creates a well-oiled setup. With the code and the flows set up, we can now create proactive, event-based security!

Here is the flow of the code that is in the GitHub repo when executed:

1. The F5 BIG-IP pushes all the monitoring logs to Elastic.
2. Elastic takes all that data and stores it while utilizing a watcher with its filters and criteria.
3. The watcher finds something that matches its criteria and sends the webhook with payload to Event Driven Ansible.
4. Event Driven Ansible's rulebook triggers and calls a template within Ansible Automation Platform, sending along the payload given to it from Elastic.
5. Ansible Automation Platform's template executes a playbook to secure the F5 BIG-IP using the payload given to it from EDA (originally from Elastic).

In the end we go full circle, starting from the F5 BIG-IP and ending at the F5 BIG-IP!

Full Demonstration Video:

Our full demonstration video, recently posted (Sept 13th 2023), is available on-demand via https://www.f5.com/company/events/webinars/f5-and-red-hat-3-part-demo-series. This page does require registration, and you can check out our 3-part series. The one related to this lab is "Event-Driven Automation and Security with F5 and Red Hat Ansible".

Proactive Security with F5 & Event Driven Ansible Video Demo

LINKS TO CODE:

https://github.com/f5devcentral/f5-bd-ansible-eda-demo

Where are F5's archived deployment guides?

Archived F5 Deployment Guides

This article contains an index of F5’s archived deployment guides, previously hosted on F5 | Multi-Cloud Security and Application Delivery. They are all now hosted on cdn.f5.com.

Archived guides...

- are no longer supported and no longer being updated; they are provided for reference only.
- may refer to products or versions, by F5 or 3rd parties, that are end-of-life (EOL) or end-of-support (EOS).
- may refer to iApp templates that are deprecated.

For current/updated iApps and FAST templates see myF5 K13422: F5-supported and F5-contributed iApp templates

Current F5 Deployment Guides

Deployment Guides (https://www.f5.com/resources/deployment-guides)

IMPORTANT: The guidance found in archived guides is no longer supported by F5, Inc. and is supplied for reference only. For assistance configuring F5 devices with 3rd party applications we recommend contacting F5 Professional Services here: Request Professional Services | F5

Archived Deployment Guide Index

Deployment Guide Name (links to off-platform) | Written for…
CA Bundle iApp | BIG-IP V11.5+, V12.X, V13
Microsoft Internet Information Services 7.0, 7.5, 8.0, 10 | BIG-IP V11.4 - V13: LTM, AAM, AFM
Microsoft Exchange Server 2016 | BIG-IP V11 - V13: LTM, APM, AFM
Microsoft Sharepoint 2016 | BIG-IP V11.4 - V13: LTM, APM, ASM, AFM, AAM
Microsoft Active Directory Federation Services | BIG-IP V11 - V13: LTM, APM
SAP Netweaver: ERP Central Component | BIG-IP V11.4: LTM, AAM, AFM, ASM
SAP Netweaver: Enterprise Portal | BIG-IP V11.4: LTM, AAM, AFM, ASM
Microsoft Dynamics CRM 2013 And 2011 | BIG-IP V11 - V13: LTM, APM, AFM
IBM Qradar | BIG-IP V11.3: LTM
Microsoft Dynamics CRM 2016 and 2015 | BIG-IP V11 - V13: LTM, APM, AFM
SSL Intercept V1.5 | BIG-IP V12.0+: LTM
IBM Websphere 7 | BIG-IP LTM, WEBACCELERATOR, FIREPASS
Microsoft Dynamics CRM 4.0 | BIG-IP V9.X: LTM
SSL Intercept V1.0 | BIG-IP V11.4+, V12.0: LTM, AFM
SMTP Servers | BIG-IP V11.4, V12.X, V13: LTM, AFM
Oracle E-Business Suite 12 | BIG-IP V11.4 - V13: LTM, AFM, AAM
HTTP Applications | BIG-IP V11.4 - V13: LTM, AFM, AAM
Amazon Web Services Availability Zones | BIG-IP LTM VE: V12.1.0 HF2+, V13
Oracle PeopleSoft Enterprise Applications | BIG-IP V11.4+: LTM, AAM, AFM, ASM
HTTP Applications: Downloadable iApp | BIG-IP V11.4 - V13: LTM, APM, AFM, ASM
Oracle Weblogic 12.1, 10.3 | BIG-IP V11.4: LTM, AFM, AAM
IBM Lotus Sametime | BIG-IP V10: LTM
Analytics | BIG-IP V11.4 - V14.1: LTM, APM, AAM, ASM, AFM
Cacti Open Source Network Monitoring System | BIG-IP V10: LTM
NIST SP-800-53R4 Compliance | BIG-IP: V12
Apache HTTP Server | BIG-IP V11, V12: LTM, APM, AFM, AAM
Diameter Traffic Management | BIG-IP V10: LTM
Nagios Open Source Network Monitoring System | BIG-IP V10: LTM
F5 BIG-IP APM With IBM, Oracle and Microsoft | BIG-IP V10: APM
Apache Web Server | BIG-IP V9.4.X, V10: LTM, WA
DNS Traffic Management | BIG-IP V10: LTM
Diameter Traffic Management | BIG-IP V11.4+, V12: LTM
Citrix XenDesktop | BIG-IP V10: LTM
F5 As A SAML 2.0 Identity Provider For Common SaaS Applications | BIG-IP V11.3+, V12.0
Apache Tomcat | BIG-IP V10: LTM
Citrix Presentation Server | BIG-IP V9.X: LTM
Npath Routing - Direct Server Return | BIG-IP V11.4 - V13: LTM
Data Center Firewall | BIG-IP V11.6+, V12: AFM, LTM
Citrix XenApp Or XenDesktop iApp V2.3.0 | BIG-IP V11, V12: LTM, APM, AFM
Citrix XenApp Or XenDesktop | BIG-IP V10.2.1: APM

Introducing F5 BIG-IP Next CNF Solutions for Red Hat OpenShift

5G and Red Hat OpenShift

5G standards have embraced Cloud-Native Network Functions (CNFs) for implementing network services in software as containers. This is a big change from previous Virtual Network Functions (VNFs) or Physical Network Functions (PNFs). The main characteristics of Cloud-Native Functions are:

- Implementation as containerized microservices
- Small performance footprint, with the ability to scale horizontally
- Independence of guest operating system, since CNFs operate as containers
- Lifecycle manageable by Kubernetes

Overall, these provide a huge improvement in flexibility, speed of service delivery, and resiliency, and crucially they use Kubernetes as a unified orchestration layer. The latter is a drastic change from previous standards, where each vendor had its own orchestration. This unification around Kubernetes greatly simplifies network functions for operators, reducing the cost of deploying and maintaining networks. Additionally, embracing the container form factor allows Network Functions (NFs) to be deployed in new use cases like the far edge. This is thanks to the smaller footprint, while the horizontal scalability means the same functions can also be deployed at large scale in a central data center.

In this article we focus on Red Hat OpenShift, which is the market-leading and industry reference implementation of Kubernetes for IT and Telco workloads.

Introduction to F5 BIG-IP Next CNF Solutions

F5 BIG-IP Next CNF Solutions is a suite of Kubernetes-native 5G Network Functions, implemented as microservices. It shares the same Cloud Native Engine (CNE) as F5 BIG-IP Next SPK, introduced last year. The functionalities implemented by the CNF Solutions deal mainly with user plane data. User plane data has the particularity that the final destination of the traffic is not the Kubernetes cluster but rather an external endpoint, typically the Internet.
In other words, the traffic enters the Kubernetes cluster and is forwarded out of the cluster again. This is done using dedicated interfaces that are not used for the regular ingress and egress paths of a Kubernetes cluster. In this case, the main purpose of using Kubernetes is to make use of its orchestration, flexibility, and scalability.

The main functionalities implemented at the initial GA release of the CNF Solutions are:

- F5 Next Edge Firewall CNF, an IPv4/IPv6 firewall whose main focus is protecting the 5G core networks from external threats, including DDoS flood protection and IPS DNS protocol inspection.
- F5 Next CGNAT CNF, which offers large scale NAT with the following features:
  - NAPT, Port Block Allocation, Static NAT, Address Pooling Paired, and Endpoint Independent mapping modes.
  - Inbound NAT and Hairpinning.
  - Egress path filtering and address exclusions.
  - ALG support: FTP/FTPS, TFTP, RTSP and PPTP.
- F5 Next DNS CNF, which offers a transparent DNS resolver and caching services. Other remarkable features are:
  - Zero rating
  - DNS64, which allows IPv6-only clients to connect to IPv4-only services via synthetic IPv6 addresses.
- F5 Next Policy Enforcer CNF, which provides traffic classification, steering and shaping, and TCP and video optimization. This product is launched as Early Access in February 2023 with basic functionalities. Static TCP optimization is now GA in the initial release.

Although the CGNAT (Carrier Grade NAT) and the Policy Enforcer functionalities are specific to User Plane use cases, the Edge Firewall and DNS functionalities have additional uses in other places of the network.

F5 and OpenShift

BIG-IP Next CNF Solutions fully supports Red Hat OpenShift Container Platform, which allows deployment in edge or core locations with unified management across the multiple deployments. OpenShift operators greatly facilitate the setup and tuning of telco grade applications. These are:

- Node Tuning Operator, used to set up Hugepages.
- CPU Manager and Topology Manager with NUMA awareness, which allow the data plane PODs to be scheduled within a NUMA domain that is aligned with the SR-IOV NICs they are attached to.

In an OpenShift platform all of these are set up transparently to the applications, and BIG-IP Next CNF Solutions only need to be configured with an appropriate runtimeClass.

F5 BIG-IP Next CNF Solutions architecture

F5 BIG-IP Next CNF Solutions makes use of the widely trusted F5 BIG-IP Traffic Management Microkernel (TMM) data plane. This allows for a high performance, dependable product from the start. The CNF functionalities come from a microservices re-architecture of the broadly used F5 BIG-IP VNFs. The diagram below illustrates the microservices architecture used.

The data plane POD scales vertically from 1 to 16 cores and scales horizontally from 1 to 32 PODs, enabling it to handle millions of subscribers. NUMA nodes are supported.

The next diagram focuses on the data plane handling, which is the most relevant aspect for this CNF suite:

Typically, each data plane POD has two IP addresses, one for each side of the N6 reference point. These could be named the radio and Internet sides, as shown in the diagram above. The left-side L3 hop must distribute the traffic amongst the left-side addresses of the CNF data plane. This left-side L3 hop can be a router with BGP ECMP (Equal Cost Multi Path), an SDN or any other mechanism which is able to:

- Distribute the subscribers across the data plane PODs, shown in [1] of the figure above.
- Keep these subscribers in the same PODs when there is a change in the number of active data plane PODs (scale-in, scale-out, maintenance, etc.), as shown in [2] in the figure above. This minimizes service disruption.

On the right side of the CNFs, the path towards the Internet, it is typical to implement NAT functionality to translate the telco's private addresses to public addresses. This is done with the BIG-IP Next CG-NAT CNF.
This NAT makes the return traffic symmetrical by reaching the same POD which processed the outbound traffic. This is thanks to each POD owning part of this NAT space, as shown in [3] of the above figure. Each POD's NAT address space can be advertised via BGP. When NAT is not used on the right side of the CNFs, the network must be able to send the return traffic back to the same POD which is processing that connection. The traffic must be kept symmetrical at all times; this is typically done with an SDN.

Using F5 BIG-IP Next CNF Solutions

As expected in a fully integrated Kubernetes solution, both the installation and the configuration are done using the Kubernetes APIs. The installation is performed using Helm charts, and the configuration using Custom Resource Definitions (CRDs). Unlike ConfigMaps, CRDs allow for schema validation of the configurations before these are applied. Details of the CRDs can be found in this clouddocs site. An overview of the most relevant CRDs follows.

General network configuration

Deploying in Kubernetes automatically configures and assigns IP addresses to the CNF PODs. The data plane interfaces will require specific configuration. The required steps are:

- Create Kubernetes NetworkNodePolicies and NetworkAttachment definitions, which expose SR-IOV VFs to the CNF data plane PODs (TMM). To make use of these SR-IOV VFs, they are referenced in the BIG-IP controller's Helm chart values file. This is described in the Networking Overview page.
- Define the L2 and L3 configuration of the exposed SR-IOV interfaces using the F5BigNetVlan CRD.
- If static routes need to be configured, these can be added using the F5BigNetStaticroute CRD.
- If BGP configuration needs to be added, this is configured in the BIG-IP controller's Helm chart values file. This is described in the BGP Overview page. It is expected this will be configured using a CRD in the future.
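The NAT symmetry described earlier in this section, where each POD owns part of the NAT space so that return traffic reaches the POD that handled the outbound flow, can be pictured with a small sketch. This is only an illustration under stated assumptions, not how BIG-IP Next CNF allocates or advertises NAT pools: the pool `203.0.113.0/24`, the POD names `tmm-0` to `tmm-3`, and the helper functions `partition_nat_pool` and `owning_pod` are all hypothetical.

```python
import ipaddress


def partition_nat_pool(pool_cidr, pod_names):
    """Split a public NAT pool into equal subnets and give one to each POD.

    Hypothetical helper for illustration only: the pool is cut into the
    smallest power-of-two number of subnets that covers all PODs, and any
    leftover subnets stay unassigned.
    """
    pool = ipaddress.ip_network(pool_cidr)
    # Number of extra prefix bits needed to get at least len(pod_names) subnets.
    extra_bits = (len(pod_names) - 1).bit_length()
    subnets = list(pool.subnets(prefixlen_diff=extra_bits))
    return dict(zip(pod_names, subnets))


def owning_pod(ownership, public_ip):
    """Return the POD whose NAT subnet contains public_ip, or None."""
    addr = ipaddress.ip_address(public_ip)
    for pod, subnet in ownership.items():
        if addr in subnet:
            return pod
    return None


if __name__ == "__main__":
    # Assumed example values (documentation address range, made-up POD names).
    pods = ["tmm-0", "tmm-1", "tmm-2", "tmm-3"]
    ownership = partition_nat_pool("203.0.113.0/24", pods)
    for pod, subnet in ownership.items():
        print(pod, "owns", subnet)
    # Return traffic addressed to 203.0.113.70 belongs to the POD that owns
    # 203.0.113.64/26, so it lands on the POD that translated the flow.
    print(owning_pod(ownership, "203.0.113.70"))
```

Because each public address belongs to exactly one POD, advertising each POD's subnet (for example via BGP, as the article mentions) is enough for the upstream network to route return packets to the right POD without tracking connection state.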
Traffic management listener configuration

As with classic BIG-IP, once the CNFs are running and plumbed into the network, no traffic is processed by default. The traffic management functionalities implemented by BIG-IP Next CNF Solutions are the same as those of the analogous modules in classic BIG-IP, and the CRDs in BIG-IP Next that configure these functionalities are conceptually similar too.

Analogous to Virtual Servers in classic BIG-IP, BIG-IP Next CNF Solutions have a set of CRDs that create traffic listeners where traffic management policies are applied. The main one is the F5BigContextSecure CRD, which allows specifying traffic selectors indicating the VLANs, source and destination prefixes, and ports where we want the policies to be applied.

There are specific CRDs for listeners of Application Level Gateways (ALGs) and protocol specific solutions. These required several steps in classic BIG-IP: first creating the Virtual Server, then creating the profile and finally applying it to the Virtual Server. In BIG-IP Next this is done in a single CRD. At the time of this writing, these CRDs are:

- F5BigZeroratingPolicy - Part of Zero-Rating DNS solution; enabling subscribers to bypass rate limits.
- F5BigDnsApp - High-performance DNS resolution, caching, and DNS64 translations.
- F5BigAlgFtp - File Transfer Protocol (FTP) application layer gateway services.
- F5BigAlgTftp - Trivial File Transfer Protocol (TFTP) application layer gateway services.
- F5BigAlgPptp - Point-to-Point Tunnelling Protocol (PPTP) application layer gateway services.
- F5BigAlgRtsp - Real Time Streaming Protocol (RTSP) application layer gateway services.

Traffic management profiles and policies configuration

Depending on the type of listener created, different types of profiles and policies can be attached to it. In the case of F5BigContextSecure, the following CRDs can be attached to define how traffic is processed:

- F5BigTcpSetting - TCP options to fine-tune how application traffic is managed.
- F5BigUdpSetting - UDP options to fine-tune how application traffic is managed.
- F5BigFastl4Setting - FastL4 option to fine-tune how application traffic is managed.

and the following policies for security and NAT:

- F5BigDdosPolicy - Denial of Service (DoS/DDoS) event detection and mitigation.
- F5BigFwPolicy - Granular stateful-flow filtering based on access control list (ACL) policies.
- F5BigIpsPolicy - Intelligent packet inspection protects applications from malignant network traffic.
- F5BigNatPolicy - Carrier-grade NAT (CG-NAT) using large-scale NAT (LSN) pools.

The ALG listeners require the use of F5BigNatPolicy and might make use of the F5BigFwPolicy CRDs. These CRDs also have traffic selectors to allow further control over which traffic these policies should be applied to.

Firewall Contexts

Firewall policies are applied to the listener with the best match. In addition to the F5BigFwPolicy that might be attached to the listener, a global firewall policy (hence effective in all listeners) can be configured; it is evaluated before the listener specific firewall policy. This is done with the F5BigContextGlobal CRD, which can have an F5BigFwPolicy attached.

F5BigContextGlobal also contains the default action to apply to traffic not matching any firewall rule in any context (e.g. Global Context or Secure Context or another listener). This default action can be set to accept, reject or drop, and it can optionally be logged.

In summary, within a listener match, the firewall contexts are processed in this order:

1. ContextGlobal
2. Matching ContextSecure or another listener context.
3. Default action as defined by ContextGlobal's default action.

Event Logging

Event logging at high speed is critical to provide visibility of what the CNFs are doing. For this, the following CRDs are implemented:

- F5BigLogProfile - Specifies subscriber connection information sent to remote logging servers.
- F5BigLogHslpub - Defines remote logging server endpoints for the F5BigLogProfile.
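As a rough illustration of the firewall context ordering described in the Firewall Contexts section above (global context first, then the matching listener context, then the global default action), here is a minimal Python sketch. It is not the product's implementation: the `Rule` and `Policy` classes and the `evaluate_contexts` function are hypothetical, and the sketch assumes the first matching rule is final, which is a simplification that may not reflect the actual rule semantics of F5BigFwPolicy.

```python
from dataclasses import dataclass, field
from typing import Callable, List, Optional


@dataclass
class Rule:
    """One firewall rule: a match predicate plus an action (illustrative)."""
    matches: Callable[[dict], bool]
    action: str  # "accept", "reject" or "drop"


@dataclass
class Policy:
    """An ordered list of rules, standing in for a firewall policy."""
    rules: List[Rule] = field(default_factory=list)

    def evaluate(self, packet: dict) -> Optional[str]:
        # Assumption: the first matching rule decides; no match returns None.
        for rule in self.rules:
            if rule.matches(packet):
                return rule.action
        return None


def evaluate_contexts(packet, global_policy, listener_policy, default_action="drop"):
    """Walk the contexts in the order given in the article.

    1. Global context policy
    2. Matching listener context policy
    3. Default action configured on the global context
    """
    for policy in (global_policy, listener_policy):
        if policy is not None:
            action = policy.evaluate(packet)
            if action is not None:
                return action
    return default_action


if __name__ == "__main__":
    # Made-up example rules: block telnet globally, allow HTTPS on the listener.
    global_policy = Policy([Rule(lambda p: p["dst_port"] == 23, "drop")])
    listener_policy = Policy([Rule(lambda p: p["dst_port"] == 443, "accept")])
    for port in (23, 443, 8080):
        result = evaluate_contexts({"dst_port": port}, global_policy,
                                   listener_policy, default_action="reject")
        print(port, result)
```

The point of the sketch is only the ordering: a packet that matches nothing in either context falls through to the default action held by the global context.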
Demo

F5 BIG-IP Next CNF Solutions roadmap

What is presented here is just the beginning of a journey. Telcos have embraced Kubernetes as their compute and orchestration layer. Because of this, BIG-IP Next CNF Solutions will eventually replace the analogous classic BIG-IP VNFs. Expect that in the upcoming months BIG-IP Next CNF Solutions will match and eventually surpass the features currently offered by the analogous VNFs.

Conclusion

This article introduces a fully re-architected, scalable solution for Red Hat OpenShift, mainly focused on the telco user plane. This new microservices architecture offers flexibility, faster service delivery, resiliency and crucially the use of Kubernetes. Kubernetes is becoming the unified orchestration layer for telcos, simplifying infrastructure lifecycle and reducing costs. OpenShift represents the best-in-class Kubernetes platform thanks to its enterprise readiness and Telco specific features. The architecture of this solution, alongside the use of OpenShift, also extends network services use cases to the edge by allowing the deployment of Network Functions in a smaller footprint.

Please check the official BIG-IP Next CNF Solutions documentation for more technical details and check www.f5.com for a high level overview.

ForgeRock with F5 Distributed Cloud (XC) Account Protection and Authentication Intelligence

Introduction

Can you think of a person you know who has experienced fraud? Surveys suggest that 1 in 3 global consumers have experienced fraud in the past 3 months. For businesses that means higher churn, revenue loss and potential fines. F5 recognizes the impact on consumers and businesses when fraud occurs. As such, F5 XC's Account Protection and Authentication Intelligence services are now available with ForgeRock, empowering ForgeRock customers to slash fraud and abuse, increase top-line revenue and remove log-in friction for legitimate users.

Who is ForgeRock?

ForgeRock is a global leader in identity, orchestration and access decisioning. Specifically, the ForgeRock Identity Platform is AI-powered and consists of identity management, access management, identity governance and a universal directory, with dynamic lifecycle orchestration able to send rich signals across the digital enterprise. ForgeRock offers cloud and hybrid environments and reaches more than 1,300 customers around the world.

Let's get started by walking through how we can enable account protection and authentication intelligence with the ForgeRock plugin!

Deployment Steps

Prerequisites

ForgeRock
- Tomcat 9
- Java 8 or above
- ForgeRock Open AM (7.1.2)

F5 provided
- API Hostname
- Frontend/Backend JS path
- Customer ID (can be customized by customer)
- Cookie name (can be customized by customer)
- Decryption Key for Cookie

Step 1: Create the F5 .jar file

The first step is to visit the ForgeRock Backstage and type in "F5". Next, choose "F5 Auth Tree Nodes", which will land at this page.

Figure 1: F5 Auth Tree Node backstage landing page

Following the steps above will create the specific F5 XC nodes which are required for building the tree.

Step 2: Building the tree

Once logged into ForgeRock, click on Top Level Realm > Authentication > Trees > Create Tree > Name the tree > Create. From here we begin to build the tree with the nodes we created from the .jar file as well as some of the existing ForgeRock built-in nodes.
Figure 2: Creation of new tree

The most important node is the "F5 XC FR Configuration" node, which houses the service specific information as well as the defined attributes for Account Protection and Authentication Intelligence.

Figure 3: F5 XC FR Configuration node

Please note that Authentication Intelligence endpoint configurations are the same as Account Protection endpoint configurations. The completed tree will look like this:

Figure 4: Completed Authentication tree

This integration is based upon encrypted cookies, which are used to send the fraud recommendations from F5 XC. The cookie is collected from the website by the "F5 XC Analyze" node and is then decrypted by the "F5 XC Account Protection" node. For the protected endpoints, the "FR" value is read and an action is then taken based upon the customer's configuration as defined in the "F5 XC FR Configuration" node. For Authentication Intelligence, if enabled, a legitimate user's session is extended beyond the default timeout. The ForgeRock plug-in uses an encrypted persistent cookie which is saved in the client's browser; it maintains the user session details and remains even when the user closes the browser.

Step 3: Validating the AP configurations

In this example, to demonstrate the power of Account Protection, let's say a merchant is protecting their "Shop" endpoint. A user visits the website, makes more than 2 orders and then visits the protected "Shop" endpoint. Based upon the collected telemetry, the recommendation comes back as fraud. The previously defined action in the ForgeRock tree is to redirect the user.

Figure 5: User triggering redirection action

Next we can see the webpage begin the redirect, as per the pre-configured action for a fraud recommendation.

Figure 6: Endpoint beginning the redirection

And then the actual website used for redirection.
Figure 7: Successful redirection of the user

Step 4: Validating the AI configurations

Starting off in the ForgeRock Access Manager, we see the flag for JS injection is ON, the default session is set to 2 minutes, Authentication Intelligence is Enabled, and the action for the "https://apgis.fun:8443/index1" endpoint is extend.

Figure 8: Configuration of Authentication Intelligence within ForgeRock Access Manager

After the "e1" user logs into the endpoint, despite the endpoint having an action of extend, the recommendation for that user is ineligible, as seen below. This means that the user is ineligible for a session extension, so the session will last the default of 2 minutes.

Figure 9: Ineligible user for session extension

After two minutes and a refresh of the page, user "e1" has been logged out.

Figure 10: Completed action to log out user after 2 minutes

Now let's say that the recommendation comes back as eligible for a session extension of 7 days.

Figure 11: User eligible for 7 day session extension

This can be verified under the Identities menu in the ForgeRock Access Manager.

Figure 12: Access was extended to 7 days

Please note that the "dc" value in the content of the cookie is shown in plain text for illustrative purposes. In a production environment, the "dc" value is stored inside encrypted content.

The availability of F5 XC Account Protection and Authentication Intelligence on ForgeRock's Identity Platform creates the perfect synergy to help further protect users from fraud and risk as well as reduce log-in friction. It's a win-win!

Additional Resources

- Enabling F5 Distributed Cloud Fraud and Risk Services with ForgeRock Connector Video: HERE
- Enabling F5 Distributed Cloud Fraud and Risk Solutions with ForgeRock Connector DevCentral article: HERE
- Integrating F5 Distributed Cloud Fraud and Risk Services with ForgeRock Brightboard Lesson: HERE
- ForgeRock Marketplace Backstage: HERE
- F5 Download Integration: HERE