big-ip

12024 Topics

GRE Tunnel Issue

Has anyone run into an issue with GRE tunnels on a BIG-IP? I have a few set up running into a TGW in AWS, and something seems to break them. Config change, module change, ?? I haven't been able to pin down an exact trigger. Sometimes I could fail over and have the tunnels on the other HA member work fine, and failing back would result in the tunnels going down again. (The tunnels are unique to each BIG-IP.) They start responding with ICMP protocol 47 unavailable. Once this happens a reboot doesn't seem to fix it. If I tear down the BIG-IP and rebuild it, I can keep them working again for X amount of time before the cycle repeats. Self-IPs are open to the protocol; I also tried allow all for a bit. No NATs involved with underlay IPs.

25 Views, 0 likes, 1 Comment

How to get a F5 BIG-IP VE Developer Lab License

(applies to BIG-IP TMOS Edition)

To help operational teams improve their development for the BIG-IP platform, F5 offers a low-cost developer lab license. This license can be purchased from your authorized F5 vendor. If you do not have an F5 vendor, and you are in either Canada or the US, you can purchase a lab license online:

- CDW BIG-IP Virtual Edition Lab License
- CDW Canada BIG-IP Virtual Edition Lab License

Once completed, the order is sent to F5 for fulfillment and your license will be delivered shortly after via e-mail. F5 is investigating ways to improve this process.

To download the BIG-IP Virtual Edition, log into my.f5.com (separate login from DevCentral) and navigate down to the Downloads card under the Support Resources section of the page. Select BIG-IP from the product group family and then the current version of BIG-IP. You will be presented with a list of options; at the bottom, select the Virtual-Edition option that has one of the following descriptions:

- For VMware Fusion or Workstation or ESX/i: Image fileset for VMware ESX/i Server
- For Microsoft Hyper-V: Image fileset for Microsoft Hyper-V
- For KVM RHEL/CentOS: Image fileset for KVM Red Hat Enterprise Linux/CentOS

Note: There are also 1 Slot versions of the above images for cases where a 2nd boot partition is not needed for in-place upgrades. These images have _1SLOT- in the image name instead of ALL.

The guides below will help get you started with F5 BIG-IP Virtual Edition to develop for VMware Fusion, AWS, Azure, VMware, or Microsoft Hyper-V. These guides follow standard practices for installing in production environments; the performance recommendations can be relaxed for lower-use, non-critical development or lab environments. Similar to driving a tank, use your best judgement.
- Deploying F5 BIG-IP Virtual Edition on VMware Fusion
- Deploying F5 BIG-IP in Microsoft Azure for Developers
- Deploying F5 BIG-IP in AWS for Developers
- Deploying F5 BIG-IP in Windows Server Hyper-V for Developers
- Deploying F5 BIG-IP in VMware vCloud Director and ESX for Developers

Note: F5 Support maintains authoritative Azure, AWS, Hyper-V, and ESX/vCloud installation documentation. VMware Fusion is not an official F5-supported hypervisor, so DevCentral publishes the Fusion guide with the help of our Field Systems Engineering teams.

108K Views, 14 likes, 153 Comments

AWS Transit Gateway Connect: GRE + BGP = ?

GRE and BGP are technologies that are... mature. In this article we'll take a look at how you can use AWS Transit Gateway Connect to do some unique networking and application delivery in the cloud.

In December 2020 AWS released a new feature of Transit Gateway (TGW) that enables a device to peer with TGW via a GRE/BGP tunnel. The intent was for it to be used with SD-WAN devices, but we can also use it for things like load balancing many internal private addresses, NAT gateway, etc. In this article we'll look at my experience of setting up TGW Connect in a lab environment based on F5's documentation for setting up GRE and BGP.

Challenges with TGW

For folks that are not familiar with TGW, it is an AWS service that allows you to stitch together multiple physical and virtual networks via AWS internal networking (VPC peering) or via network protocols (VPN, Direct Connect (private L2)). Using TGW you can steer traffic to a specific network device by creating a route table entry within a VPC that points to the device's ENI (network interface). This is useful when you want all traffic for a specific CIDR (192.0.2.0/24) to traverse that device. In the scenario where a device is responsible for a CIDR, it is also responsible for updating the route table for HA. This could be done via Lambda function, our Cloud Failover Extension, manual updates, etc. The other downside is that this limits you to a single device per Availability Zone to receive traffic for that CIDR.

TGW Connect provides a mechanism for the device to use a GRE tunnel/BGP to establish a connection to TGW and use dynamic routing protocols (BGP) to advertise the health of the device. This allows up to 4 devices to peer with TGW with up to 5 Gbps of traffic per connection (for comparison you can burst up to 50 Gbps with a VPC connection).

Topology of TGW Connect

When using TGW Connect it re-uses existing TGW connections.
In practice this means that you are likely using an existing Direct Connect or VPC connection (I guess you could also use a VPN connection, but that would be weird). See also: https://aws.amazon.com/blogs/networking-and-content-delivery/simplify-sd-wan-connectivity-with-aws-transit-gateway-connect/

Configuring a BIG-IP for TGW Connect

To use a BIG-IP with TGW Connect you will need a device that is licensed for BGP (also called Advanced Routing, part of Better/Best). Follow the steps for setting up TGW Connect and be sure to specify a different peer ASN than your TGW (you will need to use eBGP). The "Peer Address" will be the self-ip of the BIG-IP on the AWS VPC (when using a VPC).

Configuring target VPC

When setting up TGW Connect you will peer with an existing VPC. In the subnet that you want to use with TGW Connect (Peer Address of GRE tunnel) you will need a route that points to the TGW peer address; for example, if you specify a CIDR of 10.254.254.0/24 for TGW and the peer address is 10.254.254.11, you will need to create a route that includes 10.254.254.0/24 on the subnet for the BIG-IP peer address. Also make sure to open up Security Groups to allow GRE traffic to and from the interface that will be used for the GRE tunnel. The rule should allow the IP of the peer address (i.e. 10.254.254.11).

Route to TGW from 10.1.7.0/24

GRE Tunnel

On the BIG-IP under Network / Tunnels you will need to create a GRE Tunnel. You can use the default "gre" tunnel profile. Specify the same "Peer Address" that you used when setting up TGW Connect. You will also want to specify the Remote Address, which is the TGW address.

BGP Peer

Next you will need to configure the BIG-IP to act as a BGP peer to TGW, connecting over the GRE tunnel. TGW requires that you use an IP in the 169.254.0.0 range. This requires modifying a db variable to allow that address to be used as a self-ip. The tmsh command to use is:
    modify sys db config.allow.rfc3927 { value "enable" }

You can then create your BGP peer address to match the value that you used in TGW Connect. The BGP peer address will need to be configured to allow BGP updates (port 179). Since the traffic is occurring over the GRE tunnel there is no need to update AWS Security Groups (invisible to the ENI).

Setting up BGP Peering

TGW Connect requires eBGP to be used. The following is an example of a working config. This also assumes you go through the pre-req of setting up BGP/RHI. Be careful to only advertise the routes that you want; when you use "redistribute kernel" it will also advertise 0.0.0.0/0! Please also see: https://support.f5.com/csp/article/K15923612

    !
    no service password-encryption
    !
    router bgp 65520
     bgp graceful-restart restart-time 120
     aggregate-address 10.0.0.0/16 summary-only
     aggregate-address 10.1.0.0/16 summary-only
     aggregate-address 10.2.0.0/16 summary-only
     aggregate-address 10.3.0.0/16 summary-only
     redistribute kernel
     neighbor 169.254.10.2 remote-as 64512
     neighbor 169.254.10.2 ebgp-multihop 2
     neighbor 169.254.10.2 soft-reconfiguration inbound
     neighbor 169.254.10.2 prefix-list tenlist out
     neighbor 169.254.10.3 remote-as 64512
     neighbor 169.254.10.3 ebgp-multihop 2
     neighbor 169.254.10.3 soft-reconfiguration inbound
     neighbor 169.254.10.3 prefix-list tenlist out
    !
    ip prefix-list tenlist seq 5 deny 10.254.254.0/24
    ip prefix-list tenlist seq 10 permit 10.0.0.0/8 ge 16
    !
    line con 0
     login
    line vty 0 39
     login
    !
    end

This example was created with help from a BGP expert, Brandon Frelich. In the example above we are only limiting routes to 3 CIDRs and configuring ECMP. At this point the BIG-IP could allocate VIPs on the CIDR, act as an AFM firewall, and if we used 0.0.0.0/0 it could act as an outbound gateway.

Verifying the Setup

You should be able to see your BGP connection go green in the AWS console and also see the status by running "show ip bgp neighbors" from imish.
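The `tenlist` prefix-list in the BGP configuration above is what keeps 0.0.0.0/0 and the TGW CIDR out of the advertisements. As a rough illustration of how its two entries are evaluated, here is a minimal Python sketch. It assumes the usual prefix-list semantics (first match wins, an entry without `ge`/`le` matches only the exact prefix length, and anything unmatched is implicitly denied); the `TENLIST` structure and `is_advertised` helper are hypothetical names made up for this example, not part of BIG-IP or its routing module.

```python
import ipaddress

# Hypothetical model of the "tenlist" prefix-list from the config above.
# Each entry is (action, base prefix, ge); ge=None means the route's
# prefix length must equal the base prefix length exactly.
TENLIST = [
    ("deny", ipaddress.ip_network("10.254.254.0/24"), None),
    ("permit", ipaddress.ip_network("10.0.0.0/8"), 16),
]


def is_advertised(route, prefix_list=TENLIST):
    """Return True if the first matching entry permits the route."""
    net = ipaddress.ip_network(route)
    for action, base, ge in prefix_list:
        if not net.subnet_of(base):
            continue  # route is outside this entry's address range
        if ge is None:
            matches = net.prefixlen == base.prefixlen
        else:
            matches = net.prefixlen >= ge
        if matches:
            return action == "permit"
    return False  # implicit deny when no entry matches


for route in ["10.0.0.0/16", "10.254.254.0/24", "10.0.0.0/8", "0.0.0.0/0"]:
    print(route, "advertised" if is_advertised(route) else "filtered")
```

Under these assumptions the /16 aggregates pass, while the default route that "redistribute kernel" pulls in falls through to the implicit deny.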
AWS Console

    ip-10-1-1-112.ec2.internal[0]>show ip bgp summary
    BGP router identifier 169.254.10.1, local AS number 65520
    BGP table version is 4
    2 BGP AS-PATH entries
    0 BGP community entries

    Neighbor        V    AS MsgRcvd MsgSent   TblVer  InQ OutQ Up/Down  State/PfxRcd
    169.254.10.2    4 64512     293     293        4    0    0 00:47:08        3
    169.254.10.3    4 64512     293     292        4    0    0 00:47:08        3

    Total number of neighbors 2

Output from imish

    (tmos)# list /ltm virtual-address one-line
    ltm virtual-address 10.0.0.0 { address 10.0.0.0 arp disabled floating disabled icmp-echo disabled mask 255.255.0.0 route-advertisement selective traffic-group none unit 0 }
    ltm virtual-address 10.1.0.0 { address 10.1.0.0 arp disabled floating disabled icmp-echo disabled mask 255.255.0.0 route-advertisement selective traffic-group none unit 0 }
    ltm virtual-address 10.2.0.0 { address 10.2.0.0 arp disabled floating disabled icmp-echo disabled mask 255.255.0.0 route-advertisement selective traffic-group none unit 0 }
    (tmos)# list /net route tgw
    net route tgw {
        interface /Common/tgw-connect
        network 10.0.0.0/8
    }

Output from TMSH. Note route-advertisement is enabled on the virtual-addresses. We are using a static route to steer traffic to the GRE tunnel. You should also see any routes advertised as well.

ECMP Considerations

When you deploy multiple BIG-IP devices TGW can use ECMP to spray traffic across multiple devices (by enabling traffic group None or multiple standalone devices). Be aware that if you need to statefully inspect traffic you may want to enable SNAT to have the return traffic go to the same device or use traffic-group-1 to run in Active/Standby via Route Health Injection. Otherwise you will need to follow the guidance on setting up a forwarding virtual server to ignore the system connection table.
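To see why return traffic may not come back through the same device under ECMP, the following Python sketch hashes each flow's 5-tuple onto one of four devices and counts how often the reverse direction lands elsewhere. This is only an illustration: the hash, the device names, and the sample addresses are assumptions for the example and do not represent how TGW actually selects a path.

```python
import hashlib

# Hypothetical device names; TGW Connect allows up to 4 peers per the text above.
DEVICES = ["bigip-a", "bigip-b", "bigip-c", "bigip-d"]


def pick_device(src_ip, src_port, dst_ip, dst_port, proto="tcp", devices=DEVICES):
    """Map a flow's 5-tuple to one device using a stable hash (illustrative only)."""
    key = f"{proto}|{src_ip}|{src_port}|{dst_ip}|{dst_port}".encode()
    digest = hashlib.sha256(key).digest()
    return devices[int.from_bytes(digest[:4], "big") % len(devices)]


# Sample client flows toward one server address (made-up values).
flows = [("10.1.7.10", 40000 + i, "10.2.0.20", 443) for i in range(1000)]

asymmetric = 0
for src_ip, src_port, dst_ip, dst_port in flows:
    forward = pick_device(src_ip, src_port, dst_ip, dst_port)
    # The reply swaps source and destination, so it hashes independently.
    reverse = pick_device(dst_ip, dst_port, src_ip, src_port)
    if forward != reverse:
        asymmetric += 1

print(f"{asymmetric} of {len(flows)} flows return via a different device")
```

With SNAT enabled, the server replies to an address owned by the device that forwarded the request, so the reverse path no longer depends on a second, independent hash.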
Routing Connections

One of the issues that a customer discovered when exploring this solution is that the BIG-IP will initially send health checks across the GRE tunnel using an IP from the 169.254.x.x address range; this follows the address selection criteria that a BIG-IP uses. One method of dealing with this is to assign an IP address in a range that you would like to advertise across the tunnel, like 198.168.254.0/24, by creating a self-ip of 198.168.254.253 that you assign to the tunnel. To send traffic for a different range (i.e. 10.0.0.0/16) you can then create a static route on the BIG-IP that points to 198.168.254.1. Since the BIG-IP sees the address is on the tunnel it will correctly forward the traffic through the tunnel.

Another question that arose was whether it was possible to have asymmetric traffic flows that use both the GRE TGW Connect tunnel and the TGW VPC Connection of the VPC itself. I discovered that YES this is possible by following the guidance on enabling asymmetrically routed traffic. It hurts your brain a bit, but here are the results of a flow that is using both a Connect and VPC connection. You can do some crazy things, but with great power...

Request traffic over VPC Connection

Response traffic over TGW Connect (GRE)

Other Options

You could also achieve a similar result by using default VPC peering and making use of Cloud Failover Extension for updating the route table. The benefit of that approach is that you don't have to deal with GRE/BGP! It does limit you to a single device per AZ vs. being able to get up to 4 devices running across a connection.

7.3K Views, 2 likes, 1 Comment

Accelerate your AI initiatives using F5 VELOS

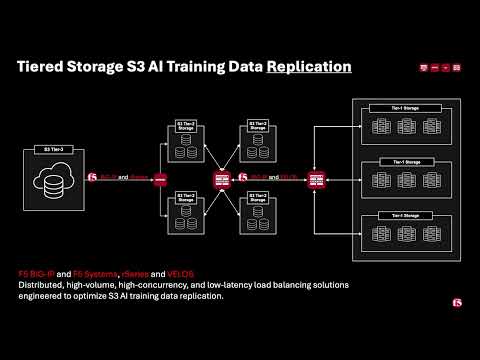

Introduction F5 VELOS is a rearchitected, next-generation hardware platform that scales application delivery performance and automates application services to address many of today’s most critical business challenges. F5 VELOS is a key component of the F5 Application Delivery and Security Platform (ADSP). Demo Video High-Throughput and Concurrency for AI Data Ingestion Given the escalating data demands of AI training and inference pipelines, there is a critical need to architect object-based storage systems, such as S3, and corresponding clients in a manner that ensures high-throughput, scalability, and fault tolerance under massive parallel workloads. S3 Storage Systems increase scalability and resiliency by distributing data objects across multiple storage nodes, leveraging a unified “bucket” abstraction to streamline data organization, access, and fault tolerance. S3 Client Implementations employ highly parallelized, and multi-threaded operations to maximize data transfer rates and throughput, satisfying the low-latency, high-volume requirements of AI and other computationally intensive workloads. Performance and Security for AI Workloads F5 BIG-IP delivers multi-layer load balancing reinforced by robust in-flight security services and performance thresholds engineered to meet or exceed the most demanding enterprise-scale capacity requirements. F5 VELOS Chassis & Blades have advanced FPGA accelerators, high-performance CPU architectures, and cryptographic offload engines. They are all combined with scaling to multi-terabit throughput to meet or exceed the most demanding enterprise capacity requirements. F5 BIG-IP and VELOS enable high-performance data mobility and security for AI workloads anywhere. Load Balancing for S3 AI Training Data Replication Data Replication for Training AI model training and retraining often requires the replication of data from web-service-based object storage tiers to high-performance clustered filesystems. 
Market Constraints: Tier-1 storage systems command high costs, and the ecosystem of certified providers for AI-specific architectures remains comparatively narrow.

High-Performance Requirements: Effective model training demands access to Tier-1 storage that supports hardware-accelerated data transfers, ensuring rapid delivery of input to GPU memory.

S3 Based Migration: Replication from cost-efficient, lower-performance storage repositories to Tier-1 infrastructure is commonly orchestrated via the S3 protocol to maintain both scalability and performance.

Tiered Storage S3 AI Training Data Replication

F5 BIG-IP and F5 Systems, rSeries and VELOS: Distributed, high-volume, high-concurrency, and low-latency load balancing solutions engineered to optimize S3 AI training data replication.

BIG-IP Best-In-Class Traffic Management & Security: SPEED

- Smart Load Balancing & Security: Directs traffic to the optimal storage for performance, security, and availability.
- Seamless Data Flow: BIG-IP LTM ensures efficient, secure routing from external sources to local storage.
- Optimized S3 Routing: BIG-IP DNS directs client connections to highly available storage nodes for smooth data ingestion.

BIG-IP Best-In-Class Traffic Management & Security: SCALE

- High-Throughput Traffic Management: Optimize TCP and HTTPS flows for seamless object storage access.
- Accelerated Packet Processing: Leverage embedded ePVA in FPGA for high-performance L4 IPv4 throughput.
- Crypto Offload for Speed: BIG-IP LTM offloads encryption to best-in-class hardware on rSeries and VELOS, boosting performance.

BIG-IP Best-In-Class Traffic Management & Security: SECURITY

- Robust DDoS Protection: BIG-IP’s AFM defends against volumetric and targeted attacks.
- Secure Traffic Management: BIG-IP LTM ensures efficient, secure routing from external sources to local storage.
- End-to-End Data Protection: Safeguards AI workloads with policy-driven security and threat mitigation.
F5 Systems Enables Accelerated AI Application Delivery

- F5 VELOS, rSeries, and BIG-IP: Enable distributed, high-volume, high-concurrency, low-latency application delivery for S3.
- The All-New VELOS CX1610: Provides the multi-terabit throughput necessary for high-performance traffic orchestration.
- F5 BIG-IP App Services Suite: Simplify and secure application delivery for the most demanding high-throughput AI infrastructure needs.

Conclusion

Unleash Massive Throughput

- The All-New VELOS BX520 Blade
- The All-New VELOS CX1610 Chassis

Related Articles

- F5 VELOS: A Next-Generation Fully Automatable Platform
- F5 rSeries: Next-Generation Fully Automatable Hardware
- Realtime DoS mitigation with VELOS BX520 Blade
- DEMO: The Next Generation of F5 Hardware is Ready for you

459 Views, 3 likes, 0 Comments

BIG-IP for Scalable App Delivery & Security in Hybrid Environments

Scope

As enterprises deploy multiple instances of the same applications across diverse infrastructure platforms such as VMware, OpenShift, Nutanix, and public cloud environments, and across geographically distributed locations to support redundancy and facilitate seamless migration, they face increasing challenges in ensuring consistent performance, centralized security, and operational visibility. The complexity of managing distributed application traffic, enforcing uniform security policies, and maintaining high availability across hybrid environments introduces significant operational overhead and risk, hindering agility and scalability.

F5 BIG-IP Application Delivery and Security addresses this challenge by providing a unified, policy-driven approach to managing secure workloads across hybrid multi-cloud environments. It can be used to scale up application services on existing infrastructure or with new business models.

Introduction

This article highlights how F5 BIG-IP supports identical application workloads deployed across multiple environments. This ensures high availability, seamless traffic management, and consistent performance. By supporting smooth workload transitions and zero-downtime deployments, F5 helps organizations maintain reliable, secure, and scalable applications. From a business perspective, it enhances operational agility, supports growing traffic demands, reduces risk during updates, and ultimately delivers a high-performance application experience that meets customer expectations and drives growth.

This use case covers a typical enterprise setup with the following environments:

- VMware (On-Premises)
- Nutanix (On-Premises)
- OCP (On-Premises)
- Google Cloud Platform (GCP)

Solution Overview

The following video shows how F5 BIG-IP VE running on different virtualized platforms and environments can be configured to scale, secure, and deliver applications equally, even when located on-prem and in cloud environments.
By providing a uniform interface and security policies, organizations can focus on other priorities and changing business needs.

Architecture Overview

As illustrated in the diagram, when new application workloads are provisioned across environments such as AWS, GCP, VMware (on-prem), and Nutanix (on-prem & VMware), BIG-IP ensures seamless integration with existing services.

| Platforms | Supported Environments |
| --- | --- |
| VMware | On-Prem, GCP, Azure |
| Nutanix | On-Prem, AWS, Azure |
| OCP | On-Prem, AWS, Azure |

This article outlines the deployment on the VMware platform. For deployment on other platforms like Nutanix and GCP, refer to the detailed guide below.

F5 Scalable Enterprise Workload Deployments Complete Guide

Scalable Enterprise Workload Deployment Across Hybrid Environments

Enterprise applications are deployed smoothly across multiple environments to address diverse customer needs. With F5’s advanced Application Delivery and Security features, organizations can ensure consistent performance, high availability, and robust protection across all deployment platforms. F5 provides a unified and secure application experience across cloud, on-premises, and virtualized environments.

Workload Distribution Across Environments

Workloads are distributed across the following environments:

- VMware: App A & App B
- OpenShift: App B
- Nutanix: App B & App C

Workloads added later:

- VMware: Add App C
- OpenShift: Add App A & App C
- Nutanix: Add App A

Applications being used:

- A: Juice Shop (vulnerable web app for security testing)
- B: DVWA (Damn Vulnerable Web Application)
- C: Mutillidae

Initial Infrastructure

VMware: App A & B, Nutanix: App B & C, GCP: App B.

VMware

In the VMware on-premises environment, Applications A and B are deployed and connected to two separate load balancers. This forms the existing infrastructure. These applications are actively serving user traffic with delivery and security managed by BIG-IP. Web Application Firewall (WAF) is enabled to block malicious requests.
The corresponding logs can be found under BIG-IP > Security > Event Logs Note: This initial deployment infrastructure has also been implemented on Nutanix and GCP. For the full details, please consult the complete guide here Adding additional workloads To demonstrate BIG-IP’s ability to support evolving enterprise demands, we will introduce new workloads across all environments. This will validate its seamless integration, consistent security enforcement, and support for continuous delivery across hybrid infrastructures. VMware Let us add additional application-3 (mutillidae) to the VMware on-premises environment. Try to access the application through BIG-IP virtual server. Apply the WAF policy to the newly created virtual server, then verify the same by simulating malicious attacks. Nutanix The use case described for VMware is equally applicable and supported when deploying BIG-IP on Nutanix Bare Metal as well as Nutanix on VMware. For demonstration purposes, the Nutanix Community Edition hypervisor is booted as a virtual machine within VMware. Inside this hypervisor, a new virtual machine is created and provisioned using the BIG-IP image downloaded from the F5 Downloads portal. Once the BIG-IP instance is online, an additional VM hosting the application workload is deployed. This application VM is then associated with a BIG-IP virtual server, ensuring that the application remains isolated and protected from direct external exposure. OCP The use case described for VMware is equally applicable and fully supported when deploying BIG-IP with Red Hat OpenShift Container Platform (OCP) including Nutanix and VMware-based infrastructures. For demonstration, OCP is deployed on a virtualized cluster, while BIG-IP is provisioned externally using an image from the F5 Downloads portal. BIG-IP consumes the OpenShift configuration and dynamically creates the required virtual servers, pools, and health monitors. 
Traffic to the application is routed through BIG-IP, ensuring that the application remains isolated from direct external exposure while benefiting from enterprise-grade traffic management, security enforcement, and observability. GCP (Google Cloud Platform) The use case discussed above for VMware is also applicable and supported when deploying BIG-IP on public cloud platforms such as Azure, AWS, and GCP. For demonstration purposes, GCP is selected as the cloud environment for deploying BIG-IP. Within the same project where the BIG-IP instance is provisioned, an additional virtual machine hosting application workloads is deployed and associated with the BIG-IP virtual server. This setup ensures that the application workloads remain protected behind BIG-IP, preventing direct external exposure. Key Resources: Please refer to the detailed guide below, which outlines the deployment of Nutanix on VMware and GCP, and demonstrates how BIG-IP delivers consistent security, traffic management, and application delivery across hybrid environments. F5 Scalable Enterprise Workload Deployments Complete Guide Conclusion This demonstration clearly illustrates that BIG-IP’s Application Delivery and Security capabilities offer a robust, scalable, and consistent solution across both multi-cloud and on-premises environments. By deploying BIG-IP across diverse platforms, organizations can achieve uniform application security, while maintaining reliable connectivity, strong encryption, and comprehensive protection for both modern and legacy workloads. This unified approach allows businesses to seamlessly scale infrastructure and address evolving user demands without sacrificing performance, availability, or security. With BIG-IP, enterprises can confidently deliver applications with resilience and speed, while maintaining centralized control and policy enforcement across heterogeneous environments. 
Ultimately, BIG-IP empowers organizations to simplify operations, standardize security, and accelerate digital transformation across any environment.

References

- F5 Application Delivery and Security Platform
- BIG-IP Data Sheet
- F5 Hybrid Security Architectures: One WAF Engine, Total Flexibility
- Distributed Cloud (XC) Github Repo
- BIG-IP Github Repo

480 Views, 2 likes, 0 Comments

LB Connection Limit Detection Method

We have set a connection limit on the load balancer. If there is a way to detect when the connection limit is reached or exceeded, please let us know. We are considering detection via log monitoring, but we would like to confirm whether other methods are available.

124 Views, 0 likes, 5 Comments

Use F5 APM as Forward Proxy

Hello All, I have one BIG-IP with an APM license and I want to use it as a forward proxy. I have used this iApp https://devcentral.f5.com/codeshare/apm-explicit-proxy and now I have:

- DNS Resolver
- Tunnel for traffic
- HTTP profile
- Virtual Server (Proxy) listening on 8080

Although this is configured, when I point my browser to this proxy it doesn’t seem to work. I suppose that now I have to create two more separate virtual servers listening on ports 80 and 443 for handling http and https traffic. Am I right? The question is, once I have configured these two virtual servers, how can I forward traffic to the Internet? If the VS has no pool members, does it check the routing table? Or do I have to create an iRule with something like this: When HTTP::request { Forward } When HTTP::response { Forward }

Also, I don’t want to inspect SSL traffic. I would like to use the proxy as a passthrough but only allow certain https sites. Do I need to inspect SSL traffic to filter by URLs?

Thanks in advance

Solved, 460 Views, 0 likes, 3 Comments

TCP Profile with Verified Accept enabled and three-way TCP handshake

Hi, I'm trying to understand exactly how a Standard virtual server processes connections using the full proxy architecture when Verified Accept is enabled on the TCP profile.

With Verified Accept disabled, the three-way TCP handshake occurs on the client side of the connection before the BIG-IP LTM system initiates the TCP handshake on the server side of the connection. Only when the client-side TCP handshake is complete does LTM choose a pool member and start the server-side three-way TCP handshake.

When Verified Accept is enabled, "the system sends the server a SYN packet, and waits for the server to respond with a SYN-ACK, before responding to the client's SYN with a SYN-ACK" (K98387022: TCP Profile with Verified Accept enabled).

My question: when Verified Accept is enabled, is the server-side TCP handshake completed before or after the client-side TCP handshake? I'm confused because this behavior is not clearly described in the F5 documentation and because in document K98387022 I read this example:

For example, given an HTTP virtual server, the order of events changes.

- Verified Accept disabled: CLIENT_ACCEPTED -> HTTP_REQUEST -> LB_SELECTED -> SERVER_CONNECTED -> HTTP_REQUEST_SEND
- Verified Accept enabled: CLIENT_ACCEPTED -> LB_SELECTED -> SERVER_CONNECTED -> HTTP_REQUEST -> HTTP_REQUEST_SEND

If I'm not mistaken, CLIENT_ACCEPTED means that the connection has been established and that the three-way handshake is complete. So, in this example, is the client-side handshake completed before the server-side handshake in both cases?

Thanks for your help
Diego

Solved, 108 Views, 1 like, 4 Comments

F5 Software Downgrade from version 17.x.x to 15.x.x

After upgrading from version 15.x.x to 17.x.x, I attempted to downgrade from 17.x.x back to 15.x.x. However, the log continuously displayed “logger[xxxxx]: Re-starting devmgmtd”, and the prompt remained in the “INOPERATIVE” state. Could you please provide the correct procedure for performing a version downgrade?

Solved, 299 Views, 0 likes, 3 Comments