application delivery

iRule based RADIUS Server Stack

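The RADIUS Server Stack described in this post is written in TCL and published on GitHub; its code is not reproduced here. As a minimal, hypothetical sketch of one RFC 2865 core mechanic that any RADIUS server has to implement, the JavaScript (Node.js) function below builds a response packet and computes its Response Authenticator. It is not taken from the repository; the function name `buildRadiusResponse` and the `{ type, value }` attribute shape are assumptions for illustration, and RFC 5080 details such as Message-Authenticator handling are not covered.

```javascript
const crypto = require("crypto");

// Build a RADIUS response (e.g. Code 2 Access-Accept, 3 Access-Reject,
// 11 Access-Challenge) and compute its Response Authenticator per RFC 2865 s.3:
//   MD5(Code + Identifier + Length + RequestAuthenticator + Attributes + Secret)
// `attributes` is an array of { type: number, value: Buffer } objects (hypothetical shape).
function buildRadiusResponse(code, identifier, requestAuthenticator, attributes, secret) {
  if (requestAuthenticator.length !== 16) {
    throw new RangeError("Request Authenticator must be 16 bytes");
  }
  // Each attribute is encoded as Type (1 byte) | Length (1 byte) | Value.
  const attrs = Buffer.concat(
    attributes.map(({ type, value }) => {
      if (value.length > 253) throw new RangeError("attribute value too long");
      return Buffer.concat([Buffer.from([type, value.length + 2]), value]);
    })
  );

  // First 4 header bytes: Code, Identifier, Length (header + attributes).
  const header = Buffer.alloc(4);
  header.writeUInt8(code, 0);
  header.writeUInt8(identifier, 1);
  header.writeUInt16BE(20 + attrs.length, 2);

  // The hash covers the header, the *request's* authenticator, the attributes
  // and the shared secret; the digest then replaces the authenticator field.
  const responseAuthenticator = crypto
    .createHash("md5")
    .update(Buffer.concat([header, requestAuthenticator, attrs, Buffer.from(secret)]))
    .digest();

  return Buffer.concat([header, responseAuthenticator, attrs]);
}
```

For an incoming Access-Request held in a Buffer `request`, an Access-Accept with a Reply-Message (attribute type 18) could be built as `buildRadiusResponse(2, request[1], request.subarray(4, 20), [{ type: 18, value: Buffer.from("Welcome") }], "shared-secret")`, reusing the request's Identifier and Request Authenticator as RFC 2865 requires.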
Code is community submitted, community supported, and recognized as ‘Use At Your Own Risk’.

Short Description
The iRule based RADIUS Server Stack can be used to turn a UDP-based Virtual Server into a flexible and fully featured RADIUS Server, supporting regular REQUEST/RESPONSE as well as CHALLENGE/RESPONSE based RADIUS authentication.

Problem solved by this Code Snippet
The RADIUS Server Stack covers the RADIUS protocol core mechanics outlined in RFC 2865 and RFC 5080 and can easily be extended to support any other RADIUS-related RFC built on top of these specifications. The RADIUS Server Stack can be used to extend LTMs that lack built-in RADIUS Server functionality, as well as the iRule commands needed for self-hosted RADIUS Server scenarios.

How to use this Code Snippet
Visit my GitHub Repository for further explanations of how the RADIUS Server Stack can be used to perform RADIUS Server operations within an iRule.

Code Snippet Meta Information
Version: 1.1
Coding Language: TCL

Full Code Snippet
Visit: https://github.com/KaiWilke/F5-iRule-RADIUS-Server-Stack

iRule based RADIUS Client Stack

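The RADIUS Client Stack described in this post performs Password Authentication Protocol (PAP) authentication. As a minimal sketch of what PAP involves on the wire, written in JavaScript (Node.js) rather than the stack's TCL and not taken from the repository, the functions below hide and recover the User-Password attribute value as specified in RFC 2865 section 5.2. The names `hidePapPassword` and `revealPapPassword` are hypothetical.

```javascript
const crypto = require("crypto");

// MD5 over the concatenation of the given buffers.
function md5(...buffers) {
  return crypto.createHash("md5").update(Buffer.concat(buffers)).digest();
}

// Hide a PAP password for the User-Password attribute (RFC 2865 s.5.2).
// The password is NUL-padded to a multiple of 16 bytes; each 16-byte chunk is
// XORed with MD5(secret + previous), where "previous" is the Request
// Authenticator for the first chunk and the prior ciphertext chunk afterwards.
function hidePapPassword(password, secret, requestAuthenticator) {
  const pw = Buffer.from(password, "utf8");
  if (pw.length > 128) throw new RangeError("password longer than 128 bytes");
  const paddedLen = Math.max(16, Math.ceil(pw.length / 16) * 16);
  const padded = Buffer.alloc(paddedLen); // zero-filled, i.e. NUL padding
  pw.copy(padded);

  const out = Buffer.alloc(paddedLen);
  let previous = requestAuthenticator;
  for (let i = 0; i < paddedLen; i += 16) {
    const b = md5(Buffer.from(secret), previous);
    for (let j = 0; j < 16; j++) out[i + j] = padded[i + j] ^ b[j];
    previous = out.subarray(i, i + 16); // chain on the ciphertext chunk
  }
  return out;
}

// Reverse operation, as a RADIUS server applies it; trailing NULs are stripped.
function revealPapPassword(hidden, secret, requestAuthenticator) {
  if (hidden.length === 0 || hidden.length % 16 !== 0) {
    throw new RangeError("hidden length must be a non-zero multiple of 16");
  }
  const out = Buffer.alloc(hidden.length);
  let previous = requestAuthenticator;
  for (let i = 0; i < hidden.length; i += 16) {
    const b = md5(Buffer.from(secret), previous);
    for (let j = 0; j < 16; j++) out[i + j] = hidden[i + j] ^ b[j];
    previous = hidden.subarray(i, i + 16);
  }
  return out.toString("utf8").replace(/\0+$/, "");
}
```

In a real Access-Request the Request Authenticator should be 16 unpredictable bytes, for example from `crypto.randomBytes(16)`, and the same value must be used both for hiding the password and in the packet header.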
Code is community submitted, community supported, and recognized as ‘Use At Your Own Risk’.

Short Description
The iRule based RADIUS Client Stack can be used to perform RADIUS based user authentication via SIDEBAND UDP connections.

Problem solved by this Code Snippet
The RADIUS Client Stack covers the RADIUS protocol core mechanics outlined in RFC 2865 and RFC 5080 and can be used to perform Password Authentication Protocol (PAP) authentication within an iRule.

How to use this Code Snippet
Visit my GitHub Repository for further explanations of how the RADIUS Client Stack can be used to perform RADIUS Client operations within an iRule.

Code Snippet Meta Information
Version: 1.1
Coding Language: TCL

Full Code Snippet
Visit: https://github.com/KaiWilke/F5-iRule-RADIUS-Client-Stack

Prism.js language definition for iRules (TMOS v21.0)

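This post explains that Prism.js's default TCL definition leaves F5-specific commands such as HTTP::path and operators such as equals unhighlighted. As a heavily reduced, hypothetical sketch of the idea (not the repository's roughly 10 KB definition), the JavaScript below uses two regular expressions to find namespaced iRule commands and a handful of iRule word operators in a snippet. In an actual Prism.js definition, patterns like these would be registered as grammar tokens, for example through `Prism.languages.extend('tcl', {...})`; the pattern set shown here is an assumption and far from complete.

```javascript
// Hypothetical, reduced token patterns: namespaced iRule commands such as
// HTTP::path or IP::client_addr, and a few iRule word operators.
const IRULE_PATTERNS = {
  command: /\b[A-Z][A-Z0-9_]*::[a-z_]+\b/g,
  operator: /\b(?:equals|contains|starts_with|ends_with|matches_glob|matches_regex)\b/g,
};

// Return every match as { type, text, index }, sorted by position in `code`.
function findIruleTokens(code) {
  const tokens = [];
  for (const [type, pattern] of Object.entries(IRULE_PATTERNS)) {
    // matchAll works on a copy of the regex, so the shared /g patterns stay reusable.
    for (const m of code.matchAll(pattern)) {
      tokens.push({ type, text: m[0], index: m.index });
    }
  }
  return tokens.sort((a, b) => a.index - b.index);
}

const sample = 'if { "1" equals "1" } then { HTTP::path "/something" pool some_pool }';
console.log(findIruleTokens(sample).map((t) => `${t.type}:${t.text}`).join(", "));
```

For the sample snippet this reports the operator `equals` followed by the command `HTTP::path`, which are exactly the two tokens the default TCL definition misses.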
Code is community submitted, community supported, and recognized as ‘Use At Your Own Risk’.

Short Description
The provided iRule language definition can be used to highlight iRule code within websites using the Prism.js framework.

Problem solved by this Code Snippet
The Prism.js framework is widely used to highlight code blocks on websites. Even DevCentral uses the Prism.js framework to highlight code boxes, using the default TCL language definition contributed by Peter Chaplin to the Prism.js repository.

    if { "1" equals "1" } then {
        HTTP::path "/something"
        pool some_pool
    }

As you can see in the example above, the default TCL language definition is not trained to highlight any of the F5-specific commands (e.g. HTTP::path) or operators (e.g. equals), so they remain unhighlighted in black. I took the time to write a language definition for iRules based on the reduced TCL 8.4 syntax supported by iRules, plus the iRule command and operator set of TMOS version 21.0. I ended up with nearly 10 KB of RegEx signatures, which provide a rich highlighting experience for iRule code snippets.

How to use this Code Snippet
Visit my GitHub Repository for additional implementation notes on the 'Prism.js language definition for iRules'. Feel free to discuss the project here on CrowdSRC!

Cheers, Kai

Code Snippet Meta Information
Version: 1.1
Coding Language: Prism.js, JS, RegEx

Full Code Snippet
Visit: https://github.com/KaiWilke/F5-PrismJS-iRule-Language-Definition

Accelerate your AI initiatives using F5 VELOS

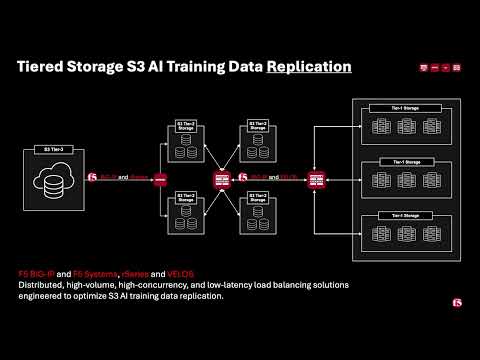

Introduction
F5 VELOS is a rearchitected, next-generation hardware platform that scales application delivery performance and automates application services to address many of today’s most critical business challenges. F5 VELOS is a key component of the F5 Application Delivery and Security Platform (ADSP).

High-Throughput and Concurrency for AI Data Ingestion
Given the escalating data demands of AI training and inference pipelines, object-based storage systems such as S3, and the clients that use them, must be architected to deliver high throughput, scalability, and fault tolerance under massively parallel workloads.
- S3 Storage Systems increase scalability and resiliency by distributing data objects across multiple storage nodes, using a unified “bucket” abstraction to streamline data organization, access, and fault tolerance.
- S3 Client Implementations employ highly parallelized, multi-threaded operations to maximize data transfer rates and throughput, satisfying the low-latency, high-volume requirements of AI and other computationally intensive workloads.

Performance and Security for AI Workloads
F5 BIG-IP delivers multi-layer load balancing reinforced by robust in-flight security services, with performance engineered to meet or exceed the most demanding enterprise-scale capacity requirements. F5 VELOS Chassis & Blades combine advanced FPGA accelerators, high-performance CPU architectures, and cryptographic offload engines, and scale to multi-terabit throughput. Together, F5 BIG-IP and VELOS enable high-performance data mobility and security for AI workloads anywhere.

Load Balancing for S3 AI Training Data Replication
Data Replication for Training: AI model training and retraining often require replicating data from web-service-based object storage tiers to high-performance clustered filesystems.
Market Constraints: Tier-1 storage systems command high costs, and the ecosystem of certified providers for AI-specific architectures remains comparatively narrow.
High-Performance Requirements: Effective model training demands access to Tier-1 storage that supports hardware-accelerated data transfers, ensuring rapid delivery of input to GPU memory.
S3-Based Migration: Replication from cost-efficient, lower-performance storage repositories to Tier-1 infrastructure is commonly orchestrated via the S3 protocol to maintain both scalability and performance.

Tiered Storage S3 AI Training Data Replication
F5 BIG-IP and F5 Systems (rSeries and VELOS) provide distributed, high-volume, high-concurrency, and low-latency load balancing engineered to optimize S3 AI training data replication.

BIG-IP Best-In-Class Traffic Management & Security: SPEED
- Smart Load Balancing & Security: Directs traffic to the optimal storage for performance, security, and availability.
- Seamless Data Flow: BIG-IP LTM ensures efficient, secure routing from external sources to local storage.
- Optimized S3 Routing: BIG-IP DNS directs client connections to highly available storage nodes for smooth data ingestion.

BIG-IP Best-In-Class Traffic Management & Security: SCALE
- High-Throughput Traffic Management: Optimize TCP and HTTPS flows for seamless object storage access.
- Accelerated Packet Processing: Leverage the embedded ePVA in FPGA for high-performance L4 IPv4 throughput.
- Crypto Offload for Speed: BIG-IP LTM offloads encryption to best-in-class hardware on rSeries and VELOS, boosting performance.

BIG-IP Best-In-Class Traffic Management & Security: Security
- Robust DDoS Protection: BIG-IP AFM defends against volumetric and targeted attacks.
- Secure Traffic Management: BIG-IP LTM ensures efficient, secure routing from external sources to local storage.
- End-to-End Data Protection: Safeguards AI workloads with policy-driven security and threat mitigation.
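One common way S3 clients achieve the highly parallelized transfers mentioned in this article is to split a large object into byte ranges and fetch each range with its own GET request carrying an HTTP Range header. The JavaScript sketch below only plans those ranges; the function name `planRangedGets` and the part size are assumptions for illustration, and actually issuing the requests (and how BIG-IP balances them across storage nodes) is outside its scope.

```javascript
// Split an object of `size` bytes into contiguous byte ranges of at most
// `partSize` bytes, formatted as HTTP Range header values ("bytes=start-end",
// end inclusive), so each range could be fetched by a separate worker.
function planRangedGets(size, partSize) {
  if (!Number.isInteger(size) || size < 0) {
    throw new RangeError("size must be a non-negative integer");
  }
  if (!Number.isInteger(partSize) || partSize <= 0) {
    throw new RangeError("partSize must be a positive integer");
  }
  const ranges = [];
  for (let start = 0; start < size; start += partSize) {
    const end = Math.min(start + partSize, size) - 1; // inclusive end offset
    ranges.push(`bytes=${start}-${end}`);
  }
  return ranges;
}

// A 20 MiB object fetched in 8 MiB parts.
console.log(planRangedGets(20 * 1024 * 1024, 8 * 1024 * 1024));
```

For the 20 MiB example this yields three ranges: `bytes=0-8388607`, `bytes=8388608-16777215`, and `bytes=16777216-20971519`. Many such requests in flight at once, across many objects, is what produces the high-concurrency, high-throughput pattern the load balancer has to absorb.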
F5 Systems Enable Accelerated AI Application Delivery
- F5 VELOS, rSeries, and BIG-IP: Enable distributed, high-volume, high-concurrency, low-latency application delivery for S3.
- The All-New VELOS CX1610: Provides the multi-terabit throughput necessary for high-performance traffic orchestration.
- F5 BIG-IP App Services Suite: Simplifies and secures application delivery for the most demanding high-throughput AI infrastructure needs.

Conclusion
Unleash massive throughput with the All-New VELOS BX520 Blade and the All-New VELOS CX1610 Chassis.

Related Articles
- F5 VELOS: A Next-Generation Fully Automatable Platform
- F5 rSeries: Next-Generation Fully Automatable Hardware
- Realtime DoS mitigation with VELOS BX520 Blade
- DEMO: The Next Generation of F5 Hardware is Ready for you

F5 rSeries: Next-Generation Fully Automatable Hardware

What is rSeries?
F5 rSeries is a rearchitected, next-generation hardware platform that scales application delivery performance and automates application services to address many of today’s most critical business challenges. F5 rSeries is a key component of the F5 Application Delivery and Security Platform (ADSP). rSeries relies on a Kubernetes-based platform layer (F5OS) that is tightly integrated with F5 TMOS software. Moving to a microservice-based platform layer allows rSeries to provide functionality that was not possible in previous generations of F5 BIG-IP platforms. Customers do not need to learn Kubernetes but still get its benefits: the hardware is still managed via a familiar F5 CLI, webUI, or API. The added automation capabilities can greatly simplify the process of deploying F5 products, and the time and resources saved translate into more time for critical tasks.

Why is this important?
- Get more done in less time by using a highly automatable hardware platform that can deploy software solutions in seconds, not minutes or hours.
- Increased performance improves ROI: the rSeries platform is a high-performance, highly scalable appliance with improved processing power.
- Running multiple versions on the same platform allows more flexibility than was previously possible.
- Pay-as-you-Grow licensing options unlock more CPU resources.
Key rSeries Use-Cases
NetOps Automation
- Shorten time to market by automating network operations and offering cloud-like orchestration with full-stack programmability.
- Drive app development and delivery with self-service and faster response times.
Business Continuity
- Drive consistent policies across on-prem and public cloud, and across hardware- and software-based ADCs.
- Build resiliency with rSeries’ superior performance and failover capabilities.
- Future-proof investments by running multiple versions of apps side-by-side; migrate applications at your own pace.
Cloud Migration On-Ramp
- Accelerate cloud strategy by adopting cloud operating models and on-demand scalability with rSeries, and use that as an on-ramp to the cloud.
- Dramatically reduce TCO with rSeries systems; extend commercial models to migrate from hardware to software or as applications move to the cloud.

Automation Capabilities
Declarative APIs and integration with automation frameworks (Terraform, Ansible) greatly simplify operations and reduce overhead:
- AS3 (Application Services 3 Extension): A declarative API that simplifies the configuration of application services. With AS3, customers can deploy and manage configurations consistently across environments.
- Ansible Automation: Prebuilt Ansible modules for rSeries enable automated provisioning, configuration, and updates, reducing manual effort and minimizing errors.
- Terraform: Organizations leveraging Infrastructure as Code (IaC) can use Terraform to define and automate the deployment of rSeries appliances and associated configurations.
[Screenshots: example JSON file; running the Automation Playbook; example of the results]
More information on automation: Automating F5OS on rSeries; GitHub Automation Repository

Specialized Hardware Performance
rSeries offers more hardware-accelerated performance capabilities, with more FPGA chipsets that are more tightly integrated with TMOS. It also includes the latest Intel processing capabilities.
This enhances the following:
- SSL and compression offload
- L4 offload for higher performance and reduced load on software
- Hardware-accelerated SYN flood protection
- Hardware-based protection from more than 100 types of denial-of-service (DoS) attacks
- Support for F5 Intelligence Services

Migration Options (BIG-IP Journeys)
Use BIG-IP Journeys to easily migrate your existing configuration to rSeries. This covers the following:
- The entire L4-L7 configuration can be migrated
- Individual applications can be migrated
- BIG-IP tenant configuration can be migrated
- Automatically identify and resolve migration issues
- Convert UCS files into AS3 declarations if needed
- Post-deployment diagnostics and health
The Journeys Tool, available on DevCentral’s GitHub, facilitates the migration of legacy BIG-IP configurations to rSeries-compatible formats. Customers can convert UCS files, validate configurations, and highlight unsupported features during the migration process. Multi-tenancy capabilities in rSeries simplify the process of isolating workloads during and after migration. See the GitHub repository for F5 Journeys.

Conclusion
The F5 rSeries platform addresses the modern enterprise’s need for high-performance, scalable, and efficient application delivery and security solutions. By combining cutting-edge hardware capabilities with robust automation tools and flexible migration options, rSeries empowers organizations to seamlessly transition from legacy platforms while unlocking new levels of performance and operational agility. Whether driven by the need for increased throughput or advanced multi-tenancy, the rSeries platform stands as a future-ready solution for securing and optimizing application delivery in an increasingly complex IT landscape.

Related Content
- Cloud Docs rSeries Guide
- F5 rSeries Appliance Datasheet
- F5 VELOS: A Next-Generation Fully Automatable Platform
- DEMO: The Next Generation of F5 Hardware is Ready for you
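To illustrate the declarative AS3 approach listed under Automation Capabilities in this article, here is a minimal JavaScript sketch that builds an AS3 declaration modeled on the simple HTTP application pattern from the AS3 documentation: one virtual address and one pool with an HTTP monitor. The helper name `buildAs3Declaration`, the tenant and application names, and all IP addresses are placeholders; the `schemaVersion` your system accepts depends on the installed AS3 version.

```javascript
// Build a minimal AS3 declaration: one tenant, one HTTP application with a
// virtual address and a round-robin pool. All names and addresses are placeholders.
function buildAs3Declaration({ tenant, app, virtualAddress, poolMembers, servicePort = 80 }) {
  return {
    class: "AS3",
    action: "deploy",
    persist: true,
    declaration: {
      class: "ADC",
      schemaVersion: "3.0.0",
      [tenant]: {
        class: "Tenant",
        [app]: {
          class: "Application",
          template: "http",
          serviceMain: {
            class: "Service_HTTP",
            virtualAddresses: [virtualAddress],
            pool: "web_pool",
          },
          web_pool: {
            class: "Pool",
            monitors: ["http"],
            members: [{ servicePort, serverAddresses: poolMembers }],
          },
        },
      },
    },
  };
}

const body = JSON.stringify(
  buildAs3Declaration({
    tenant: "Example_Tenant",
    app: "Example_App",
    virtualAddress: "192.0.2.10",
    poolMembers: ["198.51.100.11", "198.51.100.12"],
  }),
  null,
  2
);
console.log(body);
```

The resulting JSON would be sent with an HTTP POST to the AS3 endpoint `/mgmt/shared/appsvcs/declare` on the BIG-IP tenant running on the rSeries appliance (AS3 must be installed there). F5OS itself, the platform layer, is configured through its own API rather than through AS3. Because the declaration describes the desired end state, re-posting the same body is how the configuration is kept consistent across environments.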

Realtime DoS mitigation with VELOS BX520 Blade

Introduction
F5 VELOS is a rearchitected, next-generation hardware platform that scales application delivery performance and automates application services to address many of today’s most critical business challenges. F5 VELOS is a key component of the F5 Application Delivery and Security Platform (ADSP).

DoS attacks are a fact of life
Detect and mitigate large-scale, volumetric network and application-targeted attacks in real time to defend your business and your customers against multi-vector denial-of-service (DoS) activity attempting to disrupt your business.
DoS impacts include:
- Loss of revenue
- Degradation of infrastructure
Indirect costs often include:
- Negative customer experience
- Damage to brand image
DoS attacks do not need to be massive to be effective.

F5 VELOS: Key Specifications
- Up to 6 Tbps total Layer 4-7 throughput
- 6.4 billion concurrent connections
- Higher density of resources per rack unit than any previous BIG-IP
- Flexible support for multi-tenancy and blade groupings
- API-first architecture / fully automatable
- Future-proof architecture built on Kubernetes
- Multi-terabit security: firewall and real-time DoS

Real-time DoS Mitigation with VELOS
Challenges:
- Massive-volume attacks are not required to negatively impact “Goodput”.
- Attacks are kept short in duration to evade out-of-band/sampling-based mitigation.
- BIG-IP inline DoS protection can react quickly and mitigate in real time.
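The demo in this article compares a 10 Gb/s, 10 million CPS flood against a 600 Gbps, 1.5 million CPS baseline. A quick calculation shows why such an attack is easy to miss on bandwidth graphs yet still hurts: it barely moves the traffic volume, but it multiplies the connection-setup rate. The JavaScript below only reproduces that arithmetic with the figures quoted in the demo; the `attackImpact` helper is hypothetical and says nothing about how BIG-IP AFM actually detects floods.

```javascript
// Compare an attack with a baseline on two axes: added bandwidth (percent of
// baseline throughput) and connection rate (attack CPS relative to baseline CPS).
function attackImpact(baseline, attack) {
  return {
    bandwidthIncreasePct: (attack.gbps / baseline.gbps) * 100,
    cpsMultiplier: attack.cps / baseline.cps,
  };
}

// Figures quoted in the demo.
const baseline = { gbps: 600, cps: 1.5e6 };
const attack = { gbps: 10, cps: 10e6 };

const impact = attackImpact(baseline, attack);
console.log(impact.bandwidthIncreasePct.toFixed(2) + "% more bandwidth"); // 1.67% more bandwidth
console.log(impact.cpsMultiplier.toFixed(2) + "x the baseline CPS");      // 6.67x the baseline CPS
```

The attack adds under 2% of traffic while opening roughly 6.7 times as many new connections per second as the entire legitimate baseline, which is why CPU and connection-table pressure, not link capacity, is what degrades “Goodput” in this scenario.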
Simulated DoS Attack
Baseline: 600 Gbps, 1.5 million connections per second (CPS), 120 million concurrent flows.
[Example dashboard without DoS attack]

Generated Attack
Flood an IP from many sources: 10 Gb/s with 10 million CPS. DoS attack launched (<2% increase in traffic).

Impact
- High CPU consumption: 10M+ new CPS
- High memory utilization, with concurrent flows increasing quickly
Result:
- Open connections much higher
- New connections increasing rapidly
- Higher CPU
- Application transaction failures

Enable Network Flood Mitigation
Mitigation applied: enabling the Flood vector on BIG-IP AFM Device DoS. Observe “Goodput” returning to normal within seconds as BIG-IP mitigates the attack.

Conclusion
Distributed denial-of-service (DDoS) attacks continue to grow across every metric, including the number and frequency of attacks, average peak bandwidth, and overall complexity. As these attacks keep growing in number and frequency, F5 provides organizations with multiple options for complete, layered protection against DDoS threats across layers 3–4 and 7. F5 enables organizations to maintain critical infrastructure and services, ensuring overall business continuity under this barrage of evolving and increasing DoS/DDoS threats attempting to disrupt or shut down their business.

Related Articles
- F5 VELOS: A Next-Generation Fully Automatable Platform
- F5 rSeries: Next-Generation Fully Automatable Hardware
- Accelerate your AI initiatives using F5 VELOS
- DEMO: The Next Generation of F5 Hardware is Ready for you

F5 VELOS: A Next-Generation Fully Automatable Platform

What is VELOS?
The F5 VELOS platform is the next generation of F5’s chassis-based systems. VELOS can bridge traditional and modern application architectures by supporting a mix of traditional F5 BIG-IP tenants as well as, in the future, next-generation BIG-IP Next tenants. F5 VELOS is a key component of the F5 Application Delivery and Security Platform (ADSP). VELOS relies on a Kubernetes-based platform layer (F5OS) that is tightly integrated with F5 TMOS software. Moving to a microservice-based platform layer allows VELOS to provide functionality that was not possible in previous generations of F5 BIG-IP platforms. Customers do not need to learn Kubernetes but still get its benefits: the chassis is still managed via a familiar F5 CLI, webUI, or API. The added automation capabilities can greatly simplify the process of deploying F5 products, and the time and resources saved translate into more time for critical tasks.

Why is VELOS important?
- Get more done in less time by using a highly automatable hardware platform that can deploy software solutions in seconds, not minutes or hours.
- Increased performance improves ROI: the VELOS platform is a high-performance, highly scalable chassis with improved processing power.
- Running multiple versions on the same platform allows more flexibility than was previously possible.
- Significantly reduce TCO compared with previous-generation hardware by consolidating multiple platforms into one.
Key VELOS Use-Cases
NetOps Automation
- Shorten time to market by automating network operations and offering cloud-like orchestration with full-stack programmability.
- Drive app development and delivery with self-service and faster response times.
Business Continuity
- Drive consistent policies across on-prem and public cloud, and across hardware- and software-based ADCs.
- Build resiliency with VELOS’ superior platform redundancy and failover capabilities.
- Future-proof investments by running multiple versions of apps side-by-side; migrate applications at your own pace.
Cloud Migration On-Ramp
- Accelerate cloud strategy by adopting cloud operating models and on-demand scalability with VELOS, and use that as an on-ramp to the cloud.
- Dramatically reduce TCO with VELOS systems; extend commercial models to migrate from hardware to software or as applications move to the cloud.

Automation Capabilities
Declarative APIs and integration with automation frameworks (Terraform, Ansible) greatly simplify operations and reduce overhead:
- AS3 (Application Services 3 Extension): A declarative API that simplifies the configuration of application services. With AS3, customers can deploy and manage configurations consistently across environments.
- Ansible Automation: Prebuilt Ansible modules for VELOS enable automated provisioning, configuration, and updates, reducing manual effort and minimizing errors.
- Terraform: Organizations leveraging Infrastructure as Code (IaC) can use Terraform to define and automate the deployment of VELOS systems and associated configurations.
[Screenshots: example JSON file; running the Automation Playbook; example of the results]
More information on automation: Automating F5OS on VELOS; GitHub Automation Repository

Specialized Hardware Performance
VELOS offers more hardware-accelerated performance capabilities, with more FPGA chipsets that are more tightly integrated with TMOS. It also includes the latest Intel processing capabilities.
This enhances the following:
- SSL and compression offload
- L4 offload for higher performance and reduced load on software
- Hardware-accelerated SYN flood protection
- Hardware-based protection from more than 100 types of denial-of-service (DoS) attacks
- Support for F5 Intelligence Services
[Images: VELOS CX1610 chassis; VELOS BX520 blade]

Migration Options (BIG-IP Journeys)
Use BIG-IP Journeys to easily migrate your existing configuration to VELOS. This covers the following:
- The entire L4-L7 configuration can be migrated
- Individual applications can be migrated
- BIG-IP tenant configuration can be migrated
- Automatically identify and resolve migration issues
- Convert UCS files into AS3 declarations if needed
- Post-deployment diagnostics and health
The Journeys Tool, available on DevCentral’s GitHub, facilitates the migration of legacy BIG-IP configurations to VELOS-compatible formats. Customers can convert UCS files, validate configurations, and highlight unsupported features during the migration process. Multi-tenancy capabilities in VELOS simplify the process of isolating workloads during and after migration. See the GitHub repository for F5 Journeys.

Conclusion
The F5 VELOS platform addresses the modern enterprise’s need for high-performance, scalable, and efficient application delivery and security solutions. By combining cutting-edge hardware capabilities with robust automation tools and flexible migration options, VELOS empowers organizations to seamlessly transition from legacy platforms while unlocking new levels of performance and operational agility. Whether driven by the need for increased throughput or advanced multi-tenancy, the VELOS platform stands as a future-ready solution for securing and optimizing application delivery in an increasingly complex IT landscape.

Related Content
- Cloud Docs VELOS Guide
- F5 VELOS Chassis System Datasheet
- DEMO: The Next Generation of F5 Hardware is Ready for you

Questions on R-Series 5800s

Hello, I'm trying to bring up a new 5800 and have a few questions.

1. What is the recommendation for port-channels? Can we create a port-channel across two different pipelines? For example, ports 3.0, 4.0, 5.0, and 6.0 are in pipeline 1, and ports 7.0, 8.0, 9.0, and 10.0 are in pipeline 2. Can we create a LAG with 3.0 and 7.0? We also plan to use a 10G link for HA, and were planning to convert 6.0 to 10G and connect it between the two rSeries units.
2. When deploying a tenant, should we just leave Provisioning at "Recommended" instead of "Advanced" and have 18 vCPUs if we plan on having just one tenant? We're also not sure how much virtual disk space is needed. Any recommendation for Virtual Disk Size?
3. If we want to add another tenant, is it best to leave the first tenant at 14 vCPUs, or can we change it later? What is the impact: just a restart of the existing tenant?

Questions on Migrating configs from iSeries to RSeries F5s

When we migrate between different platforms, I plan on following the procedure below, but I have a few questions.

From the iSeries platform (iF5-A (Active) and iF5-B (Standby)), we need to take backups, move them to the /shared folder, and edit the UCS file (bigip_base.conf for LTM and bigip_gtm.conf for GTM) by modifying the management IPs, adding new VLANs (as I want a new subnet for VIPs), creating new self IPs for all the node subnets, and changing the existing domain to a new domain (e.g. ab.xyz.com to dc.xyz.com). The modified files are iF5-A.ucs and iF5-B.ucs.

1. Questions on editing the UCS:
- We need to remove the trunk config from the new UCS file (K50152613). Do we remove the full config, including the config where the trunk has all the VLANs added, along with deleting the trunk interface? Do we then have to add the VLANs and trunks manually through F5OS?
- How can we edit iApps, as they are no longer supported?

On the new rSeries F5s (rF5-A (Active) and rF5-B (Standby)), bring them up and configure them for HA. Then copy the configuration using platform-migrate:

    # on rF5-A
    tmsh load sys ucs /tmp/iF5-A.ucs platform-migrate
    # on rF5-B
    tmsh load sys ucs /tmp/iF5-B.ucs platform-migrate

I see in the KB article that the non-compatible config is saved in /var/local/ucs/platform_migrate_ignored_objects.

2. Based on K82540512, it looks like interfaces, trunks, and VLANs are not copied? Does it copy the VIPs, nodes, and pool members without the VLANs? And should we do this only after manually creating the trunks and VLANs on F5OS?
3. Why do we need to run "modify sys crypto master-key prompt-for-password"? https://my.f5.com/manage/s/article/K82540512

If anyone has done this successfully, please let me know how you did it and what issues you saw. Thank you

Create Domino LTPA token on F5 problem

Hi,

I'm trying to use the code at http://per.lausten.dk/blog/2009/06/how-to-create-a-ltpa-session-cookie-for-lotus-domino-using-f5.html to create a Domino LTPA token, but the Domino server reports the following error:

    Token does not lead with 0 [Single Sign-On token is invalid]

The token should begin with the version number 0123, e.g. from the code:

    set ltpa_version "\x00\x01\x02\x03"

However, after decoding the token and viewing it in a hex editor, the version number shows as:

    C0 80 01 02 03 .......

Can somebody explain why the \x00 is being changed to C0 80? I've experimented with putting other values in the first position, e.g. \x01\x01\x02\x03, and the hex readout looks correct (01 01 02 03). It only fails when I use \x00 in the first position.

Thanks for any suggestions.
Jeff
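One plausible explanation, offered as an assumption and not verified against the linked blog code: Tcl 8.x stores strings internally in a "modified UTF-8" in which the NUL character (U+0000) is encoded as the two-byte sequence C0 80 rather than 00, while characters such as \x01 are encoded as a single unchanged byte. If some step in the chain reads the token's string representation instead of its byte-array representation, those internal bytes leak into the output, which matches the C0 80 prefix described above. The JavaScript sketch below reproduces the two encodings side by side; it does not reproduce the iRule itself, and the helper names are hypothetical.

```javascript
// Raw byte encoding: each character (code point 0-255) becomes one byte.
// This is what an LTPA token's version prefix needs.
function rawBytes(str) {
  return Buffer.from(
    Array.from(str, (ch) => {
      const cp = ch.codePointAt(0);
      if (cp > 0xff) throw new RangeError("not a byte string");
      return cp;
    })
  );
}

// "Modified UTF-8" as used internally by Tcl 8.x (and Java): standard UTF-8,
// except that U+0000 is written as C0 80. BMP characters only in this sketch.
function modifiedUtf8(str) {
  const parts = [];
  for (const ch of str) {
    const cp = ch.codePointAt(0);
    if (cp > 0xffff) throw new RangeError("BMP only in this sketch");
    parts.push(cp === 0 ? Buffer.from([0xc0, 0x80]) : Buffer.from(ch, "utf8"));
  }
  return Buffer.concat(parts);
}

// Format a Buffer as space-separated uppercase hex, like a hex editor.
const hex = (buf) => (buf.toString("hex").toUpperCase().match(/../g) || []).join(" ");

const version = "\x00\x01\x02\x03";
console.log(hex(rawBytes(version)));     // 00 01 02 03
console.log(hex(modifiedUtf8(version))); // C0 80 01 02 03
```

With "\x01\x01\x02\x03" both functions produce 01 01 02 03, which is consistent with only the leading \x00 being affected. If this is the cause, a direction worth testing is to keep the token as a Tcl byte array throughout (for example, building it with `binary format`) rather than passing it through string operations before it is base64-encoded; treat that as a starting point, not a confirmed fix.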