Key Steps to Securely Scale and Optimize Production-Ready AI for Banking and Financial Services

Banking and financial services are at an AI inflection point: models are moving from experiments into production, but tooling, infrastructure, and operations often lag behind. Ensuring the right AI workflow solutions are deployed matters because they can deliver competitive advantage, reduce costs through efficient compute and orchestration, and strengthen security, governance, and compliance.

This article outlines three key actions banks and financial firms can take to securely scale, connect, and optimize their AI workflows. Each action is illustrated through a scenario in which a bank takes a new AI application to production.

Scenario Details:

- A global bank is bringing a new generative artificial intelligence (GenAI) powered application to production.

- The GenAI powered application, which we’ll call the AI chat concierge, is designed to augment and improve call center agent interactions with account holders through faster, smarter and more personalized conversations.

- The bank is looking to improve the efficiency, performance, governance and security of its AI infrastructure supporting its new AI chat concierge application.

- The bank’s CISO will not approve deploying a GenAI powered application to production without more sophisticated continuous testing in place to address data leakage in the AI infrastructure.

- Deploying the AI chat concierge in a production environment requires full visibility for auditors and performance analytics such as time to first token.
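To make the time to first token requirement concrete, here is a minimal sketch of how that metric could be measured against any streaming response. The function name `measure_ttft` and the injectable `clock` parameter are illustrative assumptions, not part of any F5 product or model API.

```python
import time
from typing import Callable, Iterable, Tuple


def measure_ttft(
    stream: Iterable[str],
    clock: Callable[[], float] = time.perf_counter,
) -> Tuple[float, float, int]:
    """Consume a token stream and return (time_to_first_token, total_time, token_count).

    Times are in the units of `clock` (seconds for time.perf_counter).
    The timer starts when this function is called, so call it right
    before (or while) the request is issued.
    """
    start = clock()
    first_token_at = None
    count = 0
    for _token in stream:
        if first_token_at is None:
            # Record the latency of the first token only.
            first_token_at = clock() - start
        count += 1
    total = clock() - start
    if first_token_at is None:
        raise ValueError("stream produced no tokens")
    return first_token_at, total, count
```

In practice the `stream` argument would be the token iterator returned by whatever streaming client the application uses, and the resulting numbers would be exported to the bank's observability stack for auditors and performance teams.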

Here is a real-world example of a similar AI application for reference: AI @ Morgan Stanley Assistant.

1. Key Action: Keeping AI infrastructure optimized and AI/ML models on track with high-performance data flows in hybrid and multicloud environments

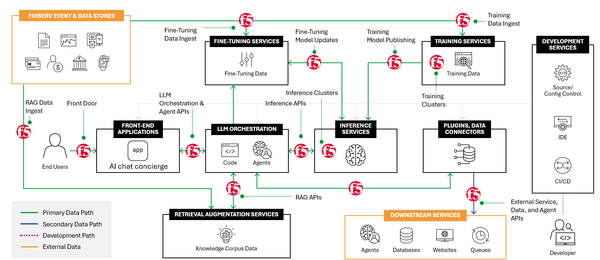

The AI chat concierge depends on the use of S3 and S3‑compatible stores to move large volumes of call transcripts, interaction logs, audio artifacts, and transaction context needed for training and fine‑tuning (see top left corner of the diagram below). Any disruption or latency in those S3 pipelines delays training cycles, reduces model freshness, and undermines production‑grade outputs for the agent assistant.

The AI chat concierge also relies on retrieval-augmented workflows (see bottom of diagram below). In addition, live agent support can create bursty, unpredictable traffic (call spikes during product launches, rate moves, or security incidents) that can overwhelm legacy pipelines and degrade responsiveness.

Source: https://www.f5.com/resources/reference-architectures/ai-overview#architecture


How F5 BIG‑IP helps:

To ensure AI infrastructure is optimized and performant, the following use cases can be achieved with BIG-IP:

- Ensure Resilient AI Data and S3 Delivery Pipelines with F5 BIG-IP (watch demo)

- Enforce Policy-Driven AI Data Delivery with F5 BIG-IP (watch demo)

- Optimize AI data ingestion and S3 cluster reliability with F5 BIG-IP (watch demo)

- Gain deep observability into data flows (learn more)

Outcome: Using BIG‑IP for these use cases preserves S3 delivery and front‑end transport, keeping training/fine‑tuning on schedule and ensuring predictable, secure agent interactions during surge events at the bank’s call center.

2. Key Action: Improving security and governance around AI chat concierge interactions

When the bank’s call center agents interact with the AI chat concierge application (see left side of diagram below), the bank’s CISO requires that these interactions be secure and governed. For example, an agent might include an account holder’s sensitive information in a prompt (see middle of diagram) or receive an inappropriate response to relay to the account holder. The architecture needs a solution that enforces organizational and access policies for proprietary and sensitive data flows between agents and AI. An example of such a solution is F5 AI Guardrails (see top of the diagram below).

How F5 AI Guardrails helps:

F5 AI Guardrails helps define and observe how AI models and agents interact with users and data, and defend against attackers. As you can see in the diagram below, every prompt and response is scanned by F5 AI Guardrails and can be automatically blocked and/or redacted when appropriate. By obstructing potentially harmful AI model outputs, F5 AI Guardrails helps keep agent and account-holder interactions productive.
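The scan, then block or redact flow described above can be illustrated with a small sketch. This is not the F5 AI Guardrails API or its detection logic. The regular expressions, the `guard` function, and the `Verdict` type are hypothetical stand-ins, and a real deployment would rely on vetted detectors rather than three simple patterns.

```python
import re
from typing import FrozenSet, List, NamedTuple

# Hypothetical, deliberately simple detectors for illustration only.
PATTERNS = {
    "CARD": re.compile(r"\b\d{4}[ -]?\d{4}[ -]?\d{4}[ -]?\d{4}\b"),
    "SSN": re.compile(r"\b\d{3}-\d{2}-\d{4}\b"),
    "EMAIL": re.compile(r"\b[\w.+-]+@[\w-]+\.[\w.-]+\b"),
}


class Verdict(NamedTuple):
    action: str          # "allowed", "redacted", or "blocked"
    text: str            # text that may be forwarded (empty when blocked)
    findings: List[str]  # labels of the detectors that fired


def guard(text: str, block_on: FrozenSet[str] = frozenset({"CARD"})) -> Verdict:
    """Scan a prompt or response; redact sensitive spans, or block outright."""
    findings: List[str] = []
    redacted = text
    for label, pattern in PATTERNS.items():
        redacted, hits = pattern.subn(f"[{label} REDACTED]", redacted)
        if hits:
            findings.append(label)
    if block_on & set(findings):
        # Policy assumption: some data classes must never reach the model.
        return Verdict("blocked", "", findings)
    return Verdict("redacted" if findings else "allowed", redacted, findings)
```

For example, `guard("Customer jane.doe@example.com asked about fees")` returns a `redacted` verdict with the email address masked, while a prompt containing a 16-digit card number is blocked under the assumed policy. The same function can be applied to model responses before they reach the agent.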

For the bank’s regulatory requirements, F5 AI Guardrails can provide scalable AI compliance and risk assessment. During an audit, auditors can review the solution’s observability, scanning, and logging tools to verify the institution’s compliance, with ready-made presets for GDPR and more. Additionally, auditors will appreciate F5 AI Red Team as much as the bank’s CISO does: it gives institutions a vast and continuously updated threat library to test AI application vulnerabilities via a swarm of sophisticated agents simulating adversarial attacks.

3. Key Action: Streamlining agentic AI with integrated Model Context Protocol (MCP) support

The agentic AI data requirements of the bank’s AI chat concierge application, such as pulling real-time market data, risk modeling, interest rates, or other contextual signals, call for reliable, low-latency access to many backend services and data sources. At this bank, as at many others in a similar situation, organic growth of the tooling led to a patchwork of custom connectors and bespoke wiring for each model and data feed, creating operational inefficiencies, fragile integrations, and security gaps when agentic behaviors initiate external calls.

A better approach is to standardize how models and agentic applications exchange context and requests with backend systems using the Model Context Protocol (MCP) and a robust traffic management layer (see bottom half of diagram below). This standardization reduces bespoke integration work, centralizes control, and enforces consistent transport, security, and observability for agent-initiated communications.

How F5 BIG‑IP helps:

- BIG-IP LTM with Model Context Protocol enhances MCP-based systems by reliably routing traffic between AI-driven applications and backend services

- Route traffic between AI-driven applications & backend services

- Central hub for distributing & load-balancing JSON-RPC requests across MCP endpoints

- Simplifies communication with standard transport protocols

- Secure, structured communication between AI models & external services, with natural language commands
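The idea of a central hub that distributes JSON-RPC requests across MCP endpoints can be sketched in a few lines. This is a conceptual illustration only, not BIG-IP LTM configuration or iRules code. The `McpRouter` class, the endpoint names, and the `get_interest_rate` tool are hypothetical; the message shape follows JSON-RPC 2.0, which MCP uses for its messages.

```python
import json
from typing import List, Set, Tuple


class McpRouter:
    """Round-robin distribution of JSON-RPC 2.0 requests over healthy endpoints."""

    def __init__(self, endpoints: List[str]) -> None:
        if not endpoints:
            raise ValueError("at least one endpoint is required")
        self._endpoints = list(endpoints)
        self._healthy: Set[str] = set(endpoints)
        self._cursor = 0

    def mark_down(self, endpoint: str) -> None:
        self._healthy.discard(endpoint)

    def mark_up(self, endpoint: str) -> None:
        if endpoint in self._endpoints:
            self._healthy.add(endpoint)

    def pick(self) -> str:
        # Walk the ring once, skipping endpoints that are marked down.
        for _ in range(len(self._endpoints)):
            endpoint = self._endpoints[self._cursor]
            self._cursor = (self._cursor + 1) % len(self._endpoints)
            if endpoint in self._healthy:
                return endpoint
        raise RuntimeError("no healthy MCP endpoints")

    def route(self, raw: str) -> Tuple[str, dict]:
        """Validate the envelope and choose an endpoint; returns (endpoint, message)."""
        message = json.loads(raw)
        if (
            not isinstance(message, dict)
            or message.get("jsonrpc") != "2.0"
            or "method" not in message
        ):
            raise ValueError("not a JSON-RPC 2.0 request")
        return self.pick(), message


# Hypothetical tool call an agent might issue through the hub.
request = json.dumps({
    "jsonrpc": "2.0",
    "id": 1,
    "method": "tools/call",
    "params": {"name": "get_interest_rate", "arguments": {"product": "savings"}},
})
```

A production traffic layer would add health monitoring, TLS, session persistence, and policy enforcement on top of this basic distribution step. The point of the sketch is only that one standard envelope lets a single control point route every agent-initiated call.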

Learn about Managing Model Context Protocol in iRules here.

Banks are at an inflection point: moving AI into production unlocks value only when tooling, connectivity, and controls keep pace. The three practical actions here (keeping AI infrastructure and data flows optimized, enforcing security and governance for AI chat interactions, and standardizing agentic integrations with MCP and a traffic hub) deliver better performance, simpler integrations, and clearer auditability.

What AI challenges is your bank or financial services organization facing? Comment below.
