Decrypting TLS traffic on BIG-IP

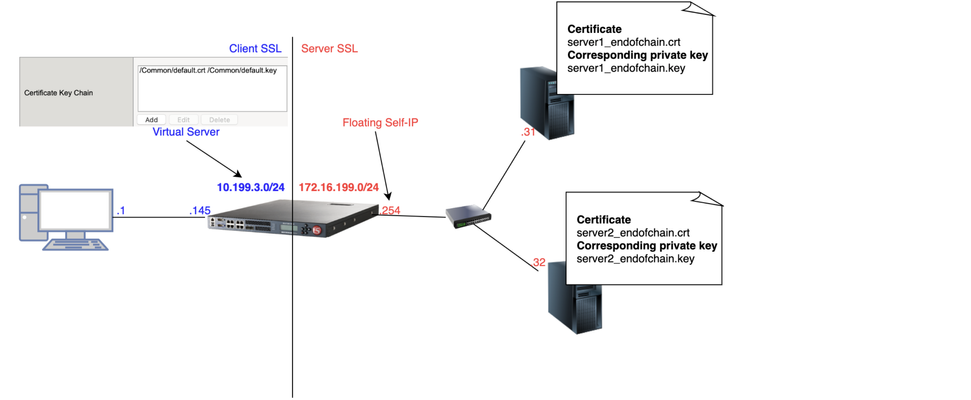

1 Introduction

As soon as I joined F5 Support, over 5 years ago, one of the first things I had to learn quickly was how to decrypt TLS traffic, because most of our customers use L7 applications protected by a TLS layer.

In this article, I will show 4 ways to decrypt traffic on BIG-IP, including the new one released in v15.x that is ideal for TLS 1.3, where the TLS handshake is also encrypted. If that's what you want to know, just skip to the tcpdump --f5 ssl option section, as this new approach is just a parameter added to tcpdump.

As this article is very hands-on, I will show my lab topology for the tests I performed and then every possible way I used to decrypt customers' traffic while working for Engineering Services at F5.

2 Lab Topology

This is the lab topology where all tests were performed:

Also, for every capture I issued the following curl command:

Update: the virtual server's IP address is actually 10.199.3.145/32

3 The 4 ways to decrypt BIG-IP's traffic

RSA private key decryption

There are 3 constraints here:

- The full TLS handshake has to be captured. Check Appendix 2 to learn how to disable BIG-IP's cache.
- RSA key exchange has to be used, i.e. no (EC)DHE. Check Appendix 1 to understand how to check which key exchange method is used in your TLS connection, and Appendix 2 to understand how to prioritise RSA as the key exchange method.
- The private key has to be copied to the Wireshark machine (the ssldump command solves this problem).

Roughly, to accomplish that we can set Cache Size to 0 on the SSL profile and remove (EC)DHE from Cipher Suites (see Appendix 2 for details).

I first took a packet capture using the :p modifier to capture only the client and server flows specific to my client's IP address (10.199.3.1):

Note: The 0.0 interface captures any forwarding plane traffic (tmm), and nnn is the highest noise level, which captures as much flow information as possible to be displayed in the F5 dissector header. For more details about tcpdump syntax, please have a look at K13637: Capturing internal TMM information with tcpdump and K411: Overview of packet tracing with the tcpdump utility.

Also, we need to make sure we capture the full TLS handshake. It's perfectly fine to capture resumed TLS sessions as long as the full TLS handshake has been previously captured.

Initially, our capture is still encrypted as seen below:

On Mac, I clicked on Wireshark → Preferences:

Then Protocols → TLS → RSA keys list, where we see a window in which we can reference BIG-IP's private key (or the server's, if we want to decrypt the server SSL side):

Once we get there, we need to add any IP address of the flow we want Wireshark to decrypt, the corresponding port and the private key file (default.key for Client SSL profile in this particular lab test):

Note: For Client SSL profile, this would be the private key on the Certificate Chain field corresponding to the end-entity certificate served to client machines through the virtual server. For Server SSL profile, the private key is located on the back-end server, and the key would be the one corresponding to the end-entity certificate sent in the TLS Certificate message from the back-end server to BIG-IP during the TLS handshake.
Once we click OK, we can see the HTTP decrypted traffic (in green):

In a production environment, we would normally avoid copying private keys to different machines, so another option is to use the ssldump command directly on the server we're trying to capture. Again, if we're capturing Client SSL traffic, ssldump is already installed on BIG-IP. We would follow the same steps as before but, instead of copying the private key to the Wireshark machine, we would simply issue this command on the BIG-IP (or on the back-end server if it's Server SSL traffic):

Syntax: ssldump -r <capture.pcap> -k <private key.key> -M <type a name for your ssldump file here.pms>. For more details, please have a look at K10209: Overview of packet tracing with the ssldump utility.

In the ssldump-generated.pms file, we should find enough information for Wireshark to decrypt the capture:

After I clicked OK, we indeed see the decrypted HTTP traffic back again:

We didn't have to copy BIG-IP's private key to the Wireshark machine here.

iRules

The only constraint here is that we should apply the iRule to the virtual server in question. Sometimes that's not desirable, especially when we're troubleshooting an issue where we want the configuration to be unchanged.

Note: there is a bug that affects versions 11.6.x and 12.x and was fixed in 13.x. It records the wrong TLS Session ID to LTM logs. The workaround would be to manually copy the Session ID from the tcpdump capture or to use RSA decryption as in the previous example.
You can also combine both SSL::clientrandom and SSL::sessionid, which is the ideal:

Reference: K12783074: Decrypting SSL traffic using the SSL::sessionsecret iRules command (12.x and later)

Again, I took a capture using the tcpdump command:

After applying the above iRule to our HTTPS virtual server and taking the tcpdump capture, I see this in /var/log/ltm:

To copy this to a *.pms file we can use in Wireshark, we can use the sed command (reference: K12783074):

Note: If you don't want to completely overwrite the PMS file, make sure you use >> instead of >.

The end result would be something like this:

As both resumed and full TLS sessions have a client random value, I only had to copy CLIENT_RANDOM + Master secret to our PMS file, because all Wireshark needs is a session reference to apply the master secret.

To decrypt the capture in Wireshark, just go to Wireshark → Preferences → Protocols → TLS → (Pre)-Master-Secret log filename like we did in the ssldump section and add the file we just created:

As seen in the LTM logs, the CLIENTSSL_HANDSHAKE event captured the master secret from our client-side connection and SERVERSSL_HANDSHAKE from the server side. In this case, we should have both client and server sides decrypted, even though we never had access to the back-end server:

Notice I added an http filter to show you that both client and server traffic were decrypted this time.

tcpdump --f5 ssl option

This was introduced in 15.x and we don't need to change the virtual server configuration by adding iRules. The only thing we need to do is enable the tcpdump.sslprovider db variable, which is disabled by default:

After that, when we take a tcpdump capture, we just need to add --f5 ssl to the command like this:

Notice that we've got a warning message because the Master Secret will be copied to the tcpdump capture itself, so we need to be careful about who we share such a capture with.
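The PMS file from the iRules section, which the following steps also build by hand, is a plain text key log with one `CLIENT_RANDOM <client random> <master secret>` entry per session. Here is a minimal Python sketch of collecting those entries from log text. It assumes each log line of interest already contains that exact `CLIENT_RANDOM` triple (64 hex characters of client random, 96 of master secret); the function names and the sample log prefix are hypothetical, and this is not the sed command from K12783074.

```python
import re

# Assumption: the log line embeds "CLIENT_RANDOM <64 hex> <96 hex>" somewhere
# after the syslog prefix. Adjust the pattern to what your iRule actually logs.
KEYLOG_RE = re.compile(r"CLIENT_RANDOM\s+([0-9A-Fa-f]{64})\s+([0-9A-Fa-f]{96})")


def extract_keylog_lines(log_text):
    """Return unique key log entries, in the order they first appear."""
    seen = set()
    entries = []
    for match in KEYLOG_RE.finditer(log_text):
        entry = f"CLIENT_RANDOM {match.group(1).lower()} {match.group(2).lower()}"
        if entry not in seen:
            seen.add(entry)
            entries.append(entry)
    return entries


def append_keylog(log_text, pms_path):
    """Append entries to the PMS file (the equivalent of '>>' rather than '>')."""
    entries = extract_keylog_lines(log_text)
    with open(pms_path, "a", encoding="ascii") as handle:
        for entry in entries:
            handle.write(entry + "\n")
    return len(entries)
```

Calling `append_keylog(open("/var/log/ltm").read(), "session.pms")` would then produce a file that can be referenced in Wireshark's TLS preferences; both paths are only examples.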
I had to update my Wireshark to v3.2+ and clicked on Analyze → Enabled Protocols:

And enabled the F5 TLS dissector:

Once we open the capture, we can find all the information we need to create our PMS file embedded in the capture:

Very cool, isn't it?

We can then copy the Master Secret and Client Random values by right-clicking like this:

And then paste them into a blank PMS file. I first pasted the Client Random value followed by the Master Secret value like this:

Note: I manually typed CLIENT_RANDOM and then pasted both values for both client and server sides directly from the tcpdump capture.

The last step was to go to Wireshark → Preferences → Protocols → TLS, add the file to (Pre)-Master-Secret log filename and click OK:

Fair enough! Capture decrypted on both client and server sides:

I used the http filter to display only decrypted HTTP packets, just like in the iRules section.

Appendix 1 How do we know which Key Exchange method is being used?

The RSA private key can only decrypt traffic in Wireshark if RSA is the key exchange method negotiated during the TLS handshake. The client side tells the server side which ciphers it supports, and the server side replies with the chosen cipher in the Server Hello message. With that in mind, in Wireshark, we'd click on the Server Hello header and look under Cipher Suite:

Key Exchange and Authentication both come before the WITH keyword. In the above example, because there's only RSA, we can say that RSA is used for both Key Exchange and Authentication. In the following example, ECDHE is used for key exchange and RSA for authentication:

Appendix 2 Disabling Session Resumption and Prioritising RSA key exchange

We can set Cache Size to 0 to disable TLS session resumption and change the Cipher Suites to anything that makes BIG-IP pick RSA for Key Exchange:

Client SSL Authentication on BIG-IP as in-depth as it can go

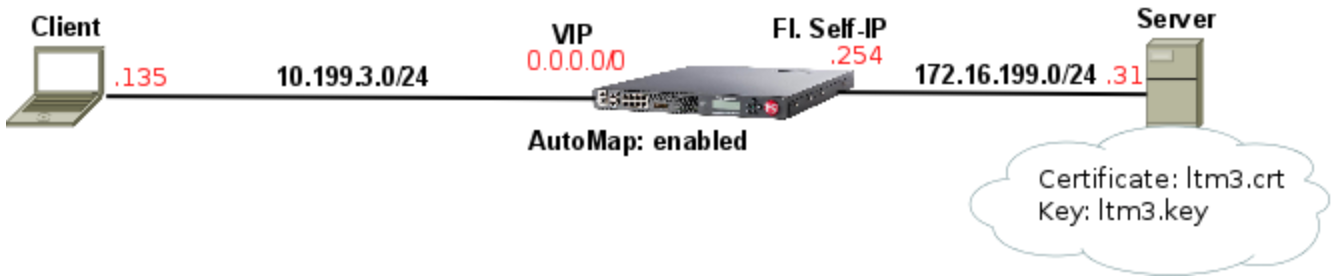

Quick Intro

In this article, I'm going to explain how SSL client certificate authentication works on BIG-IP and what actually happens during client authentication, as in-depth as I can, showing the TLS headers in Wireshark. This article is about the client side of BIG-IP (Client SSL profile) authenticating a client connecting to BIG-IP.

The Topology

For reference so we can follow the Wireshark output:

How to Configure Client Certificate Authentication on Client SSL profile

Essentially, what we're doing here is making BIG-IP verify the client's credentials before allowing the TLS handshake to proceed. Such credentials are in the form of a client certificate. The way to do this is to configure BIG-IP by:

- Adding a CA file to Trusted Certificate Authorities (ca-file in tmsh) to validate the client certificate
- Optionally adding the same CA that signed the client certificate to Advertised Certificate Authorities
- Enforcing client certificate validation by setting the Client Certificate option on BIG-IP to require
- Optionally setting the Frequency of such checks if we don't want to stick to the defaults

I'll go through each option now.

Adding CA file to Trusted Certificate Authorities

We should add to Trusted Certificate Authorities a single certificate file (*.crt) with one CA, or a concatenated file with 2 or more CAs, for the purpose of validating the client certificate, i.e. confirming the client's identity. Upon receiving the client certificate, BIG-IP goes through this list of CAs to confirm the client's identity. It also has another purpose, which is to authenticate BIG-IP to the client, but that's out of the scope of this article.
Optionally add CA file to Advertised Certificate Authorities

Trusted Certificate Authorities explicitly tells BIG-IP the CA or chain of CAs it will use to validate the client certificate, whereas Advertised Certificate Authorities tells the client in advance which CAs BIG-IP trusts, so that the client can decide which certificate to send to BIG-IP:

Why would we use Advertised Certificate Authorities? Let's imagine a situation where the client's application has more than one client certificate configured. How is it going to figure out which certificate to send to BIG-IP? That's where Advertised Certificate Authorities comes to the rescue! When we add our CA bundle to Advertised Certificate Authorities, we're telling BIG-IP to add it to a header field named Distinguished Names within the Certificate Request message. I dedicated the Appendix section to showing in more depth how changing Advertised Certificate Authorities affects the Certificate Request header.

Configuring BIG-IP to enforce Client Certificate validation

To enable client certificate authentication on BIG-IP, we change Local Traffic ›› Profiles : SSL : Client ›› Client Certificate to request or require:

The default is ignore, where client certificate authentication is disabled. If we truly want to enable client certificate validation, we need to select require. The reason is that request makes BIG-IP request a client certificate from the client, but BIG-IP does not validate the certificate it receives in this mode. The following subsections explain each option.

Ignore

Client certificate authentication is disabled (the default). BIG-IP never sends a Certificate Request to the client, and therefore the client does not need to send its certificate to BIG-IP.
In this case, the TLS handshake proceeds successfully without any client authentication:

pcap: ssl-sample-peer-cert-mode-ignore.pcap
Wireshark filter used: frame.number == 5 or frame.number == 6

Request

BIG-IP requests the client certificate by sending a Certificate Request message but does not check whether the client certificate is valid, which is not really client authentication, is it? This means that ca-file (Trusted Certificate Authorities in the GUI) will not be used to validate the client certificate, and BIG-IP will consider any certificate sent to it to be valid. For example, in ssl-sample-peer-cert-mode-request-with-no-client-cert-sent.pcap we now see that BIG-IP sends a Certificate Request message and the client responds with a Certificate message this time:

Because I didn't add any client certificate to my browser, it sent a blank certificate to BIG-IP. Again, BIG-IP did not perform any validation whatsoever, so the TLS handshake proceeded successfully. We can then conclude that this setting only makes BIG-IP request the client certificate and that's it.

Require

It behaves just like Request, but BIG-IP also performs client certificate validation, i.e. BIG-IP uses the CA we added to ca-file (Trusted Certificate Authorities in the GUI) to confirm whether the client certificate is valid. This means that if we don't add a CA to Trusted Certificate Authorities (ca-file in tmsh), validation will fail.

Setting the Frequency of Client Certificate Requests

This setting specifies how often BIG-IP authenticates the client by enabling or disabling TLS session resumption. It has only 2 options:

once

BIG-IP requests the client certificate during the first handshake and no longer re-authenticates the client as long as the TLS session is reused and valid. The way BIG-IP does it is by using Session Resumption/Reuse.
During the first TLS handshake from the client, BIG-IP sends a Session ID to the client within the Server Hello header. In subsequent TLS connections, assuming the session ID is still in BIG-IP's cache and the client sends it back to BIG-IP, the session is resumed every time the client tries to establish a TLS session (respecting the cache timeout). The first time, the client sends a Client Hello with a blank session ID, as its cache is empty, and it is then assigned a Session ID by BIG-IP (409f...):

pcap: ssl-sample-clientcert-auth-once-enabled.pcap
Wireshark filter used: !ip.addr == 172.16.199.254 and frame.number > 1 and frame.number < 7

Certificate Request confirms BIG-IP is trying to authenticate the client. Notice the Session ID BIG-IP sent to the client is 409f7... The client then goes through another TLS handshake and sends the above session ID in its Client Hello (packet #70 below). BIG-IP confirms the session is being resumed by sending the same session ID in the Server Hello back to the client:

Wireshark filter used: !ip.addr == 172.16.199.254 and frame.number > 66 and frame.number < 73

This resumed TLS handshake just means we do not go through the full handshake and no longer need to exchange keys, select ciphers or re-authenticate the client, as we're reusing those already negotiated in the full TLS handshake where we first received Session ID 409f...

always

BIG-IP requests the client certificate, i.e. re-authenticates the client, at every handshake. On BIG-IP, this is accomplished by disabling session reuse, which makes BIG-IP not send a Session ID back to the client in the beginning, forcing a full TLS handshake every time.
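The difference between once and always can be sketched as a toy model of a session cache. This is only an illustration of the behaviour described above, not BIG-IP's implementation: the class and method names are hypothetical, and the cache timeout is deliberately left out.

```python
import secrets


class ClientAuthServer:
    """Toy model of the 'once' and 'always' frequency settings."""

    def __init__(self, frequency):
        if frequency not in ("once", "always"):
            raise ValueError("frequency must be 'once' or 'always'")
        self.frequency = frequency
        self.session_cache = set()  # session IDs the server still remembers

    def handshake(self, client_session_id=None):
        """Return (handshake_type, certificate_requested, session_id_sent)."""
        if self.frequency == "once" and client_session_id in self.session_cache:
            # Resumed handshake: the same session ID is echoed back and the
            # client is not asked for its certificate again.
            return ("resumed", False, client_session_id)
        # Full handshake: a Certificate Request is sent to authenticate the client.
        if self.frequency == "once":
            session_id = secrets.token_hex(32)
            self.session_cache.add(session_id)
        else:
            # 'always': no session ID is handed out, so nothing can be resumed.
            session_id = None
        return ("full", True, session_id)
```

With `once`, the first call returns a full handshake plus a new session ID, and replaying that ID yields a resumed handshake with no certificate request. With `always`, every call is a full handshake regardless of what the client sends.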
pcap: ssl-sample-clientcert-auth-always-enabled.pcap
Wireshark filter used: !ip.addr == 172.16.199.254 and ((frame.number > 1 and frame.number < 7) or (frame.number > 74 and frame.number < 80))

Appendix: Understanding how Advertised Certificate Authority field affects Certificate Request header

For this test, I've got the following:

- myCAbundle.crt: concatenation of root_ca.crt and ltm2.crt (signed by root_ca.crt)
- client_cert.crt: added to Firefox and signed by ltm2.crt

I've also added myCAbundle.crt to Trusted Certificate Authorities so BIG-IP is able to verify that client_cert.crt is valid. For each test, I will change Advertised Certificate Authorities so we can see what happens. We'll go through 3 tests here:

- Setting Advertised Certificate Authority to None
- Setting Advertised Certificate Authority to a certificate that didn't sign client cert
- Setting Advertised Certificate Authority to a bundle that signed client cert

Setting Advertised Certificate Authority to None

Note that even though no CA was advertised in the Certificate Request message, BIG-IP still advertises Certificate types and Signature Hash Algorithms so that the client knows in advance what kind of certificate (RSA, DSS or ECDSA) and hash algorithms BIG-IP supports. If the client certificate had not been signed using any of the certificate types and hashing algorithms listed, the handshake would have failed. However, in this case validation is successful, as we can see in frame #8 that the client certificate is RSA type and hashed with SHA1:

pcap: ssl-sample-advcert-none.pcap
Wireshark filter used: !ip.addr == 172.16.199.254 and frame.number > 1 and frame.number < 16

It's worth noting that Distinguished Names is NOT populated and has a length of zero because we didn't attach a bundle to Advertised Certificate Authorities. In this case, it worked fine because my client browser had only one certificate attached, so it shouldn't cause a problem anyway.
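The client-side behaviour that the three tests in this appendix exercise can be sketched as a small selection function. This is a simplified model and not how Firefox is implemented: the function name and the (subject, issuer) tuples are hypothetical, and falling back to the first installed certificate when nothing is advertised is an assumption (a real browser may prompt the user instead).

```python
def select_client_certificate(advertised_dns, client_certs):
    """Pick the certificate a client would send after a Certificate Request.

    advertised_dns: issuer names from the Distinguished Names field (may be empty).
    client_certs:   list of (subject, issuer) tuples installed on the client.
    Returns the chosen (subject, issuer) tuple, or None for a blank certificate.
    """
    if not client_certs:
        return None
    if not advertised_dns:
        # Nothing advertised: the client has no hint, so offer what it has.
        return client_certs[0]
    for cert in client_certs:
        if cert[1] in advertised_dns:
            return cert
    # No installed certificate was issued by an advertised CA.
    return None
```

Using the names from this lab, `select_client_certificate(["default.crt"], [("client_cert.crt", "ltm2.crt")])` returns `None`, i.e. a blank certificate, whereas advertising `["root_ca.crt", "ltm2.crt"]` returns the client certificate.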
Setting Advertised Certificate Authority to a certificate that didn't sign client cert

pcap: ssl-sample-advcert-default-firefox.pcap
Wireshark filter used: None

I've set Advertised Certificate Authority to default.crt, as this is NOT the CA that signed the client's certificate:

The difference compared to None is that Distinguished Names is now populated with the certificate I added (default.crt):

However, even though I added the correct certificate to my Firefox browser, it sent a blank certificate instead. Why? That's because BIG-IP signalled in Distinguished Names that default.crt is the CA that signed the certificate BIG-IP is looking for and, as Firefox doesn't have any certificate signed by default.crt, it just sent a blank certificate back to BIG-IP. Also, because BIG-IP is now performing proper validation, i.e. comparing whatever client certificate is sent to it with the CA list added to Trusted Certificate Authorities, it knows a blank certificate is not valid and terminates the TLS handshake with a Fatal Alert.

Setting Advertised Certificate Authority to a bundle that signed client cert

pcap: ssl-sample-advcert-ltm2chainedwithrootca.pcap
Wireshark filter used: !ip.addr == 172.16.199.254 and frame.number > 1

Now I've set Advertised Certificate Authority to the correct bundle that signed my client certificate:

And indeed the handshake succeeds because:

- BIG-IP advertises myCAbundle.crt in the Certificate Request >> Distinguished Names header, as per the Advertised Certificate Authority configuration
- By reading the Distinguished Names field, the client sends the correct client certificate back to BIG-IP
- BIG-IP validates the client certificate using myCAbundle.crt configured on Trusted Certificate Authorities

Hope this article provides some clarification about these mysterious TLS headers.

How Proxy SSL works on BIG-IP

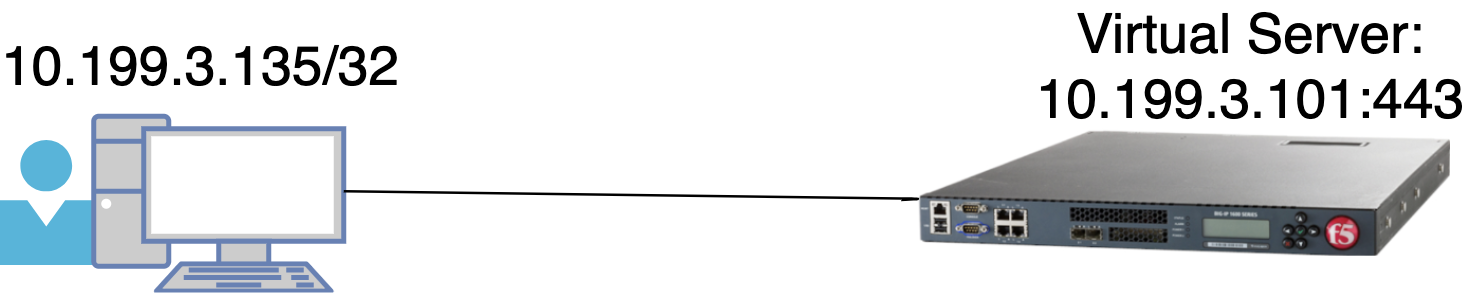

1. Lab Scenario

Lab test results:

- The client completes the 3-way handshake with BIG-IP, and BIG-IP immediately opens and completes a 3-way handshake with the back-end server
- Upon receiving the Client Hello on the client side, BIG-IP immediately sends the Client Hello on the server side as it is
- BIG-IP copies the same cipher suite list seen in the client-side Client Hello to the server-side Client Hello
- BIG-IP ignores what is configured on both Client SSL and Server SSL profiles
- As soon as BIG-IP receives the Server Hello, it confirms two things:
  - Is RSA the chosen key exchange mechanism in the Cipher Suite of the Server Hello message sent from the back-end server?
  - Does the certificate sent by the back-end server match the one configured on the Server SSL profile on BIG-IP?
- ONLY if both conditions above match does BIG-IP proceed with the handshake. Otherwise, BIG-IP sends a Handshake Failure (Fatal Alert) to both server and client with a reset cause of illegal_parameter
- BIG-IP copies the same Server SSL/back-end server certificate to the Certificate message sent to the client on the client side
- BIG-IP completely ignores the certificate you configured on Client SSL. It always uses the same server-side certificate.
- Assuming the TLS handshake completes successfully, BIG-IP is able to decrypt all client-side as well as server-side data, which is the whole purpose of Proxy SSL.

2. How Proxy SSL works

When Proxy SSL is enabled, BIG-IP does its best to match the client-side connection to the server-side connection in terms of negotiation and traffic, to make it as transparent as possible to both client and back-end server while at the same time allowing BIG-IP to decrypt traffic.

About data transparency

BIG-IP achieves transparency by copying whatever client and server send back and forth.

About data decryption

BIG-IP has an extra configuration requirement for Proxy SSL (according to K13385): you should add the same certificate/key present on the back-end server to the Certificate/Key fields on the Server SSL profile of BIG-IP. This way BIG-IP can decrypt both client and server sides of the connection.
In practical terms, to achieve this, BIG-IP completely changes the original purpose of the Server SSL Certificate and Key fields. Here's my config:

The Certificate/Key fields above are no longer used for the purpose described in K14806, i.e. to independently authenticate BIG-IP to the back-end server through Client Authentication. Instead, when Proxy SSL is enabled, they are used to validate whether the certificate sent by the back-end server is the same as the one in this field. If so, BIG-IP also copies this certificate to the Certificate message sent to the client on the client side. Notice that when Proxy SSL is NOT bypassed, the certificate configured on the Client SSL profile is never used.

As soon as I sent the first request to the wildcard forwarding VIP, BIG-IP established the TCP connection on the client side first and then immediately on the server side:

The next step is to forward exactly the same Client Hello we received from the client on the client side to the server on the server side:

Now the server sends Server Hello:

The first thing BIG-IP checks is the key exchange mechanism, as Proxy SSL has to use RSA (frame 12):

Now BIG-IP checks if the certificate inside the Certificate message is the same as the one configured on Server SSL. In this case I used the certificate's unique serial number to confirm this. On BIG-IP:

Now on the server's message (frame 12):

Now BIG-IP sends this same certificate in the Server Hello message on the client side, and we can confirm from the serial number that it is the same:

From this moment the handshake should complete successfully, and BIG-IP maintains 2 separate connections using the same certificate/key pair on the client and server sides, with the ability to decrypt both.

3. Troubleshooting Proxy SSL when BIG-IP is the culprit

Typically, if BIG-IP is the culprit, it is either because the back-end server selected a non-RSA key exchange cipher or because of a cert/key that is not supported.
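The two checks BIG-IP applies to the Server Hello, which are also the two usual failure causes, can be sketched as follows. This is a simplified illustration under stated assumptions: the function names are hypothetical, cipher suites are given as IANA-style names such as TLS_RSA_WITH_AES_128_CBC_SHA, and the certificate comparison uses the serial number only because that is how I compared them by hand in this lab.

```python
def key_exchange(cipher_suite):
    """Return the key exchange part of a suite name, e.g. 'RSA' or 'ECDHE'."""
    name = cipher_suite.upper()
    if name.startswith("TLS_"):
        name = name[len("TLS_"):]
    prefix, separator, _ = name.partition("_WITH_")
    if not separator:
        raise ValueError(f"unrecognised cipher suite name: {cipher_suite}")
    # Key exchange and authentication both come before WITH. 'RSA' alone means
    # RSA for both; 'ECDHE_RSA' means ECDHE key exchange with RSA authentication.
    return prefix.split("_")[0]


def proxy_ssl_accepts(server_hello_suite, server_cert_serial, configured_cert_serial):
    """True only if RSA key exchange was chosen and the certificates match."""
    uses_rsa = key_exchange(server_hello_suite) == "RSA"
    same_certificate = server_cert_serial == configured_cert_serial
    return uses_rsa and same_certificate
```

For example, `proxy_ssl_accepts("TLS_ECDHE_RSA_WITH_AES_128_GCM_SHA256", serial, serial)` is false even when the certificates match, which corresponds to the reset seen when the back-end server picks a non-RSA key exchange.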
In another test I used DHE on purpose and BIG-IP reset the connection immediately after the Server Hello message was received from the back-end server, which is a typical sign of validation error:

I confirmed the back-end server had selected an ECDHE key exchange cipher, which is not supported by Proxy SSL (frame 12):

In case you don't know yet, here's how you work out the key exchange cipher:

Disabling non-RSA ciphers on the back-end server fixed the above error, as Proxy SSL only supports RSA key exchange.

4. Setting Up Proxy SSL on BIG-IP

I used a very minimal configuration for this lab; the only thing I did was create a wildcard forwarding virtual server using a Standard VIP:

I enabled proxy-ssl on both Client and Server SSL profiles and added the back-end server certificate (ltm3.crt) and key (ltm3.key) to the Server SSL profile:

I also disabled (EC)DHE and explicitly configured RSA as the key exchange mechanism on my back-end server. I also confirmed the back-end server was using ltm3.crt/ltm3.key, as it must match the one configured on the Server SSL profile:

And it all worked fine:

Automate Let's Encrypt Certificates on BIG-IP

To quote the evil emperor Zurg: "We meet again, for the last time!" It's hard to believe it's been six years since my first rodeo with Let's Encrypt and BIG-IP, but (uncompromised) timestamps don't lie. And maybe this won't be my last look at Let's Encrypt, but it will likely be the last time I do so as a standalone effort, which I'll come back to at the end of this article.

The first project was a compilation of shell scripts and python scripts and config files and well, this is no different. But it's all updated to meet the acme protocol version requirements for Let's Encrypt. Here's a quick table to connect all the dots:

| Description | What's Out | What's In |
| --- | --- | --- |
| acme client | letsencrypt.sh | dehydrated |
| python library | f5-common-python | bigrest |
| BIG-IP functionality | creating the SSL profile | utilizing an iRule for the HTTP challenge |

The f5-common-python library has not been maintained or enhanced for at least a year now, and I have an affinity for the good work Leo did with bigrest and I enjoy using it. I opted not to carry the SSL profile configuration forward because that functionality is more app-specific than the certificates themselves. And finally, whereas my initial project used the DNS challenge with the name.com API, in this proof of concept I chose to use an iRule on the BIG-IP to serve the challenge for Let's Encrypt to perform validation against.

Whereas my solution is new, the way Let's Encrypt works has not changed, so I've carried forward the process from my previous article that I've now archived. I'll defer to their how it works page for details, but basically the steps are:

- Define a list of domains you want to secure
- Your client reaches out to the Let’s Encrypt servers to initiate a challenge for those domains.
- The servers will issue an http or dns challenge based on your request
- You need to place a file on your web server or a txt record in the dns zone file with that challenge information
- The servers will validate your challenge information and notify you
- You will clean up your challenge files or txt records
- The servers will issue the certificate and certificate chain to you
- You now have the key, cert, and chain, and can deploy to your web servers or in our case, to the BIG-IP

Before kicking off a validation and generation event, the client registers your account based on your settings in the config file. The files in this project are as follows:

    /etc/dehydrated/config           # Dehydrated configuration file
    /etc/dehydrated/domains.txt      # Domains to sign and generate certs for
    /etc/dehydrated/dehydrated       # acme client
    /etc/dehydrated/challenge.irule  # iRule configured and deployed to BIG-IP by the hook script
    /etc/dehydrated/hook_script.py   # Python script called by dehydrated for special steps in the cert generation process

    # Environment Variables
    export F5_HOST=x.x.x.x
    export F5_USER=admin
    export F5_PASS=admin

You add your domains to the domains.txt file (more work likely if signing a lot of domains, I tested the one I have access to). The dehydrated client, of course, is required, and then the hook script that dehydrated interacts with to deploy challenges and certificates. I aptly named that hook_script.py. For my hook, I'm deploying a challenge iRule to be applied only during the challenge; it is modified each time specific to the challenge supplied from the Let's Encrypt service and is cleaned up after the challenge is tested. And finally, there are a few environment variables I set so the information is not in text files. You could also move these into a credential vault.

So to recap, you first register your client, then you can kick off a challenge to generate and deploy certificates.
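To make the "modified each time" part of the challenge iRule concrete, here is a minimal Python sketch of rendering such an iRule for a single HTTP challenge. The iRule body is an assumption for illustration and is not the challenge.irule from the project; the function name is hypothetical. The only fixed points are that the HTTP challenge is fetched from /.well-known/acme-challenge/<token> and that the response body is the token value handed to the hook.

```python
import re

# Hypothetical template: answer only the exact challenge path with the token value.
IRULE_TEMPLATE = """when HTTP_REQUEST {{
    if {{ [HTTP::path] eq "/.well-known/acme-challenge/{token}" }} {{
        HTTP::respond 200 content "{value}"
    }}
}}
"""

# Challenge tokens are URL-safe base64 plus '.', so anything else is rejected
# rather than being embedded in the iRule.
_SAFE = re.compile(r"[A-Za-z0-9_.-]+")


def render_challenge_irule(token_filename, token_value):
    """Return iRule text that serves one challenge response."""
    for part in (token_filename, token_value):
        if not _SAFE.fullmatch(part):
            raise ValueError("unexpected characters in challenge token")
    return IRULE_TEMPLATE.format(token=token_filename, value=token_value)
```

The hook would then create or update the iRule on the BIG-IP with this text, attach it to the virtual server for the duration of the challenge, and remove it afterwards.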
On the client side, it looks like this:

    ./dehydrated --register --accept-terms
    ./dehydrated -c

Now, for testing, make sure you use the Let's Encrypt staging service instead of production. And since I want to force action every request while testing, I run the second command a little differently:

    ./dehydrated -c --force --force-validation

Depicted graphically, here are the moving parts for the http challenge issued by Let's Encrypt at the request of the dehydrated client, deployed to the F5 BIG-IP, and validated by the Let's Encrypt servers. The Let's Encrypt servers then generate and return certs to the dehydrated client, which then, via the hook script, deploys the certs and keys to the F5 BIG-IP to complete the process.

And here's the output of the dehydrated client and hook script in action from the CLI:

    # ./dehydrated -c --force --force-validation
    # INFO: Using main config file /etc/dehydrated/config
    Processing example.com
     + Checking expire date of existing cert...
     + Valid till Jun 20 02:03:26 2022 GMT (Longer than 30 days). Ignoring because renew was forced!
     + Signing domains...
     + Generating private key...
     + Generating signing request...
     + Requesting new certificate order from CA...
     + Received 1 authorizations URLs from the CA
     + Handling authorization for example.com
     + A valid authorization has been found but will be ignored
     + 1 pending challenge(s)
     + Deploying challenge tokens...
     + (hook) Deploying Challenge
     + (hook) Challenge rule added to virtual.
     + Responding to challenge for example.com authorization...
     + Challenge is valid!
     + Cleaning challenge tokens...
     + (hook) Cleaning Challenge
     + (hook) Challenge rule removed from virtual.
     + Requesting certificate...
     + Checking certificate...
     + Done!
     + Creating fullchain.pem...
     + (hook) Deploying Certs
     + (hook) Existing Cert/Key updated in transaction.
     + Done!

This results in a deployed certificate/key pair on the F5 BIG-IP, and is modified in a transaction for future updates.
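The "(hook)" lines in that output come from the hook script, which dehydrated calls with an operation name followed by its arguments. The skeleton below is a hedged sketch of that dispatch and is not the project's hook_script.py: the handler bodies are placeholders, the function names are hypothetical, and the argument order reflects my understanding of dehydrated's hook interface, so check it against the dehydrated documentation before relying on it.

```python
import os


def load_bigip_settings(environ=None):
    """Read the BIG-IP connection settings from the environment variables."""
    environ = os.environ if environ is None else environ
    return {name: environ[name] for name in ("F5_HOST", "F5_USER", "F5_PASS")}


def deploy_challenge(domain, token_filename, token_value, *extra):
    # Placeholder: create the challenge iRule and attach it to the virtual server.
    return "Deploying Challenge"


def clean_challenge(domain, token_filename, token_value, *extra):
    # Placeholder: detach and delete the challenge iRule.
    return "Cleaning Challenge"


def deploy_cert(domain, keyfile, certfile, fullchainfile, chainfile, *extra):
    # Placeholder: upload the key and certificate and update them in a transaction.
    return "Deploying Certs"


HANDLERS = {
    "deploy_challenge": deploy_challenge,
    "clean_challenge": clean_challenge,
    "deploy_cert": deploy_cert,
}


def main(argv):
    """Dispatch on the operation name passed as the first argument."""
    if len(argv) < 2 or argv[1] not in HANDLERS:
        return 0  # operations this sketch does not handle are ignored
    message = HANDLERS[argv[1]](*argv[2:])
    print(f" + (hook) {message}")
    return 0
```

A real script would end with `sys.exit(main(sys.argv))` and replace the placeholders with REST calls to the BIG-IP, using the settings returned by `load_bigip_settings()`.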
This proof of concept is on github in the f5devcentral org if you'd like to take a look. Before closing, however, I'd like to mention a couple things:

- This is an update to an existing solution from years ago. It works, but probably isn't the best way to automate today if you're just getting started or have already started pursuing a more modern approach to automation. A better path would be something like Ansible.
- On that note, there are several solutions you can take a look at, posted below in resources.

Resources

- https://github.com/EquateTechnologies/dehydrated-bigip-ansible
- https://github.com/f5devcentral/ansible-bigip-letsencrypt-http01
- https://github.com/s-archer/acme-ansible-f5
- https://github.com/s-archer/terraform-modular/tree/master/lets_encrypt_module (Terraform instead of Ansible)
- https://community.f5.com/t5/technical-forum/let-s-encrypt-with-cloudflare-dns-and-f5-rest-api/m-p/292943 (Similar solution to mine, only slightly more robust with OCSP stapling, the DNS instead of HTTP challenge, and with bash instead of python)

SSL Profiles Part 1: Handshakes

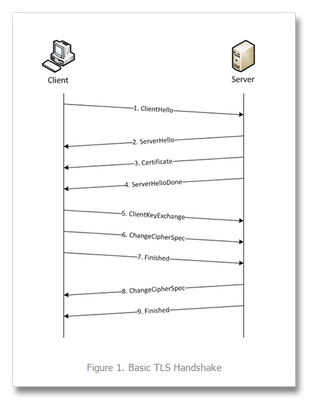

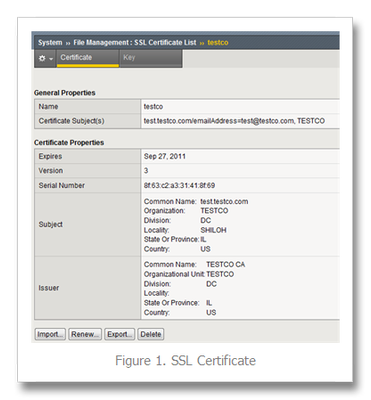

This is the first in a series of tech tips on the F5 BIG-IP LTM SSL profiles.

- SSL Overview and Handshake
- SSL Certificates
- Certificate Chain Implementation
- Cipher Suites
- SSL Options
- SSL Renegotiation
- Server Name Indication
- Client Authentication
- Server Authentication
- All the "Little" Options

SSL, or the Secure Sockets Layer, was developed by Netscape back in the '90s to secure the transport of web content. While SSL was adopted globally, the standards body defined Transport Layer Security, or TLS 1.0, a few years later. Commonly interchanged in discussions, the final version of SSL (v3) and the initial version of TLS (v1) do not interoperate, though TLS includes the capability to downgrade to SSLv3 if necessary. Before we dive into the options within the SSL profiles, we'll start in this installment with a look at the SSL certificate exchanges and at what makes a client versus a server ssl profile.

Server-Only Authentication

This is the basic TLS handshake, where the only certificate required is on the server side of the connection. The exchange is shown in Figure 1 below. In the first step, the client sends a ClientHello message containing the cipher suites (I did a tech tip a while back on manipulating profiles that has a good breakdown of the fields in a ClientHello message), a random number, the highest TLS version it supports, and compression methods. The ServerHello message is then sent by the server with the version, a random number, the cipher suite, and a compression method from the client's list. The server then sends its Certificate and follows that with the ServerHelloDone message. The client responds with key material (depending on the cipher selected) and then begins computing the master secret, as does the server upon receipt of the client's key material.
The client then sends the ChangeCipherSpec message informing the server that future messages will be authenticated and encrypted (encryption is optional and dependent on parameters in server certificate, but most implementations include encryption). The client finally sends its Finished message, which the server will decrypt and verify. The server then sends its ChangeCipherSpec and Finished messages, with the client performing the same decryption and verification. The application messages then start flowing and when complete (or there are SSL record errors) the session will be torn down. Question—Is it possible to host multiple domains on a single IP and still protect with SSL/TLS without certificate errors? This question is asked quite often in the forums. The answer is in the details we’ve already discussed, but perhaps this isn’t immediately obvious. Because the application messages—such as an HTTP GET—are not received until AFTER this handshake, there is no ability on the server side to switch profiles, so the default domain will work just fine, but the others will receive certificate errors. There is hope, however. In the TLSv1 standard, there is an option called Server Name Indication, or SNI, which will allow you to extract the server name and switch profiles accordingly. This could work today—if you control the client base. The problem is most browsers don’t default to TLS, but rather SSLv3. Also, TLS SNI is not natively supported in the profiles, so you’ll need to get your hands dirty with some serious iRule-fu (more on this later in the series). Anyway, I digress. Client-Authenticated Handshake The next handshake is the client-authenticated handshake, shown below in Figure 2. 
This handshake adds a few steps (in bold above), inserting the CertificateRequest by the server in between the Certificate and ServerHelloDone messages, and the client starting off with sending its Certificate and after sending its key material in the ClientKeyExchange message, sends the CertificateVerify message which contains a signature of the previous handshake messages using the client certificate’s private key. The server, upon receipt, verifies using the client’s public key and begins the heavy compute for the master key before both finish out the handshake in like fashion to the basic handshake. Resumed Handshake Figure 3 shows the abbreviated handshake that allows the performance gains of not requiring the recomputing of keys. The steps passed over from the full handshake are greyed out. It’s after step 5 that ordinarily both server and client would be working hard to compute the master key, and as this step is eliminated, bulk encryption of the application messages is far less costly than the full handshake. The resumed session works by the client submitting the existing session ID from previous connections in the ClientHello and the server responding with same session ID (if the ID is different, a full handshake is initiated) in the ServerHello and off they go. Profile Context As with many things, context is everything. There are two SSL profiles on the LTM, clientssl and serverssl. The clientssl profile is for acting as the SSL server, and the serverssl profile is for acting as the SSL client. Not sure why it worked out that way, but there you go. So if you will be offloading SSL for your applications, you’ll need a clientssl profile. If you will be offloading SSL to make decisions/optimizations/inspects, but still require secured transport to your servers, you’ll need a clientssl and a serverssl profile. Figure 4 details the context. 
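To recap the three handshakes described above, here is a minimal Python sketch that lists the messages in order. The function name `handshake_messages` and its flags are made up for illustration. The sketch follows the article's figures, so it leaves out ServerKeyExchange, which (EC)DHE cipher suites add. The order of the final messages in the resumed case (server first) comes from the TLS specification, not from the text above.

```python
def handshake_messages(client_auth=False, resumed=False):
    """Return ordered (sender, message) pairs for a TLS 1.0-1.2 handshake."""
    if resumed:
        # Abbreviated handshake: no certificates, no key exchange.
        return [
            ("client", "ClientHello"),        # carries the cached session ID
            ("server", "ServerHello"),        # echoes the same session ID
            ("server", "ChangeCipherSpec"),
            ("server", "Finished"),
            ("client", "ChangeCipherSpec"),
            ("client", "Finished"),
        ]

    flow = [
        ("client", "ClientHello"),
        ("server", "ServerHello"),
        ("server", "Certificate"),
    ]
    if client_auth:
        # Inserted between Certificate and ServerHelloDone.
        flow.append(("server", "CertificateRequest"))
    flow.append(("server", "ServerHelloDone"))
    if client_auth:
        flow.append(("client", "Certificate"))
    flow.append(("client", "ClientKeyExchange"))
    if client_auth:
        # Signature over the previous handshake messages.
        flow.append(("client", "CertificateVerify"))
    flow += [
        ("client", "ChangeCipherSpec"),
        ("client", "Finished"),
        ("server", "ChangeCipherSpec"),
        ("server", "Finished"),
    ]
    return flow


for sender, message in handshake_messages(client_auth=True):
    print(f"{sender:>6} -> {message}")
```

Comparing `handshake_messages()` with `handshake_messages(resumed=True)` shows which steps the abbreviated handshake skips: the certificate and key exchange messages disappear.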
Conclusion

Hopefully this background on the handshake process and the profile context is beneficial to the profile options we’ll cover in this series. For a deeper dive into the handshakes (and more on TLS), you can check out RFC 2246 and this presentation on SSL and TLS cryptography. Next up, we’ll take a look at Certificates.

TLS Stateful vs Stateless Session Resumption

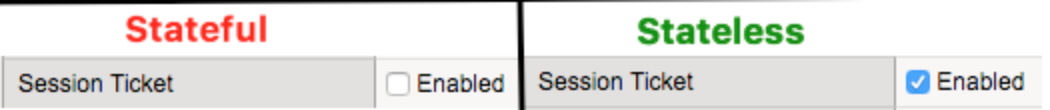

1. Preliminary Information

TLS Session Resumption allows caching of TLS session information. There are 2 kinds: stateful and stateless. In stateful session resumption, BIG-IP stores TLS session information locally. In stateless session resumption, that job is delegated to the client. BIG-IP supports both stateful and stateless TLS session resumption. Enabling stateful or stateless session resumption is just a matter of ticking/unticking a tickbox on LTM's Client SSL profile:

In this article, I'm going to walk through how session resumption works by performing a lab test. Here's my topology:

Do not confuse Session Reuse/Resumption with Renegotiation. Renegotiation uses the same TCP connection to renegotiate security parameters and does not involve Session IDs or Session Tickets. For more information please refer to SSL Legacy Renegotiation Secure Renegotiation.

2. How Stateful Session Resumption works

Capture used: ssl-sample-session-ticket-disabled.pcap

2.1 New session

Stateful means BIG-IP will keep storing session information from as many clients as its cache allows, and the TLS handshake will proceed as follows:

We can see above that the Client sends an empty Session ID field and BIG-IP replies with a new Session ID (filter used: tcp.stream eq 0). After that, the full handshake proceeds normally: Certificate and Client Key Exchange are sent, and there is also the additional CPU cost of computing the keys:

2.2 Reusing Previous Session

Now both Client and Server have Session ID 56bcf9f6ea40ac1bbf05ff7fd209d423da9f96404103226c7f927ad7a2992433 stored in their TLS session cache. The good thing about it is that on the next TLS connection request, the client won't need to go through the full TLS handshake again. Here's what we see:

The Client just sends the Session ID (56bcf9f6ea40ac1bbf05ff7fd209d423da9f96404103226c7f927ad7a2992433) that it previously learnt from BIG-IP (via the Server Hello of the previous connection) in its Client Hello message.
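The Session ID check on the server side can be pictured as a small cache lookup. The following Python sketch is only a toy model: the class name `SessionCache`, the eviction of the oldest entry when the cache is full, and the random placeholder secrets are all assumptions made for illustration, not a description of how BIG-IP implements its cache.

```python
import os
from collections import OrderedDict


class SessionCache:
    """Toy model of a server-side (stateful) TLS session cache."""

    def __init__(self, max_entries):
        self.max_entries = max_entries   # 0 means nothing is ever cached
        self._sessions = OrderedDict()   # session ID -> master secret

    def handle_client_hello(self, session_id):
        """Return (session ID for the Server Hello, handshake type)."""
        if session_id and session_id in self._sessions:
            # Known ID: echo it back and reuse the stored keys.
            self._sessions.move_to_end(session_id)
            return session_id, "abbreviated"

        # Empty or unknown ID: issue a fresh 32-byte ID and do a full handshake.
        new_id = os.urandom(32)
        if self.max_entries > 0:
            self._sessions[new_id] = os.urandom(48)  # placeholder master secret
            if len(self._sessions) > self.max_entries:
                self._sessions.popitem(last=False)   # assumed policy: drop oldest
        return new_id, "full"


cache = SessionCache(max_entries=1000)
first_id, first_kind = cache.handle_client_hello(b"")        # new session
same_id, second_kind = cache.handle_client_hello(first_id)   # resumption
print(first_kind, second_kind, same_id == first_id)
```

The point of the model is the per-client state: every cached session costs memory on the server, which is the burden the stateless approach later in the article avoids.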
BIG-IP then confirms this session ID is in its SSL Session cache and they both go through what is known as an abbreviated TLS handshake. No certificate or key information is exchanged during the abbreviated TLS handshake, and previously negotiated keys are re-used.

3. How Stateless Session Resumption works

Capture used: ssl-sample-session-ticket-enabled-2.pcap

3.1 New Session

Because of the burden on BIG-IP of storing one session per client, RFC 5077 suggested a new way of doing session resumption that offloads the burden of keeping all TLS session information to the client, so that BIG-IP does not need to store any per-session state. Let's see the magic! The Client first signals it supports stateless session resumption by adding the SessionTicket TLS extension to its Client Hello message (in green):

BIG-IP also signals back to the client (in red) that it supports SessionTicket TLS by adding an empty SessionTicket TLS extension. Notice that Session ID is NOT used here! The TLS handshake proceeds normally, just like in stateful session resumption. However, just before the handshake is completed (with the Finished message), BIG-IP sends a new TLS message called New Session Ticket, which consists of encrypted session information (e.g. master secret, cipher used, etc.) that BIG-IP is able to decrypt later using a unique key it generates only for this purpose:

From this point on, the client (10.199.3.135) keeps the session ticket in its TLS cache until the next time it needs to connect to the same server (assuming the session ticket has not expired).

3.2 Reusing Previous Session

Now, when the same client wants to re-use the previous session, it forwards the same session ticket in the SessionTicket TLS extension of its Client Hello message, as seen below:

As we've noticed, the Client also creates a new Session ID, which is used for the following purpose:

- Server replies back with the same session ID: BIG-IP accepted the Session Ticket and is going to reuse the session.
- Server replies with an empty/different Session ID: BIG-IP decided to go through a full handshake, either because the Session Ticket expired or because it is falling back to stateful session resumption.

PS: Such a session ID is NOT stored on BIG-IP, otherwise it would defeat the purpose of stateless session reuse. It is a one-off usage just to confirm to the client that BIG-IP accepted the session ticket it sent and that we're not going to generate new session keys.

In our example, BIG-IP successfully accepted and reused the TLS session. We can confirm that an abbreviated TLS handshake took place and that in the Server Hello message BIG-IP replied with the same session ID the client sent (to BIG-IP):

This is session resumption in action, and BIG-IP doesn't even have to store session information locally, making it a more scalable option when compared to stateful session resumption.

SSL Orchestrator Advanced Use Cases: Reducing Complexity with Internal Layered Architecture

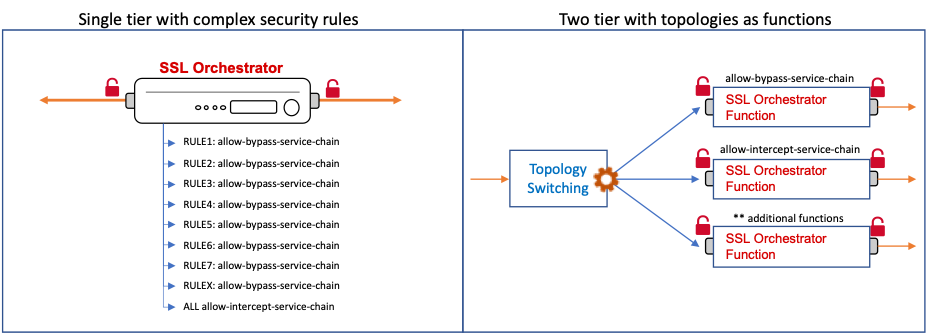

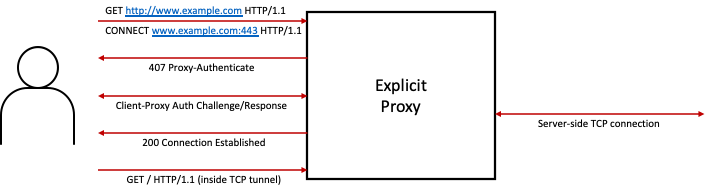

Introduction

Sir Isaac Newton said, "Truth is ever to be found in the simplicity, and not in the multiplicity and confusion of things". The world we live in is...complex. No getting around that. But at the very least, we should strive for simplicity where we can achieve it. As IT folk, we often find ourselves mired in the complexity of things until we lose sight of the big picture, the goal. How many times have you created an additional route table entry, or firewall exception, or virtual server, because the alternative meant having a deeper understanding of the existing (complex) architecture? Sure, sometimes it's unavoidable, but this article describes at least one way that you can achieve simplicity in your architecture.

SSL Orchestrator sits as an inline point of presence in the network to decrypt, re-encrypt, and dynamically orchestrate that traffic to the security stack. You need rules to govern how to handle specific types of traffic, so you create security policy rules in the SSL Orchestrator configuration to describe and take action on these traffic patterns. It's definitely easy to create a multitude of traffic rules to match discrete conditions, but if you step back and look at the big picture, you may notice that the different traffic patterns basically all perform the same core actions. They allow or deny traffic, intercept or bypass TLS (decrypt/not-decrypt), and send to one or a few service chains. If you were to write down all of the combinations of these actions, you'd very likely discover a small subset of discrete "functions".

As usual, F5 BIG-IP and SSL Orchestrator provide some innovative and unique ways to optimize this. And so in this article we will explore SSL Orchestrator topologies "as functions" to reduce complexity. Specifically, you can reduce the complexity of security policy rules, and in doing so, quite likely increase the performance of your SSL Orchestrator architecture.

SSL Orchestrator Use Case: Reducing Complexity with Internal Layered Architectures

The idea is simple. Instead of a single topology with a multitude of complex traffic pattern matching rules, create small atomic topologies as static functions and steer traffic to the topologies by virtue of "layered" traffic pattern matching. Granted, if your SSL Orchestrator configuration is already pretty simple, then please keep doing what you're doing. You've got this, Tiger. But if your environment is getting complex, and you're not quite convinced yet that topologies as functions is a good idea, here are a few additional benefits you'll get from this topology layering:

- Dynamic egress selection: topologies as functions can define different egress paths.
- Dynamic CA selection: topologies as functions can use different local issuing CAs for different traffic flows.
- Dynamic traffic bypass: certain types of traffic can be challenging to handle internally. For example, mutual TLS traffic can be bypassed globally with the "Bypass on client cert failure" option in the SSL configuration, but bypassing mutual TLS sites by hostname is more complex. A layered architecture can steer traffic (by SNI) through a bypass topology, with a service chain.
- More flexible pattern recognition: for all of its flexibility, SSL Orchestrator security policy rules cannot catch every possible use case. External traffic pattern recognition, via iRules or CPM (LTM policies), offers near infinite pattern matching options. You could, for example, steer traffic based on incoming tenant VLAN or route domain for multi-tenancy configurations.
- More flexible automation strategies: as iRules, data groups, and CPM policies are fully automatable across many automation and orchestration platforms (ex. AS3, Ansible, Terraform, etc.), it becomes exceedingly easy to automate SSL Orchestrator traffic processing, and this removes the need to manage individual topology security policy rules.
Hopefully these benefits give you a pretty clear indication of the value in this architecture strategy. So without further ado, let's get started.

Configuration

Before we begin, I'd like to make note of the following caveats:

- While every effort has been made to simplify the layered architecture, there is still a small element of complexity. If you are at all averse to creating virtual servers or modifying iRules, then maybe this isn't for you. But as you are reading this in a forum dedicated to programmability, I'm guessing you, the reader, are ready for a challenge.
- This is a "field contributed" solution, so it is not officially supported by F5.
- This topology layering architecture is applicable to all modern versions of SSL Orchestrator, from 5.0 to 8.0.
- While topology layering can be used for inbound topologies, it is most appropriate for outbound. The configuration below also only describes the layer 3 implementation, but layer 2 layering is also possible.

With this said, there are just a few core concepts to understand:

- Basic layered architecture configuration - how the pieces fit together
- The iRules - how traffic moves through the architecture
- Or the CPM policies - an alternative to iRules

Note again that this is primarily useful in outbound topologies. Inbound topologies are typically more atomic on their own already. I will cover both transparent and explicit forward proxy configurations below.

Basic layered architecture configuration

A layered architecture takes advantage of a powerful feature of the BIG-IP called "VIP targeting". The idea is that one virtual server calls another with negligible latency between the two VIPs. The "external" virtual server is client-facing. The SSL Orchestrator topology virtual servers are thus "internal". Traffic enters the external VIP and traffic rules pass control to any of a number of internal "topology function" VIPs. You certainly don't have to use the iRule implementation presented here.
You just need a client-facing virtual server with an iRule that VIP-targets to one or more SSL Orchestrator topologies. Each outbound topology is represented by a virtual server that includes the application server name. You can see these if you navigate to Local Traffic -> Virtual Servers in the BIG-IP UI. So the most basic topology layering architecture might just look like this:

    when CLIENT_ACCEPTED {
        virtual "/Common/sslo_my_topology.app/sslo_my_topology-in-t-4"
    }

This iRule doesn't do anything interesting, except get traffic flowing across your layered architecture. To be truly useful you'll want to include conditions and evaluations to steer different types of traffic to different topologies (as functions). As the majority of security policy rules are meant to define TLS traffic patterns, the provided iRules match on TLS traffic and pass any non-TLS traffic to a default (intercept/inspection) topology. These iRules are intended to simplify topology switching by moving all of the complexity of traffic pattern matching to a library iRule. You should then only need to modify the "switching" iRule to use the functions in the library, which all return Boolean true or false results.

Here are the simple steps to create your layered architecture:

Step 1: Build a set of "dummy" VLANs. A topology must be bound to a VLAN. But since the topologies in this architecture won't be listening on client-facing VLANs, you will need to create a separate VLAN for each topology you intend to create. A dummy VLAN is a VLAN with no interface assigned. In the BIG-IP UI, under Network -> VLANs, click Create. Give your VLAN a name and click Finished. It will ask you to confirm since you're not attaching an interface. Repeat this step by creating unique VLAN names for each topology you are planning to use.

Step 2: Build a set of static topologies as functions. You'll want to create a normal "intercept" topology and a separate "bypass" topology, though you can create as many as you need to encompass the unique topology functions.

Your intercept topology is configured as such:

- L3 outbound topology configuration, normal topology settings, SSL configuration, services, service chains
- No security policy rules - just the ALL rule with TLS intercept action (and service chain), and optionally remove the built-in Pinners rule
- Attach to a dummy VLAN (a VLAN with no assigned interfaces)

Your bypass topology should then look like this:

- L3 outbound topology configuration, skip the SSL Configuration settings, optionally re-use services and service chains
- No security policy rules - just the ALL rule with TLS bypass action (and service chain)
- Attach to a separate dummy VLAN (a VLAN with no assigned interfaces)

Note the name you use for each topology, as this will be called explicitly in the iRule. For example, if you name the topology "myTopology", that's the name you will use in each "call SSLOLIB::target" function (more on this in a moment). If you look in the SSL Orchestrator UI, you will see that it prepends "sslo_" (ex. sslo_myTopology). Don't include the "sslo_" portion in the iRule.

Step 3: Import the SSLOLIB iRule (attached here). Name it "SSLOLIB". This is the library rule, so no modifications are needed. The functions within (as described below) will return a true or false, so you can mix these together in your switching rule as needed.

Step 4: Import the traffic switching iRule (attached here). You will modify this iRule as required, but the SSLOLIB library rule makes this very simple.

Step 5: Create your external layered virtual server. This is the client-facing virtual server that will catch the user traffic and pass control to one of the internal SSL Orchestrator topology listeners.
- Type: Standard
- Source: 0.0.0.0/0
- Destination: 0.0.0.0/0
- Service Port: 0
- Protocol: TCP
- VLAN: client-facing VLAN
- Address Translation: disabled
- Port Translation: disabled
- Default Persistence Profile: ssl
- iRule: the traffic switching iRule

Note that the ssl persistence profile is enabled here to allow the iRules to handle client side SSL traffic without SSL profiles attached. Also make sure that Address and Port Translation are disabled before clicking Finished.

Step 6: Modify the traffic switching iRule to meet your traffic matching requirements (see below).

You have the basic layered architecture created. The only remaining step is to modify the traffic switching iRule as required, and that's pretty easy too.

The iRules

I'll repeat, there are near infinite options here. At the very least you need to VIP target from the external layered VIP to at least one of the SSL Orchestrator topology VIPs. The iRules provided here have been cultivated to make traffic selection and steering as easy as possible by pushing all of the pattern functions to a library iRule (SSLOLIB). The idea is that you will call a library function for a specific traffic pattern and if true, call a separate library function to steer that flow to the desired topology. All of the build instructions are contained inside the SSLOLIB iRule, with examples.

SSLOLIB iRule: https://github.com/f5devcentral/sslo-script-tools/blob/main/internal-layered-architecture/transparent-proxy/SSLOLIB

Switching iRule: https://github.com/f5devcentral/sslo-script-tools/blob/main/internal-layered-architecture/transparent-proxy/sslo-layering-rule

The function to steer to a topology (SSLOLIB::target) has three parameters:

- <topology name>: this is the name of the desired topology. Use the basic topology name as defined in the SSL Orchestrator configuration (ex. "intercept").
- ${sni}: this is static and should be left alone. It's used to convey the SNI information for logging.
- <message>: this is a message to send to the logs. In the examples, the message indicates the pattern matched (ex. "SRCIP").

Note, include an optional 'return' statement at the end to cancel any further matching. Without the 'return', the iRule will continue to process matches and settle on the value from the last evaluation.

Example (sending to a topology named "bypass"):

    call SSLOLIB::target "bypass" ${sni} "DSTIP" ; return

There are separate traffic matching functions for each pattern:

- SRCIP IP:<ip/subnet>
- SRCIP DG:<data group name> (address-type data group)
- SRCPORT PORT:<port/port-range>
- SRCPORT DG:<data group name> (integer-type data group)
- DSTIP IP:<ip/subnet>
- DSTIP DG:<data group name> (address-type data group)
- DSTPORT PORT:<port/port-range>
- DSTPORT DG:<data group name> (integer-type data group)
- SNI URL:<static url>
- SNI URLGLOB:<glob match url> (ends_with match)
- SNI CAT:<category name or list of categories>
- SNI DG:<data group name> (string-type data group)
- SNI DGGLOB:<data group name> (ends_with match)

Examples:

    # SOURCE IP
    if { [call SSLOLIB::SRCIP IP:10.1.0.0/16] } { call SSLOLIB::target "bypass" ${sni} "SRCIP" ; return }
    if { [call SSLOLIB::SRCIP DG:my-srcip-dg] } { call SSLOLIB::target "bypass" ${sni} "SRCIP" ; return }

    # SOURCE PORT
    if { [call SSLOLIB::SRCPORT PORT:5000] } { call SSLOLIB::target "bypass" ${sni} "SRCPORT" ; return }
    if { [call SSLOLIB::SRCPORT PORT:1000-60000] } { call SSLOLIB::target "bypass" ${sni} "SRCPORT" ; return }

    # DESTINATION IP
    if { [call SSLOLIB::DSTIP IP:93.184.216.34] } { call SSLOLIB::target "bypass" ${sni} "DSTIP" ; return }
    if { [call SSLOLIB::DSTIP DG:my-destip-dg] } { call SSLOLIB::target "bypass" ${sni} "DSTIP" ; return }

    # DESTINATION PORT
    if { [call SSLOLIB::DSTPORT PORT:443] } { call SSLOLIB::target "bypass" ${sni} "DSTPORT" ; return }
    if { [call SSLOLIB::DSTPORT PORT:443-9999] } { call SSLOLIB::target "bypass" ${sni} "DSTPORT" ; return }

    # SNI URL match
    if { [call SSLOLIB::SNI URL:www.example.com] } { call SSLOLIB::target "bypass" ${sni} "SNIURLGLOB" ; return }
    if { [call SSLOLIB::SNI URLGLOB:.example.com] } { call SSLOLIB::target "bypass" ${sni} "SNIURLGLOB" ; return }

    # SNI CATEGORY match
    if { [call SSLOLIB::SNI CAT:$static::URLCAT_list] } { call SSLOLIB::target "bypass" ${sni} "SNICAT" ; return }
    if { [call SSLOLIB::SNI CAT:/Common/Government] } { call SSLOLIB::target "bypass" ${sni} "SNICAT" ; return }

    # SNI URL DATAGROUP match
    if { [call SSLOLIB::SNI DG:my-sni-dg] } { call SSLOLIB::target "bypass" ${sni} "SNIDGGLOB" ; return }
    if { [call SSLOLIB::SNI DGGLOB:my-sniglob-dg] } { call SSLOLIB::target "bypass" ${sni} "SNIDGGLOB" ; return }

To combine these, you can use simple AND|OR logic. Example:

    if { ( [call SSLOLIB::DSTIP DG:my-destip-dg] ) and ( [call SSLOLIB::SRCIP DG:my-srcip-dg] ) }

Finally, adjust the static configuration variables in the traffic switching iRule RULE_INIT event:

    ## User-defined: Default topology if no rules match (the topology name as defined in SSLO)
    set static::default_topology "intercept"

    ## User-defined: DEBUG logging flag (1=on, 0=off)
    set static::SSLODEBUG 0

    ## User-defined: URL category list (create as many lists as required)
    set static::URLCAT_list {
        /Common/Financial_Data_and_Services
        /Common/Health_and_Medicine
    }

CPM policies

LTM policies (CPM) can work here too, but with the caveat that LTM policies do not support URL category lookups. You'll probably want to either keep the Pinners rule in your intercept topologies, or convert the Pinners URL category to a data group. A "url-to-dg-convert.sh" Bash script can do that for you.

url-to-dg-convert.sh: https://github.com/f5devcentral/sslo-script-tools/blob/main/misc-tools/url-to-dg-convert.sh

As with iRules, infinite options exist. But again, for simplicity, here is a good CPM configuration. For this you'll still need a "helper" iRule, but this requires minimal one-time updates.

    when RULE_INIT {
        ## Default SSLO topology if no rules match. Enter the name of the topology here
        set static::SSLO_DEFAULT "intercept"

        ## Debug flag
        set static::SSLODEBUG 0
    }
    when CLIENT_ACCEPTED {
        ## Set default topology (if no rules match)
        virtual "/Common/sslo_${static::SSLO_DEFAULT}.app/sslo_${static::SSLO_DEFAULT}-in-t-4"
    }
    when CLIENTSSL_CLIENTHELLO {
        if { ( [POLICY::names matched] ne "" ) and ( [info exists ACTION] ) and ( ${ACTION} ne "" ) } {
            if { $static::SSLODEBUG } {
                log -noname local0. "SSLO Switch Log :: [IP::client_addr]:[TCP::client_port] -> [IP::local_addr]:[TCP::local_port] :: [POLICY::rules matched [POLICY::names matched]] :: Sending to $ACTION"
            }
            virtual "/Common/sslo_${ACTION}.app/sslo_${ACTION}-in-t-4"
        }
    }

The only thing you need to do here is update the static::SSLO_DEFAULT variable to indicate the name of the default topology, for any traffic that does not match a traffic rule. For the comparable set of CPM rules, navigate to Local Traffic -> Policies in the BIG-IP UI and create a new CPM policy. Set the strategy as "Execute First matching rule", and give each rule a useful name, as the iRule can send this name in the logs.

- For source IP matches, use the "TCP address" condition at ssl client hello time.
- For source port matches, use the "TCP port" condition at ssl client hello time.
- For destination IP matches, use the "TCP address" condition at ssl client hello time. Click on the Options icon and select "Local" and "External".
- For destination port matches, use the "TCP port" condition at ssl client hello time. Click on the Options icon and select "Local" and "External".
- For SNI matches, use the "SSL Extension server name" condition at ssl client hello time.
- For each of the conditions, add a simple "Set variable" action at ssl client hello time. Name the variable "ACTION" and give it the name of the desired topology.

Apply the helper iRule and CPM policy to the external traffic steering virtual server.
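Both the optional 'return' in the switching iRule and the "Execute First matching rule" strategy of the CPM policy come down to the same evaluation order. The Python sketch below models that logic outside of BIG-IP. The helper names (`src_ip`, `sni_glob`, `select_topology`, `vip_path`) and the topology name "intercept_alt" are hypothetical and only loosely mirror the SSLOLIB functions. The virtual server path simply follows the naming pattern used in the iRules above.

```python
import ipaddress


def src_ip(subnet):
    """Matcher: true if the flow's source address is inside the subnet."""
    net = ipaddress.ip_network(subnet)
    return lambda flow: ipaddress.ip_address(flow["src"]) in net


def sni_glob(suffix):
    """Matcher: true if the SNI ends with the given suffix (ends_with match)."""
    return lambda flow: flow.get("sni", "").endswith(suffix)


def vip_path(topology):
    """Build the internal topology virtual server path from a topology name."""
    return f"/Common/sslo_{topology}.app/sslo_{topology}-in-t-4"


def select_topology(flow, rules, default="intercept", first_match=True):
    """Walk the rules in order and return the chosen topology name.

    first_match=True models 'return' after SSLOLIB::target (or the CPM
    first-match strategy); first_match=False models leaving 'return' out,
    where the last matching rule wins.
    """
    chosen = default
    for matches, topology in rules:
        if matches(flow):
            chosen = topology
            if first_match:
                break
    return chosen


rules = [
    (src_ip("10.1.0.0/16"), "bypass"),
    (sni_glob(".example.com"), "intercept_alt"),
]
flow = {"src": "10.1.20.5", "sni": "www.example.com"}

print(vip_path(select_topology(flow, rules)))                     # first match: bypass
print(vip_path(select_topology(flow, rules, first_match=False)))  # last match: intercept_alt
```

A flow that matches nothing falls through to the default topology, which is the role of static::default_topology (or static::SSLO_DEFAULT in the helper iRule).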
The "first" matching rule strategy is applied here, and all rules trigger on ssl client hello, so you can drag them around and re-order as necessary. Note again that all of the above only evaluates TLS traffic. Any non-TLS traffic will flow through the "default" topology that you identify in the iRule. It is possible to re-configure the above to evaluate HTTP traffic, but honestly the only significant use case here might be to allow or drop traffic at the policy.

Layered architecture for an explicit forward proxy

You can use the same logic to support an explicit proxy configuration. The only difference will be that the frontend layered virtual server will perform the explicit proxy functions. The backend SSL Orchestrator topologies will continue to be in layer 3 outbound (transparent proxy) mode. Normally SSL Orchestrator would build this for you, but it's pretty easy and I'll show you how. You could technically configure all of the SSL Orchestrator topologies as explicit proxies, and configure the client facing virtual server as a layer 3 pass-through, but that adds unnecessary complexity. If you also need to add explicit proxy authentication, that is done in the one frontend explicit proxy configuration.

Use the settings below to create an explicit proxy LTM configuration. If not mentioned, settings can be left as defaults.

Under SSL Orchestrator -> Configuration in the UI, click on the gear icon in the top right corner. This will expose the DNS resolver configuration. The easiest option here is to select "Local Forwarding Nameserver" and then enter the IP address of the local DNS service. Click "Save & Next" and then "Deploy" when you're done.

Under Network -> Tunnels in the UI, click Create. This will create a TCP tunnel for the explicit proxy traffic.

- Profile: select tcp-forward

Under Local Traffic -> Profiles -> Services -> HTTP in the UI, click Create. This will create the HTTP explicit proxy profile.

- Proxy Mode: Explicit
- Explicit Proxy: DNS Resolver: select the ssloGS-net-resolver
- Explicit Proxy: Tunnel Name: select the TCP tunnel created earlier

Under Local Traffic -> Virtual Servers, click Create. This will create the client-facing explicit proxy virtual server.

- Type: Standard
- Source: 0.0.0.0/0
- Destination: enter an IP the client can use to access the explicit proxy interface
- Service Port: enter the explicit proxy listener port (ex. 3128, 8080)
- HTTP Profile: HTTP explicit profile created earlier
- VLANs and Tunnel Traffic: set to "Enable on..." and select the client-facing VLAN
- Address Translation: enabled
- Port Translation: enabled

Under Local Traffic -> Virtual Servers, click Create again. This will create the TCP tunnel virtual server.

- Type: Standard
- Source: 0.0.0.0/0
- Destination: 0.0.0.0/0
- Service Port: *
- VLANs and Tunnel Traffic: set to "Enable on..." and select the TCP tunnel created earlier
- Address Translation: disabled
- Port Translation: disabled
- iRule: select the SSLO switching iRule
- Default Persistence Profile: select ssl

Note, make sure that Address and Port Translation are disabled before clicking Finished.

Under Local Traffic -> iRules, click Create. This will create a small iRule for the explicit proxy VIP to forward non-HTTPS traffic through the TCP tunnel. Change "<name-of-TCP-tunnel-VIP>" to reflect the name of the TCP tunnel virtual server created in the previous step.

    when HTTP_REQUEST {
        virtual "/Common/<name-of-TCP-tunnel-VIP>" [HTTP::proxy addr] [HTTP::proxy port]
    }

Add this new iRule to the explicit proxy virtual server.

To test, point your explicit proxy client at the IP and port defined above and give it a go. HTTP and HTTPS explicit proxy traffic arriving at the explicit proxy VIP will flow into the TCP tunnel VIP, where the SSLO switching rule will process traffic patterns and send to the appropriate backend SSL Orchestrator topology-as-function.
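For a quick test from a client machine, a few lines of Python can stand in for a browser. This is a minimal sketch that uses only the standard library. The proxy address 10.1.10.150:3128 is a placeholder for whatever destination IP and listener port you configured, and the function names are made up for illustration.

```python
import urllib.request


def build_proxy_opener(proxy_host, proxy_port):
    """Return an opener that sends HTTP and HTTPS requests via the explicit proxy."""
    proxy_url = f"http://{proxy_host}:{proxy_port}"
    handler = urllib.request.ProxyHandler({"http": proxy_url, "https": proxy_url})
    return urllib.request.build_opener(handler)


def fetch_status(url, proxy_host, proxy_port, timeout=5):
    """Fetch a URL through the proxy and return the HTTP status code.

    For HTTPS URLs that land on an intercept topology, certificate
    verification only succeeds if this client trusts the issuing CA
    that the topology uses to re-sign server certificates.
    """
    opener = build_proxy_opener(proxy_host, proxy_port)
    with opener.open(url, timeout=timeout) as response:
        return response.status


# Example call (needs a reachable proxy, so it is left commented out):
# print(fetch_status("http://www.example.com/", "10.1.10.150", 3128))
```

Running the same request against a hostname that should be bypassed and one that should be intercepted, with debug logging enabled in the switching iRule, is a simple way to confirm that each flow reaches the intended topology.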
Testing and Considerations

Assuming you have the default topology defined in the switching iRule's RULE_INIT, and no traffic matching rules defined, all traffic from the client should pass effortlessly through that topology. If it does not:

- Ensure the name defined in the static::default_topology variable is the actual name of the topology, without the prepended "sslo_".
- Enable debug logging in the iRule and observe the LTM log (/var/log/ltm) for any anomalies.
- Worst case, remove the client facing VLAN from the frontend switching virtual server and attach it to one of your topologies, effectively bypassing the layered architecture. If traffic does not pass in this configuration, then it cannot pass in the layered architecture either. You need to troubleshoot the SSL Orchestrator topology itself. Once you have that working, put the dummy VLAN back on the topology and add the client facing VLAN to the switching virtual server.

Considerations

The above provides a unique way to solve for complex architectures. There are however a few minor considerations:

- This is defined outside of SSL Orchestrator, so it would not be included in the internal HA sync process. However, this architecture places very little administrative burden on the topologies directly. It is recommended that you create and sync all of the topologies first, then create the layered virtual server and iRules, and then manually sync the boxes. If you make any changes to the switching iRule (or CPM policy), that should not affect the topologies. You can initiate a manual BIG-IP HA sync to copy the changes to the peer.
- If upgrading to a new version of SSL Orchestrator (only), no additional changes are required. If upgrading to a new BIG-IP version, it is recommended to break HA (SSL Orchestrator 8.0 and below) before performing the upgrade. The external switching virtual server and iRules should migrate natively.

Summary

And there you have it. In just a few steps you've been able to reduce complexity and add capabilities, and along the way you have hopefully recognized the immense flexibility at your command.

SSL Orchestrator Advanced Use Cases: Forward Proxy Authentication