security

iRule based RADIUS Server Stack

Code is community submitted, community supported, and recognized as ‘Use At Your Own Risk’.

Short Description

The iRule based RADIUS Server Stack can be used to turn a UDP-based Virtual Server into a flexible and fully featured RADIUS server, including regular REQUEST/RESPONSE as well as CHALLENGE/RESPONSE based RADIUS authentication.

Problem solved by this Code Snippet

The RADIUS Server Stack covers the RADIUS protocol core mechanics outlined in RFC 2865 and RFC 5080 and can easily be extended to support other RADIUS-related RFCs built on top of these specifications. It can be used as an extension for LTM's missing RADIUS server functionality, as well as missing iRule command functionality, to support self-hosted RADIUS server scenarios.

How to use this Code Snippet

Visit my GitHub repository for a further explanation of how the RADIUS Server Stack can be used to perform RADIUS server operations within an iRule.

Code Snippet Meta Information

Version: 1.1

Coding Language: TCL

Full Code Snippet

Visit: https://github.com/KaiWilke/F5-iRule-RADIUS-Server-Stack

iRule based RADIUS Client Stack

Code is community submitted, community supported, and recognized as ‘Use At Your Own Risk’.

Short Description

The iRule based RADIUS Client Stack can be used to perform RADIUS based user authentication via SIDEBAND UDP connections.

Problem solved by this Code Snippet

The RADIUS Client Stack covers the RADIUS protocol core mechanics outlined in RFC 2865 and RFC 5080 and can be used for Password Authentication Protocol (PAP) authentication within an iRule.

How to use this Code Snippet

Visit my GitHub repository for a further explanation of how the RADIUS Client Stack can be used to perform RADIUS client operations within an iRule.

Code Snippet Meta Information

Version: 1.1

Coding Language: TCL

Full Code Snippet

Visit: https://github.com/KaiWilke/F5-iRule-RADIUS-Client-Stack

Accelerate your AI initiatives using F5 VELOS

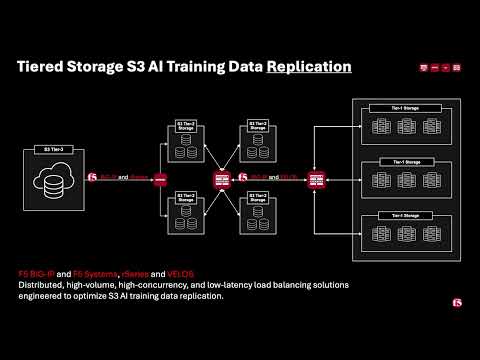

Introduction

F5 VELOS is a rearchitected, next-generation hardware platform that scales application delivery performance and automates application services to address many of today’s most critical business challenges. F5 VELOS is a key component of the F5 Application Delivery and Security Platform (ADSP).

Demo Video

High-Throughput and Concurrency for AI Data Ingestion

Given the escalating data demands of AI training and inference pipelines, there is a critical need to architect object-based storage systems, such as S3, and corresponding clients in a manner that ensures high throughput, scalability, and fault tolerance under massive parallel workloads.

- S3 Storage Systems increase scalability and resiliency by distributing data objects across multiple storage nodes, leveraging a unified “bucket” abstraction to streamline data organization, access, and fault tolerance.
- S3 Client Implementations employ highly parallelized, multi-threaded operations to maximize data transfer rates and throughput, satisfying the low-latency, high-volume requirements of AI and other computationally intensive workloads.

Performance and Security for AI Workloads

- F5 BIG-IP delivers multi-layer load balancing reinforced by robust in-flight security services and performance thresholds engineered to meet or exceed the most demanding enterprise-scale capacity requirements.
- F5 VELOS Chassis & Blades have advanced FPGA accelerators, high-performance CPU architectures, and cryptographic offload engines. Combined, they scale to multi-terabit throughput to meet or exceed the most demanding enterprise capacity requirements.
- F5 BIG-IP and VELOS enable high-performance data mobility and security for AI workloads anywhere.

Load Balancing for S3 AI Training Data Replication

- Data Replication for Training: AI model training and retraining often require the replication of data from web-service-based object storage tiers to high-performance clustered filesystems.
- Market Constraints: Tier-1 storage systems command high costs, and the ecosystem of certified providers for AI-specific architectures remains comparatively narrow.
- High-Performance Requirements: Effective model training demands access to Tier-1 storage that supports hardware-accelerated data transfers, ensuring rapid delivery of input to GPU memory.
- S3 Based Migration: Replication from cost-efficient, lower-performance storage repositories to Tier-1 infrastructure is commonly orchestrated via the S3 protocol to maintain both scalability and performance.

Tiered Storage S3 AI Training Data Replication

F5 BIG-IP and F5 Systems, rSeries and VELOS

Distributed, high-volume, high-concurrency, and low-latency load balancing solutions engineered to optimize S3 AI training data replication.

BIG-IP Best-In-Class Traffic Management & Security: SPEED

- Smart Load Balancing & Security: Directs traffic to the optimal storage for performance, security, and availability.
- Seamless Data Flow: BIG-IP LTM ensures efficient, secure routing from external sources to local storage.
- Optimized S3 Routing: BIG-IP DNS directs client connections to highly available storage nodes for smooth data ingestion.

BIG-IP Best-In-Class Traffic Management & Security: SCALE

- High-Throughput Traffic Management: Optimize TCP and HTTPS flows for seamless object storage access.
- Accelerated Packet Processing: Leverage the embedded ePVA in FPGA for high-performance L4 IPv4 throughput.
- Crypto Offload for Speed: BIG-IP LTM offloads encryption to best-in-class hardware on rSeries and VELOS, boosting performance.

BIG-IP Best-In-Class Traffic Management & Security: SECURITY

- Robust DDoS Protection: BIG-IP’s AFM defends against volumetric and targeted attacks.
- Secure Traffic Management: BIG-IP LTM ensures efficient, secure routing from external sources to local storage.
- End-to-End Data Protection: Safeguards AI workloads with policy-driven security and threat mitigation.
F5 Systems Enables Accelerated AI Application Delivery

- F5 VELOS, rSeries, and BIG-IP: Enable distributed, high-volume, high-concurrency, low-latency application delivery for S3.
- The All-New VELOS CX1610: Provides the multi-terabit throughput necessary for high-performance traffic orchestration.
- F5 BIG-IP App Services Suite: Simplify and secure application delivery for the most demanding high-throughput AI infrastructure needs.

Conclusion

Unleash Massive Throughput

- The All-New VELOS BX520 Blade
- The All-New VELOS CX1610 Chassis

Related Articles

- F5 VELOS: A Next-Generation Fully Automatable Platform
- F5 rSeries: Next-Generation Fully Automatable Hardware
- Realtime DoS mitigation with VELOS BX520 Blade
- DEMO: The Next Generation of F5 Hardware is Ready for you

F5 rSeries: Next-Generation Fully Automatable Hardware

What is rSeries?

F5 rSeries is a rearchitected, next-generation hardware platform that scales application delivery performance and automates application services to address many of today’s most critical business challenges. F5 rSeries is a key component of the F5 Application Delivery and Security Platform (ADSP).

rSeries relies on a Kubernetes-based platform layer (F5OS) that is tightly integrated with F5 TMOS software. Moving to a microservice-based platform layer allows rSeries to provide additional functionality that was not possible in previous generations of F5 BIG-IP platforms. Customers do not need to learn Kubernetes but still get its benefits. Management of the hardware is still done via a familiar F5 CLI, webUI, or API. The added automation capabilities can greatly simplify the process of deploying F5 products. Automation saves a significant amount of time and resources, which translates to more time for critical tasks.

F5OS rSeries UI

Demo Video

Why is this important?

- Get more done in less time by using a highly automatable hardware platform that can deploy software solutions in seconds, not minutes or hours.
- Increased performance improves ROI: The rSeries platform is a high-performance and highly scalable appliance with improved processing power.
- Running multiple versions on the same platform allows for more flexibility than previously possible.
- Pay-as-you-Grow licensing options unlock more CPU resources.
Key rSeries Use-Cases

NetOps Automation

- Shorten time to market by automating network operations and offering cloud-like orchestration with full-stack programmability
- Drive app development and delivery with self-service and faster response time

Business Continuity

- Drive consistent policies across on-prem and public cloud and across hardware and software-based ADCs
- Build resiliency with rSeries’ superior performance and failover capabilities
- Future-proof investments by running multiple versions of apps side-by-side; migrate applications at your own pace

Cloud Migration On-Ramp

- Accelerate cloud strategy by adopting cloud operating models and on-demand scalability with rSeries and use that as an on-ramp to cloud
- Dramatically reduce TCO with rSeries systems; extend commercial models to migrate from hardware to software or as applications move to cloud

Automation Capabilities

Declarative APIs and integration with automation frameworks (Terraform, Ansible) greatly simplify operations and reduce overhead:

- AS3 (Application Services 3 Extension): A declarative API that simplifies the configuration of application services. With AS3, customers can deploy and manage configurations consistently across environments.
- Ansible Automation: Prebuilt Ansible modules for rSeries enable automated provisioning, configuration, and updates, reducing manual effort and minimizing errors.
- Terraform: Organizations leveraging Infrastructure as Code (IaC) can use Terraform to define and automate the deployment of rSeries appliances and associated configurations.

Example JSON file:

Example of running the Automation Playbook:

Example of the results:

More information on Automation:

- Automating F5OS on rSeries
- GitHub Automation Repository

Specialized Hardware Performance

rSeries offers more hardware-accelerated performance capabilities, with more FPGA chipsets that are more tightly integrated with TMOS. It also includes the latest Intel processing capabilities.
This enhances the following:

- SSL and compression offload
- L4 offload for higher performance and reduced load on software
- Hardware-accelerated SYN flood protection
- Hardware-based protection from more than 100 types of denial-of-service (DoS) attacks
- Support for F5 Intelligence Services

Migration Options (BIG-IP Journeys)

Use BIG-IP Journeys to easily migrate your existing configuration to rSeries. This covers the following:

- Entire L4-L7 configuration can be migrated
- Individual Applications can be migrated
- BIG-IP Tenant configuration can be migrated
- Automatically identify and resolve migration issues
- Convert UCS files into AS3 declarations if needed
- Post-deployment diagnostics and health

The Journeys Tool, available on DevCentral’s GitHub, facilitates the migration of legacy BIG-IP configurations to rSeries-compatible formats. Customers can convert UCS files, validate configurations, and highlight unsupported features during the migration process. Multi-tenancy capabilities in rSeries simplify the process of isolating workloads during and after migration.

GitHub repository for F5 Journeys

Conclusion

The F5 rSeries platform addresses the modern enterprise’s need for high-performance, scalable, and efficient application delivery and security solutions. By combining cutting-edge hardware capabilities with robust automation tools and flexible migration options, rSeries empowers organizations to seamlessly transition from legacy platforms while unlocking new levels of performance and operational agility. Whether driven by the need for increased throughput or advanced multi-tenancy, the rSeries platform stands as a future-ready solution for securing and optimizing application delivery in an increasingly complex IT landscape.

Related Content

- Cloud Docs rSeries Guide
- F5 rSeries Appliance Datasheet
- F5 VELOS: A Next-Generation Fully Automatable Platform
- DEMO: The Next Generation of F5 Hardware is Ready for you

F5 VELOS: A Next-Generation Fully Automatable Platform

What is VELOS?

The F5 VELOS platform is the next generation of F5’s chassis-based systems. VELOS can bridge traditional and modern application architectures by supporting a mix of traditional F5 BIG-IP tenants as well as next-generation BIG-IP Next tenants in the future. F5 VELOS is a key component of the F5 Application Delivery and Security Platform (ADSP).

VELOS relies on a Kubernetes-based platform layer (F5OS) that is tightly integrated with F5 TMOS software. Moving to a microservice-based platform layer allows VELOS to provide additional functionality that was not possible in previous generations of F5 BIG-IP platforms. Customers do not need to learn Kubernetes but still get its benefits. Management of the chassis is still done via a familiar F5 CLI, webUI, or API. The added automation capabilities can greatly simplify the process of deploying F5 products. Automation saves a significant amount of time and resources, which translates to more time for critical tasks.

F5OS VELOS UI

Demo Video

Why is VELOS important?

- Get more done in less time by using a highly automatable hardware platform that can deploy software solutions in seconds, not minutes or hours.
- Increased performance improves ROI: The VELOS platform is a high-performance and highly scalable chassis with improved processing power.
- Running multiple versions on the same platform allows for more flexibility than previously possible.
- Significantly reduce the TCO of previous-generation hardware by consolidating multiple platforms into one.
Key VELOS Use-Cases

NetOps Automation

- Shorten time to market by automating network operations and offering cloud-like orchestration with full-stack programmability
- Drive app development and delivery with self-service and faster response time

Business Continuity

- Drive consistent policies across on-prem and public cloud and across hardware and software-based ADCs
- Build resiliency with VELOS’ superior platform redundancy and failover capabilities
- Future-proof investments by running multiple versions of apps side-by-side; migrate applications at your own pace

Cloud Migration On-Ramp

- Accelerate cloud strategy by adopting cloud operating models and on-demand scalability with VELOS and use that as an on-ramp to cloud
- Dramatically reduce TCO with VELOS systems; extend commercial models to migrate from hardware to software or as applications move to cloud

Automation Capabilities

Declarative APIs and integration with automation frameworks (Terraform, Ansible) greatly simplify operations and reduce overhead:

- AS3 (Application Services 3 Extension): A declarative API that simplifies the configuration of application services. With AS3, customers can deploy and manage configurations consistently across environments.
- Ansible Automation: Prebuilt Ansible modules for VELOS enable automated provisioning, configuration, and updates, reducing manual effort and minimizing errors.
- Terraform: Organizations leveraging Infrastructure as Code (IaC) can use Terraform to define and automate the deployment of VELOS appliances and associated configurations.

Example JSON file:

Example of running the Automation Playbook:

Example of the results:

More information on Automation:

- Automating F5OS on VELOS
- GitHub Automation Repository

Specialized Hardware Performance

VELOS offers more hardware-accelerated performance capabilities, with more FPGA chipsets that are more tightly integrated with TMOS. It also includes the latest Intel processing capabilities.
This enhances the following:

- SSL and compression offload
- L4 offload for higher performance and reduced load on software
- Hardware-accelerated SYN flood protection
- Hardware-based protection from more than 100 types of denial-of-service (DoS) attacks
- Support for F5 Intelligence Services

VELOS CX1610 chassis

VELOS BX520 blade

Migration Options (BIG-IP Journeys)

Use BIG-IP Journeys to easily migrate your existing configuration to VELOS. This covers the following:

- Entire L4-L7 configuration can be migrated
- Individual Applications can be migrated
- BIG-IP Tenant configuration can be migrated
- Automatically identify and resolve migration issues
- Convert UCS files into AS3 declarations if needed
- Post-deployment diagnostics and health

The Journeys Tool, available on DevCentral’s GitHub, facilitates the migration of legacy BIG-IP configurations to VELOS-compatible formats. Customers can convert UCS files, validate configurations, and highlight unsupported features during the migration process. Multi-tenancy capabilities in VELOS simplify the process of isolating workloads during and after migration.

GitHub repository for F5 Journeys

Conclusion

The F5 VELOS platform addresses the modern enterprise’s need for high-performance, scalable, and efficient application delivery and security solutions. By combining cutting-edge hardware capabilities with robust automation tools and flexible migration options, VELOS empowers organizations to seamlessly transition from legacy platforms while unlocking new levels of performance and operational agility. Whether driven by the need for increased throughput or advanced multi-tenancy, the VELOS platform stands as a future-ready solution for securing and optimizing application delivery in an increasingly complex IT landscape.

Related Content

- Cloud Docs VELOS Guide
- F5 VELOS Chassis System Datasheet
- DEMO: The Next Generation of F5 Hardware is Ready for you

Base64 decoding issue (JSON request)

Hello everyone,

I'm facing an issue with Base64 decoding on F5 ASM. The request body looks like this.

Original message before encoding:

    { "data": { "name":"khaled", "Age":"30", "Car":"BMW", "Conutry":"Egypt", "City":"Cairo" } }

The developer encoded only the value of the "data" key:

    {"data":"IHsKICAgICAgICAibmFtZSI6ICJraGFsZWQiLAogICAgICAgICJBZ2UiOiAiMzAiLAogICAgICAgICJDYXIiOiAiQk1XIiwKICAgICAgICAiQ29udXRyeSI6ICJFZ3lwdCIsCiAgICAgICAgIkNpdHkiOiAiQ2Fpcm8iCiAgICB9"}

I created a JSON profile with Base64 decoding set to required. When F5 ASM decodes the request body, the value part is decoded correctly, but "data" becomes garbage, because ASM doesn't know that only part of the request is encoded rather than the whole request body. How can I fix this behavior?

After decoding:

    uZ { "name": "khaled", "Age": "30", "Car": "BMW", "Conutry": "Egypt", "City": "Cairo" }

I searched for a fix and found the article Securing Base64-Encoded Parameters. I added a "data" parameter and then followed its steps:

- For the Parameter Value Type setting, select User-input value.
- On the Data Type tab, for the Data Type setting, select either Alpha-Numeric or File Upload.
- Select the Base64 Decoding check box if you want the system to apply base64 decoding to values for this parameter.

When I changed the profile to disable decoding on the request body, a lot of violations were triggered (meta chars { } " :) for this request:

    {"data":"IHsKICAgICAgICAibmFtZSI6ICJraGFsZWQiLAogICAgICAgICJBZ2UiOiAiMzAiLAogICAgICAgICJDYXIiOiAiQk1XIiwKICAgICAgICAiQ29udXRyeSI6ICJFZ3lwdCIsCiAgICAgICAgIkNpdHkiOiAiQ2Fpcm8iCiAgICB9"}

Update an ASM Policy Template via REST-API - the reverse engineering way

I always want to automate as many tasks as possible. I already have a pipeline to import ASM policy templates. Today I needed to update these base policies. Simply overwriting the template with the import task does not work. I got the error message "The policy template ax-f5-waf-jump-start-template already exists.". OK, I need an overwrite task.

Searching around did not turn up a solution, not even one that does not work. Simply nothing; my Google-fu has deserted me. A quick chat with an AI gave me a solution that was hallucinated. The AI answer would be funny if it weren't so sad. I had no hope that AI could solve this problem for me, and that was confirmed, again.

I was configuring Linux systems before the internet was widely available. Let's dig into the internals of the F5 REST API implementation and solve the problem on my own.

I took a valid payload and removed a required parameter, "name" in this case. The error response changed, which is always a good signal at this stage of experimenting. The error response was "Failed Required Fields: Must have at least 1 of (title, name, policyTemplate)". So there is also a valid field named "policyTemplate". My first thought: this could be a reference to an existing template to update.

I added the "policyTemplate" parameter and assigned it an existing template id. The error message changed again. It now threw "Can't use string (\"ox91NUGR6mFXBDG4FnQSpQ\") as a HASH ref while \"strict refs\" in use at /usr/local/share/perl5/F5/ASMConfig/Entity/Base.pm line 888.". A Perl error that is readable, and the Perl file is available in plain text.

Looking at the file at line 888: the Perl code looks for an "id" field as a property of the "policyTemplate" parameter. I changed the payload again and added the id property. And wow, that was easy: it works and the template was updated.

Finally, the payload for people who do not want to do reverse engineering.
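The two request bodies this post arrives at (update through `policyTemplate.id`, create through `name`) can be sketched in Python as below. This is a minimal sketch under stated assumptions, not an official client: the helper names are hypothetical, only the endpoint path and the field names come from the post, and the actual HTTP call and authentication are left out on purpose.

```python
import json

# Endpoint taken from the post; the request itself is not sent in this sketch.
IMPORT_TASK_PATH = "/mgmt/tm/asm/tasks/import-policy-template"


def uploaded_filename(username: str, filename: str) -> str:
    """Return the uploaded file reference in the <username>~<filename> form."""
    return f"{username}~{filename}"


def build_create_payload(name: str, username: str, filename: str) -> dict:
    """Body for creating a new policy template (hypothetical helper)."""
    return {"name": name, "filename": uploaded_filename(username, filename)}


def build_update_payload(template_id: str, username: str, filename: str) -> dict:
    """Body for updating an existing template (hypothetical helper).

    policyTemplate must be an object with an "id" property; passing the id
    as a plain string is what triggered the Perl HASH ref error in the post.
    """
    return {
        "filename": uploaded_filename(username, filename),
        "policyTemplate": {"id": template_id},
    }


if __name__ == "__main__":
    # "admin" and "template.json" are placeholder values for illustration.
    body = build_update_payload("ox91NUGR6mFXBDG4FnQSpQ", "admin", "template.json")
    print("POST", IMPORT_TASK_PATH)
    print(json.dumps(body, indent=2))
```

Keeping the payload builders separate from the transport makes them easy to check in a pipeline before anything is sent to the device.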
Update

POST the following payload to /mgmt/tm/asm/tasks/import-policy-template to update an ASM policy template:

    { "filename": "<username>~<filename>", "policyTemplate": { "id": "ox91NUGR6mFXBDG4FnQSpQ" } }

Create

POST the following payload to /mgmt/tm/asm/tasks/import-policy-template to create an ASM policy template:

    { "name": "<name>", "filename": "<username>~<filename>" }

Hint: You must upload the template to /var/config/rest/downloads/<username>~<filename> beforehand.

Conclusion

Documentation is sometimes overrated if you can read Perl. Or did I miss the API documentation for this endpoint, and this was just an exercise for me?

Even More Hands-On Quantum-Safe PKI: Building Enterprise PQC Certificate Authorities with EJBCA Community Edition

Your PQC CAs just graduated from the command line to the corner office.

Back in December and again in January we published Hands-On Quantum-Safe PKI, a step-by-step lab for building quantum-resistant certificate authorities from scratch using OpenSSL. You learned ML-DSA algorithms, built a Root CA, chained an Intermediate CA, issued end-entity certificates, and stood up revocation infrastructure, all by hand, one command at a time. Billions of you went through it. Six of you even enjoyed it.

But here's the thing about building a CA with OpenSSL: it works beautifully for learning and it works beautifully for testing. It does not work beautifully at 2 AM when someone asks you to revoke a certificate and your "management interface" is vim index.txt. Calgon, take me away!

The lab has expanded. The Post-Quantum Cryptography Step-by-Step Lab now includes a complete EJBCA Community Edition deployment track, nine modules that take you from bare metal to enterprise-managed, quantum-resistant Certificate Authorities running inside a real PKI management platform. Same SassyCorp identity. Same ML-DSA-87 Root CA and ML-DSA-65 Intermediate CA. Now with a database, an application server, audit logs, and a web UI that doesn't require you to memorize openssl ca flags. But if you want to, we won't stop you, and we love you for that.

🔥🔥🔥 Access the Complete Lab on GitHub 🔥🔥🔥

The new lab walks through deploying Keyfactor's EJBCA Community Edition v9.3 on Ubuntu with WildFly 35, MariaDB, and OpenJDK 21. You configure a 3-port TLS architecture: HTTP on 8080, public HTTPS on 8442, and mutual TLS admin access on 8443, where your browser has to prove its worth before EJBCA lets you touch anything. It's PKI with actual access control, which is a refreshing change from chmod 600 being your entire security model.

The Evolution: Why This Matters

Think of it as three stages of PQC readiness. The first lab (CNSA 2.0 with OpenSSL) taught you algorithm mechanics for federal use cases.
The second lab (FIPS 203/204/205) broadened that to commercial compliance. This third expansion puts those same algorithms inside infrastructure that can actually manage certificates at scale: issuance, renewal, revocation, OCSP, CRL distribution, role-based access, and audit logging that doesn't live in a flat file.

That progression is intentional. You can't meaningfully operate an enterprise PKI platform if you don't understand what's happening underneath it. And you can't stop at OpenSSL if your organization needs to manage more than a handful of certificates.

The compliance clock is running: NIST is deprecating classical asymmetric algorithms by 2030, the NSA wants full CNSA 2.0 enforcement by 2033, and Australia is trying to eliminate classical public-key crypto entirely by 2030 (bless their ambitious hearts). Having people who can actually stand up and operate PQC certificate authorities isn't optional anymore. It's PKI Thunderdome!

What You Can Do Next

After completing the lab, you'll have a fully operational EJBCA instance with three CAs: the RSA ManagementCA for internal admin plumbing, plus your ML-DSA-87 Root and ML-DSA-65 Intermediate for quantum-resistant certificate operations. From here you can issue end-entity certificates through EJBCA's enrollment interface, configure CRL distribution points, set up OCSP responders, explore the REST API, and experiment with hybrid certificates that combine PQC and classical algorithms. You've got an enterprise PKI playground that happens to be quantum-resistant.

The whole thing runs on a single VM if you want; that's what we did. No Docker, no scripts, no "just run this compose file and trust me." Every command is manual, every configuration file is edited by hand, and every step explains why. We remain faithful disciples of the "Learn Python the Hard Way" school of pedagogy, mostly because it works and partly because suffering builds character.
The lab is open source, community-driven, and waiting for your pull requests. Go break something, then fix it. That's how you learn.

Access the Complete Lab on GitHub →

References

| Resource | URL |
| --- | --- |
| EJBCA Community Edition (Keyfactor) | https://github.com/Keyfactor/ejbca-ce |
| Keyfactor PQC Hybrid CA Tutorial | https://docs.keyfactor.com/ejbca/latest/tutorial-create-pqc-hybrid-ca-chain |
| Keyfactor EJBCA Installation Docs | https://docs.keyfactor.com/ejbca-software/latest/installation |
| WildFly 35 Documentation | https://docs.wildfly.org/35/ |
| PQC Coalition: International Requirements | https://pqcc.org/international-pqc-requirements/ |

Practical Mapping Guide - F5 BIG-IP TMOS Modules to Feature-Scoped CNFs

Introduction

BIG-IP TMOS and BIG-IP CNFs solve similar problems with very different deployment and configuration models. In TMOS you deploy whole modules (LTM, AFM, DNS, etc.), while in CNFs you deploy only the specific features and data plane functions you need as cloud-native components.

Modules vs feature scoped services

In TMOS, enabling LTM gives you a broad set of capabilities in one module: virtual servers, pools, profiles, iRules, SNAT, persistence, etc., all living in the same configuration namespace. In CNFs, you deploy only the features you actually need, expressed as discrete custom resources: for example, a routing CNF for more precise TMM routing, a firewall CNF for policies, or specific CRs for profiles and NAT, rather than bringing the entire LTM or AFM module along “just in case”.

Configuration objects vs custom resources

TMOS configuration is organized as objects under modules (for example: LTM virtual, LTM pool, security, firewall policy, net vlan), managed via tmsh, GUI, or iControl REST. CNFs expose those same capabilities through Kubernetes Custom Resources (CRs): routes, policies, profiles, NAT, VLANs, and so on are expressed as YAML and applied with GitOps-style workflows, making individual features independently deployable with a version history.

Coarse-grained vs precise feature deployment

On TMOS, deploying a use case often means standing up a full BIG-IP instance with multiple modules enabled, even if the application only needs basic load balancing with a single HTTP profile and a couple of firewall rules. With CNFs, you can carve out exactly what you need: for example, only a precise TMM routing function plus the specific TCP/HTTP/SSL profiles and security policies required for a given application or edge segment, reducing blast radius and resource footprint.
BIG-IP Next CNF Custom Resources (CRs) extend Kubernetes APIs to configure the Traffic Management Microkernel (TMM) and Advanced Firewall Manager (AFM), providing declarative equivalents to BIG-IP TMOS configuration objects. This mapping ensures functional parity for L4-L7 services, security, and networking while enabling cloud-native scalability. The focus here is on core mappings, with examples for iRules and Profiles.

What are Kubernetes Custom Resources

Think of the Kubernetes API as a menu of objects you can create: Pods (containers), Services (networking), Deployments (replicas). CRs add new menu items for your unique needs. You first define a CustomResourceDefinition (CRD), the blueprint, and then create CR instances, the actual objects that use that blueprint.

How CRs Work (Step-by-Step)

- Create CRD - Define a new object type (e.g., "F5BigFwPolicy")
- Apply CRD - The Kubernetes API server registers it, adding new REST endpoints like /apis/f5net.com/v1/f5bigfwpolicies
- Create CR - Write YAML instances of your new type and apply them with kubectl
- Controllers Watch - Custom operators/controllers react to CR changes, creating real resources (Pods, ConfigMaps, etc.)

CR examples

Networking and NAT Mappings

Networking CRs handle interfaces and routing, mirroring TMOS VLANs, Self-IPs, and NAT mapping.

| CNF CR | TMOS Object(s) | Purpose |
| --- | --- | --- |
| F5BigNetVlan | VLANs, Self-IPs, MTU | Interface config |
| F5BigCneSnatpool | SNAT Automap | Source NAT |

Example: F5BigNetVlan CR sets VLAN tags and IPs, applied via kubectl apply, propagating to TMM like tmsh create net vlan.

Security and Protection Mappings

Protection CRs map to AFM and DoS modules for threat mitigation.
| CNF CR | CR Purpose | TMOS Object(s) | Purpose |
| --- | --- | --- | --- |
| F5BigFwPolicy | Applies industry-standard firewall rules to TMM | AFM Policies | Stateful ACL filtering |
| F5BigFwRulelist | Consists of an array of ACL rules | AFM Rule list | Stateful ACL filtering |
| F5BigSvcPolicy | Allows creation of Timer Policies and attaching them to the Firewall Rules | AFM Policies | Stateful ACL filtering |
| F5BigIpsPolicy | Allows you to filter inspected traffic (matched events) by various properties such as the inspection profile’s host (virtual server or firewall policy), traffic properties, inspection action, or inspection service | IPS AFM policies | Enforce IPS |
| F5BigDdosGlobal | Configures the TMM Proxy Pod to protect applications and the TMM Pod from Denial of Service / Distributed Denial of Service (DoS/DDoS) attacks | Global DoS/DDoS | Device DoS protection |
| F5BigDdosProfile | The Percontext DDoS CRD configures the TMM Proxy Pod to protect applications from Denial of Service / Distributed Denial of Service (DoS/DDoS) attacks | DoS/DDoS per profile | Per Context DoS profile protection |
| F5BigIpiPolicy | Each policy contains a list of categories and actions that can be customized, with the IPRep database being common. Feedlist-based policies can also customize the IP addresses configured | IP Intelligence Policies | Reputation-based blocking |

Example: F5BigFwPolicy references rule-lists and zones, equivalent to tmsh create security firewall policy.

Traffic Management Mappings

Traffic CRs proxy TCP/UDP and support ALGs, akin to LTM Virtual Servers and Pools.

| CNF CR | TMOS Object(s) | Purpose |
| --- | --- | --- |
| Part of the Apps CRs: Virtual Server (Ingress/HTTPRoute/GRPCRoute) | LTM Virtual Servers | Load balancing |
| Part of the Apps CRs: Pool (Endpoints) | LTM Pools | Node groups |
| F5BigAlgFtp | FTP Profile/ALG | FTP gateway |
| F5BigDnsCache | DNS Cache | Resolution/caching |

Example: GRPCRoute CR defines backend endpoints and profiles, mapping to tmsh create ltm virtual.
Profiles Mappings with Examples

Profiles CRs customize traffic handling and are referenced by Traffic Management CRs, directly paralleling TMOS profiles.

| CNF CR | TMOS Profile(s) | Example Configuration Snippet |
| --- | --- | --- |
| F5BigTcpSetting | TCP Profile | spec: { defaultsFrom: "tcp-lan-optimized" } tunes congestion like tmsh create ltm profile tcp optimized |
| F5BigUdpSetting | UDP Profile | spec: { idleTimeout: 300 } sets timeouts |
| F5BigUdpSetting | HTTP Profile | spec: { http2Profile: "http2" } enables HTTP/2 |
| F5BigClientSslSetting | ClientSSL Profile | spec: { certKeyChain: { name: "default" } } for TLS termination |
| F5PersistenceProfile | Persistence Profile | Enables source/dest persistence |

Profiles attach to Virtual Servers/Ingresses declaratively, e.g., spec.profiles: [{ name: "my-tcp", context: "tcp" }].

iRules Support and Examples

CNF fully supports iRules for custom logic, integrated into Traffic Management CRs like Virtual Servers or FTP/RTSP. iRules execute in TMM, preserving TMOS scripting.

Example CR Snippet:

    apiVersion: k8s.f5net.com/v1
    kind: F5BigCneIrule
    metadata:
      name: cnfs-dns-irule
      namespace: cnf-gateway
    spec:
      iRule: >
        when DNS_REQUEST {
          if { [IP::addr [IP::remote_addr] equals 10.10.1.0/24] } {
            cname cname.siterequest.com
          } else {
            host 10.20.20.20
          }
        }

This mapping facilitates TMOS-to-CNF migrations using tools like F5Big-Ip Controller for CR generation from UCS configs. For full CR specs, refer to the official docs. The updated full list of CNF CRs per release can be found here: https://clouddocs.f5.com/cnfs/robin/latest/cnf-custom-resources.html

Conclusion

In this article, we examined how BIG-IP Next CNF redefines the TMOS module model into a feature-scoped, cloud-native service architecture. By mapping TMOS objects such as virtual servers, profiles, and security policies to their corresponding CNF Custom Resources, we illustrated how familiar traffic management and security constructs translate into declarative Kubernetes workflows.
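As a follow-up to the iRule CR example in this article, the same manifest can also be generated programmatically when CRs are produced by a pipeline instead of written by hand. The Python sketch below is an assumption-laden illustration: `build_irule_cr` is a hypothetical helper, the `apiVersion`, `kind`, metadata, and `spec.iRule` values are copied from the article's snippet, and the output is JSON because kubectl accepts JSON manifests as well as YAML.

```python
import json

# iRule body copied from the article's F5BigCneIrule example.
DNS_IRULE = """when DNS_REQUEST {
  if { [IP::addr [IP::remote_addr] equals 10.10.1.0/24] } {
    cname cname.siterequest.com
  } else {
    host 10.20.20.20
  }
}"""


def build_irule_cr(name: str, namespace: str, irule: str) -> dict:
    """Build an F5BigCneIrule manifest as a plain dict (hypothetical helper).

    Only the fields shown in the article's example are set; any further
    spec fields would have to come from the official CR reference.
    """
    return {
        "apiVersion": "k8s.f5net.com/v1",
        "kind": "F5BigCneIrule",
        "metadata": {"name": name, "namespace": namespace},
        "spec": {"iRule": irule},
    }


if __name__ == "__main__":
    manifest = build_irule_cr("cnfs-dns-irule", "cnf-gateway", DNS_IRULE)
    # Print the manifest as JSON so it can be saved to a file.
    print(json.dumps(manifest, indent=2))
```

Saving the printed JSON to a file and running kubectl apply -f on it follows the same "Create CR" step described earlier, just with the manifest rendered from code.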
Related content

- F5 Cloud-Native Network Functions configurations guide
- BIG-IP Next Cloud-Native Network Functions (CNFs)
- CNF DNS Express
- BIG-IP Next for Kubernetes CNFs - DNS walkthrough
- BIG-IP Next for Kubernetes CNFs deployment walkthrough | DevCentral
- BIG-IP Next Edge Firewall CNF for Edge workloads | DevCentral
- Modern Applications-Demystifying Ingress solutions flavors | DevCentral