Deploy WAF on any Edge with F5 Distributed Cloud (SaaS Console, Automation)

F5 XC WAAP/WAF presents a clear advantage over classical WAAP/WAFs in that it can be deployed on a variety of environments without loss of functionality. In this first article of a series, we present an overview of the main deployment options for XC WAAP while follow-on articles will dive deeper into the details of the deployment procedures.5.3KViews9likes0CommentsEnhance your Application Security using Client-side signals – Part 2

Elevate your web and mobile application security with F5's innovative integration of web proxy strategies and client-side signals in this two-part series. Dive into the three critical categories of client-side signals—human interaction, device environment, and network signals—to enhance your application security strategy. Gain practical insights on distinguishing between humans and bots, fingerprinting devices, and analyzing network signals, ensuring robust protection for your online applications.105Views1like0CommentsSMTP Smugglers Blues

The SMTP protocol has been vulnerable to email smuggling for decades. Many of the mail servers out there have mitigations in place to handle this vulnerability but not all of them, especially the quick libraries and add-ons you can find on web sites. Protecting your server from these attacks is simple with F5 BIG-IP Advanced WAF and our SMTP Protocol Security profiles. Read to learn how to give those bad actors the “Smugglers Blues”187Views2likes2CommentsCookie Tampering Protection using F5 Distributed Cloud Platform

This article aims to cover the basics of cookies and then showed how intruders can tamper cookies to modify application behavior. Finally, we also showcased how F5 XC cookie tampering protection can be used to safeguard our sensitive cookie workloads.169Views1like0CommentsMitigating OWASP API Security Top 10 risks using F5 NGINX App Protect

This 2019 API Security article covers the summary of OWASP API Security Top 10 – 2019 categories and newly published 2023 API security article covered introductory part of newest edition of OWASP API Security Top 10 risks – 2023. We will deep-dive into some of those common risks and how we can protect our applications against these vulnerabilities using F5 NGINX App Protect. Excessive Data Exposure Problem Statement: As shown below in one of the demo application API’s, Personal Identifiable Information (PII) data, like Credit Card Numbers (CCN) and U.S. Social Security Numbers (SSN), are visible in responses that are highly sensitive. So, we must hide these details to prevent personal data exploits. Solution: To prevent this vulnerability, we will use the DataGuard feature in NGINX App Protect, which validates all response data for sensitive details and will either mask the data or block those requests, as per the configured settings. First, we will configure DataGuard to mask the PII data as shown below and will apply this configuration. Next, if we resend the same request, we can see that the CCN/SSN numbers are masked, thereby preventing data breaches. If needed, we can update configurations to block this vulnerability after which all incoming requests for this endpoint will be blocked. If you open the security log and filter with this support ID, we can see that the request is either blocked or PII data is masked, as per the DataGuard configuration applied in the above section. Injection Problem Statement: Customer login pages without secure coding practices may have flaws. Intruders could use those flaws to exploit credential validation using different types of injections, like SQLi, command injections, etc. In our demo application, we have found an exploit which allows us to bypass credential validation using SQL injection (by using username as “' OR true --” and any password), thereby getting administrative access, as below: Solution: NGINX App Protect has a database of signatures that match this type of SQLi attacks. By configuring the WAF policy in blocking mode, NGINX App Protect can identify and block this attack, as shown below. If you check in the security log with this support ID, we can see that request is blocked because of SQL injection risk, as below. Insufficient Logging & Monitoring Problem Statement: Appropriate logging and monitoring solutions play a pivotal role in identifying attacks and also in finding the root cause for any security issues. Without these solutions, applications are fully exposed to attackers and SecOps is completely blind to identifying details of users and resources being accessed. Solution: NGINX provides different options to track logging details of applications for end-to-end visibility of every request both from a security and performance perspective. Users can change configurations as per their requirements and can also configure different logging mechanisms with different levels. Check the links below for more details on logging: https://www.nginx.com/blog/logging-upstream-nginx-traffic-cdn77/ https://www.nginx.com/blog/modsecurity-logging-and-debugging/ https://www.nginx.com/blog/using-nginx-logging-for-application-performance-monitoring/ https://docs.nginx.com/nginx/admin-guide/monitoring/logging/ https://docs.nginx.com/nginx-app-protect-waf/logging-overview/logs-overview/ Unrestricted Access to Sensitive Business Flows Problem Statement: By using the power of automation tools, attackers can now break through tough levels of protection. The inefficiency of APIs to detect automated bot tools not only causes business loss, but it can also adversely impact the services for genuine users of an application. Solution: NGINX App Protect has the best-in-class bot detection technology and can detect and label automation tools in different categories, like trusted, untrusted, and unknown. Depending on the appropriate configurations applied in the policy, requests generated from these tools are either blocked or alerted. Below is an example that shows how requests generated from the Postman automation tool are getting blocked. By filtering the security log with this support-id, we can see that the request is blocked because of an untrusted bot. Lack of Resources & Rate Limiting Problem Statement: APIs do not have any restrictions on the size or number of resources that can be requested by the end user. Above mentioned scenarios sometimes lead to poor API server performance, Denial of Service (DoS), and brute force attacks. Solution: NGINX App Protect provides different ways to rate limit the requests as per user requirements. A simple rate limiting use case configuration is able to block requests after reaching the limit, which is demonstrated below. Conclusion: In short, this article covered some common API vulnerabilities and shows how NGINX App Protect can be used as a mitigation solution to prevent these OWASP API security risks. Related resources for more information or to get started: F5 NGINX App Protect OWASP API Security Top 10 2019 OWASP API Security Top 10 20232.2KViews7likes0CommentsSupport of WAF Signature Staging in F5 Distributed Cloud (XC)

Introduction: Attack signatures are the rules and patterns which identifies attacks against your web application. When the Load balancer in the F5Distributed Cloud (XC)console receives a client request, it compares the request to the attack signatures associated with your WAF policy and detects if the pattern is matched. Hence, it will trigger an "attack signature detected" violation and will either alarm or block based on the enforcement mode of your WAF policy. A generic WAF policy would include only the attack signatures needed to protect your application. If too many are included, you waste resources on keeping up with signatures that you don't need. Same way, if you don't include enough, you might let an attack compromise your application. F5 XC WAF is supporting multiple states of attack signatures like enable, disable, suppress, auto-supress and staging. This article focusses on how F5 XC WAF supports staging and detects the staged attack signatures and gives the details of attack signatures by allowing them into the application. Staging: A request that triggers a staged signature will not cause the request to be blocked,but you will see signature trigger details in the security event. When a new/updated attack signature(s) is automatically placed in staging then you won't know how that attack signature is going to affect your application until you had some time to test it first. After you test the new signature(s), then you can take them out of staging, apply respective event action to protect your application! Environment: F5 Distributed Cloud Console Security Dashboard Configuration: Here is the step-by-step process of configuring the WAF Staging Signatures and validating them with new and updated signature attacks. Login to F5 Distributed Cloud Console and navigate to “Web App & API Protection” -> App Firewall and then click on `Add App Firewall`. Name the App Firewall Policy and configure it with given values. Navigate to “Web App & API Protection” à Load Balancers à HTTP Load Balancers and click on `Add HTTP Load Balancers`. Name the Load Balancer and Configure it with given values and associate the origin pool. Origin pool ``petstore-op`` configuration. Associate the initially created APP firewall ``waf-sig-staging`` under LB WAF configuration section. ``Save and Exit`` the configuration and Verify that the Load balancer has created successfully with the name ``petstore-op``. Validation: To verify the staging attacks, you need the signature attacks listed in attack signature DB. In this demo we are using the below newly added attack signature (200104860) and updated attack signature (200103281) Id’s. Now, Let’s try to access the LB domain with the updated attack signature Id i.e 200103281 and verify that the LB dashboard has detected the staged attack signature by reflecting the details. F5 XC Dashboard Event Log: Now try to access the LB domain with new signature attack adding the cookie in the request header. F5 XC Dashboard Event Log: Now, Disable the staging in WAF policy ``waf-sig-staging``. Let’s try to access the LB domain with new signature attack. F5 XC Dashboard Event Log: Conclusion: As you see from the demo, F5 XC WAF supports staging feature which will enhance the testing scope of newly added and updated attack signature(s). Reference: F5 Distributed Cloud WAF Attack Signatures945Views5likes2CommentsSecuring Applications using mTLS Supported by F5 Distributed Cloud

Introduction Mutual Transport Layer Security (mTLS) is a process that establishes encrypted and secure TLS connection between the parties and ensures both parties use X.509 digital certificates to authenticate each other. It helps to prevent the malicious third-party attacks which will imitate the genuine applications.This authentication method helps when a server needs to ensure the authenticity and validity of either a specific user or device.As the SSL became outdated several companies like Skype, Cloudfare are now using mTLS to secure business servers. Not using TLS or other encryption tools without secure authentication leads to ‘man in the middle attacks.’ Using mTLS we can provide an identity to a server that can be cryptographically verified and makes your resources more flexible. mTLS with XFCC Header Not only supporting the mTLS process, F5 Distributed Cloud WAF is giving the feasibility to forward the Client certificate attributes (subject, issuer, root CA etc..) to origin server via x-forwarded-client-cert header which provides additional level of security when the origin server ensures to authenticate the client by receiving multiple requests from different clients. This XFCC header contains the following attributes by supporting multiple load balancer types like HTTPS with Automatic Certificate and HTTPS with Custom Certificate. Cert Chain Subject URI DNS How to Configure mTLS In this Demo we are using httpbin as an origin server which is associated through F5 XC Load Balancer. Here is the procedure to deploy the httpbin application, creating the custom certificates and step-by-step process of configuring mTLS with different LB (Load Balancer) types using F5 XC. Deploying HttpBin Application Here is the link to deploy the application using docker commands. Signing server/leaf cert with locally created Root CA Commands to generate CA Key and Cert: openssl genrsa -out root-key.pem 4096 openssl req -new -x509 -days 3650 -key root-key.pem -out root-crt.pem Commands to generate Server Certificate: openssl genrsa -out cert-key2.pem 4096 openssl req -new -sha256 -subj "/CN=test-domain1.local" -key cert-key2.pem -out cert2.csr echo "subjectAltName=DNS:test-domain1.local" >> extfile.cnf openssl x509 -req -sha256 -days 501 -in cert2.csr -CA root-crt.pem -CAkey root-key.pem -out cert2.pem -extfile extfile.cnf -CAcreateserial Note: Add the TLS Certificate to XC console, create a LB(HTTP/TCP) and attach origin pools and TLS certificates to it. In Ubuntu: Move above created CA certificate (ca-crt.pem) to /usr/local/share/ca-certificates/ca-crt.pem and modify "/etc/hosts" file by mapping the VIP(you can get this from your configured LB -> DNS info -> IP Addr) with domain, in this case the (test-domain1.local). mTLS with HTTPS Custom Certificate Log in the F5 Distributed Cloud Console and navigate to “Web APP & API Protection” module. Go to Load Balancers and Click on ‘Add HTTP Load Balancer’. Give the LB Name (test-mtls-cust-cert), Domain name (mtlscusttest.f5-hyd-demo.com), LB Type as HTTPS with Custom Certificate, Select the TLS configuration as Single Certificate and configure the certificate details. Click in ‘Add Item’ under TLS Certificates and upload the cert and key files by clicking on import from files. Click on apply and enable the mutual TLS, import the root cert info, and add the XFCC header value. Configure the origin pool by clicking on ‘Add Item’ under Origins. Select the created origin pool for httpbin. Click on ‘Apply’ and then save the LB configuration with ‘Save and Exit’. Now, we have created the Load Balancer with mTLS parameters. Let us verify the same with the origin server. mTLS with HTTPS with Automatic Certificate Log in the F5 Distributed Cloud Console and navigate to “Web APP & API Protection” module. Goto Load Balancers and Click on ‘Add HTTP Load Balancer’. Give the LB Name(mtls-auto-cert), Domain name (mtlstest.f5-hyd-demo.com), LB Type as HTTPS with Automatic Certificate, enable the mutual TLS and add the root certificate. Also, enable x-forwarded-client-cert header to add the parameters. Configure the origin pool by clicking on ‘Add Item’ under Origins. Select the created origin pool for httpbin. Click on ‘Apply’ and then save the LB configuration with ‘Save and Exit’. Now, we have created the HTTPS Auto Cert Load Balancer with mTLS parameters. Let us verify the same with the origin server. Conclusion As you can see from the demonstration, F5 Distributed Cloud WAF is providing the additional security to the origin servers by forwarding the client certificate info using mTLS XFCC header. Reference Links mTLS Insights Create root cert pair F5 Distributed Cloud WAF1.8KViews2likes0CommentsAutomation of OWASP Injection mitigation using F5 Distributed Cloud Platform

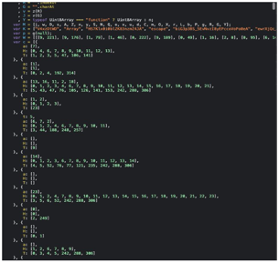

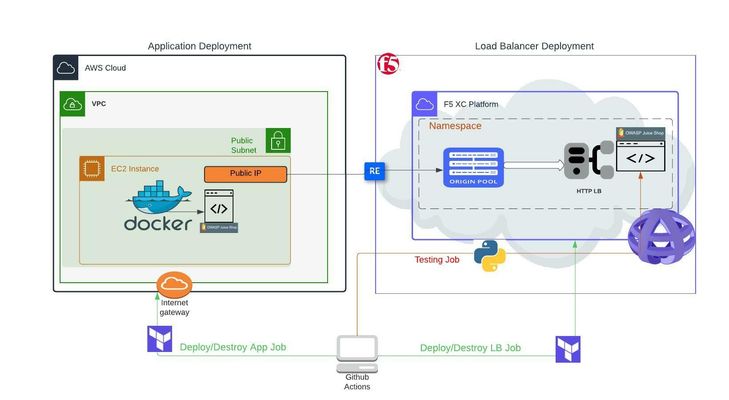

Objective: The purpose of this article is to automate F5 Distributed Cloud Platform (F5 XC) detection and mitigation of OWASP TOP 10 Injection attacks and integrating code in GitHub. This article shows how we can use Terraform, Python and Github workflow to provide the flexibility of updating existing infrastructure after every change using CI/CD event triggers. For more details about this feature please refer: Injection Attack Mitgation Article Introduction to Injection: An application is vulnerable to attack when: The data provided is not validated by the application User requested schema is not being analyzed before processing Data is used within search parameters to extract additional and sensitive records If a user tries to use Cross-site Scripting to get some unauthorized data Some of the common injections are SQL, NoSQL, OS command, Object Relational Mapping (ORM), Etc. In this automation article we are trying to bypass password validation in a demo application using SQL Injection code. Design: For the purpose of reusability, I have separated demo application and F5 XC resources deployments in 2 different flows as below. 1. First, we are deploying a demo application (Juice Shop) as a docker container in AWS EC2 machine (if customer already has their application running, they can skip this and use their application public IP directly in below flow) 2. Once the demo app is deployed, we are using application public IP to configure the origin pool, WAF and load balancer in F5 XC Once demo application and F5 XC resources are deployed successfully, python script is generating login request consisting of malicious SQL Injection. Once traffic is generated, F5 XC platform WAF will detect and block the malicious request. Finally, we are destroying the above resources using terraform Above workflow is integrated using GitHub Actions file which ensures dynamic deployment of the demo app and F5 XC load balancer which can be exposed using public domain name. Repo code URL:https://github.com/f5devcentral/owasp-injection-mitigation Conclusion: In this article we have showed how we can leverage power of CI/CD deployment to automate end to end verification of injection attacks mitigationusing GitHub Actions, Terraform and Python developed in a generic way where users can bring up the complete setup within a few clicks. For further information check the links below: F5 Distributed Cloud Platform (Link) F5 Distributed Cloud WAF Overview (Link) OWASP Injection Attacks (Link)1.9KViews2likes0CommentsUse F5 Distributed Cloud to service chain WAAP and CDN

The Content Delivery Network (CDN) market has become increasingly commoditized. Many providers have augmented their CDN capabilities with WAFs/WAAPs, DNS, load balancing, edge compute, and networking. Managing all these solutions together creates a web of operational complexity, which can be confusing. F5’s synergistic bundling of CDN with Web Application and API Protection (WAAP) benefits those looking for simplicity and ease of use. It provides a way around the complications and silos that many resource-strapped organizations face with their IT systems. This bundling also signifies how CDN has become a commodity product often not purchased independently anymore. This trend is encouraging many competitors to evolve their capabilities to include edge computing – a space where F5 has gained considerable experience in recent years. F5 is rapidly catching up to other providers’ CDNs. F5’s experience and leadership building the world’s best-of-breed Application Delivery Controller (ADC), the BIG-IP load balancer, put it in a unique position to offer the best application delivery and security services directly at the edge with many of its CDN points of presence. With robust regional edge capabilities and a global network, F5 has entered the CDN space with a complementary offering to an already compelling suite of features. This includes the ability to run microservices and Kubernetes workloads anywhere, with a complete range of services to support app infrastructure deployment, scale, and lifecycle management all within a single management console. With advancements made in the application security space at F5, WAAP capabilities are directly integrated into the Distributed Cloud Platform to protect both web apps and APIs. Features include (yet not limited to): Web Application Firewall: Signature + Behavioral WAF functionality Bot Defense: Detect client signals, determining if clients are human or automated DDoS Mitigation: Fully managed by F5 API Security: Continuous inspection and detection of shadow APIs Solution Combining the Distributed Cloud WAAP with CDN as a form of service chaining is a straightforward process. This not only gives you the best security protection for web apps and APIs, but also positions apps regionally to deliver them with low latency and minimal compute per request. In the following solution, we’ve combined Distributed Cloud WAAP and CDN to globally deliver an app protected by a WAF policy from the closest regional point of presence to the user. Follow along as I demonstrate how to configure the basic elements. Configuration Log in to the Distributed Cloud Console and navigate to the DNS Management service. Decide if you want Distributed Cloud to manage the DNS zone as a Primary DNS server or if you’d rather delegate the fully qualified domain name (FQDN) for your app to Distributed Cloud with a CNAME. While using Delegation or Managed DNS is optional, doing so makes it possible for Distributed Cloud to automatically create and manage the SSL certificates needed to securely publish your app. Next, in Distributed Cloud Console, navigate to the Web App and API Protection service, then go to App Firewall, then Add App Firewall. This is where you’ll create the security policy that we’ll later connect our HTTP LB. Let’s use the following basic WAF policy in YAML format, you can paste it directly in to the Console by changing the configuration view to JSON and then changing the format to YAML. Note: This uses the namespace “waap-cdn”, change this to match your individual tenant’s configuration. metadata: name: buytime-waf namespace: waap-cdn labels: {} annotations: {} disable: false spec: blocking: {} detection_settings: signature_selection_setting: default_attack_type_settings: {} high_medium_low_accuracy_signatures: {} enable_suppression: {} enable_threat_campaigns: {} default_violation_settings: {} bot_protection_setting: malicious_bot_action: BLOCK suspicious_bot_action: REPORT good_bot_action: REPORT allow_all_response_codes: {} default_anonymization: {} use_default_blocking_page: {} With the WAF policy saved, it’s time to configure the origin server. Navigate to Load Balancers > Origin Pools, then Add Origin Pool. The following YAML uses a FQDN DNS name reach the app server. Using an IP address for the server is possible as well. metadata: name: buytime-pool namespace: waap-cdn labels: {} annotations: {} disable: false spec: origin_servers: - public_name: dns_name: webserver.f5-cloud-demo.com labels: {} no_tls: {} port: 80 same_as_endpoint_port: {} healthcheck: [] loadbalancer_algorithm: LB_OVERRIDE endpoint_selection: LOCAL_PREFERRED With the supporting WAF and Origin Pool resources configured, it’s time to create the HTTP Load Balancer. Navigate to Load Balancers > HTTP Load Balancers, then create a new one. Use the following YAML to create the LB and use both resources created above. metadata: name: buytime-online namespace: waap-cdn labels: {} annotations: {} disable: false spec: domains: - buytime.waap.f5-cloud-demo.com https_auto_cert: http_redirect: true add_hsts: true port: 443 tls_config: default_security: {} no_mtls: {} default_header: {} enable_path_normalize: {} non_default_loadbalancer: {} header_transformation_type: default_header_transformation: {} advertise_on_public_default_vip: {} default_route_pools: - pool: tenant: your-tenant-uid namespace: waap-cdn name: buytime-pool kind: origin_pool weight: 1 priority: 1 endpoint_subsets: {} routes: [] app_firewall: tenant: your-tenant-uid namespace: waap-cdn name: buytime-waf kind: app_firewall add_location: true no_challenge: {} user_id_client_ip: {} disable_rate_limit: {} waf_exclusion_rules: [] data_guard_rules: [] blocked_clients: [] trusted_clients: [] ddos_mitigation_rules: [] service_policies_from_namespace: {} round_robin: {} disable_trust_client_ip_headers: {} disable_ddos_detection: {} disable_malicious_user_detection: {} disable_api_discovery: {} disable_bot_defense: {} disable_api_definition: {} disable_ip_reputation: {} disable_client_side_defense: {} resource_version: "517528014" With the HTTP LB successfully deployed, check that its status is ready on the status page. You can verify the LB is working by sending a basic request using the command line tool, curl. Confirm that the value of the HTTP header “Server” is “volt-adc”. da.potter@lab ~ % curl -I https://buytime.waap.f5-cloud-demo.com HTTP/2 200 date: Mon, 17 Oct 2022 23:23:55 GMT content-type: text/html; charset=UTF-8 content-length: 2200 vary: Origin access-control-allow-credentials: true accept-ranges: bytes cache-control: public, max-age=0 last-modified: Wed, 24 Feb 2021 11:06:36 GMT etag: W/"898-177d3b82260" x-envoy-upstream-service-time: 136 strict-transport-security: max-age=31536000 set-cookie: 1f945=1666049035840-557942247; Path=/; Domain=f5-cloud-demo.com; Expires=Sun, 17 Oct 2032 23:23:55 GMT set-cookie: 1f9403=viJrSNaAp766P6p6EKZK7nyhofjXCVawnskkzsrMBUZIoNQOEUqXFkyymBAGlYPNQXOUBOOYKFfs0ne+fKAT/ozN5PM4S5hmAIiHQ7JAh48P4AP47wwPqdvC22MSsSejQ0upD9oEhkQEeTG1Iro1N9sLh+w+CtFS7WiXmmJFV9FAl3E2; path=/ x-volterra-location: wes-sea server: volt-adc Now it’s time to configure the CDN Distribution and service chain it to the WAAP HTTP LB. Navigate to Content Delivery Network > Distributions, then Add Distribution. The following YAML creates a basic CDN configuration that uses the WAAP HTTP LB above. metadata: name: buytime-cdn namespace: waap-cdn labels: {} annotations: {} disable: false spec: domains: - buytime.f5-cloud-demo.com https_auto_cert: http_redirect: true add_hsts: true tls_config: tls_12_plus: {} add_location: false more_option: cache_ttl_options: cache_ttl_override: 1m origin_pool: public_name: dns_name: buytime.waap.f5-cloud-demo.com use_tls: use_host_header_as_sni: {} tls_config: default_security: {} volterra_trusted_ca: {} no_mtls: {} origin_servers: - public_name: dns_name: buytime.waap.f5-cloud-demo.com follow_origin_redirect: false resource_version: "518473853" After saving the configuration, verify that the status is “Active”. You can confirm the CDN deployment status for each individual region by going to the distribution’s action button “Show Global Status”, and scrolling down to each region to see that each region’s “site_status.status” value is “DEPLOYMENT_STATUS_DEPLOYED”. Verification With the CDN Distribution successfully deployed, it’s possible to confirm with the following basic request using curl. Take note of the two HTTP headers “Server” and “x-cache-status”. The Server value will now be “volt-cdn”, and the x-cache-status will be “MISS” for the first request. da.potter@lab ~ % curl -I https://buytime.f5-cloud-demo.com HTTP/2 200 date: Mon, 17 Oct 2022 23:24:04 GMT content-type: text/html; charset=UTF-8 content-length: 2200 vary: Origin access-control-allow-credentials: true accept-ranges: bytes cache-control: public, max-age=0 last-modified: Wed, 24 Feb 2021 11:06:36 GMT etag: W/"898-177d3b82260" x-envoy-upstream-service-time: 63 strict-transport-security: max-age=31536000 set-cookie: 1f945=1666049044863-471593352; Path=/; Domain=f5-cloud-demo.com; Expires=Sun, 17 Oct 2032 23:24:04 GMT set-cookie: 1f9403=aCNN1JINHqvWPwkVT5OH3c+OIl6+Ve9Xkjx/zfWxz5AaG24IkeYqZ+y6tQqE9CiFkNk+cnU7NP0EYtgGnxV0dLzuo3yHRi3dzVLT7PEUHpYA2YSXbHY6yTijHbj/rSafchaEEnzegqngS4dBwfe56pBZt52MMWsUU9x3P4yMzeeonxcr; path=/ x-volterra-location: dal3-dal server: volt-cdn x-cache-status: MISS strict-transport-security: max-age=31536000 To see a security violation detected by the WAF in real-time, you can simulate a simple XSS exploit with the following curl: da.potter@lab ~ % curl -Gv "https://buytime.f5-cloud-demo.com?<script>('alert:XSS')</script>" * Trying x.x.x.x:443... * Connected to buytime.f5-cloud-demo.com (x.x.x.x) port 443 (#0) * ALPN, offering h2 * ALPN, offering http/1.1 * successfully set certificate verify locations: * CAfile: /etc/ssl/cert.pem * CApath: none * (304) (OUT), TLS handshake, Client hello (1): * (304) (IN), TLS handshake, Server hello (2): * TLSv1.2 (IN), TLS handshake, Certificate (11): * TLSv1.2 (IN), TLS handshake, Server key exchange (12): * TLSv1.2 (IN), TLS handshake, Server finished (14): * TLSv1.2 (OUT), TLS handshake, Client key exchange (16): * TLSv1.2 (OUT), TLS change cipher, Change cipher spec (1): * TLSv1.2 (OUT), TLS handshake, Finished (20): * TLSv1.2 (IN), TLS change cipher, Change cipher spec (1): * TLSv1.2 (IN), TLS handshake, Finished (20): * SSL connection using TLSv1.2 / ECDHE-ECDSA-AES256-GCM-SHA384 * ALPN, server accepted to use h2 * Server certificate: * subject: CN=buytime.f5-cloud-demo.com * start date: Oct 14 23:51:02 2022 GMT * expire date: Jan 12 23:51:01 2023 GMT * subjectAltName: host "buytime.f5-cloud-demo.com" matched cert's "buytime.f5-cloud-demo.com" * issuer: C=US; O=Let's Encrypt; CN=R3 * SSL certificate verify ok. * Using HTTP2, server supports multiplexing * Connection state changed (HTTP/2 confirmed) * Copying HTTP/2 data in stream buffer to connection buffer after upgrade: len=0 * Using Stream ID: 1 (easy handle 0x14f010000) > GET /?<script>('alert:XSS')</script> HTTP/2 > Host: buytime.f5-cloud-demo.com > user-agent: curl/7.79.1 > accept: */* > * Connection state changed (MAX_CONCURRENT_STREAMS == 128)! < HTTP/2 200 < date: Sat, 22 Oct 2022 01:04:39 GMT < content-type: text/html; charset=UTF-8 < content-length: 269 < cache-control: no-cache < pragma: no-cache < set-cookie: 1f945=1666400679155-452898837; Path=/; Domain=f5-cloud-demo.com; Expires=Fri, 22 Oct 2032 01:04:39 GMT < set-cookie: 1f9403=/1b+W13c7xNShbbe6zE3KKUDNPCGbxRMVhI64uZny+HFXxpkJMsCKmDWaihBD4KWm82reTlVsS8MumTYQW6ktFQqXeFvrMDFMSKdNSAbVT+IqQfSuVfVRfrtgRkvgzbDEX9TUIhp3xJV3R1jdbUuAAaj9Dhgdsven8FlCaADENYuIlBE; path=/ < x-volterra-location: dal3-dal < server: volt-cdn < x-cache-status: MISS < strict-transport-security: max-age=31536000 < <html><head><title>Request Rejected</title></head> <body>The requested URL was rejected. Please consult with your administrator.<br/><br/> Your support ID is 85281693-eb72-4891-9099-928ffe00869c<br/><br/><a href='javascript:history.back();'>[Go Back]</a></body></html> * Connection #0 to host buytime.f5-cloud-demo.com left intact Notice that the above request intentionally by-passes the CDN cache and is sent to the HTTP LB for the WAF policy to inspect. With this request rejected, you can confirm the attack by navigating to the WAAP HTTP LB Security page under the WAAP Security section within Apps & APIs. After refreshing the page, you’ll see the security violation under the “Top Attacked” panel. Demo To see all of this in action, watch my video below. This uses all of the configuration details above to make a WAAP + CDN service chain in Distributed Cloud. Additional Guides Virtually deploy this solution in our product simulator, or hands-on with step-by-step comprehensive demo guide. The demo guide includes all the steps, including those that are needed prior to deployment, so that once deployed, the solution works end-to-end without any tweaks to local DNS. The demo guide steps can also be automated with Ansible, in case you'd either like to replicate it or simply want to jump to the end and work your way back. Conclusion This shows just how simple it can be to use the Distributed Cloud CDN to frontend your web app protected by a WAF, all natively within the F5 Distributed Cloud’s regional edge POPs. The advantage of this solution should now be clear – the Distributed Cloud CDN is cloud-agnostic, flexible, agile, and you can enforce security policies anywhere, regardless of whether your web app lives on-prem, in and across clouds, or even at the edge. For more information about Distributed Cloud WAAP and Distributed Cloud CDN, visit the following resources: Product website: https://www.f5.com/cloud/products/cdn Distributed Cloud CDN & WAAP Demo Guide: https://github.com/f5devcentral/xcwaapcdnguide Video: https://youtu.be/OUD8R6j5Q8o Simulator: https://simulator.f5.com/s/waap-cdn Demo Guide: https://github.com/f5devcentral/xcwaapcdnguide7KViews10likes0Comments