From ASM to Advanced WAF: Advancing your Application Security

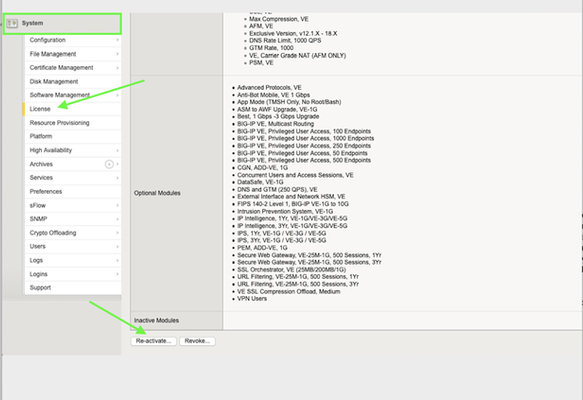

TL;DR: As of April 01, 2021, F5 has officially placed Application Security Manager (ASM) into End of Sale (EoS) status, signifying the eventual retirement of the product. (F5 Support Announcement - K72212499 ) Existing ASM,or BEST bundle customers, under a valid support contract running BIG-IP version 14.1 or greater can simply reactivate their licenses to instantly upgrade to Advanced WAF (AdvWAF) completely free of charge. Introduction Protecting your applications is becoming more challenging every day; applications are getting more complex, and attackers are getting more advanced. Over the years we have heard your feedback that managing a Web Application Firewall (WAF) can be cumbersome and you needed new solutions to protect against the latest generation of attacks. Advanced Web Application Firewall, or AdvWAF, is an enhanced version of the Application Security Manager (ASM) product that introduces new attack mitigation techniques and many quality-of-life features designed to reduce operational overhead. On April 01, 2021 – F5 started providing free upgrades for existing Application Security Manager customers to the Advanced WAF license. Keep on reading for: A brief history of ASM and AdvWAF How the AdvWAF license differs from ASM (ASM vs AdvWAF How to determine if your BIG-IPs are eligible for this free upgrade Performing the license upgrade How did we get here? For many years, ASM has been the gold standard Web Application Firewall (WAF) used by thousands of organizations to help secure their most mission-critical web applications from would-be attackers. F5 acquired the technology behind ASM in 2004 and subsequently ‘baked’ it into the BIG-IP product, immediately becoming the leading WAF product on the market. In 2018, after nearly 14 years of ASM development, F5 released the new, Advanced WAF license to address the latest threats. Since that release, both ASM and AdvWAF have coexisted, granting customers the flexibility to choose between the traditional or enhanced versions of the BIG-IP WAF product.As new features were released, they were almost always unique to AdvWAF, creating further divergence as time went on, and often sparking a few common questions (all of which we will inevitably answer in this very article) such as: Is ASM going away? What is the difference between ASM and AdvWAF? Will feature X come to ASM too? I need it! How do I upgrade from ASM to AdvWAF? Is the BEST bundle no longer really the BEST? To simplify things for our customers (and us too!), we decided to announce ASM as End of Sale (EoS), starting on April 01, 2021. This milestone, for those unfamiliar, means that the ASM product can no longer be purchased after April 01 of this year – it is in the first of 4 stages of product retirement. An important note is that no new features will be added to ASM going forward. So, what’s the difference? A common question we get often is “How do I migrate my policy from ASM to AdvWAF?” The good news is that the policies are functionally identical, running on BIG-IP, with the same web interface, and have the same learning engine and underlying behavior. In fact, our base policies can be shared across ASM, AdvWAF, and NGINX App Protect (NAP). The AdvWAF license simply unlocks additional features beyond what ASM has, that is it – all the core behaviors of the two products are identical otherwise. So, if an engineer is certified in ASM and has managed ASM security policies previously, they will be delighted to find that nothing has changed except for the addition of new features. This article does not aim to provide an exhaustive list of every feature difference between ASM and AdvWAF. Instead, below is a list of the most popular features introduced in the AdvWAF license that we hope you can take advantage of. At the end of the article, we provide more details on some of these features: Secure Guided Configurations Unlimited L7 Behavioral DoS DataSafe (Client-side encryption) OWASP Compliance Dashboard Threat Campaigns (includes Bot Signature updates) Additional ADC Functionality Micro-services protection Declarative WAF Automation I’m interested, what’s the catch? There is none! F5 is a security company first and foremost, with a mission to provide the technology necessary to secure our digital world. By providing important useability enhancements like Secure Guided Config and OWASP Compliance Dashboard for free to existing ASM customers, we aim to reduce the operational overhead associated with managing a WAF and help make applications safer than they were yesterday - it’s a win-win. If you currently own a STANDALONE, ADD-ON or BEST Bundle ASM product running version 14.1 or later with an active support contract, you are eligible to take advantage of this free upgrade. This upgrade does not apply to customers running ELA licensing or standalone ASM subscription licenses at this time. If you are running a BIG-IP Virtual Edition you must be running at least a V13 license. To perform the upgrade, all you need to do is simply REACTIVATE your license, THAT IS IT! There is no time limit to perform the license reactivation and this free upgrade offer does not expire. *Please keep in mind that re-activating your license does trigger a configuration load event which will cause a brief interruption in traffic processing; thus, it is always recommended to perform this in a maintenance window. Step 1: Step 2: Choose “Automatic” if your BIG-IP can communicate outbound to the Internet and talk to the F5 Licensing Server. Choose Manual if your BIG-IP cannot reach the F5 Licensing Server directly through the Internet. Click Next and the system will re-activate your license. After you’ve completed the license reactivation, the quickest way to know if you now have AdvWAF is by looking under the Security menu. If you see "Guided Configuration”, the license upgrade was completed successfully. You can also login to the console and look for the following feature flags in the /config/bigip.license file to confirm it was completed successfully by running: grep -e waf_gc -e mod_waf -e mod_datasafe bigip.license You should see the following flags set to enabled: Waf_gc: enabled Mod_waf: enabled Mod_datasafe: enabled *Please note that the GUI will still reference ASM in certain locations such as on the resource provisioning page; this is not an indication of any failure to upgrade to the AdvWAF license. *Under Resource Provisioning you should now see that FPS is licensed. This will need to be provisioned if you plan on utilizing the new AdvWAF DataSafe feature explained in more detail in the Appendix below. For customers with a large install base, you can perform license reactivation through the CLI. Please refer to the following article for instructions: https://support.f5.com/csp/article/K2595 Conclusion F5 Advanced WAF is an enhanced WAF license now available for free to all existing ASM customers running BIG-IP version 14.1 or greater, only requiring a simple license reactivation. The AdvWAF license will provide immediate value to your organization by delivering visibility into the OWASP Top 10 compliance of your applications, configuration wizards designed to build robust security policies quickly, enhanced automation capabilities, and more. If you are running ASM with BIG-IP version 14.1 or greater, what are you waiting for? (Please DO wait for your change window though 😊) Acknowledgments Thanks to Brad Scherer , John Marecki , Michael Everett , and Peter Scheffler for contributing to this article! Appendix: More details on select AdvWAF features Guided Configurations One of the most common requests we hear is, “can you make WAF easier?” If there was such a thing as an easy button for WAF configurations, Guided Configs are that button. Guided Configurations easily take you through complex configurations for various use-cases such as Web Apps, OWASP top 10, API Protection, DoS, and Bot Protection. L7DoS – Behavioral DoS Unlimited Behavioral DoS - (BaDoS) provides automatic protection against DoS attacks by analyzing traffic behavior using machine learning and data analysis. With ASM you were limited to applying this type of DoS profile to a maximum of 2 Virtual Servers. The AdvWAF license completely unlocks this capability, removing the 2 virtual server limitation from ASM. Working together with other BIG-IP DoS protections, Behavioral DoS examines traffic flowing between clients and application servers in data centers, and automatically establishes the baseline traffic/flow profiles for Layer 7 (HTTP) and Layers 3 and 4. DataSafe *FPS must be provisioned DataSafe is best explained as real-time L7 Data Encryption. Designed to protect websites from Trojan attacks by encrypting data at the application layer on the client side. Encryption is performed on the client-side using a public key generated by the BIG-IP system and provided uniquely per session. When the encrypted information is received by the BIG-IP system, it is decrypted using a private key that is kept on the server-side. Intended to protect, passwords, pins, PII, and PHI so that if any information is compromised via MITB or MITM it is useless to the attacker. DataSafe is included with the AdvWAF license, but the Fraud Protection Service (FPS) must be provisioned by going to System > Resource Provisioning: OWASP Compliance Dashboard Think your policy is air-tight? The OWASP Compliance Dashboard details the coverage of each security policy for the top 10 most critical web application security risks as well as the changes needed to meet OWASP compliance. Using the dashboard, you can quickly improve security risk coverage and perform security policy configuration changes. Threat Campaigns (includes Bot Signature updates) Threat campaigns allow you to do more with fewer resources. This feature is unlocked with the AdvWAF license, it, however, does require an additional paid subscription above and beyond that. This paid subscription does NOT come with the free AdvWAF license upgrade. F5’s Security Research Team (SRT) discovers attacks with honeypots – performs analysis and creates attack signatures you can use with your security policies. These signatures come with an extremely low false-positive rate, as they are strictly based on REAL attacks observed in the wild. The Threat Campaign subscription also adds bot signature updates as part of the solution. Additional ADC Functionality The AdvWAF license comes with all of the Application Delivery Controller (ADC) functionality required to both deliver and protect a web application. An ASM standalone license came with only a very limited subset of ADC functionality – a limit to the number of pool members, zero persistence profiles, and very few load balancing methods, just to name a few. This meant that you almost certainly required a Local Traffic Manager (LTM) license in addition to ASM, to successfully deliver an application. The AdvWAF license removes many of those limitations; Unlimited pool members, all HTTP/web pertinent persistence profiles, and most load balancing methods, for example.12KViews8likes8CommentsWhat is Shape Security?

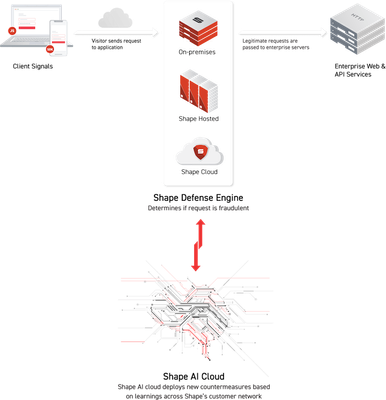

What is Shape Security? You heard the news that F5 acquired Shape Security, but what is Shape Security? Shape defends against malicious automation targeted at web and mobile applications. Why defend against malicious automation? Attackers use automation for all sorts of nefarious purposes. One of the most common and costly attacks is credential stuffing. On average, over eight million usernames and passwords are reported spilled or stolen per day. Attackers attempt to use these credentials across many websites, including those of financial institutions, retail, airlines, hospitality, and other industries. Because many people reuse usernames and passwords across many sites, these attacks are remarkably successful. And given the volume of the activity, criminals rely on automation to carry it out. (See the Shape 2018 Credential Spill Report.) Other than credential stuffing, attackers use automation for fake account creation, scraping, verifying stolen credit cards, and all manner of fraud. Losses include system downtime from heavy bot load, financial liability from account takeover, and loss of customer trust. What makes automation distinct as an attack vector? Traditional attacks on websites from XSS to SQL injection depend upon malformed inputs and vulnerabilities in apps that allow such inputs through. In contrast, malicious automation scripts send well-formed inputs, inputs that are indistinguishable from what is sent by valid users. Even if an application checks user inputs, even if the developers have followed secure programming practices, the app remains vulnerable to malicious automation. Even though the inputs may not vary from those of valid users, the attackers can achieve great damage ranging from account takeover to other forms of online fraud and abuse. What about traditional means of stopping bots? (CAPTCHAs and IP Rate Limits) We all dislike CAPTCHAs, those annoying puzzles that force us to prove we’re human. As it turns out, machine learning algorithms are now better at solving these puzzles than we humans. Other than adding friction for real customers, CAPTCHAs accomplish little. (See How Cybercriminals Bypass CAPTCHA.) Advanced attackers are also adept at bypassing IP rate limits. These criminals disguise their attacks through proxy services that make it look as if their requests are coming from thousands of valid residential IP addresses. Research by Shape shows that attackers reused IP addresses during a campaign only 2.2 times on average, well below any feasible rate limit. (See 5 Rando Stats from Watching eCrime All Day Every Day.) Many of these IP addresses are shared by real users because of NATing performed by ISPs. Therefore, blacklisting these IPs may interfere with real customers but will not stop sophisticated attackers. Why is it so difficult to stop malicious automations? With HTTP requests arriving from so many valid IP addresses containing inputs identical to valid requests, stopping malicious automation is no easy task. Attackers go to great lengths to simulate real users, from deploying real browsers to copying natural mouse movements to subtle randomization of behavior. With so much money at stake, attackers persist, retool, and continuously probe. The reality is that there is no silver bullet to stop such attacks, no one defense that will last for long. Shape’s ever learning, ever adapting system. Rather than depend upon a single countermeasure, Shape’s system is strategically designed for continuous learning and adaptation. Shape instruments mobile and web apps with code to collect signals that cover both browser environments and user behavior. A real-time rules engine processes these signals to detect bot activity and mitigate attacks. These signals feed into a data system that fuels multiple machine learning systems, the findings of which, guided by data scientists and domain experts, drive the development of new signals and new rules, ever improving the quality of data and decisions. As Shape has defended much of Fortune 500 for several years now, battling the most advanced, persistent attackers, it has gone through many learning cycles, developing an extremely rich signal and rule set and refining the learning process. Stopping bots depends on a network effect gained from defending many enterprises and analyzing massive quantities of data. How does the Shape system work? What are the components? The Shape system for continuous learning and adaptation consists of four main components: Shape Defense Engine, Client Signals, Shape AI Cloud, and Shape Protection Manager. At the core of the Shape system is a Layer 7 scriptable reverse proxy, the Shape Defense Engine. Deployed as clusters either on premises or in the cloud, the Shape Defense Engine processes traffic, applies real-time rules for bot detection, serves Shape’s JavaScript to browsers and mobile devices, and transmits telemetry to the Shape AI Cloud. Shape’s Client Signals are collected by JavaScript that utilizes remarkably sophisticated obfuscation. Based on a virtual machine implemented in JavaScript with opcodes randomized at frequent intervals, this technology makes reengineering both extremely difficult and minimizes the window for exploitation. The obfuscation hides from attackers what signals Shape collects, leaving them groping in the dark to solve a complex multivariate problem. In addition to the JavaScript for web, Shape offers mobile SDKs for integration into iOS and Android devices. The SDKs utilize JavaScript loaded in web views to ensure that the signals collected can evolve without requiring new integrations and forced app upgrades. The JavaScript collects signal data on the environment and user behavior that it attaches to HTTP requests to protected resources, such as login paths, paths for account creation, or paths that return data desired by scrapers. These requests with the signal data are routed through the Shape Defense Engine to determine whether the request is from a human or a bot. The Shape Defense Engine sends telemetry to the Shape AI Cloud, a highly secure data system, where it is analyzed by multiple machine learning algorithms for the detection of patterns of automation and of fraud more broadly. The insights gained enable Shape to generate new rules for bot detection and to offer rich security intelligence reports to its customers. The Shape Protection Manager (SPM), a web console, provides tools for deploying, configuring, and monitoring the Shape Defense Engine and viewing analytics from the Shape AI Cloud. From the SPM, you can dig into attack traffic, see when and how often attackers retool, see what paths attackers target, see which browsers and IP addresses they spoof. The SPM is the customer’s window into the Shape system and the toolset for managing it. What is next? Deploying Shape involves routing protected HTTP traffic through the Shape Defense Engine, which is often done through the configuration of BIG-IP and Nginx. In the next article in this series introducing Shape Security, we’ll learn best practices for integrating Shape. Related Information F5 Bot Defense and Management F5 Fraud and Risk Mitigation (Distributed Cloud) OWASP Top-21 Automated Threats (Distributed Cloud) F5 XC Bots & Fraud OWASP Automated Threats15KViews6likes2CommentsAdvanced WAF v16.0 - Declarative API

Since v15.1 (in draft), F5® BIG-IP® Advanced WAF™ canimport Declarative WAF policy in JSON format. The F5® BIG-IP® Advanced Web Application Firewall (Advanced WAF) security policies can be deployed using the declarative JSON format, facilitating easy integration into a CI/CD pipeline. The declarative policies are extracted from a source control system, for example Git, and imported into the BIG-IP. Using the provided declarative policy templates, you can modify the necessary parameters, save the JSON file, and import the updated security policy into your BIG-IP devices. The declarative policy copies the content of the template and adds the adjustments and modifications on to it. The templates therefore allow you to concentrate only on the specific settings that need to be adapted for the specific application that the policy protects. ThisDeclarative WAF JSON policyis similar toNGINX App Protect policy. You can find more information on theDeclarative Policyhere : NAP :https://docs.nginx.com/nginx-app-protect/policy/ Adv. WAF :https://techdocs.f5.com/en-us/bigip-15-1-0/big-ip-declarative-security-policy.html Audience This guide is written for IT professionals who need to automate their WAF policy and are familiar with Advanced WAF configuration. These IT professionals can fill a variety of roles: SecOps deploying and maintaining WAF policy in Advanced WAF DevOps deploying applications in modern environment and willing to integrate Advanced WAF in their CI/CD pipeline F5 partners who sell technology or create implementation documentation This article covershow to PUSH/PULL a declarative WAF policy in Advanced WAF: With Postman With AS3 Table of contents Upload Policy in BIG-IP Check the import Apply the policy OpenAPI Spec File import AS3 declaration CI/CD integration Find the Policy-ID Update an existing policy Video demonstration First of all, you need aJSON WAF policy, as below : { "policy": { "name": "policy-api-arcadia", "description": "Arcadia API", "template": { "name": "POLICY_TEMPLATE_API_SECURITY" }, "enforcementMode": "blocking", "server-technologies": [ { "serverTechnologyName": "MySQL" }, { "serverTechnologyName": "Unix/Linux" }, { "serverTechnologyName": "MongoDB" } ], "signature-settings": { "signatureStaging": false }, "policy-builder": { "learnOnlyFromNonBotTraffic": false } } } 1. Upload Policy in BIG-IP There are 2 options to upload a JSON file into the BIG-IP: 1.1 Either youPUSHthe file into the BIG-IP and you IMPORT IT OR 1.2 the BIG-IPPULLthe file froma repository (and the IMPORT is included)<- BEST option 1.1PUSH JSON file into the BIG-IP The call is below. As you can notice, it requires a 'Content-Range' header. And the value is 0-(filesize-1)/filesize. In the example below, the file size is 662 bytes. This is not easy to integrate in a CICD pipeline, so we created the PULL method instead of the PUSH (in v16.0) curl --location --request POST 'https://10.1.1.12/mgmt/tm/asm/file-transfer/uploads/policy-api.json' \ --header 'Content-Range: 0-661/662' \ --header 'Authorization: Basic YWRtaW46YWRtaW4=' \ --header 'Content-Type: application/json' \ --data-binary '@/C:/Users/user/Desktop/policy-api.json' At this stage,the policy is still a filein the BIG-IP file system. We need toimportit into Adv. WAF. To do so, the next call is required. This call import the file "policy-api.json" uploaded previously. AnCREATEthe policy /Common/policy-api-arcadia curl --location --request POST 'https://10.1.1.12/mgmt/tm/asm/tasks/import-policy/' \ --header 'Content-Type: application/javascript' \ --header 'Authorization: Basic YWRtaW46YWRtaW4=' \ --data-raw '{ "filename":"policy-api.json", "policy": { "fullPath":"/Common/policy-api-arcadia" } }' 1.2PULL JSON file from a repository Here, theJSON file is hosted somewhere(in Gitlab or Github ...). And theBIG-IP will pull it. The call is below. As you can notice, the call refers to the remote repo and the body is a JSON payload. Just change the link value with your JSON policy URL. With one call, the policy isPULLEDandIMPORTED. curl --location --request POST 'https://10.1.1.12/mgmt/tm/asm/tasks/import-policy/' \ --header 'Content-Type: application/json' \ --header 'Authorization: Basic YWRtaW46YWRtaW4=' \ --data-raw '{ "fileReference": { "link": "http://10.1.20.4/root/as3-waf/-/raw/master/policy-api.json" } }' Asecond versionof this call exists, and refer to the fullPath of the policy.This will allow you to update the policy, from a second version of the JSON file, easily.One call for the creation and the update. As you can notice below, we add the"policy":"fullPath" directive. The value of the "fullPath" is thepartitionand thename of the policyset in the JSON policy file. This method is VERY USEFUL for CI/CD integrations. curl --location --request POST 'https://10.1.1.12/mgmt/tm/asm/tasks/import-policy/' \ --header 'Content-Type: application/json' \ --header 'Authorization: Basic YWRtaW46YWRtaW4=' \ --data-raw '{ "fileReference": { "link": "http://10.1.20.4/root/as3-waf/-/raw/master/policy-api.json" }, "policy": { "fullPath":"/Common/policy-api-arcadia" } }' 2. Check the IMPORT Check if the IMPORT worked. To do so, run the next call. curl --location --request GET 'https://10.1.1.12/mgmt/tm/asm/tasks/import-policy/' \ --header 'Authorization: Basic YWRtaW46YWRtaW4=' \ You should see a 200 OK, with the content below (truncated in this example). Please notice the"status":"COMPLETED". { "kind": "tm:asm:tasks:import-policy:import-policy-taskcollectionstate", "selfLink": "https://localhost/mgmt/tm/asm/tasks/import-policy?ver=16.0.0", "totalItems": 11, "items": [ { "isBase64": false, "executionStartTime": "2020-07-21T15:50:22Z", "status": "COMPLETED", "lastUpdateMicros": 1.595346627e+15, "getPolicyAttributesOnly": false, ... From now, your policy is imported and created in the BIG-IP. You can assign it to a VS as usual (Imperative Call or AS3 Call).But in the next session, I will show you how to create a Service with AS3 including the WAF policy. 3. APPLY the policy As you may know, a WAF policy needs to be applied after each change. This is the call. curl --location --request POST 'https://10.1.1.12/mgmt/tm/asm/tasks/apply-policy/' \ --header 'Content-Type: application/json' \ --header 'Authorization: Basic YWRtaW46YWRtaW4=' \ --data-raw '{"policy":{"fullPath":"/Common/policy-api-arcadia"}}' 4. OpenAPI spec file IMPORT As you know,Adv. WAF supports OpenAPI spec (2.0 and 3.0). Now, with the declarative WAF, we can import the OAS file as well. The BEST solution, is toPULL the OAS filefrom a repo. And in most of the customer' projects, it will be the case. In the example below, the OAS file is hosted in SwaggerHub(Github for Swagger files). But the file could reside in a private Gitlab repo for instance. The URL of the projectis :https://app.swaggerhub.com/apis/F5EMEASSA/Arcadia-OAS3/1.0.0-oas3 The URL of the OAS file is :https://api.swaggerhub.com/apis/F5EMEASSA/Arcadia-OAS3/1.0.0-oas3 This swagger file (OpenAPI 3.0 Spec file) includes all the application URL and parameters. What's more, it includes the documentation (for NGINX APIm Dev Portal). Now, it ispretty easy to create a WAF JSON Policy with API Security template, referring to the OAS file. Below, you can notice thenew section "open-api-files"with the link reference to SwaggerHub. And thenew templatePOLICY_TEMPLATE_API_SECURITY. Now, when I upload / import and apply the policy, Adv. WAF will download the OAS file from SwaggerHub and create the policy based on API_Security template. { "policy": { "name": "policy-api-arcadia", "description": "Arcadia API", "template": { "name": "POLICY_TEMPLATE_API_SECURITY" }, "enforcementMode": "blocking", "server-technologies": [ { "serverTechnologyName": "MySQL" }, { "serverTechnologyName": "Unix/Linux" }, { "serverTechnologyName": "MongoDB" } ], "signature-settings": { "signatureStaging": false }, "policy-builder": { "learnOnlyFromNonBotTraffic": false }, "open-api-files": [ { "link": "https://api.swaggerhub.com/apis/F5EMEASSA/Arcadia-OAS3/1.0.0-oas3" } ] } } 5. AS3 declaration Now, it is time to learn how we cando all of these steps in one call with AS3(3.18 minimum). The documentation is here :https://clouddocs.f5.com/products/extensions/f5-appsvcs-extension/latest/declarations/application-security.html?highlight=waf_policy#virtual-service-referencing-an-external-security-policy With thisAS3 declaration, we: Import the WAF policy from a external repo Import the Swagger file (if the WAF policy refers to an OAS file) from an external repo Create the service { "class": "AS3", "action": "deploy", "persist": true, "declaration": { "class": "ADC", "schemaVersion": "3.2.0", "id": "Prod_API_AS3", "API-Prod": { "class": "Tenant", "defaultRouteDomain": 0, "API": { "class": "Application", "template": "generic", "VS_API": { "class": "Service_HTTPS", "remark": "Accepts HTTPS/TLS connections on port 443", "virtualAddresses": ["10.1.10.27"], "redirect80": false, "pool": "pool_NGINX_API_AS3", "policyWAF": { "use": "Arcadia_WAF_API_policy" }, "securityLogProfiles": [{ "bigip": "/Common/Log all requests" }], "profileTCP": { "egress": "wan", "ingress": { "use": "TCP_Profile" } }, "profileHTTP": { "use": "custom_http_profile" }, "serverTLS": { "bigip": "/Common/arcadia_client_ssl" } }, "Arcadia_WAF_API_policy": { "class": "WAF_Policy", "url": "http://10.1.20.4/root/as3-waf-api/-/raw/master/policy-api.json", "ignoreChanges": true }, "pool_NGINX_API_AS3": { "class": "Pool", "monitors": ["http"], "members": [{ "servicePort": 8080, "serverAddresses": ["10.1.20.9"] }] }, "custom_http_profile": { "class": "HTTP_Profile", "xForwardedFor": true }, "TCP_Profile": { "class": "TCP_Profile", "idleTimeout": 60 } } } } } 6. CI/CID integration As you can notice, it is very easy to create a service with a WAF policy pulled from an external repo. So, it is easy to integrate these calls (or the AS3 call) into a CI/CD pipeline. Below, an Ansible playbook example. This playbook run the AS3 call above. That's it :) --- - hosts: bigip connection: local gather_facts: false vars: my_admin: "admin" my_password: "admin" bigip: "10.1.1.12" tasks: - name: Deploy AS3 WebApp uri: url: "https://{{ bigip }}/mgmt/shared/appsvcs/declare" method: POST headers: "Content-Type": "application/json" "Authorization": "Basic YWRtaW46YWRtaW4=" body: "{{ lookup('file','as3.json') }}" body_format: json validate_certs: no status_code: 200 7. FIND the Policy-ID When the policy is created, a Policy-ID is assigned. By default, this ID doesn't appearanywhere. Neither in the GUI, nor in the response after the creation. You have to calculate it or ask for it. This ID is required for several actions in a CI/CD pipeline. 7.1 Calculate the Policy-ID Wecreated this python script to calculate the Policy-ID. It is an hash from the Policy name (including the partition). For the previous created policy named"/Common/policy-api-arcadia",the policy ID is"Ar5wrwmFRroUYsMA6DuxlQ" Paste this python codein a newwaf-policy-id.pyfile, and run the commandpython waf-policy-id.py "/Common/policy-api-arcadia" Outcome will beThe Policy-ID for /Common/policy-api-arcadia is: Ar5wrwmFRroUYsMA6DuxlQ #!/usr/bin/python from hashlib import md5 import base64 import sys pname = sys.argv[1] print 'The Policy-ID for', sys.argv[1], 'is:', base64.b64encode(md5(pname.encode()).digest()).replace("=", "") 7.2 Retrieve the Policy-ID and fullPath with a REST API call Make this call below, and you will see in the response, all the policy creations. Find yours and collect thePolicyReference directive.The Policy-ID is in the link value "link": "https://localhost/mgmt/tm/asm/policies/Ar5wrwmFRroUYsMA6DuxlQ?ver=16.0.0" You can see as well, at the end of the definition, the "fileReference"referring to the JSON file pulled by the BIG-IP. And please notice the"fullPath", required if you want to update your policy curl --location --request GET 'https://10.1.1.12/mgmt/tm/asm/tasks/import-policy/' \ --header 'Content-Range: 0-601/601' \ --header 'Authorization: Basic YWRtaW46YWRtaW4=' \ { "isBase64": false, "executionStartTime": "2020-07-22T11:23:42Z", "status": "COMPLETED", "lastUpdateMicros": 1.595417027e+15, "getPolicyAttributesOnly": false, "kind": "tm:asm:tasks:import-policy:import-policy-taskstate", "selfLink": "https://localhost/mgmt/tm/asm/tasks/import-policy/B45J0ySjSJ9y9fsPZ2JNvA?ver=16.0.0", "filename": "", "policyReference": { "link": "https://localhost/mgmt/tm/asm/policies/Ar5wrwmFRroUYsMA6DuxlQ?ver=16.0.0", "fullPath": "/Common/policy-api-arcadia" }, "endTime": "2020-07-22T11:23:47Z", "startTime": "2020-07-22T11:23:42Z", "id": "B45J0ySjSJ9y9fsPZ2JNvA", "retainInheritanceSettings": false, "result": { "policyReference": { "link": "https://localhost/mgmt/tm/asm/policies/Ar5wrwmFRroUYsMA6DuxlQ?ver=16.0.0", "fullPath": "/Common/policy-api-arcadia" }, "message": "The operation was completed successfully. The security policy name is '/Common/policy-api-arcadia'. " }, "fileReference": { "link": "http://10.1.20.4/root/as3-waf/-/raw/master/policy-api.json" } }, 8 UPDATE an existing policy It is pretty easy to update the WAF policy from a new JSON file version. To do so, collect from the previous call7.2 Retrieve the Policy-ID and fullPath with a REST API callthe"Policy" and"fullPath"directive. This is the path of the Policy in the BIG-IP. Then run the call below, same as1.2 PULL JSON file from a repository,but add thePolicy and fullPath directives Don't forget to APPLY this new version of the policy3. APPLY the policy curl --location --request POST 'https://10.1.1.12/mgmt/tm/asm/tasks/import-policy/' \ --header 'Content-Type: application/json' \ --header 'Authorization: Basic YWRtaW46YWRtaW4=' \ --data-raw '{ "fileReference": { "link": "http://10.1.20.4/root/as3-waf/-/raw/master/policy-api.json" }, "policy": { "fullPath":"/Common/policy-api-arcadia" } }' TIP : this call, above, can be used in place of the FIRST call when we created the policy "1.2PULL JSON file from a repository". But be careful, the fullPath is the name set in the JSON policy file. The 2 values need to match: "name": "policy-api-arcadia" in the JSON Policy file pulled by the BIG-IP "policy":"fullPath" in the POST call 9 Video demonstration In order to help you to understand how it looks with the BIG-IP, I created this video covering 4 topics explained in this article : The JSON WAF policy Pull the policy from a remote repository Update the WAF policy with a new version of the declarative JSON file Deploy a full service with AS3 and Declarative WAF policy At the end of this video, you will be able to adapt the REST Declarative API calls to your infrastructure, in order to deploy protected services with your CI/CD pipelines. Direct link to the video on DevCentral YouTube channel : https://youtu.be/EDvVwlwEFRw3.5KViews5likes1CommentWhat is NGINX?

Introduction NGINX started out as a high performance web-server and quickly expanded adding more functionality in an integrated manner. Put simply, NGINX is an open source web server, reverse proxy server, cache server, load balancer, media server and much more. The enterprise version of NGINX has exclusive production ready features on top of what's available, including status monitoring, active health checks, configuration API, and live dashboard for metrics. Think of this article as a quick introduction to each product but more importantly, as our placeholder for NGINX articles on DevCentral. If you're interested in NGINX, you can use this article as the place to find DevCentral articles broken down by functionality in the near future. By the way, this article here has also links to a bunch of interesting articles published on AskF5 and some introductory NGINX videos. NGINX as a Webserver The most basic use case of NGINX. It can handle hundreds of thousandsof requests simultaneously by using an event-drive architecture (as opposed to process-driven one) to handle multiple requests within one thread. NGINX as a Reverse Proxy and Load Balancer Both NGINX and NGINX+ provide load balancing functionality and work as reverse-proxy by sitting in front of back-end servers: Similar to F5, traffic comes in, NGINX load balances the requests to different back-end servers. In NGINX Plus version, it can even do session persistence and health check monitoring. Published Content: Server monitoring - some differences between BIG-IP and NGINX NGINX as Caching Server NGINX content caching improves efficiency, availability and capacity of back end servers. When caching is on, NGINX checks if content exists in its cache and if that's the case, content is served to client without the need to contact back end server. Otherwise, NGINX reaches out to backend server to retrieve content. A content cache sits between a client and back-end server and saves copies of pre-defined cacheable content. Caching improves performance as strategically, content cache is supposed to be closer to client. It also has the benefit of offloads requests from back-end servers. NGINX Controller NGINX controller is a piece of software that centralises and simplifies configuration, deployment and monitoring of NGINX Plus instances such as load balancers, API gateway and even web server. By the way, NGINX Controller 3.0 has just been released. Published Content: Introducing NGINX Controller 3.0 Setting up NGINX Controller Use of NGINX Controller to Authenticate API Calls Publishing an API using NGINX Controller NGINX as Kubernetes Ingress Controller NGINX Kubernetes Ingress Controller is a software that manages all Kubernetes ingress resources within a Kubernetes cluster. It monitors and retrieves all ingress resources running in a cluster and configure the corresponding L7 proxy accordingly. There are 2 versions of NGINX Ingress Controllers. One is maintained by the community and the other by NGINX itself. Published Content: Lightboard Lesson: NGINX Kubernetes Ingress Controller Overview NGINX as API Gateway An API Gateway is a way of abstracting application services interaction from client by providing a single entry-point into the system. Clients may issue a simple request to the application, for example, by requesting to load some information from a specific product. In the background, API gateway may contact several different services to bundle up the information requested and fulfil client's request. NGINX API management module for NGINX Controller can do request routing, composition, applying rate limiting to prevent overloading, offloading TLS traffic to improve performance, authentication, and real-time monitoring and alerting. NGINX as Application Server (Unit) NGINX Unit provides all sorts of functionalities to integrate applications and even to migrate and split services out of older monolithic applications. A key feature of Unit is that we don't need to reload processes once they're reconfigured. Unit only changes part of the memory associated to the changes we made. In later versions, NGINX Unit can also serve as intermediate node within a web framework, accepting all kinds of traffic and maintaining dynamic configuration and acting as a reverse proxy for back-end servers. NGINX as WAF NGINX uses ModSecurity module to protect applications from L7 attacks. NGINX as Sidecar Proxy Container We can also use NGINX as side car proxy container in Service Mesh architecture deployment (e.g. using Istio with NGINX as sidecar proxy container). A service mesh is an infrastructure layer that is supposed to be configurable and fast for the purposes of network-based interprocess communication using APIs. NGINX can be configured as a Sidecar proxy to handle inter-service communication, monitoring and security-related features. This is a way of ensuring developers only handle development, support and maintenance while platform engineers (ops team) can handle the service mesh maintenance.1.6KViews3likes2CommentsWhat is iCall?

tl;dr - iCall is BIG-IP’s event-based granular automation system that enables comprehensive control over configuration and other system settings and objects. The main programmability points of entrance for BIG-IP are the data plane, the control plane, and the management plane. My bare bones description of the three: Data Plane - Client/server traffic on the wire and flowing through devices Control Plane - Tactical control of local system resources Management Plane - Strategic control of distributed system resources You might think iControl (our SOAP and REST API interface) fits the description of both the control and management planes, and whereas you’d be technically correct, iControl is better utilized as an external service in management or orchestration tools. The beauty of iCall is that it’s not an API at all—it’s lightweight, it’s built-in via tmsh, and it integrates seamlessly with the data plane where necessary (via iStats.) It is what we like to call control plane scripting. Do you remember relations and set theory from your early pre-algebra days? I thought so! Let me break it down in a helpful way: P = {(data plane, iRules), (control plane, iCall), (management plane, iControl)} iCall allows you to react dynamically to an event at a system level in real time. It can be as simple as generating a qkview in the event of a failover or executing a tcpdump on a server with too many failed logins. One use case I’ve considered from an operations perspective is in the event of a core dump to have iCall generate a qkview, take checksums of the qkview and the dump file, upload the qkview and generate a support case via the iHealth API, upload the core dumps to support via ftp with the case ID generated from iHealth, then notify the ops team with all the appropriate details. If I had a solution like that back in my customer days, it would have saved me 45 minutes easy each time this happened! iCall Components Three are three components to iCall: events, handlers, and scripts. Events An event is really what drives the primary reason to use iCall over iControl. A local system event (whether it’s a failover, excessive interface or application errors, too many failed logins) would ordinarily just be logged or from a system perspective, ignored altogether. But with iCall, events can be configured to force an action. At a high level, an event is "the message," some named object that has context (key value pairs), scope (pool, virtual, etc), origin (daemon, iRules), and a timestamp. Events occur when specific, configurable, pre-defined conditions are met. Example (placed in /config/user_alert.conf) alert local-http-10-2-80-1-80-DOWN "Pool /Common/my_pool member /Common/10.2.80.1:80 monitor status down" { exec command="tmsh generate sys icall event tcpdump context { { name ip value 10.2.80.1 } { name port value 80 } { name vlan value internal } { name count value 20 } }" } Handlers Within the iCall system, there are three types of handlers: triggered, periodic, and perpetual. Triggered A triggered handler is used to listen for and react to an event. Example (goes with the event example from above:) sys icall handler triggered tcpdump { script tcpdump subscriptions { tcpdump { event-name tcpdump } } } Periodic A periodic handler is used to react to an interval timer. Example: sys icall handler periodic poolcheck { first-occurrence 2017-07-14:11:00:00 interval 60 script poolcheck } Perpetual A perpetual handler is used under the control of a deamon. Example: handler perpetual core_restart_watch sys icall handler perpetual core_restart_watch { script core_restart_watch } Scripts And finally, we have the script! This is simply a tmsh script moved under the /sys icall area of the configuration that will “do stuff" in response to the handlers. Example (continuing the tcpdump event and triggered handler from above:) modify script tcpdump { app-service none definition { set date [clock format [clock seconds] -format "%Y%m%d%H%M%S"] foreach var { ip port count vlan } { set $var $EVENT::context($var) } exec tcpdump -ni $vlan -s0 -w /var/tmp/${ip}_${port}-${date}.pcap -c $count host $ip and port $port } description none events none } Resources iCall Codeshare Lightboard Lessons on iCall Threshold violation article highlighting periodic handler7.4KViews2likes10CommentsLightboard Lessons: What is a TLS Cipher Suite?

When a web client (Internet browser) connects to a secure website, the data is encrypted. But, how does all that happen? And, what type of encryption is used? And, how does the Internet browser know what type of encryption the web server wants to use? This is all determined by what is known as a TLS Cipher Suite. In this video, John outlines the components of a TLS Cipher Suite and explains how it all works. Enjoy! Related Resources: TLS ciphers supported on BIG-IP platforms How Certificates Use Digital Signatures - Joshua Davies439Views2likes2CommentsWhat is Kubernetes?

Kubernetes is a container-orchestration platform. Its goal is to abstract away the complexity to run containerised applications in terms of network, storage, scaling and more. It also provides a declarative REST API (which is extensible) in order to automate the process of application hosting and exposure. If that sounds confusing, think of it as the thing that abstracts your infrastructure. We no longer have to worry about servers but onlyhow to deploy our application to Kubernetes. This is how a Kubernetes cluster may look like: It is comprised of a cluster of physical servers or virtual machines known as nodes in Kubernetes world. We can add or remove nodes at will and Kubernetes can scale down or up to a staggering amount of up to 5,000 nodes! Master nodes vs Worker Nodes There are 2 kinds of nodes you should initially know about: master and worker nodes¹. ¹ OpenShift (an enterprise fork of Kubernetes) adds the notion of infrastructure node. Infrastructure nodes are meant to host shared services (e.g. router nodes, monitoring, etc). Master nodes manage the Kubernetes cluster using 4 main components: Schedulerschedules pods to worker nodes. Controller managermakes sure cluster's actual state = desired state. ETCDis where Kubernetes store its objects and metadata. API Servervalidates objects before they're stored inETCDand of course is the central point of contact for object creation, retrieval and to watch the state of objects and cluster in general. A popular tool to "talk" to API Server iskubectl. If you install Kubernetes, you would definitely usekubectl². ² Openshift has a similar tool called "oc" Worker nodescommunicate withMaster node's API Serverin the following manner: Kubeletruns on each worker node and watches API SERVER to continuously monitor for Pods that should be created, deleted or changed. When we first add a Node to Kubernetes cluster,Kubeletis the daemon that registers the Node resource to API Server. Kube-proxymakes sure client traffic is redirected to the correct pod networking-wise in an efficient manner. Redirection is accomplished by using either iptables rules orIPVSvirtual servers. Container runtimeis usually Docker. Pods and Containers: Where does a Kubernetes application reside? Not every application is compatible with Kubernetes environment. Developers have to create their application in a specific way with small replicable components (also known as micro-services) that are independent from other components. Such components are hosted inside of a Pod. Pods run in Worker nodes: Within pods we can find one or more containers and that's where our application (or small chunk of our application) resides. In Appendix section, I will explain why using pods instead of containers directly. Understanding Pod's scalability component Pods are supposed to be replicable so application is designed in such a way to enable horizontal (auto) scalability. That's one of the powers of Kubernetes! We have a cluster of nodes where chunks of our application (pods) can easily increase or decrease in numbers. This is also the reason why our pods should be coded in a way that allows them to be replicable. Imagine our application has a component calledshopping-trolleyand another one calledcheck-out: Ourshopping-trolleypods may eventually become too overloaded and we might need more replicas to cope with the additional traffic/load. Increasing/reducing the number of replicas is as easy as writing down the number of replicas or letting cloud providers auto-scale it for you. Cloud providers also allow us to increase replicas based on CPU cycles, memory, etc. Before the rise of container orchestrators like Kubernetes, we would have to scale out the whole application stack, unnecessarily overloading servers. Kubernetes also allows us to scale out only parts of the application that needs it, effectively reducing unnecessary overload on servers and costs. The other advantage is that we can upgrade part of our application with zero downtime without the overhead to re-deploy the whole application at once. Services: how traffic reaches Application within a Pod Scheduler spreads out pods throughout Kubernetes cluster. However, it is usually a good idea to group pod replicas behind a single entry-point for reachability purposes as a pod's IP may change. This is where a Kubernetes Servicescomes in: Services act as the single point of access for a groups of pods with fixedDNS name and port. The way Services work out which pod should belong to it is by the use of labels. There is a label selector on a service and pods with same label are grouped into the Service. Understanding the 3 ways Services can be exposed ClusterIP Services can be exposed internally when one group of pods want to communicate with another one. This is the default and is calledClusterIPtype. A private IP address reachable only within Kubernetes cluster is used as single point of access for the group of pods. NodePort Services can be exposed externally by using Node's public IP address and port as cluster's entry point for client's external traffic. This is calledNodePorttype. If we useNodePort, external clients would have to directly reach one of the nodes soNodePortmight not be suitable for most production environments. If we need to load balance traffic among nodes, the next type is the solution. LoadBalancer This is another layer on top ofNodePortthat load balances traffic in a round robin fashion to all nodes. However, theLoadBalancertype can only be tied to a single Service, i.e. if we have multiple Services,we would need oneLoadBalancerper Service which could become quite costly. If we want to use a single Public IP address to direct external traffic to the right service based on URL, the next type is the solution. Ingress Resource TheIngresstype reads the HTTP Host header and forwards connection to a Service based on the URL/PATH. An Ingress can point to multiple Services based on URL using a single Public IP address as entry point. This overcomes the limitation of oneLoadBalancerper Service onLoadBalancertype. Final Remarks Kubernetes is currently a well-established DevOps tool but it is a very extensive topic and is constantly evolving. For release update, please watch Kubernetes official blog. There are many Kubernetes objects that were not covered here but should be covered in a future article. Appendix: Why Pods? Why not using containers directly? Design The underlying Container technology is independent from Kubernetes. Pods act as a layer of abstraction on top of it. With that in mind, Kubernetes doesn't really have to adapt to different container technology (such as Docker, Rocket, etc) and avoids runtime lock-in, i.e. each container runtime has its own strength. Application Requirements Within pods, containers can potentially share resources more easily. For example, one container might run to perform a certain task and the other one to take care of authentication. Another example would be one container writing to a shared storage volume and another one reading from it to perform additional processing. Containers in the same pod share the same network and IPC namespaces.1.5KViews2likes1CommentLightboard Lessons: What is "The Cloud"?

"The Cloud" is an often misunderstood term, but it's an important part of web application delivery today. Cloud providers can deliver significant advantages (cost, security, performance, etc) over traditional hosted environments. This video outlines the basics of the cloud and discusses why it might be beneficial to move your applications to the cloud. Look for more cloud-related videos in the future as well...enjoy! Related Resources: BIG-IP in the public cloud DevCentral Cloud Resources227Views2likes1CommentWhat Is BIG-IP?

tl;dr - BIG-IP is a collection of hardware platforms and software solutions providing services focused on security, reliability, and performance. F5's BIG-IP is a family of products covering software and hardware designed around application availability, access control, and security solutions. That's right, the BIG-IP name is interchangeable between F5's software and hardware application delivery controller and security products. This is different from BIG-IQ, a suite of management and orchestration tools, and F5 Silverline, F5's SaaS platform. When people refer to BIG-IP this can mean a single software module in BIG-IP's software family or it could mean a hardware chassis sitting in your datacenter. This can sometimes cause a lot of confusion when people say they have question about "BIG-IP" but we'll break it down here to reduce the confusion. BIG-IP Software BIG-IP software products are licensed modules that run on top of F5's Traffic Management Operation System® (TMOS). This custom operating system is an event driven operating system designed specifically to inspect network and application traffic and make real-time decisions based on the configurations you provide. The BIG-IP software can run on hardware or can run in virtualized environments. Virtualized systems provide BIG-IP software functionality where hardware implementations are unavailable, including public clouds and various managed infrastructures where rack space is a critical commodity. BIG-IP Primary Software Modules BIG-IP Local Traffic Manager (LTM) - Central to F5's full traffic proxy functionality, LTM provides the platform for creating virtual servers, performance, service, protocol, authentication, and security profiles to define and shape your application traffic. Most other modules in the BIG-IP family use LTM as a foundation for enhanced services. BIG-IP DNS - Formerly Global Traffic Manager, BIG-IP DNS provides similar security and load balancing features that LTM offers but at a global/multi-site scale. BIG-IP DNS offers services to distribute and secure DNS traffic advertising your application namespaces. BIG-IP Access Policy Manager (APM) - Provides federation, SSO, application access policies, and secure web tunneling. Allow granular access to your various applications, virtualized desktop environments, or just go full VPN tunnel. Secure Web Gateway Services (SWG) - Paired with APM, SWG enables access policy control for internet usage. You can allow, block, verify and log traffic with APM's access policies allowing flexibility around your acceptable internet and public web application use. You know.... contractors and interns shouldn't use Facebook but you're not going to be responsible why the CFO can't access their cat pics. BIG-IP Application Security Manager (ASM) - This is F5's web application firewall (WAF) solution. Traditional firewalls and layer 3 protection don't understand the complexities of many web applications. ASM allows you to tailor acceptable and expected application behavior on a per application basis . Zero day, DoS, and click fraud all rely on traditional security device's inability to protect unique application needs; ASM fills the gap between traditional firewall and tailored granular application protection. BIG-IP Advanced Firewall Manager (AFM) - AFM is designed to reduce the hardware and extra hops required when ADC's are paired with traditional firewalls. Operating at L3/L4, AFM helps protect traffic destined for your data center. Paired with ASM, you can implement protection services at L3 - L7 for a full ADC and Security solution in one box or virtual environment. BIG-IP Hardware BIG-IP hardware offers several types of purpose-built custom solutions, all designed in-house by our fantastic engineers; no white boxes here. BIG-IP hardware is offered via series releases, each offering improvements for performance and features determined by customer requirements. These may include increased port capacity, traffic throughput, CPU performance, FPGA feature functionality for hardware-based scalability, and virtualization capabilities. There are two primary variations of BIG-IP hardware, single chassis design, or VIPRION modular designs. Each offer unique advantages for internal and collocated infrastructures. Updates in processor architecture, FPGA, and interface performance gains are common so we recommend referring to F5's hardware pagefor more information.61KViews2likes3CommentsLightboard Lesson: What is vCMP?

F5's Virtual Clustered Multiprocessing, or vCMP for short, is virtualization technology that couples a custom hypervisor running on the BIG-IP host with a hardware-specific scalable number of guest virtual machines running TMOS. This article wraps a short series of Lightboard Lessons Jason filmed to introduce the high level vCMP concepts. What is vCMP? In this first video, Jason covers the differences between multi-tenancy and virtualization and discusses how the vCMP system works. vCMP Guest Networking and High Availability In part 2, Jason covers the great flexibility in guest provisioning, guest networking concepts, and a high availability overview for guests versus chassis. vCMP Security (coming soon!) In part 3, Jason concludes the series with a discussion on network security, guest deployment security, and secure memory management. Resources vCMP Admin (appliances) vCMP Admin (VIPRION) VIPRION Systems: Configuration K15930: Overview of vCMP configuration considerations K16947: Best practices for the HA group feature K14088: vCMP host and compatible guest matrix K14218: vCMP guest memory/CPU core allocation matrix K14727: BIG-IP vCMP hosts and guests configuration options1KViews2likes1Comment