F5 Friday: Load Balancing MySQL with F5 BIG-IP

Scaling MySQL just got a whole lot easier

Load balancing MySQL (any database, really) is not a trivial task. Generally speaking, one does not simply round-robin through a cluster of MySQL databases as a means to achieve scalability. It is databases, in fact, that have driven a wide variety of scalability patterns, such as sharding and partitioning, to achieve the ultimate goal of high performance and scalability simultaneously.

Unfortunately, most folks don’t architect their applications with scalability in mind. A single database is all that’s necessary at first, and because of the way in which the application interacts with the database, it doesn’t make sense to code in support for multiple database instances, such as is often implemented with a MySQL master-slave cluster. That’s because the application has to actually open a connection to the database in question. If you’re only starting with one database, you really can’t code in a connection to a separate instance.

Eventually that application’s usage grows and the demands upon the database require a more scalable approach. Enter the MySQL master/slave relationship. A typical configuration is to maintain the master as the “write” database, i.e. all updates and/or inserts must use the master, while the slave instance is used as a “read only” instance. Obviously this means the application code must be changed to support this kind of functional sharding, unless you leverage network server virtualization from a load balancing service capable of acting as a full proxy at layer 7 (application), like BIG-IP.

This solution leverages iRules to implement database load balancing. While this specific example is designed to perform the common functional sharding pattern of read-write separation for a master-slave MySQL cluster, the flexibility of iRules is such that other architectural solutions can easily be designed using the same basic functions.
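To make the read-write separation pattern concrete, here is a minimal sketch of the routing decision in Python. It is not the MySQL Proxy iRule itself (that is written in iRules and runs on BIG-IP); the backend addresses, the `choose_backend` function, and the rule for which statements count as reads are all assumptions made for illustration.

```python
import itertools
import re

# Hypothetical backend addresses, for illustration only.
MASTER = "10.0.0.10:3306"
REPLICAS = ["10.0.0.11:3306", "10.0.0.12:3306"]

# Statements assumed to be read-only; anything else is treated as a write.
READ_ONLY = re.compile(r"^\s*(select|show|describe|explain)\b", re.IGNORECASE)
# SELECT ... FOR UPDATE takes locks, so it must go to the master.
LOCKING_READ = re.compile(r"\bfor\s+update\b", re.IGNORECASE)

# Round-robin iterator over the read-only instances.
_replica_cycle = itertools.cycle(REPLICAS)


def choose_backend(sql: str, in_transaction: bool = False) -> str:
    """Return the backend that should receive this statement."""
    is_read = bool(READ_ONLY.match(sql)) and not LOCKING_READ.search(sql)
    # Reads inside a transaction stay on the master so they can see
    # the transaction's own uncommitted writes.
    if in_transaction or not is_read:
        return MASTER
    return next(_replica_cycle)


print(choose_backend("UPDATE users SET name = 'a' WHERE id = 1"))
print(choose_backend("SELECT * FROM users"))
```

The point of the sketch is that the decision is made per statement by something sitting between the application and the databases, which is why the application can keep opening a single connection to a single address.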
Location-based sharding is another popular means of scaling databases, and using the GeoLocation capabilities of BIG-IP along with iRules to inspect and route database requests, it should be a fairly trivial architectural task to implement. The ability to further extend sharding or other distribution methodologies for scaling databases without modifying the application itself is a huge bonus for both developers and operations. Decoupling the application from the database provides a more flexible set of scalability domains in which technology-targeted scalability strategies can be leveraged independently of the other layers. This is an important facet of agile infrastructure architecture and should not be underestimated as a benefit of network server virtualization.

MySQL Load Balancing Resources:
• MySQL Proxy iRule
• MySQL Proxy iApp (deployment package for BIG-IP v11)
• The Full-Proxy Data Center Architecture
• Infrastructure Scalability Pattern: Sharding Streams
• Infrastructure Scalability Pattern: Sharding Sessions
• Infrastructure Scalability Pattern: Partition by Function or Type
• IT as a Service: A Stateless Infrastructure Architecture Model
• F5 Friday: Platform versus Product
• At the Intersection of Cloud and Control…
• What is a Strategic Point of Control Anyway?
• All F5 Friday Posts on DevCentral
• Why Single-Stack Infrastructure Sucks

VMware Horizon and F5 iAPP Deployments Backed by Ansible Automation

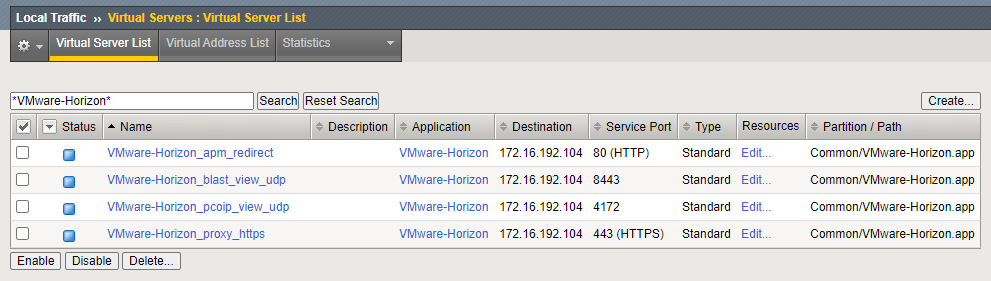

The Intro:

A little over a year ago I knew barely anything about automation, zero about Ansible, and didn't even think it would be something so tied to my life like it is now. I spend all my moments trying to think about how I can make automation easier in my life, and being in Business Development I spend a lot of time testing F5 solutions and integrations between vendors (specifically between F5 and VMware as well as F5 and Red Hat Ansible). I figured, why not bring them a little closer together? It takes forever to build labs and set up environments, and with automation I can do this in mere minutes compared to the hours it used to take (we are talking fresh builds, clean environments).

I plan on sharing more of my VMware and Ansible automation integrations down the road (like Horizon labs that can be built from scratch and ready to test in 30 minutes or less). But I wanted to start out with something that I get a lot of questions about: is it possible to automate iApp deployments? Specifically the VMware Horizon iApp?

The answer is YOU CAN NOW! Granted, this, like all automation, is a work in progress. If you have a use case you want to build on top of what I have started, I encourage it!! TAKE, FORK and Expand!!!!

The Code:

All of the code I am using is completely accessible via the F5 DevCentral Git repository, so feel free to use it! What does it do? Well, if you are an F5 guru then you might think it looks similar to how our AS3 code works. If you aren't a guru, it basically takes one set of variables and sends off a single command to the F5 to build the application (I tell it the things that make it work and how I want it deployed, and it does all the work for me). Keep in mind this isn't using F5 AS3 code; it just mimics the same method by taking a JSON declaration of how I want things to be, and the F5 does all of the imperative commands for me.
    ---
    - name: Build JSON payload
      ansible.builtin.template: src=f5.horizon.{{deployment_type |lower }}.j2 dest=/tmp/f5.horizon.json

    - name: Deploy F5 Horizon iApp
      f5networks.f5_modules.bigip_iapp_service: #Using Collections if not use - bigip_iapp_service:
        name: "VMware-Horizon"
        template: "{{iapp_template_name}}"
        parameters: "{{ lookup('template', '/tmp/f5.horizon.json') }}"
        provider:
          server: "{{f5_ip}}"
          user: "{{f5_user}}"
          password: "{{f5_pass}}"
          validate_certs: no
      delegate_to: localhost

All of this code can be found at https://github.com/f5devcentral/f5-bd-horizon-iapp-deploy/

Deployments:

The F5 iApp for Horizon provides many deployment options, but they all fall into three buckets:
• F5 APM with VMware Horizon - Where the F5 acts as the gateway for all VMware Horizon connections (proxying PCoIP/Blast)
• F5 LTM with VMware Horizon - Internal connections to an environment from a LAN, with the F5 securing and load balancing the Connection Servers
• F5 LTM with VMware Unified Access Gateway - Using the F5 to load balance the VMware Unified Access Gateways (UAGs) and letting the UAGs proxy the connections

The deployments offer the ability to utilize pre-imported certificates, set the virtual IP, add additional Connection Servers, create the iRule for internal connections (origin header check) and much more. All of this depends on your deployment and the way you need it set up. The current code doesn't import the iApp template or the certificates; this could be done with other code but is not currently part of this code.

All three of these deployment models are covered by the code, and how it's deployed is based on the variables file "{{code_directory}}/vars/horizon_iapp_vars.yml" as shown below. Keep in mind this uses clear text for some variables (e.g. the username/password for AD); you can secure your passwords in other ways, such as Ansible Vault.
    #F5 Authentication
    f5_ip: 192.168.1.10
    f5_user: admin
    f5_pass: "my_password"
    f5_admin_port: 443

    #All Deployment Types
    deployment_type: "apm" #option can be APM, LTM or UAG

    #iApp Variables
    iapp_vip_address: "172.16.192.100"
    iapp_template_name: "f5.vmware_view.v1.5.9"

    #SSL Info
    iapp_ssl_cert: "/Common/Wildcard-2022" # If want to use F5 Default Cert for Testing use "/Common/default.crt"
    iapp_ssl_key: "/Common/Wildcard-2022" # If want to use F5 Default Cert for Testing use "/Common/default.key"
    iapp_ssl_chain: "/#do_not_use#"

    #Horizon Info
    iapp_horizon_fqdn: "horizon.mycorp.com"
    iapp_horizon_netbios: "My-Corp"
    iapp_horizon_domainname: "My-Corp.com"
    iapp_horizon_nat_addresss: "" #enter NAT address or leave empty for none

    # LTM Deployment Type
    iapp_irule_origin:
      - "/Common/Horizon-Origin-Header"

    # APM and LTM Deployment Types
    iapp_horizon_connection_servers:
      - { ip: "192.168.1.50", port: "443" } # to add Connection Servers just add additional line
      - { ip: "192.168.1.51", port: "443" }

    #APM Deployment Type
    iapp_active_directory_username: "my_ad_user"
    iapp_active_directory_password: "my_ad_password"
    iapp_active_directory_password_encrypted: "no" # This is still being validated but requires the encrypted password from the BIG-IP
    iapp_active_directory_servers:
      - { name: "ad_server_1.mycorp.com", ip: "192.168.1.20" } # to add Active Directory Servers just add additional lines
      - { name: "ad_server_2.mycorp.com", ip: "192.168.1.21" }

    # UAG Deployment Type
    iapp_horizon_uag_servers:
      - { ip: "192.168.199.50", port: "443" } # to add UAG Servers Just add additional lines
      - { ip: "192.168.199.51", port: "443" }

How do the Variables integrate with the Templates?

The templates are JSON-based, and Ansible injects the variables into them depending on the deployment method called. This makes it easier to tailor the templates to specific deployments, because we don't hard-code values that aren't necessary or that are already part of the default deployments.
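As a rough illustration of that variable injection, the sketch below does in plain Python what the playbook does with a Jinja2 template: pick a template by deployment type, then fill in only the values that deployment needs. The variable names come from the vars file above, but the payload key names (`vip_address`, `pool_members`, `irules` and so on) are hypothetical; the real field names live in the repository's templates.

```python
import json


def template_name(deployment_type: str) -> str:
    """Mirror the playbook's src=f5.horizon.{{deployment_type |lower }}.j2."""
    return f"f5.horizon.{deployment_type.lower()}.j2"


def build_payload(variables: dict) -> str:
    """Build a JSON payload for one deployment type (key names are hypothetical)."""
    kind = variables["deployment_type"].lower()
    if kind not in ("apm", "ltm", "uag"):
        raise ValueError(f"unknown deployment_type: {kind}")

    # UAG deployments balance the UAG servers; APM and LTM deployments
    # balance the Connection Servers.
    if kind == "uag":
        servers = variables["iapp_horizon_uag_servers"]
    else:
        servers = variables["iapp_horizon_connection_servers"]

    payload = {
        "vip_address": variables["iapp_vip_address"],
        "ssl_cert": variables["iapp_ssl_cert"],
        "ssl_key": variables["iapp_ssl_key"],
        "pool_members": [f"{s['ip']}:{s['port']}" for s in servers],
    }
    # Only the LTM deployment type attaches the origin-header iRule.
    if kind == "ltm":
        payload["irules"] = list(variables["iapp_irule_origin"])
    return json.dumps(payload, indent=2)


example_vars = {
    "deployment_type": "LTM",
    "iapp_vip_address": "172.16.192.100",
    "iapp_ssl_cert": "/Common/default.crt",
    "iapp_ssl_key": "/Common/default.key",
    "iapp_irule_origin": ["/Common/Horizon-Origin-Header"],
    "iapp_horizon_connection_servers": [
        {"ip": "192.168.1.50", "port": "443"},
        {"ip": "192.168.1.51", "port": "443"},
    ],
}

print(template_name(example_vars["deployment_type"]))
print(build_payload(example_vars))
```

Switching `deployment_type` changes both the template that is chosen and which variables are read, which is why one vars file can drive all three deployment models.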
Advanced deployments would require modification of the JSON code to apply specialized settings that aren't a part of the default. If you want to see more about the templates for each operation (APM/LTM/UAG), check out the JSON code at the link below:

https://github.com/f5devcentral/f5-bd-horizon-iapp-deploy/tree/main/roles/ansible-deploy-iapp/templates

The Results:

Within seconds I can deploy, configure and make changes to my deployments, or even change my deployment type. Could I do this in the GUI? Absolutely, but the point is to Automate ALL THE THINGS, and being able to integrate this with solutions like Lab in a box (built from scratch, including the F5) saves massive amounts of time.

Example of a VMware Horizon iApp Deployment with F5 APM done in ~12 Seconds

    [root@Elysium f5-bd-horizon-iapp-deploy]# time ansible-playbook horizon_iapp_deploy.yaml

    PLAY [localhost] ****************************************

    TASK [bypass-variables : ansible.builtin.stat] ****************************************
    ok: [localhost]

    TASK [bypass-variables : ansible.builtin.include_vars] ****************************************
    ok: [localhost]

    TASK [create-irule : Create F5 iRule] ****************************************
    skipping: [localhost]

    TASK [ansible-deploy-iapp : Build JSON payload] ****************************************
    ok: [localhost]

    TASK [ansible-deploy-iapp : Deploy F5 Horizon iApp] ****************************************
    changed: [localhost]

    PLAY RECAP ****************************************
    localhost : ok=4 changed=1 unreachable=0 failed=0 skipped=1 rescued=0 ignored=0

    real 0m11.954s
    user 0m6.114s
    sys 0m0.542s

Links:

All of this code can be found at https://github.com/f5devcentral/f5-bd-horizon-iapp-deploy/

iApp – What is it?

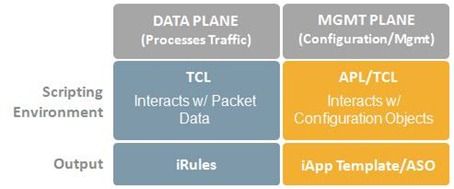

iApp is a seriously cool, game-changing technology that was released in F5’s v11. There are so many benefits to our customers with this tool that I am going to break it down over a series of posts. Today we will focus on what it is.

Hopefully you are already familiar with the power of F5’s iRules technology. If not, here is a quick background. F5 products support a scripting language based on Tcl. This language allows an administrator to tell their BIG-IP to intercept, inspect, transform, direct and track inbound or outbound application traffic. An iRule is the bit of code that contains the set of instructions the system uses to process data flowing through it, either in the header or payload of a packet. This technology allows our customers to solve real-time application issues, security vulnerabilities, etc. that are unique to their environment or are time sensitive.

An iApp is like iRules, but for the management plane. Again, there is a scripting language that administrators can use to build instructions for the system. But instead of describing how to process traffic, an iApp describes the user interface and how the system will act on information gathered from the user. The bit of code that contains these instructions is referred to as an iApp or iApp template.

A system administrator can use F5-provided iApp templates installed on their BIG-IP to configure a service for a new application. They will be presented with the text and input fields defined by the iApp author. Once complete, their answers are submitted, and the template implements the configuration. First, an application service object (ASO) is created that ties together all the configuration objects that are created, like virtual servers and profiles. Each object created by the iApp is then marked with the ASO to identify its membership in the application for future management and reporting.
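The relationship between a template, the user's answers and the application service object can be pictured with a small Python model. This is only a conceptual sketch: the class names, the `implement_template` function and the object naming scheme are invented for illustration and are not how BIG-IP stores or names its configuration.

```python
from dataclasses import dataclass, field


@dataclass
class ConfigObject:
    kind: str          # e.g. "pool", "profile", "virtual"
    name: str
    settings: dict
    app_service: str   # name of the application service that owns this object


@dataclass
class ApplicationService:
    name: str
    objects: list = field(default_factory=list)

    def create(self, kind: str, suffix: str, **settings) -> ConfigObject:
        """Create a configuration object and mark it as belonging to this service."""
        obj = ConfigObject(kind, f"{self.name}_{suffix}", settings, app_service=self.name)
        self.objects.append(obj)
        return obj


def implement_template(app_name: str, answers: dict) -> ApplicationService:
    """Turn the answers gathered from the user into tagged configuration objects."""
    aso = ApplicationService(app_name)
    pool = aso.create("pool", "pool", members=answers["servers"])
    aso.create("profile", "http")
    aso.create("virtual", "vs", destination=answers["vip"], pool=pool.name)
    return aso


app = implement_template(
    "webapp",
    {"vip": "203.0.113.10:443", "servers": ["10.0.0.1:80", "10.0.0.2:80"]},
)
for obj in app.objects:
    print(obj.kind, obj.name, "->", obj.app_service)
```

Because every object carries the name of its owning service, the whole application can later be listed, reported on or removed as one unit rather than object by object.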
That about does it for what an iApp is. Next up: how they can work for you.

F5 BIG-IP Platform Security

When creating any security-enabled network device, development teams must fully investigate security of the device itself to ensure it cannot be compromised. A gate provides no security to a house if the gap between the bars is large enough to drive a truck through. Many highly effective exploits have breached the very software and hardware that are designed to protect against them. If an attacker can breach the guards, then they don’t need to worry about being stealthy, meaning if one can compromise the box, then they probably can compromise the code. F5 BIG-IP Application Delivery Controllers are positioned at strategic points of control to manage an organization’s critical information flow. In the BIG-IP product family and the TMOS operating system, F5 has built and maintained a secure and robust application delivery platform, and has implemented many different checks and counter-checks to ensure a totally secure network environment. Application delivery security includes providing protection to the customer’s Application Delivery Network (ADN), and mandatory and routine checks against the stack source code to provide internal security—and it starts with a secure Application Delivery Controller. The BIG-IP system and TMOS are designed so that the hardware and software work together to provide the highest level of security. While there are many factors in a truly secure system, two of the most important are design and coding. Sound security starts early in the product development process. Before writing a single line of code, F5 Product Development goes through a process called threat modeling. Engineers evaluate each new feature to determine what vulnerabilities it might create or introduce to the system. F5’s rule of thumb is a vulnerability that takes one hour to fix at the design phase, will take ten hours to fix in the coding phase and one thousand hours to fix after the product is shipped—so it’s critical to catch vulnerabilities during the design phase. 
The sum of all these vulnerabilities is called the threat surface, which F5 strives to minimize. F5, like many companies that develop software, has invested heavily in training internal development staff on writing secure code. Security testing is time-consuming and a huge undertaking, but it’s a critical part of meeting F5’s stringent standards and its commitment to customers. While this is by no means an exhaustive list, the BIG-IP system has a number of features that provide heightened and hardened security: Appliance mode, iApp Templates, FIPS, and Secure Vault.

Appliance Mode

Beginning with version 10.2.1-HF3, the BIG-IP system can run in Appliance mode. Appliance mode is designed to meet the needs of customers in industries with especially sensitive data, such as healthcare and financial services, by limiting BIG-IP system administrative access to match that of a typical network appliance rather than a multi-user UNIX device. The optional Appliance mode “hardens” BIG-IP devices by removing advanced shell (Bash) and root-level access. Administrative access is available through the TMSH (TMOS Shell) command-line interface and GUI. When Appliance mode is licensed, any user that previously had access to the Bash shell will now only have access to the TMSH. The root account home directory (/root) file permissions have been tightened for numerous files and directories. By default, new files are now only user readable and writeable and all directories are better secured.

iApp Templates

Introduced in BIG-IP v11, F5 iApps is a powerful new set of features in the BIG-IP system. It provides a new way to architect application delivery in the data center, and it includes a holistic, application-centric view of how applications are managed and delivered inside, outside, and beyond the data center.
iApps provide a framework that application, security, network, systems, and operations personnel can use to unify, simplify, and control the entire ADN with a contextual view and advanced statistics about the application services that support business. iApps are designed to abstract the many individual components required to deliver an application by grouping these resources together in templates associated with applications; this alleviates the need for administrators to manage discrete components on the network. F5’s new NIST 800-53 iApp Template helps organizations become NIST-compliant. F5 has distilled the 240-plus pages of guidance from NIST into a template with the relevant BIG-IP configuration settings, saving organizations hours of management time and resources.

Federal Information Processing Standards (FIPS)

Developed by the National Institute of Standards and Technology (NIST), Federal Information Processing Standards are used by United States government agencies and government contractors in non-military computer systems. The FIPS 140 series are U.S. government computer security standards that define requirements for cryptography modules, including both hardware and software components, for use by departments and agencies of the United States federal government. The requirements cover not only the cryptographic modules themselves but also their documentation. As of December 2006, the current version of the standard is FIPS 140-2.

A hardware security module (HSM) is a secure physical device designed to generate, store, and protect digital, high-value cryptographic keys. It is a secure crypto-processor that often comes in the form of a plug-in card (or other hardware) with tamper protection built in. HSMs also provide the infrastructure for finance, government, healthcare, and others to conform to industry-specific regulatory standards.
FIPS 140 enforces stronger cryptographic algorithms, provides good physical security, and requires power-on self tests to ensure a device is still in compliance before operating. FIPS 140-2 evaluation is required to sell products implementing cryptography to the federal government, and the financial industry is increasingly specifying FIPS 140-2 as a procurement requirement.

The BIG-IP system includes a FIPS cryptographic/SSL accelerator, an HSM option specifically designed for processing SSL traffic in environments that require FIPS 140-1 Level 2–compliant solutions. Many BIG-IP devices are FIPS 140-2 Level 2–compliant. This security rating indicates that once sensitive data is imported into the HSM, it incorporates cryptographic techniques to ensure the data is not extractable in a plain-text format. It provides tamper-evident coatings or seals to deter physical tampering. The BIG-IP system includes the option to install a FIPS HSM (BIG-IP 6900, 8900, 11000, and 11050 devices). BIG-IP devices can be customized to include an integrated FIPS 140-2 Level 2–certified SSL accelerator. Other solutions require a separate system or a FIPS-certified card for each web server, but the BIG-IP system’s unique key management framework enables a highly scalable secure infrastructure that can handle higher traffic levels and to which organizations can easily add new services. Additionally, the FIPS cryptographic/SSL accelerator uses smart cards to authenticate administrators, grant access rights, and share administrative responsibilities to provide a flexible and secure means for enforcing key management security.

Secure Vault

It is generally a good idea to protect SSL private keys with passphrases. With a passphrase, private key files are stored encrypted on non-volatile storage. If an attacker obtains an encrypted private key file, it will be useless without the passphrase.
In PKI (public key infrastructure), the public key enables a client to validate the integrity of something signed with the private key, and the hashing enables the client to validate that the content was not tampered with. Since the private key of the public/private key pair could be used to impersonate a valid signer, it is critical to keep those keys secure. Secure Vault, a super-secure SSL-encrypted storage system introduced in BIG-IP version 9.4.5, allows passphrases to be stored in an encrypted form on the file system. In BIG-IP version 11, companies now have the option of securing their cryptographic keys in hardware, such as a FIPS card, rather than encrypted on the BIG-IP hard drive. Secure Vault can also encrypt certificate passwords for enhanced certificate and key protection in environments where FIPS 140-2 hardware support is not required, but additional physical and role-based protection is preferred. In the absence of hardware support like FIPS/SEEPROM (Serial (PC) Electrically Erasable Programmable Read-Only Memory), Secure Vault will be implemented in software. Even if an attacker removed the hard disk from the system and painstakingly searched it, it would be nearly impossible to recover the contents due to Secure Vault AES encryption. Each BIG-IP device comes with a unit key and a master key. Upon first boot, the BIG-IP system automatically creates a master key for the purpose of encrypting, and therefore protecting, key passphrases. The master key encrypts SSL private keys, decrypts SSL key files, and synchronizes certificates between BIG-IP devices. Further increasing security, the master key is also encrypted by the unit key, which is an AES 256 symmetric key. When stored on the system, the master key is always encrypted with a hardware key, and never in the form of plain text. Master keys follow the configuration in an HA (high-availability) configuration so all units would share the same master key but still have their own unit key. 
The master key gets synchronized using the secure channel established by the CMI Infrastructure as of BIG-IP v11. The master key encrypted passphrases cannot be used on systems other than the units for which the master key was generated. Secure Vault support has also been extended for vCMP guests. vCMP (Virtual Clustered Multiprocessing) enables multiple instances of BIG-IP software to run on one device. Each guest gets their own unit key and master key. The guest unit key is generated and stored at the host, thus enforcing the hardware support, and it’s protected by the host master key, which is in turn protected by the host unit key in hardware. Finally F5 provides Application Delivery Network security to protect the most valuable application assets. To provide organizations with reliable and secure access to corporate applications, F5 must carry the secure application paradigm all the way down to the core elements of the BIG-IP system. It’s not enough to provide security to application transport; the transporting appliance must also provide a secure environment. F5 ensures BIG-IP device security through various features and a rigorous development process. It is a comprehensive process designed to keep customers’ applications and data secure. The BIG-IP system can be run in Appliance mode to lock down configuration within the code itself, limiting access to certain shell functions; Secure Vault secures precious keys from tampering; and optional FIPS cards ensure organizations can meet or exceed particular security requirements. An ADN is only as secure as its weakest link. F5 ensures that BIG-IP Application Delivery Controllers use an extremely secure link in the ADN chain. 
ps

Resources:
• F5 Security Solutions
• Security is our Job (Video)
• F5 BIG-IP Platform Security (Whitepaper)
• Security, not HSMs, in Droves
• Sometimes It Is About the Hardware
• Investing in security versus facing the consequences | Bloor Research White Paper
• Securing Your Enterprise Applications with the BIG-IP (Whitepaper)
• TMOS Secure Development and Implementation (Whitepaper)
• BIG-IP Hardware Updates – SlideShare Presentation
• Audio White Paper - Application Delivery Hardware A Critical Component
• F5 Introduces High-Performance Platforms to Help Organizations Optimize Application Delivery and Reduce Costs

Technorati Tags: F5, PCI DSS, virtualization, cloud computing, Pete Silva, security, coding, iApp, compliance, FIPS, internet, TMOS, big-ip, vCMP

F5 Friday: Programmability and Infrastructure as Code

#SDN #ADN #cloud #devops What does that mean, anyway?

SDN and devops share some common themes. Both focus heavily on the notion of programmability in network devices as a means to achieve specific goals. For SDN it’s flexibility and rapid adaptation to changes in the network. For devops, it’s more a focus on the ability to treat “infrastructure as code” as a way to integrate into automated deployment processes. Each of these notions is just different enough to mean that systems supporting one don’t automatically support the other. An API focused on management or configuration doesn’t necessarily provide the flexibility of execution exhorted by SDN proponents as a significant benefit to organizations. And vice-versa.

INFRASTRUCTURE as CODE

Devops is a verb; it’s something you do. Optimizing application deployment lifecycle processes is a primary focus, and to do that many would say you must treat “infrastructure as code.” Doing so enables the integration and automation of deployment processes (including configuration and integration), which in turn lets operations scale along with the environment and demand. The result is automated best practices, the codification of policy and process that assures repeatable, consistent and successful application deployments.

F5 supports the notion (and has since 2003 or so) of infrastructure as code in two ways:

iControl

iControl, the open, standards-based API for the entire BIG-IP platform, remains the primary integration point for partners and customers alike. Whether it’s inclusion in Opscode Chef recipes, or pre-packaged solutions with systems from HP, Microsoft, or VMware, iControl offers the ability to manage the control plane of BIG-IP from just about anywhere. iControl is service-enabled and has been accessed and integrated through more programming languages than you can shake a stick at. Python, Perl, Java, PHP, C#, PowerShell… if it can access web-based services, it can communicate with BIG-IP via iControl.
iApp

A later addition to the BIG-IP platform, iApp is best-practice application delivery service deployment, codified. iApps are service- and application-oriented, enabling operations and consumers of IT as a Service to more easily deploy requisite application delivery services without requiring intimate knowledge of the hundreds of individual network attributes that must be configured. iApp is also used in conjunction with iControl to better automate and integrate application delivery services into an IT as a Service environment. Using iApp to codify performance and availability policies based on application and business requirements, consumers (through pre-integrated solutions) can simply choose an appropriate application delivery “profile” along with their application to ensure not only deployment but production success.

Infrastructure as code is an increasingly important view to take of the provisioning and deployment processes for network and application delivery services, as it enables more consistent, accurate policy configuration and deployment. Consider research from Dimension Data that found “total number of configuration violations per device has increased from 29 to 43 year over year -- and that the number of security-related configuration errors (such as AAA Authentication, Route Maps and ACLS, Radius and TACACS+) also increased. AAA Authentication errors in particular jumped from 9.3 per device to 13.6, making it the most frequently occurring policy violation.” The ability to automate a known “good” configuration and policy when deploying application and network services can decrease the risk of these violations and ensure a more consistent, stable (and ultimately secure) network environment.

PROGRAMMABILITY

Less with “infrastructure as code” (devops) and more so with SDN comes the notion of programmability.
On the one hand, this notion squares well with the “infrastructure as code” concept, as it requires infrastructure to be enabled in such a way as to provide the means to modify behavior at run time, most often through support for a common standard (OpenFlow is the darling standard du jour for SDN). For SDN, this tends to focus on the forwarding information base (FIB), but broader applicability has been noted at times, and no doubt will continue to gain traction. The ability to “tinker” with emerging and experimental protocols, for example, is one application of programmability of the network. Rather than wait for vendor support, it is proposed that organizations can deploy and test support for emerging protocols through OpenFlow-enabled networks. While this capability is likely not something large production networks would undertake, the notion that emerging protocols could be supported on demand, rather than on a vendor-driven timeline, is often desirable.

Consider support for SIP, before UCS became nearly ubiquitous in enterprise networks. SIP is a message-based protocol, requiring deep content inspection (DCI) capabilities to extract AVP codes as a means to determine routing to specific services. Long before SIP was natively supported by BIG-IP, it was supported via iRules, F5’s event-driven network-side scripting language. iRules enabled customers requiring support for SIP (for load balancing and high-availability architectures) to program the network by intercepting, inspecting, and ultimately routing based on the AVP codes in SIP payloads. Over time, this functionality was productized and became a natively supported protocol on the BIG-IP platform.

Similarly, iRules enables a wide variety of dynamism in application routing and control by providing a robust environment in which to programmatically determine which flows should be directed where, and how.
Leveraging programmability in conjunction with DCI affords organizations the flexibility to do – or do not – as they so desire, without requiring them to wait for hot fixes, new releases, or new products.

SDN and ADN – BIRDS of a FEATHER

The very trends driving SDN at layers 2-3 are the same ones that have been driving ADN (application delivery networking) for nearly a decade.

Five trends in network are driving the transition to software defined networking and programmability. They are:
• User, device and application mobility;
• Cloud computing and service;
• Consumerization of IT;
• Changing traffic patterns within data centers;
• And agile service delivery.
The trends stretch across multiple markets, including enterprise, service provider, cloud provider, massively scalable data centers -- like those found at Google, Facebook, Amazon, etc. -- and academia/research. And they require dynamic network adaptability and flexibility and scale, with reduced cost, complexity and increasing vendor independence, proponents say.
-- Five needs driving SDNs

Each of these trends applies equally to the higher layers of the networking stack, and each is addressed by a fully programmable ADN platform like BIG-IP. Mobile mediation, cloud access brokers, cloud bursting and balancing, context-aware access policies, granular traffic control and steering, and a service-enabled approach to application delivery are all part and parcel of an ADN.

From devops to SDN to mobility to cloud, the programmability and service-oriented nature of the BIG-IP platform enables them all.

• The Half-Proxy Cloud Access Broker
• Devops is a Verb
• SDN, OpenFlow, and Infrastructure 2.0
• Devops is Not All About Automation
• Applying ‘Centralized Control, Decentralized Execution’ to Network Architecture
• Identity Gone Wild! Cloud Edition
• Mobile versus Mobile: An Identity Crisis

F5 Friday: Applications aren't protocols. They're Opportunities.

Applications are as integral to F5 technologies as they are to your business. An old adage holds that an individual can be judged by the company he keeps. If that holds true for organizations, then F5 would do well to be judged by the vast array of individual contributors, partners, and customers in its ecosystem. Everything from its long history of partnering with companies like Microsoft, IBM, HP, Dell, VMware, Oracle, and SAP to its astounding community of over 160,000 engineers, administrators and developers speaks volumes about its commitment to, and ability to develop, joint and custom solutions. F5 is committed to delivering applications no matter where they might reside or what architecture they might be using. Because of its full proxy architecture, F5’s ADC platform is able to intercept, inspect and interact with applications at every layer of the network. That means tuning TCP stacks for mobile apps, protecting web applications from malicious code whether they’re talking JSON or XML, and optimizing delivery via HTTP (or HTTP 2.0 or SPDY) by understanding the myriad types of content that make up a web application: CSS, images, JavaScript and HTML. But being application-driven goes beyond delivery optimization and must cover the broad spectrum of technologies needed not only to deliver an app to a consumer or employee, but also to manage its availability, scale and security. Every application requires a supporting cast of services to meet a specific set of business and user expectations, such as logging, monitoring and failover. Over the 18 years in which F5 has been delivering applications it has developed technologies specifically geared to making sure these supporting services are driven by applications, imbuing each of them with the application awareness and intelligence necessary to efficiently scale, secure and keep them available.
With the increasing adoption of hybrid cloud architectures and the need to operationally scale the data center, it is important to consider the depth and breadth to which ADC automation and orchestration support an application focus. Whether looking at APIs or management capabilities, an ADC should provide the means by which the services applications need can be holistically provisioned and managed from the perspective of the application, not the individual services. Technology that is application-driven, giving app owners and administrators the ability to programmatically define provisioning and management of all the application services needed to deliver the application, is critical moving forward to ensure success. F5 iApps and F5 BIG-IQ Cloud do just that, enabling app owners and operations to rapidly provision services that improve the security, availability and performance of the applications that are the future of the business. That programmability is important, especially as it relates to applications, according to our recent survey (results forthcoming) in which a plurality of respondents indicated application templates are "somewhat or very important" to the provisioning of their applications, along with other forms of programmability associated with software-defined architectures including cloud computing. Applications increasingly represent opportunity, whether it's to improve productivity or increase profit. Capabilities that improve the success rate of those applications are imperative and require a deeper understanding of an application and its unique delivery needs than a protocol and a port. F5 not only partners with application providers, it encapsulates the expertise and knowledge of how best to deliver those applications in its technologies and offers that same capability to each and every organization to tailor the delivery of their applications to meet and exceed security, reliability and performance goals.
Because applications aren't just a set of protocols and ports, they're opportunities. And how you respond to opportunity is as important as opening the door in the first place.

F5 Monday? The Evolution To IT as a Service Continues … in the Network

#v11 #vcmp #scaleN #iApp It’s time to bring the benefits of server virtualization, rapid provisioning and efficient, flexible scalability models to the network.

Many of you know I’m a developer by trade and gained my networking stripes after joining Network Computing Magazine around the turn of the century. I focused heavily on application-centric solutions (sometimes much to my chagrin; consider evaluating ERP solutions for a moment and I’m sure you’ll understand why) but I was also tasked with reviewing networking solutions. In particular, the realm of load balancing and application delivery fell squarely to me for most of my time with the magazine. Thus I’ve been following F5 for a lot longer than I’ve been on the dark-side team, and have seen its solutions evolve from the most basic of network appliances (remember when SSL accelerators and load balancers were stand-alone?) to the highly complex and advanced application delivery service solutions offered today.

The last time F5 released a version this significant was v9, when it moved from a simple proxy-based appliance to a hardware-enabled, full-proxy architecture. It moved from being a solution to being a platform upon which we could build out future solutions more easily, and it empowered customers by providing both a service-enabled control plane, iControl, and a programmable framework for better managing the diverse traffic needs of our very disparate vertical industry customer base, iRules. While version 10 was not a trivial release by any stretch of the imagination, it pales in comparison with the fundamental architectural changes and enhancements in v11. It isn’t just about new features and functionality – though these are certainly included – it’s about architecture (internal to BIG-IP and for data centers) and automation (internal to BIG-IP and for data centers). It’s about breaking the traditional high-availability (scalability) paradigm by taking advantage of virtualization inside and out.
It’s about enabling a service-oriented view of policies and infrastructure that better fits within the cloud computing demesne. It’s about laying the foundation of an optimized data center architecture based on strategic points of control throughout the network that enable increased efficiency and reliability while simultaneously bringing the benefits associated with a service-focused architecture – reusability, repeatability and consistency – to the network. I can’t, without writing more than you really want to read on a Monday, describe everything that makes v11 such a significant release. With that in mind, let me just touch on what I think are the most game-changing pieces of the newest version of BIG-IP – and why.

iApp

iApp is evolutionary for F5, but is likely the most revolutionary for the industry in general. iApp is a framework that moves the focus of BIG-IP configuration from network-oriented objects to application-centric views. But that’s just the surface. It’s a framework, and that implies programmability and extensibility, and that’s exactly what it offers. You might recall that for many years F5 has offered Application Ready Solutions: deployment guides developed by our engineers, often in concert with application partners like Microsoft, Oracle, SAP and VMware, that detailed the optimal configuration of BIG-IP (including all relevant modules) for a specific application or solution – SharePoint, Oracle Database, VMware View, etc. These guides were step-by-step instructions administrators could follow to optimally configure BIG-IP. This certainly shortened the application deployment lifecycle, but it didn’t address related challenges such as misconfiguration. As cloud computing and virtualization came along, it also failed to address the need to create consistent deployment configurations that were easily repeatable through automation.
iApp not only addresses this challenge, it goes above and beyond by providing the framework through which F5 partners and customers can easily create their own iApp deployment packages and share them. So not only do you get repeatable application deployment architectures that can be shared across BIG-IP instances, you also get the same to share with others – or benefit from others’ expertise. iApp is to infrastructure deployment what an EAR or an assembly is to application deployment. It’s a mobile package of BIG-IP configuration. It’s application-centric management from a pool of reusable application services.

Scale N

Traditional infrastructure high-availability models rely on an active-standby (or in some cases active-active) configuration. That means two (or more) devices, with one designated primary and the others secondary. Scale N breaks this paradigm and asks, why should we be so restricted? Cloud computing and virtualization benefits are premised upon the ability to scale out and up on demand, so why shouldn’t infrastructure follow the same model? After all, the traditional paradigm requires fail-over by device – or instance. If you need to fail over – or simply move – one application, the entire device must be interrupted. With v11 F5 is introducing the concept of Device Service Clusters, which allow the targeted fail-over of application instances and achieve active-active-active N. Basically you can now scale out across a pool of CPUs or devices regardless of BIG-IP form factor or deployment platform, and manage them as one. This is possible by leveraging a previously introduced feature called vCMP.

vCMP

I’ll include a short refresher on vCMP because it’s inherently important to the overall capabilities of scale N and to conveying why iApp combined with scale N is so significant. If you’ll recall, vCMP stands for virtual Clustered Multi-Processing and it’s built off our previous CMP (Clustered Multi-Processing) capabilities.
vCMP makes it possible to deploy individual BIG-IP “guest” instances that enable fault-isolation, version independency and on-demand hardware-layer scalability. This, combined with some secret sauce, is what makes scaleN possible and ultimately part of what makes v11 so significant a departure from traditional infrastructure deployment and management models. Now, you combine all three and provide the ability to synchronize service configurations across instances via an automated policy synchronization capability (also new to v11) and you now have capabilities in the application delivery infrastructure with similar benefits and abilities as those found previously only in the server / application virtualization infrastructure: automated, repeatable, manageable, scalable infrastructure services. It takes us several giant steps toward the realization of stateful failure; a goal that has long eluded traditional infrastructure-based scalability architectures. v11 also includes a wealth of security-related features and enhancements as well as global application delivery service support. It is, simply put, too big to contain in a single – or even several – posts. You’ll want to explore the new options and new capabilities and consider how they fit into a strategic view of your data center architecture as you continue the path toward IT as a Service. This version supports that transformation with a very service and application-centric view of infrastructure and application delivery services, and enables even more collaboration between components and customers; collaboration that is necessary to automate - and ultimately liberate – the data center of the future with a more agile, dynamic architecture. So take some time to explore, ask questions, and find out more about v11. We are confident you’ll find that the ability to move more fluidly toward an agile infrastructure, toward IT as a Service, will be served well by the strategic trifecta of iApp, Scale N and vCMP. 
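The difference between device-level and application-level fail-over described under Scale N can be illustrated with a toy model. The sketch below is an assumption-laden illustration, not F5's algorithm: the `Cluster` class, its least-loaded placement rule, and the device and application names are all hypothetical.

```python
from dataclasses import dataclass, field


@dataclass
class Cluster:
    """Toy model of N devices sharing a set of application instances."""
    devices: list[str]
    # application name -> device currently serving it
    placement: dict[str, str] = field(default_factory=dict)

    def load(self, device: str) -> int:
        """Number of applications currently placed on a device."""
        return sum(1 for d in self.placement.values() if d == device)

    def place(self, app: str) -> str:
        """Put a new application on the least-loaded device."""
        target = min(self.devices, key=self.load)
        self.placement[app] = target
        return target

    def fail_over_app(self, app: str) -> str:
        """Move ONE application to another device; the rest stay put."""
        current = self.placement[app]
        candidates = [d for d in self.devices if d != current]
        target = min(candidates, key=self.load)
        self.placement[app] = target
        return target
```

In an active-standby pair, moving one application means moving everything on the device. Here only the named application changes hands, which is the property the post attributes to targeted fail-over.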
v11 Resources
iApp Wiki here on DevCentral
F5 Delivers on Dynamic Data Center Vision with New Application Control Plane Architecture
F5 iApp: Moving Application Delivery Beyond the Network
Introducing v11: The Next Generation of Infrastructure
BIG-IP v11 Information Page
F5 Friday: The Gap That become a Chasm
IT as a Service: A Stateless Infrastructure Architecture Model
If a Network Can’t Go Virtual Then Virtual Must Come to the Network
You Can’t Have IT as a Service Until IT Has Infrastructure as a Service
Meet the Challenge of Consumerization by Managing Applications Instead of Clients
This is Why We Can’t Have Nice Things
Intercloud: Are You Moving Applications or Architectures?
The Consumerization of IT: The OpsStore
What CIOs Can Learn from the Spartans

To Err is Human

#devops Automating incomplete or ineffective processes will only enable you to make mistakes faster – and more often.

Most folks probably remember the play on the "to err is human…" proverb from when computers first began to take over, well, everything. The saying was only partially tongue-in-cheek because, as we've long since learned, computers allow us to make mistakes faster, more often, and with greater reach. One of the statistics used to justify a devops initiative is the rate at which human error contributes to a variety of operational badness: downtime, performance, and deployment life-cycle time. Human error is a non-trivial cause of downtime and other operational interruptions. A recent Paragon Software survey found that human error was cited as a cause of downtime by 13.2% of respondents. Other surveys have indicated rates much higher. Gartner analysts Ronni J. Colville and George Spafford in "Configuration Management for Virtual and Cloud Infrastructures" predict as much as 80% of outages through 2015 impacting mission-critical services will be caused by "people and process" issues. Regardless of the actual rates at which human error causes downtime or other operational disruptions, the reality is that it is a factor. One of the ways in which we hope to remediate the problem is through automation and devops. While certainly an appropriate course of action, adopters need to exercise caution when embarking on such an initiative, lest they codify incomplete or inefficient processes that simply promulgate errors faster and more often.

DISCOVER, REMEDIATE, REFINE, DEPLOY

Something that all too often seems to be falling by the wayside is the relationship between agile development and agile operations. Agile isn't just about fast(er) development cycles, it's about applying a rapid, iterative process to the development cycle.
Similarly, operations must remember that it is unlikely they will "get it right" the first time and, following agile methodology, are not expected to. Process iteration assists in discovering errors, missing steps, and other potential sources of misconfiguration that are ultimately the source of outages or operational disruption. An organization that has experienced outages due to human error is practically assured of codifying those errors into automation frameworks if it does not take the time to iteratively execute on those processes to find out where errors or missing steps may lie. It is process that drives continuous delivery in development and process that must drive continuous delivery in devops. Process that must be perfected first through practice, through the application of iterative models of development on devops automation and orchestration. What may appear as a tedious repetition is also an opportunity to refine the process. To discover and eliminate inefficiencies, streamlining the deployment process and enabling faster time to market. Inefficiencies that are generally only discovered when someone takes the time to clearly document all steps in the process – from beginning (build) to end (production). Cross-functional responsibilities are often the source of such inefficiencies, because of the overlap between development, operations, and administration.

The outage of Microsoft’s cloud service for some customers in Western Europe on 26 July happened because the company’s engineers had expanded capacity of one compute cluster but forgot to make all the necessary configuration adjustments in the network infrastructure.
-- Microsoft: error during capacity expansion led to Azure cloud outage

Applying an agile methodology to the process of defining and refining devops processes around continuous delivery automation enables discovery of the errors, missing steps and duplicated tasks that bog down or disrupt the entire chain of deployment tasks. We all know that automation is a boon for operations, particularly in organizations employing virtualization and cloud computing to enable elasticity and improved provisioning. But what we need to remember is that if that automation simply encodes poor processes or errors, then automation just enables us to make mistakes a whole lot faster. Take care to pay attention to process and to test early, test often.

How to Prevent Cascading Error From Causing Outage Storm In Your Cloud Environment?
DOWNTIME, OUTAGES AND FAILURES - UNDERSTANDING THEIR TRUE COSTS
Devops Proverb: Process Practice Makes Perfect
1024 Words: The Devops Butterfly Effect
Devops is a Verb
BMC DevOps Leadership Series

FedRAMP Ramps Up

Tomorrow, June 6th, the Federal Risk and Authorization Management Program, the government’s cloud security assessment plan known as FedRAMP, will begin accepting security certification applications from companies that provide software services and data storage through the cloud. On Monday, GSA issued a solicitation for cloud providers, both commercial and government, to apply for FedRAMP certification. FedRAMP is the result of the government’s work to address security concerns related to the growing practice of cloud computing, and it establishes a standardized approach to security assessment, authorizations and continuous monitoring for cloud services and products. By creating industry-wide security standards and focusing more on risk management, as opposed to strict compliance with reporting metrics, officials expect to improve data security as well as simplify the processes agencies use to purchase cloud services, according to Katie Lewin, director of the federal cloud computing program at the General Services Administration. As both the cloud and the government’s use of cloud services grew, officials found many inconsistencies in requirements and approaches as each agency began to adopt the cloud. FedRAMP’s goal is to bring consistency to the process but also give cloud vendors a standard way of providing services to the government. And with the government’s cloud-first policy, which requires agencies to consider moving applications to the cloud as a first option for new IT projects, this should streamline the process of deploying to the cloud. This is an ‘approve once, and use many’ approach, reducing the cost and time required to conduct redundant, individual agency security assessments. Recently, the GSA released a list of nine accredited third-party assessment organizations—or 3PAOs—that will do the initial assessments and test the controls of providers per FedRAMP requirements. The 3PAOs will have an ongoing part in ensuring providers meet requirements.
FedRAMP provides an overall checklist for handling risks associated with Web services that would have a limited or serious impact on government operations if disrupted. Cloud providers must implement these security controls to be authorized to provide cloud services to federal agencies. The government will forbid federal agencies from using a cloud service provider unless the vendor can prove that a FedRAMP-accredited third-party organization has verified and validated the security controls. Once approved, the cloud vendor would not need to be ‘re-evaluated’ by every government entity that might be interested in its solution. There may be instances where additional controls are added by agencies to address specific needs. Independent, third-party auditors are tasked with testing each product/solution for compliance, which is intended to save agencies from doing their own risk management assessment. Details of the auditing process are expected early next month but include a System Security Plan that clarifies how the requirements of each security control will be met within a cloud computing environment. Within the plan, each control must detail the solutions being deployed, such as devices, documents and processes; the responsibilities of providers and the government customer to implement the plan; the timing of implementation; and how the solution satisfies controls. A Security Assessment Plan details how each control implementation will be assessed and tested to ensure it meets the requirements, and the Security Assessment Report explains the issues, findings, and recommendations from the security control assessments detailed in the Security Assessment Plan. Ultimately, each provider must establish means of preventing unauthorized users from hacking the cloud service. The regulations allow the contractor to determine which elements of the cloud must be backed up and how frequently. Three backups are required, one available online.
All government information stored on a provider's servers must be encrypted. When the data is in transit, providers must use a "hardened or alarmed carrier protective distribution system," which detects intrusions, if not using encryption. Since cloud services may span many geographic areas with various people in the mix, providers must develop measures to guard their operations against supply chain threats. Also, vendors must disclose all the services they outsource and obtain the board's approval to contract out services in the future. After receiving the initial applications, FedRAMP program officials will develop a queue order in which to review authorization packages. Officials will prioritize secure Infrastructure as a Service (IaaS) solutions, contract vehicles for commodity services, and shared services that align with the administration’s Cloud First policy. F5 has an iApp template for NIST Special Publication 800-53 which aims to make compliance with NIST Special Publication 800-53 easier for administrators of BIG-IPs. It does this by presenting a simplified list of configuration elements together in one place that are related to the security controls defined by the standard. This makes it easier for an administrator to configure a BIG-IP in a manner that complies with the organization's policies and procedures as defined by the standard. This iApp does not take any actions to make applications being serviced through a BIG-IP compliant with NIST Special Publication 800-53 but focuses on the configuration of the management capabilities of BIG-IP and not on the traffic passing through it. 
ps

Resources:
Cloud Security With FedRAMP
CLOUD SECURITY ACCREDITATION PROGRAM TAKES FLIGHT
FedRAMP comes fraught with challenges
FedRAMP about to hit the streets
FedRAMP takes applications for service providers
Contractors dealt blanket cloud security specs
FedRAMP includes 168 security controls
New FedRAMP standards first step to secure cloud computing
GSA to tighten oversight of conflict-of-interest rules for FedRAMP
What does finalized FedRAMP plan mean for industry?
GSA reopens cloud email RFQ
NIST, GSA setting up cloud validation process
FedRAMP Security Controls Unveiled
FedRAMP security requirements benchmark IT reform
FedRAMP baseline controls released
Federal officials launch FedRAMP

iApp + iControl

#iapp #iControl #orchestration In the past few years there has been a lot of talk about dynamic data centers, automation, orchestration, etc. iApp has a very large benefit in these environments that might not be completely obvious. Last week I met with a customer who was responsible for hosting managed applications and support services for their enterprise customers. To implement configuration they built a provisioning system to automate infrastructure and application services based on the user’s inputs. That included scripting each step individually to configure the ADC. Remember that iApp templates can reduce configuration steps by up to 90%? Well, they are also iControl enabled. That means that whether a template is configured via the UI or the API, the complexity and time to deploy are equally reduced. Also, since an iApp service is just another object, deploying one can be done in a single call regardless of how complicated the application may be. So with iApp templates they no longer have to script hundreds of calls to build a single application implementation. They can make one call and pass only the required data unique to the customer, and the iApp will complete the rest of the configuration on the device. That certainly reduces the complexity and cost of orchestrating our device and simplifies automation.
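As a rough illustration of "one call, only the customer-specific data", the sketch below builds the body of a single create-service request. It makes no network call, and everything in it is an assumption for illustration: the function names, the field layout (a name, a template reference, and a list of name/value answers), and the template and variable names in the usage note are hypothetical, not taken from the iControl documentation.

```python
import json


def build_iapp_service_request(name: str, template: str, variables: dict) -> dict:
    """Build the body of a single, hypothetical 'create application service' call."""
    return {
        "name": name,
        "template": template,
        # Each customer-specific answer becomes one name/value pair;
        # sorting keeps the output stable between runs.
        "variables": [
            {"name": key, "value": str(value)}
            for key, value in sorted(variables.items())
        ],
    }


def serialize(body: dict) -> str:
    """Render the request body as JSON, ready to hand to an HTTP client."""
    return json.dumps(body, indent=2)
```

A provisioning system would call `build_iapp_service_request("customer42_web", "example_http_template", {"vip_addr": "192.0.2.10", "vip_port": 443})` once per customer application and send the result in one request, rather than scripting each virtual server, pool and monitor separately.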