What is iWorkflow?

tl;dr - F5's iWorkflow is no longer a supported product and has been superseded by the Application Services 3 Extension (AS3). For more AS3 information, please see the Application Services 3 Extension Documentation.

Vocabulary

To understand iWorkflow we need to define a few terms that will show up in the product:

- Clouds - The connectors between BIG-IP ADC services and iWorkflow. Used by Service Templates to connect Tenants to iApps.
- Tenants - A permission-defined role within iWorkflow. Tenants map users and user groups to service templates through cloud assignments.
- iApp Templates - Application templates used in the deployment and management of multiple ADC features and objects. iApp templates deployed in iWorkflow are used to create one or more Service Templates.
- Service Templates - The customer-visible options of an iApp, available for deployment within a specific cloud connection.
- L4-L7 Services - The services deployed from service templates by the tenants of iWorkflow.

How It Works

iWorkflow consolidates BIG-IP environments into a REST API-accessible solution for your orchestration system. Access and services are managed through role-based access control (RBAC). Using iApps, orchestration providers deploy applications through iApp-defined service catalogs. iWorkflow maintains the connections to your cloud and on-premises BIG-IP infrastructure and manages the access that each business unit needs to successfully deploy one or more applications.

iWorkflow installs into your preferred virtual environment and connects via F5's iControl REST API to existing BIG-IP ADC services through one of the following:

- Existing L3 connections (Datacenter/AWS/Azure/3rd-party cloud providers)
- Cisco APIC
- VMware NSX
- BIG-IP Virtual Clustered Multiprocessing (vCMP)

iWorkflow administrators create a service catalog for their users to access through their orchestration providers or directly through the iWorkflow GUI. A high-level deployment workflow is shown below.
Using iControl REST to interface with BIG-IP and your orchestration providers, iWorkflow allows BIG-IP to participate in fast, Agile DevOps workflows. RBAC gives security, network, and development teams access to their own areas of administration, all controlled by iWorkflow. For further reading on how iWorkflow integrates into your existing infrastructure and development plans, please review the links below. If there is more you would like to see related to iWorkflow, please drop us a line and we'll be happy to assist.

- iWorkflow on DevCentral - The best repository for all things iWorkflow (Requires Login)
- iApp Template Development Tips and Techniques (Requires Login)
- iWorkflow 101 and 201 Series on YouTube (relax and learn to the sweet satin sounds of Nathan Pearce)

iWorkflow 201 (episode #01) - Introducing the iControl REST API

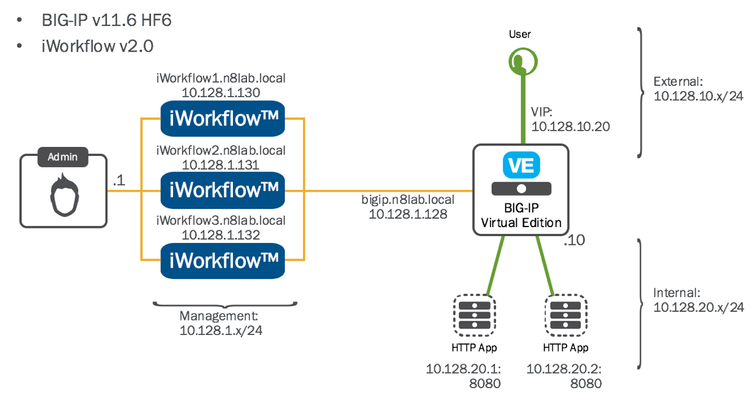

Welcome to the first episode in the iWorkflow 201 series. The iWorkflow 101 series focused on the GUI-driven operation of the iWorkflow platform, whereas the 201 series focuses on performing tasks via the iControl REST API. This first episode is dedicated to those not familiar with using APIs but wanting to make the leap from GUI or Command-Line Interface (CLI) management. Consequently, most of the episode focuses on introducing the tools you need, with some lessons on how to use them. Finally, at the end of the episode we'll make our first API call to the iWorkflow platform.

Background Reading

If this is your first time venturing away from the GUI/CLI, or you just want a refresher, I recommend you watch this great REST API introduction video posted by WebConcepts. In this video you'll see how you can communicate with popular online services, including Facebook, Google Maps, and Instagram, via their REST APIs:

It really is that straightforward. In short, you're using the internet, but not through the traditional web browser. Now that you understand the importance of a REST API, let's move on to the tools.

The toolset

Introducing POSTMAN! POSTMAN is a Google Chrome browser application. It's great for getting to know REST APIs as it requires no scripting language: it is a pure REST client. No, it is not the only REST tool out there. However, it's supported on a number of platforms and is very straightforward. For your convenience, here are the POSTMAN setup and introduction videos:

- POSTMAN Install
- POSTMAN API Response Viewer

iWorkflow iControl REST API Introduction

OK, you've got the tools installed and are now ready to go. So, let's move on to F5's RESTful API implementation, which is called iControl REST. You will notice on DevCentral that there is also a SOAP/XML version of iControl. We implemented this on BIG-IP devices back in 2001, before REST APIs were commonplace and before iWorkflow existed.
Hence, the BIG-IP device has both the iControl SOAP API and the iControl REST API. iWorkflow, on the other hand, only supports F5's iControl REST framework. Also worth noting, the iWorkflow platform communicates with BIG-IP devices using the BIG-IP iControl REST API. If you watched the video in iWorkflow 101 (episode #02) - Install and Setup, you will have noticed that the iWorkflow platform updates the BIG-IP REST Framework on older BIG-IP versions that require it. In that video I was communicating with BIG-IP version 11.6, so the REST Framework update was performed.

The lab environment

NOTE: While we will look at iWorkflow clusters in a future 201 REST API episode, they are not important in this episode, and all communication will be with iworkflow1.n8lab.local on 10.128.1.130.

First Contact

Just as in the 1996 feature-length Star Trek episode "Star Trek: First Contact", it's time to query the iWorkflow platform. Our first URI to call will be for a list of devices known to iWorkflow. The URI:

https://10.128.1.130/mgmt/shared/resolver/device-groups/cm-cloud-managed-devices/devices

Let's start by entering this request into POSTMAN. If you hit Send before providing the login credentials, you will receive the following:

This is to be expected. You can provide the login credentials under the Authorization tab, just below the request URL, as shown in the following diagram:

With those details added, run the query again and you should receive a JSON response like the following. In this diagram we can see properties of the iWorkflow platform:

Scrolling further down, you can also see the BIG-IP and the other two iWorkflow platforms from the iWorkflow cluster. Here it is in a video:

Summary

Congratulations! You're now talking to your iWorkflow platform via its iControl REST API.
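Outside of POSTMAN, the same first contact can be made from a script. The following is a minimal Python sketch using only the standard library. It assumes the credentials are sent as HTTP Basic authentication, as POSTMAN's Basic Auth option on the Authorization tab does; the function names and placeholder credentials are illustrative, not part of the iWorkflow API. Certificate verification is switched off only because the lab uses iWorkflow's self-signed certificate.

```python
import base64
import json
import ssl
import urllib.request

# Path used in this episode: the list of devices known to iWorkflow.
DEVICES_PATH = "/mgmt/shared/resolver/device-groups/cm-cloud-managed-devices/devices"


def build_devices_request(host, username, password):
    """Build the GET request, sending the credentials as HTTP Basic auth (assumption)."""
    credentials = base64.b64encode(f"{username}:{password}".encode()).decode()
    return urllib.request.Request(
        f"https://{host}{DEVICES_PATH}",
        method="GET",
        headers={"Authorization": f"Basic {credentials}"},
    )


def lab_ssl_context():
    """Lab only: accept iWorkflow's self-signed certificate. Never do this in production."""
    context = ssl.create_default_context()
    context.check_hostname = False  # must be disabled before relaxing verify_mode
    context.verify_mode = ssl.CERT_NONE
    return context


def list_devices(host, username, password):
    """Send the request and return the decoded JSON response body."""
    request = build_devices_request(host, username, password)
    with urllib.request.urlopen(request, context=lab_ssl_context()) as response:
        return json.load(response)
```

For example, `print(json.dumps(list_devices("10.128.1.130", "admin", "<password>"), indent=2))` should print the same device list seen in POSTMAN, pretty-printed. If the credentials are missing or wrong, `urlopen` raises `urllib.error.HTTPError` rather than returning the error body.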
If you cannot wait for the next episode, where we will deploy an application services policy via REST, you may want to start looking at the iWorkflow API Reference at the bottom of this page on DevCentral: https://devcentral.f5.com/s/wiki/iWorkflow.HomePage.ashx

UPDATE: if you want to grab a POSTMAN collection to import into your environment, visit my GitHub page here.

iWorkflow 101 (episode #1) - The Architecture Explained [End of Life]

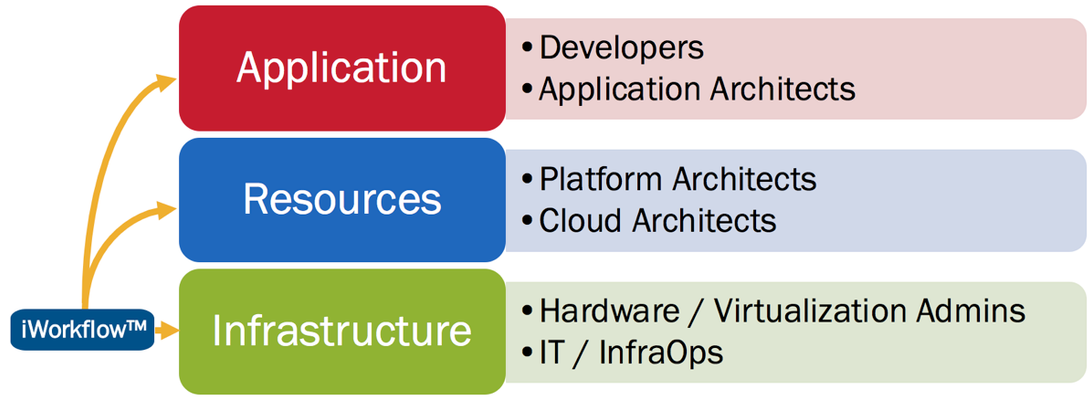

The F5 and Cisco APIC integration based on the device package and iWorkflow is End Of Life. The latest integration is based on the Cisco AppCenter app named ‘F5 ACI ServiceCenter’. Visit https://f5.com/cisco for updated information on the integration.

F5® iWorkflow™ accelerates the deployment of applications and services while reducing exposure to operational risk. The iWorkflow™ 101 series has been created to share common workflows for the purpose of accelerating your organization's journey towards integration, automation, and continuous deployment. In episode #1 of the iWorkflow™ 101 series we take a high-level look at the various themes and components of the iWorkflow™ platform to aid in understanding its operation.

Who's it for?

iWorkflow™ is for anyone interested in:

- the rapid deployment of performance, high-availability, and security policy
- the simplification of policy execution through abstraction of complexity
- extending continuous deployment practices to include scalability and security
- reducing exposure to operational risk

These points hold true whether you're a traditional infrastructure administrator managing F5 BIG-IPs directly, a platform architect or engineer responsible for presenting resources to business units, or an application architect or developer looking to rapidly deploy new apps and services as part of the continuous deployment pipeline while ensuring they remain fast, highly available, and secure.

Architectural Walkthrough

New themes introduced in this episode:

- Application Services
- Application Services Templates
- Services Template Catalogues
- Tenants
- Clouds
- Workflows

Application Services

If you are familiar with F5 BIG-IPs then you already know about Application Services. If not, they are the Layer 4-7 features and functions delivered by the BIG-IP application delivery controller.
Such application services include:

- High-availability monitoring and resilience
- Load balancing
- DNS services
- DDoS mitigation
- Application/data security
- Access control
- Scaling and optimization
- Context switching / app routing

and much more. For more information on application delivery controllers and the services they provide, please visit: F5® BIG-IP

Application Services Templates

An application services template defines a configuration while accepting deployment-specific information at the time of execution. At this stage it's important to introduce the Declarative Model, and a simple way to explain it is to talk about McDonald's...

When you enter a McDonald's restaurant you are presented with a range of meal options. Let's say you choose a Big Mac meal, which includes fries and a soft drink. The "meal" itself is the template: you get a Big Mac, fries, and a soft drink. Sure, you can choose which soft drink you get (cola, lemonade, water), and you can even select a dipping sauce (ketchup, honey mustard, sweet chili, etc.), but you are not required to define the Big Mac, nor are you able to order items outside of the meal options: try asking for a pizza instead of fries! The declarative model provides an abstraction over the meal creation process, relieving the customer of much of the complexity.

In contrast to the declarative model we have the imperative model. Using the McDonald's scenario again, an imperative model would require that you order every single ingredient individually, in addition to explaining how they are prepared and how they are put together to make the meal.
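To make the declarative model concrete in code, here is a minimal, hypothetical Python sketch of a services template: the template author fixes most of the configuration and exposes only a few options, and any request outside those options is rejected, much like asking for pizza instead of fries. The class, option names, and configuration keys are illustrative assumptions, not iApp syntax or an iWorkflow API.

```python
from dataclasses import dataclass, field


@dataclass
class ServiceTemplate:
    """Hypothetical declarative services template.

    The template author fixes the configuration; the consumer may only supply
    values for the options the author chose to expose.
    """
    name: str
    fixed_config: dict
    # Exposed option name -> set of allowed values, or None to accept any value.
    options: dict = field(default_factory=dict)

    def render(self, **choices):
        """Validate the consumer's choices and merge them into the fixed configuration."""
        for key, value in choices.items():
            if key not in self.options:
                # Not on the menu: the consumer cannot change what the author fixed.
                raise ValueError(f"option {key!r} is not exposed by {self.name}")
            allowed = self.options[key]
            if allowed is not None and value not in allowed:
                raise ValueError(f"{key}={value!r} is not an allowed value")
        return {**self.fixed_config, **choices}


# Hypothetical HTTP template: the monitor and load-balancing method are decided
# by the template author; the consumer only supplies address, members, and port.
http_template = ServiceTemplate(
    name="http_basic",
    fixed_config={"monitor": "http", "lb_method": "round-robin"},
    options={"virtual_address": None, "pool_members": None, "port": {80, 443}},
)
```

Calling `http_template.render(virtual_address="10.0.0.10", pool_members=["10.0.1.1"], port=80)` returns the fixed settings plus those three values, while `http_template.render(lb_method="least-connections")` raises `ValueError`. An imperative equivalent would instead have the consumer specify every setting, monitor and load-balancing method included.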
Back to Services Templates: the declarative model allows infrastructure administrators and architects to define sets of common deployment configurations and expose such templates to teams that may not be skilled in application delivery policy. Organizations can then realize the benefits of advanced functionality while avoiding lengthy deployment delays, as such an architecture eliminates the need for business units to become experts in every technology. Instead, approved, repeatable policies can be deployed directly by operations staff, or by 3rd party orchestration systems, at the time of application deployment. In 2011, F5® released iApps (F5's application services templates) to eliminate much of the manual process and repetition involved in configuring the BIG-IP application delivery controller. For more information on the benefits of services templates, please read: "Why you need service templates". For technical details on F5 iApps, navigate here.

Services Template Catalogue

A Services Template Catalogue presents the application services templates to the deployment staff. The deployment could be performed manually by an employee via the iWorkflow™ GUI, or by 3rd party systems communicating with the iWorkflow™ iControl REST API. In either scenario, both the administrator and the 3rd party system interface with the Services Template Catalogue.

Connectors

Connectors provide the communication between iWorkflow and other systems. For example, the local BIG-IP connector provides a tenant with destination BIG-IPs upon which to deploy policy. The Cisco APIC and VMware NSX connectors provide for the deployment of BIG-IP application services within Cisco ACI and VMware NSX environments. Lastly, the Integration SDK allows organizations to build their own integrations and functionality.

Tenants

A services template catalogue, and the destination devices and environments, are presented through the iWorkflow™ Tenant feature.
Consider a Tenant as a grouping of Application Services Templates, Connectors, Devices, and the Users and Groups with the appropriate permissions to deploy application services upon them. Such a grouping vastly simplifies the management of fine-grained access control, while limiting the user's exposure to the complexity of the environment.

Workflows

In the context of iWorkflow™, workflows are the end-to-end execution of a system's or operator's intent to deploy policy. In the case of an iWorkflow Tenant, the execution starts directly with iWorkflow™, via the GUI or the iWorkflow™ REST API. However, in the case of a 3rd party system, the workflow starts within that system, which executes the application services template deployment through iWorkflow™.

Workflow walkthrough

Stitching these themes together, following is a step-by-step walkthrough of a simple workflow: When talking about workflows we start with the intent and work through to the executed policy. This intent could be that of a 3rd party system, or of an iWorkflow Operator manually deploying an iApp. With that in mind, referring to the numbered diagram above, let's now walk through the various elements of a workflow:

To the administrators and 3rd party systems from which iWorkflow™ takes instruction, there are two interfaces: a) the iWorkflow™ GUI, and b) the iWorkflow™ iControl REST API. The iControl REST API may be used by 3rd party systems or by sys-admins using various scripting options or desktop REST API clients. Detailed examples of using each will be provided in future iWorkflow 101 episodes found here on DevCentral: iWorkflow Home

The "Service Template Catalogue" presents the local, administrator-defined services templates that have been permitted for the tenant. Exactly which options are configurable at the time of deployment, within each template, is predetermined by the iWorkflow™ administrator.
Consequently, the simplicity or complexity of each template, and how each is implemented per iWorkflow tenant, is extensively configurable to match an organization's requirements.

The "Services Templates" themselves are the F5 iApps presented via the "Service Template Catalogue". How much of their functionality is exposed via the Service Template Catalogue is configurable, which simplifies how administrators accommodate the varying capabilities of the iWorkflow Operators, be they 3rd party systems or sys-admins.

The iWorkflow™ platform itself hosts the Services Templates, the presentation of the Service Template Catalogue, and the iWorkflow™ Connectors, while also managing the multi-tenancy, role-based access control, and which elements of which templates should be exposed where and to whom. It's quite amazing, really!

iWorkflow™ communicates with the BIG-IP ADCs via the BIG-IP iControl REST API.

The F5 BIG-IP Application Delivery Controllers (ADCs) themselves: these devices, physical or virtual, consume the performance, availability, and security policies that were defined by the services templates, and enforce that desired behavior.

The Provider / Tenant model

There are two distinct iWorkflow™ personas: the iWorkflow Administrator and the iWorkflow Tenant. The iWorkflow™ Administrator creates and manages the various objects of the iWorkflow™ platform that are required to execute a workflow. Once configured, these objects are provided to the tenants. Such objects include:

- BIG-IP Devices
- Connectors
- Services Template Catalogues
- Users/Groups
- Tenants
- Licenses

The iWorkflow™ Tenant is not able to create, delete, or modify these objects. The role of the Tenant is to execute the deployment of performance, high-availability, and security policies via the service template catalogue, as configured/permitted by the iWorkflow™ Administrator. This is typically referred to as a Provider/Tenant model.
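The Provider/Tenant split can be modelled in a few lines. This is a hypothetical Python sketch of the permission rules described above, not how iWorkflow implements RBAC internally: the administrator manages platform objects, while a tenant user may only deploy catalogue templates through connectors the administrator assigned to their tenant. The names `myTenant1`, `myConn1`, and `f5.http_ServiceTypeA` are example values.

```python
from dataclasses import dataclass, field
from typing import Optional


@dataclass
class Tenant:
    name: str
    # Both sets are populated by the iWorkflow administrator (the provider).
    connectors: set = field(default_factory=set)
    templates: set = field(default_factory=set)


@dataclass
class User:
    name: str
    is_admin: bool = False
    tenant: Optional[Tenant] = None


def can_manage_objects(user):
    """Only the administrator creates, deletes, or modifies devices, connectors,
    catalogue entries, users/groups, tenants, and licenses."""
    return user.is_admin


def can_deploy(user, template, connector):
    """A tenant user may deploy only a template from their tenant's catalogue,
    and only through a connector assigned to that tenant."""
    if user.tenant is None:
        return False
    return template in user.tenant.templates and connector in user.tenant.connectors


# Example objects an administrator might have configured.
my_tenant = Tenant("myTenant1", connectors={"myConn1"}, templates={"f5.http_ServiceTypeA"})
user1 = User("user1", tenant=my_tenant)
admin = User("admin", is_admin=True)
```

Here `can_deploy(user1, "f5.http_ServiceTypeA", "myConn1")` is `True`, while `can_manage_objects(user1)` is `False`. In this model the administrator account has no tenant, so `can_deploy` returns `False` for it; that reflects the split of duties described above rather than a documented iWorkflow rule.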
iWorkflow Administrator

As shown in the diagram below (top right-hand corner), the "admin" user is logged in, and that user is an iWorkflow™ Administrator. An administrator has the ability to add BIG-IP Devices, create Connectors, add Catalogue entries, and more.

iWorkflow Tenant

In this example the user "user1" is logged in. Note that it no longer states that an "Administrator" is logged in, as per the previous image (in the top right-hand corner). In this example, the iWorkflow Tenant has been configured with access to the local Connector "myConn1". "user1" does not have the ability to create, delete, or modify Connectors; it can only deploy predetermined policy to BIG-IP Devices via the "myConn1" connector.

Summary

Using application services templates, organizations can eliminate repetitive effort during deployments. This enables them to accelerate time to market for new applications and services, reduce exposure to operational risk, and enable infrastructure consumers (the business) to self-serve: deliver performance, high-availability, and security policy at speed. For more information, return to the DevCentral iWorkflow Homepage.

F5 Python SDK and Kubernetes

It's been almost a year since my original article that discussed the basics of using the Docker API to configure BIG-IP pool members. This article dives into more detail on using the F5 Python SDK to dynamically update the BIG-IP, using Kubernetes as an example. It is meant as a guide for those interested in the internals of how to use the BIG-IP iControl REST API. Those interested in an integrated solution with Kubernetes and F5 BIG-IP should take a look at Red Hat OpenShift, which provides a platform for hosting containers that integrates with F5 BIG-IP. There are also community efforts led by F5 customers to integrate their BIG-IP with Kubernetes (using Go instead of the Python used in this example). If you're interested in learning more about F5 and containers, please contact your local F5 account team.

Kubernetes Service Load Balancer / Ingress Router

For the following example we're using Kubernetes as the example infrastructure. Kubernetes provides a structure for hosting containers (Docker / rkt). The challenge with using Kubernetes and BIG-IP is that services tend to be ephemeral in Kubernetes, making it hard to use traditional means (GUI/TMSH) of configuring pool members and virtual servers. Kubernetes also has the notion of a "service load balancer" and an "ingress router" that can be configured from within Kubernetes. The example code fetches data from Kubernetes about its "service load balancer" and "ingress router" and uses it to configure the BIG-IP.
The pseudocode of my example is the following:

```python
# Step 1: Connect to BIG-IP
bigip_client = BigIPManagement()
# Step 2: Connect to Kubernetes
kubernetes_client = KubernetesManagement()
# Step 3: Get everything I want from Kubernetes
everything_i_want = kubernetes_client.get_everything_you_want()
# Step 4: Update BIG-IP with everything you want
bigip_client.update_or_create_everything(everything_i_want)
```

The actual code is available at: https://github.com/f5devcentral/f5-icontrol-codeshare-python/tree/master/kubernetes-example

Step 1: Connecting to BIG-IP

There are numerous ways to integrate your infrastructure with BIG-IP. The most basic method is to write directly to the iControl REST API using your preferred programming platform. This works well and is documented on DevCentral. There are also language-specific bindings/libraries. Here are a few examples:

- Go: go-bigip (community)
- Node.JS: icontrol (community)
- Python: f5-sdk (F5 contributed)

You are not limited to using code for automation. If you prefer to use Configuration Management tools, some examples of F5 integrations are:

- Puppet (PuppetLabs supported)
- Chef (community)
- Ansible (F5 contributed)
- vRealize Orchestrator (bluemedora supported)
- Heat (F5 supported)
- CloudFormation (F5 contributed)

Examples also exist for integrating with your preferred SDN toolkit:

- OpenStack
- Cisco ACI
- VMware NSX

Each method has its pros and cons. For the use case of connecting to Kubernetes I opted for the Python SDK because I know Python and it's easier for me to program (I did try to do this in Go and I gave up). In general I'd recommend using the automation tool that works best for you. Programmers may prefer the native iControl REST API, System Engineers may prefer CM tools like Puppet, and Network Engineers will use the infrastructure tools that are made available to them. You can also combine tools as long as you have clear lines of separation (i.e.
use partitions or naming conventions to keep things sane).

Step 2: Connecting to your external data source

Generally you're interested in automation because you have data in one place that you would like to synchronize to another. You might have configurations that live in an Excel spreadsheet, a CSV/TXT/YAML/JSON file, a MySQL database, etcd, etc. For my example I used two libraries to connect to Kubernetes:

1. Pykube: Python library that connects to the Kubernetes admin API
2. Python-etcd: Python library that connects to the backend data store that flannel uses for storing network data

Step 3: Get everything I want from Kubernetes

Using these libraries, I fetch information from Kubernetes about the "service load balancers" that have been defined (virtual servers / pool members) and the ingress routers (LTM policies / L7 content routing). The second library is used for a more advanced example that uses the BIG-IP SDN capability to connect to the VXLAN network that Kubernetes can use (look at the code for details).

Step 4: Update BIG-IP with everything you want

Once you have all the data you need from Kubernetes, you need to decide what you want to update. In the example, the code creates/updates virtual servers, pool members, wide IPs, and routes. Depending on the use case, you may want to update only a subset of these. In an existing deployment you may want to update only a designated LTM pool and not modify any other objects. You may also want to choose between different ways of making the update. Customers that have developed their own iApps may want to leverage those iApps to help ensure a more consistent deployment.

iWorkflow: Keeping the peace

Ideally the application, system, security, and network teams will have a trust model that enables an application developer to promote a change in their application that will immediately propagate to production.
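Step 4 and the RBAC example that follows both come down to reconciling the members of one designated pool with what Kubernetes reports. A minimal Python sketch of that reconciliation is below. It only computes the plan; the `"address:port"` member format and the function name are assumptions for illustration, and applying the plan to the BIG-IP (for example through the f5-sdk) is deliberately left out.

```python
def plan_pool_update(desired_members, current_members):
    """Compute the changes that make one BIG-IP pool match Kubernetes.

    desired_members: members reported by Kubernetes, e.g. "10.244.1.5:8080"
    current_members: members currently configured in the designated pool
    Returns the members to add and to remove, sorted for stable output.
    Members present in both lists are left alone, so once the plan has been
    applied, re-running the sync yields an empty plan.
    """
    desired = set(desired_members)
    current = set(current_members)
    return {
        "add": sorted(desired - current),
        "remove": sorted(current - desired),
    }
```

For example, `plan_pool_update(["10.244.1.5:8080", "10.244.2.7:8080"], ["10.244.1.5:8080", "10.244.3.9:8080"])` returns `{'add': ['10.244.2.7:8080'], 'remove': ['10.244.3.9:8080']}`. Computing a plan first keeps the automation's writes limited to the one pool it owns, which is the kind of clear line of separation mentioned in Step 1.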
In cases where RBAC controls are required to limit actions (e.g. an app developer can only add/remove pool members in the pool named "app_owner_pool"), iWorkflow can be used as a REST proxy to the BIG-IP and apply resource-level RBAC. This allows for separate automation identities ("kubernetes_automation_agent_userid") and automation roles ("kubernetes_only_allow_pool_update_role").

Go for automation

Again, this is a Python example, but the same basic flow will exist for many use cases. For a deep dive into Python code that also handles the provisioning of "bare metal" (VE/vCMP) hosts, take a look at the f5-openstack-agent, which covers some of the steps commonly involved in managing your BIG-IP. This has been a very imperative (step-by-step) example of managing your BIG-IP, but take a look at Chef, Puppet, Ansible, Heat, and CloudFormation templates for more declarative approaches.

F5 iWorkflow and Cisco ACI: True application-centric approach to application deployment (End Of Life)

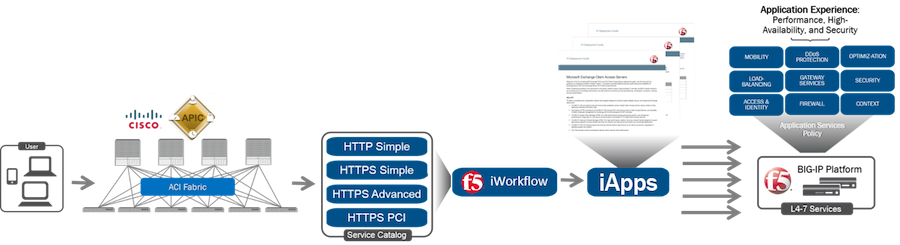

The F5 and Cisco APIC integration based on the device package and iWorkflow is End Of Life. The latest integration is based on the Cisco AppCenter app named ‘F5 ACI ServiceCenter’. Visit https://f5.com/cisco for updated information on the integration.

On June 15th, 2016, F5 released iWorkflow version 2.0, a virtual appliance platform designed to deploy applications with greater agility and consistency. The F5 iWorkflow Cisco APIC cloud connector provides a conduit allowing APIC to deploy F5 iApps on BIG-IP. By leveraging iWorkflow, administrators can customize application templates and expose them to Cisco APIC through the iWorkflow dynamic device package. F5 iWorkflow also supports the Cisco APIC Chassis and Device Manager features. Administrators can now build Cisco ACI L4-L7 devices using a pair of F5 BIG-IP vCMP guests in HA with an iWorkflow HA cluster. The following 2-part video demo shows:

(1) How to deploy an iApps virtual server in BIG-IP through APIC and iWorkflow
(2) How to build Cisco ACI L4-L7 devices using F5 vCMP guests in HA and an iWorkflow HA cluster

The F5 iWorkflow, BIG-IP, and Cisco APIC software compatibility matrix can be found at: https://support.f5.com/kb/en-us/solutions/public/k/11/sol11198324.html

Check out the iWorkflow DevCentral page for more iWorkflow info: https://devcentral.f5.com/s/wiki/iworkflow.homepage.ashx

You can download iWorkflow from https://downloads.f5.com

iWorkflow 201 (episode #03) - Calling REST from scripting languages (Javascript and Python)

NOTE: there's a video at the end if you don't want to read this!

Thus far, we've had a lot of focus on POSTMAN collections. For those who haven't been following the series, POSTMAN is a great REST client that we've been using to demonstrate the F5 iWorkflow REST API. Take a look at the first two iWorkflow 201 articles to understand more of that:

- iWorkflow 201 (episode #01) - Introducing the REST API
- iWorkflow 201 (episode #02) - Deploying a services template via the REST API

Now that you've had some time to familiarize yourself with REST, it's time to start communicating with iWorkflow via some popular scripting languages. If you are new to REST, and even newer to scripting, then here's a trick that will save you some headaches. Yes, I'm talking about POSTMAN again... In POSTMAN, once you've worked out the REST transaction you want to perform, you can click the "Generate Code" button near the top right of the screen. Here's an example using the Auth Token transaction:

Step 1 - With the desired transaction open, click "Generate Code".

Step 2 - In the window that appears, select the language in which you want to execute the REST transaction.

Step 3 - Copy that code and start scripting! Or hand it to someone in your organization who's looking to self-serve their L4 - L7 service templates via REST.

NOTE: I do not own shares in POSTMAN. It's just really cool, and free.

Side note - Need to brush up on scripting? Whether you've scripted before and just want a refresher, or you're starting as a beginner and are eager to dive right in, there are great, free resources available to you. I've heard good things about the online courses by Codecademy.com, where there are free courses on Javascript and Python.
Once you've completed those, take a look at the Codecademy.com REST courses, where you'll learn to communicate with REST APIs using real API services from YouTube, NPR, and more:

- https://www.codecademy.com/en/tracks/youtube
- https://www.codecademy.com/en/tracks/npr

OK, let's get scripting!

Scripting Languages

While I've put Javascript first and Python second, this is not an F5 prioritization, but, yes, I prefer Javascript :)

For both examples we will a) request a token, b) modify the Auth Token timeout, and c) list the Tenant's L4 - L7 Service Templates. I've chosen these three transactions as they provide examples of POST, PATCH, and GET.

As covered in previous articles, iWorkflow employs a Provider/Tenant model. The Provider (administrator role) configures the iWorkflow platform. The Tenant deploys services using the iWorkflow platform. All of the examples in this article will be Tenant-based functions.

Javascript

Either you were a Javascript pro at the start of the article, or you've just returned from a few days at Codecademy.com. Whichever of the two, we'll now start with an Auth Token request to the iWorkflow REST interface. In this exercise I'll be using Node v4.4.0 running on my MacBook. Node is a very popular Javascript runtime.

NOTE: in my lab I am using the default, self-signed SSL certificates that are generated during the iWorkflow install and setup. By default (and rightly so, for security reasons) Node will barf at the self-signed SSL cert.
To circumvent this security behavior I have added the following line to the top of my Javascript file:

```javascript
process.env.NODE_TLS_REJECT_UNAUTHORIZED = "0";
```

Part 1 - Request an Auth Token

Below is the full script, generated by POSTMAN with the addition of the SSL cert-check bypass, that I saved as "Javascript-Request_Auth_Token.js":

```javascript
process.env.NODE_TLS_REJECT_UNAUTHORIZED = "0";
var http = require("https");
var options = {
  "method": "POST",
  "hostname": "10.128.1.130",
  "port": null,
  "path": "/mgmt/shared/authn/login",
  "headers": {
    "content-type": "application/json",
    "cache-control": "no-cache"
  }
};
var req = http.request(options, function (res) {
  var chunks = [];
  res.on("data", function (chunk) {
    chunks.push(chunk);
  });
  res.on("end", function () {
    var body = Buffer.concat(chunks);
    console.log(body.toString());
  });
});
req.write(JSON.stringify({ username: 'User1', password: 'admin', loginProvidername: 'tmos' }));
req.end();
```

Most of the contents of "var options {}" will look familiar to you already. We also have the JSON payload that will be sent as part of the POST transaction (NOTE: username, password, and loginProvidername). All of the above were used in iWorkflow 201 episodes #01 and #02.
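Since this episode also covers Python, here is a minimal sketch of the same Part 1 login request using only Python's standard library. Two assumptions to flag: the payload uses the `loginProviderName` spelling that appears in the response shown next, and the default provider `"local"` is taken from that response's `loginProviderName` value rather than the script's `'tmos'`; adjust both to your environment. The function names are illustrative.

```python
import json
import ssl
import urllib.request

LOGIN_PATH = "/mgmt/shared/authn/login"


def build_login_request(host, username, password, login_provider="local"):
    """Build the POST that requests an Auth Token (same endpoint as the Node script)."""
    payload = {
        "username": username,
        "password": password,
        "loginProviderName": login_provider,  # assumption: spelling and value, see lead-in
    }
    return urllib.request.Request(
        f"https://{host}{LOGIN_PATH}",
        data=json.dumps(payload).encode(),
        method="POST",
        headers={"Content-Type": "application/json"},
    )


def extract_token(response_body):
    """Return the token string from the login response JSON (str or bytes)."""
    return json.loads(response_body)["token"]["token"]


def request_token(host, username, password):
    """Send the login request and return the Auth Token.

    Lab only: certificate checks are skipped because of the self-signed
    certificate, the Python counterpart of NODE_TLS_REJECT_UNAUTHORIZED = "0".
    """
    context = ssl.create_default_context()
    context.check_hostname = False
    context.verify_mode = ssl.CERT_NONE
    request = build_login_request(host, username, password)
    with urllib.request.urlopen(request, context=context) as response:
        return extract_token(response.read())
```

Usage would be along the lines of `token = request_token("10.128.1.130", "User1", "admin")`; the returned string is what goes into the `x-f5-auth-token` header in Parts 2 and 3.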
To execute the transaction, type:

```
node ./Javascript-Request_Auth_Token.js
```

Here are the results from this transaction:

```
$ node ./Javascript-Request_Auth_Token.js
{"username":"User1","loginReference":{"link":"https://localhost/mgmt/cm/system/authn/providers/local/login"},"loginProviderName":"local","token":{"token":"3YRVEXFR7UXM2ZMI3MEF5URVJV","name":"3YRVEXFR7UXM2ZMI3MEF5URVJV","userName":"User1","authProviderName":"local","user":{"link":"https://localhost/mgmt/shared/authz/users/User1"},"groupReferences":[{"link":"https://localhost/mgmt/shared/authn/providers/local/groups/05a36de3-4c85-4599-bfed-bfa45649df85"}],"timeout":1200,"startTime":"2016-08-10T17:38:40.556-0700","address":"10.128.1.1","partition":"[All]","generation":1,"lastUpdateMicros":1470875920556194,"expirationMicros":1470877120556000,"kind":"shared:authz:tokens:authtokenitemstate","selfLink":"https://localhost/mgmt/shared/authz/tokens/3YRVEXFR7UXM2ZMI3MEF5URVJV"},"generation":0,"lastUpdateMicros":0}
```

You'll note it's not presented as prettily as in POSTMAN, but it is the same data! Next action: modify the Auth Token resource.

Part 2 - Modify the Auth Token resource (timeout)

Next, we are going to change the timeout value of the Auth Token resource. While we do not require an extended timeout, it is an example of PATCH(ing) a resource.
Here is the code to modify the Auth Token, saved as "Javascript-Modify_Auth_Token_Timeout.js" (but something is missing from it):

```javascript
process.env.NODE_TLS_REJECT_UNAUTHORIZED = "0";
var http = require("https");
var options = {
  "method": "PATCH",
  "hostname": "10.128.1.130",
  "port": null,
  "path": "/mgmt/shared/authz/tokens/[Auth Token]",
  "headers": {
    "x-f5-auth-token": "[Auth Token]",
    "content-type": "application/json",
    "cache-control": "no-cache"
  }
};
var req = http.request(options, function (res) {
  var chunks = [];
  res.on("data", function (chunk) {
    chunks.push(chunk);
  });
  res.on("end", function () {
    var body = Buffer.concat(chunks);
    console.log(body.toString());
  });
});
req.write(JSON.stringify({ timeout: '36000' }));
req.end();
```

For this to work we need to enter the Auth Token resource that we are modifying. You will need to replace [Auth Token] on lines 7 and 9 with the value of the Auth Token returned from our first transaction in "Part 1 - Request an Auth Token". My Auth Token from the original transaction is "3YRVEXFR7UXM2ZMI3MEF5URVJV", so the script will now look like the following (note lines 7 and 9):

```javascript
process.env.NODE_TLS_REJECT_UNAUTHORIZED = "0";
var http = require("https");
var options = {
  "method": "PATCH",
  "hostname": "10.128.1.130",
  "port": null,
  "path": "/mgmt/shared/authz/tokens/3YRVEXFR7UXM2ZMI3MEF5URVJV",
  "headers": {
    "x-f5-auth-token": "3YRVEXFR7UXM2ZMI3MEF5URVJV",
    "content-type": "application/json",
    "cache-control": "no-cache"
  }
};
var req = http.request(options, function (res) {
  var chunks = [];
  res.on("data", function (chunk) {
    chunks.push(chunk);
  });
  res.on("end", function () {
    var body = Buffer.concat(chunks);
    console.log(body.toString());
  });
});
req.write(JSON.stringify({ timeout: '36000' }));
req.end();
```

OK, now we are ready to execute. As per the second-to-last line in this script, the value of timeout will be increased from the default of 1200 seconds to the new value of 36000 seconds.
The result:

```
$ node Javascript-Modify_Auth_Token_Timeout.js
{"token":"2NTTOAJBHEUWMFTLZ3KQY5R6SP","name":"3YRVEXFR7UXM2ZMI3MEF5URVJV","userName":"User1","authProviderName":"local","user":{"link":"https://localhost/mgmt/shared/authz/users/User1"},"groupReferences":[{"link":"https://localhost/mgmt/shared/authn/providers/local/groups/05a36de3-4c85-4599-bfed-bfa45649df85"}],"timeout":36000,"startTime":"2016-08-11T08:29:32.307-0700","address":"10.128.1.1","partition":"[All]","generation":3,"lastUpdateMicros":1470929566962077,"expirationMicros":1470965372307000,"kind":"shared:authz:tokens:authtokenitemstate","selfLink":"https://localhost/mgmt/shared/authz/tokens/3YRVEXFR7UXM2ZMI3MEF5URVJV"}
```

In the response we now see "timeout":36000. So far we've performed the two more complicated transactions, a POST and a PATCH. Now we'll end with a GET.

Part 3 - List the Tenant's L4 - L7 Service Templates

For this final transaction, let's use our Auth Token to obtain a list of the L4 - L7 Service Templates available to User1. As this is a GET transaction there is no JSON payload being sent (no req.write at the end of the script). Don't forget to edit the script and replace [Auth Token] with the Auth Token you generated earlier for the "x-f5-auth-token" header on line 9. Failing to do this will result in a "401 - Unauthorized" response.

```
$ cat Javascript-List_L4-L7_Service_Templates.js
process.env.NODE_TLS_REJECT_UNAUTHORIZED = "0";
var http = require("https");
var options = {
  "method": "GET",
  "hostname": "10.128.1.130",
  "port": null,
  "path": "/mgmt/cm/cloud/tenant/templates/iapp/",
  "headers": {
    "x-f5-auth-token": "[Auth Token]",
    "cache-control": "no-cache"
  }
};
var req = http.request(options, function (res) {
  var chunks = [];
  res.on("data", function (chunk) {
    chunks.push(chunk);
  });
  res.on("end", function () {
    var body = Buffer.concat(chunks);
    console.log(body.toString());
  });
});
req.end();
```

And here's the result.
I have one L4 - L7 Service Template, named "f5.http_ServiceTypeA":

```
$ node Javascript-List_L4-L7_Service_Templates.js
{"items":[{"name":"f5.http_ServiceTypeA","sections":[{"description":"Virtual Server and Pools","displayName":"pool"}],"vars":[{"name":"pool__addr","isRequired":true,"defaultValue":"","providerType":"NODE","serverTier":"Servers","description":"What IP address do you want to use for the virtual server?","displayName":"addr","section":"pool","validator":"IpAddress"}],"tables":[{"name":"pool__hosts","isRequired":false,"description":"What FQDNs will clients use to access the servers?","displayName":"hosts","section":"pool","columns":[{"name":"name","isRequired":true,"defaultValue":"","description":"Host","validator":"FQDN"}]},{"name":"pool__members","serverTier":"Servers","isRequired":false,"description":"Which web servers should be included in this pool?","displayName":"members","section":"pool","columns":[{"name":"addr","isRequired":false,"defaultValue":"","providerType":"NODE","description":"Node/IP address","validator":"IpAddress"},{"name":"port","isRequired":true,"defaultValue":"8080","providerType":"PORT","description":"Port","validator":"PortNumber"}]}],"properties":[{"id":"cloudConnectorReference","isRequired":true,"value":"https://localhost/mgmt/cm/cloud/tenants/myTenant1/connectors/bea388b8-46f8-4363-9f89-d8920ea8931f"}],"generation":2,"lastUpdateMicros":1468991604443417,"kind":"cm:cloud:tenant:templates:iapp:tenantiapptemplateworkerstate","selfLink":"https://localhost/mgmt/cm/cloud/tenant/templates/iapp/f5.http_ServiceTypeA"}],"generation":0,"lastUpdateMicros":0}
```

Pro Tips

Tip 1 - Passing options via the command line

Programmers are allergic to inefficiencies. Some say it causes them physical pain! Having to edit your scripts all the time to update IP addresses, Auth Tokens, or other data can get a little tedious.
So, here is how we pass that information to the script from the command line and then place it in the right parts of the script using variables. I'll use the "Javascript-Modify_Auth_Token_Timeout.js" script as an example. At the beginning of the script we're going to add the following lines (between process.env… and var http…):

```
process.env.NODE_TLS_REJECT_UNAUTHORIZED = "0";
var myArgs = process.argv.slice(2);
if (myArgs.length < 3) {
  console.log("Usage: Host Token Timeout");
  process.exit(1);
}
var http = require("https");
```

Without going into laborious detail, this creates an array which stores the arguments you've provided (process.argv also holds the node binary and the script path, which slice(2) drops). The if condition checks that you have provided three arguments. If there are fewer than three, it prints a usage statement saying that you must provide the Host, Auth Token, and Timeout, and then exits. Then we modify the following four lines in the script to use the arguments that we passed in:

```
"hostname": myArgs[0],
"path": "/mgmt/shared/authz/tokens/" + myArgs[1],
"x-f5-auth-token": myArgs[1],
req.write(JSON.stringify({ timeout: myArgs[2] }));
```

The number in square brackets marks the position in the array (list). Yes, computers start from zero!
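In Python, the standard argparse module can perform the same check as the if statement above and generate the usage message for you. A sketch, keeping the positional order Host, Token, Timeout used by myArgs[0] to myArgs[2]; the parse_cli name and the help strings are my own choices, not part of the original scripts:

```python
import argparse


def parse_cli(argv=None):
    """Parse Host, Token and Timeout in the same order as myArgs[0..2]."""
    parser = argparse.ArgumentParser(
        description="PATCH the timeout of an iWorkflow Auth Token")
    parser.add_argument("host", help="iWorkflow management address")
    parser.add_argument("token", help="Auth Token to modify (also used to authenticate)")
    parser.add_argument("timeout", type=int, help="new timeout in seconds")
    # If a positional argument is missing, argparse prints a usage
    # message to stderr and exits with status 2.
    return parser.parse_args(argv)


# argv is passed explicitly here; with no argument, sys.argv[1:] is used.
args = parse_cli(["10.128.1.130", "3YRVEXFR7UXM2ZMI3MEF5URVJV", "36000"])
print(args.host, args.token, args.timeout)
```

Unlike the hand-written check, argparse also converts the timeout to an integer and rejects a non-numeric value.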
So, now the whole script looks like this:

```
$ cat ./Javascript-Modify_Auth_Token_Timeout.js
#!/usr/bin/env node
process.env.NODE_TLS_REJECT_UNAUTHORIZED = "0"; // lab only: accept self-signed certificates
var myArgs = process.argv.slice(2);
if (myArgs.length < 3) {
  console.log("Usage: Host Token Timeout");
  process.exit(1);
}
var http = require("https");
var options = {
  "method": "PATCH",
  "hostname": myArgs[0],
  "port": null,
  "path": "/mgmt/shared/authz/tokens/" + myArgs[1],
  "headers": {
    "x-f5-auth-token": myArgs[1],
    "content-type": "application/json",
    "cache-control": "no-cache"
  }
};
var req = http.request(options, function (res) {
  var chunks = [];
  res.on("data", function (chunk) {
    chunks.push(chunk);
  });
  res.on("end", function () {
    var body = Buffer.concat(chunks);
    console.log(body.toString());
  });
});
req.write(JSON.stringify({ timeout: myArgs[2] }));
req.end();
```

And it is executed like this:

```
$ node ./Javascript-Modify_Auth_Token_Timeout.js 10.128.1.130 3YRVEXFR7UXM2ZMI3MEF5URVJV 36000
```

Tip 2 - Make your script executable

More on the theme of efficiency, you can eliminate the need to type "node" before the script name. This requires two things: 1) tell the command shell which runtime to execute the script with, and 2) change the attributes of the file to make it executable.

Step 1: add this as the first line in the script:

```
#!/usr/bin/env node
```

Step 2: change the attributes of the file so that it is executable (on OS X 10.11.6; the same applies to *nix):

```
$ chmod u+x Javascript-Modify_Auth_Token_Timeout.js
```

Now you can execute the script like this (without the preceding 'node'):

```
$ ./Javascript-Modify_Auth_Token_Timeout.js 10.128.1.130 H43I46IBZKEIDQIKZRREN3VITS 36000
```

Javascript Summary

We've reviewed the three most common types of transaction you will perform against the iWorkflow REST API: GET, POST, and PATCH.
Versions of each of the scripts above, with support for command line arguments, have been posted to GitHub and can be found here:

Get Auth Token: https://github.com/npearce/F5_iWorkflow_REST_API_Commands/blob/master/Javascript-Request_Auth_Token.js
Modify Auth Token Timeout: https://github.com/npearce/F5_iWorkflow_REST_API_Commands/blob/master/Javascript-Modify_Auth_Token_Timeout.js
List L4 - L7 Service Templates: https://github.com/npearce/F5_iWorkflow_REST_API_Commands/blob/master/Javascript-List_L4-L7_Service_Templates.js

Python

OK, with the Javascript examples out of the way, let's do the same in Python.

NOTE: in my lab I am using the default, self-signed SSL certificates that are generated during the iWorkflow install and setup. By default (and rightly so, for security reasons) Python will barf at the self-signed SSL cert. To circumvent this security behavior I have added verify=False to each request (see Part 1 below).

Part 1 - Request an Auth Token

Below is the full script, generated by POSTMAN with the addition of the SSL cert-check bypass, that I saved as "Python-Request_Auth_Token.py":

```
$ cat Python-Request_Auth_Token.py
import requests

url = "https://10.128.1.130/mgmt/shared/authn/login"

payload = "{\n \"username\": \"User1\",\n \"password\": \"admin\",\n \"loginProvidername\": \"tmos\"\n}"
headers = {
    'authorization': "Basic YWRtaW46YWRtaW4=",
    'content-type': "application/json",
    'cache-control': "no-cache"
    }

response = requests.request("POST", url, data=payload, headers=headers, verify=False)

print(response.text)
```

NOTE: You'll get an InsecureRequestWarning with the response because of the verify=False option, but it will work all the same. As an alternative, you could avoid using self-signed SSL certs.
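A middle ground between verify=False and replacing the certificates is to trust the lab's self-signed certificate explicitly. The requests library accepts a path to a CA bundle in place of False for its verify argument; with only the standard library, the equivalent is an ssl.SSLContext. A minimal sketch, where the make_tls_context name is hypothetical and any cafile you pass is a PEM file you would export from your own iWorkflow instance:

```python
import ssl


def make_tls_context(cafile=None, insecure=False):
    """Build an SSL context for talking to iWorkflow.

    cafile:   path to a PEM file holding the iWorkflow certificate, or the CA
              that signed it (hypothetical; export it from your own lab).
    insecure: skip verification entirely, the stdlib counterpart of
              verify=False in the requests examples. Lab use only.
    """
    if insecure:
        ctx = ssl.create_default_context()
        # check_hostname must be turned off before verify_mode can be CERT_NONE.
        ctx.check_hostname = False
        ctx.verify_mode = ssl.CERT_NONE
        return ctx
    # Verifies the certificate chain and the hostname. With cafile=None the
    # system trust store is used, which will not contain a self-signed cert.
    return ssl.create_default_context(cafile=cafile)


strict = make_tls_context()
lab = make_tls_context(insecure=True)
print(strict.verify_mode == ssl.CERT_REQUIRED, lab.verify_mode == ssl.CERT_NONE)
```

A context built this way can be passed to urllib.request.urlopen(request, context=ctx). Keep in mind that verification also checks the hostname, so the name or address you connect to must match the certificate.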
This is what the response will look like:

```
$ python Python-Request_Auth_Token.py
/Library/Python/2.7/site-packages/requests-2.7.0-py2.7.egg/requests/packages/urllib3/connectionpool.py:768: InsecureRequestWarning: Unverified HTTPS request is being made. Adding certificate verification is strongly advised. See: https://urllib3.readthedocs.org/en/latest/security.html
  InsecureRequestWarning)
{"username":"User1","loginReference":{"link":"https://localhost/mgmt/cm/system/authn/providers/local/login"},"loginProviderName":"local","token":{"token":"JX74CUNSBUHJX7JKGM7RYB64PV","name":"JX74CUNSBUHJX7JKGM7RYB64PV","userName":"User1","authProviderName":"local","user":{"link":"https://localhost/mgmt/shared/authz/users/User1"},"groupReferences":[{"link":"https://localhost/mgmt/shared/authn/providers/local/groups/05a36de3-4c85-4599-bfed-bfa45649df85"}],"timeout":1200,"startTime":"2016-08-11T17:08:12.662-0700","address":"10.128.1.1","partition":"[All]","generation":1,"lastUpdateMicros":1470960492662099,"expirationMicros":1470961692662000,"kind":"shared:authz:tokens:authtokenitemstate","selfLink":"https://localhost/mgmt/shared/authz/tokens/JX74CUNSBUHJX7JKGM7RYB64PV"},"generation":0,"lastUpdateMicros":0}
```

Ignoring the InsecureRequestWarning, you'll notice some familiar JSON, specifically the Auth Token. Each login returns a new token: the response above returned JX74CUNSBUHJX7JKGM7RYB64PV, while the examples that follow use 5MN24CKDPCR7POARJ3FWTIALF5 from a later login. Next action: modify the Auth Token resource.

Part 2 - Modify the Auth Token resource (timeout)

Next, we are going to change the 'timeout' value of the Auth Token resource. While we do not require an extended timeout, it is an example of PATCH(ing) a resource.
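Before looking at the POSTMAN-generated script, it helps to see the shape of the request on its own. The following minimal sketch uses only Python's standard library to assemble (but not send) the same PATCH; the build_timeout_patch name is hypothetical. Note that the token appears twice: once in the URL, as the resource being modified, and once in the header, as the credential for the request.

```python
import json
import urllib.request


def build_timeout_patch(host, token, timeout):
    """Assemble, without sending, the PATCH that changes a token's timeout."""
    url = "https://{}/mgmt/shared/authz/tokens/{}".format(host, token)
    # The timeout is sent as a string, mirroring the scripts in this article.
    body = json.dumps({"timeout": str(timeout)}).encode("utf-8")
    return urllib.request.Request(
        url,
        data=body,
        headers={
            "X-F5-Auth-Token": token,  # the credential
            "Content-Type": "application/json",
        },
        method="PATCH",
    )


req = build_timeout_patch("10.128.1.130", "5MN24CKDPCR7POARJ3FWTIALF5", 36000)
print(req.get_method(), req.full_url)
```

To actually send it, you would pass the request to urllib.request.urlopen() together with an SSL context that accepts the iWorkflow certificate, which in a lab like this one is self-signed.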
Here is the code to modify the Auth Token (but something is missing from it):

```
$ cat Python-Modify_Auth_Token_Timeout.py
import requests

url = "https://10.128.1.130/mgmt/shared/authz/tokens/[Auth Token]"

payload = "{\n \"timeout\":\"36000\"\n}"
headers = {
    'x-f5-auth-token': "[Auth Token]",
    'content-type': "application/json",
    'cache-control': "no-cache"
    }

response = requests.request("PATCH", url, data=payload, headers=headers, verify=False)

print(response.text)
```

Replace [Auth Token] on lines 3 and 7 with the Auth Token we received in the previous transaction, in this case 5MN24CKDPCR7POARJ3FWTIALF5. Execute with $ python Python-Modify_Auth_Token_Timeout.py and you'll see that the Timeout value has been increased to 36000 seconds:

```
$ python Python-Modify_Auth_Token_Timeout.py
/Library/Python/2.7/site-packages/requests-2.7.0-py2.7.egg/requests/packages/urllib3/connectionpool.py:768: InsecureRequestWarning: Unverified HTTPS request is being made. Adding certificate verification is strongly advised. See: https://urllib3.readthedocs.org/en/latest/security.html
  InsecureRequestWarning)
{"token":"5MN24CKDPCR7POARJ3FWTIALF5","name":"5MN24CKDPCR7POARJ3FWTIALF5","userName":"User1","authProviderName":"local","user":{"link":"https://localhost/mgmt/shared/authz/users/User1"},"groupReferences":[{"link":"https://localhost/mgmt/shared/authn/providers/local/groups/05a36de3-4c85-4599-bfed-bfa45649df85"}],"timeout":36000,"startTime":"2016-08-11T17:25:11.383-0700","address":"10.128.1.1","partition":"[All]","generation":2,"lastUpdateMicros":1470961824663992,"expirationMicros":1470997511383000,"kind":"shared:authz:tokens:authtokenitemstate","selfLink":"https://localhost/mgmt/shared/authz/tokens/5MN24CKDPCR7POARJ3FWTIALF5"}
```

Part 3 - List the Tenant's L4 - L7 Service Templates

In this final transaction of the exercise, let's use our Auth Token to obtain a list of the L4 - L7 Service Templates available to User1. As this is a GET transaction there is no JSON payload being sent.
It is just a GET with the x-f5-auth-token header. Don't forget to edit the script and replace [Auth Token] with the Auth Token you generated earlier for the "x-f5-auth-token" header on line 6 (in the script below it has already been replaced with 5MN24CKDPCR7POARJ3FWTIALF5). Failing to do this will result in a "401 - Unauthorized" response. This is the script:

```
$ cat Python-List_L4-L7_Service_Templates.py
import requests

url = "https://10.128.1.130/mgmt/cm/cloud/tenant/templates/iapp/"

headers = {
    'x-f5-auth-token': "5MN24CKDPCR7POARJ3FWTIALF5",
    'cache-control': "no-cache"
    }

response = requests.request("GET", url, headers=headers, verify=False)

print(response.text)
```

This is the response:

```
$ python Python-List_L4-L7_Service_Templates.py
/Library/Python/2.7/site-packages/requests-2.7.0-py2.7.egg/requests/packages/urllib3/connectionpool.py:768: InsecureRequestWarning: Unverified HTTPS request is being made. Adding certificate verification is strongly advised. See: https://urllib3.readthedocs.org/en/latest/security.html
  InsecureRequestWarning)
{"items":[{"name":"f5.http_ServiceTypeA","sections":[{"description":"Virtual Server and Pools","displayName":"pool"}],"vars":[{"name":"pool__addr","isRequired":true,"defaultValue":"","providerType":"NODE","serverTier":"Servers","description":"What IP address do you want to use for the virtual server?","displayName":"addr","section":"pool","validator":"IpAddress"}],"tables":[{"name":"pool__hosts","isRequired":false,"description":"What FQDNs will clients use to access the servers?","displayName":"hosts","section":"pool","columns":[{"name":"name","isRequired":true,"defaultValue":"","description":"Host","validator":"FQDN"}]},{"name":"pool__members","serverTier":"Servers","isRequired":false,"description":"Which web servers should be included in this pool?","displayName":"members","section":"pool","columns":[{"name":"addr","isRequired":false,"defaultValue":"","providerType":"NODE","description":"Node/IP address","validator":"IpAddress"},{"name":"port","isRequired":true,"defaultValue":"8080","providerType":"PORT","description":"Port","validator":"PortNumber"}]}],"properties":[{"id":"cloudConnectorReference","isRequired":true,"value":"https://localhost/mgmt/cm/cloud/tenants/myTenant1/connectors/bea388b8-46f8-4363-9f89-d8920ea8931f"}],"generation":2,"lastUpdateMicros":1468991604443417,"kind":"cm:cloud:tenant:templates:iapp:tenantiapptemplateworkerstate","selfLink":"https://localhost/mgmt/cm/cloud/tenant/templates/iapp/f5.http_ServiceTypeA"}],"generation":0,"lastUpdateMicros":0}
```

Again, we see a single L4 - L7 Service Template named f5.http_ServiceTypeA.

Pro Tips

Tip 1 - Passing options via the command line

As stated in the Javascript section, programmers are allergic to inefficiencies. Some say it causes them physical pain! Having to edit your scripts all the time to update IP addresses, Auth Tokens, or other data can get a little tedious. So, here is how we pass that information to the script from the command line and then place it in the right parts of the script using variables. I'll use the "Python-Modify_Auth_Token_Timeout.py" script as an example.

Changes made to the script: first, add import sys. Then, to check that all the options are provided (Host, Auth Token, and Timeout), add the following (sys.argv[0] is the script name itself, hence the test for fewer than four entries):

```
if len(sys.argv) < 4:
    print("Usage: Host Token Timeout")
    sys.exit(1)
```

Finally, substitute the host address with sys.argv[1], the Auth Token with sys.argv[2], and the Timeout with sys.argv[3], concatenating each into the URL, header, and payload strings.
Example below:

```
$ cat Python-Modify_Auth_Token_Timeout.py
import sys
import requests

if len(sys.argv) < 4:
    print("Usage: Host Token Timeout")
    sys.exit(1)

url = "https://" + sys.argv[1] + "/mgmt/shared/authz/tokens/" + sys.argv[2]

payload = "{\n \"timeout\":\"" + sys.argv[3] + "\"\n}"
headers = {
    'x-f5-auth-token': sys.argv[2],
    'content-type': "application/json",
    'cache-control': "no-cache"
    }

response = requests.request("PATCH", url, data=payload, headers=headers, verify=False)

print(response.text)
```

Now you can execute the following:

```
$ python Python-Modify_Auth_Token_Timeout.py 10.128.1.130 5MN24CKDPCR7POARJ3FWTIALF5 34000
```

This allows you to change the Host, Token and Timeout as required, and the same approach can be used for many other operations beyond the Auth Token Timeout.

Tip 2 - Make your script executable

More on the theme of efficiency, you can eliminate the need to type 'python' before the script name. This requires two things: 1) tell the command shell which runtime to execute the script with, and 2) change the attributes of the file to make it executable.

Step 1: add this as the first line in the script:

```
#!/usr/bin/env python
```

Step 2: change the attributes of the file so that it is executable (on OS X 10.11.6; the same applies to *nix):

```
$ chmod u+x Python-Modify_Auth_Token_Timeout.py
```

Now you can execute the script like this (without the preceding 'python'):

```
$ ./Python-Modify_Auth_Token_Timeout.py 10.128.1.130 5MN24CKDPCR7POARJ3FWTIALF5 36000
```

Neat, right?!

Python Summary

We've reviewed the three most common types of transaction you will perform against the iWorkflow REST API: GET, POST, and PATCH.
Versions of each of the scripts above, with support for command line arguments, have been posted to GitHub and can be found here:

Get Auth Token: https://github.com/npearce/F5_iWorkflow_REST_API_Commands/blob/master/Python-Request_Auth_Token.py
Modify Auth Token Timeout: https://github.com/npearce/F5_iWorkflow_REST_API_Commands/blob/master/Python-Modify_Auth_Token_Timeout.py
List L4 - L7 Service Templates: https://github.com/npearce/F5_iWorkflow_REST_API_Commands/blob/master/Python-List_L4-L7_Service_Templates.py

Conclusion

In this article we've built on what we learned using POSTMAN collections in iWorkflow 201 episodes #01 and #02, and are now performing some basic actions from two popular scripting languages, Javascript and Python. Hopefully this has shown how you can start automating basic tasks, which will free you up to do more! These introductory steps are the first phase of automation, which can then be leveraged with 3rd party management and orchestration systems. Here's a video of the exercises above:

iWorkflow 201 (episode #02) - Deploying a services template via the iControl REST API [End of Life]

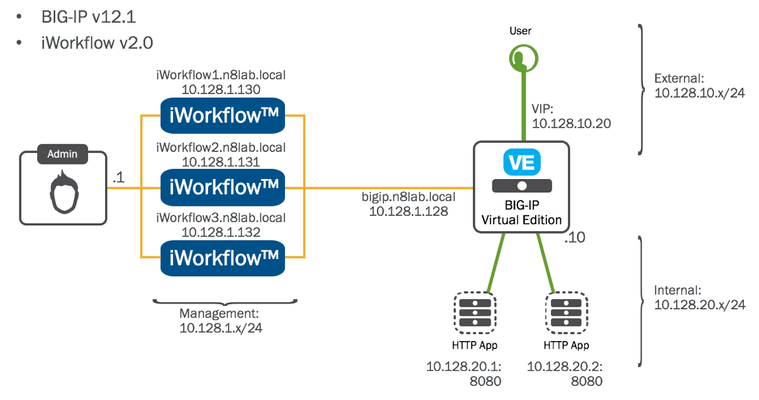

The F5 and Cisco APIC integration based on the device package and iWorkflow is End Of Life. The latest integration is based on the Cisco AppCenter app named 'F5 ACI ServiceCenter'. Visit https://f5.com/cisco for updated information on the integration.

In iWorkflow 101 (episode #03) - Deploying a services template via the Tenant GUI, we deployed an L4 - L7 Service onto a BIG-IP device via the iWorkflow Tenant web interface. In that episode we logged into the iWorkflow platform as an Administrator, set up a BIG-IP Connector, created a Tenant, and added an L4 - L7 Service Template to the Tenant Catalog. We then logged in to the iWorkflow Platform GUI as a Tenant and deployed an L4 - L7 Service via the Tenant catalog. Oh the fun we had! Well, in this episode we will perform the Tenant deployment via the iControl REST API.

The lab environment

For this episode we will be communicating with "iworkflow1.n8lab.local" on "10.128.1.130". Communication with the iWorkflow REST API will be performed using the Google Chrome app, POSTMAN. For more details on using POSTMAN to communicate with the REST API please review the previous episode: iWorkflow 201 (episode #01) - Introducing the iControl REST API.

Understanding iWorkflow Roles: the Provider/Tenant model

Before we execute any more commands, it's time for a quick refresher on iWorkflow's Provider/Tenant model. In iWorkflow 101 (episode #03) - Deploying a service template via the Tenant GUI, we split the article into two parts. Part 1 was performed using iWorkflow administrator credentials, and in that part we configured the platform by adding connectors, templates, catalogs, users, etc. These steps made the platform ready for service deployments. Next, in Part 2 of the article, we logged in using iWorkflow Tenant credentials and deployed an L4 - L7 Service. An Administrator cannot deploy an application delivery policy onto a BIG-IP, and a Tenant cannot modify the operation or integration of the iWorkflow platform.
These roles apply just the same via the REST API as they do in the GUI. For this episode, iworkflow1.n8lab.local has two users on it: 1) the default 'admin' account, which has the iWorkflow Administrator role, and 2) the 'User1' Tenant account we created back in iWorkflow 101 (episode #2) - Install and Setup. To review the differing behavior of these roles, let's first perform a GET request to the following REST collection as an administrator:

https://ip_address/mgmt/shared/resolver/device-groups/cm-cloud-managed-devices/devices

You'll receive a list of devices, including the members of the iWorkflow cluster and the bigip.n8lab.local depicted in the diagram at the start of the article. Now, change the user credentials from "admin" to "User1" and execute the command again. You will receive an error: the Tenant doesn't have permission to access ALL of the iWorkflow resources. This is by design. iWorkflow provides highly granular, per-Tenant access control. While we will go through iWorkflow role-based access control (RBAC) in detail in a future episode, it is important to understand that a Provider/Tenant model is in play and that it applies to the iWorkflow REST API just as it does to the iWorkflow GUI. While there are a few minor exceptions to this rule, the default access policy applied to a User account is inherited from the iWorkflow Tenant(s) to which the User has been granted access. Note in the diagram above that access is specific to the Tenant name and its child sub-collections and resources. For example, in the diagram above, the resources all start with: /mgmt/cm/cloud/tenants/MyTenant/

Anywho, more on RBAC in a different episode! So, how does a Tenant view its available resources?
Take a look at the iWorkflow Connectors: https://10.128.1.130/mgmt/cm/cloud/tenants/MyTenant/connectors

As discussed in iWorkflow 101 (episode #1) - The Architecture Explained, the iWorkflow connectors are the conduits to BIG-IP resources, in addition to third-party environments like Cisco ACI and VMware NSX. The connectors are created by iWorkflow Administrators under the 'Clouds' tab and are then associated with iWorkflow Tenants. In this environment we have only a 'Local' BIG-IP connector. You may be asking why the Tenant cannot list the BIG-IP devices in their connector. This is because the Tenant's job is to deploy L4 - L7 Services, which are pushed to the available BIG-IPs. The Tenant doesn't manage the BIG-IPs. Put another way, this is not a BIG-IP/device-centric perspective, and as such it enables a simpler self-service model. With the iWorkflow Tenant/Provider model refresher out of the way, let's get back to deploying an L4 - L7 Service via REST.

Token Auth & Some POSTMAN Pro-tips

We introduced the POSTMAN tool in the last episode. POSTMAN isn't the only tool that can communicate with iWorkflow. You can take any of these examples and perform them via scripting languages or directly from 3rd party orchestration tools. I use POSTMAN to show examples while remaining both scripting-language and orchestration-tool agnostic. In the following video we will explain POSTMAN collections and environment variables. To show how these work we will walk through the exercise of using iWorkflow Auth Tokens, so you no longer need to send your credentials back and forth across the network for every request. So, sit back and learn how to use iWorkflow Auth Tokens while also learning how to be really efficient with POSTMAN.

NOTE: If you're trying these exercises out in a lab then, like me, you are probably using self-signed SSL certificates on your iWorkflow platform.
POSTMAN doesn't handle these as gracefully as a web browser, so you might want to take a look at this (instructions for Mac, Windows, and Linux): http://blog.getpostman.com/2014/01/28/using-self-signed-certificates-with-postman/

Review: The iWorkflow REST API calls made in this video (using the environment variables "iWorkflow_Mgmt_IP" and "iWorkflow1_Auth_Token") were:

https://{{iWorkflow_Mgmt_IP}}/mgmt/shared/authn/login
https://{{iWorkflow_Mgmt_IP}}/mgmt/shared/authz/tokens/{{iWorkflow1_Auth_Token}}
https://{{iWorkflow_Mgmt_IP}}/mgmt/cm/cloud/tenants

Links referenced in this video: the "F5_iWorkflow_REST_API_Commands" GitHub repository can be found here: https://github.com/npearce/F5_iWorkflow_REST_API_Commands

The RAW files that were imported from GitHub in the video above are here (you can import these yourself):

The POSTMAN environment: https://raw.githubusercontent.com/npearce/F5_iWorkflow_REST_API_Commands/master/iWorkflow%20Lab.postman_environment.json
The Auth Token POSTMAN Collection: https://raw.githubusercontent.com/npearce/F5_iWorkflow_REST_API_Commands/master/F5%20iWorkflow%20REST%20API%20-%20Auth%20Tokens.postman_collection.json

Pre-launch check-list

We're using the same n8lab.local environment (see diagram at the top) that starred in previous episodes. Within n8lab.local we've already discovered a BIG-IP device and created a local BIG-IP Cloud connector and an iWorkflow Tenant. That takes care of the 'administrator' role tasks (FYI: those administrator tasks can also be performed via REST). So, let's now perform the Tenant L4 - L7 Service deployment via the iWorkflow REST API!

Step #1: The iWorkflow Tenant REST perspective

Let's take a walk through the iWorkflow objects via the REST API. There are some small exceptions to this rule but MOST of the Tenant activity happens below the Tenant-assigned REST collection.
In this lab, that refers to child resources and sub-collections of: https://{{iWorkflow_Mgmt_IP}}/mgmt/cm/cloud/tenants/myTenant1/

NOTE: Before making any transactions, remember: if you've downloaded the POSTMAN collection from GitHub, make sure the current Auth Token is for the "User1" credentials and not the "admin" user. Refer to the diagram below:

You'll see, after the credentials change, that if you try to run "List Tenants" in the Auth Token POSTMAN collection it will fail. The Tenant User is not permitted to see all the tenants. Hence, calling the /mgmt/cm/cloud/tenants REST collection will report a 401 Unauthorized error. However, if you reference a specific REST collection that the Tenant User is assigned to, you will receive happy data. Using the 'User1' Auth Token, let's call "/mgmt/cm/cloud/tenants/myTenant1". Then list the L4 - L7 Services deployed by this Tenant: "/mgmt/cm/cloud/tenants/myTenant1/services/iapp/"

Step #2: Review the resources

In the iWorkflow 101 series we've already established that "User1" has been associated with "myTenant1" (an iWorkflow Tenant is a collection of resources that facilitates service deployments). We saw that myTenant1 has a local BIG-IP connector, and an L4 - L7 Service Catalog that contains the L4 - L7 Service Template "f5.http_ServiceTypeA". We've looked at these resources via the GUI, so now we can take a look at them via the REST API.
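Once the REST responses are in hand, they can be inspected programmatically too. The L4 - L7 Service Template listing returned by GET /mgmt/cm/cloud/tenant/templates/iapp/ flags which vars and table columns are required, which is exactly what a Tenant needs to know before deploying. The sketch below assumes that response shape ("items", each with "vars" and "tables" carrying "isRequired" flags) and reports the required inputs per template; the function name is hypothetical, and the sample is a trimmed copy of the listing for f5.http_ServiceTypeA in this lab.

```python
import json


def summarize_templates(response_text):
    """Map each service template name to the inputs it marks as required."""
    summary = {}
    for item in json.loads(response_text).get("items", []):
        required_vars = [v["name"] for v in item.get("vars", []) if v.get("isRequired")]
        # Tables may be optional as a whole while still requiring certain
        # columns in any row that is supplied.
        required_columns = {
            t["name"]: [c["name"] for c in t.get("columns", []) if c.get("isRequired")]
            for t in item.get("tables", [])
        }
        summary[item["name"]] = {"vars": required_vars, "columns": required_columns}
    return summary


# Trimmed copy of the f5.http_ServiceTypeA template listing.
sample = json.dumps({"items": [{
    "name": "f5.http_ServiceTypeA",
    "vars": [{"name": "pool__addr", "isRequired": True}],
    "tables": [
        {"name": "pool__hosts", "isRequired": False,
         "columns": [{"name": "name", "isRequired": True}]},
        {"name": "pool__members", "isRequired": False,
         "columns": [{"name": "addr", "isRequired": False},
                     {"name": "port", "isRequired": True, "defaultValue": "8080"}]},
    ],
}]})

print(summarize_templates(sample))
```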
In the video below we take a look at the resources available to "User1" in the following order:

1) The Roles this user has been granted: /mgmt/shared/authz/roles
2) The connectors associated with myTenant1: /mgmt/cm/cloud/tenants/myTenant1/connectors
3) The servers deployed through myTenant1 (both Virtuals and Pool members): /mgmt/cm/cloud/tenants/myTenant1/virtual-servers/
4) The L4 - L7 Service Templates available to User1 through its Tenant assignments (we only have one Tenant in this lab): /mgmt/cm/cloud/tenant/templates/iapp/
5) The L4 - L7 Services that have already been deployed using the myTenant1 service templates: /mgmt/cm/cloud/tenants/myTenant1/services/iapp/

Having familiarized ourselves with the various iWorkflow objects we used in the 101 series, we may now deploy an L4 - L7 Service via the iWorkflow REST API.

Step #3: Deploying an L4 - L7 Service

Time to hit the Go button. As per the video below, to deploy an L4 - L7 Service, we POST to the Tenant resource (.../myTenant1/services/iapp). For example, to deploy using the "f5.http_ServiceTypeA" service template, which is in the "myTenant1" service catalog, we would execute a POST with a JSON payload as follows:

```
POST https://{{iWorkflow1_Mgmt_IP}}/mgmt/cm/cloud/tenants/myTenant1/services/iapp

{
  "name":"myTestDeployment",
  "tenantTemplateReference":{
    "link":"https://localhost/mgmt/cm/cloud/tenant/templates/iapp/f5.http_ServiceTypeA"
  },
  "properties":[
    {
      "id":"cloudConnectorReference",
      "value":"https://localhost/mgmt/cm/cloud/connectors/local/bea388b8-46f8-4363-9f89-d8920ea8931f"
    }
  ],
  "tables":[
    {
      "name":"pool__hosts",
      "columns":["name"],
      "rows":[["acme.com"]]
    },
    {
      "name":"pool__members",
      "columns":["addr", "port"],
      "rows":[
        ["10.128.20.1", "8080"],
        ["10.128.20.2", "8080"]
      ]
    }
  ],
  "vars":[
    {
      "name":"pool__addr",
      "value":"10.128.10.21"
    }
  ]
}
```

Note the references to the Service Template and the connector within the JSON body of the POST. These are followed by the deployment-specific details.
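When the deployment is driven from a script rather than POSTMAN, the body can be generated from a few parameters. A minimal sketch that reproduces the JSON above; the function and parameter names are hypothetical, and the pool__hosts, pool__members and pool__addr names are specific to the f5.http_ServiceTypeA template used in this lab.

```python
import json

TEMPLATE_BASE = "https://localhost/mgmt/cm/cloud/tenant/templates/iapp/"


def build_service_deployment(name, template_name, connector_link,
                             hosts, members, virtual_addr):
    """Build the body POSTed to /mgmt/cm/cloud/tenants/<tenant>/services/iapp.

    members is a list of (address, port) tuples; ports are sent as strings,
    as in the example payload above.
    """
    return {
        "name": name,
        "tenantTemplateReference": {"link": TEMPLATE_BASE + template_name},
        "properties": [
            {"id": "cloudConnectorReference", "value": connector_link}
        ],
        "tables": [
            {"name": "pool__hosts", "columns": ["name"],
             "rows": [[host] for host in hosts]},
            {"name": "pool__members", "columns": ["addr", "port"],
             "rows": [[addr, str(port)] for addr, port in members]},
        ],
        "vars": [{"name": "pool__addr", "value": virtual_addr}],
    }


payload = build_service_deployment(
    name="myTestDeployment",
    template_name="f5.http_ServiceTypeA",
    connector_link="https://localhost/mgmt/cm/cloud/connectors/local/"
                   "bea388b8-46f8-4363-9f89-d8920ea8931f",
    hosts=["acme.com"],
    members=[("10.128.20.1", 8080), ("10.128.20.2", 8080)],
    virtual_addr="10.128.10.21",
)
print(json.dumps(payload, indent=2))
```

json.dumps(payload) gives the body to POST to /mgmt/cm/cloud/tenants/myTenant1/services/iapp, sent with the Tenant's token in the x-f5-auth-token header.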
Watch it all happen in the video below. The POSTMAN collection for this episode can be imported from GitHub using this link: https://raw.githubusercontent.com/npearce/F5_iWorkflow_REST_API_Commands/master/F5%20iWorkflow%20REST%20API%20-%20Tenant%20L4%20-%20L7%20Service%20Deployment.postman_collection.json

iWorkflow 101 (episode #2) - Install and Setup

Last week's episode, The Architecture Explained, drew a lot of attention. The most frequent request was for video content showing the iWorkflow interface and how the Tenants and connectors are configured (if you don't understand those themes, take a look at Episode #1). Well, this week we'll do precisely that and take a look at the interface. To avoid ending up with one long video I'm going to break it into two parts: Install and Licensing of the iWorkflow Platform, and Overview of the Administrative GUI and basic Setup.

Acquiring a copy of iWorkflow

iWorkflow will be available for download in mid-June. When released, it will be made available via https://downloads.f5.com/ NOTE: iWorkflow is not available as a hardware appliance. For the purpose of this demonstration I'll be importing the OVA image into VMware Fusion v7.1.2 on my MacBook running Apple OS X 10.11.5 (El Capitan).

Initial Setup

iWorkflow boots just like any Linux-based virtual machine. To start working with its administrative interface, you'll need to connect it to a network. You require: a Management IP Address/Netmask, a Default Gateway (optional), and DNS (optional). If you want to automate some of the license activation process, you will require DNS and a Default Gateway that can reach F5's activation servers. I have listed them as 'optional' here because you can manually copy and paste the licensing data to activate. For the purpose of this demonstration I will go through the activation process manually to show all the steps.
The environment we are building out will look as follows:

Details of the environment:

```
LAB Domain: n8lab.local
Management: 10.128.1.x/24
Internet (external): 10.128.10.x/24
Servers (internal): 10.128.20.x/24

iWorkflow™ 2.0
  Management – 10.128.1.130
BIG-IP™ v11.6 HF6
  Management – 10.128.1.128
  External Self IP – 10.128.10.10
  Internal Self IP – 10.128.20.10
  Virtual IP – 10.128.10.20
Web Server #1 – 10.128.20.1:8080
Web Server #2 – 10.128.20.1:8081
```

Install & License

In the following video we will cover all of the steps to install and license the iWorkflow platform:

Discover a BIG-IP Device

Now that we have iWorkflow licensed and networked, we need to configure it for use. The next step in this episode will be to discover a device (a BIG-IP), which will be used in next week's episode titled "iWorkflow 101 - episode #3 - Deploying a Services Template via the iWorkflow GUI". Once we've discovered a BIG-IP device, we need to create the conduit through which a Tenant deploys application services templates (F5 iApps). These conduits, or connectors, are referred to as iWorkflow Clouds. iWorkflow supports a number of Clouds but, for this 101 episode, we'll use the BIG-IP connector, which is suitable for most environments. In the following video we'll take a look at the Administrative GUI, show how to import a BIG-IP device, and lastly, create a connector and Tenant to support deploying application delivery policy.

Conclusion

Three main things to remember: NTP - it is very important to keep iWorkflow and the BIG-IPs in time sync. Registration key - we'll provide an evaluation license process once iWorkflow is released in mid-June. NTP - in case I didn't mention this already. In the next episode we will create a Tenant Catalog with an Application Services Template. We will then log in to the iWorkflow platform as a Tenant and deploy our first configuration.

F5 in Container Environments: BIG-IP and Beyond

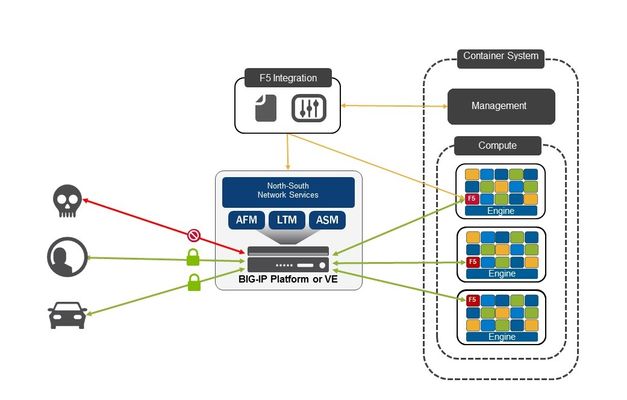

Container systems like Docker are cool. For everyone from tinkerers like me, who love being able to create a working web server with a single command and run it anywhere, to serious compute projects that integrate Docker with tools like Jenkins and GitHub (letting developers write code, then have it built, tested and deployed automatically while they get on with the next revision), Docker has become an overnight (OK, well, three-year) success story.

But the magic word there is integrated. What turns a cool container technology from an interesting tool into a central part of your IT infrastructure is the ability to take the value it brings (in this case lightweight, fast, run-anywhere execution environments) and make it work effectively with the other parts of the environment. To become a credible platform for enterprise IT, containers need tools to orchestrate their lifecycle and to manage features such as high availability and scheduling. Luckily we’re now well supplied with tools like Mesos Marathon, Docker Swarm, Kubernetes, and a host of others.

You also need the rest of the infrastructure to be as agile and integrated as the container management system. When you can spin up applications on demand, in seconds, you want the systems that manage application traffic to be part of the process, tightly coupled with the systems that are creating the applications and services.

Which is where F5 comes in. We are committed to building services that integrate with the tools you use to manage the rest of your environment, so that you can rely on F5 to protect, accelerate and manage your application traffic with the same flexibility and agility you need elsewhere. Our vision is of an architecture where F5 components subscribe to events from container management systems, then create the right application delivery services in the right place to serve traffic to the new containers.
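The subscribe-and-react idea can be sketched as a small reconciliation routine. The Python below is a minimal sketch under stated assumptions: `ContainerEvent` is a hypothetical, simplified event (real orchestrators such as Marathon, Swarm or Kubernetes each have their own event formats), the service name and container addresses are made up, and no real F5 or orchestrator API is called. It only computes which pool change a load balancer would need: the first container of a service triggers a new pool, later ones are added to it, and stopped containers are removed.

```python
from dataclasses import dataclass, field
from typing import Dict, Set, Tuple

Member = Tuple[str, int]  # (container IP, port)


@dataclass
class ContainerEvent:
    """Hypothetical, simplified event from a container management system."""
    action: str   # "start" or "stop"
    service: str  # application or service the container belongs to
    ip: str
    port: int


@dataclass
class PoolState:
    """Desired load-balancing pools, keyed by service name."""
    pools: Dict[str, Set[Member]] = field(default_factory=dict)

    def apply(self, event: ContainerEvent) -> str:
        """Update the desired state and describe the change a load balancer would need."""
        member = (event.ip, event.port)
        if event.action == "start":
            if event.service not in self.pools:
                # First container of a new service: a whole new pool is required.
                self.pools[event.service] = {member}
                return f"create pool {event.service} with {event.ip}:{event.port}"
            # Existing service: just add the container to its pool.
            self.pools[event.service].add(member)
            return f"add {event.ip}:{event.port} to {event.service}"
        if event.action == "stop":
            members = self.pools.get(event.service)
            if members is None or member not in members:
                return "ignore"  # nothing to remove
            members.discard(member)
            return f"remove {event.ip}:{event.port} from {event.service}"
        raise ValueError(f"unknown action: {event.action}")


state = PoolState()
for ev in [
    ContainerEvent("start", "web", "172.17.0.2", 80),
    ContainerEvent("start", "web", "172.17.0.3", 80),
    ContainerEvent("stop", "web", "172.17.0.2", 80),
]:
    print(state.apply(ev))
# create pool web with 172.17.0.2:80
# add 172.17.0.3:80 to web
# remove 172.17.0.2:80 from web
```

Keeping the desired state separate from the act of pushing it makes the logic easy to test; in a real integration, each returned change would be applied to a BIG-IP or to a lighter-weight data-plane component.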
The required action might be something simple, such as adding a new container to an existing pool. It might mean creating a whole new configuration for a new application or service, with the right levels of security and control. It might even mean deploying a whole new platform to perform these services. Maybe it will deploy a BIG-IP Virtual Edition with all the features and functions you expect from F5. But perhaps we need something new: a lighter-weight platform that handles East-West traffic well in a microservices environment, while a BIG-IP manages the North-South client traffic and defends the perimeter.

If you think this sounds interesting, then I’d encourage you to watch this space. If you think it sounds really interesting and you will be at DockerCon in Seattle during the week of June 20th, you should head to an evening panel discussion hosted by our friends at Skytap on June 21, where F5’er Shawn Wormke will be able to tell you (a little) more.

Lightboard Lessons: BIG-IP in the public cloud

In this episode, Jason talks about some of the common ways people refer to "cloud" and how BIG-IP fits into those molds in the Amazon, Google, and Microsoft cloud offerings.

Resources

- BIG-IP VE Setup - Amazon Web Services
- BIG-IP VE Setup - Google Cloud
- BIG-IP VE Setup - Microsoft Azure
- Getting Started with Ansible
- AWS CloudFormation Templates
- iWorkflow 101 Series (YouTube)