Controlling a Pool Members Ratio and Priority Group with iControl

A Little Background A question came in through the iControl forums about controlling a pool members ratio and priority programmatically. The issue really involves how the API’s use multi-dimensional arrays but I thought it would be a good opportunity to talk about ratio and priority groups for those that don’t understand how they work. In the first part of this article, I’ll talk a little about what pool members are and how their ratio and priorities apply to how traffic is assigned to them in a load balancing setup. The details in this article were based on BIG-IP version 11.1, but the concepts can apply to other previous versions as well. Load Balancing In it’s very basic form, a load balancing setup involves a virtual ip address (referred to as a VIP) that virtualized a set of backend servers. The idea is that if your application gets very popular, you don’t want to have to rely on a single server to handle the traffic. A VIP contains an object called a “pool” which is essentially a collection of servers that it can distribute traffic to. The method of distributing traffic is referred to as a “Load Balancing Method”. You may have heard the term “Round Robin” before. In this method, connections are passed one at a time from server to server. In most cases though, this is not the best method due to characteristics of the application you are serving. Here are a list of the available load balancing methods in BIG-IP version 11.1. Load Balancing Methods in BIG-IP version 11.1 Round Robin: Specifies that the system passes each new connection request to the next server in line, eventually distributing connections evenly across the array of machines being load balanced. This method works well in most configurations, especially if the equipment that you are load balancing is roughly equal in processing speed and memory. Ratio (member): Specifies that the number of connections that each machine receives over time is proportionate to a ratio weight you define for each machine within the pool. Least Connections (member): Specifies that the system passes a new connection to the node that has the least number of current connections in the pool. This method works best in environments where the servers or other equipment you are load balancing have similar capabilities. This is a dynamic load balancing method, distributing connections based on various aspects of real-time server performance analysis, such as the current number of connections per node or the fastest node response time. Observed (member): Specifies that the system ranks nodes based on the number of connections. Nodes that have a better balance of fewest connections receive a greater proportion of the connections. This method differs from Least Connections (member), in that the Least Connections method measures connections only at the moment of load balancing, while the Observed method tracks the number of Layer 4 connections to each node over time and creates a ratio for load balancing. This dynamic load balancing method works well in any environment, but may be particularly useful in environments where node performance varies significantly. Predictive (member): Uses the ranking method used by the Observed (member) methods, except that the system analyzes the trend of the ranking over time, determining whether a node's performance is improving or declining. The nodes in the pool with better performance rankings that are currently improving, rather than declining, receive a higher proportion of the connections. This dynamic load balancing method works well in any environment. Ratio (node): Specifies that the number of connections that each machine receives over time is proportionate to a ratio weight you define for each machine across all pools of which the server is a member. Least Connections (node): Specifies that the system passes a new connection to the node that has the least number of current connections out of all pools of which a node is a member. This method works best in environments where the servers or other equipment you are load balancing have similar capabilities. This is a dynamic load balancing method, distributing connections based on various aspects of real-time server performance analysis, such as the number of current connections per node, or the fastest node response time. Fastest (node): Specifies that the system passes a new connection based on the fastest response of all pools of which a server is a member. This method might be particularly useful in environments where nodes are distributed across different logical networks. Observed (node): Specifies that the system ranks nodes based on the number of connections. Nodes that have a better balance of fewest connections receive a greater proportion of the connections. This method differs from Least Connections (node), in that the Least Connections method measures connections only at the moment of load balancing, while the Observed method tracks the number of Layer 4 connections to each node over time and creates a ratio for load balancing. This dynamic load balancing method works well in any environment, but may be particularly useful in environments where node performance varies significantly. Predictive (node): Uses the ranking method used by the Observed (member) methods, except that the system analyzes the trend of the ranking over time, determining whether a node's performance is improving or declining. The nodes in the pool with better performance rankings that are currently improving, rather than declining, receive a higher proportion of the connections. This dynamic load balancing method works well in any environment. Dynamic Ratio (node) : This method is similar to Ratio (node) mode, except that weights are based on continuous monitoring of the servers and are therefore continually changing. This is a dynamic load balancing method, distributing connections based on various aspects of real-time server performance analysis, such as the number of current connections per node or the fastest node response time. Fastest (application): Passes a new connection based on the fastest response of all currently active nodes in a pool. This method might be particularly useful in environments where nodes are distributed across different logical networks. Least Sessions: Specifies that the system passes a new connection to the node that has the least number of current sessions. This method works best in environments where the servers or other equipment you are load balancing have similar capabilities. This is a dynamic load balancing method, distributing connections based on various aspects of real-time server performance analysis, such as the number of current sessions. Dynamic Ratio (member): This method is similar to Ratio (node) mode, except that weights are based on continuous monitoring of the servers and are therefore continually changing. This is a dynamic load balancing method, distributing connections based on various aspects of real-time server performance analysis, such as the number of current connections per node or the fastest node response time. L3 Address: This method functions in the same way as the Least Connections methods. We are deprecating it, so you should not use it. Weighted Least Connections (member): Specifies that the system uses the value you specify in Connection Limit to establish a proportional algorithm for each pool member. The system bases the load balancing decision on that proportion and the number of current connections to that pool member. For example,member_a has 20 connections and its connection limit is 100, so it is at 20% of capacity. Similarly, member_b has 20 connections and its connection limit is 200, so it is at 10% of capacity. In this case, the system select selects member_b. This algorithm requires all pool members to have a non-zero connection limit specified. Weighted Least Connections (node): Specifies that the system uses the value you specify in the node's Connection Limitand the number of current connections to a node to establish a proportional algorithm. This algorithm requires all nodes used by pool members to have a non-zero connection limit specified. Ratios The ratio is used by the ratio-related load balancing methods to load balance connections. The ratio specifies the ratio weight to assign to the pool member. Valid values range from 1 through 100. The default is 1, which means that each pool member has an equal ratio proportion. So, if you have server1 a with a ratio value of “10” and server2 with a ratio value of “1”, server1 will get served 10 connections for every one that server2 receives. This can be useful when you have different classes of servers with different performance capabilities. Priority Group The priority group is a number that groups pool members together. The default is 0, meaning that the member has no priority. To specify a priority, you must activate priority group usage when you create a new pool or when adding or removing pool members. When activated, the system load balances traffic according to the priority group number assigned to the pool member. The higher the number, the higher the priority, so a member with a priority of 3 has higher priority than a member with a priority of 1. The easiest way to think of priority groups is as if you are creating mini-pools of servers within a single pool. You put members A, B, and C in to priority group 5 and members D, E, and F in priority group 1. Members A, B, and C will be served traffic according to their ratios (assuming you have ratio loadbalancing configured). If all those servers have reached their thresholds, then traffic will be distributed to servers D, E, and F in priority group 1. he default setting for priority group activation is Disabled. Once you enable this setting, you can specify pool member priority when you create a new pool or on a pool member's properties screen. The system treats same-priority pool members as a group. To enable priority group activation in the admin GUI, select Less than from the list, and in the Available Member(s) box, type a number from 0 to 65535 that represents the minimum number of members that must be available in one priority group before the system directs traffic to members in a lower priority group. When a sufficient number of members become available in the higher priority group, the system again directs traffic to the higher priority group. Implementing in Code The two methods to retrieve the priority and ratio values are very similar. They both take two parameters: a list of pools to query, and a 2-D array of members (a list for each pool member passed in). long [] [] get_member_priority( in String [] pool_names, in Common__AddressPort [] [] members ); long [] [] get_member_ratio( in String [] pool_names, in Common__AddressPort [] [] members ); The following PowerShell function (utilizing the iControl PowerShell Library), takes as input a pool and a single member. It then make a call to query the ratio and priority for the specific member and writes it to the console. function Get-PoolMemberDetails() { param( $Pool = $null, $Member = $null ); $AddrPort = Parse-AddressPort $Member; $RatioAofA = (Get-F5.iControl).LocalLBPool.get_member_ratio( @($Pool), @( @($AddrPort) ) ); $PriorityAofA = (Get-F5.iControl).LocalLBPool.get_member_priority( @($Pool), @( @($AddrPort) ) ); $ratio = $RatioAofA[0][0]; $priority = $PriorityAofA[0][0]; "Pool '$Pool' member '$Member' ratio '$ratio' priority '$priority'"; } Setting the values with the set_member_priority and set_member_ratio methods take the same first two parameters as their associated get_* methods, but add a third parameter for the priorities and ratios for the pool members. set_member_priority( in String [] pool_names, in Common::AddressPort [] [] members, in long [] [] priorities ); set_member_ratio( in String [] pool_names, in Common::AddressPort [] [] members, in long [] [] ratios ); The following Powershell function takes as input the Pool and Member with optional values for the Ratio and Priority. If either of those are set, the function will call the appropriate iControl methods to set their values. function Set-PoolMemberDetails() { param( $Pool = $null, $Member = $null, $Ratio = $null, $Priority = $null ); $AddrPort = Parse-AddressPort $Member; if ( $null -ne $Ratio ) { (Get-F5.iControl).LocalLBPool.set_member_ratio( @($Pool), @( @($AddrPort) ), @($Ratio) ); } if ( $null -ne $Priority ) { (Get-F5.iControl).LocalLBPool.set_member_priority( @($Pool), @( @($AddrPort) ), @($Priority) ); } } In case you were wondering how to create the Common::AddressPort structure for the $AddrPort variables in the above examples, here’s a helper function I wrote to allocate the object and fill in it’s properties. function Parse-AddressPort() { param($Value); $tokens = $Value.Split(":"); $r = New-Object iControl.CommonAddressPort; $r.address = $tokens[0]; $r.port = $tokens[1]; $r; } Download The Source The full source for this example can be found in the iControl CodeShare under PowerShell PoolMember Ratio and Priority.27KViews0likes3CommentsAccessing TCP Options from iRules

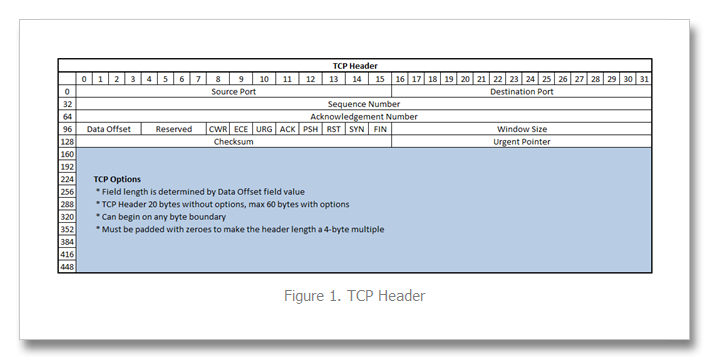

I’ve written several articles on the TCP profile and enjoy digging into TCP. It’s a beast, and I am constantly re-learning the inner workings. Still etched in my visual memory map, however, is the TCP header format, shown in Figure 1 below. Since 9.0 was released, TCP payload data (that which comes after the header) has been consumable in iRules via the TCP::payload and the port information has been available in the contextual commands TCP::local_port/TCP::remote_port and of course TCP::client_port/TCP::server_port. Options, however, have been inaccessible. But beginning with version 10.2.0-HF2, it is now possible to retrieve data from the options fields. Preparing the BIG-IP Prior to version 11.0, it was necessary to set a bigpipe database key with the option (or options) of interest: bigpipe db Rules.Tcpoption.settings [option, first|last], [option, first|last] In version 11.0 and forward, the DB keys are no more and you need to create a tcp profile with the these options defined, like so: ltm profile tcp tcp_opt { app-service none tcp-options "{option first|last} {option first|last}" } The option is an integer between 2 and 255, and the first/last setting indicates whether the system will retain the first or last instance of the specified option. Once that key is set, you’ll need to do a bigstart restart for it to take (warning: service impacting). Note also that the LTM only collects option data starting with the ACK of a connection. The initial SYN is ignored even if you select the first keyword. This is done to prevent a SYN flood attack (in keeping with SYN-cookies). A New iRules Command: TCP::option The TCP::option command has the following syntax: TCP::option get <option> v11 Additions/Changes: TCP::option set <option number> <value> <next|all> TCP::option noset <option number> Pretty simple, no? So now that you can access them, what fun can be had? Real World Scenario: Akamai In Akamai’s IPA and SXL product lines, they support client IP visibility by embedding a version number (one byte) and an IPv4 address (four bytes) as part of their overlay path feature in tcp option number 28. To access this data, we first set the database key: tmsh create ltm profile tcp tcp_opt tcp-options "{28 first}" Now, the iRule utilizing the TCP::option command: when CLIENT_ACCEPTED { set opt28 [TCP::option get 28] if { [string length $opt28] == 5 } { binary scan $opt28 cH8 ver addr if { $ver != 1 } { log local0. "Unsupported Akamai version: $ver" } else { scan $addr "%2x%2x%2x%2x" ip1 ip2 ip3 ip4 set optaddr "$ip1.$ip2.$ip3.$ip4" } } } when HTTP_REQUEST { if { [info exists optaddr] } { HTTP::header insert "X-Forwarded-For" $optaddr } } The Akamai version should be one, so we log if not. Otherwise, we take the address (stored in the variable addr in hex) and scan it to get the decimal equivalents to build the address for inserting in the X-Forwarded-For header. Cool, right? Also cool—along with the new TCP::option command , an extension was made to the IP::addr command to parse binary fields into a dotted decimal IP address. This extension is also available beginning in 10.2.0-HF2, but extended in 11.0. Here’s the syntax: IP::addr parse [-ipv4 | -ipv6 [swap]] <binary field> [<offset>] So for example, if you had an IPv6 address in option 28 with a 1 byte offset, you would parse that like: log local0. "IP::addr parse IPv6 output: [IP::addr parse -ipv6 [TCP::option get 28] 1]" ## Log Result ## May 27 21:51:34 ltm13 info tmm[27207]: Rule /Common/tcpopt_test <CLIENT_ACCEPTED>: IP::addr parse IPv6 output: 2601:1930:bd51:a3e0:20cd:a50b:1cc1:ad13 But in the context of our TCP option, we have 5-bytes of data with the first byte not mattering in the context of an address, so we get at the address with this: set optaddr [IP::addr parse -ipv4 [TCP::option get 28] 1] This cleans up the rule a bit: when CLIENT_ACCEPTED { set opt28 [TCP::option get 28] if { [string length $opt28] == 5 } { binary scan $opt c ver if { $ver != 1 } { log local0. "Unsupported Akamai version: $ver" } else { set optaddr [IP::addr parse -ipv4 $opt28 1] } } } when HTTP_REQUEST { if { [info exists optaddr] } { HTTP::header insert "X-Forwarded-For" $optaddr } } No need to store the address in the first binary scan and no need for the scan command at all so I eliminated those. Setting a forwarding header is not the only thing we can do with this data. It could also be shipped off to a logging server, or used as a snat address (assuming the server had either a default route to the BIG-IP, or specific routes for the customer destinations, which is doubtful). Logging is trivial, shown below with the log command. The HSL commands could be used in lieu of log if sending off-box to a log server. when CLIENT_ACCEPTED { set opt28 [TCP::option get 28] if { [string length $opt28] == 5 } { binary scan $opt c ver if { $ver != 1 } { log local0. "Unsupported Akamai version: $ver" } else { set optaddr [IP::addr parse -ipv4 $opt28 1] log local0. "Client IP extracted from Akamai TCP option is $optaddr" } } } If setting the provided IP as a snat address, you’ll want to make sure it’s a valid IP address before doing so. You can use the TCL catch command and IP::addr to perform this check as seen in the iRule below: when CLIENT_ACCEPTED { set addrs [list \ "192.168.1.1" \ "256.168.1.1" \ "192.256.1.1" \ "192.168.256.1" \ "192.168.1.256" \ ] foreach x $addrs { if { [catch {IP::addr $x mask 255.255.255.255}] } { log local0. "IP $x is invalid" } else { log local0. "IP $x is valid" } } } The output of this iRule: <CLIENT_ACCEPTED>: IP 192.168.1.1 is valid <CLIENT_ACCEPTED>: IP 256.168.1.1 is invalid <CLIENT_ACCEPTED>: IP 192.256.1.1 is invalid <CLIENT_ACCEPTED>: IP 192.168.256.1 is invalid <CLIENT_ACCEPTED>: IP 192.168.1.256 is invalid Adding this logic into a functional rule with snat: when CLIENT_ACCEPTED { set opt28 [TCP::option get 28] if { [string length $opt28] == 5 } { binary scan $opt c ver if { $ver != 1 } { log local0. "Unsupported Akamai version: $ver" } else { set optaddr [IP::addr parse -ipv4 $opt28 1] if { [catch {IP::addr $x mask 255.255.255.255}] } { log local0. "$optaddr is not a valid address" snat automap } else { log local0. "Akamai inserted Client IP is $optaddr. Setting as snat address." snat $optaddr } } } Alternative TCP Option Use Cases The Akamai solution shows an application implementation taking advantage of normally unused space in TCP headers. There are, however, defined uses for several option “kind” numbers. The list is available here: http://www.iana.org/assignments/tcp-parameters/tcp-parameters.xml. Some options that might be useful in troubleshooting efforts: Opkind 2 – Max Segment Size Opkind 3 – Window Scaling Opkind 5 – Selective Acknowledgements Opkind 8 – Timestamps Of course, with tcpdump you get all this plus the context of other header information and data, but hey, another tool in the toolbox, right? Addendum I've been working with F5 SE Leonardo Simon on on additional examples I wanted to share here that uses option 28 or 253 to extract an IPv6 address if the version is 34 and otherwise extracts an IPv4 address if the version is 1 or 2. Option 28 when CLIENT_ACCEPTED { set opt28 [TCP::option get 28] binary scan $opt28 c ver #log local0. "version: $ver" if { $ver == 34 } { set optaddr [IP::addr parse -ipv6 $opt28 1] log local0. "opt28 ipv6 address: $optaddr" } elseif { $ver == 1 || $ver == 2 } { set optaddr [IP::addr parse -ipv4 $opt28 1] log local0. "opt28 ipv4 address: $optaddr" } } Option 253 when CLIENT_ACCEPTED { set opt253 [TCP::option get 253] binary scan $opt253 c ver #log local0. "version: $ver" if { $ver == 34 } { set optaddr [IP::addr parse -ipv6 $opt253 1] log local0. "opt253 ipv6 address: $optaddr" } elseif { $ver == 1 || $ver == 2 } { set optaddr [IP::addr parse -ipv4 $opt253 1] log local0. "opt253 ipv4 address: $optaddr" } }17KViews2likes10CommentsAPM Configuration to Support Duo MFA using iRule

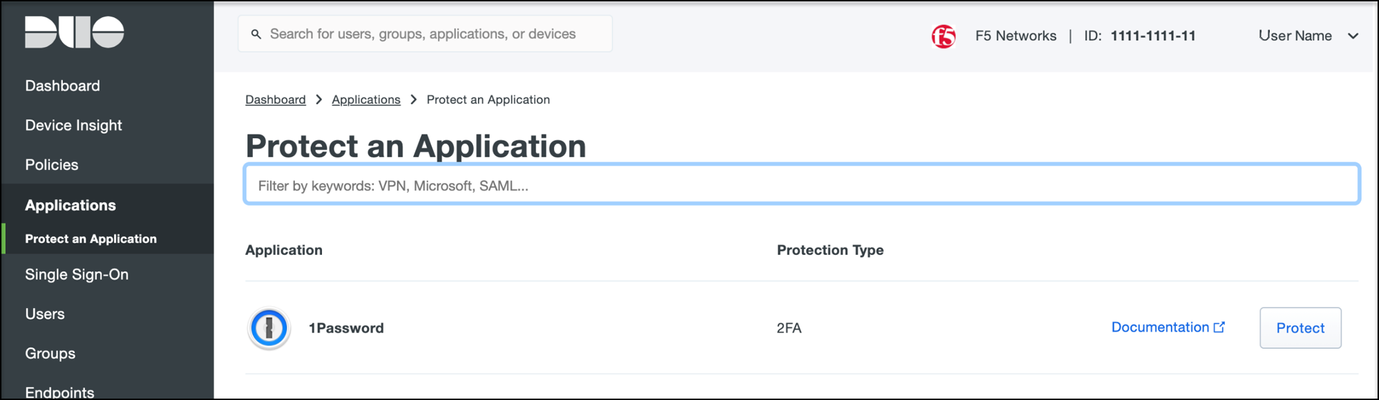

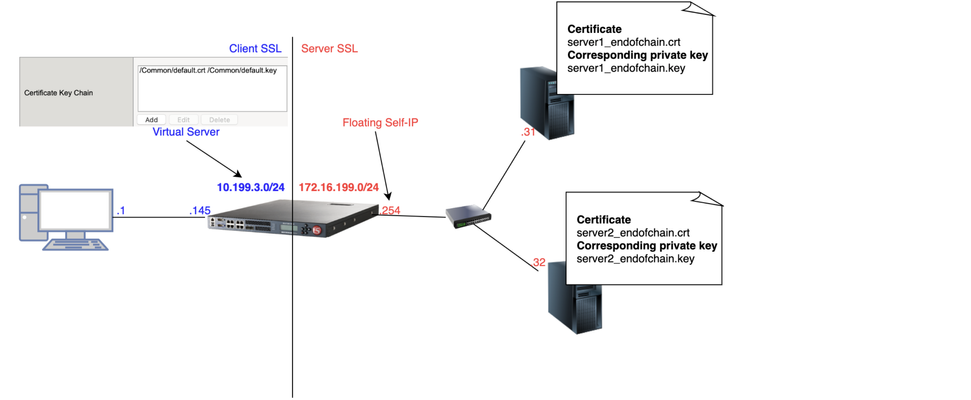

Overview BIG-IP APM has supported Duo as an MFA provider for a long time with RADIUS-based integration. Recently, Duo has added support for Universal Prompt that uses Open ID Connect (OIDC) protocol to provide two-factor authentication. To integrate APM as an OIDC client and resource server, and Duo as an Identity Provider (IdP), Duo requires the user’s logon name and custom parameters to be sent for Authentication and Token request. This guide describes the configuration required on APM to enable Duo MFA integration using an iRule. iRules addresses the custom parameter challenges by generating the needed custom values and saving them in session variables, which the OAuth Client agent then uses to perform MFA with Duo. This integration procedure is supported on BIG-IP versions 13.1, 14.1x, 15.1x, and 16.x. To integrate Duo MFA with APM, complete the following tasks: 1. Choose deployment type: Per-request or Per-session 2. Configure credentials and policies for MFA on the DUO web portal 3. Create OAuth objects on the BIG-IP system 4. Configure the iRule 5. Create the appropriate access policy/policies on the BIG-IP system 6. Apply policy/policies and iRule to the APM virtual server Choose deployment type APM supports two different types of policies for performing authentication functions. Per-session policies: Per-session policies provide authentication and authorization functions that occur only at the beginning of a user’s session. These policies are compatible with most APM use cases such as VPN, Webtop portal, Remote Desktop, federation IdP, etc. Per-request policies: Per-request policies provide dynamic authentication and authorization functionality that may occur at any time during a user’s session, such as step-up authentication or auditing functions only for certain resources. These policies are only compatible with Identity Aware Proxy and Web Access Management use cases and cannot be used with VPN or webtop portals. This guide contains information about setting up both policy types. Prerequisites Ensure the BIG-IP system has DNS and internet connectivity to contact Duo directly for validating the user's OAuth tokens. Configure credentials and policies for MFA on Duo web portal Before you can protect your F5 BIG-IP APM Web application with Duo, you will first need to sign up for a Duo account. 1. Log in to the Duo Admin Panel and navigate to Applications. 2. Click Protect an application. Figure 1: Duo Admin Panel – Protect an Application 3. Locate the entry for F5 BIG-IP APM Web in the applications list and click Protect to get the Client ID, Client secret, and API hostname. You will need this information to configure objects on APM. Figure 2: Duo Admin Panel – F5 BIG-IP APM Web 4. As DUO is used as a secondary authentication factor, the user’s logon name is sent along with the authentication request. Depending on your security policy, you may want to pre-provision users in Duo, or you may allow them to self-provision to set their preferred authentication type when they first log on. To add users to the Duo system, navigate to the Dashboard page and click the Add New...-> Add User button. A Duo username should match the user's primary authentication username. Refer to the https://duo.com/docs/enrolling-users link for the different methods of user enrollment. Refer to Duo Universal Prompt for additional information on Duo’s two-factor authentication. Create OAuth objects on the BIG-IP system Create a JSON web key When APM is configured to act as an OAuth client or resource server, it uses JSON web keys (JWKs) to validate the JSON web tokens it receives from Duo. To create a JSON web key: 1. On the Main tab, select Access > Federation > JSON Web Token > Key Configuration. The Key Configuration screen opens. 2. To add a new key configuration, click Create. 3. In the ID and Shared Secret fields, enter the Client ID and Client Secret values respectively obtained from Duo when protecting the application. 4. In the Type list, select the cryptographic algorithm used to sign the JSON web key. Figure 3: Key Configuration screen 5. Click Save. Create a JSON web token As an OAuth client or resource server, APM validates the JSON web tokens (JWT) it receives from Duo. To create a JSON web token: 1. On the Main tab, select Access > Federation > JSON Web Token > Token Configuration. The Token Configuration screen opens. 2. To add a new token configuration, click Create. 3. In the Issuer field, enter the API hostname value obtained from Duo when protecting the application. 4. In the Signing Algorithms area, select from the Available list and populate the Allowed and Blocked lists. 5. In the Keys (JWK) area, select the previously configured JSON web key in the allowed list of keys. Figure 4: Token Configuration screen 6. Click Save. Configure Duo as an OAuth provider APM uses the OAuth provider settings to get URIs on the external OAuth authorization server for JWT web tokens. To configure an OAuth provider: 1. On the Main tab, select Access > Federation > OAuth Client / Resource Server > Provider. The Provider screen opens. 2. To add a provider, click Create. 3. In the Name field, type a name for the provider. 4. From the Type list, select Custom. 5. For Token Configuration (JWT), select a configuration from the list. 6. In the Authentication URI field, type the URI on the provider where APM should redirect the user for authentication. The hostname is the same as the API hostname in the Duo application. 7. In the Token URI field, type the URI on the provider where APM can get a token. The hostname is the same as the API hostname in the Duo application. Figure 5: OAuth Provider screen 8. Click Finished. Configure Duo server for APM The OAuth Server settings specify the OAuth provider and role that Access Policy Manager (APM) plays with that provider. It also sets the Client ID, Client Secret, and Client’s SSL certificates that APM uses to communicate with the provider. To configure a Duo server: 1. On the Main tab, select Access > Federation > OAuth Client / Resource Server > OAuth Server. The OAuth Server screen opens. 2. To add a server, click Create. 3. In the Name field, type a name for the Duo server. 4. From the Mode list, select how you want the APM to be configured. 5. From the Type list, select Custom. 6. From the OAuth Provider list, select the Duo provider. 7. From the DNS Resolver list, select a DNS resolver (or click the plus (+) icon, create a DNS resolver, and then select it). 8. In the Token Validation Interval field, type a number. In a per-request policy subroutine configured to validate the token, the subroutine repeats at this interval or the expiry time of the access token, whichever is shorter. 9. In the Client Settings area, paste the Client ID and Client secret you obtained from Duo when protecting the application. 10. From the Client's ServerSSL Profile Name, select a server SSL profile. Figure 6: OAuth Server screen 11. Click Finished. Configure an auth-redirect-request and a token-request Requests specify the HTTP method, parameters, and headers to use for the specific type of request. An auth-redirect-request tells Duo where to redirect the end-user, and a token-request accesses the authorization server for obtaining an access token. To configure an auth-redirect-request: 1. On the Main tab, select Access > Federation > OAuth Client / Resource Server > Request. The Request screen opens. 2. To add a request, click Create. 3. In the Name field, type a name for the request. 4. For the HTTP Method, select GET. 5. For the Type, select auth-redirect-request. 6. As shown in Figure 7, specify the list of GET parameters to be sent: request parameter with value depending on the type of policy For per-request policy: %{subsession.custom.jwt_duo} For per-session policy: %{session.custom.jwt_duo} client_id parameter with type client-id response_type parameter with type response-type Figure 7: Request screen with auth-redirect-request (Use “subsession.custom…” for Per-request or “session.custom…” for Per-session) 7. Click Finished. To configure a token-request: 1. On the Main tab, select Access > Federation > OAuth Client / Resource Server > Request. The Request screen opens. 2. To add a request, click Create. 3. In the Name field, type a name for the request. 4. For the HTTP Method, select POST. 5. For the Type, select token-request. 6. As shown in Figure 8, specify the list of POST parameters to be sent: client_assertion parameter with value depending on the type of policy For per-request policy: %{subsession.custom.jwt_duo_token} For per-session policy: %{session.custom.jwt_duo_token} client_assertion_type parameter with value urn:ietf:params:oauth:client-assertion-type:jwt-bearer grant_type parameter with type grant-type redirect_uri parameter with type redirect-uri Figure 8: Request screen with token-request (Use “subsession.custom…” for Per-request or “session.custom…” for Per-session) 7. Click Finished. Configure the iRule iRules gives you the ability to customize and manage your network traffic. Configure an iRule that creates the required sub-session variables and usernames for Duo integration. Note: This iRule has sections for both per-request and per-session policies and can be used for either type of deployment. To configure an iRule: 1. On the Main tab, click Local Traffic > iRules. 2. To create an iRules, click Create. 3. In the Name field, type a name for the iRule. 4. Copy the sample code given below and paste it in the Definition field. Replace the following variables with values specific to the Duo application: <Duo Client ID> in the getClientId function with Duo Application ID. <Duo API Hostname> in the createJwtToken function with API Hostname. For example, https://api-duohostname.com/oauth/v1/token. <JSON Web Key> in the getJwkName function with the configured JSON web key. Note: The iRule ID here is set as JWT_CREATE. You can rename the ID as desired. You specify this ID in the iRule Event agent in Visual Policy Editor. Note: The variables used in the below example are global, which may affect your performance. Refer to the K95240202: Understanding iRule variable scope article for further information on global variables, and determine if you use a local variable for your implementation. proc randAZazStr {len} { return [subst [string repeat {[format %c [expr {int(rand() * 26) + (rand() > .5 ? 97 : 65)}]]} $len]] } proc getClientId { return <Duo Client ID> } proc getExpiryTime { set exp [clock seconds] set exp [expr $exp + 900] return $exp } proc getJwtHeader { return "{\"alg\":\"HS512\",\"typ\":\"JWT\"}" } proc getJwkName { return <JSON Web Key> #e.g. return "/Common/duo_jwk" } proc createJwt {duo_uname} { set header [call getJwtHeader] set exp [call getExpiryTime] set client_id [call getClientId] set redirect_uri "https://" set redirect [ACCESS::session data get "session.server.network.name"] append redirect_uri $redirect append redirect_uri "/oauth/client/redirect" set payload "{\"response_type\": \"code\",\"scope\":\"openid\",\"exp\":${exp},\"client_id\":\"${client_id}\",\"redirect_uri\":\"${redirect_uri}\",\"duo_uname\":\"${duo_uname}\"}" set jwt_duo [ ACCESS::oauth sign -header $header -payload $payload -alg HS512 -key [call getJwkName] ] return $jwt_duo } proc createJwtToken { set header [call getJwtHeader] set exp [call getExpiryTime] set client_id [call getClientId] set aud "<Duo API Hostname>/oauth/v1/token" #Example: set aud https://api-duohostname.com/oauth/v1/token set jti [call randAZazStr 32] set payload "{\"sub\": \"${client_id}\",\"iss\":\"${client_id}\",\"aud\":\"${aud}\",\"exp\":${exp},\"jti\":\"${jti}\"}" set jwt_duo [ ACCESS::oauth sign -header $header -payload $payload -alg HS512 -key [call getJwkName] ] return $jwt_duo } when ACCESS_POLICY_AGENT_EVENT { set irname [ACCESS::policy agent_id] if { $irname eq "JWT_CREATE" } { set ::duo_uname [ACCESS::session data get "session.logon.last.username"] ACCESS::session data set session.custom.jwt_duo [call createJwt $::duo_uname] ACCESS::session data set session.custom.jwt_duo_token [call createJwtToken] } } when ACCESS_PER_REQUEST_AGENT_EVENT { set irname [ACCESS::perflow get perflow.irule_agent_id] if { $irname eq "JWT_CREATE" } { set ::duo_uname [ACCESS::session data get "session.logon.last.username"] ACCESS::perflow set perflow.custom [call createJwt $::duo_uname] ACCESS::perflow set perflow.scratchpad [call createJwtToken] } } Figure 9: iRule screen 5. Click Finished. Create the appropriate access policy/policies on the BIG-IP system Per-request policy Skip this section for a per-session type deployment The per-request policy is used to perform secondary authentication with Duo. Configure the access policies through the access menu, using the Visual Policy Editor. The per-request access policy must have a subroutine with an iRule Event, Variable Assign, and an OAuth Client agent that requests authorization and tokens from an OAuth server. You may use other per-request policy items such as URL branching or Client Type to call Duo only for certain target URIs. Figure 10 shows a subroutine named duosubroutine in the per-request policy that handles Duo MFA authentication. Figure 10: Per-request policy in Visual Policy Editor Configuring the iRule Event agent The iRule Event agent specifies the iRule ID to be executed for Duo integration. In the ID field, type the iRule ID as configured in the iRule. Figure 11: iRule Event agent in Visual Policy Editor Configuring the Variable Assign agent The Variable Assign agent specifies the variables for token and redirect requests and assigns a value for Duo MFA in a subroutine. This is required only for per-request type deployment. Add sub-session variables as custom variables and assign their custom Tcl expressions as shown in Figure 12. subsession.custom.jwt_duo_token = return [mcget {perflow.scratchpad}] subsession.custom.jwt_duo = return [mcget {perflow.custom}] Figure 12: Variable Assign agent in Visual Policy Editor Configuring the OAuth Client agent An OAuth Client agent requests authorization and tokens from the Duo server. Specify OAuth parameters as shown in Figure 13. In the Server list, select the Duo server to which the OAuth client directs requests. In the Authentication Redirect Request list, select the auth-redirect-request configured earlier. In the Token Request list, select the token-request configured earlier. Some deployments may not need the additional information provided by OpenID Connect. You could, in that case, disable it. Figure 13: OAuth Client agent in Visual Policy Editor Per-session policy Configure the Per Session policy as appropriate for your chosen deployment type. Per-request: The per-session policy must contain at least one logon page to set the username variable in the user’s session. Preferably it should also perform some type of primary authentication. This validated username is used later in the per-request policy. Per-session: The per-session policy is used for all authentication. A per-request policy is not used. Figures 14a and 14b show a per-session policy that runs when a client initiates a session. Depending on the actions you include in the access policy, it can authenticate the user and perform actions that populate session variables with data for use throughout the session. Figure 14a: Per-session policy in Visual Policy Editor performs both primary authentication and Duo authentication (for per-session use case) Figure 14b: Per-session policy in Visual Policy Editor performs primary authentication only (for per-request use case) Apply policy/policies and iRule to the APM virtual server Finally, apply the per-request policy, per-session policy, and iRule to the APM virtual server. You assign iRules as a resource to the virtual server that users connect. Configure the virtual server’s default pool to the protected local web resource. Apply policy/policies to the virtual server Per-request policy To attach policies to the virtual server: 1. On the Main tab, click Local Traffic > Virtual Servers. 2. Select the Virtual Server. 3. In the Access Policy section, select the policy you created. 4. Click Finished. Figure 15: Access Policy section in Virtual Server (per-request policy) Per-session policy Figure 16 shows the Access Policy section in Virtual Server when the per-session policy is deployed. Figure 16: Access Policy section in Virtual Server (per-session policy) Apply iRule to the virtual server To attach the iRule to the virtual server: 1. On the Main tab, click Local Traffic > Virtual Servers. 2. Select the Virtual Server. 3. Select the Resources tab. 4. Click Manage in the iRules section. 5. Select an iRule from the Available list and add it to the Enabled list. 6. Click Finished.15KViews10likes47CommentsAPM-DHCP Access Policy Example and Detailed Instructions

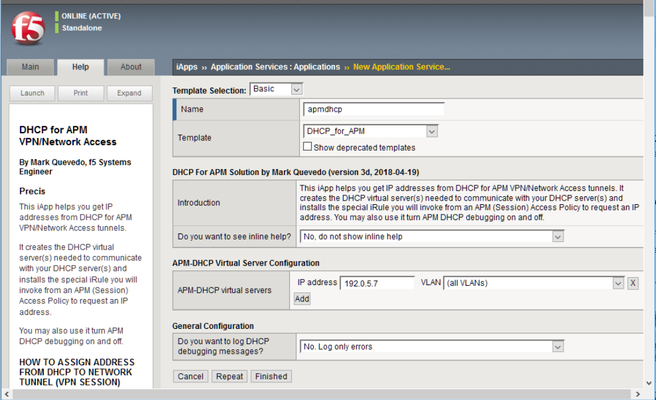

Prepared with Mark Quevedo, F5 Principal Software Engineer May, 2020 Sectional Navigation links Important Version Notes || Installation Guide || What Is Going On Here? || Parameters You Set In Your APM Access Policy || Results of DHCP Request You Use in Access Policy || Compatibility Tips and Troubleshooting Introduction Ordinarily you assign an IP address to the “inside end” of an APM Network Tunnel (full VPN connection) from an address Lease Pool, from a static list, or from an LDAP or RADIUS attribute. However, you may wish to assign an IP address you get from a DHCP server. Perhaps the DHCP server manages all available client addresses. Perhaps it handles dynamic DNS for named client workstations. Or perhaps the DHCP server assigns certain users specific IP addresses (for security filtering). Your DHCP server may even assign client DNS settings as well as IP addresses. APM lacks DHCP address assignment support (though f5's old Firepass VPN had it ). We will use f5 iRules to enable DHCP with APM. We will send data from APM session variables to the DHCP server so it can issue the “right” IP address to each VPN tunnel based on user identity, client info, etc. Important Version Notes Version v4c includes important improvements and bug fixes. If you are using an older version, you should upgrade. Just import the template with “Overwrite existing templates” checked, then “reconfigure” your APM-DHCP Application Service—you can simply click “Finished” without changing any options to update the iRules in place. Installation Guide First install the APM-DHCP iApp template (file DHCP_for_APM.tmpl). Create a new Application Service as shown (choose any name you wish). Use the iApp to manage the APM-DHCP virtual servers you need. (The iApp will also install necessary iRules.) You must define at least one APM-DHCP virtual server to receive and send DHCP packets. Usually an APM-DHCP virtual server needs an IP address on the subnet on which you expect your DHCP server(s) to assign client addresses. You may define additional APM-DHCP virtual servers to request IP addresses on additional subnets from DHCP. However, if your DHCP server(s) support subnet-selection (see session.dhcp.subnet below) then you may only need a single APM-DHCP virtual server and it may use any IP that can talk to your DHCP server(s). It is best to give each APM-DHCP virtual server a unique IP address but you may use an BIG-IP Self IP as per SOL13896 . Ensure your APM and APM-DHCP virtual servers are in the same TMOS Traffic Group (if that is impossible set TMOS db key tmm.sessiondb.match_ha_unit to false). Ensure that your APM-DHCP virtual server(s) and DHCP server(s) or relay(s) are reachable via the same BIG-IP route domain. Specify in your IP addresses any non-zero route-domains you are using (e.g., “192.168.0.20%3”)—this is essential. (It is not mandatory to put your DHCP-related Access Policy Items into a Macro—but doing so makes the below screenshot less wide!) Into your APM Access Policy, following your Logon Page and AD Auth (or XYZ Auth) Items (etc.) but before any (Full/Advanced/simple) Resource Assign Item which assigns the Network Access Resource (VPN), insert both Machine Info and Windows Info Items. (The Windows Info Item will not bother non-Windows clients.) Next insert a Variable Assign Item and name it “DHCP Setup”. In your “DHCP Setup” Item, set any DHCP parameters (explained below) that you need as custom session variables. You must set session.dhcp.servers. You must also set session.dhcp.virtIP to the IP address of an APM-DHCP virtual server (either here or at some point before the “DHCP_Req” iRule Event Item). Finally, insert an iRule Event Item (name it “DHCP Req”) and set its Agent ID to DHCP_req. Give it a Branch Rule “Got IP” using the expression “expr {[mcget {session.dhcp.address}] ne ""}” as illustrated. You must attach iRule ir-apm-policy-dhcp to your APM virtual server (the virtual server to which your clients connect). Neither the Machine Info Item nor the Windows Info Item is mandatory. However, each gathers data which common DHCP servers want to see. By default DHCP_req will send that data, when available, to your DHCP servers. See below for advanced options: DHCP protocol settings, data sent to DHCP server(s), etc. Typically your requests will include a user identifier from session.dhcp.subscriber_ID and client (machine or connection) identifiers from other parameters. The client IP address assigned by DHCP will appear in session.dhcp.address. By default, the DHCP_req iRule Event handler will also copy that IP address into session.requested.clientip where the Network Access Resource will find it. You may override that behavior by setting session.dhcp.copy2var (see below). Any “vendor-specific information” supplied by the DHCP server 1 (keyed by the value of session.dhcp.vendor_class) will appear in variables session.dhcp.vinfo.N where N is a tag number (1-254). You may assign meanings to tag numbers. Any DNS parameters the DHCP server supplies 2 are in session.dhcp.dns_servers and session.dhcp.dns_suffix. If you want clients to use those DNS server(s) and/or DNS default search domain, put the name of every Network Access Resource your Access Policy may assign to the client into the session.dhcp.dns_na_list option. NB: this solution does not renew DHCP address leases automatically, but it does release IP addresses obtained from DHCP after APM access sessions terminate. 3 Please configure your DHCP server(s) for an address lease time longer than your APM Maximum Session Timeout. Do not configure APM-DHCP virtual servers in different BIG-IP route domains so they share any part of a DHCP client IP range (address lease pool). For example, do not use two different APM-DHCP virtual servers 10.1.5.2%6 and 10.1.5.2%8 with one DHCP client IP range 10.1.5.10—10.1.5.250. APM-DHCP won’t recognize when two VPN sessions in different route domains get the same client IP from a non-route-domain-aware DHCP server, so it may not release their IP’s in proper sequence. This solution releases DHCP address leases for terminated APM sessions every once in a while, when a new connection comes in to the APM virtual server (because the BIG IP only executes the relevant iRules on the “event” of each new connection). When traffic is sparse (say, in the middle of the night) there may be some delay in releasing addresses for dead sessions. If ever you think this solution isn’t working properly, be sure to check the BIG IP’s LTM log for warning and error messages. DHCP Setup (a Variable Assign Item) will look like: Put the IP of (one of) your APM-DHCP virtual server(s) in session.dhcp.virtIP. Your DHCP server list may contain addresses of DHCP servers or relays. You may list a directed broadcast address (e.g., “172.16.11.255”) instead of server addresses but that will generate extra network chatter. To log information about DHCP processing for the current APM session you may set variable session.dhcp.debug to true (don’t leave it enabled when not debugging). DHCP Req (an iRule Event Item) will look like: Note DHCP Req branch rules: If DHCP fails, you may wish to warn the user: (It is not mandatory to Deny access after DHCP failure—you may substitute another address into session.requested.clientip or let the Network Access Resource use a Lease Pool.) What is going on here? We may send out DHCP request packets easily enough using iRules’ SIDEBAND functions, but it is difficult to collect DHCP replies using SIDEBAND. 4 Instead, we must set up a distinct LTM virtual server to receive DHCP replies on UDP port 67 at a fixed address. We tell the DHCP server(s) we are a DHCP relay device so replies will come back to us directly (no broadcasting). 5 For a nice explanation of the DHCP request process see http://technet.microsoft.com/en-us/library/cc940466.aspx. At this time, we support only IPv4, though adding IPv6 would require only toil, not genius. By default, a DHCP server will assign a client IP on the subnet where the DHCP relay device (that is, your APM-DHCP virtual server) is homed. For example, if your APM-DHCP virtual server’s address were 172.30.4.2/22 the DHCP server would typically lease out a client IP on subnet 172.30.4.0. Moreover, the DHCP server will communicate directly with the relay-device IP so appropriate routes must exist and firewall rules must permit. If you expect to assign client IP’s to APM tunnel endpoints on multiple subnets you may need multiple APM-DHCP virtual servers (one per subnet). Alternatively, some but not all DHCP servers 6 support the rfc3011 “subnet selection” or rfc3527 “subnet/link-selection sub-option” so you can request a client IP on a specified subnet using a single APM-DHCP virtual server (relay device) IP which is not homed on the target subnet but which can communicate easily with the DHCP server(s): see parameter session.dhcp.subnet below. NOTE: The subnet(s) on which APM Network Access (VPN) tunnels are homed need not exist on any actual VLAN so long as routes to any such subnet(s) lead to your APM (BIG-IP) device. Suppose you wish to support 1000 simultaneous VPN connections and most of your corporate subnets are /24’s—but you don’t want to set up four subnets for VPN users. You could define a virtual subnet—say, 172.30.4.0/22—tell your DHCP server(s) to assign addresses from 172.30.4.3 thru 172.30.7.254 to clients, put an APM-DHCP virtual server on 172.30.4.2, and so long as your Layer-3 network knows that your APM BIG-IP is the gateway to 172.30.4.0/22, you’re golden. When an APM Access Policy wants an IP address from DHCP, it will first set some parameters into APM session variables (especially the IP address(es) of one or more DHCP server(s)) using a Variable Assign Item, then use an iRule Event Item to invoke iRule Agent DHCP_req in ir apm policy dhcp. DHCP_req will send DHCPDISCOVERY packets to the specified DHCP server(s). The DHCP server(s) will reply to those packets via the APM-DHCP virtual-server, to which iRule ir apm dhcp must be attached. That iRule will finish the 4-packet DHCP handshake to lease an IP address. DHCP_req handles timeouts/retransmissions and copies the client IP address assigned by the DHCP server into APM session variables for the Access Policy to use. We use the APM Session-ID as the DHCP transaction-ID XID and also (by default) in the value of chaddr to avert collisions and facilitate log tracing. Parameters You Set In Your APM Access Policy Required Parameters session.dhcp.virtIP IP address of an APM-DHCP virtual-server (on UDP port 67) with iRule ir-apm-dhcp. This IP must be reachable from your DHCP server(s). A DHCP server will usually assign a client IP on the same subnet as this IP, though you may be able to override that by setting session.dhcp.subnet. You may create APM-DHCP virtual servers on different subnets, then set session.dhcp.virtIP in your Access Policy (or branch) to any one of them as a way to request a client IP on a particular subnet. No default. Examples (“Custom Expression” format): expr {"172.16.10.245"} or expr {"192.0.2.7%15"} session.dhcp.servers A TCL list of one or more IP addresses for DHCP servers (or DHCP relays, such as a nearby IP router). When requesting a client IP address, DHCP packets will be sent to every server on this list. NB: IP broadcast addresses like 10.0.7.255 may be specified but it is better to list specific servers (or relays). Default: none. Examples (“Custom Expression” format): expr {[list "10.0.5.20" "10.0.7.20"]} or expr {[list "172.30.1.20%5"]} Optional Parameters (including some DHCP Options) NOTE: when you leave a parameter undefined or empty, a suitable value from the APM session environment may be substituted (see details below). The defaults produce good results in most cases. Unless otherwise noted, set parameters as Text values. To exclude a parameter entirely set its Text value to '' [two ASCII single-quotes] (equivalent to Custom Expression return {''} ). White-space and single-quotes are trimmed from the ends of parameter values, so '' indicates a nil value. It is best to put “Machine Info” and “Windows Info” Items into your Access Policy ahead of your iRule Event “DHCP_req” Item (Windows Info is not available for Mac clients beginning at version 15.1.5 as they are no longer considered safe). session.dhcp.debug Set to 1 or “true” to log DHCP-processing details for the current APM session. Default: false. session.dhcp.firepass Leave this undefined or empty (or set to “false”) to use APM defaults (better in nearly all cases). Set to “true” to activate “Firepass mode” which alters the default values of several other options to make DHCP messages from this Access Policy resemble messages from the old F5 Firepass product. session.dhcp.copy2var Leave this undefined or empty (the default) and the client IP address from DHCP will be copied into the Access Policy session variable session.requested.clientip, thereby setting the Network Access (VPN) tunnel’s inside IP address. To override the default, name another session variable here or set this to (Text) '' to avert copying the IP address to any variable. session.dhcp.dns_na_list To set the client's DNS server(s) and/or DNS default search domain from DHCP, put here a Custom Expression TCL list of the name(s) of the Network Access Resource(s) you may assign to the client session. Default: none. Example: expr {[list "/Common/NA" "/Common/alt-NA"]} session.dhcp.broadcast Set to “true” to set the DHCP broadcast flag (you almost certainly should not use this). session.dhcp.vendor_class Option 60 A short string (32 characters max) identifying your VPN server. Default: “f5 APM”. Based on this value the DHCP server may send data to session.dhcp.vinfo.N (see below). session.dhcp.user_class Option 77 A Custom Expression TCL list of strings by which the DHCP server may recognize the class of the client device (e.g., “kiosk”). Default: none (do not put '' here). Example: expr {[list "mobile" "tablet"]} session.dhcp.client_ID Option 61 A unique identifier for the remote client device. Microsoft Windows DHCP servers expect a representation of the MAC address of the client's primary NIC. If left undefined or empty the primary MAC address discovered by the Access Policy Machine Info Item (if any) will be used. If no value is set and no Machine Info is available then no client_ID will be sent and the DHCP server will distinguish clients by APM-assigned ephemeral addresses (in session.dhcp.hwcode). If you supply a client_ID value you may specify a special code, a MAC address, a binary string, or a text string. Set the special code “NONE” (or '') to avoid sending any client_ID, whether Machine Info is available or not. Set the special code “XIDMAC” to send a unique MAC address for each APM VPN session—that will satisfy DHCP servers desiring client_ID‘s while averting IP collisions due to conflicting Machine Info MAC’s like Apple Mac Pro’s sometimes provide. A value containing twelve hexadecimal digits, possibly separated by hyphens or colons into six groups of two or by periods into three groups of four, will be encoded as a MAC address. Values consisting only of hexadecimal digits, of any length other than twelve hexits, will be encoded as a binary string. A value which contains chars other than [0-9A-Fa-f] and doesn't seem to be a MAC address will be encoded as a text string. You may enclose a text string in ASCII single-quotes (') to avert interpretation as hex/binary (the quotes are not part of the text value). On the wire, MAC-addresses and text-strings will be prefixed by type codes 0x01 and 0x00 respectively; if you specify a binary string (in hex format) you must include any needed codes. Default: client MAC from Machine Info, otherwise none. Example (Text value): “08-00-2b-2e-d8-5e”. session.dhcp.hostname Option 12 A hostname for the client. If left undefined or empty, the short computer name discovered by the APM Access Policy Windows Info Item (if any) will be used. session.dhcp.subscriber_ID Sub-option 6 of Option 82 An identifier for the VPN user. If undefined or empty, the value of APM session variable session.logon.last.username will be used (generally the user's UID or SAMAccountName). session.dhcp.circuit_ID Sub-option 1 of Option 82 An identifier for the “circuit” or network endpoint to which client connected. If left undefined or empty, the IP address of the (current) APM virtual server will be used. session.dhcp.remote_ID Sub-option 2 of Option 82 An identifier for the client's end of the connection. If left undefined or empty, the client’s IP address + port will be used. session.dhcp.subnet Option 118 Sub-option 5 of Option 82 The address (e.g., 172.16.99.0) of the IP subnet on which you desire a client address. With this option you may home session.dhcp.virtIP on another (more convenient) subnet. MS Windows Server 2016 added support for this but some other DHCP servers still lack support. Default: none. session.dhcp.hwcode Controls content of BOOTP htype, hlen, and chaddr fields. If left undefined or empty, a per-session value optimal in most situations will be used (asserting that chaddr, a copy of XID, identifies a “serial line”). If your DHCP server will not accept the default, you may set this to “MAC” and chaddr will be a locally-administered Ethernet MAC (embedding XID). When neither of those work you may force any value you wish by concatenating hexadecimal digits setting the value of htype (2 hexits) and chaddr (a string of 0–32 hexits). E.g., a 6-octet Ethernet address resembles “01400c2925ea88”. Most useful in the last case is the MAC address of session.dhcp.virtIP (i.e., a specific BIG-IP MAC) since broken DHCP servers may send Layer 2 packets directly to that address. Results of DHCP Request For Use In Access Policy session.dhcp.address <-- client IP address assigned by DHCP! session.dhcp.message session.dhcp.server, session.dhcp.relay session.dhcp.expires, session.dhcp.issued session.dhcp.lease, session.dhcp.rebind, session.dhcp.renew session.dhcp.vinfo.N session.dhcp.dns_servers, session.dhcp.dns_suffix session.dhcp.xid, session.dhcp.hex_client_id, session.dhcp.hwx If a DHCP request succeeds the client IP address appears in session.dhcp.address. If that is empty look in session.dhcp.message for an error message. The IP address of the DHCP server which issued (or refused) the client IP is in session.dhcp.server (if session.dhcp.relay differs then DHCP messages were relayed). Lease expiration time is in session.dhcp.expires. Variables session.dhcp.{lease, rebind, renew} indicate the duration of the address lease, plus the rebind and renew times, in seconds relative to the clock value in session.dhcp.issued (issued time). See session.dhcp.vinfo.N where N is tag number for Option 43 vendor-specific information. If the DHCP server sends client DNS server(s) and/or default search domain, those appear in session.dhcp.dns_servers and/or session.dhcp.dns_suffix. To assist in log analysis and debugging, session.dhcp.xid contains the XID code used in the DHCP request. The client_ID value (if any) sent to the DHCP server(s) is in session.dhcp.hex_client_id. The DHCP request’s htype and chaddr values (in hex) are concatenated in session.dhcp.hwx. Compatibility Tips and Troubleshooting Concern Response My custom parameter seems to be ignored. You should set most custom parameters as Text values (they may morph to Custom Expressions). My users with Apple Mac Pro’s sometimes get no DHCP IP or a conflicting one. A few Apple laptops sometimes give the Machine Info Item bogus MAC addresses. Set session.dhcp.client_ID to “XIDMAC“ to use unique per-session identifiers for clients. After a VPN session ends, I expect the very next session to reuse the same DHCP IP but that doesn’t happen. Many DHCP servers cycle through all the client IP’s available for one subnet before reusing any. Also, after a session ends APM-DHCP takes a few minutes to release its DHCP IP. When I test APM-DHCP with APM VE running on VMware Workstation, none of my sessions gets an IP from DHCP. VMware Workstation’s built-in DHCP server sends bogus DHCP packets. Use another DHCP server for testing (Linux dhcpd(8) is cheap and reliable). I use BIG-IP route domains and I notice that some of my VPN clients are getting duplicate DHCP IP addresses. Decorate the IP addresses of your APM-DHCP virtual servers, both in the iApp and in session.dhcp.virtIP, with their route-domain ID’s in “percent notation” like “192.0.2.5%3”. APM-DHCP is not working. Double-check your configuration. Look for errors in the LTM log. Set session.dhcp.debug to “true” before trying to start a VPN session, then examine DHCP debugging messages in the LTM log to see if you can figure out the problem. Even after looking at debugging messages in the log I still don’t know why APM-DHCP is not working. Run “tcpdump –ne -i 0.0 -s0 port 67” to see where the DHCP handshake fails. Are DISCOVER packets sent? Do any DHCP servers reply with OFFER packets? Is a REQUEST sent to accept an OFFER? Does the DHCP server ACK that REQUEST? If you see an OFFER but no REQUEST, check for bogus multicast MAC addresses in the OFFER packet. If no OFFER follows DISCOVER, what does the DHCP server’s log show? Is there a valid zone/lease-pool for you? Check the network path for routing errors, hostile firewall rules, or DHCP relay issues. Endnotes In DHCP Option 43 (rfc2132). In DHCP Options 6 and 15 (rfc2132). Prior to version v3h, under certain circumstances with some DHCP servers, address-release delays could cause two active sessions to get the same IP address. And even more difficult using [listen], for those of you in the back of the room. A bug in some versions of VMware Workstation’s DHCP server makes this solution appear to fail. The broken DHCP server sends messages to DHCP relays in unicast IP packets encapsulated in broadcast MAC frames. Anormal BIG-IP virtual server will not receive such packets. As of Winter 2017 the ISC, Cisco, and MS Windows Server 2016 DHCP servers support the subnet/link selection options but older Windows Server and Infoblox DHCP servers do not. Supporting Files - Download attached ZIP File Here.14KViews7likes52CommentsGetting Started with iRules: Variables

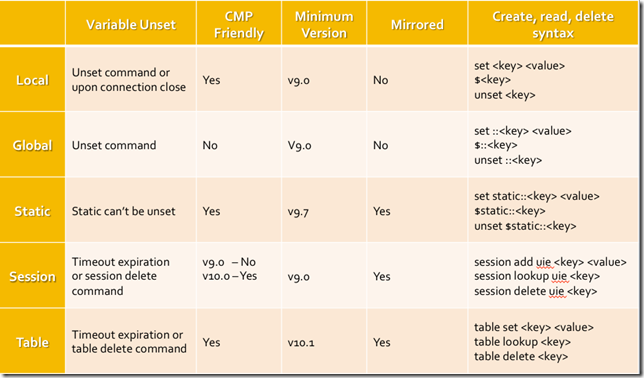

If you've been following along in this series, it's time to add another building block to the framework of what iRules are and can do. If you're new, it would behoove you to start at the beginning and catch up. So far we've covered introductions across the board for programming basics and concepts, F5 terminology and basic technology concepts, the core of what iRules are and why you'd use them, as well as a couple of cornerstone iRules concepts like events and priorities. All of these concepts are required to get a proper understanding of the base iRules infrastructure and functionality. Using those concepts as foundations we're going to add to the mix something that's integral to any programming language: variables. We'll cover a bit about what variables are, but also some iRules specific variables functionality and commentary. Things to look for in this article: What is a variable? How do I work with variables in my iRule? What types of variables are available to me in iRules? Is there a performance impact when using variables? When should I use variables? What is a variable? A variable, in simple terms, is a piece of data stored in memory. This is done usually with the notion of using that data again at some point, recalling it to make use of it later in your script. For instance if you want to store the hostname of an incoming connection so that you can reference that hostname on the response, or if you want to store the result of a given command, etc. those things would be stored in a variable. Every scripting language has variables of some form or another, and they're quite important in the grand scheme of things. Without them you'd be building static scripts to perform one off, iterative tasks. Variables are a large part of what allows dynamic programming to account for multiple conditions and use cases. Whether it's storing the data from a math operation to be compared against a desired result, or checking to see if a given condition has been met yet, variables are at the very core of just about everything in programming, and aren't something that we could live without in modern coding. The idea is simple, take a piece of data, whether it's a number or a string or...anything, and store it in memory with a unique name. Then, later, you can recall the value of that data by simply referencing the name you created to represent it. You can, of course, modify, or delete variables at will also. How do I work with variables in my iRule? There are two main functions when it comes to any variable: setting and retrieving. To set a variable in Tcl you simply use the set command and specify the desired value. This can be a static or dynamic value, such as an integer or the result of a command. The desired value is then stored in memory and associated with the variable name supplied. This looks like: #Basic variable creation in Tcl set integer 5 set hostname [HTTP::host] To retrieve the value associated with a given variable you simply reference that variable directly and you will get the resulting value. For instance "$integer" and "$hostname" would reference the values from the above example. Of course calling them by themselves won't do much good. Simple referring to a variable within your code will do pretty much nothing. You'll almost certainly be referencing the variables in relation to something or from another command. I.E.: #Basic variable reference to retrieve and use the value stored in memory if {$integer > 0) { log local0. "Host: $hostname" } What types of variables are available to me in iRules? This topic can creep in scope pretty darn quickly, given that variables are just a simple memory structure, and there are many different types of memory structures available to you via Tcl and iRules. From simple variables to arrays to tables and data groups, there are many ways to manage data in memory. For the purposes of this article, however, we're going to focus on the two main types of actual variables and leave the discussion of other data structures for later. There are two main types of variables in iRules, local and global. Local Variables All variables, unless otherwise specified, are created as local variables within an iRule. What does that mean? Well, a local variable means that it is assigned the same scope as the iRule that created it. All iRules are inherently connection based, and as such all local variables are connection based as well. This means that the connection dictates the memory space for a given iRule's local variables and data. For instance, if connection1 comes in and an iRule executes, creating 5 variables, those variables will only exist until connection1 closes and the connection is terminated on the BIG-IP. At that time the memory allocated to that flow will be freed up, and the variables created while processing that particular connection's iRule(s) will no longer be accessible. This is the case with all iRules and all variables created from within an iRule using the basic set command structure pictured above. Local variables are low cost, easy to use, you never have to worry about memory management with them, as they are automatically cleaned up when the connection terminates. It's important to remember that iRules as a whole, and the variables therein are connection bound. This can cause some confusion when people are expecting a more static state. Local variables will account for the vast majority of your variable usage within iRules. They're efficient, easy to use, and highly useful depending on the situation. These can be set directly as shown above but are also often the result of a command that provides output. There are also multiple ways to reference a variable within iRules. When using a command that directly affects the variable it is usually appropriate to leave the "$" off and reference the name directly, other times braces allow you to more clearly define the beginning and end of a variable name. Some examples would look like: #Standard variable reference log local0. "My host is $host" #Set variable to the output of a particular command using brackets [] set int [expr {5 + 8}] #Directly manipulating a variable's value means no "$", most times incr int #Bracing can allow you to deliniate between variable name and adjacent characters log local0. "Today's date is the ${int}th" Global Variables "Global variables" is a bit of a misnomer, actually. This is the general terminology used within most programming languages, including Tcl, for variables that exist outside of the local memory space. I.E., in the case of iRules, variables that exist beyond the constraints of a single connection. For instance, what if I want to store the IP address of my logging server, and have that always available, to every connection that comes through the BIG-IP, without having to re-set that variable every single time my iRule fires? That would be a global variable. Tcl has a mechanism for handling this, and it's relatively easy to use. Interestingly, though...we aren't using it. The global variable handling within Tcl requires a shared memory space, which in our world does something referred to as "demoting" from CMP. As you'll recall from the "Introduction to F5 Technology & Terms" article, CMP is Clustered Multi-Processing, and is the technology that allows us to distribute tasks within the BIG-IP to multiple cores in an efficient, scalable manner. Because of the requirements of the default Tcl global variable handling, making use of a global variable within Tcl forces any connection going through the Virtual to which the offending iRule is applied to be demoted from CMP, I.E. use only a single processing core, rather than all of them to process the traffic for that Virtual. This is a bad thing, and will severely limit performance. As such, we strongly recommend avoiding global variables in their traditional sense all together. But what about that log server address? There's still a definite need for long lived data to be stored in memory and available at will. It's for this purpose that we've included a new namespace within iRules called the "static" namespace. You can effectively set static global variable data in the static namespace without breaking CMP, and thereby not decreasing performance. To do so simply set up your static::varname variables, likely in RULE_INIT, since that special event only runs once at load time and static variables are global in scope, meaning they stay set until the configuration is reloaded. Once these variables are set you can call them like you would any other variable from within any iRule, and they will always be available. It looks something like this: #Set a static variable value, which will exist until config reload, living outside of the scope of any one particular iRule set static::logserver "10.10.1.145" #Reference that static variable just as you would any other variable from within any iRule log $static::logserver "This is a remote log message" For a more complete look at the different types of memory structures that you can access in iRules, I've included a handy dandy table to show memory structures by type along with some information about each, and an example of using that structure. We'll cover some of these in more detail in later installments of this series, but I want to make you aware of the different types of memory structures available. Note: There is a slight error in the table. It IS possible to unset static variables. Is there a performance impact when using variables? Running any command that isn't cached in any script has some cost associated with it, there's just no way around it. Variables in iRules happen to have a miniscule cost, generally speaking, so long as you're using them appropriately. The cost associated with a variable is in the creation process. It takes resources, albeit a very small amount, to store the desired data in memory and create a reference to that data to be used in the future. Accessing a variable is simply making a call to that reference, and as such doesn't have an additional cost. A common misunderstanding, however, is just what constitutes a variable within iRules, vs. a command or function that will perform a query. Many people see things like "HTTP::host" or "IP::client_addr" and, due to the format, assume it is a command or function and as such will cost CPU cycles to query the value and return it. This is not the case at all. These type of references are cached within TMM, so whether you call "HTTP::host" once or 100 times, you're not going to require more resources, as you're not performing a query to determine the hostname each time, rather just referencing a value that's already stored in memory. Think of these and many other similar commands as pre-populated variable data that you can use at will. When should I use variables? While the cost of creating variables is low, there still is some overhead associated with it. In iRules, due to the exorbitantly high rate of execution in some deployments, we tend to lean towards extreme efficiency mindedness. In this vein we recommend only using variables where they are actually necessary, rather than many programming practices which dictate to use them often as a means of keeping code tidy, even when not truly warranted. For instance, a common practice with many new iRule programmers is to do things like set host [HTTP::host] As I just explained above, however, this is completely unnecessary. Rather, it would be more efficient to simply re-use the HTTP::host command any time you need to reference this information. When it does make sense to use a variable, however, is when you are going to modify the data in some way. For instance log local0. "My lower case URI: [string tolower [HTTP::uri]]" The above will give you an all lowercase representation of the HTTP URI. This is a very common use case, and it is not abnormal to have the need to reference the lowercase version of the URI multiple times in a given iRule. While the HTTP::uri command is cached and will not incur additional overhead regardless of how many times you reference it, the string tolower command is not. As such, it would make sense in this case, assuming you're going to reference the lowercase URI at least 2 or more times, to create a variable and reference that: set loweruri [string tolower [HTTP::uri]] log local0. "My lower case URI: $loweruri" Effectively you want to use variables any time you are going to have to repeat any operation against a value that has a cost associated with it. Rather than repeat that operation multiple times and accumulate extra overhead it's better to perform the operation once, store the result, and reference the variable from there. That covers the basics of variables: What they are, how they work, when to use them and when not, which types you can make use of and how they'll affect your performance, if at all. Hopefully that allows you to approach your iRules with that much more confidence, understanding such a core piece of their makeup. In the next article we'll dig into control structures and operators.14KViews3likes3CommentsiRule to set SameSite for compatible clients and remove it for incompatible clients (LTM|ASM|APM)