Routing traffic by URI using iRule

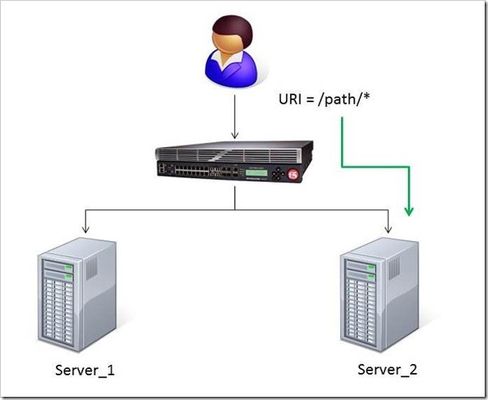

The DevCentral team recently developed a solution for routing traffic to a specific server based on the URI path. A BIG-IP Local Traffic Manager (LTM) sits between the client and two servers to load balance the traffic for those servers.

The Challenge:

When a user conducts a search on a website and is directed to one of the servers, the search information is cached on that server. If another user searches for the same data but the LTM load balances the request to the other server, the cached data on the first server does that user no good. To solve this caching problem, the customer wants traffic that contains a specific search parameter to be routed to the second server (as long as that server is available). Specifically in this case, when a user loads a page and the URI starts with /path/, that traffic should be sent to Server_2. The accompanying picture shows a representation of what the customer wants to accomplish.

The Solution:

So, the question becomes: How does the customer ensure all /path/* traffic is sent to a specific server? Well, you guessed it...the ubiquitous and lovable iRule! Everyone had a pretty good idea an iRule would be used to solve this problem, but what does that iRule look like? Well, here it is!

    when HTTP_REQUEST {
        if { [string tolower [HTTP::path]] starts_with "/path/" } {
            persist none
            set pm [lsearch -inline [active_members -list <pool name>] x.x.x.x*]
            catch { pool <pool name> member [lindex $pm 0] [lindex $pm 1] }
        }
    }

Let's talk through the specifics of this solution...

For efficiency, start by checking the least likely condition. When an HTTP_REQUEST comes in, immediately check for the "/path/" string. Note the "string tolower" command applied to HTTP::path before the comparison to "/path/", which ensures the match does not depend on case. Also, notice the use of HTTP::path instead of the full URI for the comparison...there's no need to use the full URI for this check.
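
To make the path check concrete outside of a BIG-IP, here is a minimal Python sketch of the same comparison. It is only an illustration of the logic, not an iRule; the function name `should_pin_to_server_2` is hypothetical, and `urlsplit(...).path` is used as a rough stand-in for what HTTP::path returns.

```python
from urllib.parse import urlsplit

def should_pin_to_server_2(uri: str, prefix: str = "/path/") -> bool:
    """Return True when the request path (query string excluded) starts with prefix."""
    path = urlsplit(uri).path              # path only, comparable in spirit to HTTP::path
    return path.lower().startswith(prefix)  # lowercase first, like "string tolower"

print(should_pin_to_server_2("/Path/search?q=widgets"))  # True
print(should_pin_to_server_2("/other?next=/path/"))      # False
```

Because the check is a prefix match on the path, the query string never influences the decision, which is why the full URI is not needed.
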
Next, turn off persistence, just in case another profile or iRule is forcing the connection to persist to a place other than the beloved Server_2. Then, search all active members in the pool for the Server_2 IP address and port. The "lsearch -inline" ensures the matching value is returned instead of just the index. The "active_members -list" is used to ensure we get a list of IP addresses and ports, not just the number of active members. Note the asterisk after the IP address in the search command...this is needed to ensure the port number is included in the match. The result of the search is stored in a variable called "pm".

Next, use the catch command to stop any Tcl errors from causing problems. Because we are getting the active members list, it's possible that the pool member we are trying to match is NOT active, and therefore the pool member named in the pool command may not be there...this is what might cause that Tcl error. Then send the traffic to the correct pool member, which requires the IP and port. The astute observer, and especially the one familiar with the output of "active_members -list", will notice that each pool member returned in the list is already formatted as "ip port". However, just using the pm variable in the pool command returns a Tcl error, likely because the pm variable is a single object instead of two separate objects. So, lindex is used to pull out each element individually to avoid the Tcl error.

Testing:

Our team tested the iRule by adding it to a development site and then accessing several pages on that site. We made sure the pages included "/path/" in the URIs! We used tcpdump on the BIG-IP to capture the transactions (tcpdump -ni 0.0 -s0 -w /var/tmp/capture1.pcap tcp port 80), then downloaded the captures and used Wireshark for analysis. Using these tools, we determined that all the "/path/" traffic was routed to Server_2 and all other traffic was still balanced between Server_1 and Server_2.
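
The member lookup described earlier (glob search, then splitting the match into IP and port) can be modeled with a short Python sketch. This is an assumption-laden illustration: it presumes the list entries are "ip port" strings as described above, uses `fnmatchcase` as a stand-in for the default glob matching of lsearch, and the addresses and the name `find_member` are made up.

```python
from fnmatch import fnmatchcase

def find_member(active_members, pattern):
    """Return (ip, port) for the first entry matching the glob pattern, else None."""
    for member in active_members:
        if fnmatchcase(member, pattern):
            ip, port = member.split()   # same idea as lindex 0 / lindex 1
            return ip, int(port)
    return None                         # member not active: nothing to route to

members = ["10.0.0.1 80", "10.0.0.20 80", "10.0.0.2 80"]  # hypothetical pool
print(find_member(members, "10.0.0.2*"))   # ('10.0.0.20', 80) -- the prefix also matches .20
print(find_member(members, "10.0.0.2 *"))  # ('10.0.0.2', 80)
print(find_member(members, "10.0.0.9*"))   # None
```

One thing the sketch suggests: an asterisk placed directly after the address is a prefix match, so in a pool where one address is a prefix of another it may be worth including the separating space (or the port) in the pattern.
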
So, the iRule worked correctly and it was ready for prime time! Special thanks to Jason Rahm and Joe Pruitt for their outstanding technical expertise and support in solving this challenge! For more information on this iRule or any other LTM issues, you can submit questions/comments in the "Comments" section below, or you can contact the DevCentral team at https://devcentral.f5.com/s/community/contact-us.

F5 in AWS Part 1 - AWS Networking Basics

Updated for Current Versions and Documentation

- Part 1: AWS Networking Basics
- Part 2: Running BIG-IP in an EC2 Virtual Private Cloud
- Part 3: Advanced Topologies and More on Highly-Available Services
- Part 4: Orchestrating BIG-IP Application Services with Open-Source Tools
- Part 5: Cloud-init, Single-NIC, and Auto Scale Out of BIG-IP in v12

If you work in IT, and you haven’t been living under a rock, then you have likely heard of Amazon Web Services (AWS). There has been a substantial increase in the maturity and stability of the AWS Elastic Compute Cloud (EC2), but you are wondering: can I continue to leverage F5 services in AWS? In this series of blog posts, we will discuss the how and why of running F5 BIG-IP in EC2. In this specific article, we’ll start with the basics of AWS EC2 and Virtual Private Cloud (VPC). Later in the series, we will discuss some of the considerations associated with running BIG-IP as a compute instance in this environment, outline the best deployment models for your application in EC2, and show how these deployment models can be automated using open-source tools.

Note: AWS uses the terms "public" and "private" to refer to what F5 Networks has typically referred to as "external" and "internal" respectively. We will use these terms interchangeably.

First, what is AWS? If you have read the story, you will know that the EC2 project began with an internal interest at Amazon to move away from messy, multi-tenant networks using VLANs for segregation. Instead, network engineers at Amazon wanted to build an entirely IP-based architecture. This vision morphed into the universe of application services available today. Of course, building multi-tenant, purely L3 networks at massive scale had implications for both security and redundancy (we’ll get to this later).
Today, EC2 enables users to run applications and services on top of virtualized network, storage, and compute infrastructure, where hosts are deployed in the form of Amazon Machine Images (AMIs). These AMIs can either be private to the user or launched from the public AWS marketplace. Hosts can be added to elastic load balancing (ELB) groups and associated with publicly accessible IPs to implement a simple horizontal model for availability.

AWS became truly relevant for the enterprise with the introduction of the Virtual Private Cloud service. VPCs enabled users to build virtual private networks at the IP layer. These private networks can be connected to on-premise configurations by way of a VPN Gateway, or connected to the internet via an Internet Gateway. When deploying hosts within a VPC, the user has a significant amount of control over how each host is attached to the network. For example, a host can be attached to multiple networks and given several public or private IPs on one or multiple interfaces. Further, users can control many of the security aspects they are used to configuring in an on-premise environment (albeit in a slightly different way), including network ACLs, routing, simple firewalling, DHCP options, etc. Let's talk about these and other important EC2 aspects and try to understand how they affect our application deployment strategy.

L2 Restrictions

As we mentioned above, one of the design goals of AWS was to remove layer 2 networking. This is a worthy accomplishment, but we lose access to certain useful protocols and features, including ARP (and gratuitous ARP), broadcast and multicast groups, and 802.1Q tagging. We can no longer use VLANs for some availability models, for quality of service management, or for tenant isolation.

Network Interfaces

For larger topologies, one of the biggest impacts of removing 802.1Q support is on the number of subnets we can attach to a node in the network.
Because in AWS each interface is attached as a layer 3 endpoint, we must add an interface for each subnet. This contrasts with traditional networks, where you can add VLANs to your trunk for each subnet via tagging. Even though we're in a virtual world, the number of virtual network interfaces (Elastic Network Interfaces, or ENIs, in AWS terminology) is also limited according to the EC2 instance size. Together, the limit on the number of interfaces and the one-to-one mapping between interface and subnet effectively limit the number of directly connected networks we can attach to a device (like BIG-IP, for example).

IP Addressing

AWS offers two kinds of globally routable IP addresses: “Public IP Addresses” and “Elastic IP Addresses”. Table 1 outlines some of the differences between these two types of IP addresses. You can probably figure out for yourself why we will want to use Elastic IPs with BIG-IP. As with interfaces, AWS limits the number of IPs in several ways, including the number of IPs that can be attached to an interface and the number of Elastic IPs per AWS account.

Table 1: Differences between Public and Elastic IP Addresses

| | Public IP | Elastic IP |
|---|---|---|
| Released on device termination/disassociation | YES | NO |
| Assignable to secondary interfaces | NO | YES |
| Can be associated after launch | NO | YES |

Amazon provides more information on public and elastic IP addresses here: http://docs.aws.amazon.com/AWSEC2/latest/UserGuide/using-instance-addressing.html#concepts-public-addresses

Each interface on an EC2 instance is given a private IP address. This IP address is routable locally through your subnet and is assigned from the address range associated with the subnet to which your interface is attached. Multiple secondary private IP addresses can be attached to an interface, which is a useful technique for creating more complex topologies.
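
As a small illustration of the addressing rule above (a private IP comes from the range of the subnet its interface is attached to), here is a Python sketch using the standard `ipaddress` module. The VPC CIDR, subnet names, and ranges are all hypothetical, and nothing here calls an AWS API.

```python
import ipaddress

vpc = ipaddress.ip_network("10.0.0.0/16")             # hypothetical VPC range
subnets = {                                            # hypothetical subnets, one interface each
    "external": ipaddress.ip_network("10.0.1.0/24"),
    "internal": ipaddress.ip_network("10.0.2.0/24"),
}

def subnet_for(private_ip: str):
    """Return the name of the subnet whose range contains private_ip, else None."""
    addr = ipaddress.ip_address(private_ip)
    for name, net in subnets.items():
        if addr in net:
            return name
    return None

# Every subnet must be carved out of the VPC's own address range.
assert all(net.subnet_of(vpc) for net in subnets.values())

print(subnet_for("10.0.1.10"))  # external
print(subnet_for("10.0.3.10"))  # None
```

Since each interface maps to exactly one subnet, a device that needs to sit on both networks in this sketch would need two interfaces, one per entry in `subnets`.
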
The number of interfaces and private IPs per interface within an Amazon VPC are listed here: http://docs.aws.amazon.com/AWSEC2/latest/UserGuide/using-eni.html#AvailableIpPerENI

NAT Instances, Subnets and Routing

When creating a VPC using the wizard available in the AWS VPC web portal, several default configurations are possible. One of these configurations is “VPC with Public and Private subnets”. In this configuration, what if the instances on our private subnet wish to access the outside world? Because we cannot attach public or elastic IP addresses to instances within the private subnet, we must use NAT provided by AWS. Like BIG-IP and other network devices in EC2, the NAT instance will live as a compute node within your VPC. This is a good way to allow outbound traffic from your internal servers while preventing those servers from receiving inbound traffic.

When you create subnets manually or through the VPC wizard, you’ll note that each subnet has an associated routing table. These route tables may be updated to control traffic flow between instances and subnets in your VPC.

Regions and Availability Zones

We know quite a few people who have been confused by the concept of availability zones in EC2. To put it clearly, an availability zone is a physically isolated datacenter in a region. Regions may contain multiple availability zones. Availability zones run on different networking and storage infrastructure, and depend on separate power supplies and internet connections. Striping your application deployments across availability zones is a great way to provide redundancy and, perhaps, a hot standby, but please note that these are not the same thing. Amazon does not mirror any data between zones on behalf of the customer. While VPCs can span availability zones, subnets may not.

To close this blog post, we are fortunate enough to get a video walk through from Vladimir Bojkovic, Solution Architect at F5 Networks.
He shows how to create a VPC with internal and external subnets as a practical demonstration of the concepts we discussed above.

The Full-Proxy Data Center Architecture

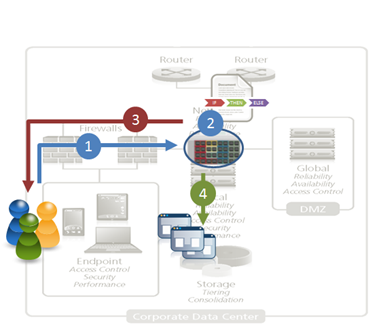

Why a full-proxy architecture is important to both infrastructure and data centers.

In the early days of load balancing and application delivery there was a lot of confusion about proxy-based architectures and in particular the definition of a full-proxy architecture. Understanding what a full-proxy is will be increasingly important as we continue to re-architect the data center to support a more mobile, virtualized infrastructure in the quest to realize IT as a Service.

THE FULL-PROXY PLATFORM

The reason there is a distinction made between “proxy” and “full-proxy” stems from the handling of connections as they flow through the device. All proxies sit between two entities – in the Internet age almost always “client” and “server” – and mediate connections. While all full-proxies are proxies, the converse is not true. Not all proxies are full-proxies, and it is this distinction that needs to be made when making decisions that will impact the data center architecture.

A full-proxy maintains two separate session tables – one on the client-side, one on the server-side. There is effectively an “air gap” isolation layer between the two internal to the proxy, one that enables focused profiles to be applied specifically to address issues peculiar to each “side” of the proxy. Clients often experience higher latency because of lower bandwidth connections, while the servers are generally low latency because they’re connected via a high-speed LAN. The optimizations and acceleration techniques used on the client side are far different than those on the LAN side because the issues that give rise to performance and availability challenges are vastly different. A full-proxy, with separate connection handling on either side of the “air gap”, can address these challenges. A proxy, which may be a full-proxy but more often than not simply uses a buffer-and-stitch methodology to perform connection management, cannot optimally do so.
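
The idea of two separate session tables, each with its own profile, can be pictured with a small data-structure sketch in Python. This is purely conceptual and does not describe how any particular product is implemented; the class names, profile keys, and addresses are hypothetical.

```python
from dataclasses import dataclass, field

@dataclass
class Side:
    """One side of the proxy: its own tuning profile and its own session table."""
    profile: dict
    sessions: dict = field(default_factory=dict)

@dataclass
class FullProxy:
    client_side: Side
    server_side: Side

    def open_flow(self, flow_id, client_addr, server_addr):
        # Two independent connections are tracked, one per side of the "air gap".
        self.client_side.sessions[flow_id] = client_addr
        self.server_side.sessions[flow_id] = server_addr

proxy = FullProxy(
    client_side=Side(profile={"optimize_for": "high-latency WAN"}),
    server_side=Side(profile={"optimize_for": "low-latency LAN"}),
)
proxy.open_flow(1, ("198.51.100.7", 51515), ("10.0.2.10", 80))
print(proxy.client_side.profile != proxy.server_side.profile)  # True
```

The point of the separation is that each side can be tuned without compromising the other, which a single stitched end-to-end flow cannot offer.
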
A typical proxy buffers a connection, often through the TCP handshake process and potentially into the first few packets of application data, but then “stitches” a connection to a given server on the back-end using either layer 4 or layer 7 data, perhaps both. The connection is a single flow from end-to-end, and the proxy must choose which characteristics of the connection to focus on – client or server – because it cannot simultaneously optimize for both.

The second advantage of a full-proxy is its ability to perform more tasks on the data being exchanged over the connection as it is flowing through the component. Because specific action must be taken to “match up” the connection as it’s flowing through the full-proxy, the component can inspect, manipulate, and otherwise modify the data before sending it on its way on the server-side. This is what enables termination of SSL, enforcement of security policies, and performance-related services to be applied on a per-client, per-application basis.

This capability translates to broader usage in data center architecture by enabling the implementation of an application delivery tier in which operational risk can be addressed through the enforcement of various policies. In effect, we’ve created a full-proxy data center architecture in which the application delivery tier as a whole serves as the “full proxy” that mediates between the clients and the applications.

THE FULL-PROXY DATA CENTER ARCHITECTURE

A full-proxy data center architecture installs a digital “air gap” between the client and applications by serving as the aggregation (and conversely disaggregation) point for services. Because all communication is funneled through virtualized applications and services at the application delivery tier, it serves as a strategic point of control at which delivery policies addressing operational risk (performance, availability, security) can be enforced.
A full-proxy data center architecture further has the advantage of isolating end-users from the volatility inherent in highly virtualized and dynamic environments such as cloud computing. It enables solutions that overcome limitations of virtualization technology, such as the pod-architectural constraints encountered in VMware View deployments. Traditional access management technologies, for example, are tightly coupled to host names and IP addresses. In a highly virtualized or cloud computing environment, this constraint may spell disaster for either performance or ability to function, or both. By implementing access management in the application delivery tier – on a full-proxy device – volatility is managed through virtualization of the resources, allowing the application delivery controller to worry about details such as IP addresses and VLAN segments, freeing the access management solution to concern itself with determining whether this user on this device from that location is allowed to access a given resource.

Basically, we’re taking the concept of a full-proxy and expanding it outward to the architecture. Inserting an “application delivery tier” allows for an agile, flexible architecture more supportive of the rapid changes today’s IT organizations must deal with.

Such a tier also provides an effective means to combat modern attacks. Because of its ability to isolate applications, services, and even infrastructure resources, an application delivery tier improves an organization’s capability to withstand the onslaught of a concerted DDoS attack. The magnitude of difference between the connection capacity of an application delivery controller and most infrastructure (and all servers) gives the entire architecture a higher resiliency in the face of overwhelming connections.
This ensures better availability and, when coupled with virtual infrastructure that can scale on-demand when necessary, can also maintain performance levels required by business concerns.

A full-proxy data center architecture is an invaluable asset to IT organizations in meeting the challenges of volatility both inside and outside the data center.

Related blogs & articles:

- The Concise Guide to Proxies
- At the Intersection of Cloud and Control…
- Cloud Computing and the Truth About SLAs
- IT Services: Creating Commodities out of Complexity
- What is a Strategic Point of Control Anyway?
- The Battle of Economy of Scale versus Control and Flexibility
- F5 Friday: When Firewalls Fail…
- F5 Friday: Platform versus Product

Infrastructure Architecture: Whitelisting with JSON and API Keys

Application delivery infrastructure can be a valuable partner in architecting solutions…

AJAX and JSON have changed the way in which we architect applications, especially with respect to their ascendancy to rule the realm of integration, i.e. the API. Policies are generally focused on the URI, which has effectively become the exposed interface to any given application function. It’s REST-ful, it’s service-oriented, and it works well.

Because we’ve taken to leveraging the URI as a basic building block, as the entry-point into an application, it affords the opportunity to optimize architectures and make more efficient use of the compute power available for processing. This is an increasingly important point, as capacity has become a focal point around which cost and efficiency are measured. By offloading functions to other systems when possible, we are able to increase the useful processing capacity of a given application instance and ensure a higher ratio of valuable processing to resources is achieved.

The ability of application delivery infrastructure to intercept, inspect, and manipulate the exchange of data between client and server should not be underestimated. A full-proxy based infrastructure component can provide valuable services to the application architect that can enhance the performance and reliability of applications while abstracting functionality in a way that alleviates the need to modify applications to support new initiatives.

AN EXAMPLE

Consider, for example, a business requirement specifying that only certain authorized partners (in the integration sense) are allowed to retrieve certain dynamic content via an exposed application API. There are myriad ways in which such a requirement could be implemented, including requiring authentication and subsequent tokens to authorize access – likely the most common means of providing such access management in conjunction with an API.
Most of these options require several steps, however, and direct interaction with the application to examine credentials and determine authorization to requested resources. This consumes valuable compute that could otherwise be used to serve requests.

An alternative approach would be to provide authorized consumers with a more standards-based method of access that includes, in the request, the very means by which authorization can be determined. Taking a lesson from the credit card industry, for example, an algorithm can be used to determine the validity of a particular customer ID or authorization token. An API key, if you will, that is not stored in a database (which would require a lookup) but rather is algorithmic and therefore able to be verified as valid without needing a specific lookup at run-time. Assuming such a token or API key were embedded in the URI, the application delivery service can then extract the key, verify its authenticity using an algorithm, and subsequently allow or deny access based on the result.

This architecture is based on the premise that the application delivery service is capable of responding with the appropriate JSON in the event that the API key is determined to be invalid. Such a service must therefore be network-side scripting capable. Assuming such a platform exists, one can easily implement this architecture and enjoy the improved capacity and resulting performance boost from the offload of authorization and access management functions to the infrastructure.

1. A request is received by the application delivery service.
2. The application delivery service extracts the API key from the URI and determines validity.
3. If the API key is not legitimate, a JSON-encoded response is returned.
4. If the API key is valid, the request is passed on to the appropriate web/application server for processing.

Such an approach can also be used to enable or disable functionality within an application, including live-streams.
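
The four steps above can be sketched in Python as follows. This is a sketch under stated assumptions, not the article's implementation: the Luhn checksum is used only because it is a familiar example of the credit-card-style self-validating number the text alludes to, and the `/api/<key>/<resource>` URI layout, the function names, and the error body are hypothetical.

```python
import json

def luhn_valid(key: str) -> bool:
    """Check a numeric key with the Luhn checksum; no database lookup is involved."""
    if not (key.isascii() and key.isdigit()):
        return False
    total = 0
    for i, ch in enumerate(reversed(key)):
        d = int(ch)
        if i % 2 == 1:          # double every second digit, counting from the right
            d *= 2
            if d > 9:
                d -= 9
        total += d
    return total % 10 == 0

def handle(uri: str):
    """Return ('forward', uri) for a valid key, else ('respond', json_body)."""
    parts = uri.strip("/").split("/")
    key = parts[1] if len(parts) > 1 and parts[0] == "api" else ""
    if luhn_valid(key):
        return "forward", uri                                   # step 4: pass to the server
    return "respond", json.dumps({"error": "invalid API key"})  # step 3: answer in JSON

print(handle("/api/79927398713/content")[0])  # forward
print(handle("/api/79927398710/content")[0])  # respond
```

A plain checksum like this only filters out malformed keys; a real deployment would presumably want an algorithm that outsiders cannot reproduce, which is a design decision beyond this sketch.
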
Assume a site that serves up streaming content, but only to authorized (registered) users. When requests for that content arrive, the application delivery service can dynamically determine, using an embedded key or some portion of the URI, whether to serve up the content or not. If it deems the request invalid, it can return a JSON response that effectively “turns off” the streaming content, thereby eliminating the ability of non-registered (or non-paying) customers to access live content.

Such an approach could also be useful in the event of a service failure; if content is not available, the application delivery service can easily turn off the content and/or respond to the request itself, providing feedback to the user that is valuable in reducing their frustration with AJAX-enabled sites that too often simply “stop working” without any kind of feedback or message to the end user. The application delivery service could, of course, perform other actions based on the in/validity of the request, such as using its ability to perform application level routing to direct the request to a service generating older or non-dynamic streaming content. The possibilities are quite extensive, and implementation depends entirely on the goals and requirements to be met.

Such features become more appealing when they are, through their capabilities, able to intelligently make use of resources in various locations. Cloud-hosted services may be more or less desirable for use in an application, and thus leveraging application delivery services to either enable or reduce the traffic sent to such services may be financially and operationally beneficial.

ARCHITECTURE is KEY

The core principle to remember here is that ultimately infrastructure architecture plays (or can and should play) a vital role in designing and deploying applications today.
With the increasing interest in and use of cloud computing and APIs, it is rapidly becoming necessary to leverage resources and services external to the application as a means to rapidly deploy new functionality and support for new features. The abstraction offered by application delivery services provides an effective, cross-site and cross-application means of enabling what were once application-only services within the infrastructure. This abstraction and service-oriented approach reduces the burden on the application as well as its developers.

The application delivery service is almost always the first service in the oft-times lengthy chain of services required to respond to a client’s request. Leveraging its capabilities to inspect and manipulate as well as route and respond to those requests allows architects to formulate new strategies and ways to provide their own services, as well as leveraging existing and integrated resources for maximum efficiency, with minimal effort.

Related blogs & articles:

- HTML5 Going Like Gangbusters But Will Anyone Notice?
- Web 2.0 Killed the Middleware Star
- The Inevitable Eventual Consistency of Cloud Computing
- Let’s Face It: PaaS is Just SOA for Platforms Without the Baggage
- Cloud-Tiered Architectural Models are Bad Except When They Aren’t
- The Database Tier is Not Elastic
- The New Distribution of The 3-Tiered Architecture Changes Everything
- Sessions, Sessions Everywhere

Back to Basics: Health Monitors and Load Balancing

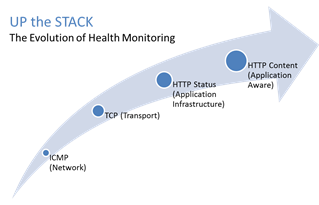

#webperf #ado Because every connection counts

One of the truisms of architecting highly available systems is that you never, ever want to load balance a request to a system that is down. Therefore, some sort of health (status) monitoring is required. For applications, that means not just pinging the network interface or opening a TCP connection; it means querying the application and verifying that the response is valid.

This, obviously, requires the application to respond. And respond often. Best practices suggest determining availability every 5 seconds or so. That means every few seconds the load balancing service is going to open up a connection to the application and make a request. Just like a user would do.

That adds load to the application. It consumes network, transport, application and (possibly) database resources. Resources that cannot be used to service customers. While the impact on a single application may appear trivial, it's not. Remember, as load increases performance decreases. And no matter how trivial it may appear, health monitoring is adding load to what may be an already heavily loaded application.

But Lori, you may be thinking, you expound on the importance of monitoring and visibility all the time! Are you saying we shouldn't be monitoring applications? Nope, not at all. Visibility is paramount, providing the actionable data necessary to enable highly dynamic, automated operations such as elasticity. Visibility through health-monitoring is a critical means of ensuring availability at both the local and global level. What we may need to do, however, is move from active to passive monitoring.

PASSIVE MONITORING

Passive monitoring, as the modifier suggests, is not an active process. The load balancer does not open up connections nor query an application itself. Instead, it snoops on responses being returned to clients and from that infers the current status of the application.
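
As a rough illustration of inferring status from responses that are already flowing back to clients, here is a minimal Python sketch. It is not how any particular load balancer implements inband monitoring; the class name, the choice of treating 5xx status codes as failures, and the `max_failures` and `interval` parameters are all assumptions made for the example.

```python
from collections import deque

class PassiveMonitor:
    """Infer up/down from observed responses; never sends probes of its own."""

    def __init__(self, max_failures=3, interval=30.0):
        self.max_failures = max_failures   # failures tolerated within the window
        self.interval = interval           # sliding window length in seconds
        self.failures = deque()            # timestamps of recent failed responses

    def observe(self, status_code: int, now: float) -> str:
        if status_code >= 500:             # treat server errors as failures
            self.failures.append(now)
        while self.failures and now - self.failures[0] > self.interval:
            self.failures.popleft()        # forget failures older than the window
        return "down" if len(self.failures) >= self.max_failures else "up"

monitor = PassiveMonitor(max_failures=2, interval=10.0)
print(monitor.observe(200, now=0.0))   # up
print(monitor.observe(503, now=1.0))   # up
print(monitor.observe(503, now=2.0))   # down
print(monitor.observe(200, now=20.0))  # up
```

No extra requests are generated here; the only input is traffic the application was serving anyway.
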
For example, if a request for content results in an HTTP error message, the load balancer can determine whether or not the application is available and capable of processing subsequent requests. If the load balancer is a BIG-IP, it can mark the service as "down" and invoke an active monitor to probe the application status, as well as retrying the request to another available instance – ensuring end-users do not see an error.

Passive (inband) monitors are not binary. That is, they aren't simply "on" or "off" based on HTTP status codes. Such monitors can be configured to track the number of failures and evaluate failure rates against a configurable failure interval. When such thresholds are exceeded, the application can then be marked as "down".

Passive monitors aren't restricted to availability status, either. They can also monitor for performance (response time). Failure to meet response time expectations results in a failure, and the application continues to be watched for subsequent failures.

Passive monitors are, like most inline/inband technologies, transparent. They quietly monitor traffic and act upon that traffic without adding overhead to the process. Passive monitoring gives operations the visibility necessary to enable predictable performance and to meet or exceed user expectations with respect to uptime, without negatively impacting performance or capacity of the applications it is monitoring.

Scheduling BIG-IP Configuration Backups via the GUI with an iApp

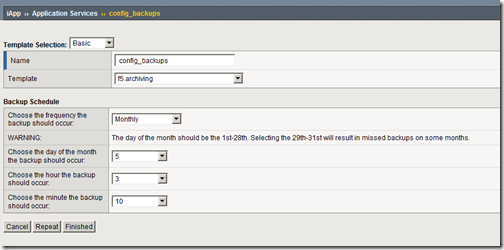

Beginning with BIG-IP version 11, the idea of templates has not only changed in amazing and powerful ways, it has been extended to be far more than just templates. The replacement for templates is called iApp™. But to call the iApp™ just a template would be woefully inaccurate and narrow. It does templates well, and takes the concept further by allowing you to re-enter a templated application and make changes. Previously, deploying an application via a template was sort of like the Ron Popeil rotisserie: “Set it, and forget it!” Once it was executed, the template process was over, and it was up to you to track and potentially clean up all those objects. Now, the application service you create based on an iApp™ template effectively “owns” all the objects it created, so any change to the deployment adds/changes/deletes objects as necessary.

The other exciting change from the template perspective is the idea of strictness. Once an application service is configured, any object created that is owned by that service cannot be changed outside of the service itself. This means that if you want to add a pool member, it must be done within the application service, not within the pool. You can turn this off, but what a powerful protection of your services!

Update for v11.2 - https://devcentral.f5.com/s/Tutorials/TechTips/tabid/63/articleType/ArticleView/articleId/1090565/Archiving-BIG-IP-Configurations-with-an-iApp-in-v112.aspx

The Problem

I received a request from one of our MVPs, who said he’d really like to be able to allow his users to schedule configuration backups without dropping to the command line. Knowing that the iApp™ feature was releasing soon with version 11, I started to see how I might be able to coax a command line configuration from the GUI.
In training, I was told that “anything you can do in tmsh, you can do with an iApp™.” This is excellent, and the basis for why I think they are going to be incredibly popular for not only controlling and managing applications, but also for extending CLI functions to the GUI. Anyway, in order to schedule a configuration backup, I need:

- A backup script
- A cron job to call said script

That’s really all there is to it.

The Solution

Thankfully, the background work is already done courtesy of a config backup codeshare entry by community user Colin Stubbs in the Advanced Design & Config Wiki. I did have to update the following bigpipe lines from the script:

- bigpipe export oneline "${SCF_FILE}" to tmsh save /sys config one-line file "${SCF_FILE}"
- bigpipe export "${SCF_FILE}" to tmsh save /sys config file "${SCF_FILE}"
- bigpipe config save "${UCS_FILE}" passphrase "${UCS_PASSPHRASE}" to tmsh save /sys ucs "${UCS_FILE}" passphrase "${UCS_PASSPHRASE}"
- bigpipe config save "${UCS_FILE}" to tmsh save /sys ucs "${UCS_FILE}"

Also, I created (according to the script comments from the codeshare entry) a /var/local/bin directory to place the script in and a /var/local/backups directory for the script to dump the backup files in. These are optional and can be changed as necessary in your deployment; you’ll just need to update the script to reflect your file system preferences. Now that I have everything I need to support a backup, I can move on to the iApp™ template configuration.

iApp™ Components

A template consists of three parts: implementation, presentation, and help. You can create an empty template, or just start with presentation or help if you like. The implementation is written in the tmsh scripting language, which is based on the Tcl language so loved by all of us iRulers. Please reference the tmsh wiki for the available tmsh extensions to the Tcl language. The presentation is written with the Application Presentation Language, or APL, which is new and custom-built for templates.
It is defined on the APL page in the iApp™ wiki. The help is written in HTML, and is used to guide users in the use of the template. I’ll focus on the presentation first, and then the implementation. I’ll forego the help section in this article.

Presentation

The reason I’m starting with the presentation section of the template is that the implementation section’s Tcl variables reflect the presentation section’s naming conventions. I want to accomplish a few things in the template presentation:

- Ask users for the frequency of backups (daily, weekly, monthly)
- If weekly, ask for the day of the week
- If monthly, ask for the day of the month and provide a warning about days 29-31
- For all frequencies, ask for the hour and minute the backup should occur

The APL code for this looks like this:

    section time_select {
        choice day_select display "large" { "Daily", "Weekly", "Monthly" }
        optional ( day_select == "Weekly" ) {
            choice dow_select display "medium" { "Sunday", "Monday", "Tuesday", "Wednesday", "Thursday", "Friday", "Saturday" }
        }
        optional ( day_select == "Monthly" ) {
            message dom_warning "The day of the month should be the 1st-28th. Selecting the 29th-31st will result in missed backups on some months."
            choice dom_select display "medium" tcl {
                for { set x 1 } { $x < 32 } { incr x } {
                    append dom "$x\n"
                }
                return $dom
            }
        }
        choice hr_select display "medium" tcl {
            for { set x 0 } { $x < 24 } { incr x } {
                append hrs "$x\n"
            }
            return $hrs
        }
        choice min_select display "medium" tcl {
            for { set x 0 } { $x < 60 } { incr x } {
                append mins "$x\n"
            }
            return $mins
        }
    }
    text {
        time_select "Backup Schedule"
        time_select.day_select "Choose the frequency the backup should occur:"
        time_select.dow_select "Choose the day of the week the backup should occur:"
        time_select.dom_warning "WARNING: "
        time_select.dom_select "Choose the day of the month the backup should occur:"
        time_select.hr_select "Choose the hour the backup should occur:"
        time_select.min_select "Choose the minute the backup should occur:"
    }

A few things to point out. First, the sections (which can’t be nested) provide a way to set apart functional differences in your form. I only needed one here, but sections would be very useful if I were to build on this and add options for selecting a UCS or SCF format, or for specifying a mail address for the backups to be mailed to. Second, order matters. The objects will be displayed in the template as you define them. Third, the optional command allows me to hide questions that wouldn’t make sense given previous answers. If you dig into some of the canned templates shipping with v11, you’ll also see another use case for the optional command. Fourth, you can use Tcl commands to populate fields for you. This can be generated data like I did above, or you can loop through configuration objects to present in the template as well. Finally, the text section is where you define the language you want to appear with each of your objects. The nomenclature here is section.variable.

To give you an idea what this looks like, here is a screenshot of a monthly backup configuration:

Once my template (f5.archiving) is saved, I can configure it in the Application Services section by selecting the template.
At this point, I have a functioning presentation, but with no implementation, it’s effectively useless.

Implementation

Now that the presentation is complete, I can move on to an implementation. I need to do a couple of things in the implementation:

- Grab the data entered into the application service
- Convert the day of week information from long name to the appropriate 0-6 (or 1-7) number for cron
- Use that data to build a cron file (statically assigned at this point to /etc/cron.d/f5backups)

Here is the implementation section:

    array set dow_map {
        Sunday 0
        Monday 1
        Tuesday 2
        Wednesday 3
        Thursday 4
        Friday 5
        Saturday 6
    }
    set hr $::time_select__hr_select
    set min $::time_select__min_select
    set infile [open "/etc/cron.d/f5backups" "w" "0755"]
    puts $infile "SHELL=\/bin\/bash"
    puts $infile "PATH=\/sbin:\/bin:\/usr\/sbin:\/usr\/bin"
    puts $infile "#MAILTO=user@somewhere"
    puts $infile "HOME=\/var\/tmp\/"
    if { $::time_select__day_select == "Daily" } {
        puts $infile "$min $hr * * * root \/bin\/bash \/var\/local\/bin\/f5backup.sh 1>\/var\/tmp\/f5backup.log 2>\&1"
    } elseif { $::time_select__day_select == "Weekly" } {
        puts $infile "$min $hr * * $dow_map($::time_select__dow_select) root \/bin\/bash \/var\/local\/bin\/f5backup.sh 1>\/var\/tmp\/f5backup.log 2>\&1"
    } elseif { $::time_select__day_select == "Monthly" } {
        puts $infile "$min $hr $::time_select__dom_select * * root \/bin\/bash \/var\/local\/bin\/f5backup.sh 1>\/var\/tmp\/f5backup.log 2>\&1"
    }
    close $infile

A few notes:

- The dow_map array converts the selected day (e.g., Saturday) to a number for cron (6).
- The variables in the implementation section reference the data supplied from the presentation like so: $::<section>__<presentation variable name> (Note the double underscore between them. As such, DO NOT use double underscores in your presentation variables.)
- tmsh special characters need to be escaped if you’re using them for strings.
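To check the scheduling logic away from a BIG-IP, the three branches above can be sketched in plain Python. This is only an illustration of the same cron field layout, not part of the template: the function name `build_cron_line` and the range checks are my own additions, and it returns the line rather than writing /etc/cron.d/f5backups.

```python
# Day names map to cron's 0-6 numbering, mirroring the dow_map array above.
DOW_MAP = {
    "Sunday": 0, "Monday": 1, "Tuesday": 2, "Wednesday": 3,
    "Thursday": 4, "Friday": 5, "Saturday": 6,
}

# The same command string the template writes for every frequency.
COMMAND = ("root /bin/bash /var/local/bin/f5backup.sh "
           "1>/var/tmp/f5backup.log 2>&1")


def build_cron_line(frequency, hour, minute, day_of_week=None, day_of_month=None):
    """Return one cron.d entry for a Daily, Weekly, or Monthly backup.

    build_cron_line is a hypothetical helper; the range checks are an
    addition that the original template does not perform.
    """
    if not (0 <= hour <= 23 and 0 <= minute <= 59):
        raise ValueError("hour must be 0-23 and minute 0-59")
    if frequency == "Daily":
        dom, dow = "*", "*"
    elif frequency == "Weekly":
        dom, dow = "*", str(DOW_MAP[day_of_week])
    elif frequency == "Monthly":
        if day_of_month is None or not 1 <= day_of_month <= 31:
            raise ValueError("day_of_month must be 1-31")
        dom, dow = str(day_of_month), "*"
    else:
        raise ValueError(f"unknown frequency: {frequency!r}")
    # cron field order: minute hour day-of-month month day-of-week
    return f"{minute} {hour} {dom} * {dow} {COMMAND}"


print(build_cron_line("Daily", 15, 54))
print(build_cron_line("Weekly", 2, 0, day_of_week="Saturday"))
print(build_cron_line("Monthly", 3, 30, day_of_month=28))
```

Keeping the field order in one place makes it easier to see that the weekly branch fills the fifth field and the monthly branch fills the third.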
A successful configuration of the application service results in this file configuration for /etc/cron.d/f5backups:

    SHELL=/bin/bash
    PATH=/sbin:/bin:/usr/sbin:/usr/bin
    #MAILTO=user@somewhere
    HOME=/var/tmp/
    54 15 * * * root /bin/bash /var/local/bin/f5backup.sh 1>/var/tmp/f5backup.log 2>&1

So this backup is scheduled to run daily at 15:54. This is confirmed with this directory listing on my BIG-IP:

    [root@golgotha:Active] bin # ls -las /var/local/backups
    total 2168
       8 drwx------ 2 root root    4096 Aug 16 15:54 .
       8 drwxr-xr-x 9 root root    4096 Aug  3 14:44 ..
    1076 -rw-r--r-- 1 root root 1091639 Aug 15 15:54 f5backup-golgotha.test.local-20110815155401.tar.bz2
    1076 -rw-r--r-- 1 root root 1092259 Aug 16 15:54 f5backup-golgotha.test.local-20110816155401.tar.bz2

Conclusion

This is just scratching the surface of what can be done with the new iApp™ feature in v11. I didn’t even cover the ability to use presentation and implementation libraries, but that will be covered in due time. If you’re impatient, there are already several examples (including this one here) in the codeshare.

Related Articles

- F5 DevCentral > Community > Group Details - iApp
- iApp Wiki Home - DevCentral Wiki
- F5 Agility 2011 - James Hendergart on iApp
- iApp Codeshare - DevCentral Wiki
- iApp Lab 5 - Priority Group Activation - DevCentral Wiki
- iApp Template Development Tips and Techniques - DevCentral Wiki
- Lori MacVittie - iApp

What is server offload and why do I need it?

One of the tasks of an enterprise architect is to design a framework atop which developers can implement and deploy applications consistently and easily. The consistency is important for internal business continuity and reuse; common objects, operations, and processes can be reused across applications to make development and integration with other applications and systems easier. Architects also often decide where functionality resides and design the base application infrastructure framework. Application server, identity management, messaging, and integration are all often a part of such architecture designs.

Rarely does the architect concern him/herself with the network infrastructure, as that is the purview of “that group”; the “you know who I’m talking about” group. And for the most part there’s no need for architects to concern themselves with network-oriented architecture. Applications should not need to know on which VLAN they will be deployed or what their default gateway might be. But what architects might need to know, and probably should know, is whether the network infrastructure supports “server offload” of some application functions, and how that can benefit their enterprise architecture and the applications which will be deployed atop it.

WHAT IT IS

Server offload is a generic term used by the networking industry to indicate some functionality designed to improve the performance or security of applications. We use the term “offload” because the functionality is “offloaded” from the server and moved to an application network infrastructure device instead. Server offload works because the application network infrastructure is, these days, almost always deployed in front of the web/application servers and is in fact acting as a broker (proxy) between the client and the server. Server offload is generally offered by load balancers and application delivery controllers.

You can think of server offload like a relay race.
The application network infrastructure device runs the first leg and then hands off the baton (the request) to the server. When the server is finished, the application network infrastructure device gets to run another leg, and then the race is done as the response is sent back to the client. There are basically two kinds of server offload functionality:

Protocol processing offload

Protocol processing offload includes functions like SSL termination and TCP optimizations. Rather than enable SSL communication on the web/application server, it can be “offloaded” to an application network infrastructure device and shared across all applications requiring secured communications. Offloading SSL to an application network infrastructure device improves application performance because the device is generally optimized to handle the complex calculations involved in encryption and decryption of secured data, and web/application servers are not.

TCP optimization is a little different. We say TCP session management is “offloaded” from the server, but that’s really not what happens, as TCP connections are obviously still opened, closed, and managed on the server as well. Offloading TCP session management means that the application network infrastructure is managing the connections between itself and the server in such a way as to reduce the total number of connections needed without impacting the capacity of the application. This is more commonly referred to as TCP multiplexing, and it “offloads” the overhead of TCP connection management from the web/application server to the application network infrastructure device, with the server effectively giving up control over those connections. By allowing an application network infrastructure device to decide how many connections to maintain and which ones to use to communicate with the server, it can manage thousands of client-side connections using merely hundreds of server-side connections.
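The connection-reuse idea can be illustrated with a toy model in Python. It is only a sketch under simplifying assumptions: the class and function names are hypothetical, "connections" are plain labels rather than sockets, and requests arrive in fixed-size batches that stand in for the number of requests in flight at the same moment. It does not describe how any particular product implements multiplexing.

```python
from collections import deque


class MultiplexingProxy:
    """Toy model of a proxy that reuses a bounded set of server connections."""

    def __init__(self, max_server_connections):
        self.max_server_connections = max_server_connections
        self.idle = deque()   # server-side connections ready for reuse
        self.opened = 0       # server-side connections opened so far

    def acquire(self):
        """Reuse an idle server connection, or open one if under the limit."""
        if self.idle:
            return self.idle.popleft()
        if self.opened >= self.max_server_connections:
            raise RuntimeError("all server-side connections are busy")
        self.opened += 1
        return f"server-conn-{self.opened}"

    def release(self, conn):
        """Return a connection to the idle pool once the response is done."""
        self.idle.append(conn)


def simulate(total_clients, in_flight, max_server_connections):
    """Serve one request per client, `in_flight` requests at a time."""
    proxy = MultiplexingProxy(max_server_connections)
    for start in range(0, total_clients, in_flight):
        batch_size = min(in_flight, total_clients - start)
        conns = [proxy.acquire() for _ in range(batch_size)]  # concurrent requests
        for conn in conns:
            proxy.release(conn)                               # responses complete
    return proxy.opened


# 5000 clients, at most 200 requests in flight at any moment.
print(simulate(total_clients=5000, in_flight=200, max_server_connections=500))
```

In this model the number of server-side connections tracks the peak number of simultaneous requests rather than the number of clients, which is the effect the paragraph above describes.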
Reducing the overhead associated with opening and closing TCP sockets on the web/application server improves application performance and actually increases the user capacity of servers. TCP offload is beneficial to all TCP-based applications, but is particularly beneficial for Web 2.0 applications making use of AJAX and other near real-time technologies that maintain one or more connections to the server for their functionality.

Protocol processing offload does not require any modifications to the applications.

Application-oriented offload

Application-oriented offload includes the ability to implement shared services on an application network infrastructure device. This is often accomplished via a network-side scripting capability, but some functionality has become so commonplace that it is now built into the core features available on application network infrastructure solutions. Application-oriented offload can include functions like cookie encryption/decryption, compression, caching, URI rewriting, HTTP redirection, DLP (Data Leak Prevention), selective data encryption, application security functionality, and data transformation. When network-side scripting is available, virtually any kind of pre- or post-processing can be offloaded to the application network infrastructure and thereafter shared with all applications.

Application-oriented offload works because the application network infrastructure solution is mediating between the client and the server and it has the ability to inspect and manipulate the application data. The benefits of application-oriented offload are that the services implemented can be shared across multiple applications and in many cases the functionality removes the need for the web/application server to handle a specific request. For example, HTTP redirection can be fully accomplished on the application network infrastructure device.
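As a rough illustration of a request being answered without ever reaching the server, the following Python sketch models a proxy that handles redirects itself. The redirect table, the paths in it, and the `handle_request` function are all hypothetical; a real device would express this in its own configuration or scripting language.

```python
# Hypothetical redirect table kept on the proxy device.
REDIRECTS = {
    "/old-app/": "/new-app/",   # e.g. an application upgrade
    "/hmoe": "/home",           # e.g. a commonly mistyped URI
}


def handle_request(path, forward_to_server):
    """Answer known redirects locally; forward everything else."""
    target = REDIRECTS.get(path)
    if target is not None:
        # The response is built on the proxy; the server never sees the request.
        return 302, {"Location": target}, b""
    return forward_to_server(path)


def demo_server(path):
    """Stand-in for the web/application server."""
    return 200, {}, f"content for {path}".encode()


print(handle_request("/hmoe", demo_server))
print(handle_request("/home", demo_server))
```

Only the second request costs the server any work; the first is completed entirely by the intermediary.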
HTTP redirection is often used as a means to handle application upgrades, commonly mistyped URIs, or as part of the application logic when certain conditions are met.

Application security offload usually falls into this category because it is specific to the application, or at least to the application data. Application security offload can include scanning URIs and data for malicious content, validating the existence of specific cookies/data required for the application, and so on. This kind of offload improves server efficiency and performance, but a bigger benefit is consistent, shared security across all applications for which the service is enabled.

Some application-oriented offload can require modification to the application, so it is important to design such features into the application architecture before development and deployment. While it is certainly possible to add such functionality into the architecture after deployment, it is always easier to do so at the beginning.

WHY YOU NEED IT

Server offload is a way to increase the efficiency of servers and improve application performance and security. Server offload increases efficiency of servers by alleviating the need for the web/application server to consume resources performing tasks that can be performed more efficiently on an application network infrastructure solution. The two best examples of this are SSL encryption/decryption and compression. Both are CPU-intensive operations that can consume 20-40% of a web/application server’s resources. By offloading these functions to an application network infrastructure solution, servers “reclaim” those resources and can use them instead to execute application logic, serve more users, handle more requests, and do so faster.
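To put the 20-40% figure in perspective, here is a back-of-the-envelope calculation in Python. It assumes, purely for illustration, that the server is CPU-bound and that application throughput scales linearly with the CPU available to application logic; real servers will deviate from this, and `capacity_gain` is a hypothetical helper.

```python
def capacity_gain(offloaded_fraction):
    """Relative capacity for application logic after offloading.

    If a fraction f of CPU was spent on SSL and compression, application
    logic previously had (1 - f) of the CPU and now has all of it, so the
    gain is 1 / (1 - f). Assumes a CPU-bound server and linear scaling.
    """
    if not 0 <= offloaded_fraction < 1:
        raise ValueError("offloaded_fraction must be in [0, 1)")
    return 1 / (1 - offloaded_fraction)


for fraction in (0.20, 0.40):
    print(f"{fraction:.0%} offloaded -> {capacity_gain(fraction):.2f}x capacity")
```

Under these assumptions, reclaiming 20% of CPU gives roughly 1.25 times the capacity for application work, and reclaiming 40% gives roughly 1.67 times.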
Server offload improves application performance by allowing the web/application server to concentrate on what it is designed to do, which is to serve applications, and by putting the onus for performing ancillary functions on a platform that is better optimized to handle those functions.

Server offload provides these benefits whether you have a traditional client-server architecture or have moved (or are moving) toward a virtualized infrastructure. Applications deployed on virtual servers still use TCP connections and SSL and run applications, and therefore will benefit the same as those deployed on traditional servers.

- I am wondering why not all websites enabling this great feature GZIP?
- 3 Really good reasons you should use TCP multiplexing
- SOA & Web 2.0: The Connection Management Challenge
- Understanding network-side scripting
- I am in your HTTP headers, attacking your application
- Infrastructure 2.0: As a matter of fact that isn't what it means

Full examples of iControlREST for device and application service deployment