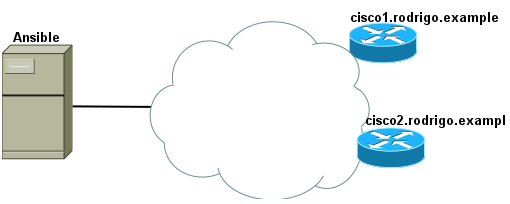

How to use Ansible with Cisco routers

Quick Intro

For those who don't know, there is an Ansible plugin called network_cli that can retrieve network device configuration for backup and inspection, and even execute commands.

So, let's assume we have Ansible already installed and 2 routers:

I used Debian Linux here and I had to install Python 3:

I've also installed pip, as we can see above, because I wanted to install a specific version of Ansible (2.5+) that would allow me to use the network_cli plugin:

Note: we can list available Ansible versions by just typing pip install ansible==

I've also created a user named ansible:

Edited the Linux sudoers file with the visudo command:

And added permission for the ansible user to run root commands without prompting for a password, so my file looked like this:

Quick Set Up

This is my directory structure:

These are the files I used for this lab test:

Note: we can replace cisco1.rodrigo.example with an IP address too.

In Ansible, there is a default config file (ansible.cfg) where we store the global config, i.e. how we want Ansible to behave. We also keep the list of our hosts in an inventory file (inventory.yml here). There is a default folder (group_vars) where we can store variables that apply to any router we run Ansible against, which makes sense in this case as my router credentials are the same. Lastly, retrieve_backup.yml is my actual playbook, i.e. where I tell Ansible what to do.

Note: I manually logged in to cisco1.rodrigo.example and cisco2.rodrigo.example to populate the ssh known_hosts file, otherwise Ansible complains these hosts are untrusted.

Populating our Playbook file retrieve_backup.yml

Let's say we just want to retrieve the OSPF configuration from our Cisco routers. We can use ios_command to send any command to a Cisco router and use register to store the output in a variable:

Note: be careful with the indentation. I used 2 spaces here.

We can then copy the content of the variable to a file in a given directory.
In this case, we copied whatever is in the ospf_output variable to the ospf_config directory.

From the Playbook file above we can work out that variables are referenced between {{ }}, and we might be wondering why we need to append stdout[0] to ospf_output, right? If you know Python, you might be interested in knowing a bit more about what's going on under the hood, so I'll clarify things a bit more here.

The variable ospf_output is actually a dictionary and stdout is one of its keys. In reality, ospf_output.stdout could be represented as ospf_output['stdout']. We add the [0] because the object retrieved by the key stdout is not a string. It's a list! And [0] just represents the first object in the list.

Executing our Playbook

I'll create ospf_config first:

And we execute our playbook by issuing the ansible-playbook command:

Now let's check if our OSPF config was retrieved:

We can pretty much type in any IOS command we'd type in a real router, either to configure it or to retrieve its configuration. We could also append the date to the file name but it's out of the scope of this article.

That's it for now.

Converting a Cisco ACE configuration file to F5 BIG-IP Format

In September, Cisco announced that it was ceasing development and pulling back on sales of its Application Control Engine (ACE) load balancing modules. Customers of Cisco’s ACE product line will now have to look for a replacement product to solve their load balancing and application delivery needs.

One of the first questions that will come up when a customer starts looking into replacement products surrounds the issue of upgradability. Will the customer be able to import their current configuration into the new technology, or will they have to start with the new product from scratch? For smaller businesses, starting over can be a refreshing way to clean up some of the things you’ve been meaning to but weren’t able to for one reason or another. But for a large majority of the users out there, starting over from nothing with a new product is a daunting task.

To help those users considering a move to the F5 universe, DevCentral has included several scripts to assist with the configuration migration process. In our Codeshare section we created some scripts useful in converting ACE configurations into their respective F5 counterparts.

https://devcentral.f5.com/s/articles/cisco-ace-to-f5-big-ip
https://devcentral.f5.com/s/articles/Cisco-ACE-to-F5-Conversion-Python-3
https://devcentral.f5.com/s/articles/cisco-ace-to-f5-big-ip-via-tmsh

We also have scripts covering Cisco’s CSS (https://devcentral.f5.com/s/articles/cisco-css-to-f5-big-ip) and CSM (https://devcentral.f5.com/s/articles/cisco-csm-to-f5-big-ip) products.

In this article, I’m going to focus on the “ace2f5-tmsh” script in the ace2f5.zip script library. The script takes an ACE configuration as input and creates a TMSH script that creates the corresponding F5 BIG-IP objects.

ace2f5-tmsh.pl

    $ perl ace2f5-tmsh.pl ace_config > tmsh_script

We could leave it at that, but I’ll use this article to discuss the components of the ACE configuration and how they map to F5 objects.
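As a rough illustration of the kind of line-by-line translation such a script performs, here is a minimal Python sketch. It is not the Perl script itself: the function name ace_route_to_tmsh is hypothetical, and the object naming (network, a hyphen, then the prefix length) is only an assumption that mirrors the net route example given in this article.

```python
import ipaddress


def ace_route_to_tmsh(line: str) -> str:
    """Translate 'ip route <network> <netmask> <gateway>' into a tmsh net route.

    Hypothetical helper for illustration; not part of ace2f5-tmsh.pl.
    """
    fields = line.split()
    if len(fields) != 5 or fields[:2] != ["ip", "route"]:
        raise ValueError(f"not an ACE ip route statement: {line!r}")
    network, netmask, gateway = fields[2:]
    # Count the set bits in the dotted netmask to get the prefix length
    # (assumes a contiguous netmask such as 255.255.255.0).
    prefix = bin(int(ipaddress.IPv4Address(netmask))).count("1")
    # Validate the gateway; IPv4Address raises ValueError if it is malformed.
    ipaddress.IPv4Address(gateway)
    return f"net route {network}-{prefix} {{ network {network}/{prefix} gw {gateway} }}"


print(ace_route_to_tmsh("ip route 0.0.0.0 0.0.0.0 10.211.143.1"))
```

The other object types follow the same pattern of matching a statement and emitting the corresponding tmsh object, although multi-line objects such as serverfarm need state carried across lines.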
ip

The ip object in the ACE configuration is defined like this:

    ip route 0.0.0.0 0.0.0.0 10.211.143.1

It equates to a tmsh “net route” command.

    net route 0.0.0.0-0 {
        network 0.0.0.0/0
        gw 10.211.143.1
    }

rserver

An “rserver” is basically a node containing a server address, including an optional “inservice” attribute indicating whether it’s active or not.

ACE Configuration

    rserver host R190-JOEINC0060
      ip address 10.213.240.85
    rserver host R191-JOEINC0061
      ip address 10.213.240.86
      inservice
    rserver host R192-JOEINC0062
      ip address 10.213.240.88
      inservice
    rserver host R193-JOEINC0063
      ip address 10.213.240.89
      inservice

These entries are used to find the IP address for a given rserver hostname.

serverfarm

A serverfarm is an LTM pool, except that it doesn’t have a port assigned to it yet.

ACE Configuration

    serverfarm host MySite-JoeInc
      predictor hash url
      rserver R190-JOEINC0060
        inservice
      rserver R191-JOEINC0061
        inservice
      rserver R192-JOEINC0062
        inservice
      rserver R193-JOEINC0063
        inservice

F5 Configuration

    ltm pool Insiteqa-JoeInc {
        load-balancing-mode predictive-node
        members { 10.213.240.86:any { address 10.213.240.86 }}
        members { 10.213.240.88:any { address 10.213.240.88 }}
        members { 10.213.240.89:any { address 10.213.240.89 }}
    }

probe

A “probe” is an LTM monitor, except that it does not have a port.

ACE Configuration

    probe tcp MySite-JoeInc
      interval 5
      faildetect 2
      passdetect interval 10
      passdetect count 2

It maps to the TMSH “ltm monitor” command.

F5 Configuration

    ltm monitor Insiteqa-JoeInc {
        defaults from tcp
        interval 5
        timeout 10
        retry 2
    }

sticky

The “sticky” object is a way to create a persistence profile. First you tie the serverfarm to the persist profile, then you tie the profile to the Virtual Server.
ACE Configuration

    sticky ip-netmask 255.255.255.255 address source MySite-JoeInc-sticky
      timeout 60
      replicate sticky
      serverfarm MySite-JoeInc

class-map

A “class-map” assigns a listener, or Virtual IP address and port number, which is used for the clientside and serverside of the connection.

ACE Configuration

    class-map match-any vip-MySite-JoeInc-12345
      2 match virtual-address 10.213.238.140 tcp eq 12345
    class-map match-any vip-MySite-JoeInc-1433
      2 match virtual-address 10.213.238.140 tcp eq 1433
    class-map match-any vip-MySite-JoeInc-31314
      2 match virtual-address 10.213.238.140 tcp eq 31314
    class-map match-any vip-MySite-JoeInc-8080
      2 match virtual-address 10.213.238.140 tcp eq 8080
    class-map match-any vip-MySite-JoeInc-http
      2 match virtual-address 10.213.238.140 tcp eq www
    class-map match-any vip-MySite-JoeInc-https
      2 match virtual-address 10.213.238.140 tcp eq https

policy-map

A policy-map of type loadbalance simply ties the persistence profile to the Virtual Server. The “multi-match” attribute constructs the virtual server by tying a bunch of objects together.
ACE Configuration

    policy-map type loadbalance first-match vip-pol-MySite-JoeInc
      class class-default
        sticky-serverfarm MySite-JoeInc-sticky
    policy-map multi-match lb-MySite-JoeInc
      class vip-MySite-JoeInc-http
        loadbalance vip inservice
        loadbalance policy vip-pol-MySite-JoeInc
        loadbalance vip icmp-reply
      class vip-MySite-JoeInc-https
        loadbalance vip inservice
        loadbalance vip icmp-reply
      class vip-MySite-JoeInc-12345
        loadbalance vip inservice
        loadbalance policy vip-pol-MySite-JoeInc
        loadbalance vip icmp-reply
      class vip-MySite-JoeInc-31314
        loadbalance vip inservice
        loadbalance policy vip-pol-MySite-JoeInc
        loadbalance vip icmp-reply
      class vip-MySite-JoeInc-1433
        loadbalance vip inservice
        loadbalance policy vip-pol-MySite-JoeInc
        loadbalance vip icmp-reply
      class reals
        nat dynamic 1 vlan 240
      class vip-MySite-JoeInc-8080
        loadbalance vip inservice
        loadbalance policy vip-pol-MySite-JoeInc
        loadbalance vip icmp-reply

F5 Configuration

    ltm virtual vip-Insiteqa-JoeInc-12345 {
        destination 10.213.238.140:12345
        pool Insiteqa-JoeInc
        persist my_source_addr
        profiles { tcp {} }
    }
    ltm virtual vip-Insiteqa-JoeInc-1433 {
        destination 10.213.238.140:1433
        pool Insiteqa-JoeInc
        persist my_source_addr
        profiles { tcp {} }
    }
    ltm virtual vip-Insiteqa-JoeInc-31314 {
        destination 10.213.238.140:31314
        pool Insiteqa-JoeInc
        persist my_source_addr
        profiles { tcp {} }
    }
    ltm virtual vip-Insiteqa-JoeInc-8080 {
        destination 10.213.238.140:8080
        pool Insiteqa-JoeInc
        persist my_source_addr
        profiles { tcp {} }
    }
    ltm virtual vip-Insiteqa-JoeInc-http {
        destination 10.213.238.140:http
        pool Insiteqa-JoeInc
        persist my_source_addr
        profiles { tcp {} http {} }
    }
    ltm virtual vip-Insiteqa-JoeInc-https {
        destination 10.213.238.140:https
        profiles { tcp {} }
    }

Conclusion

If you are considering migrating from Cisco’s ACE to F5, I’d suggest you take a look at the Cisco conversion scripts to assist with the conversion.

F5 & Cisco ACI Essentials - Take advantage of Policy Based Redirect

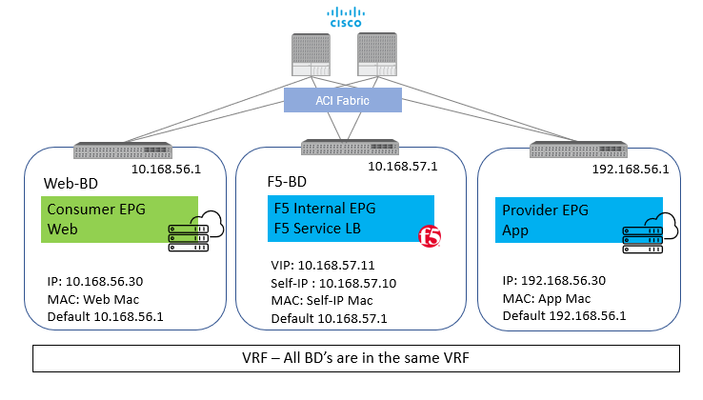

Different applications and environments have unique needs for how traffic is handled. Some applications, due to the nature of their functionality or a business need, require that the application server(s) be able to see the real IP of the client making the request. When a request reaches the BIG-IP, the BIG-IP can either change the source IP of the request or keep it intact. To keep it intact, the ‘Source Address Translation’ setting on the F5 BIG-IP is set to ‘None’.

As simple as it may sound to just toggle a setting on the BIG-IP, changing this setting significantly changes traffic flow behavior.

Let’s take an example with some actual values, starting with a simple setup of a standalone BIG-IP with one interface on the BIG-IP for all traffic (one-arm):

- Client – 10.168.56.30
- BIG-IP Virtual IP – 10.168.57.11
- BIG-IP Self IP – 10.168.57.10
- Server – 192.168.56.30

Scenario 1: With SNAT

- From Client: Src: 10.168.56.30 Dest: 10.168.57.11
- From BIG-IP to Server: Src: 10.168.57.10 (Self-IP) Dest: 192.168.56.30

With this, the server will respond back to 10.168.57.10 and the BIG-IP will take care of forwarding the traffic back to the client. Here the application server sees the IP 10.168.57.10 and not the client IP.

Scenario 2: No SNAT

- From Client: Src: 10.168.56.30 Dest: 10.168.57.11
- From BIG-IP to Server: Src: 10.168.56.30 Dest: 192.168.56.30

With this, the server will respond back to 10.168.56.30, and here is where the complication comes in: the return traffic needs to go back to the BIG-IP and not directly to the real client. One way to achieve this is to set the default gateway of the server to the Self-IP of the BIG-IP, so that the server sends the return traffic to the BIG-IP. BUT what if the server default gateway cannot be changed, for whatever reason? This is where Policy Based Redirect (PBR) helps.
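The two scenarios above can be summarized in a small Python sketch. It only models the source and destination addresses from the example; the names Packet, bigip_to_server and server_reply are hypothetical and do not correspond to any BIG-IP or ACI API.

```python
from typing import NamedTuple


class Packet(NamedTuple):
    src: str
    dst: str


def bigip_to_server(client_ip: str, self_ip: str, server_ip: str, snat: bool) -> Packet:
    """Packet the BIG-IP sends to the pool member for a client request."""
    # With SNAT the BIG-IP replaces the client source with its own self IP;
    # without SNAT the client source address is left intact.
    return Packet(src=self_ip if snat else client_ip, dst=server_ip)


def server_reply(request: Packet) -> Packet:
    """The server answers whatever source address it saw in the request."""
    return Packet(src=request.dst, dst=request.src)


CLIENT, SELF_IP, SERVER = "10.168.56.30", "10.168.57.10", "192.168.56.30"

for snat in (True, False):
    req = bigip_to_server(CLIENT, SELF_IP, SERVER, snat)
    rep = server_reply(req)
    print(f"SNAT={snat}: server sees {req.src}, replies to {rep.dst}")
```

In the no-SNAT case the reply is addressed to the client itself, so something on the return path has to steer it back through the BIG-IP.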
The default gateway of the server will point to the ACI fabric, and the ACI fabric will intercept the traffic and send it over to the BIG-IP.

With this, using PBR has the following advantages:

- The server(s) default gateway does not need to point to the BIG-IP but can point to the ACI fabric
- The real client IP is preserved for the entire traffic flow
- Server-originated traffic does not hit the BIG-IP, which would otherwise require a forwarding virtual server on the BIG-IP to handle that traffic. If the volume of server-originated traffic is high, it could put unnecessary load on the BIG-IP

Before we get deeper into the topic of PBR, below are a few links to help you refresh on some of the Cisco ACI and BIG-IP concepts:

- ACI fundamentals: https://www.cisco.com/c/en/us/td/docs/switches/datacenter/aci/apic/sw/1-x/aci-fundamentals/b_ACI-Fundamentals.html
- SNAT and Automap: https://support.f5.com/csp/article/K7820
- BIG-IP modes of deployment: https://support.f5.com/csp/article/K96122456#link_02_01

Now let’s look at what it takes to configure PBR using a Standalone BIG-IP Virtual Edition in One-Arm mode.

Network diagram for reference:

To use the PBR feature on APIC, a service graph is a MUST.

- Details on L4-L7 service graph on APIC
- To get hands on experience on deploying a service graph (without pbr)

Configuration on APIC

1) Bridge domain ‘F5-BD’

Under Tenant->Networking->Bridge domains->’F5-BD’->Policy

- IP Data plane learning - Disabled

2) L4-L7 Policy-Based Redirect

Under Tenant->Policies->Protocol->L4-L7 Policy based redirect, create a new one

- Name: ‘bigip-pbr-policy’
- L3 destinations: BIG-IP Self-IP and MAC
- IP: 10.168.57.10
- MAC: Find the MAC of the interface the above Self-IP is assigned to by logging into the BIG-IP (example: 00:50:56:AC:D2:81)

3) Logical Device Cluster

Under Tenant->Services->L4-L7, create a logical device

- Managed – unchecked
- Name: ‘pbr-demo-bigip-ve’
- Service Type: ADC
- Device Type: Virtual (in this example)
- VMM domain (choose the appropriate VMM domain)
- Devices: Add the BIG-IP VM from the dropdown and assign it an interface
- Name: ‘1_1’, VNIC: ‘Network Adaptor 2’
- Cluster interfaces
- Name: consumer, Concrete interface: Device1/[1_1]
- Name: provider, Concrete interface: Device1/[1_1]

4) Service graph template

Under Tenant->Services->L4-L7->Service graph templates, create a service graph template

- Give the graph a name: ‘pbr-demo-sgt’ and then drag and drop the logical device cluster (pbr-demo-bigip-ve) to create the service graph
- ADC: one-arm
- Route redirect: true

5) Click on the service graph created and then go to the Policy tab. Make sure the Connections for the connectors C1 and C2 are set as follows:

Connector C1

- Direct connect – False (Not mandatory to set to 'True' because PBR is not enabled on consumer connector for the consumer to VIP traffic)
- Adjacency type – L3

Connector C2

- Direct connect - True
- Adjacency type - L3

6) Apply the service graph template

Right click on the service graph and apply the service graph

- Choose the appropriate consumer End point group (‘App’) and provider End point group (‘Web’), and provide a name for the new contract
- For the connector select the following:
- BD: ‘F5-BD’
- L3 destination – checked
- Redirect policy – ‘bigip-pbr-policy’
- Cluster interface – ‘provider’

Once the service graph is deployed, it is in applied state and the network path between the consumer, BIG-IP and provider has been successfully set up on the APIC.

7) Verify the connector configuration for PBR

Go to Device selection policy under Tenant->Services->L4-L7. Expand the menu and click on the device selection policy deployed for your service graph.
For the consumer connector where PBR is not enabled:

- Connector name - Consumer
- Cluster interface - 'provider'
- BD - ‘F5-BD’
- L3 destination – checked
- Redirect policy – Leave blank (no selection)

For the provider connector where PBR is enabled:

- Connector name - Provider
- Cluster interface - 'provider'
- BD - ‘F5-BD’
- L3 destination – checked
- Redirect policy – ‘bigip-pbr-policy’

Configuration on BIG-IP

1) VLAN/Self-IP/Default route

- Default route – 10.168.57.1
- Self-IP – 10.168.57.10
- VLAN – 4094 (untagged) – for a VE the tagging is taken care of by vCenter

2) Nodes/Pool/VIP

- VIP – 10.168.57.11
- Source address translation on VIP: None

3) iRule (end of the article) that can be helpful for debugging

A few differences in configuration when the BIG-IP is a Virtual Edition and is set up in a high availability pair:

1) BIG-IP: Set MAC Masquerade (https://support.f5.com/csp/article/K13502)

2) APIC: Logical device cluster

- Promiscuous mode – enabled
- Add both BIG-IP devices as part of the cluster

3) APIC: L4-L7 Policy-Based Redirect

- L3 destinations: Enter the Floating BIG-IP Self-IP and MAC masquerade

Configuration is complete, let’s take a look at the traffic flows:

1. Client -> F5 BIG-IP -> Server
2. Server -> F5 BIG-IP -> Client

In Step 2, when the traffic is returned from the server, ACI uses the Self-IP and MAC that were defined in the L4-L7 redirect policy to send traffic to the BIG-IP.

iRule to help with debugging on the BIG-IP

    when LB_SELECTED {
        log local0. "=================================================="
        log local0. "Selected server [LB::server]"
        log local0. "=================================================="
    }
    when HTTP_REQUEST {
        set LogString "[IP::client_addr] -> [IP::local_addr]"
        log local0. "=================================================="
        log local0. "REQUEST -> $LogString"
        log local0. "=================================================="
    }
    when SERVER_CONNECTED {
        log local0. "Connection from [IP::client_addr] Mapped -> [serverside {IP::local_addr}] \
            -> [IP::server_addr]"
    }
    when HTTP_RESPONSE {
        set LogString "Server [IP::server_addr] -> [IP::local_addr]"
        log local0. "=================================================="
        log local0. "RESPONSE -> $LogString"
        log local0. "=================================================="
    }

Output seen in /var/log/ltm on the BIG-IP, look at the event <SERVER_CONNECTED>

Scenario 1: No SNAT -> Client IP is preserved

    Rule /Common/connections <HTTP_REQUEST>: Src: 10.168.56.30 -> Dest: 10.168.57.11
    Rule /Common/connections <SERVER_CONNECTED>: Src: 10.168.56.30 Mapped -> 10.168.56.30 -> Dest: 192.168.56.30
    Rule /Common/connections <HTTP_RESPONSE>: Src: 192.168.56.30 -> Dest: 10.168.56.30

If you are curious about the iRule output when SNAT is enabled on the BIG-IP, enable AutoMap on the virtual server on the BIG-IP.

Scenario 2: With SNAT -> Client IP not preserved

    Rule /Common/connections <HTTP_REQUEST>: Src: 10.168.56.30 -> Dest: 10.168.57.11
    Rule /Common/connections <SERVER_CONNECTED>: Src: 10.168.56.30 Mapped -> 10.168.57.10 -> Dest: 192.168.56.30
    Rule /Common/connections <HTTP_RESPONSE>: Src: 192.168.56.30 -> Dest: 10.168.56.30

References:

- ACI PBR whitepaper: https://www.cisco.com/c/en/us/solutions/collateral/data-center-virtualization/application-centric-infrastructure/white-paper-c11-739971.html
- Troubleshooting guide: https://www.cisco.com/c/dam/en/us/td/docs/switches/datacenter/aci/apic/sw/4-x/troubleshooting/Cisco_TroubleshootingApplicationCentricInfrastructureSecondEdition.pdf
- Layer4-Layer7 services deployment guide: https://www.cisco.com/c/en/us/td/docs/switches/datacenter/aci/apic/sw/4-x/L4-L7-services/Cisco-APIC-Layer-4-to-Layer-7-Services-Deployment-Guide-401/Cisco-APIC-Layer-4-to-Layer-7-Services-Deployment-Guide-401_chapter_011.html
- Service graph: https://www.cisco.com/c/en/us/td/docs/switches/datacenter/aci/apic/sw/4-x/L4-L7-services/Cisco-APIC-Layer-4-to-Layer-7-Services-Deployment-Guide-401/Cisco-APIC-Layer-4-to-Layer-7-Services-Deployment-Guide-401_chapter_0111.html
- https://www.cisco.com/c/en/us/td/docs/switches/datacenter/aci/apic/sw/4-x/L4-L7-services/Cisco-APIC-Layer-4-to-Layer-7-Services-Deployment-Guide-401.pdf

Unmanaged Mode - what it means for ACI and BIG-IP integration [End of Life]

The F5 and Cisco APIC integration based on the device package and iWorkflow is End Of Life. The latest integration is based on the Cisco AppCenter application named ‘F5 ACI ServiceCenter’. Visit https://f5.com/cisco for updated information on the integration.

The adoption of Cisco ACI with the APIC controller continues to gain traction in the market. With their latest major APIC release, 1.2.1i, Cisco has streamlined how ADCs are connected to the fabric. There have traditionally been two methods of connecting services:

- Service Insertion with a device package and a service graph (Managed)
- Connecting a device as an endpoint device into an EPG (Unmanaged)

What Cisco has done is simplify how a services device can be connected into the fabric as a device that is Unmanaged by the APIC. This is known as “Unmanaged Mode”, as opposed to the “Managed Mode” where a device package is used. Instead of the usual multi-step manual configuration process for specifying the network configuration in APIC, the attachment has been consolidated into the service graph.

Before, it was necessary to manually static bind the VLAN to the EPG (Provider and Consumer) and assign the physical domain to the EPG. It was also required to bind contracts between multiple Provider and Consumer EPGs.

Now, all you have to do is go into the service graph and specify connectivity just like you were building a managed service graph. By doing this, there is now one common location and workflow for configuring services. The process is simplified.
Advantages

- Service graph representation with Unmanaged and Managed modes mixed (a few devices managed by APIC, a few devices NOT managed by APIC)
- Unified view of a service chain

Why needed

- Customers have requested an “Unmanaged” mode for their custom devices
- Need to manage their own security policies outside of the networking team
- Need to use their existing orchestration infrastructure for particular devices in the chain
- Need to use advanced vendor specific functionality

What this means for BIG-IP integration

- BIG-IP is attached as an EPG, but this mode can now be represented within a service graph

Difference between Managed and Unmanaged mode

| Mode | Goal |
| --- | --- |
| Unmanaged Mode (Device managed externally) | Service Chaining (traffic redirection) through ACI |
| Managed Mode (Device managed by device package) | Service Chaining (traffic redirection) through ACI; Device configuration through APIC (Policy based configuration) |

Some prerequisites for deploying an Unmanaged logical device cluster

BIG-IP:

- Define VLANs, Self IPs and trunk (if needed)

APIC:

- VLAN Pool – Static allocation
- Fabric access policies to ensure physical connectivity to BIG-IP

A video with more details on how to deploy a BIG-IP in Unmanaged mode on APIC is available at https://www.youtube.com/watch?v=OJPEYzNGD3A

Once deployed as an Unmanaged device cluster with traffic redirection through ACI, configure your BIG-IP with nodes, pools, monitors, virtual servers and all other features required by your application like you always do, using the BIG-IP GUI/CLI etc. (not through Cisco ACI).

References:

- http://www.cisco.com/c/en/us/td/docs/switches/datacenter/aci/apic/sw/1-x/L4-L7_Services_Deployment/guide/b_L4L7_Deploy_ver121x/b_L4L7_Deploy_ver121x_chapter_010000.pdf
- http://blogs.cisco.com/datacenter/new-innovations-for-l4-7-network-services-integration-with-ciscos-aci-approach

Unify Visibility with F5 ACI ServiceCenter in Cisco ACI and F5 BIG-IP Deployments

What is F5 ACI ServiceCenter?

F5 ACI ServiceCenter is an application that runs natively on the Cisco Application Policy Infrastructure Controller (APIC) and provides administrators a unified way to manage both L2-L3 and L4-L7 infrastructure in F5 BIG-IP and Cisco ACI deployments. Once day-0 activities are performed and BIG-IP is deployed within the ACI fabric, F5 ACI ServiceCenter can then be used to handle day-1 and day-2 operations. F5 ACI ServiceCenter is well suited for both greenfield and brownfield deployments.

F5 ACI ServiceCenter is a successful and popular integration between F5 BIG-IP and Cisco Application Centric Infrastructure (ACI). This integration is loosely coupled and can be installed and uninstalled at any time without any disruption to the APIC and the BIG-IP. F5 ACI ServiceCenter supports a REST API and can be easily integrated into your automation workflow: F5 ACI ServiceCenter Supported REST APIs.

Where can we download F5 ACI ServiceCenter?

F5 ACI ServiceCenter is completely free of charge and is available to download from Cisco DC App Center. F5 ACI ServiceCenter is fully supported by F5. If you run into any issues and/or would like to see a new feature or an enhancement integrated into future F5 ACI ServiceCenter releases, you can open a support ticket here.

Why should we use F5 ACI ServiceCenter?

F5 ACI ServiceCenter has three main independent use cases, and you have the flexibility to use them all or to pick whichever ones fit your requirements:

Visibility

F5 ACI ServiceCenter provides enhanced visibility into your F5 BIG-IP and Cisco ACI deployment. It has the capability to correlate BIG-IP and APIC information. For example, you can easily find the correlated APIC Endpoint information for a BIG-IP VIP, and you can also easily determine the APIC Virtual Routing and Forwarding (VRF) to BIG-IP Route Domain (RD) mapping from F5 ACI ServiceCenter.
You can efficiently gather the correlated information from both the APIC and the BIG-IP on F5 ACI ServiceCenter without the need to hop between BIG-IP and APIC. You can also gather the health status, the logs, statistics etc. on F5 ACI ServiceCenter.

L2-L3 Network Configuration

After BIG-IP is inserted into the ACI fabric using an APIC service graph, F5 ACI ServiceCenter can extract the APIC service graph VLANs from the APIC and then deploy the VLANs on the BIG-IP. This capability allows you to always have a single source of truth for network configuration between BIG-IP and APIC.

L4-L7 Application Services

F5 ACI ServiceCenter leverages the F5 Automation Toolchain for application services:

- Advanced mode, which uses AS3 (Application Services 3 Extension)
- Basic mode, which uses FAST (F5 Application Services Templates)

F5 ACI ServiceCenter also has the ability to dynamically add or remove pool members from a pool on the BIG-IP based on the endpoints discovered by the APIC, which helps to reduce configuration overhead.

Other Features

F5 ACI ServiceCenter can manage multiple BIG-IPs, physical as well as virtual. If Link Layer Discovery Protocol (LLDP) is enabled on the interfaces between Cisco ACI and F5 BIG-IP, F5 ACI ServiceCenter can discover the BIG-IP and add it to the device list as well. F5 ACI ServiceCenter can also categorize the BIG-IP accordingly, for example, whether it is standalone or in a high availability (HA) cluster. Starting from version 2.11, F5 ACI ServiceCenter supports multi-tenant design too.

These are just some of the features. To find out more, check out the F5 ACI ServiceCenter User and Deployment Guide.
F5 ACI ServiceCenter Resources

Webinar:

- Unify Your Deployment for Visibility with Cisco and the F5 ACI ServiceCenter

Learn:

- F5 DevCentral Youtube Videos: F5 ACI ServiceCenter Playlist
- Cisco Learning Video: Configuring F5 BIG-IP from APIC using F5 ACI ServiceCenter
- Cisco ACI and F5 BIG-IP Design Guide White Paper

Hands-on:

- F5 ACI ServiceCenter Interactive Demo
- Cisco dCloud Lab - Cisco ACI with F5 ServiceCenter Lab v3

Get Started:

- Download F5 ACI ServiceCenter
- F5 ACI ServiceCenter User and Deployment Guide

F5 and Cisco ACI Essentials - Design guide for a Single Pod APIC cluster

Deployment considerations

It is usually an easy decision to have BIG-IP as part of your ACI deployment, as BIG-IP is a mature, feature-rich ADC solution. Where time is spent is nailing down the design and the deployment options for the BIG-IP in the environment. Below we will discuss a few of the most commonly asked questions:

SNAT or no SNAT

There are various options you can use to insert the BIG-IP into the ACI environment. One way is to use the BIG-IP as a gateway for servers or as a routing next hop for routing instances. Another option is to use Source Network Address Translation (SNAT) on the BIG-IP; however, with SNAT enabled, visibility into the real source IP address is lost. If preserving the source IP is a requirement, then ACI's Policy-Based Redirect (PBR) can be used to make sure the return traffic goes back to the BIG-IP.

BIG-IP redundancy

F5 BIG-IP can be deployed in different high-availability modes. The two common BIG-IP deployment modes are active-active and active-standby. Various design considerations, such as endpoint movement during fail-overs, MAC masquerade, source MAC-based forwarding, Link Layer Discovery Protocol (LLDP), and IP aging should also be taken into account for each of the deployment modes.

Multi-tenancy

Multi-tenancy is supported by both Cisco ACI and F5 BIG-IP in different ways. There are a few ways that multi-tenancy constructs on ACI can be mapped to multi-tenancy on BIG-IP. The constructs revolve around tenants, virtual routing and forwarding (VRF), route domains, and partitions. Multi-tenancy can also be based on the BIG-IP form factor (appliance, virtual edition and/or virtual clustered multiprocessor (vCMP)).

Tighter integration

Once a design option is selected, there are questions around what more can be done from an operational or automation perspective now that we have a BIG-IP and ACI deployment. The F5 ACI ServiceCenter is an application developed on the Cisco ACI App Center platform built for exactly that purpose.
It is an integration point between the F5 BIG-IP and Cisco ACI. The application provides an APIC administrator a unified way to manage both L2-L3 and L4-L7 infrastructure. Once day-0 activities are performed and BIG-IP is deployed within the ACI fabric using any of the design options selected for your environment, the F5 ACI ServiceCenter can be used to handle day-1 and day-2 operations. The day-1 and day-2 operations provided by the application are well suited for both new/greenfield and existing/brownfield deployments of BIG-IP and ACI. The integration is loosely coupled, which allows the F5 ACI ServiceCenter to be installed or uninstalled with no disruption to traffic flow, as well as no effect on the F5 BIG-IP and Cisco ACI configuration. Check here to find out more.

All of the above topics and more are discussed in detail here in the single pod white paper.

F5 and Cisco ACI Essentials: Automate automate automate !!!

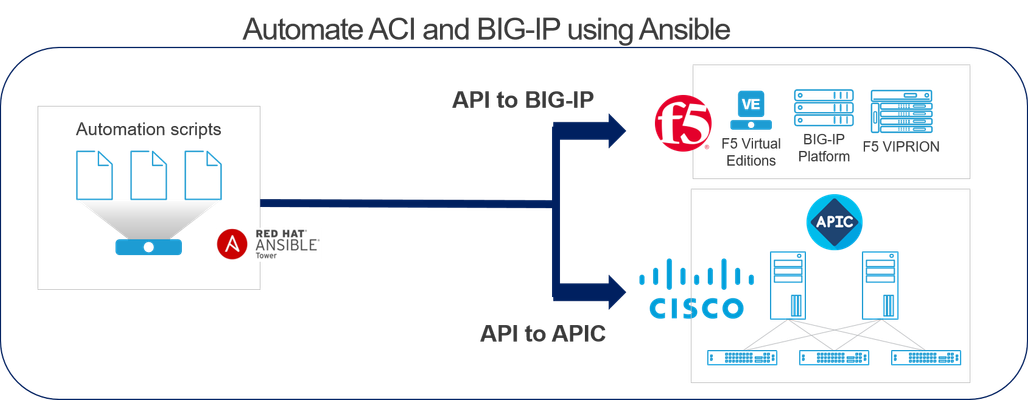

This article will focus on automation support by BIG-IP and Cisco ACI and how automation tools, specifically Ansible, can be used to automate different use cases. Before getting into the weeds, let's discuss and understand BIG-IP's and Cisco ACI's automation strategies.

BIG-IP automation strategy

The BIG-IP automation strategy is simple: abstract as much complexity as possible from the user and give the user an easy button to deploy their BIG-IP configuration. This can mean different methods to different people. Some prefer sending a single API call to perform one action (a one-to-one mapping between your API<->Configuration). Others prefer a more declarative approach where one API call performs multiple actions, basically a one-to-many mapping between your API(1)<->Configuration(N). A great link to refresh and learn about the different options: https://www.f5.com/products/automation-and-orchestration

Cisco ACI automation strategy

Cisco Application Policy Infrastructure Controller (APIC) is the network controller for the ACI fabric. APIC is the unified point of automation and management for the Cisco ACI fabric, policy enforcement, and health monitoring. The Cisco ACI programmability model provides complete programmatic access using APIC. Click here to learn more: https://developer.cisco.com/site/aci/

Automation tools

There are a lot of automation tools being talked about for network automation, BUT the one that comes up in every customer conversation is Ansible. Its simplicity, maturity and community adoption have made it very popular. In this article we are going to focus on using Ansible to automate a service discovery use case.

Use Case: Dynamic EP attach/detach

Let’s take an example of a simple http web service being made highly available and secure using the BIG-IP Virtual IP address. This web service has a bunch of backend web servers hosting the application, and the IPs of these web servers are configured on the BIG-IP as pool members.
These same web server IPs are learned as endpoints in the ACI fabric and are part of an End Point Group (EPG) on the APIC. Hence there is a logical mapping between an EPG on APIC and a pool on the BIG-IP. Now, if the application adds or removes web servers, perhaps to save cost or to deal with an increase or decrease in traffic, the web server IP is automatically learned or unlearned on APIC. But an admin still has to add or remove that web server IP from the pool on the BIG-IP. This can be a burden on the network admin, especially if it happens often. This is where automation can help, and the next section looks at how. More details on the use case can be found at https://devcentral.f5.com/s/articles/F5-Cisco-ACI-Essentials-Dynamic-pool-sizing-using-the-F5-ACI-ServiceCenter

Automation of Use Case: Dynamic EP attach/detach

Automation can be achieved by using Ansible and Ansible Tower, where API calls are made directly to the BIG-IP. Another option is to use the F5 ACI ServiceCenter (a native F5 ACI integration) to automate this particular use case.

Ansible and Ansible Tower

To learn more about Ansible and Ansible Tower: https://www.ansible.com/products/tower

With this method of automation, separate API calls are made directly to the ACI and the BIG-IP. Below is a sample playbook that adds and deletes pool members on a BIG-IP pool based on the members of a particular EPG. The mapping of pool to EPG is provided as input to the playbook.
```yaml
- name: Dynamic end point attach/detach
  hosts: aci
  connection: local
  gather_facts: false

  vars:
    epg_members: []
    pool_members: []
    pool_members_ip: []
    bigip_ip: 10.192.73.xx
    bigip_password: admin
    bigip_username: admin
    # Here we are mapping pool 'dynamic_pool' to EPG 'internalEPG' which belongs to APIC tenant 'TenantDemo'
    app_profile_name: AppProfile
    epg_name: internalEPG
    partition: Common
    pool_name: dynamic_pool
    pool_port: 80
    tenant_name: TenantDemo

  tasks:
    - name: Setup provider
      set_fact:
        provider:
          server: "{{bigip_ip}}"
          user: "{{bigip_username}}"
          password: "{{bigip_password}}"
          server_port: "443"
          validate_certs: "false"

    - name: Get end points learned from End Point group
      aci_rest:
        action: "get"
        uri: "/api/node/mo/uni/tn-{{tenant_name}}/ap-{{app_profile_name}}/epg-{{epg_name}}.json?query-target=subtree&target-subtree-class=fvIp"
        host: "{{inventory_hostname}}"
        username: "{{ lookup('env', 'ANSIBLE_NET_USERNAME') }}"
        password: "{{ lookup('env', 'ANSIBLE_NET_PASSWORD') }}"
        validate_certs: "false"
      register: eps

    - name: Get the IP's of the servers part of the EPG
      set_fact: epg_members="{{epg_members + [item]}}"
      loop: "{{eps | json_query(query_string)}}"
      vars:
        query_string: "imdata[*].fvIp.attributes.addr"
      no_log: True

    - name: Get only the IPv4 IP's
      set_fact: epg_members="{{epg_members | ipv4}}"

    - name: Adding Pool members to the BIG-IP
      bigip_pool_member:
        provider: "{{provider}}"
        state: "present"
        name: "{{item}}"
        host: "{{item}}"
        port: "{{pool_port}}"
        pool: "{{pool_name}}"
        partition: "{{partition}}"
      loop: "{{epg_members}}"

    - name: Query BIG-IP facts
      bigip_device_facts:
        provider: "{{provider}}"
        gather_subset:
          - ltm-pools
      register: bigip_facts

    - name: "Show members belonging to pool {{pool_name}}"
      set_fact: pool_members="{{pool_members + [item]}}"
      loop: "{{bigip_facts.ltm_pools | json_query(query_string)}}"
      vars:
        query_string: "[?name=='{{pool_name}}'].members[*].name[]"

    # Pool member names look like '<ip>:<port>'; keep only the IP part
    - set_fact:
        pool_members_ip: "{{pool_members_ip + [item.split(':')[0]]}}"
      loop: "{{pool_members}}"

    - debug:
        msg: "{{pool_members_ip}}"

    # If there are any members on the BIG-IP that are not present in the EPG, then delete them
    - name: Find the members to be deleted if any
      set_fact:
        members_to_be_deleted: "{{ pool_members_ip | difference(epg_members) }}"

    - debug:
        msg: "{{members_to_be_deleted}}"

    - name: Delete Pool members from the BIG-IP
      bigip_pool_member:
        provider: "{{provider}}"
        state: "absent"
        name: "{{item}}"
        port: "{{pool_port}}"
        pool: "{{pool_name}}"
        preserve_node: yes
        partition: "{{partition}}"
      loop: "{{members_to_be_deleted}}"
```

Ansible Tower's scheduling feature can be used to run this playbook every minute, every hour or once per day, depending on how often the application is expected to change and how important it is for the configuration on Cisco ACI and the BIG-IP to be in sync.

F5 ACI ServiceCenter

To learn more about the integration: https://www.f5.com/cisco

The F5 ACI ServiceCenter is installed on the APIC controller. Here automation can be used to create the initial EPG to pool mapping. Once the mapping is created, the F5 ACI ServiceCenter handles the dynamic sizing of pools based on events generated by APIC. Events are generated when a server is learned or unlearned on an EPG. The F5 ACI ServiceCenter listens for these events and adds or removes pool members on the BIG-IP accordingly.
Sample playbook to deploy the mapping configuration on the BIG-IP through the F5 ACI ServiceCenter:

```yaml
---
- name: Deploy EPG to Pool mapping
  hosts: localhost
  gather_facts: false
  connection: local

  vars:
    apic_ip: "10.192.73.xx"
    big_ip: "10.192.73.xx"
    partition: "Dynamic"

  tasks:
    - name: Login to APIC
      uri:
        url: "https://{{apic_ip}}/api/aaaLogin.json"
        method: POST
        validate_certs: no
        body_format: json
        body:
          aaaUser:
            attributes:
              name: "admin"
              pwd: "******"
        headers:
          content_type: "application/json"
        return_content: yes
      register: cookie

    - debug:
        msg: "{{cookie['cookies']['APIC-cookie']}}"

    - set_fact:
        token: "{{cookie['cookies']['APIC-cookie']}}"

    - name: Login to BIG-IP
      uri:
        url: "https://{{apic_ip}}/appcenter/F5Networks/F5ACIServiceCenter/loginbigip.json"
        method: POST
        validate_certs: no
        body:
          url: "{{big_ip}}"
          user: "admin"
          password: "admin"
        body_format: json
        headers:
          DevCookie: "{{token}}"

    # The body of this request defines the mapping of Pool to EPG
    # Here we are mapping pool 'web_pool' to EPG 'internalEPG' which belongs to APIC tenant 'TenantDemo'
    - name: Deploy AS3 dynamic EP mapping
      uri:
        url: "https://{{apic_ip}}/appcenter/F5Networks/F5ACIServiceCenter/updateas3data.json"
        method: POST
        validate_certs: no
        body:
          url: "{{big_ip}}"
          partition: "{{partition}}"
          application: "DemoApp1"
          json:
            class: Application
            template: http
            serviceMain:
              class: Service_HTTP
              virtualAddresses:
                - 10.168.56.100
              pool: web_pool
            web_pool:
              class: Pool
              monitors:
                - http
              members:
                - servicePort: 80
                  serverAddresses: []
                - addressDiscovery: event
                  servicePort: 80
            constants:
              class: Constants
              serviceCenterEPG:
                web_pool:
                  tenant: TenantDemo
                  application: AppProfile
                  epg: internalEPG
        body_format: json
        status_code:
          - 202
          - 200
        headers:
          DevCookie: "{{token}}"
        return_content: yes
      register: complete_info

    - name: Get task ID of above request
      set_fact:
        task_id: "{{ complete_info.json.message.taskId}}"
      when: complete_info.json.code == 202

    # Poll the asynchronous task until it is no longer "in progress"
    - name: Get deployment status
      uri:
        url: "https://{{apic_ip}}/appcenter/F5Networks/F5ACIServiceCenter/getasynctaskresponse.json"
        method: POST
        validate_certs: no
        body:
          taskId: "{{task_id}}"
        body_format: json
        headers:
          DevCookie: "{{token}}"
        return_content: yes
      register: result
      until: result.json.message.message != "in progress"
      retries: 5
      delay: 2
      when: task_id is defined

    - name: Display final result
      debug:
        var: result
```

After this configuration is deployed, adding and deleting pool members and keeping the configuration in sync is the responsibility of the F5 ACI ServiceCenter.

Takeaways

Both methods are highly effective and usable. The choice of which one to use comes down to the operational model in your environment. Here are some pros and cons to help decide which platform to use for automation.

Ansible Tower

Pros

- No dependency on any other tools
- Fits in better with a wider company automation strategy of using Ansible for ALL network automation

Cons

- Playbook execution and scheduling have to be managed using Ansible Tower
- If more logic is needed besides what is described above, playbooks will have to be written and maintained
- Execution of the playbook is based on scheduling and is not event driven

F5 ACI ServiceCenter

Pros

- Only the pool-EPG mapping has to be deployed using automation; the rest is handled by the application
- User interface to view the pool member to EPG mapping once deployed, and to view discrepancies if any
- Limited automation knowledge is needed; the heavy lifting is done by the application
- Dynamically adding and deleting pool members is event driven: as members are learned or unlearned, the F5 ACI ServiceCenter takes action

Cons

- Another tool is required
- Customization of the pool to EPG mapping is not available. Only one-to-one EPG to pool mapping is supported.
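To make the scheduled versus event-driven distinction in the comparison above more concrete, here is a minimal Python sketch of the two styles. It is only an illustration, not code from either tool: the function names and the event names `learned` and `unlearned` are hypothetical, and the IP lists stand in for what would really come from the APIC and the BIG-IP. Note that the Ansible playbook simply re-adds every EPG member (the `present` state is idempotent) and only computes the members to delete.

```python
from typing import Iterable, Set, Tuple


def reconcile(epg_ips: Iterable[str], pool_ips: Iterable[str]) -> Tuple[Set[str], Set[str]]:
    """Scheduled style: compare both sides and return (to_add, to_delete)."""
    epg, pool = set(epg_ips), set(pool_ips)
    return epg - pool, pool - epg


def on_endpoint_event(pool_ips: Set[str], event: str, ip: str) -> Set[str]:
    """Event-driven style: apply one learned/unlearned event to the pool.

    The event names are hypothetical placeholders for APIC notifications.
    """
    updated = set(pool_ips)
    if event == "learned":
        updated.add(ip)
    elif event == "unlearned":
        updated.discard(ip)
    else:
        raise ValueError(f"unknown event: {event}")
    return updated


if __name__ == "__main__":
    epg = ["10.0.0.1", "10.0.0.2", "10.0.0.3"]
    pool = ["10.0.0.2", "10.0.0.9"]
    to_add, to_delete = reconcile(epg, pool)
    print(sorted(to_add), sorted(to_delete))
```

The scheduled style has to read the full state of both systems on every run, while the event-driven style touches only the member named in the event, which is why it reacts faster but depends on a component that listens for events.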
References

- Learn about the F5 ACI ServiceCenter and other Cisco integrations: https://f5.com/cisco
- Download the F5 ACI ServiceCenter: https://dcappcenter.cisco.com/f5-aci-servicecenter.html
- Lab to execute Ansible playbooks: https://dcloud.cisco.com (Lab name: F5 and Ansible)

Enable Consistent Application Services for Containers with CIS

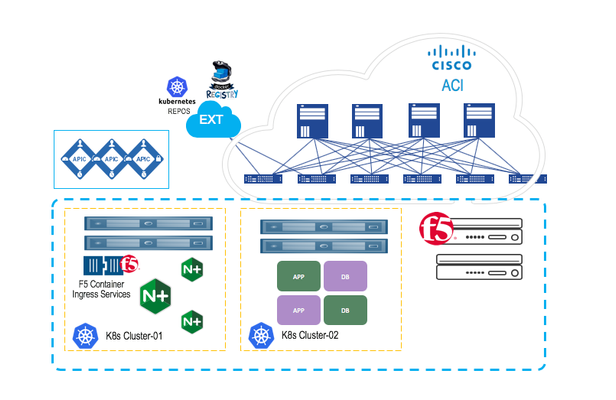

Kubernetes is all about abstracting away complexity. As Kubernetes continues to evolve, it becomes more intelligent and more powerful in helping enterprises manage their data center, not just the cloud. While enterprises have had to deal with the challenges of managing different types of modern applications (AI/ML, big data, and analytics) to process that data, they also face the challenge of maintaining top-level network and security policies and gaining better control of the workload, to ensure operational and functional consistency. This is where Cisco ACI and F5 Container Ingress Services come into the picture.

F5 CIS and Cisco ACI

Cisco ACI offers these customers an integrated network fabric for Kubernetes. Recently, F5 and Cisco joined forces by integrating F5 Container Ingress Services (CIS) with Cisco ACI to bring L4-7 services into the Kubernetes environment, further simplifying the user experience of deploying, scaling and managing containerized applications. This integration specifically enables:

- Unified networking: containers, VMs, and bare-metal
- Secure multi-tenancy and seamless integration of Kubernetes network policies and ACI policies
- A single point of automation with enhanced visibility for ACI and BIG-IP
- F5 Application Services natively integrated in container and PaaS environments

One of the key benefits of such an implementation is ACI encapsulation normalization. The ACI fabric, as the normalizer for the encapsulation, allows you to merge different network technologies or encapsulations, be it VLAN or VXLAN, into a single policy model. BIG-IP, through a simple VLAN connection to ACI and with no need for an additional gateway, can communicate with any service anywhere.

Solution Deployment

To integrate F5 CIS with Cisco ACI for a Kubernetes environment, you perform a series of tasks.
Some you perform in the network to set up the Cisco Application Policy Infrastructure Controller (APIC); others you perform on the Kubernetes server(s). Rather than getting down to the nitty-gritty, I will just highlight the steps to deploy the joint solution.

Pre-requisites

The BIG-IP CIS and Cisco ACI joint solution deployment assumes that you have the following in place:

- A working Cisco ACI installation
- ACI integrated with vCenter with dVS
- Fabric tenant pre-provisioned with the required VRFs/EPGs/L3OUTs
- BIG-IP already running for non-container workload

Deploying Kubernetes Clusters to ACI Fabrics

The following steps will give you a complete cluster configuration:

Step 1. Run the ACI provisioning tool to prepare Cisco ACI to work with Kubernetes

Cisco provides an acc_provision tool to provision the fabric for the Kubernetes VMM domain and generate a .yaml file that Kubernetes uses to deploy the required Cisco Application Centric Infrastructure (ACI) container components. You can download the provisioning tool here. Next, you can use this provisioning tool to generate a sample configuration file that you can edit.

```
$ acc-provision --sample > aci-containers-config.yaml
```

You can now edit the sample configuration file to provide information from your network. With that configuration file in place, run the following command to provision the Cisco ACI fabric:

```
acc-provision -c aci-containers-config.yaml -o aci-containers.yaml -f kubernetes-<version> -a -u [apic username] -p [apic password]
```

Step 2. Prepare the ACI CNI plugin configuration file

The above command also generates the file aci-containers.yaml that you use after installing Kubernetes.

Step 3. Prepare the Kubernetes nodes

Set up networking for the nodes to support the Kubernetes installation. With ACI provisioned, you start to prepare networking for the Kubernetes nodes.
This includes steps such as configuring the VM's interface toward the ACI fabric, configuring a static route for the multicast subnet, and configuring the DHCP client to work with ACI.

Step 4. Install the Kubernetes cluster

After you provision Cisco ACI and prepare the Kubernetes nodes, you can install Kubernetes and the ACI containers. You can use any installation method appropriate to your environment.

Step 5. Deploy the Cisco ACI CNI plugin

When the Kubernetes cluster is up and running, you can copy the previously generated CNI configuration to the master node and install the CNI plug-in using the following command:

```
kubectl apply -f aci-containers.yaml
```

The command installs the following (pods):

- ACI Containers Host Agent and OpFlex agent in a DaemonSet called aci-containers-host
- Open vSwitch in a DaemonSet called aci-containers-openvswitch
- ACI Containers Controller in a deployment called aci-containers-controller
- Other required configurations, including service accounts, roles, and security context

For the authoritative word on this specific implementation, refer to the Cisco workflow for integrating Kubernetes into Cisco ACI for the latest and greatest.

After you have performed the previous steps, you can verify the integration in the Cisco APIC GUI. The integration creates a tenant, three EPGs, and a VMM domain. Each tenant will have visibility of all the Kubernetes pods.

Install the BIG-IP Controller

The F5 BIG-IP Controller (k8s-bigip-ctlr), or Container Ingress Services, if you aren't familiar, is a Kubernetes-native service that provides the glue between container services and BIG-IP. It watches for changes and communicates those to BIG-IP delivered application services. These, in turn, keep up with the changes in container environments and enable enforcement of security policies. Once you have a running Kubernetes cluster deployed to the ACI fabric, you can follow these instructions to install the BIG-IP Controller.
Use the kubectl get command to verify that the k8s-bigip-ctlr pod launched successfully.

BIG-IP as north-south load balancer for External Services

For Kubernetes services that are exposed externally and need to be load balanced, Kubernetes does not handle the provisioning of the load balancing. It is expected that the load balancing network function is implemented separately. For these services, Cisco ACI takes advantage of the symmetric policy-based redirect (PBR) feature available in the Cisco Nexus 9300-EX or FX leaf switches in ACI mode. This is where BIG-IP Container Ingress Services (CIS) comes into the picture, as the north-south load balancer. On ingress, incoming traffic to an externally exposed service is redirected by PBR to the BIG-IP for that particular service. If a Kubernetes cluster contains more than one pod for a particular service, BIG-IP will load balance the traffic across all the pods for that service. In addition, each new pod is added to the BIG-IP pool automatically.

Conclusion

F5 CIS and Cisco ACI together offer unified control, visibility, security and application services for both container and non-container workloads.

Further Resources

- F5 Container Ingress Services
- Cisco ACI and Kubernetes Integration

How to onboard F5 BIG-IP VE in Cisco CSP 2100/5000 for NFV solutions deployment

Are you considering Network Functions Virtualization (NFV) solutions for your data center? Are you wondering how your current F5 BIG-IP solutions can be translated into an NFV environment? What NFV platform can be used with F5 NFV solutions in your data center? Good news! F5 has certified its BIG-IP NFV solutions with Cisco Cloud Services Platform (CSP). Click here for a complete list of versions validated.

Cisco CSP is an open x86 Linux Kernel-based Virtual Machine (KVM) software and hardware platform that is ideal for colocation and data center network functions virtualization (NFV). F5 has a broad portfolio of VNFs available on BIG-IP, including Virtual Firewall (vFW), Virtual Application Delivery Controllers (vADC), Virtual Policy Manager (vPEM), Virtual DNS (vDNS) and other BIG-IP products. Together, F5 VNFs and Cisco CSP 2100 provide a joint solution that allows network administrators to quickly and easily deploy F5 VNFs through a simple, built-in, native web user interface (WebUI), command-line interface (CLI), or REST API.

BIG-IP VE Key Features in CSP

- 10G throughput with SR-IOV PCIE or SR-IOV passthrough
- Intel X710 NIC - Quad 10G port supported
- All BIG-IP modules can run in CSP 2100

Follow the steps below to onboard F5 BIG-IP VE in Cisco CSP with a Day0 file.

Day0 file contents and creation

Sample user_data

```yaml
#cloud-config
write_files:
  - path: /config/onboarding/waitForF5Ready.sh
    permissions: '0755'
    owner: root:root
    content: |
      #!/bin/bash
      # This script checks the prompt while the device is
      # booting up, waiting until it is ready to accept
      # the provisioning commands.
      echo `date` -- Waiting for F5 to be ready
      sleep 5
      while [[ ! -e '/var/prompt/ps1' ]]; do
        echo -n '.'
        sleep 5
      done
      sleep 5
      STATUS=`cat /var/prompt/ps1`
      while [[ ${STATUS}x != 'NO LICENSE'x ]]; do
        echo -n '.'
        sleep 5
        STATUS=`cat /var/prompt/ps1`
      done
      echo -n ' '
      while [[ ! -e '/var/prompt/cmiSyncStatus' ]]; do
        echo -n '.'
        sleep 5
      done
      STATUS=`cat /var/prompt/cmiSyncStatus`
      while [[ ${STATUS}x != 'Standalone'x ]]; do
        echo -n '.'
        sleep 5
        STATUS=`cat /var/prompt/cmiSyncStatus`
      done
      echo
      echo `date` -- F5 is ready...
  - path: /config/onboarding/setupLogging.sh
    permissions: '0755'
    owner: root:root
    content: |
      #!/bin/bash
      # This script creates a file to collect the output
      # of the provisioning commands for debugging.
      FILE=/var/log/onboard.log
      if [ ! -e $FILE ]
      then
        touch $FILE
        nohup $0 0<&- &>/dev/null &
        exit
      fi
      exec 1<&-
      exec 2<&-
      exec 1<>$FILE
      exec 2>&1
  - path: /config/onboarding/onboard.sh
    permissions: '0755'
    owner: root:root
    content: |
      #!/bin/bash
      # This script sets up the logging, waits until the device
      # is ready to provision and then executes the commands
      # to set up networking, users and register with F5.
      . /config/onboarding/setupLogging.sh
      if [ -e /config/onboarding/waitForF5Ready.sh ]
      then
        echo "/config/onboarding/waitForF5Ready.sh exists"
        /config/onboarding/waitForF5Ready.sh
      else
        echo "/config/onboarding/waitForF5Ready.sh is missing"
        echo "Failsafe sleep for 5 minutes..."
        sleep 5m
      fi
      echo "Configure access"
      tmsh modify sys global-settings hostname <<hostname>>
      tmsh modify auth user admin shell bash password <<admin_password>>
      tmsh modify sys db systemauth.disablerootlogin value true
      tmsh save /sys config
      echo "Disable mgmt-dhcp..."
      tmsh modify sys global-settings mgmt-dhcp disabled
      echo "Set Management IP..."
      # Example value for <<mgmt_ip/mask>>: 10.192.74.46/24
      tmsh create /sys management-ip <<mgmt_ip/mask>>
      tmsh create /sys management-route default gateway <<gateway_ip>>
      echo "Save changes..."
      tmsh save /sys config partitions all
      echo "Set NTP..."
      tmsh modify sys ntp servers add { 0.pool.ntp.org 1.pool.ntp.org }
      tmsh modify sys ntp timezone America/Los_Angeles
      echo "Add DNS server..."
      # The <<ntp_ip>> placeholder here takes the DNS server address
      tmsh modify sys dns name-servers add { <<ntp_ip>> }
      tmsh modify sys httpd ssl-port 8443
      tmsh modify net self-allow defaults add { tcp:8443 }
      if [[ "8443" != "443" ]]
      then
        tmsh modify net self-allow defaults delete { tcp:443 }
      fi
      tmsh mv cm device bigip1 <<hostname>>
      tmsh save /sys config
      echo "Register F5..."
      tmsh install /sys license registration-key <<license_key>>
      tmsh show sys license
      date
runcmd: [nohup sh -c '/config/onboarding/onboard.sh' &]
```

Sample meta_data.json

```json
{
  "uuid": "1d9d6d3a-1d36-4db7-8d7c-63963d4d6f20",
  "hostname": "<<hostname>>"
}
```

Preparation

This assumes the contents are in a directory named 'example_files/iso_contents/openstack/2012-08-10'. Once the values above are entered into the user_data file, create the ISO file:

```
genisoimage -volid config-2 -rock -joliet -input-charset utf-8 -output f5.iso example_files/iso_contents/
```

or (depending on your OS)

```
mkisofs -R -V config-2 -o f5.iso example_files/iso_contents/
```

Process on CSP

1. Download the F5 BIG-IP VE (release 12.1.2 or later) qcow image from http://downloads.f5.com
2. Log into Cisco CSP 2100
3. Go to "Configuration" -> "Repository" -> "+"
4. Click "Browse" and locate the F5 BIG-IP VE qcow image, then click "Upload"
5. Go back to "Configuration" -> "Repository" and follow the same upload process for the Day0 ISO file. At this point you should be able to view both the qcow and Day0 ISO images in the repository tab.
6. To create an F5 BIG-IP virtual function, go to "Configuration" -> "Services" -> "+". A wizard will pop up.
7. After the F5 BIG-IP VE virtual function deployment in Cisco CSP 2100 is completed, you can monitor the BIG-IP VE boot-up progress by clicking "Console".

Since the BIG-IP is booted with a Day0 file, the NTP/DNS configuration is already present on the BIG-IP. The BIG-IP will be licensed and ready to be configured. The MGMT IP and the default username/password were specified in the Day0 file.
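Because the sample user_data above relies on `<<...>>` placeholders being filled in by hand before the ISO is built, a small helper can catch any that were forgotten. The following Python sketch is one possible way to do that and is not part of the F5 or Cisco tooling. The function name `render_day0` is hypothetical, and the hostname value in the usage example is made up for illustration.

```python
import re

# Matches placeholders such as <<hostname>> or <<mgmt_ip/mask>>
PLACEHOLDER = re.compile(r"<<([^<>]+)>>")


def render_day0(template: str, values: dict) -> str:
    """Replace every <<name>> placeholder; fail loudly if a value is missing."""
    missing = sorted({name for name in PLACEHOLDER.findall(template) if name not in values})
    if missing:
        raise KeyError(f"no value supplied for: {', '.join(missing)}")
    return PLACEHOLDER.sub(lambda match: str(values[match.group(1)]), template)


if __name__ == "__main__":
    sample = (
        "tmsh modify sys global-settings hostname <<hostname>>\n"
        "tmsh create /sys management-ip <<mgmt_ip/mask>>\n"
    )
    # Values are illustrative only
    print(render_day0(sample, {"hostname": "bigip1.example.com", "mgmt_ip/mask": "10.192.74.46/24"}))
```

Raising an error for a missing value, rather than leaving the placeholder in place, avoids booting a BIG-IP with a literal `<<license_key>>` in its onboarding script.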
The Day0 file can be enhanced to add more networking and other configuration parameters if needed by specifying the appropriate tmsh commands.

Make sure the BIG-IP interface mapping to the CSP 2100 VNIC is correct by verifying the MAC address assignment. Consult the CSP 2100 guide to obtain the CSP 2100 VF VNIC MAC address information.

- To check the BIG-IP MAC address, go to "Network" -> "Interfaces"
- To check on the CSP, click on the service deployed, scroll to the bottom, and expand the VNIC information tab

Configure VLANs consistent with the CSP 2100 VLAN tag configuration, and make sure VLANs are untagged at the BIG-IP level. After BIG-IP VE connectivity is established in the network, the rest of the configuration, such as self IPs, default gateway and virtual servers, is the same as for any BIG-IP VE.

To learn more about the F5 and Cisco partnership and joint solutions, visit https://f5.com/solutions/technology-alliances/cisco

For more information about Cisco CSP visit http://www.cisco.com/go/csp

Click here for a complete list of BIG-IP and CSP versions validated.

High Performance Intrusion Prevention

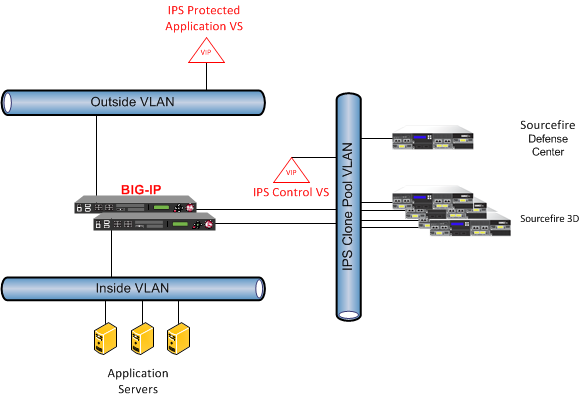

Overview

The F5 BIG-IP's ability to reliably load balance a high volume of concurrent connections to application services is further improved through integrations with IPS equipment providers. In this article I detail how the F5 BIG-IP, together with the Cisco/Sourcefire Next Generation Intrusion Prevention System (NGIPS) managed devices, can be used to provide a secure application delivery platform with advanced, monitored visibility into client/server application traffic. This solution is not limited to Cisco/Sourcefire. It can also be extended to other IPS providers. This article focuses on the functional demonstration presented at the 2014 RSA Conference. The demo does not stress the performance bounds of the joint solution. It is meant to demonstrate how the solution is built, monitored, and operated.

Solution

IPS deployments have limited bandwidth per sensor node. The traffic to be analyzed may require different sized IPS sensors depending on the traffic profiles. With BIG-IP, these different traffic profiles can be sent to the properly sized IPS sensor. BIG-IP can also perform SSL offload, so that the NGIPS inspects the traffic in the clear.

Scale-out Flexibility

Using the BIG-IP load balancing capabilities, IPS inspection bandwidth can be increased by increasing the IPS sensor node count within the load balanced pool. BIG-IP will load balance the traffic across all nodes within the pool. If sensors need to be serviced, they can be removed from the pool without affecting the end user. Multiple IPS pools can be configured to address the varying bandwidth needs of your applications. Properly configured, the BIG-IP can selectively choose which traffic flows are sent to the IPS pool nodes for inspection.
Configuration

For the RSA conference we built a functional demonstration to load balance traffic to two Sourcefire NGIPS managed devices. The sensors receive the traffic using the BIG-IP Clone Pool capability. As traffic patterns match IPS rules and signatures, the Sourcefire Remediation API communicates with the BIG-IP to enforce a denylist on the violating source IPs. To perform these tasks, BIG-IP uses two iRules: the first is the Command iRule and the second is the Protection iRule. We touch on both in the sections that follow.

Figure: Demonstration Network Topology Overview

Network Topologies

The flexibility that BIG-IP provides for traffic steering and load balancing allows for multiple ways to deploy the sensors. The sensors can be deployed as transparent in-line devices, load balanced through Gateway Pools, or load balanced as cloned traffic. BIG-IP's SSL offload capability can be employed with any of these deployment topologies.

In-Line Transparent

The in-line deployment places the NGIPS managed devices in between the BIG-IP and the application servers. We use an interim VLAN to pass the traffic through the NGIPS devices. Health monitoring of transparent IPS sensors is challenging. By design these devices do not directly respond to pings or other direct unicast packets. If a sensor goes offline, the remaining sensors will carry on inspecting traffic.

Figure: In-line Transparent Topology

In-Line utilizing Gateway Pools

An alternative way to deploy the sensors is to use Gateway Pools. Gateway Pools is a feature that allows traffic forwarding decisions to be made through a pool of gateway (IP forwarding) devices. BIG-IP has nineteen different load balancing techniques that can be selected. The benefit is that each gateway pool member can be health monitored, and nodes can be selected intelligently based on concurrent connections and performance measurements.
The network topology is similar to the in-line transparent setup with the addition of multiple NGIPS managed devices. Each of the NGIPS managed devices is configured with IP addresses. A virtual router is defined in order to address these sensors, much in the same way as BIG-IP would forward traffic through a router.

Using Clone Pools

The BIG-IP Clone Pool feature provides the ability to send a copy of traffic to a pool for inspection. The clone pool is directional in the sense that the user can decide to clone just the client-side traffic, just the server-side traffic, or both. The NGIPS managed devices are on a side VLAN. The benefit is the ability to apply IPS inspection on a per Virtual Server basis. This is the topology we chose to demonstrate at the RSA conference.

Figure: Example Deployment Topology

SSL Off-load assists with inspection

BIG-IP has a huge capacity to perform SSL offload. This is the ability of the BIG-IP to decrypt the SSL traffic from the clients so that the NGIPS managed devices see the unencrypted traffic content. Many intrusion inspection devices are unable to decrypt SSL traffic, or the performance degradation of enabling SSL decryption severely impacts overall application performance. All of the previously mentioned topologies can be deployed with SSL offload as long as the BIG-IP is not re-encrypting the traffic to the server pools. If re-encryption is required, a sandwich approach can be deployed.

Detection and Enforcement

IPS Blocking for in-line operation

The NGIPS managed devices can be configured to block the offending traffic. This alone will not relieve the individual sensors from continuing to receive more violating traffic. However, the NGIPS signals the offending source IPs to the BIG-IP, and the BIG-IP can then enforce the blocking based on what the NGIPS managed devices detected. In turn, the NGIPS is not burdened with the offending traffic.
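The division of labour described above, where the sensor detects and the BIG-IP enforces, can be pictured as a small denylist whose entries expire. The Python sketch below is only an illustrative model of that idea, not iRule code and not how the BIG-IP implements its tables. The class and method names are hypothetical, and the expiry mirrors the timeout period that the Remediation API section below describes.

```python
import time
from typing import Callable, Dict


class Denylist:
    """Source IPs that are blocked until their timeout expires."""

    def __init__(self, clock: Callable[[], float] = time.monotonic) -> None:
        self._clock = clock  # injectable so the behaviour can be tested
        self._expires_at: Dict[str, float] = {}

    def add(self, source_ip: str, timeout_seconds: float) -> None:
        """Control path: the sensor reports an offending source IP."""
        self._expires_at[source_ip] = self._clock() + timeout_seconds

    def is_blocked(self, source_ip: str) -> bool:
        """Enforcement path: checked for every new connection."""
        expires_at = self._expires_at.get(source_ip)
        if expires_at is None:
            return False
        if self._clock() >= expires_at:
            del self._expires_at[source_ip]  # entry aged out, allow again
            return False
        return True


if __name__ == "__main__":
    denylist = Denylist()
    denylist.add("203.0.113.7", timeout_seconds=60)
    print(denylist.is_blocked("203.0.113.7"))
    print(denylist.is_blocked("198.51.100.1"))
```

Keeping the control path (`add`) separate from the enforcement path (`is_blocked`) reflects the two-iRule split: one component only writes entries, the other only reads them on each new connection.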
Remediation API

The Sourcefire NGIPS with Defense Center has a Remediation API that is used to signal to the BIG-IP which sources to denylist. The Remediation API is coupled with F5 iRule technology to handle the control communications and perform enforcement. The Defense Center communicates using an HTTP request containing the offending source IP and a timeout period. The timeout period is the time before the offending source is allowed back in.

iRule overview

The iRules perform both control and enforcement. The first iRule receives the source IP and timeout information from the Sourcefire Defense Center and stores it in an internal data table. The second iRule performs the enforcement by rejecting connection requests from those source IPs. Once the timeout passes, these clients can come back in to the playground. If they play nicely, they are allowed to stay. If they violate the rule base again, their source IPs are re-added to the denylist table and continue to be rejected.

Command iRule

The Command iRule is applied to a Virtual Server that listens on the IPS VLAN. This virtual server has no pool members associated with it. It is there to catch the HTTP traffic from the Sourcefire Defense Center Remediation API. When a client trips an IPS rule, the Remediation API sends the BIG-IP the offending source IP and a configurable timeout period in seconds. This iRule will also log to the '/var/log/ltm' log file that the IP address has been added to the denylist table.
```tcl
when HTTP_REQUEST {
    if { [URI::query [HTTP::uri] "action"] equals "denylist" } {
        set blockingIP [URI::query [HTTP::uri] "sip"]
        set IPtimeout [URI::query [HTTP::uri] "timeout"]
        # Store the source IP; the entry expires after $IPtimeout seconds
        table add -subtable "denylist" $blockingIP 1 $IPtimeout
        log local0. "$blockingIP added to denylist for $IPtimeout seconds"
        HTTP::respond 200 content "$blockingIP added to denylist for $IPtimeout seconds"
        return
    }
    HTTP::respond 200 content "You need to include an ?action query"
}
```

Command iRule

Enforcement iRule

The enforcement iRule is applied to the application Virtual Servers. The internal table of IP addresses maintained by the BIG-IP is queried when a new connection request is initiated. If the initiator is on the denylist, the connection request is dropped. The iRule also logs that the client attempted to access a protected Virtual Server. After sufficient time (the timeout period provided by the Remediation API above) has passed, the source IP is removed from the table to allow new connections to be established. As mentioned before, if the client trips a blocking rule it will again be rejected.

```tcl
when CLIENT_ACCEPTED {
    set srcip [IP::remote_addr]
    # A non-empty lookup means the source IP is still on the denylist
    if { [table lookup -subtable "denylist" $srcip] != "" } {
        log local0. "Denylisted client $srcip attempted to access a protected virtual server"
        drop
        return
    }
}
```

Enforcement and Protection iRule

Management Interface

This solution is managed and monitored with both the BIG-IP and the Sourcefire Defense Center. The Defense Center Context Explorer is a dashboard with a series of charts reporting traffic content details. BIG-IP Virtual Server and Pool statistics report the traffic distribution across the NGIPS managed devices.

Cisco / Sourcefire Defense Center

These graphics are some screenshots of the Defense Center reporting.
Context Explorer:

- Events over time
- Application Protocol distribution
- Client Application Information distribution

F5 Dashboard:

- Sensor activity
- Traffic Overview Dashboard
- Web Application Traffic Risk

BIG-IP Virtual Server and Pool Statistics

BIG-IP statistics show that the IPS pool members are receiving the clone pool traffic.

- IPS Pool Statistics
- Virtual Server Statistics

Conclusion

Combining BIG-IP with Intrusion Prevention appliances is a very compelling solution. This demonstration was built using the existing feature sets of our currently released products. The demonstration is a functional representation to show how BIG-IP can load balance traffic to IPS sensors. The flexibility that both products provide allows for improvements to the overall solution. The iRule code can be added to existing iRules. Using the Sourcefire FireAMP features will extend this solution to also monitor for malware. If a server should become compromised, Sourcefire will detect that from the server Clone Pool traffic flows.