BIG-IP Edge Client 2.0.2 for Android

Earlier this week F5 released our BIG-IP Edge Client for Android with support for the new Amazon Kindle Fire HD. You can grab it off Amazon instantly for your Android device. By supporting BIG-IP Edge Client on Kindle Fire products, F5 is helping businesses secure personal devices connecting to the corporate network and helping end users be more productive, which makes it a good fit for BYOD deployments.

The BIG-IP® Edge Client™ for all Android 4.x (Ice Cream Sandwich) or later devices secures and accelerates mobile device access to enterprise networks and applications using SSL VPN and optimization technologies. Access is provided as part of an enterprise deployment of F5 BIG-IP® Access Policy Manager™, Edge Gateway™, or FirePass™ SSL-VPN solutions.

BIG-IP® Edge Client™ for all Android 4.x (Ice Cream Sandwich) Devices Features:
- Provides accelerated mobile access when used with F5 BIG-IP® Edge Gateway
- Automatically roams between networks to stay connected on the go
- Full Layer 3 network access to all your enterprise applications and files
- Supports multi-factor authentication with client certificate
- Supports a custom URL scheme to create Edge Client configurations and to start and stop Edge Client

BEFORE YOU DOWNLOAD OR USE THIS APPLICATION YOU MUST AGREE TO THE EULA HERE: http://www.f5.com/apps/android-help-portal/eula.html
BEFORE YOU CONTACT F5 SUPPORT, PLEASE SEE: http://support.f5.com/kb/en-us/solutions/public/2000/600/sol2633.html

If you have an iOS device, you can get the F5 BIG-IP Edge Client for Apple iOS which supports the iPhone, iPad and iPod Touch. We are also working on a Windows 8 client which will be ready for the Win8 general availability.
ps

The Challenges of SQL Load Balancing

#infosec #iam Load balancing databases is fraught with many operational and business challenges.

While cloud computing has brought to the forefront of our attention the ability to scale through duplication, i.e. horizontal scaling or “scale out” strategies, this strategy tends to run into challenges the deeper into the application architecture you go. Working well at the web and application tiers, a duplicative strategy tends to fall on its face when applied to the database tier.

Concerns over consistency abound, with many simply choosing to throw out the concept of consistency and adopting instead an “eventually consistent” stance in which it is assumed that data in a distributed database system will eventually become consistent and cause minimal disruption to application and business processes. Some argue that eventual consistency is not “good enough” and cite additional concerns with respect to the failure of such strategies to adequately address failures. Thus there are a number of vendors, open source groups, and pundits who spend time attempting to address both components. The result is database load balancing solutions.

For the most part such solutions are effective. They leverage master-slave deployments – typically used to address failure and which can automatically replicate data between instances (with varying levels of success when distributed across the Internet) – and attempt to intelligently distribute SQL-bound queries across two or more database systems. The most successful of these architectures is the read-write separation strategy, in which all SQL transactions deemed “read-only” are routed to one database while all “write” focused transactions are distributed to another.
Such foundational separation allows for higher-layer architectures to be implemented, such as geographic based read distribution, in which read-only transactions are further distributed by geographically dispersed database instances, all of which act ultimately as “slaves” to the single, master database which processes all write-focused transactions. This results in an eventually consistent architecture, but one which manages to mitigate the disruptive aspects of eventually consistent architectures by ensuring the most important transactions – write operations – are, in fact, consistent.

Even so, there are issues, particularly with respect to security.

MEDIATION inside the APPLICATION TIERS

Generally speaking mediating solutions are a good thing – when they’re external to the application infrastructure itself, i.e. the traditional three tiers of an application. The problem with mediation inside the application tiers, particularly at the data layer, is the same for infrastructure as it is for software solutions: credential management.

See, databases maintain their own set of users, roles, and permissions. Even as applications have been able to move toward a more shared set of identity stores, databases have not. This is in part due to the nature of data security and the need for granular permission structures down to the cell, in some cases, and including transactional security that allows some to update, delete, or insert while others may be granted a different subset of permissions.

But more difficult to overcome is the tight coupling of identity to connection for databases. With web protocols like HTTP, identity is carried along at the protocol level. This means it can be transient across connections because it is often stuffed into an HTTP header via a cookie or stored server-side in a session – again, not tied to connection but to identifying information. At the database layer, identity is tightly coupled to the connection.
The connection itself carries along the credentials with which it was opened. This gives rise to problems for mediating solutions: not just load balancers but also software solutions such as ESB (enterprise service bus) and EII (enterprise information integration) styled solutions. Any device or software which attempts to aggregate database access for any purpose eventually runs into the same problem: credential management. This is particularly challenging for load balancing when applied to databases.

LOAD BALANCING SQL

To understand the challenges with load balancing SQL you need to remember that there are essentially two models of load balancing: transport and application layer. At the transport layer, i.e. TCP, connections are only temporarily managed by the load balancing device. The initial connection is “caught” by the load balancer and a decision is made based on transport layer variables where it should be directed. Thereafter, for the most part, there is no interaction at the load balancer with the connection, other than to forward it on to the previously selected node.

At the application layer the load balancing device terminates the connection and interacts with every exchange. This affords the load balancing device the opportunity to inspect the actual data or application layer protocol metadata in order to determine where the request should be sent.

Load balancing SQL at the transport layer is less problematic than at the application layer, yet it is at the application layer that the most value is derived from database load balancing implementations. That’s because it is at the application layer where distribution based on “read” or “write” operations can be made. But accomplishing this requires that the SQL be inline; that is, the SQL being executed is actually included in the code and then executed via a connection to the database. If your application uses stored procedures, then this method will not work for you.
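To make the application-layer decision concrete, here is a minimal Python sketch of read-write separation over inline SQL. It is an illustration under stated assumptions, not how any particular load balancer works: the pool names (`master`, `replica-1`, `replica-2`), the keyword list, and the `route` function are all hypothetical, and a real SQL parser would be far more thorough than a first-keyword check.

```python
import re

# Hypothetical pool names; a real deployment would map these to actual servers.
WRITE_TARGET = "master"
READ_TARGETS = ["replica-1", "replica-2"]

# Leading keywords treated as read-only in this sketch (an assumption).
READ_KEYWORDS = {"SELECT", "SHOW"}

# One leading "-- line comment" or "/* block comment */".
LEADING_COMMENT = re.compile(r"^\s*(--[^\n]*\n|/\*.*?\*/)", re.DOTALL)
# SELECT statements that lock or write rows are not safe to send to a replica.
WRITING_SELECT = re.compile(r"\bFOR\s+UPDATE\b|\bINTO\b", re.IGNORECASE)


def first_keyword(sql: str) -> str:
    """Return the upper-cased first keyword, skipping leading SQL comments."""
    while True:
        stripped = LEADING_COMMENT.sub("", sql, count=1)
        if stripped == sql:
            break
        sql = stripped
    match = re.match(r"\s*([A-Za-z_]+)", sql)
    return match.group(1).upper() if match else ""


def route(sql: str, request_id: int = 0) -> str:
    """Pick a target: replicas for plain reads, the master for everything else."""
    if first_keyword(sql) in READ_KEYWORDS and not WRITING_SELECT.search(sql):
        # Spread reads across replicas with simple round-robin.
        return READ_TARGETS[request_id % len(READ_TARGETS)]
    # Writes, stored procedure calls (CALL/EXEC), and anything unrecognized
    # go to the master: the body of a stored procedure is opaque from here.
    return WRITE_TARGET
```

Note that a stored procedure call such as `EXEC sp_get_orders 42` can only be sent to the master in this sketch, because nothing in the statement text reveals whether the procedure reads or writes. That is the limitation described above.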
It is important to note that many packaged enterprise applications rely upon stored procedures, and are thus not able to leverage load balancing as a scaling option. Your app, or how your organization has agreed to protect your data, will determine which of these methods is used to access your databases. The use of inline SQL affords the developer greater freedom at the cost of security, increased programming (to prevent the inherent security risks), difficulty in optimizing data and indices to adapt to changes in volume of data, and deployment burdens. However, there is lively debate on the value of both access methods and how to overcome the inherent risks. OWASP has identified injection attacks as the easiest to exploit and the most damaging in impact.

Distributing by “read” or “write” also requires that the load balancing service parse a SQL dialect such as MySQL’s or T-SQL (Microsoft’s Transact-SQL). Databases, of course, are designed to parse these string-based commands and are optimized to do so. Load balancing services are generally not designed to parse these languages and, depending on the implementation of their underlying parsing capabilities, may actually incur significant performance penalties to do so.

Regardless of those issues, there are an increasing number of organizations that view SQL load balancing as a means to achieve a more scalable data tier. Which brings us back to the challenge of managing credentials.

MANAGING CREDENTIALS

Many solutions attempt to address the issue of credential management by simply duplicating credentials locally; that is, they create a local identity store that can be used to authenticate requests against the database. Ostensibly the credentials match those in the database (or identity store used by the database such as can be configured for MSSQL) and are kept in sync. This obviously poses an operational challenge similar to that of any distributed system: synchronization and replication.
Such processes are not easily (if at all) automated, and rarely is the same level of security and permissions available on the local identity store as are available in the database. What you generally end up with is a very loose “allow/deny” set of permissions on the load balancing device that actually open the door for exploitation as well as caching of credentials that can lead to unauthorized access to the data source.

This also leads to potential security risks from attempting to apply some of the same optimization techniques to SQL connections as are offered by application delivery solutions for TCP connections. For example, TCP multiplexing (sharing connections) is a common means of reusing web and application server connections to reduce latency (by eliminating the overhead associated with opening and closing TCP connections). Similar techniques at the database layer have been used by application servers for many years; connection pooling is not uncommon and is essentially duplicated at the application delivery tier through features like SQL multiplexing. Both connection pooling and SQL multiplexing incur security risks, as shared connections require shared credentials. So either every access to the database uses the same credentials (a significant negative when considering the loss of an audit trail) or we return to managing duplicate sets of credentials – one set at the application delivery tier and another at the database, which as noted earlier incurs additional management and security risks.

YOU CAN’T WIN FOR LOSING

Ultimately the decision to load balance SQL must be a combination of business and operational requirements. Many organizations successfully leverage load balancing of SQL as a means to achieve very high scale.
Generally speaking the resulting solutions – such as those often touted by eBay – are based on sound architectural principles such as sharding and are designed as a strategic solution, not a tactical response to operational failures. They rarely involve inspection of inline SQL commands. Rather, they are based on the ability to discern which database should be accessed given the function being invoked or type of data being accessed and then use a traditional database connection to connect to the appropriate database. This does not preclude the use of application delivery solutions as part of such an architecture, but rather indicates a need to collaborate across the various application delivery and infrastructure tiers to determine a strategy most likely to maintain high-availability, scalability, and security across the entire architecture.

Load balancing SQL can be an effective means of addressing database scalability, but it should be approached with an eye toward its potential impact on security and operational management.

Quantifying Reputation Loss From a Breach

#infosec #security Putting a value on reputation is not as hard as you might think…

It’s really easy to quantify some of the costs associated with a security breach. Number of customers impacted times the cost of a first class stamp plus the cost of a sheet of paper plus the cost of ink divided by … you get the picture. Some of the costs are easier than others to calculate. Some of them are not, and others appear downright impossible.

One of the “costs” often cited but rarely quantified is the cost to an organization’s reputation. How does one calculate that? Well, if folks sat down with the business people more often (the ones that live on the other side of the Myers-Briggs Mountain) we’d find it’s not really as difficult to calculate as one might think. While IT folks analyze flows and packet traces, business folks analyze market trends and impacts – such as those arising from poor customer service. And if a breach of security isn’t interpreted by the general populace as “poor customer service” then I’m not sure what is. While traditionally customer service is how one treats the customer, increasingly that’s expanding to include how one treats the customer’s data. And that means security.

This question, “how much does it really cost,” is one Jeremiah Grossman asks fairly directly in a recent blog, “Indirect Hard Losses”:

As stated by InformationWeek regarding a Ponemon Institute study on the Cost of a Data Breach, “Customers, it seems, lose faith in organizations that can't keep data safe and take their business elsewhere.” The next logical question is how much?

Jeremiah goes on to focus on revenue lost from web transactions after a breach and that’s certainly part of the calculation, but what about those losses that might have been but now will never be? How can we measure not only the loss of revenue (meaning a decrease in first-order customers) but the potential loss of revenue?
That’s harder, but just as important, as it more accurately represents the “reputation loss” often mentioned in passing but never assigned a concrete value (at least not publicly; some industries discreetly share such data with trusted members of the same industry, but seeing these numbers in the wild? Good luck!)

HERE COMES the ALMOST SCIENCE

20% of the businesses that lost data lost customers as a direct result. The impacts were most severe for companies with more than 100 employees. Almost half of them lost sales.
Rubicon Survey

One of the first things we have to calculate is influence, as that directly impacts reputation. It is the ability of even a single customer to influence a given number of others (negatively or positively) that makes up reputation. It’s word of mouth, what people say about you, after all.

If we turn to studies that focus more on marketing and sales and businessy things, we can find a lot of this data. It’s a well-studied area. One study [1] indicates that a single dissatisfied customer will tell approximately 8-16 people. Each of those people has a circle of influence of about 250, with 25 of those being within an organization's primary target audience. Of all those told, 2% (1 in 50) will defect or avoid an organization upon hearing of the victim's dissatisfaction.

So for every angry customer, the reputation impact is a loss of anywhere from 40-80 customers, existing and future (8 to 16 people told, times a circle of 250 each, times 2%).

So much for thinking 100 records stolen in a breach is small potatoes, eh? The loss of thousands of existing and potential customers is nothing to sneeze at.

Now, here’s where it gets a little harder, because you’re going to have to talk to the businessy folks to get some values to attach to those losses. See, there are two numbers you still need: customer lifetime value (CLV) and the cost to replace a customer (which is higher than the cost to acquire a customer, but don’t ask me why, I’m not a businessy folk). Customer values are highly dependent upon industry.
For example, based on 2010 FDIC data, the industry average annual customer value for a banking customer is $209 [2]. Facebook’s annual revenue per user (ARPU) is estimated at $2.00 [3]. Estimates claim Google makes $9.85 annually off each Android user [4]. And Zynga’s ARPU is estimated at $3.96 (based on a reported $0.33 monthly per user revenue) [5].

This is why you actually have to talk to the businessy guys: they know what these values are, and you’ll need them to plug in to the influence calculation to come up with an at-least-it’s-closer-than-guessing value.

You also need to ask what the average customer lifetime is, so you can calculate the loss from dissatisfied and defecting customers. Then you just need to start plugging in the numbers. Remember, too, that it’s a model; an estimate. It’s not a perfect valuation system, but it should give you some kind of idea of what the reputational impact from a breach would be, which is more than most folks have today. Even if you can’t obtain the cost to replace value, try the model without it.

Try a small breach, just for fun, say of 100 records. Let’s use $4.00 as an annual customer value and a lifetime of ten years as an example.

Affected Customer Loss: 100 * ($4 * 10) = $4,000
Influenced Customer Loss: (100 * 40) * ($4 * 10) = 4,000 * $40 = $160,000
Total Reputation Cost: $164,000

Adding in the cost to replace can only make this larger and serves very little purpose except to show that even what many consider a relatively small breach (in terms of records lost) can be costly.

WHY is THIS VALUABLE?

The reason this is valuable is two-fold. First, it serves as the basis for a very logical and highly motivating business case for security solutions designed to prevent breaches. The problem with much of security is it’s intangible and incalculable. It is harder to put monetary value to risk than it is to put monetary value on solutions.
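For anyone who wants to plug in their own numbers, the 100-record example worked through above can be sketched in a few lines of Python. This is only a restatement of the model in this post, with the cost to replace a customer left out; the function and parameter names are made up for illustration, and the defaults use the low end (8 people told) of the study's range.

```python
def reputation_loss(angry_customers, annual_value, lifetime_years,
                    told_per_customer=8, circle_of_influence=250,
                    defect_one_in=50):
    """Estimate reputation cost using the influence model from this post.

    All parameter names are illustrative. defect_one_in=50 encodes the
    "2% (1 in 50) will defect" figure; told_per_customer may range 8-16.
    """
    # Customer lifetime value: annual value times expected years as a customer.
    clv = annual_value * lifetime_years

    # Customers lost per angry customer: people told x their circle x 2%.
    lost_per_angry = told_per_customer * circle_of_influence // defect_one_in

    affected_loss = angry_customers * clv
    influenced_customers = angry_customers * lost_per_angry
    influenced_loss = influenced_customers * clv

    return {
        "affected_loss": affected_loss,
        "influenced_customers": influenced_customers,
        "influenced_loss": influenced_loss,
        "total": affected_loss + influenced_loss,
    }


# The example from the text: 100 records, $4 per year, ten-year lifetime.
estimate = reputation_loss(100, 4, 10)
print(estimate["total"])  # 164000
```

Passing `told_per_customer=16` gives the high end of the range, which doubles the number of influenced customers to 8,000.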
Thus, the ability to perform a cost-benefit analysis that is based in part on “reputation loss” is difficult for security professionals and IT in general. The business needs to be able to justify investments, and to do that they need hard numbers that they can balance against. It is the security professionals who so often are called upon to explain the “risk” of a breach and loss of data to the business. Providing them tangible data based on accepted business metrics and behavior offers them a more concrete view of the costs – in money – of a breach.

That gives IT the leverage, the justification, for investing in solutions such as web application firewalls and vulnerability scanning services that are designed to detect and ultimately prevent such breaches from occurring. It gives infosec some firm ground upon which to stand and talk in terms the business understands: dollar signs.

[1] PUTTING A PRICE TAG ON A LOST CUSTOMER
[2] Free Checking and Debit Incentives Post-Durbin
[3] Facebook’s Annual Revenue Per User
[4] Each Android User Will Make Google $9.85 per Year in 2012
[5] Zynga Doubled ARPU From Last Year Even as Facebook Platform Changes Slowed Growth

Snippet #7: OWASP Useful HTTP Headers

If you develop and deploy web applications then security is on your mind. When I want to understand a web security topic I go to OWASP.org, a community dedicated to enabling the world to create trustworthy web applications. One of my favorite OWASP wiki pages is the list of useful HTTP headers. This page lists a few HTTP headers which, when added to the HTTP responses of an app, enhance its security practically for free. Let’s examine the list…

These headers can be added without concern that they affect application behavior:
- X-XSS-Protection: Forces the enabling of cross-site scripting protection in the browser (useful when the protection may have been disabled)
- X-Content-Type-Options: Prevents browsers from treating a response differently than the Content-Type header indicates

These headers may need some consideration before implementing:
- Public-Key-Pins: Helps avoid *-in-the-middle attacks using forged certificates
- Strict-Transport-Security: Enforces the use of HTTPS in your application, covered in some depth by Andrew Jenkins
- X-Frame-Options / Frame-Options: Used to avoid "clickjacking", but can break an application; usually you want this
- Content-Security-Policy / X-Content-Security-Policy / X-Webkit-CSP: Provides a policy for how the browser renders an app, aimed at avoiding XSS
- Content-Security-Policy-Report-Only: Similar to CSP above, but only reports, no enforcement

Here is a script that incorporates three of the above headers, which are generally safe to add to any application:

And that's it: About 20 lines of code to add 100 more bytes to the total HTTP response, and enhanced application security! Go get your own FREE license and try it today!

F5 Friday: Goodbye Defense in Depth. Hello Defense in Breadth.

#adcfw #infosec F5 is changing the game on security by unifying it at the application and service delivery layer.

Over the past few years we’ve seen firewalls fail repeatedly. We’ve seen business disrupted, security thwarted, and reputations damaged by the failure of the very devices meant to prevent such catastrophes from happening. These failures have been caused by a change in tactics from invaders who seek no longer to find a way through or over the walls, but who simply batter them down instead.

A combination of traditional attacks – network-layer – and modern attacks – application-layer – has become a force to be reckoned with; one that traditional stateful firewalls are often not equipped to handle. Encrypted traffic flowing into and out of the data center often bypasses security solutions entirely, leaving another potential source of a breach unaddressed. And performance is being impeded by the increasing number of devices that must “crack the packet” as it were and examine it, oftentimes duplicating functionality with varying degrees of success. This is problematic because the resolution to this issue can be as disconcerting as the problem itself: disable security.

Seriously. Security functions have been disabled, intentionally, in the name of performance.

IT security personnel within large corporations are shutting off critical functionality in security applications to meet network performance demands for business applications.
SURVEY: SECURITY SACRIFICED FOR NETWORK PERFORMANCE

What the company [NSS Labs] found would likely startle any existing or potential customers: three of the six firewalls failed to stay operational when subjected to stability tests, five out of six didn't handle what is known as the "Sneak ACK attack," that would enable attackers to side-step the firewall itself. Finally, according to NSS Labs, the performance claims presented in the vendor datasheets "are generally grossly overstated."
Independent lab tests find firewalls fall down on the job

Add in the complexity from the sheer number of devices required to implement all the different layers of security needed, which increases costs while impairing performance, and you’ve got a broken model in need of repair. This is a failure of the defense in depth strategy; the layered, multi-device (silo) approach to operational security. Most importantly, it’s one that’s failing to withstand attacks.

What we need is defense in breadth – the height of the stack – to assure availability and security using a more intelligent, unified security strategy.

DEFENSE in BREADTH

While it’s really not as catchy as “defense in depth,” the concept behind the admittedly awkward sounding phrase is sound: to assure availability and security simultaneously requires a strong security strategy from the bottom to the top of the networking stack, i.e. the application layer.

The ability of the F5 BIG-IP platform to provide security up and down the stack has existed for many years, and its capabilities to detect, prevent, and withstand concerted attacks have been appreciated by its customers (quietly) for some time. While basic firewalling functions have been a part of BIG-IP for years, there are certain capabilities required of a firewall – specifically an ICSA certified firewall – that it didn’t have. So we decided to do something about that.

The result is the ICSA certification of the BIG-IP platform as a network firewall. Combined with its existing ICSA certification for web application firewall (BIG-IP Application Security Manager) and SSL-TLS VPN 3.0 (BIG-IP Edge Gateway), the BIG-IP platform now supports a full-spectrum security solution in a single, unified system. What is unique about F5’s approach is that the security capabilities noted above can be deployed on BIG-IP Application Delivery Controllers (ADCs), best known for providing industry-leading intelligent traffic management and optimization capabilities.
This firewall solution is part of F5’s comprehensive security architecture that enables customers to apply a unified security strategy. For the first time in the industry, organizations can secure their networks, data, protocols, applications, and users on a single, flexible, and extensible platform: BIG-IP.

Combining network-firewall services with the ability to plug the hole in modern security implementations (the application layer) with a platform-based solution provides the opportunity to consolidate security services and leverage a shared infrastructure platform, resulting in a more comprehensive, strategic deployment that is not only more secure, but more cost effective.

Resources:
- The Fundamental Problem with Traditional Inbound Protection
- The Ascendancy of the Application Layer Threat
- ICSA Certified Network Firewall for Data Centers
- Mature Security Organizations Align Security with Service Delivery
- BIG-IP Data Center Firewall Solution – SlideShare Presentation
- The New Data Center Firewall Paradigm – White Paper
- Independent lab tests find firewalls fall down on the job
- SURVEY: SECURITY SACRIFICED FOR NETWORK PERFORMANCE
- F5 Friday: When Firewalls Fail…
- Challenging the Firewall Data Center Dogma
- What We Learned from Anonymous: DDoS is now 3DoS
- The Many Faces of DDoS: Variations on a Theme or Two
- F5 Friday: Eliminating the Blind Spot in Your Data Center Security Strategy
- F5 Friday: Multi-Layer Security for Multi-Layer Attacks

F5 Friday: Zero-Day Apache Exploit? Zero-Problem

#infosec A recently discovered 0-day Apache exploit is no problem for BIG-IP. Here are a couple of different options using F5 solutions to secure your site against it.

It’s called “Apache Killer” and it’s yet another example of exploiting not a vulnerability, but a protocol’s behavior.

UPDATE (8/26/2011) We're hearing that other Range-* HTTP headers are also vulnerable. Take care to secure against these potential attack vectors as well!

In this case, the target is Apache and the “vulnerability” is in the way multiple ranges are handled by the Apache HTTPD server. The RANGE HTTP header is used to request one or more sub-ranges of the response, instead of the entire response entity. Ranges are sometimes used by thin clients (an example given was an eReader) that are memory constrained and may want to display just portions of the web page. Generally speaking, multiple byte ranges are not used very often.

RFC 2616 Section 14.35.2 (Range retrieval request) explains:

HTTP retrieval requests using conditional or unconditional GET methods MAY request one or more sub-ranges of the entity, instead of the entire entity, using the Range request header, which applies to the entity returned as the result of the request:

    Range = "Range" ":" ranges-specifier

A server MAY ignore the Range header. However, HTTP/1.1 origin servers and intermediate caches ought to support byte ranges when possible, since Range supports efficient recovery from partially failed transfers, and supports efficient partial retrieval of large entities.

The attack is simple. It’s a simple HTTP request with lots – and lots – of ranges. While this example uses the HEAD method, it can also be used with a GET.

    HEAD / HTTP/1.1
    Host:xxxx
    Range:bytes=0-,5-1,5-2,5-3,…

According to researchers testing the vulnerability, a successful attack requires a “modest” number of requests.

BIG-IP SOLUTIONS

There are several options to prevent this attack using BIG-IP solutions.
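Before getting to the BIG-IP options, it may help to see the detection logic they share in a form you can run anywhere. The Python sketch below is not BIG-IP code; it reuses the regular expression that appears in the iRule and ASM signature in this post, and the `sanitize_headers` function and its dictionary-of-headers input are assumptions made purely for illustration.

```python
import re

# Same pattern used by the iRule and ASM signature in this post: "bytes="
# followed by five or more comma-terminated ranges.
MANY_RANGES = re.compile(r"bytes=(([0-9\- ])+,){5,}")


def sanitize_headers(headers: dict) -> dict:
    """Return a copy of the headers with an abusive Range header removed.

    `headers` is a plain name -> value mapping (an assumption for this sketch).
    """
    cleaned = dict(headers)
    for name in list(cleaned):
        # Header names are case-insensitive, so compare in lower case.
        if name.lower() == "range" and MANY_RANGES.search(cleaned[name]):
            del cleaned[name]
    return cleaned


# A request shaped like the attack loses its Range header...
attack = {"Host": "xxxx", "Range": "bytes=0-,5-1,5-2,5-3,5-4,5-5"}
print(sanitize_headers(attack))

# ...while an ordinary single-range request passes through untouched.
normal = {"Host": "xxxx", "Range": "bytes=0-499"}
print(sanitize_headers(normal))
```

Removal is only one possible reaction. The same check could just as easily drive a rejection or a redirect, depending on what your clients can tolerate.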
HEADER SANITIZATION

First, you can modify the HTTP profile to simply remove the Range header. HTTP header removal – and replacement – is a common means of manipulating request and response headers as a means to “fix” broken applications, clients, or enable other functionality. This is a form of header sanitization, used typically to remove non-compliant header values that may or may not be malicious, but are undesirable. The Apache suggestion is to remove any Range header with 5 or more values.

Note that this could itself break clients whose functionality expects a specific data set as specified by the RANGE header. As it is a rarely used header it is unlikely to impact clients adversely, but caution is always advised. Collaborate with developers and understand the implications before arbitrarily removing HTTP headers that may be necessary to application functionality.

HEADER VALUE SCRUBBING

You can also use an iRule to scrub the headers. By inspecting and thus detecting large numbers of ranges in the RANGE header, you can subsequently handle the request based on your specific needs. Possible reactions include removal of the header, rejection of the request, redirection to a honey pot, or replacement of the header.

Sample iRule code (always test before deploying into production!)

    when HTTP_REQUEST {
        # remove Range requests for CVE-2011-3192 if more than 5 ranges are requested
        if { [HTTP::header "Range"] matches_regex {bytes=(([0-9\- ])+,){5,}} } {
            HTTP::header remove Range
        }
    }

Again, changing an HTTP header may have negative consequences on the functionality of the application and/or client, so tread carefully.

BIG-IP ASM ATTACK SIGNATURE

Another method of mitigation using BIG-IP solutions is to use a BIG-IP Application Security Manager (ASM) attack signature to detect and act upon an attack using this technique.
The signature to add looks like:

    pcre:"/Range:[\t ]*bytes=(([0-9\- ])+,){5,}/Hi";

It is important to be aware of this exploit and how it works, as it is likely that once it is widely mitigated, attackers will begin (if they have not already) to explore other ways in which this header can be exploited. There are multiple “range” style headers, any of which may be vulnerable to similar exploitation, so it may be time to consider your current security strategy and determine whether the field of potentially exploitable headers is such that a more restrictive approach (default deny unless specifically allowed) may be required to secure against future DoS attacks targeting HTTP headers.

There are also alternative solutions available already, including this writeup from SpiderLabs with a link to an OWASP mod_security rule file for mitigations.

Stay safe out there!

BIG-IP Edge Client v1.0.4 for iOS

If you are running the BIG-IP Edge Client on your iPhone, iPod or iPad, you may have gotten an App Store alert for an update. If not, I just wanted to let you know that version 1.0.4 of the iOS Edge Client is available at the App Store.

The main updates in v1.0.4:
- IPv6 Support
- Localization
- New iPad Retina Graphics

The BIG-IP Edge Client application from F5 Networks secures and accelerates mobile device access to enterprise networks and applications using SSL VPN and optimization technologies. Access is provided as part of an enterprise deployment of F5 BIG-IP Access Policy Manager, Edge Gateway, or FirePass SSL-VPN solutions.

BIG-IP Edge Client for iOS Features:
- Provides accelerated mobile access when used with F5 BIG-IP Edge Gateway.
- Automatically roams between networks to stay connected on the go.
- Full Layer 3 network access to all your enterprise applications and files.

I updated mine today without a problem.

ps

Complying with PCI DSS–Part 1: Build and Maintain a Secure Network

According to the PCI SSC, there are 12 PCI DSS requirements that satisfy a variety of security goals. Areas of focus include building and maintaining a secure network, protecting stored cardholder data, maintaining a vulnerability management program, implementing strong access control measures, regularly monitoring and testing networks, and maintaining information security policies. The essential framework of the PCI DSS encompasses assessment, remediation, and reporting. Over the next several blogs, we’ll explore how F5 can help organizations gain or maintain compliance. Today's topic is Build and Maintain a Secure Network, which covers PCI Requirements 1 and 2.

PCI DSS Quick Reference Guide, October 2010

The PCI DSS requirements apply to all “system components,” which are defined as any network component, server, or application included in, or connected to, the cardholder data environment. Network components include, but are not limited to, firewalls, switches, routers, wireless access points, network appliances, and other security appliances. Servers include, but are not limited to, web, database, authentication, DNS, mail, proxy, and NTP servers. Applications include all purchased and custom applications, including internal and external web applications. The cardholder data environment is a combination of all the system components that come together to store and provide access to sensitive user financial information. F5 can help with all of the core PCI DSS areas and 10 of its 12 requirements.

Requirement 1: Install and maintain a firewall and router configuration to protect cardholder data.

PCI DSS Quick Reference Guide description: Firewalls are devices that control computer traffic allowed into and out of an organization’s network, and into sensitive areas within its internal network. Firewall functionality may also appear in other system components. Routers are hardware or software that connects two or more networks.
All such devices are in scope for assessment of Requirement 1 if used within the cardholder data environment. All systems must be protected from unauthorized access from the Internet, whether via e-commerce, employees’ remote desktop browsers, or employee email access. Often, seemingly insignificant paths to and from the Internet can provide unprotected pathways into key systems. Firewalls are a key protection mechanism for any computer network.

Solution: F5 BIG-IP products provide strategic points of control within the Application Delivery Network (ADN) to enable truly secure networking across all systems and network and application protocols. The BIG-IP platform provides a unified view of layers 3 through 7 for both general reporting and alerts and those required by ICSA Labs, as well as for integration with products from security information and event management (SIEM) vendors. BIG-IP Local Traffic Manager (LTM) offers native, high-performance firewall services to protect the entire infrastructure. BIG-IP LTM is a purpose-built, high-performance Application Delivery Controller (ADC) designed to protect Internet data centers. In many instances, BIG-IP LTM can replace an existing firewall while also offering scalability, performance, and persistence. Running on an F5 VIPRION chassis, BIG-IP LTM can manage up to 48 million concurrent connections and 72 Gbps of throughput with various timeout behaviors and buffer sizes when under attack. It protects UDP, TCP, SIP, DNS, HTTP, SSL, and other network attack targets while delivering uninterrupted service for legitimate connections. The BIG-IP platform, which offers a unique Layer 2–7 security architecture and full packet inspection, is an ICSA Labs Certified Network Firewall.

Figure: Replacing stateful firewall services with BIG-IP LTM in the data center architecture

Requirement 2: Do not use vendor-supplied defaults for system passwords and other security parameters.
PCI DSS Quick Reference Guide description: The easiest way for a hacker to access your internal network is to try default passwords or exploits based on the default system software settings in your payment card infrastructure. Far too often, merchants do not change default passwords or settings upon deployment. This is akin to leaving your store physically unlocked when you go home for the night. Default passwords and settings for most network devices are widely known. This information, combined with hacker tools that show what devices are on your network, can make unauthorized entry a simple task if you have failed to change the defaults.

Solution: All F5 products allow full access for administrators to change all forms of access and service authentication credentials, including administrator passwords, application service passwords, and system monitoring passwords (such as SNMP). Products such as BIG-IP Access Policy Manager (APM) and BIG-IP Edge Gateway limit remote connectivity to only a GUI and can enforce two-factor authentication, allowing tighter control over authenticated entry points. The BIG-IP platform allows the administrator to open up specific access points to be fitted into an existing secure network. BIG-IP APM and BIG-IP Edge Gateway offer secure, role-based administration (SSL/TLS and SSH protocols) and virtualization for designated access rights on a per-user or per-group basis. Secure Vault, a hardware-secured encrypted storage system introduced in BIG-IP version 9.4.5, protects critical data using a hardware-based key that does not reside on the appliance’s file system. In BIG-IP v11, companies have the option of securing their cryptographic keys in hardware, such as a FIPS card, rather than encrypted on the BIG-IP hard drive.
The Secure Vault feature can also encrypt certificate passwords for enhanced certificate and key protection in environments where FIPS 140-2 hardware support is not required, but additional physical and role-based protection is preferred. Secure Vault encryption may also be desirable when deploying the virtual editions of BIG-IP products, which do not support key encryption on hardware.

Next: Protect Cardholder Data

ps

F5 Friday: Mitigating the THC SSL DoS Threat

The THC #SSL #DoS tool exploits the rapid resource consumption nature of the handshake required to establish a secure session using SSL.

A new attack tool was announced this week and continues to follow in the footsteps of resource exhaustion as a means to achieve a DoS against target sites. Recent trends in attacks show an increasing interest in maximizing effect while minimizing effort. This means a move away from traditional denial of service attacks that focus on overwhelming sites with traffic and toward attacks that focus on rapidly consuming resources. Both have the same ultimate goal: overwhelming infrastructure, whether server or router or <insert infrastructure component of choice>. The latest SSL-based attack falls into the modern category of denial of service attacks in that it’s not an attempt to overwhelm with traffic, but rather to consume resources on servers such that capacity and the ability to respond to legitimate requests is eliminated. The blog post announcing the exploit tool explains:

“Establishing a secure SSL connection requires 15x more processing power on the server than on the client. THC-SSL-DOS exploits this asymmetric property by overloading the server and knocking it off the Internet. This problem affects all SSL implementations today. The vendors are aware of this problem since 2003 and the topic has been widely discussed. This attack further exploits the SSL secure Renegotiation feature to trigger thousands of renegotiations via single TCP connection.” -- THC SSL DOS Tool Released

As the blog points out, there is no resolution to this exploit. Common mitigation techniques include the use of an SSL accelerator, i.e. a reverse-proxy capable device with specialized hardware designed to improve the processing capability of SSL and associated cryptographic functions.
Modern application delivery controllers like BIG-IP include such hardware by default and make use of its performance and capacity-enhancing abilities to offset the operational costs of supporting SSL-secured communication.

BIG-IP MITIGATION

There are actually several ways in which BIG-IP can mitigate the potential impact of this kind of attack. First and foremost is simply its higher capacity for connections and processing of SSL / RSA operations. BIG-IP can manage far more connections, secure or not, than a typical web server. Depending on the hardware platform on which BIG-IP is deployed, simply having a BIG-IP in the path of the attack may therefore be mitigation enough. Where it is not, or if organizations desire a more proactive approach to mitigation, there are two additional options:

1. SSL renegotiation, which is in part the basis for the attack (it’s what allows relatively few clients to force the server to consume more and more resources), can be disabled in BIG-IP v11 and v10.2.3. This may break some applications and/or clients, so this option is best treated as a “last resort,” with the risks carefully weighed before deploying such a configuration.

2. An iRule that drops connections over which a client attempts to renegotiate more than five times in a given 60-second interval can be deployed. As noted by David Holmes and the iRule author, Jason Rahm, “By silently dropping the client connection, the iRule causes the attack tool to stall for long periods of time, fully negating the attack. There should be no false-positives dropped, either, as there are very few valid use cases for renegotiating more than once a minute.” The full details and code for the iRule can be found in the DevCentral article “SSL Renegotiation DOS attack – an iRule Countermeasure”.

UPDATE 11/1/2011: David Holmes has included an optimized version of the iRule in his latest blog, "The SSL Renegotiation Attack is Back."
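The counting logic behind the second option can be illustrated outside of BIG-IP. The following Python sketch is not the DevCentral iRule; it only models the rule as described above (more than five renegotiations on one connection within 60 seconds means the connection should be dropped). The class name `RenegotiationLimiter`, the `should_drop` method, and the connection identifiers are all hypothetical names chosen for this example.

```python
import time
from collections import defaultdict, deque

MAX_RENEGOTIATIONS = 5   # threshold described in the text
WINDOW_SECONDS = 60      # interval described in the text


class RenegotiationLimiter:
    """Track renegotiation attempts per connection in a sliding window."""

    def __init__(self, limit=MAX_RENEGOTIATIONS, window=WINDOW_SECONDS):
        self.limit = limit
        self.window = window
        # connection id -> timestamps of recent renegotiation attempts
        self._events = defaultdict(deque)

    def should_drop(self, conn_id, now=None):
        """Record one renegotiation; return True once the limit is exceeded."""
        now = time.monotonic() if now is None else now
        events = self._events[conn_id]
        events.append(now)
        # Discard attempts that have aged out of the window.
        while events and now - events[0] >= self.window:
            events.popleft()
        return len(events) > self.limit


if __name__ == "__main__":
    limiter = RenegotiationLimiter()
    for attempt in range(1, 8):
        dropped = limiter.should_drop("client-a", now=float(attempt))
        print(attempt, "drop" if dropped else "allow")
```

A per-connection sliding window keeps a well-behaved client that renegotiates occasionally below the limit, while a tool that triggers renegotiations in a tight loop crosses it almost immediately.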
Holmes's version uses the normal flow key (instead of a random key), adds a log message, and optimizes memory consumption.

Regardless of the mitigating technique used, BIG-IP can provide the operational security necessary to prevent such consumption-leeching attacks from negatively impacting applications by defeating the attack before it reaches application infrastructure.

Stay safe!

Vulnerability Assessment with Application Security

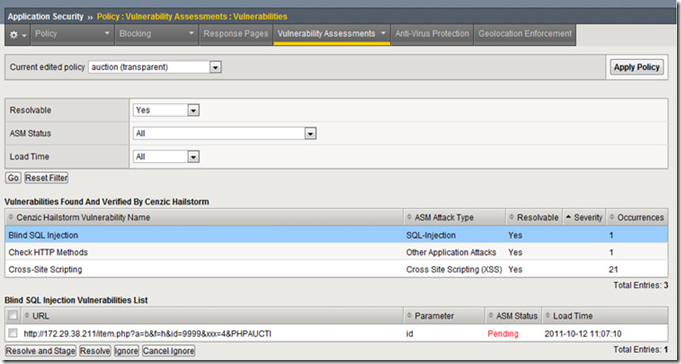

The longer an application remains vulnerable, the more likely it is to be compromised. Protecting web applications is an around-the-clock job. Almost anything that is connected to the Internet is a target these days, and organizations are scrambling to keep their web properties available and secure. The ramifications of a breach or downtime can be severe: brand reputation, the ability to meet regulatory requirements, and revenue are all on the line. A 2011 survey conducted by Merrill Research on behalf of VeriSign found that 60 percent of respondents rely on their websites for at least 25 percent of their annual revenue. And the threat landscape is only getting worse. Targeted attacks are designed to gather intelligence; steal trade secrets, sensitive customer information, or intellectual property; disrupt operations; or even destroy critical infrastructure. Targeted attacks have been around for a number of years, but 2011 brought a whole new meaning to advanced persistent threat. Symantec reported that the number of targeted attacks increased almost four-fold from January 2011 to November 2011. In the past, the typical profile of a target organization was a large, well-known, multinational company in the public, financial, government, pharmaceutical, or utility sector. Today, the scope has widened to include almost any size organization from any industry. The attacks are also layered in that the malicious hackers attempt to penetrate both the network and application layers. To defend against targeted attacks, organizations can deploy a scanner to check web applications for vulnerabilities such as SQL injection, cross site scripting (XSS), and forceful browsing; or they can use a web application firewall (WAF) to protect against these vulnerabilities. However a better, more complete solution is to deploy both a scanner and a WAF. 
BIG-IP Application Security Manager (ASM) version 11.1 is a WAF that gives organizations the tools they need to easily manage and secure web application vulnerabilities with multiple web vulnerability scanner integrations. As enterprises continue to deploy web applications, network and security architects need visibility into who is attacking those applications, as well as a big-picture view of all violations to plan future attack mitigation. Administrators must be able to understand what they see to determine whether a request is valid or an attack that requires application protection. Administrators must also troubleshoot application performance and capacity issues, which proves the need for detailed statistics. With the increase in application deployments and the resulting vulnerabilities, administrators need a proven multi-vulnerability assessment and application security solution for maximum coverage and attack protection. But as many companies also support geographically diverse application users, they must be able to define who is granted or denied application access based on geolocation information. Application Vulnerability Scanners To assess a web application’s vulnerability, most organizations turn to a vulnerability scanner. The scanning schedule might depend on a change control, like when an application is initially being deployed, or other factors like a quarterly report. The vulnerability scanner scours the web application, and in some cases actually attempts potential hacks to generate a report indicating all possible vulnerabilities. This gives the administrator managing the web security devices a clear view of all the exposed areas and potential threats to the website. It is a moment-in-time report and might not give full application coverage, but the assessment should give administrators a clear picture of their web application security posture. 
It includes information about coding errors, weak authentication mechanisms, fields or parameters that query the database directly, or other vulnerabilities that provide unauthorized access to information, sensitive or not. Many of these vulnerabilities would need to be manually re-coded or manually added to the WAF policy—both expensive undertakings. Another challenge is that every web application is different. Some are developed in .NET, some in PHP or PERL. Some scanners execute better on different development platforms, so it’s important for organizations to select the right one. Some companies may need a PCI DSS report for an auditor, some for targeted penetration testing, and some for WAF tuning. These factors can also play a role in determining the right vulnerability scanner for an organization. Ease of use, target specifics, and automated testing are the baselines. Once an organization has considered all those details, the job is still only half done. Simply having the vulnerability report, while beneficial, doesn’t mean a web app is secure. The real value of the report lies in how it enables an organization to determine the risk level and how best to mitigate the risk. Since re-coding an application is expensive and time-consuming, and may generate even more errors, many organizations deploy a web application firewall like BIG-IP ASM. A WAF enables an organization to protect its web applications by virtually patching the open vulnerabilities until it has an opportunity to properly close the hole. Often, organizations use the vulnerability scanner report to then either tighten or initially generate a WAF policy. Attackers can come from anywhere, so organizations need to quickly mitigate vulnerabilities before they become threats. They need a quick, easy, effective solution for creating security policies. 
Although it’s preferable to have multiple scanners or scanning services, many companies only have one, which significantly impedes their ability to get a full vulnerability assessment. Further, if an organization’s WAF and scanner aren’t integrated, neither is its view of vulnerabilities, as a non-integrated WAF UI displays no scanner data. Integration enables organizations both to manage the vulnerability scanner results and to modify the WAF policy to protect against the scanner’s findings—all in one UI. Integration Reduces Risk While finding vulnerabilities helps organizations understand their exposure, they must also have the ability to quickly mitigate found vulnerabilities to greatly reduce the risk of application exploits. The longer an application remains vulnerable, the more likely it is to be compromised. F5 BIG-IP ASM, a flexible web application firewall, enables strong visibility with granular, session-based enforcement and reporting; grouped violations for correlation; and a quick view into valid and attack requests. BIG-IP ASM delivers comprehensive vulnerability assessment and application protection that can quickly reduce web threats with easy geolocation-based blocking—greatly improving the security posture of an organization’s critical infrastructure. BIG-IP ASM version 11.1 includes integration with IBM Rational AppScan, Cenzic Hailstorm, QualysGuard WAS, and WhiteHat Sentinel, building more integrity into the policy lifecycle and making it the most advanced vulnerability assessment and application protection on the market. In addition, administrators can better create and enforce policies with information about attack patterns from a grouping of violations or otherwise correlated incidents. In this way, BIG-IP ASM enables organizations to mitigate threats in a timely manner and greatly reduce the overall risk of attacks and solve most vulnerabilities. 
With multiple vulnerability scanner assessments in one GUI, administrators can discover and remediate vulnerabilities within minutes from a central location. BIG-IP ASM offers easy policy implementation, fast assessment and policy creation, and the ability to dynamically configure policies in real time during assessment. To significantly reduce data loss, administrators can test and verify vulnerabilities from the BIG-IP ASM GUI, and automatically create policies with a single click to mitigate unknown application vulnerabilities. Security is a never-ending battle. The bad guys advance, organizations counter, bad guys cross over—and so the cat and mouse game continues. The need to properly secure web applications is absolute. Knowing what vulnerabilities exist within a web application can help organizations contain possible points of exposure. BIG-IP ASM v11.1 offers unprecedented web application protection by integrating with many market-leading vulnerability scanners to provide a complete vulnerability scan and remediate solution. BIG-IP ASM v11.1 enables organizations to understand inherent threats and take specific measures to protect their web application infrastructure. It gives them the tools they need to greatly reduce the risk of becoming the next failed security headline. 
ps