7 Steps Checklist before upgrading your F5 BIG-IP

Problem this snippet solves:

This is a quick checklist of the steps to go through before upgrading a BIG-IP. It is valid for version 11.x or later.

How to use this snippet:

In this example, I will assume that we are upgrading from 11.5.1 to 12.1.2.

Step 1: Check the compatibility matrix
a) For an appliance, check hardware/software compatibility
Link: https://support.f5.com/csp/article/K9476
b) For Virtual Edition, check the supported hypervisors matrix
Link: https://support.f5.com/kb/en-us/products/big-ip_ltm/manuals/product/ve-supported-hypervisor-matrix.html
Note: If you are running vCMP systems, also verify the vCMP host and compatible guest version matrix
Link: https://support.f5.com/csp/article/K14088

Step 2: Check the supported BIG-IP upgrade paths and determine whether you can upgrade directly
Link: https://support.f5.com/csp/article/K13845
In this case, you must be running BIG-IP 10.1.x - 11.x to upgrade directly to BIG-IP 12.x.

Step 3: Download the .iso files needed for the upgrade from F5 Downloads
Link: https://downloads.f5.com/esd/index.jsp

Step 4: Check whether you need to reactivate the license before upgrading
Link: https://support.f5.com/csp/article/K7727
First, determine the "License Check Date" of the version you want to install. In this case, the License Check Date for version 12.1.2 is 2016-03-18.
Then, determine your "Service check date" by executing the following command from the CLI:
> grep "Service check date" /config/bigip.license
The output appears similar to the following example:
> Service check date : 20151008
Since the "Service check date" (20151008) is older than the "License Check Date" (2016-03-18), a license reactivation is needed before upgrading. To reactivate, follow the steps under the paragraph "Reactivating the system license" in the link given above.
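The date comparison in Step 4 can be sketched in a few lines of Python. This is only an illustration of the rule described above, not an F5 tool: the function names are hypothetical, and the two dates are the ones quoted in the example. It assumes that equal dates do not require reactivation.

```python
from datetime import date, datetime


def parse_service_check_date(license_line: str) -> date:
    """Extract the date from a line such as 'Service check date : 20151008'."""
    _, _, value = license_line.partition(":")
    return datetime.strptime(value.strip(), "%Y%m%d").date()


def needs_reactivation(service_check: date, license_check: date) -> bool:
    """True when the Service check date is older than the License Check Date."""
    return service_check < license_check


# Values taken from the example in Step 4.
service_check = parse_service_check_date("Service check date : 20151008")
license_check = date(2016, 3, 18)  # License Check Date quoted for 12.1.2

print(needs_reactivation(service_check, license_check))  # prints True
```

The line passed to parse_service_check_date is the output of the grep command shown in Step 4.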
Step 5: Use the "iHealth Upgrade Advisor" to determine whether any configuration modification is needed before/after the upgrade <no longer available>

Step 6: Back up the configuration by generating a UCS archive and downloading it to a safe place
Link: https://support.f5.com/csp/article/K13132
a) If you are using the Configuration utility, follow the procedure under "Backing up configuration data by using the Configuration utility"
b) If you prefer the CLI, follow the procedure under "Backing up configuration data using the tmsh utility"

Step 7: Read the "Installation checklist" in the release notes of the version you wish to install
Link: https://support.f5.com/kb/en-us/products/big-ip_ltm/releasenotes/product/relnote-ltm-12-1-2.html
Under the paragraph "Installation checklist" of the release notes, ensure that you have read and verified the listed points.

Code: No code

Tested this on version: 11.0

F5 iApp Automated Backup

Problem this snippet solves:

This is now available on GitHub! Please look on GitHub for the latest version, and submit any bugs or questions as an "Issue" on GitHub:

(Note: DevCentral admin update - Daniel's project appears abandoned so it's been forked and updated to the link below. @damnski on github added some SFTP code that has been merged in as well.)

https://github.com/f5devcentral/f5-automated-backup-iapp

Intro

Building on the significant work of Thomas Schockaert (and several other DevCentralites), I enhanced many aspects I needed for my own purposes, updated many things I noticed requested on the forums, and added additional documentation and clarification. As you may see in several of my comments on the original posts, I iterated through several 2.2.x versions and am now releasing v3.0.0. Below is the breakdown! Also, I have done quite a bit of testing (mostly on v13.1.0.1 lately), but I doubt I've caught everything, especially with all of the changes. Please post any questions or issues in the comments.

Cheers!
Daniel Tavernier (tabernarious)

Related posts:
Git Repository for f5-automated-backup-iapp (https://github.com/tabernarious/f5-automated-backup-iapp)
https://community.f5.com/t5/technical-articles/f5-automated-backups-the-right-way/ta-p/288454
https://community.f5.com/t5/crowdsrc/complete-f5-automated-backup-solution/ta-p/288701
https://community.f5.com/t5/crowdsrc/complete-f5-automated-backup-solution-2/ta-p/274252
https://community.f5.com/t5/technical-forum/automated-backup-solution/m-p/24551
https://community.f5.com/t5/crowdsrc/tkb-p/CrowdSRC

v3.2.1 (20201210)
Merged v3.1.11 and v3.2.0 for explicit SFTP support (separate from SCP).
Tweaked the SCP and SFTP upload directory handling; detailed instructions are in the iApp.
Tested on 13.1.3.4 and 14.1.3.

v3.1.11 (20201210)
Better handling of UCS passphrases, and notes about characters to avoid.
I successfully tested this exact passphrase in the 13.1.3.4 CLI (surrounded with single quotes) and GUI (as-is): `~!@#$%^*()aB1-_=+[{]}:./?
I successfully tested this exact passphrase in 14.1.3 (square brackets and curly braces would not work): `~!@#$%^*()aB1-_=+:./?
Though there may be situations where these could work, avoid these characters (separated by spaces): " ' & | ; < > \ [ ] { } ,
Moved changelog and notes from the template to CHANGELOG.md and README.md.
Replaced all tabs (\t) with four spaces.

v3.1.10 (20201209)
Added SMB Version and SMB Security options to support v14+ and newer versions of Microsoft Windows and Windows Server.
Tested SMB/CIFS on 13.1.3.4 and 14.1.3 against Windows Server 2019 using "2.0" and "ntlmsspi".

v3.1.0:
Removed "app-service none" from iCall objects. The iCall objects are now created as part of the Application Service (iApp) and are properly cleaned up if the iApp is redeployed or deleted.
Reasonably tested on 11.5.4 HF2 (SMB worked fine using "mount -t cifs") and altered requires-bigip-version-min to match.
Fixed the error "script did not successfully complete: (can't read "::destination_parameters__protocol_enable": no such variable" by wrapping most of the "implementation" in a block that first checks $::backup_schedule__frequency_select for "Disable".
Added default value to "filename format".
Changed UCS default value for $backup_file_name_extension to ".ucs" and added $fname_noext.
Removed old SFTP sections and references (now handled through SCP/SFTP).
Adjusted logging: added "sleep 1" to ensure proper logging; added $backup_directory to log message.
Adjusted some help messages.

New v3.0.0 features:
Supports multiple instances! (Deploy multiple copies of the iApp to save backups to different places or perhaps to keep daily backups locally and send weekly backups to a network drive.)
Fully ConfigSync compatible! (Encrypted values now in $script instead of local file.)
Long passwords supported! (Using "-A" with openssl which reads/writes base64 encoded strings as a single line.)
Added $script error checking for all remote backup types! (Using 'catch' to prevent tcl errors when $script aborts.)
Backup files are cleaned up after any $script errors due to new error checking.
Added logging! (Run logs sent to '/var/log/ltm' via logger command which is compatible with BIG-IP Remote Logging configuration (syslog). Run logs AND errors sent to '/var/tmp/scriptd.out'. Errors may include plain-text passwords which should not be in /var/log/ltm or syslog.)
Added custom cipher option for SCP! (In case BIG-IP and the destination server are not cipher-compatible out of the box.)
Added StrictHostKeyChecking=no option. (This is insecure and should only be used for testing--lots of warnings.)
Combined SCP and SFTP because they are both using SCP to perform the remote copy. (Easier to maintain!)

Original v1.x.x and v2.x.x features kept (copied from an original post):
It allows you to choose between UCS and SCF as backup types (whilst providing ample warnings about SCF not being a very good restore option due to its incompleteness in some cases).
It allows you to provide a passphrase for the UCS archives (the standard GUI also does this, so the iApp should too).
It allows you to not include the private keys (same thing: standard GUI does it, so the iApp does it too).
It allows you to set a Backup Schedule for every X minutes/hours/days/weeks/months or a custom selection of days in the week.
It allows you to set the exact time, minute of the hour, day of the week or day of the month when the backup should be performed (depending on the usefulness with regards to the schedule type).
It allows you to transfer the backup files to external devices using 4 different protocols, next to providing local storage on the device itself:
    SCP (username/private key without password)
    SFTP (username/private key without password)
    FTP (username/password)
    SMB (now using TMOS v12.x.x compatible 'mount -t cifs', with username/password)
    Local Storage (/var/local/ucs or /var/local/scf)
It stores all passwords and private keys in a secure fashion: encrypted by the master key of the unit (f5mku), rendering it safe to store the backups, including the credentials, off-box.
It has a configurable automatic pruning function for the Local Storage option, so the disk doesn't fill up (i.e. keep last X backup files).
It allows you to configure the filename using the date/time wildcards from the tcl [clock] command, as well as providing a variable to include the hostname.
It requires only the WebGUI to establish the configuration you desire.
It allows you to disable the processes for automated backup, without you having to remove the Application Service or losing any previously entered settings.
For the external shellscripts it automatically generates, the credentials are stored in encrypted form (using the master key).
It allows you to no longer be required to make modifications on the linux command line to get your automated backups running after an RMA or restore operation.
It cleans up after itself, which means there are no extraneous shellscripts or status files lingering around after the scripts execute.

How to use this snippet:
Find and download the latest iApp template on GitHub (e.g. "f5.automated_backup.v3.2.1.tmpl.tcl").
Import the text file as an iApp Template in the BIG-IP GUI.
Create an Application Service using the imported Template.
Answer the questions (paying close attention to the help sections).
Check /var/tmp/scriptd.out for general logs and errors.

Tested this on version: 16.0

Export Virtual Server Configuration in CSV - tmsh cli script

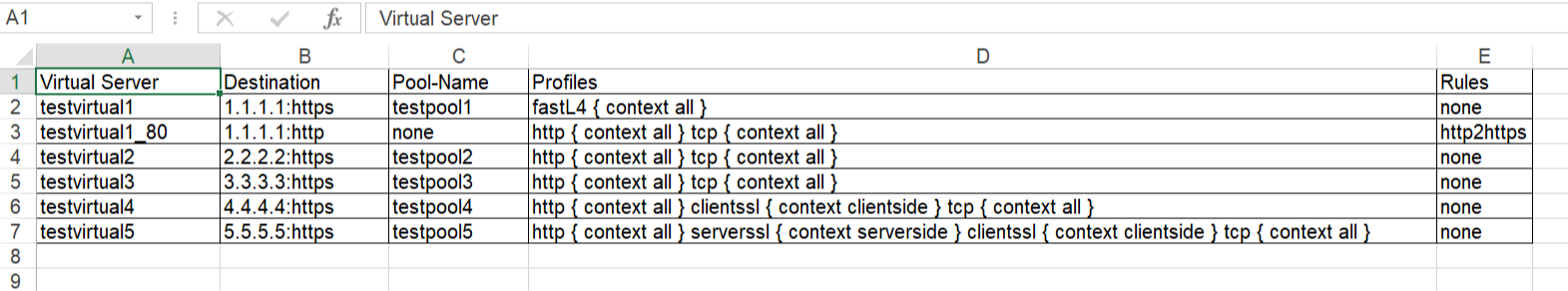

Problem this snippet solves:

This is a simple cli script that collects, for every virtual server in all partitions, its name, VIP details, pool name, pool members, profiles, iRules and persistence. A sample output is shown in the accompanying image. The code can be customized to extract other available fields too, and the same logic can be used to pull information from profile stats, certificates, etc.

Update: 5th Oct 2020 Added pool members capture to the code. The Pool-Members column follows the Pool-Name column. If a pool does not have members, field not present: "members" will be shown in the respective Pool-Members column. If no pool is bound to the VS, both Pool-Name and Pool-Members will contain none.
Update: 21st Jan 2021 Added logic to look for multiple partitions and collect their configs.
Update: 12th Feb 2021 Added logic to add persistence to the sheet.
Update: 26th May 2021 Added logic to add state and status to the sheet.
Update: 24th Oct 2023 Added logic to add hostname, Pool Status, Total-Connections and Current-Connections.

Note: The codeshare has multiple versions; use only the latest one. The other versions are kept so that end users can understand and compare them, which helps when modifying the script for their own requirements. Hope it helps.
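Once the output has been saved as a CSV file, it can be post-processed off-box. The following is a minimal Python sketch, assuming the "Virtual Server", "Pool-Name" and "Pool-Members" column names used by the script's header line; the sample rows, object names and helper functions are made up for illustration.

```python
import csv
import io

# Hypothetical sample in the shape the script prints (values are invented).
SAMPLE_CSV = """Virtual Server,Destination,Pool-Name,Pool-Members,Profiles,Rules
vs_web,10.0.0.10:80,pool_web,10.0.1.1:80 10.0.1.2:80 ,http tcp ,none
vs_orphan,10.0.0.20:443,none,none,tcp ,none
vs_empty,10.0.0.30:80,pool_empty,field not present: "members",tcp ,none
"""


def virtuals_without_pool(csv_text):
    """Return the virtual servers whose Pool-Name column is 'none'."""
    reader = csv.DictReader(io.StringIO(csv_text))
    return [row["Virtual Server"] for row in reader if row["Pool-Name"] == "none"]


def pools_without_members(csv_text):
    """Return the pools whose Pool-Members column holds the 'field not present' text."""
    reader = csv.DictReader(io.StringIO(csv_text))
    return sorted(
        {
            row["Pool-Name"]
            for row in reader
            if row["Pool-Members"].startswith("field not present")
        }
    )


print(virtuals_without_pool(SAMPLE_CSV))
print(pools_without_members(SAMPLE_CSV))
```

To use it on real output, read the saved file into a string and pass it to the same functions.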
How to use this snippet:

Log in to the LTM, create your script by running the command below, and paste in the code provided in the snippet:

tmsh create cli script virtual-details

When you list it, it should look something like this:

[admin@labltm:Active:Standalone] ~ # tmsh list cli script virtual-details
cli script virtual-details {
proc script::run {} {
    puts "Virtual Server,Destination,Pool-Name,Profiles,Rules"
    foreach { obj } [tmsh::get_config ltm virtual all-properties] {
        set profiles [tmsh::get_field_value $obj "profiles"]
        set remprof [regsub -all {\n} [regsub -all " context" [join $profiles "\n"] "context"] " "]
        set profilelist [regsub -all "profiles " $remprof ""]
        puts "[tmsh::get_name $obj],[tmsh::get_field_value $obj "destination"],[tmsh::get_field_value $obj "pool"],$profilelist,[tmsh::get_field_value $obj "rules"]"
    }
}
    total-signing-status not-all-signed
}
[admin@labltm:Active:Standalone] ~ #

You can then run the script like this:

tmsh run cli script virtual-details > /var/tmp/virtual-details.csv

And get the output from the saved file:

cat /var/tmp/virtual-details.csv

Old Codes:

cli script virtual-details {
proc script::run {} {
    puts "Virtual Server,Destination,Pool-Name,Profiles,Rules"
    foreach { obj } [tmsh::get_config ltm virtual all-properties] {
        set profiles [tmsh::get_field_value $obj "profiles"]
        set remprof [regsub -all {\n} [regsub -all " context" [join $profiles "\n"] "context"] " "]
        set profilelist [regsub -all "profiles " $remprof ""]
        puts "[tmsh::get_name $obj],[tmsh::get_field_value $obj "destination"],[tmsh::get_field_value $obj "pool"],$profilelist,[tmsh::get_field_value $obj "rules"]"
    }
}
    total-signing-status not-all-signed
}

###===================================================
### 2.0
### UPDATED CODE BELOW
### DO NOT MIX ABOVE CODE & BELOW CODE TOGETHER
###===================================================

cli script virtual-details {
proc script::run {} {
    puts "Virtual Server,Destination,Pool-Name,Pool-Members,Profiles,Rules"
    foreach { obj } [tmsh::get_config ltm virtual all-properties] {
        set poolname [tmsh::get_field_value $obj "pool"]
        set profiles [tmsh::get_field_value $obj "profiles"]
        set remprof [regsub -all {\n} [regsub -all " context" [join $profiles "\n"] "context"] " "]
        set profilelist [regsub -all "profiles " $remprof ""]
        if { $poolname != "none" } {
            set poolconfig [tmsh::get_config /ltm pool $poolname]
            foreach poolinfo $poolconfig {
                if { [catch { set member_name [tmsh::get_field_value $poolinfo "members" ]} err] } {
                    set pool_member $err
                    puts "[tmsh::get_name $obj],[tmsh::get_field_value $obj "destination"],$poolname,$pool_member,$profilelist,[tmsh::get_field_value $obj "rules"]"
                } else {
                    set pool_member ""
                    set member_name [tmsh::get_field_value $poolinfo "members" ]
                    foreach member $member_name {
                        append pool_member "[lindex $member 1] "
                    }
                    puts "[tmsh::get_name $obj],[tmsh::get_field_value $obj "destination"],$poolname,$pool_member,$profilelist,[tmsh::get_field_value $obj "rules"]"
                }
            }
        } else {
            puts "[tmsh::get_name $obj],[tmsh::get_field_value $obj "destination"],$poolname,none,$profilelist,[tmsh::get_field_value $obj "rules"]"
        }
    }
}
    total-signing-status not-all-signed
}

###===================================================
### Version 3.0
### UPDATED CODE BELOW FOR MULTIPLE PARTITION
### DO NOT MIX ABOVE CODE & BELOW CODE TOGETHER
###===================================================

cli script virtual-details {
proc script::run {} {
    puts "Partition,Virtual Server,Destination,Pool-Name,Pool-Members,Profiles,Rules"
    foreach all_partitions [tmsh::get_config auth partition] {
        set partition "[lindex [split $all_partitions " "] 2]"
        tmsh::cd /$partition
        foreach { obj } [tmsh::get_config ltm virtual all-properties] {
            set poolname [tmsh::get_field_value $obj "pool"]
            set profiles [tmsh::get_field_value $obj "profiles"]
            set remprof [regsub -all {\n} [regsub -all " context" [join $profiles "\n"] "context"] " "]
            set profilelist [regsub -all "profiles " $remprof ""]
            if { $poolname != "none" } {
                set poolconfig [tmsh::get_config /ltm pool $poolname]
                foreach poolinfo $poolconfig {
                    if { [catch { set member_name [tmsh::get_field_value $poolinfo "members" ]} err] } {
                        set pool_member $err
                        puts "$partition,[tmsh::get_name $obj],[tmsh::get_field_value $obj "destination"],$poolname,$pool_member,$profilelist,[tmsh::get_field_value $obj "rules"]"
                    } else {
                        set pool_member ""
                        set member_name [tmsh::get_field_value $poolinfo "members" ]
                        foreach member $member_name {
                            append pool_member "[lindex $member 1] "
                        }
                        puts "$partition,[tmsh::get_name $obj],[tmsh::get_field_value $obj "destination"],$poolname,$pool_member,$profilelist,[tmsh::get_field_value $obj "rules"]"
                    }
                }
            } else {
                puts "$partition,[tmsh::get_name $obj],[tmsh::get_field_value $obj "destination"],$poolname,none,$profilelist,[tmsh::get_field_value $obj "rules"]"
            }
        }
    }
}
    total-signing-status not-all-signed
}

###===================================================
### Version 4.0
### UPDATED CODE BELOW FOR CAPTURING PERSISTENCE
### DO NOT MIX ABOVE CODE & BELOW CODE TOGETHER
###===================================================

cli script virtual-details {
proc script::run {} {
    puts "Partition,Virtual Server,Destination,Pool-Name,Pool-Members,Profiles,Rules,Persist"
    foreach all_partitions [tmsh::get_config auth partition] {
        set partition "[lindex [split $all_partitions " "] 2]"
        tmsh::cd /$partition
        foreach { obj } [tmsh::get_config ltm virtual all-properties] {
            set poolname [tmsh::get_field_value $obj "pool"]
            set profiles [tmsh::get_field_value $obj "profiles"]
            set remprof [regsub -all {\n} [regsub -all " context" [join $profiles "\n"] "context"] " "]
            set profilelist [regsub -all "profiles " $remprof ""]
            set persist [lindex [lindex [tmsh::get_field_value $obj "persist"] 0] 1]
            if { $poolname != "none" } {
                set poolconfig [tmsh::get_config /ltm pool $poolname]
                foreach poolinfo $poolconfig {
                    if { [catch { set member_name [tmsh::get_field_value $poolinfo "members" ]} err] } {
                        set pool_member $err
                        puts "$partition,[tmsh::get_name $obj],[tmsh::get_field_value $obj "destination"],$poolname,$pool_member,$profilelist,[tmsh::get_field_value $obj "rules"],$persist"
                    } else {
                        set pool_member ""
                        set member_name [tmsh::get_field_value $poolinfo "members" ]
                        foreach member $member_name {
                            append pool_member "[lindex $member 1] "
                        }
                        puts "$partition,[tmsh::get_name $obj],[tmsh::get_field_value $obj "destination"],$poolname,$pool_member,$profilelist,[tmsh::get_field_value $obj "rules"],$persist"
                    }
                }
            } else {
                puts "$partition,[tmsh::get_name $obj],[tmsh::get_field_value $obj "destination"],$poolname,none,$profilelist,[tmsh::get_field_value $obj "rules"],$persist"
            }
        }
    }
}
    total-signing-status not-all-signed
}

###===================================================
### 5.0
### UPDATED CODE BELOW
### DO NOT MIX ABOVE CODE & BELOW CODE TOGETHER
###===================================================

cli script virtual-details {
proc script::run {} {
    puts "Partition,Virtual Server,Destination,Pool-Name,Pool-Members,Profiles,Rules,Persist,Status,State"
    foreach all_partitions [tmsh::get_config auth partition] {
        set partition "[lindex [split $all_partitions " "] 2]"
        tmsh::cd /$partition
        foreach { obj } [tmsh::get_config ltm virtual all-properties] {
            foreach { status } [tmsh::get_status ltm virtual [tmsh::get_name $obj]] {
                set vipstatus [tmsh::get_field_value $status "status.availability-state"]
                set vipstate [tmsh::get_field_value $status "status.enabled-state"]
            }
            set poolname [tmsh::get_field_value $obj "pool"]
            set profiles [tmsh::get_field_value $obj "profiles"]
            set remprof [regsub -all {\n} [regsub -all " context" [join $profiles "\n"] "context"] " "]
            set profilelist [regsub -all "profiles " $remprof ""]
            set persist [lindex [lindex [tmsh::get_field_value $obj "persist"] 0] 1]
            if { $poolname != "none" } {
                set poolconfig [tmsh::get_config /ltm pool $poolname]
                foreach poolinfo $poolconfig {
                    if { [catch { set member_name [tmsh::get_field_value $poolinfo "members" ]} err] } {
                        set pool_member $err
                        puts "$partition,[tmsh::get_name $obj],[tmsh::get_field_value $obj "destination"],$poolname,$pool_member,$profilelist,[tmsh::get_field_value $obj "rules"],$persist,$vipstatus,$vipstate"
                    } else {
                        set pool_member ""
                        set member_name [tmsh::get_field_value $poolinfo "members" ]
                        foreach member $member_name {
                            append pool_member "[lindex $member 1] "
                        }
                        puts "$partition,[tmsh::get_name $obj],[tmsh::get_field_value $obj "destination"],$poolname,$pool_member,$profilelist,[tmsh::get_field_value $obj "rules"],$persist,$vipstatus,$vipstate"
                    }
                }
            } else {
                puts "$partition,[tmsh::get_name $obj],[tmsh::get_field_value $obj "destination"],$poolname,none,$profilelist,[tmsh::get_field_value $obj "rules"],$persist,$vipstatus,$vipstate"
            }
        }
    }
}
    total-signing-status not-all-signed
}

Latest Code:

cli script virtual-details {
proc script::run {} {
    set hostconf [tmsh::get_config /sys global-settings hostname]
    set hostname [tmsh::get_field_value [lindex $hostconf 0] hostname]
    puts "Hostname,Partition,Virtual Server,Destination,Pool-Name,Pool-Status,Pool-Members,Profiles,Rules,Persist,Status,State,Total-Conn,Current-Conn"
    foreach all_partitions [tmsh::get_config auth partition] {
        set partition "[lindex [split $all_partitions " "] 2]"
        tmsh::cd /$partition
        foreach { obj } [tmsh::get_config ltm virtual all-properties] {
            foreach { status } [tmsh::get_status ltm virtual [tmsh::get_name $obj]] {
                set vipstatus [tmsh::get_field_value $status "status.availability-state"]
                set vipstate [tmsh::get_field_value $status "status.enabled-state"]
                set total_conn [tmsh::get_field_value $status "clientside.tot-conns"]
                set curr_conn [tmsh::get_field_value $status "clientside.cur-conns"]
            }
            set poolname [tmsh::get_field_value $obj "pool"]
            set profiles [tmsh::get_field_value $obj "profiles"]
            set remprof [regsub -all {\n} [regsub -all " context" [join $profiles "\n"] "context"] " "]
            set profilelist [regsub -all "profiles " $remprof ""]
            set persist [lindex [lindex [tmsh::get_field_value $obj "persist"] 0] 1]
            if { $poolname != "none" } {
                foreach { p_status } [tmsh::get_status ltm pool $poolname] {
                    set pool_status [tmsh::get_field_value $p_status "status.availability-state"]
                }
                set poolconfig [tmsh::get_config /ltm pool $poolname]
                foreach poolinfo $poolconfig {
                    if { [catch { set member_name [tmsh::get_field_value $poolinfo "members" ]} err] } {
                        set pool_member $err
                        puts "$hostname,$partition,[tmsh::get_name $obj],[tmsh::get_field_value $obj "destination"],$poolname,$pool_status,$pool_member,$profilelist,[tmsh::get_field_value $obj "rules"],$persist,$vipstatus,$vipstate,$total_conn,$curr_conn"
                    } else {
                        set pool_member ""
                        set member_name [tmsh::get_field_value $poolinfo "members" ]
                        foreach member $member_name {
                            append pool_member "[lindex $member 1] "
                        }
                        puts "$hostname,$partition,[tmsh::get_name $obj],[tmsh::get_field_value $obj "destination"],$poolname,$pool_status,$pool_member,$profilelist,[tmsh::get_field_value $obj "rules"],$persist,$vipstatus,$vipstate,$total_conn,$curr_conn"
                    }
                }
            } else {
                puts "$hostname,$partition,[tmsh::get_name $obj],[tmsh::get_field_value $obj "destination"],$poolname,none,none,$profilelist,[tmsh::get_field_value $obj "rules"],$persist,$vipstatus,$vipstate,$total_conn,$curr_conn"
            }
        }
    }
}
}

Tested this on version: 13.0

Automated backup F5 configuration to remote server

Problem this snippet solves:

Hi, I made a simple script that automatically backs up the SCF and UCS files to a remote server. I read a great article about automated backup based on the iApp (https://devcentral.f5.com/codeshare/f5-iapp-automated-backup-1114), but I wondered whether there is a simpler way. I don't claim that my script is better, only that it is simple. The script relies on TFTP, so the transfer is not secure.

What you have to do:
Create a script file on every F5 and place it, for example, in the /var/tmp/ directory. I named the file script_backup.sh.
Change the TFTP_SERVER IP address to your remote server.
Make the file executable: chmod 755 ./script_backup.sh
Add a line to the crontab to run the script at the interval you want.
Edit the crontab: crontab -e
Add a line like this one, which runs the script at 00:30 every Saturday. It is only an example; change the time to whenever you want the script to start: 30 0 * * 6 /var/tmp/script_backup.sh

That's all. I hope you enjoy this script. I also wonder why F5 doesn't have a native mechanism for automatic backup to a remote server. It's one of the most basic functions in other systems.

Code:

TFTP_SERVER=10.0.0.0
DATETIME="`date +%Y%m%d%H%M`"
OUT_DIR='/var/tmp'
FILE_UCS="f5_lan_${HOSTNAME}.ucs"
FILE_SCF="f5_lan_${HOSTNAME}.scf"
FILE_CERT="f5_lan_${HOSTNAME}.cert.tar"

cd ${OUT_DIR}

# Create the UCS archive, the SCF file and a tarball of the SSL directory
tmsh save /sys ucs "${OUT_DIR}/${FILE_UCS}"
tmsh save /sys config file "${OUT_DIR}/${FILE_SCF}" no-passphrase
tar -cf "${OUT_DIR}/${FILE_CERT}" /config/ssl

# Upload the three files over TFTP (unencrypted)
tftp $TFTP_SERVER <<-END 1>&2
mode binary
put ${FILE_UCS}
put ${FILE_SCF}
put ${FILE_CERT}
quit
END
# Keep the exit status of the tftp client before cleaning up
RTN_CODE=$?

# Remove the local copies
rm -f "${FILE_UCS}"
rm -f "${FILE_SCF}"
rm -f "${FILE_CERT}"
rm -f "${FILE_SCF}.tar"

exit $RTN_CODE

BIG-IP Upgrade Procedure Using CLI (vCMP Guest & Host)

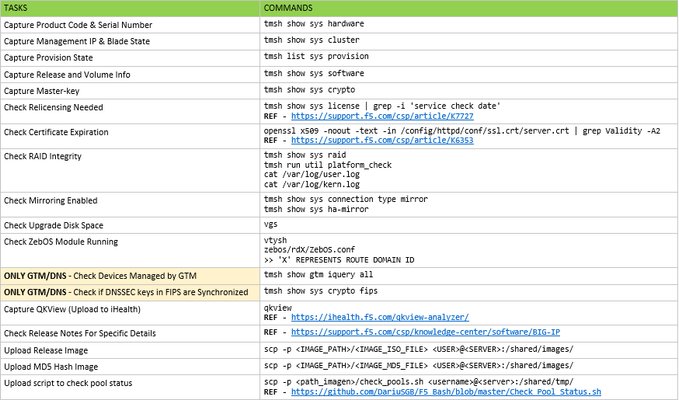

Problem this snippet solves:

This article describes an upgrade procedure performed using only CLI commands. The idea is not to replace the official procedure, but to offer a different approach for those who love the CLI and want to execute an upgrade using only commands (without GUI access).

The procedure is separated into 4 sections:
Data Collection & Planning - to be executed some days before the upgrade.
Pre-Upgrade Tasks - to be executed just before the upgrade (applies to all devices in the cluster).
Upgrade Tasks - applies to one device in the cluster at a time (normally the standby device).
Post-Upgrade Tasks - to be executed just after the upgrade (applies to all devices in the cluster).

This procedure is valid for most BIG-IP set-ups:
Standalone & clusters
vCMP Host & vCMP Guests
GTM/DNS Synchronization Groups

Anything that helps to fix mistakes is great, so your comments are welcome.

OFFICIAL REFERENCES:
Release Notes - https://support.f5.com/csp/knowledge-center/software/BIG-IP
General Upgrade Procedure - https://support.f5.com/csp/article/K84554955
GTM/DNS Upgrades - https://support.f5.com/csp/article/K11661449
VCMP Host Upgrades - https://support.f5.com/csp/article/K15930#p17
HW Life-Cycle - https://support.f5.com/csp/article/K4309
SW Life-Cycle - https://support.f5.com/csp/article/K5903
HW-SW Compatibility - https://support.f5.com/csp/article/K9476
Upgrade Path - https://support.f5.com/csp/article/K13845

How to use this snippet:
>> DATA COLLECTION & PLANNING (ALL CLUSTER DEVICES)
>> PRE-UPGRADE TASKS (ALL CLUSTER DEVICES)
>> UPGRADE TASKS (ONE DEVICE AT A TIME)
>> POST-UPGRADE TASKS (ALL CLUSTER DEVICES)

Code:

######################################################
## DATA COLLECTION & PLANNING (ALL CLUSTER DEVICES) ##
######################################################

## Capture Product Code & Serial Number
tmsh show sys hardware

## Capture Management IP & Blade State
tmsh show sys cluster

## Capture Provision State
tmsh list sys provision

## Capture Release and Volume Info
tmsh show sys software

## Capture Master-key
tmsh show sys crypto

## Check Relicensing Needed
tmsh show sys license | grep -i 'service check date'
REF - https://support.f5.com/csp/article/K7727

## Check Certificate Expiration
openssl x509 -noout -text -in /config/httpd/conf/ssl.crt/server.crt | grep Validity -A2
REF - https://support.f5.com/csp/article/K6353

## Check RAID Integrity
tmsh show sys raid
tmsh run util platform_check
cat /var/log/user.log
cat /var/log/kern.log

## Check Mirroring Enabled
tmsh show sys connection type mirror
tmsh show sys ha-mirror

## Check Upgrade Disk Space (At least 20Gb)
vgs

## Check ZebOS Module Running
vtysh zebos/rdX/ZebOS.conf >> 'X' REPRESENTS ROUTE DOMAIN ID

## ONLY GTM/DNS - Check Devices Managed by GTM
tmsh show gtm iquery all

## ONLY GTM/DNS - Check if DNSSEC keys in FIPS are Synchronized
tmsh show sys crypto fips

## Capture QKView (Upload to iHealth)
qkview
REF - https://ihealth.f5.com/qkview-analyzer/

## Check Release Notes For Specific Details
REF - https://support.f5.com/csp/knowledge-center/software/BIG-IP

## Upload Release Image
scp -p / @ :/shared/images/

## Upload MD5 Hash Image
scp -p / @ :/shared/images/

## Upload Script to Check Pool Status
scp -p /Check_Pool_Status.sh @ :/shared/tmp/
REF - https://github.com/DariuSGB/F5_Bash/blob/master/Check_Pool_Status.sh

#############################################
## PRE-UPGRADE TASKS (ALL CLUSTER DEVICES) ##
#############################################

## Disable Virtual Server Mirroring
REF - https://support.f5.com/csp/article/K13478

## Disable Config Auto-Sync (if enabled)
tmsh modify cm device-group auto-sync disabled

## ONLY GTM/DNS - Disable GSLB/ZoneRunner Synchronization
tmsh modify gtm global-settings general { synchronization no synchronize-zone-files no auto-discovery no }

## Save Running Config
tmsh save sys config

## Check HA Cluster Synchronization
tmsh show cm sync-status
tmsh run cm config-sync to-group

## Check Release Image Integrity
cd /shared/images/
md5sum -c

## Create Initial UCS (Backup)
tmsh save sys ucs /shared/tmp/$(date '+%Y%m%d')_initial.ucs

## Capture Initial Config
tmsh save sys config file /shared/tmp/$(date '+%Y%m%d')_initial.scf no-passphrase

## Capture Initial Pool Status
/shared/tmp/Check_Pool_Status.sh > /shared/tmp/$(date '+%Y%m%d')_initial_pools_output.txt

## Check No Upgrade Process Running
tmsh show sys software status

## OPTIONAL - Get More Free Disk Space (At least 20Gb)
tmsh delete sys software volume
vgs

##########################################
## UPGRADE TASKS (ONE DEVICE AT A TIME) ##
##########################################

## Restart AOM to Prevent Licensing Problems (iSeries)
ipmiutil reset -k
REF - https://support.f5.com/csp/article/K00415052

## ONLY VCMP HOST - Check That All Guests Are In Standby
tmsh show vcmp guest
>> ACCESS INDIVIDUALLY TO EACH GUEST
tmsh show cm sync-status

## ONLY VCMP HOST - Deprovision All Guests (Configured)
tmsh show vcmp guest
>> EXECUTE FOR EACH GUEST
tmsh modify vcmp guest state configured
tmsh save sys config

## Re-licensing Device
>> BIG-IP WITH INTERNET ACCESS
tmsh install sys license registration-key add-on-keys { }
REF - https://support.f5.com/csp/article/K15055
>> BIG-IP WITHOUT INTERNET ACCESS
cp /config/bigip.license /config/bigip.license.backup
get_dossier -b -a
** ACCESS LICENSE ACTIVATION
https://activate.f5.com/license/dossier.jsp
** PASTE LICENSE FILE (ENTER 'CTRL+D' AFTER PASTING)
cat > /config/bigip.license
reloadlic
REF - https://support.f5.com/csp/article/K2595

## Force Offline Mode
tmsh run sys failover offline

## Verify Configuration Integrity
tmsh load sys config verify

## Install Image
tmsh install sys software image create-volume volume

## Check Installation State
tmsh show sys software status
cat /var/log/liveinstall.log

## OPTIONAL - Copy Configuration To New Volume
## (Only if you have made changes since installation)
clsh --slot=X,Y cpcfg >> FROM VIPRION
cpcfg >> FROM NOT VIPRION

## Boot On New Volume
tmsh reboot volume

## ONLY VCMP GUEST - Check Boot Up Status
>> FROM VCMP HOST
vconsole

## Check Logs (LTM, APM, ASM,...)
REF - https://support.f5.com/csp/article/K16197

## Capture Final Config
tmsh save sys config file /shared/tmp/$(date '+%Y%m%d')_final.scf no-passphrase

## Compare Initial-Final Config
tmsh show sys config-diff /shared/tmp/$(date '+%Y%m%d')_initial.scf /shared/tmp/$(date '+%Y%m%d')_final.scf | egrep -e "\s{3}\|\s{3}" -e "[<]$" -e "^\s*[>]"

## Disable Force Offline
tmsh run sys failover online

## ONLY GTM/DNS - Enable Metrics Collection
tmsh start sys service big3d

## Capture Final Pool Status
/shared/tmp/Check_Pool_Status.sh > /shared/tmp/$(date '+%Y%m%d')_final_pools_output.txt

## Compare Initial-Final Pool Status
diff /shared/tmp/$(date '+%Y%m%d')_initial_pools_output.txt /shared/tmp/$(date '+%Y%m%d')_final_pools_output.txt

## ONLY VCMP HOST - Deploy All Guests (Deployed)
tmsh show vcmp guest
tmsh modify vcmp guest state deployed

## FROM ACTIVE NODE - Check Current Connections
tmsh show sys traffic raw

## FROM ACTIVE NODE - Force Failover Event
tmsh run sys failover standby

## Check CPU/Memory status
tmsh show sys cpu
tmsh show sys memory

## Check Current Connections
tmsh show sys traffic raw

## Perform Other Custom Tests Here
...

##############################################
## POST-UPGRADE TASKS (ALL CLUSTER DEVICES) ##
##############################################

## OPTIONAL - Install Big3d daemon in all managed members
## (Only necessary if you upgrade GTM/DNS before its members)
big3d_install
REF - https://support.f5.com/csp/article/K11661449#update-big3d

## ONLY GTM/DNS - Enable GSLB/ZoneRunner Synchronization
tmsh modify gtm global-settings general { synchronization yes synchronize-zone-files yes auto-discovery yes }

## Re-enable Virtual Server Mirroring
REF - https://support.f5.com/csp/article/K13478

## Synchronize HA Cluster
tmsh show cm sync-status
tmsh run cm config-sync force-full-load-push to-group

## Re-enable Config Auto-Sync (if enabled)
tmsh modify cm device-group auto-sync enabled

## Save running config
tmsh save sys config

## Create Final UCS (Backup)
tmsh save sys ucs /shared/tmp/$(date '+%Y%m%d')_final.ucs

## Delete Unused Images
tmsh delete sys software image

## Delete Unused Volumes (Mandatory reboot)
tmsh delete sys software volume

Tested this on version: 12.1

Convert curl command to BIG-IP Monitor Send String

Problem this snippet solves:

Convert curl commands into an HTTP or HTTPS monitor Send String.

How to use this snippet:

Save this code into a Python script and run it as a replacement for curl.

Related Article: How to create custom HTTP monitors with Postman, curl, and Python

An example would be:

% python curl_to_send_string.py -X POST -H "Host: api.example.com" -H "User-Agent: Custom BIG-IP Monitor" -H "Accept-Encoding: identity" -H "Connection: Close" -H "Content-Type: application/json" -d '{
 "hello":
 "world"
}
' "http://10.1.10.135:8080/post?show_env=1"
SEND STRING:
POST /post?show_env=1 HTTP/1.1\r\nHost: api.example.com\r\nUser-Agent: Custom BIG-IP Monitor\r\nAccept-Encoding: identity\r\nConnection: Close\r\nContent-Type: application/json\r\n\r\n{\n \"hello\":\n \"world\"\n}\n

Code :

Python 2.x

import getopt
import sys
import urllib

optlist, args = getopt.getopt(sys.argv[1:], 'X:H:d:')
flat_optlist = dict(optlist)

method = flat_optlist.get('-X', 'GET')
(host, uri) = urllib.splithost(urllib.splittype(args[0])[1])
protocol = 'HTTP/1.1'

headers = ["%s %s %s" % (method, uri, protocol)]
headers.extend([h[1] for h in optlist if h[0] == '-H'])
# add a Host header only if none was supplied with -H
if not filter(lambda x: 'host:' in x.lower(), headers):
    headers.insert(1, 'Host: %s' % (host))

send_string = "\\r\\n".join(headers)
send_string += "\\r\\n\\r\\n"
if '-d' in flat_optlist:
    send_string += flat_optlist['-d'].replace('\n', '\\n')
send_string = send_string.replace("\"", "\\\"")

print "SEND STRING:"
print send_string

Python 3.x

import getopt
import sys
from urllib.parse import urlsplit

optlist, args = getopt.getopt(sys.argv[1:], 'X:H:d:')
flat_optlist = dict(optlist)

method = flat_optlist.get('-X', 'GET')
parts = urlsplit(args[0])
# netloc keeps the port, matching what splithost() returns in the Python 2 version
host = parts.netloc
# keep the query string so the request line matches the example above
uri = parts.path or '/'
if parts.query:
    uri += '?' + parts.query
protocol = 'HTTP/1.1'

headers = ["%s %s %s" % (method, uri, protocol)]
headers.extend([h[1] for h in optlist if h[0] == '-H'])
# any() instead of filter(): in Python 3 a filter object is always truthy,
# so the Host header would never be added
if not any('host:' in h.lower() for h in headers):
    headers.insert(1, 'Host: %s' % (host))

send_string = "\\r\\n".join(headers)
send_string += "\\r\\n\\r\\n"
if '-d' in flat_optlist:
    send_string += flat_optlist['-d'].replace('\n', '\\n')
send_string = send_string.replace("\"", "\\\"")

print("SEND STRING:")
print(send_string)

Tested this on version: 11.5

F5 Backup procedure over SCP using iCall

Problem this snippet solves:

Purpose: Consider this procedure if you want to transfer BIG-IP backups to a remote SCP server on a fixed schedule without entering a password for each transfer.

Prerequisites:
* You have administrator access to the BIG-IP Configuration utility and command line.
* You have a user account on the SCP server with file transfer privileges.

Description

BIG-IP can transfer files to a remote SCP server. Secure Copy (SCP) is the preferred means of transferring files to or from an F5 device. SCP securely transfers files between hosts using the Secure Shell (SSH) protocol for authentication and encryption. Unlike FTP, SCP provides an option to preserve the original date stamp on the file during file transfers. You can use SCP to transfer files between an F5 device and a remote host using either command line SCP or Windows-based SCP.

We can automate authentication by exporting the public key from BIG-IP to the SCP server. The SCP server then trusts the BIG-IP and no longer prompts for a password each time we transfer files from BIG-IP to the SCP server. This requires a user account on the SCP server with file transfer privileges. In this document, we use a Linux-based SCP server. Once file transfer over SCP works, we can prepare the iCall script (shown further down) and schedule it to run at the required interval.

Login to SCP Server

1. Create a user account with permission to accept files from a remote location. We will use f5_user as the user account; you can also use the root account (the default account on every Linux system).
2. It is good to have an organized directory structure to receive the F5 backup. We will create 2 directories on our SCP server, as follows.
/F5Backup is the directory that receives the F5 backup at the configured frequency (weekly, monthly, yearly, etc.).
/authorized_keys is a temporary directory to which we will send the public key from F5.
3. To create a directory on any Linux machine, use the following command:
mkdir /root/…path
E.g.
mkdir /home/f5_user/tmp/F5Backup/
4. Create another directory to receive F5's public RSA key.
mkdir /home/f5_user/tmp/authorized_keys

Login to BIG-IP CLI

Log in to the F5 CLI and generate an RSA key by executing the following.
ssh-keygen -t rsa
The RSA key authenticates BIG-IP when it communicates with the SCP server. The command asks for a file name and a passphrase; accept the defaults by pressing ENTER at each prompt. It generates the key pair under /root/.ssh/ (private key id_rsa, public key id_rsa.pub).

Verify the generated key by executing the following command.
cat /root/.ssh/id_rsa.pub
This should show the public key generated by the command above.

Send this public key to your Linux SCP server. (We will use the scp command to transfer the file from F5 to the Linux server.)
scp id_rsa.pub f5_user@10.1.20.222:/tmp/authorized_keys
It will prompt for a password; enter the password of f5_user. If you are using a different account with file transfer permission, replace f5_user with that account and keep the rest of the command as it is.

Back to SCP Server

Check that the public key sent from F5 was received under /tmp/authorized_keys.
cat /f5_user/tmp/authorized_keys
Note: if you used an account other than "f5_user", replace "f5_user" with the username you are using.

Copy the key to the right location in order to authorize SCP connections from F5.
cat /f5_user/tmp/mykey >> /f5_user/.ssh/authorized_keys
If the ".ssh" directory does not exist, create it with mkdir as shown earlier in the document. "authorized_keys" itself is a file, and the redirection above creates it if needed.

Verify that the key was added to /f5_user/.ssh/authorized_keys:
cat /f5_user/.ssh/authorized_keys

Once the key is in the right location, test the connectivity from F5 to the SCP server.

Switch back to F5

From F5's CLI:
scp filetest f5_user@10.1.60.240:/home/f5_user/tmp/
filetest 100% 5 0.0KB/s 00:00
Notice that this time it does not ask for a password. If it still prompts, the RSA key is not in the right place. The goal is to have the public key in the .ssh/authorized_keys file under the user's home directory.

Once connectivity is confirmed, we can send F5's UCS file over SCP to the remote server by the same method. The following script generates the BIG-IP backup and sends it to the remote server.

On the F5 CLI, create a script with the command "tmsh create sys icall script <script name>", then edit it with vi and insert the content below.

How to use this snippet:

sys icall script auto_backup {
    app-service none
    definition {
        #Delete backup files
        exec rm -f /shared/tmp/*.ucs
        #Set Current Date/Time for Filename
        set cdate [clock format [clock seconds] -format "%Y%m%d"]
        #Set source repository
        set localpath "/var/local/ucs/"
        #Set destination repository
        set destinationpath "/home/teste/f5_backups"
        #Set remote host
        set host "10.1.20.222"
        #Set remote user
        set user "f5_user"
        #Set device hostname
        set hostname [exec uname -n | cut -d "." -f1]
        #Change to source repository
        cd $localpath
        #Delete files created more than 45 days ago
        catch { exec find "/var/local/ucs/" -type f -mtime +45 | grep -v .conf | xargs rm -f {} ; }
        #Delete UCS file if it exists
        catch { tmsh::delete sys ucs $hostname }
        #Export UCS
        tmsh::save sys ucs $hostname
        #Set temporary path
        set tmpdir "/shared/tmp/"
        append filename $hostname "_" $cdate
        #Copy UCS to temporary path
        exec cp $localpath$hostname.ucs $tmpdir$filename.ucs
        #Set Remote path
        append destination $user "@" $host ":" $destinationpath
        #Set source path
        append source $tmpdir $hostname "_" $cdate ".ucs"
        #Send the files via SCP. Prerequisite: The public key of BIG-IP must be registered in the file "authorized_keys" of the remote server
        if { [catch { exec scp -c aes128-ctr $source $destination > /dev/null 2> aux }] } {
            exec logger -p local0.info "Backup upload failed."
        } else {
            exec logger -p local0.info "The backup has been successfully sent to $destination."
        }
        exec rm -f aux
    }
    description none
    events none
}

Create the iCall Handler

I run the backup once a day; the interval can be adjusted as needed. In this example, I set the first-occurrence, the interval (once a day, in seconds), and the script to call:

sys icall handler periodic auto_backup {
    first-occurrence 2019-03-27:05:01:00
    interval 86400
    script auto_backup
}

I know there are other, much more sophisticated scripts available in the community; the idea here is just to share a simple, functional model. Adapt it as needed to fit your environment.

Tested this on version: 12.1

Performance Logging iRule (Rule_http_log)

Problem this snippet solves:

Here's a logging iRule. You'll need an HSL syslog pool to log to. Various bits were gathered from other posts on DevCentral. Sharing in case there is interest.

Make sure your rsyslogd is set up to use the newer syslog format (RFC 5424), including milliseconds and timezone info. The log includes the client country (co) and logs individual request times for each request on an HTTP/1.1 connection.

To configure F5 logging to use milliseconds and timezone, disable logging in the GUI and use tmsh edit sys syslog with something like:

include "
# short hostnames
options { use_fqdn(no); };
# Remote syslog in RFC5424 - Tim Riker <Tim@Rikers.org>
destination remotesyslog {
    syslog(\"10.1.2.3\" transport(\"udp\") port(51443) ts_format(iso));
};
log {
    source(s_syslog_pipe);
    destination(remotesyslog);
};
"

Uses upvar and proc. Tested on 11.6 - 15.1.

This tracks connection info in a table and then copies that down to the per-request log() array to handle reporting on HTTP/2. This version works around a BIG-IP bug where HTTP::version does not report 2 or higher for HTTP/2 and later requests.

With http2 profiles, subsequent requests using the same connection can generate this error in the logs if HTTP::respond, HTTP::redirect, or HTTP::retry is called from an earlier iRule. Reorder your iRules to avoid this.

<HTTP_REQUEST> - No HTTP header is cached - ERR_NOT_SUPPORTED (line 1)
invoked from within "HTTP::method"

How to use this snippet:

Add this iRule to whatever virtual servers you desire. I always add it as the first rule. If you have a rule that sets headers you want to track, you may want this after the rule that sets headers.

Interesting Splunk queries can be created like:

index=* perflog | timechart avg(cpu_5sec) by host limit=10
to show load across multiple F5s.

index=* perflog | timechart max(upstream_time) by http_host limit=10
to show long request times by http_host.

Any other iRule may add things to the log() array, and those will get added to the single HSL output.
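The single HSL output line is an RFC 5424 style header followed by space-separated key=value pairs, where a value is wrapped in double quotes if it contains a space, quote, semicolon, comma, or colon (see the hsllog proc in the rule below). For readers who want to post-process these lines outside Splunk, here is a minimal Python sketch of a parser. The function name parse_httplog and the sample line are hypothetical, and the layout is assumed from the proc, not taken from captured output.

```python
import re

# key=value or key="quoted value"; hsllog replaces embedded quotes with "|",
# so a quoted value never contains a double quote.
_PAIR = re.compile(r'([^\s=]+)=(?:"([^"]*)"|(\S*))')


def parse_httplog(line):
    """Split one log line into a dict of its key=value fields."""
    # Everything after the " - - " (procid/msgid placeholders) is the field list.
    _header, _sep, body = line.partition(" - - ")
    fields = {}
    for key, quoted, bare in _PAIR.findall(body):
        fields[key] = quoted if quoted else bare
    return fields


# Hypothetical sample line in the assumed layout.
sample = (
    '<134>1 2020-01-01T00:00:00.123+00:00 bigip1 httplog 0.1 - - '
    'http_host=www.example.com http_status=200 '
    'req-user-agent="curl/7.0 (test)" upstream_time=12'
)
print(parse_httplog(sample)["req-user-agent"])
```

All values come back as strings; numeric fields such as upstream_time would need an explicit int() before comparison.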
If you create a dg_http_log datagroup, that will be used to filter what gets logged.

Tested on version: 13.0 - 15.1

# Rule_http_log
# http logging - Tim Riker <Tim@Rikers.org>
# bits taken from this post:
# https://devcentral.f5.com/questions/irule-for-getting-total-response-time-server-response-time-and-server-connection-time

# iRule performance tracking
# https://devcentral.f5.com/questions/Timing-iRules
timing on
# timing is on by default in 11.5.0+ to see stats:
# tmsh show ltm rule Rule_http_log
#
# if the dg_http_log datagroup exists then vips or hosts/paths in dg_http_log that start with
# "NONE" no logging (really anything other than empty)
# "INFO" normal logging
# "FINE" full request and response headers and CLIENT_CLOSED
#
# upstream_time := 15000 in the datagroup to log all requests over 15 seconds
#
# example:
# "/Common/vs_www.example.com_HTTPS" := "FINE" - logged including CLIENT_CLOSED
# "www.example.com/" := "INFO" - logged
# "www.example.com/somepath" := "FINE" - full headers
# "www.example.com/otherpath" := "NONE" - not logged

when RULE_INIT {
    # hostname up to first dot
    set static::hostname [getfield [info hostname] "." 1]
}

# not calling /Common/proc:hsllog as this logs when the request occurred
# instead of the time it calls hsllog at the end of the request
proc hsllog {time mylog} {
    upvar 1 $mylog log
    # https://tools.ietf.org/html/rfc5424 <local0.info>version rfc-3339time host procid msgid structured_data log
    # should be able to use a "Z" here instead of "+00:00" but our splunk logs don't handle that
    # 134 = local0.info
    set output "<134>1 [clock format [string range $time 0 end-3] -gmt 1 -format %Y-%m-%dT%H:%M:%S.[string range $time end-2 end]+00:00] ${static::hostname} httplog [TMM::cmp_group].[TMM::cmp_unit] - -"
    foreach key [lsort [array names log]] {
        if { ($log($key) matches_regex {[\" ;,:]}) } {
            append output " $key=\"[string map {\" "|"} $log($key)]\""
        } else {
            append output " $key=$log($key)"
        }
    }
    # avoid marking virtual server up when hsl pool is up
    # https://support.f5.com/csp/article/K14505
    set hsl pool_syslog
    HSL::send [HSL::open -proto UDP -pool $hsl] $output
}

when CLIENT_ACCEPTED {
    # calculate and track milliseconds
    # is this / 1000 guaranteed to be clock seconds? TCL docs say no, but it looks like on f5 it is.
    set tcp_start_time [clock clicks -milliseconds]
    set log(loglevel) 0
    if { [class exists dg_http_log] } {
        # virtual name entries need to be full path, ie: /Common/vs_www.example.com_HTTP
        switch -- [string range [class match -value -- [virtual name] equals dg_http_log] 0 3] {
            "FINE" { set log(loglevel) 2 }
            "INFO" { set log(loglevel) 1 }
            default { set log(loglevel) 0 }
        }
    }
    table set -subtable [IP::client_addr]:[TCP::client_port] loglevel $log(loglevel)
    table set -subtable [IP::client_addr]:[TCP::client_port] tmm "[TMM::cmp_group].[TMM::cmp_unit]"
    table set -subtable [IP::client_addr]:[TCP::client_port] client_addr [IP::client_addr]
    table set -subtable [IP::client_addr]:[TCP::client_port] client_port [TCP::client_port]
    table set -subtable [IP::client_addr]:[TCP::client_port] cpu_5sec [cpu usage 5secs]
    table set -subtable [IP::client_addr]:[TCP::client_port] virtual_name [virtual name]
    set co [whereis [IP::client_addr] country]
    if { $co eq "" } {
        set co unknown
    }
    table set -subtable [IP::client_addr]:[TCP::client_port] co $co
}

when HTTP_REQUEST {
    set http_request_time [clock clicks -milliseconds]
    set keys [table keys -subtable [IP::client_addr]:[TCP::client_port]]
    foreach key $keys {
        set log($key) "[table lookup -subtable "[IP::client_addr]:[TCP::client_port]" "$key"]"
    }
    if {[HTTP::has_responded]} {
        # The rule should come BEFORE any rules that do things like redirects
        set log(http_has_responded) [HTTP::has_responded]
        set log(loglevel) 1
        set log(event) HTTP_REQUEST
        call hsllog $http_request_time log
        return
    }
    if { [class exists dg_http_log] } {
        set logsetting [class match -value -- [HTTP::host][HTTP::uri] starts_with dg_http_log]
        if { $logsetting ne "" } {
            # override log(loglevel) if we found something
            switch -- [string range $logsetting 0 3] {
                "FINE" { set log(loglevel) 2 }
                "INFO" { set log(loglevel) 1 }
                default { set log(loglevel) 0 }
            }
        }
    }
    set log(http_host) [HTTP::host]
    set log(http_uri) [HTTP::uri]
    set log(http_method) [HTTP::method]
    # request_num might not be accurate for HTTP2
    set log(request_num) [HTTP::request_num]
    set log(request_size) [string length [HTTP::request]]
    # BUG http2 reported as http1 in pre 16.x
    # https://cdn.f5.com/product/bugtracker/ID842053.html
    set log(http_version) [HTTP::version]
    if { [catch \[HTTP2::version\] result] == 1 } {
        if { $result contains "Operation not supported" } {
            #log local0. "HTTP version is: [HTTP::version]"
        } else {
            set h2ver [eval "\HTTP2::version"]
            # we might have http2 support, but not be http2
            if { $h2ver != 0 } {
                set log(http_version) $h2ver
            }
        }
    }
    #log local0. "http_version = $log(http_version)"
    if { $log(loglevel) > 1 } {
        foreach {header} [HTTP::header names] {
            set log(req-$header) [HTTP::header $header]
        }
    } else {
        foreach {header} {"connection" "content-length" "keep-alive" "last-modified" "policy-cn" "referer" "transfer-encoding" "user-agent" "x-forwarded-for" "x-forwarded-proto" "x-forwarded-scheme"} {
            if { [HTTP::header exists $header] } {
                set log(req-$header) [HTTP::header $header]
            }
        }
    }
}

when LB_SELECTED {
    set lb_selected_time [clock clicks -milliseconds]
    set log(server_addr) [LB::server addr]
    set log(server_port) [LB::server port]
    set log(pool) [LB::server pool]
}

when SERVER_CONNECTED {
    set log(connection_time) [expr {[clock clicks -milliseconds] - $lb_selected_time}]
    set log(snat_addr) [IP::local_addr]
    set log(snat_port) [TCP::local_port]
}

when LB_FAILED {
    set log(event_info) [event info]
}

when HTTP_REJECT {
    set log(http_reject) [HTTP::reject_reason]
}

when HTTP_REQUEST_SEND {
    set http_request_send_time [clock clicks -milliseconds]
}

when HTTP_RESPONSE {
    set log(upstream_time) [expr {[clock clicks -milliseconds] - $http_request_send_time}]
    set log(http_status) [HTTP::status]
    if { $log(loglevel) > 1 } {
        foreach {header} [HTTP::header names] {
            set log(res-$header) [HTTP::header $header]
        }
    } else {
        foreach {header} {"cache-control" "connection" "content-encoding" "content-length" "content-type" "content-security-policy" "keep-alive" "last-modified" "location" "server" "www-authenticate"} {
            if { [HTTP::header exists $header] } {
                set log(res-$header) [HTTP::header $header]
            }
        }
    }
    # if logging is off, but upstream_time is over threshold in datagroup, log anyway
    if { ($log(loglevel) < 1) && [class exists dg_http_log] } {
        set log_upstream_time [class match -value -- upstream_time equals dg_http_log]
        if {$log_upstream_time ne "" && $log(upstream_time) >= $log_upstream_time} {
            set log(over_upstream_time) $log_upstream_time
            set log(loglevel) 1
        }
    }
}

when HTTP_RESPONSE_RELEASE {
    if { [info exists http_request_time] } {
        set log(http_time) "[expr {[clock clicks -milliseconds] - $http_request_time}]"
        # push http_time into table so CLIENT_CLOSED can see it in HTTP/2
        table set -subtable [IP::client_addr]:[TCP::client_port] http_time $log(http_time)
    } else {
        set http_request_time [clock clicks -milliseconds]
    }
    set log(event) HTTP_RESPONSE_RELEASE
    if { $log(loglevel) > 0 } {
        call hsllog $http_request_time log
    }
}

when HTTP_DISABLED {
    set log(http_passthrough_reason) [HTTP::passthrough_reason]
}

when CLIENT_CLOSED {
    # grab log() values from table
    set keys [table keys -subtable [IP::client_addr]:[TCP::client_port]]
    foreach key $keys {
        set log($key) "[table lookup -subtable "[IP::client_addr]:[TCP::client_port]" "$key"]"
    }
    set log(tcp_time) "[expr {[clock clicks -milliseconds] - $tcp_start_time}]"
    set log(event) CLIENT_CLOSED
    # http_time didn't get set, log here (HTTP_RESPONSE_RELEASE never called, catch redirects, aborted connections)
    if { not ([info exists log(http_time)]) } {
        if { [info exists http_request_time] } {
            # called HTTP_REQUEST but not HTTP_RESPONSE_RELEASE using HTTP 1.0 or 1.1
            set log(http_time) "[expr {[clock clicks -milliseconds] - $http_request_time}]"
        }
        call hsllog $tcp_start_time log
    } elseif { $log(loglevel) > 1 } {
        call hsllog $tcp_start_time log
    }
    # clean out table when client disconnects
    table delete -subtable [IP::client_addr]:[TCP::client_port] -all
}

Dynamic FQDN Node DNS Resolution based on URI with Route Domains and Caching iRule

Problem this snippet solves:

Following on from the code share Ephemeral Node FQDN Resolution with Route Domains - DNS Caching iRule that I posted, I have made a few modifications based on further development and on comments/questions in the original share.

On an incoming HTTP request, this iRule dynamically queries a DNS server (out of the appropriate route domain) defined in a pool to determine the IP address of an FQDN ephemeral node, depending on the requested URI. The IP address is cached in a subtable to avoid a DNS query on every HTTP request. The iRule also appends the route domain to the node and replaces the HTTP Host header.

Features of this iRule
* Dynamically resolves FQDN node from requesting URI
* Iterates through other DNS servers in a pool if response is invalid or no response
* Caches response in the session table using custom TTL
* Uses cached result if present (prevents DNS lookup on each HTTP_REQUEST event)
* Appends route domain to the resolved node
* Replaces host header for outgoing request

How to use this snippet:

Add a DNS pool with appropriate health monitors; in this example the pool is called dns_pool.

Add the lookup datagroup, dns_lookup_dg. This defines the parameters of the DNS query. The values in the datagroup are built into an array, using the key in capitals to name each array element, e.g. $myArray(FQDN).

Modify the values as required:
FQDN: the FQDN of the node to load balance to.
DNS-RD: the outbound route domain to reach the DNS servers
NODE-RD: the outbound route domain to reach the node
TTL: TTL value for the DNS cache in seconds
PORT: the port of the node to load balance to

ltm data-group internal dns_lookup_dg {
    records {
        /app1 {
            data "FQDN app1.my-domain.com|DNS-RD %10|NODE-RD %20|TTL 300|PORT 8443"
        }
        /app2 {
            data "FQDN app2.my-other-domain.com|DNS-RD %10|NODE-RD %20|TTL 300|PORT 8080"
        }
        default {
            data "FQDN default.domain.com|DNS-RD %10|NODE-RD %20|TTL 300|PORT 443"
        }
    }
    type string
}

Code :

when CLIENT_ACCEPTED {
    set dnsPool "dns_pool"
    set dnsCache "dns_cache"
    set dnsDG "dns_lookup_dg"
}

when HTTP_REQUEST {
    # set datagroup values in an array
    # (the condition uses class match without -value so it yields a boolean)
    if {[class match [HTTP::uri] "starts_with" $dnsDG]} {
        set dnsValues [split [string trim [class match -value [HTTP::uri] "starts_with" $dnsDG]] "| "]
    } else {
        # set to default if URI is not defined in DG
        set dnsValues [split [string trim [class match -value "default" "equals" $dnsDG]] "| "]
    }
    if {([info exists dnsValues]) && ($dnsValues ne "")} {
        if {[catch {
            array set dnsArray $dnsValues
            set fqdn $dnsArray(FQDN)
            set dnsRd $dnsArray(DNS-RD)
            set nodeRd $dnsArray(NODE-RD)
            set ttl $dnsArray(TTL)
            set port $dnsArray(PORT)
        } catchErr ]} {
            log local0. "failed to set DNS variables - error: $catchErr"
            event disable
            return
        }
    }
    # check if fqdn has been previously resolved and cached in the session table
    if {[table lookup -notouch -subtable $dnsCache $fqdn] eq ""} {
        log local0. "IP is not cached in the subtable - attempting to resolve IP using DNS"
        # initialise loop vars
        set flgResolvError 0
        if {[active_members $dnsPool] > 0} {
            foreach dnsServer [active_members -list $dnsPool] {
                set dnsResolvIp [lindex [RESOLV::lookup @[lindex $dnsServer 0]$dnsRd -a $fqdn] 0]
                # verify result of dns lookup is a valid IP address
                if {[scan $dnsResolvIp {%d.%d.%d.%d} a b c d] == 4} {
                    table set -subtable $dnsCache $fqdn $dnsResolvIp $ttl
                    # clear any error flag and break out of loop
                    set flgResolvError 0
                    set nodeIpRd $dnsResolvIp$nodeRd
                    break
                } else {
                    log local0. "$dnsResolvIp is not a valid IP address, attempting to query other DNS server"
                    set flgResolvError 1
                }
            }
        } else {
            log local0. "ERROR no DNS servers are available"
            event disable
            return
        }
        # log error if query answers are invalid
        if {$flgResolvError} {
            log local0. "ERROR unable to resolve $fqdn"
            unset -nocomplain dnsServer dnsResolvIp
            event disable
            return
        }
    } else {
        # retrieve cached IP from subtable (verification of resolved IP completed before being committed to table)
        set dnsResolvIp [table lookup -notouch -subtable $dnsCache $fqdn]
        set nodeIpRd $dnsResolvIp$nodeRd
        log local0. "IP found in subtable: $dnsResolvIp with TTL: [table timeout -subtable $dnsCache -remaining $fqdn]"
    }
    # rewrite outbound host header and set node IP with RD and port
    HTTP::header replace Host $fqdn
    node $nodeIpRd $port
    log local0. "setting node to $nodeIpRd $port"
}

Tested this on version: 12.1