Power of tmsh commands using Ansible

Why is data important

Having accurate data has become an integral part of decision making. The data could inform simple decisions, like purchasing the newest electronic gadget on the market, or complex ones, like choosing the hardware and/or software platform that works best for a highly demanding application and gives your customers the best user experience. In either case, research and data collection become essential.

Choosing what kind of F5 hardware and/or software to use in your environment follows the same principles: your IT team needs data to make the right decision. That data could be the CPU, throughput, and/or memory utilization of your F5 gear, and it could cover a day, a month, or a year, depending on the application's usage patterns.

Ansible to the rescue

Your environment could have tens, hundreds, or even thousands of F5 BIG-IPs. Manually logging into each one to gather data would be highly inefficient. A simple alternative is to use Ansible as an automation framework to perform this task, freeing you to perform your other job functions. Let's take a look at some of the components needed to use Ansible.

An inventory file in Ansible defines the hosts against which your playbook is going to run. Below is an example of a file defining F5 hosts, which can be expanded to represent your tens, hundreds, or thousands of BIG-IPs.

Inventory file: 'inventory.yml'

    [f5]
    ltm01 password=admin server=10.192.73.xxx user=admin validate_certs=no server_port=443
    ltm02 password=admin server=10.192.73.xxx user=admin validate_certs=no server_port=443
    ltm03 password=admin server=10.192.73.xxx user=admin validate_certs=no server_port=443
    ltm04 password=admin server=10.192.73.xxx user=admin validate_certs=no server_port=443
    ltm05 password=admin server=10.192.73.xxx user=admin validate_certs=no server_port=443

A playbook defines the tasks that are going to be executed.
In this playbook we use the bigip_command module, which takes any BIG-IP tmsh command as input and returns its output. Here we use tmsh commands to gather performance data from the BIG-IPs. The output from each BIG-IP is stored in a file that can be referenced after the playbook finishes execution.

Playbook: 'performance_data.yml'

    ---
    # Play 1: create the raw output file on the Ansible host
    - name: Create empty file
      hosts: localhost
      gather_facts: false

      tasks:
        - name: Creating an empty file
          file:
            path: "./{{filename}}"
            state: touch

    # Play 2: run the tmsh commands on one BIG-IP at a time and append the results
    - name: Gather stats using tmsh command
      hosts: f5
      connection: local
      gather_facts: false
      serial: 1

      tasks:
        - name: Gather performance stats
          bigip_command:
            provider:
              server: "{{server}}"
              user: "{{user}}"
              password: "{{password}}"
              server_port: "{{server_port}}"
              validate_certs: "{{validate_certs}}"
            commands:
              - show sys performance throughput historical
              - show sys performance system historical
          register: result

        - lineinfile:
            line: "\n###BIG-IP hostname => {{ inventory_hostname }} ###\n"
            insertafter: EOF
            dest: "./{{filename}}"

        - lineinfile:
            line: "{{ result.stdout_lines }}"
            insertafter: EOF
            dest: "./{{filename}}"

        # Put each list element on its own line for readability
        - name: Format the file
          shell:
            cmd: sed 's/,/\n/g' ./{{filename}} > ./{{filename}}_formatted

        - pause:
            seconds: 10

    # Play 3: remove the raw file, keeping only the formatted copy
    - name: Delete file
      hosts: localhost
      gather_facts: false

      tasks:
        - name: Delete extra file created (delete file)
          file:
            path: ./{{filename}}
            state: absent

Execution:

The execution command takes as input the playbook name, the inventory file, and the filename where the output will be stored.
(There are different ways of defining and passing parameters to a playbook; below is one such example.)

    ansible-playbook performance_data.yml -i inventory.yml --extra-vars "filename=perf_output"

Snippet of expected output:

    ###BIG-IP hostname => ltm01 ###
    [['Sys::Performance Throughput'
     '-----------------------------------------------------------------------'
     'Throughput(bits)(bits/sec)   Current    3 hrs   24 hrs   7 days  30 days'
     '-----------------------------------------------------------------------'
     'Service                       223.8K   258.8K   279.2K   297.4K   112.5K'
     'In                            212.1K   209.7K   210.5K   243.6K    89.5K'
     'Out                            21.4K    21.0K    21.1K    57.4K    30.1K'
     ''
     '-----------------------------------------------------------------------'
     'SSL Transactions             Current    3 hrs   24 hrs   7 days  30 days'
     '-----------------------------------------------------------------------'
     'SSL TPS                            0        0        0        0        0'
     ''
     '-----------------------------------------------------------------------'
     'Throughput(packets)(pkts/sec) Current   3 hrs   24 hrs   7 days  30 days'
     '-----------------------------------------------------------------------'
     'Service                           79       82       83       63       62'
     'In                                41       40       40       34       32'
     'Out                               41       40       40       32       34']
     ['Sys::Performance System'
     '------------------------------------------------------------'
     'System CPU Usage(%)  Current   3 hrs  24 hrs  7 days 30 days'
     '------------------------------------------------------------'
     'Utilization               17      18      18      18      17'
     ''
     '------------------------------------------------------------'
     'Memory Used(%)       Current   3 hrs  24 hrs  7 days 30 days'
     '------------------------------------------------------------'
     'TMM Memory Used           10      10      10      10      10'
     'Other Memory Used         55      55      54      54      53'
     'Swap Used                  0       0       0       0       0']]

    ###BIG-IP hostname => ltm02 ###
    [['Sys::Performance Throughput'
     '-----------------------------------------------------------------------'
     'Throughput(bits)(bits/sec)   Current    3 hrs   24 hrs   7 days  30 days'
     '-----------------------------------------------------------------------'
     'Service                       202.3K   258.7K   279.2K   297.4K   112.5K'
     'In                            190.8K   209.7K   210.5K   243.6K    89.5K'
     'Out                            19.6K    21.0K    21.1K    57.4K    30.1K'
     ''
     '-----------------------------------------------------------------------'
     'SSL Transactions             Current    3 hrs   24 hrs   7 days  30 days'
     '-----------------------------------------------------------------------'
     'SSL TPS                            0        0        0        0        0'
     ''
     '-----------------------------------------------------------------------'
     'Throughput(packets)(pkts/sec) Current   3 hrs   24 hrs   7 days  30 days'
     '-----------------------------------------------------------------------'
     'Service                           77       82       83       63       62'
     'In                                39       40       40       34       32'
     'Out                               37       40       40       32       34']
     ['Sys::Performance System'
     '------------------------------------------------------------'
     'System CPU Usage(%)  Current   3 hrs  24 hrs  7 days 30 days'
     '------------------------------------------------------------'
     'Utilization               21      18      18      18      17'
     ''
     '------------------------------------------------------------'
     'Memory Used(%)       Current   3 hrs  24 hrs  7 days 30 days'
     '------------------------------------------------------------'
     'TMM Memory Used           10      10      10      10      10'
     'Other Memory Used         55      55      54      54      53'
     'Swap Used                  0       0       0       0       0']]

The data obtained is historical data over a period of time. Sometimes it is also important to gather the peak usage of throughput/memory/CPU over time, not just the average. Stay tuned: we will discuss how to obtain that information in an upcoming article.

Conclusion

Use the output data to learn the traffic patterns and propose the most appropriate BIG-IP hardware/software for your environment. The data could be collected directly in your production environment or in a staging environment, and it helps you decide which purchasing strategy gives you the most value from your BIG-IPs.

For reference: https://www.f5.com/pdf/products/big-ip-local-traffic-manager-ds.pdf

The above is one example of how you can get started with using Ansible and tmsh commands. Using this method you can potentially achieve close to 100% automation on the BIG-IP.

Infrastructure as Code: Automating F5 Distributed Cloud CEs with Ansible

Introduction

Welcome to the first installment of our Infrastructure as Code (IaC) series, focusing on F5 products and Ansible. This series has been a long-standing desire of mine to showcase the ability of IaC utilizing Ansible Automation Platform to deliver Day 0 through Day 2 operations with multiple F5 virtualized platforms. Over time, I've encountered numerous financial clients expressing interest in this topic. For many of these clients, the prospect of leveraging IaC to redeploy an environment outweighs the traditional approach of performing upgrades. This series will hopefully provide insight, documentation, and code for anyone embarking on this journey.

Why Ansible Automation Platform?

Like most people, I started my journey with community editions of Ansible. As my coding became more complex, so did the need to ensure that my lab infrastructure adhered to the best security guidelines required by my company (my goal being to mimic how customers would/should do things in real life). I began utilizing Ansible Automation Platform to ensure my credentials were protected, as well as to organize and share my code with the rest of my team (following the 'just in case you got hit by a bus' theory). Ansible Automation Platform utilizes execution environments (EE) to ensure code runs efficiently and cleanly every time. Now, I am also creating Execution Environments via GitHub with workflows and pushing them up to Quay.io (https://github.com/VDI-Tech-Guy/f5-execution-engines). Huge thanks to Colin McNaughton at Red Hat for making my life so much easier with building EEs!

Why deploy F5 Distributed Cloud on VMware vSphere?

As I mentioned before, I had this desire to build this Infrastructure as Code (IaC) code a while back. This was prior to the Broadcom acquisition of VMware. Being an ex-VMware employee, I had a lot of knowledge of virtualization platform infrastructure going into this project, and I started my focus on deploying on VMware vSphere.
F5 Distributed Cloud can be deployed in any cloud, anywhere. However, I really wanted to focus on on-premises deployments because not every customer can afford the cloud. Moreover, there's always a back-and-forth battle between on-premises and the cloud, which has evolved into the Hybrid Cloud and the Multi-Cloud. I do intend to extend this series to the Multi-Cloud, but these initial deployments will be focused on VMware vSphere, as it is still utilized in many organizations across the globe.

Information about the Setup in the Demo Video

If you watch the video (below) on how the deployment works, you can see that I did a bunch of pre-work in the Git repository (link in Resources) before launching the deployment. Here are the pre-work items:

- Had a fully functional Ansible Automation Platform 2.4+ environment set up and working (at the time, the controller version was 4.4.4).
- Imported the Execution Environment into the Ansible Automation Platform controller.
- Set up the Project to import the playbooks from the Git repository (in the Resources section below) and set the default Execution Environment.
- Set up the Demo Inventory (in our use case we only needed the vCenter host).
- Set up Network Credentials for the vCenter.
- Set up the Template and populated its variables (note that the API key was hidden).

As mentioned in the video (below), the variables were populated for my environment and contain all the information the deployment needs. I have provided a demo example in the Git repository so that anyone can adapt my settings to their environment. The example also has comments explaining each field, or area of a field, and the purpose of the variable.

    {
      "rhel_location": "https://vesio.blob.core.windows.net/releases/rhel/9/x86_64/images/vmware/rhel-9.2023.29-20231212012955-single-nic.ova",
      "xc_api_credential": "_____________________________________",
      "xc_namespace": "mmabis-automation",
      "xc_console_host": "f5-bd",
      "xc_user": "admin",
      "xc_pass": "Ansible123!",
      "vcenter_hostname": "{{ ansible_host }}",
      "vcenter_username": "{{ ansible_env.ANSIBLE_NET_USERNAME }}",
      "vcenter_password": "{{ ansible_env.ANSIBLE_NET_PASSWORD }}",
      "vcenter_validate_certs": false,
      "datacenter_name": "Apex",
      "cluster_name": "Worlds-Edge",
      "datastore": "TrueNAS-SSD",
      "dvs_switch_name": "DSC-DVS",
      "dns_name_servers": ["192.168.192.20", "192.168.192.1"],
      "dns_name_search": ["dsc-services.local", "localdomain"],
      "ntp_servers": ["0.pool.ntp.org", "1.pool.ntp.org", "2.pool.ntp.org"],
      "domain_fqdn": "dsc-services.local",
      "DVS_Name": "{{dvs_switch_name}}",
      "Internal_Network": "DVS-Server-vLan",
      "External_Network": "DVS-DMZ-vLan",
      "resource_pool_name": "Lab-XC",
      "waiting_period": 2,
      "temp_download_location": "/tmp/xc-ova-download.ova",
      "xc_ova_builds": [
        {
          "hostname": "xc-automation-rhel-demo",
          "tmpl_name": "xc-automation-rhel-demo",
          "admin_password": "Ansible123!",
          "cluster_name": "xc-automation-cluster-rhel-demo",
          "dhcp": "no",
          "external_ip": "172.16.192.170",
          "external_ip_subnet_prefix": "24",
          "external_ip_gw": "172.16.192.1",
          "external_ip_route": "0.0.0.0/0",
          "internal_ip": "192.168.192.170",
          "internal_ip_subnet_prefix": "22",
          "internal_ip_gw": "192.168.192.1",
          "certified_hw": "vmware-regular-nic-voltmesh",
          "latitude": "39.51833126",
          "longitude": "-104.759496962",
          "build_count": 3,
          "nic_config": "rhel-multi"
        }
      ]
    }

Launching the Code

With all of that pre-work handled, it was as easy as launching the code. There were a few caveats I learned over time when dealing with the automation that I wanted to share.
- Never re-use a cluster name in F5 Distributed Cloud, especially if it was used with a different version of the CE (there were communication issues between the CEs and the previous cluster information stored in the F5 Distributed Cloud Console).
- The API credentials must be system level when trying to accept registration or create the token for importing into the environment. This code is designed to check for "{{ xc_namespace }}-token": if it exists, the code uses the existing token; if not, it tries to create it, so you need system-level permissions to do this.
- Build Count should be 3 by default (it still needs to be defined) or another odd number, based on recommendations I have heard from our F5 field.

If I think of more, I'll definitely edit the post and make sure it's up to date. When launching the code I was able to get the lab to build correctly multiple times, so if there is an issue or something I might not have documented well, feel free to let me know, and give it a shot for yourself!

YouTube Video now on DevCentral Channel

Resources

https://github.com/f5devcentral/f5-bd-ansible-day0-automation - The code utilized for this deployment
https://github.com/VDI-Tech-Guy/f5-execution-engines - Building Execution Environments with GitHub and Workflows

Conclusion

I do hope that this series will help everyone who wants to embrace IaC. If you have any questions, feel free to reach out!

Telemetry streaming - One click deploy using Ansible

In this article we will focus on using Ansible to enable and install telemetry streaming (TS) and associated dependencies.

Telemetry streaming

The F5 BIG-IP is a full proxy architecture, which essentially means that the BIG-IP LTM completely understands the end-to-end connection, enabling it to be an endpoint and originator of client-side and server-side connections. This gives the BIG-IP traffic statistics from the client to the BIG-IP and from the BIG-IP to the server, giving the user the entire view of their network statistics.

To gain meaningful insight, you must be able to gather your data and statistics (telemetry) into a useful place. Telemetry streaming is an extension designed to declaratively aggregate, normalize, and forward statistics and events from the BIG-IP to a consumer application. You can learn more about telemetry streaming on F5 CloudDocs, but let's get to Ansible.

Enable and Install using Ansible

The Ansible playbook below performs the following tasks:

- Grab the latest Application Services 3 (AS3) and Telemetry Streaming (TS) versions
- Download the AS3 and TS packages and install them on BIG-IP using a role
- Deploy AS3 and TS declarations on BIG-IP using a role from Ansible Galaxy
- If AVR logs are needed for TS, provision the BIG-IP AVR module and configure AVR to point to TS

Prerequisites

- Supported on BIG-IP version 14.1+
- If AVR needs to be configured, make sure there is enough memory for the module to be enabled along with all the other BIG-IP modules that are provisioned in your environment
- The TS data is being pushed to Azure Log Analytics (modify it to use your own consumer). If Azure logs are being used, update your TS JSON file with the correct workspace ID and shared key
- Ansible is installed on the host from where the scripts are run
- The following files are present in the directory:
  - Variable file (vars.yml)
  - TS poller and listener setup (ts_poller_and_listener_setup_declaration.json)
  - Declare logging profile (as3_ts_setup_declaration.json)
  - Ansible playbook (ts_workflow.yml)

Get started

Download the following roles from Ansible Galaxy.

    ansible-galaxy install f5devcentral.f5app_services_package --force

This role performs a series of steps needed to download and install RPM packages on the BIG-IP that are a part of the F5 automation toolchain. Read through the prerequisites for the role before installing it.

    ansible-galaxy install f5devcentral.atc_deploy --force

This role deploys the declaration using the RPM package installed above. Read through the prerequisites for the role before installing it.

Roles are installed into the first writable directory on Ansible's roles path (for example ~/.ansible/roles or /etc/ansible/roles).

Next, copy the contents below into a file named vars.yml. Change the variable file to reflect your environment.

    # BIG-IP MGMT address and username/password
    f5app_services_package_server: "xxx.xxx.xxx.xxx"
    f5app_services_package_server_port: "443"
    f5app_services_package_user: "*****"
    f5app_services_package_password: "*****"
    f5app_services_package_validate_certs: "false"
    f5app_services_package_transport: "rest"

    # URI from where latest RPM version and package will be downloaded
    ts_uri: "https://github.com/F5Networks/f5-telemetry-streaming/releases"
    as3_uri: "https://github.com/F5Networks/f5-appsvcs-extension/releases"

    # If AVR module logs needed then set to 'yes' else leave it as 'no'
    avr_needed: "no"

    # Virtual servers in your environment to assign the logging profiles (If AVR set to 'yes')
    virtual_servers:
      - "vs1"
      - "vs2"

Next, copy the contents below into a file named ts_poller_and_listener_setup_declaration.json.
{ "class": "Telemetry", "controls": { "class": "Controls", "logLevel": "debug" }, "My_Poller": { "class": "Telemetry_System_Poller", "interval": 60 }, "My_Consumer": { "class": "Telemetry_Consumer", "type": "Azure_Log_Analytics", "workspaceId": "<<workspace-id>>", "passphrase": { "cipherText": "<<sharedkey>>" }, "useManagedIdentity": false, "region": "eastus" } } Next copy the below contents into a file named as3_ts_setup_declaration.json { "class": "ADC", "schemaVersion": "3.10.0", "remark": "Example depicting creation of BIG-IP module log profiles", "Common": { "Shared": { "class": "Application", "template": "shared", "telemetry_local_rule": { "remark": "Only required when TS is a local listener", "class": "iRule", "iRule": "when CLIENT_ACCEPTED {\n node 127.0.0.1 6514\n}" }, "telemetry_local": { "remark": "Only required when TS is a local listener", "class": "Service_TCP", "virtualAddresses": [ "255.255.255.254" ], "virtualPort": 6514, "iRules": [ "telemetry_local_rule" ] }, "telemetry": { "class": "Pool", "members": [ { "enable": true, "serverAddresses": [ "255.255.255.254" ], "servicePort": 6514 } ], "monitors": [ { "bigip": "/Common/tcp" } ] }, "telemetry_hsl": { "class": "Log_Destination", "type": "remote-high-speed-log", "protocol": "tcp", "pool": { "use": "telemetry" } }, "telemetry_formatted": { "class": "Log_Destination", "type": "splunk", "forwardTo": { "use": "telemetry_hsl" } }, "telemetry_publisher": { "class": "Log_Publisher", "destinations": [ { "use": "telemetry_formatted" } ] }, "telemetry_traffic_log_profile": { "class": "Traffic_Log_Profile", "requestSettings": { "requestEnabled": true, "requestProtocol": "mds-tcp", "requestPool": { "use": "telemetry" }, "requestTemplate": "event_source=\"request_logging\",hostname=\"$BIGIP_HOSTNAME\",client_ip=\"$CLIENT_IP\",server_ip=\"$SERVER_IP\",http_method=\"$HTTP_METHOD\",http_uri=\"$HTTP_URI\",virtual_name=\"$VIRTUAL_NAME\",event_timestamp=\"$DATE_HTTP\"" } } } } } NOTE: To better understand the above 
declarations, check out our CloudDocs page: https://clouddocs.f5.com/products/extensions/f5-telemetry-streaming/latest/telemetry-system.html

Next, copy the contents below into a file named ts_workflow.yml.

    - name: Telemetry streaming setup
      hosts: localhost
      connection: local
      any_errors_fatal: true

      vars_files:
        - vars.yml

      tasks:
        # Scrape the GitHub releases pages for the latest RPM file name and release tag
        - name: Get latest AS3 RPM name
          shell: wget -O - {{as3_uri}} | grep -E rpm | head -1 | cut -d "/" -f 7 | cut -d "=" -f 1 | cut -d "\"" -f 1
          register: as3_output

        - debug:
            var: as3_output.stdout_lines[0]

        - set_fact:
            as3_release: "{{as3_output.stdout_lines[0]}}"

        - name: Get latest AS3 RPM tag
          shell: wget -O - {{as3_uri}} | grep -E rpm | head -1 | cut -d "/" -f 6
          register: as3_output

        - debug:
            var: as3_output.stdout_lines[0]

        - set_fact:
            as3_release_tag: "{{as3_output.stdout_lines[0]}}"

        - name: Get latest TS RPM name
          shell: wget -O - {{ts_uri}} | grep -E rpm | head -1 | cut -d "/" -f 7 | cut -d "=" -f 1 | cut -d "\"" -f 1
          register: ts_output

        - debug:
            var: ts_output.stdout_lines[0]

        - set_fact:
            ts_release: "{{ts_output.stdout_lines[0]}}"

        - name: Get latest TS RPM tag
          shell: wget -O - {{ts_uri}} | grep -E rpm | head -1 | cut -d "/" -f 6
          register: ts_output

        - debug:
            var: ts_output.stdout_lines[0]

        - set_fact:
            ts_release_tag: "{{ts_output.stdout_lines[0]}}"

        - name: Download and Install AS3 and TS RPM packages to BIG-IP using role
          include_role:
            name: f5devcentral.f5app_services_package
          vars:
            f5app_services_package_url: "{{item.uri}}/download/{{item.release_tag}}/{{item.release}}?raw=true"
            f5app_services_package_path: "/tmp/{{item.release}}"
          loop:
            - {uri: "{{as3_uri}}", release_tag: "{{as3_release_tag}}", release: "{{as3_release}}"}
            - {uri: "{{ts_uri}}", release_tag: "{{ts_release_tag}}", release: "{{ts_release}}"}

        - name: Deploy AS3 and TS declaration on the BIG-IP using role
          include_role:
            name: f5devcentral.atc_deploy
          vars:
            atc_method: POST
            atc_declaration: "{{ lookup('template', item.file) }}"
            atc_delay: 10
            atc_retries: 15
            atc_service: "{{item.service}}"
            provider:
              server: "{{ f5app_services_package_server }}"
              server_port: "{{ f5app_services_package_server_port }}"
              user: "{{ f5app_services_package_user }}"
              password: "{{ f5app_services_package_password }}"
              validate_certs: "{{ f5app_services_package_validate_certs | default(false) }}"
              transport: "{{ f5app_services_package_transport }}"
          loop:
            - {service: "AS3", file: "as3_ts_setup_declaration.json"}
            - {service: "Telemetry", file: "ts_poller_and_listener_setup_declaration.json"}

        # If AVR logs need to be enabled
        - name: Provision BIG-IP with AVR
          bigip_provision:
            provider:
              server: "{{ f5app_services_package_server }}"
              server_port: "{{ f5app_services_package_server_port }}"
              user: "{{ f5app_services_package_user }}"
              password: "{{ f5app_services_package_password }}"
              validate_certs: "{{ f5app_services_package_validate_certs | default(false) }}"
              transport: "{{ f5app_services_package_transport }}"
            module: "avr"
            level: "nominal"
          when: avr_needed == "yes"

        - name: Enable AVR logs using tmsh commands
          bigip_command:
            commands:
              - modify analytics global-settings { offbox-protocol tcp offbox-tcp-addresses add { 127.0.0.1 } offbox-tcp-port 6514 use-offbox enabled }
              - create ltm profile analytics telemetry-http-analytics { collect-geo enabled collect-http-timing-metrics enabled collect-ip enabled collect-max-tps-and-throughput enabled collect-methods enabled collect-page-load-time enabled collect-response-codes enabled collect-subnets enabled collect-url enabled collect-user-agent enabled collect-user-sessions enabled publish-irule-statistics enabled }
              - create ltm profile tcp-analytics telemetry-tcp-analytics { collect-city enabled collect-continent enabled collect-country enabled collect-nexthop enabled collect-post-code enabled collect-region enabled collect-remote-host-ip enabled collect-remote-host-subnet enabled collected-by-server-side enabled }
            provider:
              server: "{{ f5app_services_package_server }}"
              server_port: "{{ f5app_services_package_server_port }}"
              user: "{{ f5app_services_package_user }}"
              password: "{{ f5app_services_package_password }}"
              validate_certs: "{{ f5app_services_package_validate_certs | default(false) }}"
              transport: "{{ f5app_services_package_transport }}"
          when: avr_needed == "yes"

        - name: Assign TCP and HTTP profiles to virtual servers
          bigip_virtual_server:
            provider:
              server: "{{ f5app_services_package_server }}"
              server_port: "{{ f5app_services_package_server_port }}"
              user: "{{ f5app_services_package_user }}"
              password: "{{ f5app_services_package_password }}"
              validate_certs: "{{ f5app_services_package_validate_certs | default(false) }}"
              transport: "{{ f5app_services_package_transport }}"
            name: "{{item}}"
            profiles:
              - http
              - telemetry-http-analytics
              - telemetry-tcp-analytics
          loop: "{{virtual_servers}}"
          when: avr_needed == "yes"

Now execute the playbook:

    ansible-playbook ts_workflow.yml

Verify

- Log in to the BIG-IP UI and go to iApps -> Package Management LX. Both the f5-telemetry and f5-appsvcs RPMs should be present.
- Log in to the BIG-IP CLI and check the restjavad logs in /var/log for any TS errors.
- Log in to the consumer where the logs are being sent and make sure it is receiving the logs.

Conclusion

The Telemetry Streaming (TS) extension is very powerful and is capable of sending much more information than described above. Take a look at the complete list of logs as well as consumer applications supported by TS over on CloudDocs: https://clouddocs.f5.com/products/extensions/f5-telemetry-streaming/latest/using-ts.html

Using CryptoNice as a Sanity-Checking Tool for Automated Application Deployments with Ansible

Any good automation pipeline should have some validation built in to perform sanity checks on what it should have done. This article describes how you can use CryptoNice as a simple and easy way of sanity checking the SSL/TLS configuration of your automated application deployments, by integrating CryptoNice into your pipeline directly after your automation solution has deployed the application.

I am going to show a simple Ansible playbook that illustrates this point. The Ansible playbook deploys an application to a BIG-IP that resides in AWS, but it could be any BIG-IP on premises or in any cloud. After the deployment is complete, I use CryptoNice to validate that the application is reachable via TLS and that the deployed VIP is using best practices for SSL deployments.

What Is CryptoNice?

CryptoNice is both a command line tool and Python library that is developed by F5 Labs and is publicly available; it provides the ability to scan and report on the configuration of SSL/TLS for your internet or internal-facing web services. Built using the sslyze API and SSL, http-client, and DNS libraries, CryptoNice collects data on a given domain and performs a series of tests to check TLS configuration.

You can get CryptoNice here: https://github.com/F5-Labs/cryptonice

What Is Ansible?

Ansible is an open-source software-provisioning, configuration-management, and application-deployment tool enabling infrastructure as code. It runs on many Unix-like systems, and can configure Unix-like systems, Microsoft Windows, and F5 BIG-IPs.

You can learn how to install Ansible here: https://docs.ansible.com/ansible/latest/installation_guide/intro_installation.html

F5 publishes instructions on how to integrate the Ansible plugins here: https://clouddocs.f5.com/products/orchestration/ansible/devel/

In this article I use the published version 1 F5 Ansible plugin that uses the iControl REST API. If we take a brief look under the hood, this plugin is using an imperative API. As of today, F5 has a version 2 plugin in preview that focuses on managing F5 BIG-IP/BIG-IQ through declarative APIs such as AS3, DO, TS, and CFE. You can also check out the version 2 plugin preview on F5 CloudDocs.

I used a stock Ubuntu image as a basis for my Ansible server and followed the instructions for installing Ansible on Ubuntu, then followed the instructions for installing the F5 plugin for Ansible referenced above.

You can take a look at the F5/Ansible 1.0 plugin that is published on the Ansible Galaxy Hub here: https://galaxy.ansible.com/f5networks/f5_modules

For my demonstration, I also installed CryptoNice on the same server where Ansible and the F5 Ansible plugin are installed; at that point you are off to the races and you can build a simple Ansible script and begin to automate a BIG-IP.

The F5 Ansible Module provides you with a great deal of programmability for the BIG-IP: basic onboarding, WAF, APM, and LTM. The following is a great reference to give you an idea of the scope of capabilities, with Ansible examples, that this module provides. You can also automate the infrastructure creation if you so choose, meaning you could stand up a BIG-IP in AWS, configure everything required to get the device onboarded, and after that configure traffic-management objects like VIPs, pools, etc.

https://clouddocs.f5.com/products/orchestration/ansible/devel/modules/module_index.html

For my test, I created a simple Ansible script that automates the creation of a VIP with an iRule to respond to HTTP GET requests. The example also associates a pool and pool members with the VIP to give you an idea of what a simple application deployment may look like. After that, I run CryptoNice to test the quality of the SSL/TLS.
My simple Ansible playbook looks like this: --- - name: Create a VIP, pool and pool members hosts: f5 connection: local vars: provider: password: notmypassword server: f5cove.me user: auser validate_certs: no server_port: 8443 tasks: - name: Create a pool bigip_pool: provider: "{{ provider }}" lb_method: ratio-member name: examplepool slow_ramp_time: 120 delegate_to: localhost - name: Add members to pool bigip_pool_member: provider: "{{ provider }}" description: "webserver {{ item.name }}" host: "{{ item.host }}" name: "{{ item.name }}" pool: examplepool port: '80' with_items: - host: 10.0.0.68 name: web01 - host: 10.0.0.67 name: web02 delegate_to: localhost - name: Create a VIP bigip_virtual_server: provider: "{{ provider }}" description: avip destination: 10.0.0.66 name: vip-1 irules: - responder pool: examplepool port: '443' snat: Automap profiles: - http - f5cove delegate_to: localhost - name: Create Ridirect bigip_virtual_server: provider: "{{ provider }}" description: avipredirect destination: 10.0.0.66 name: vip-1-redirect irules: - _sys_https_redirect port: '80' snat: None profiles: - http delegate_to: localhost - name: play cryptonice nicely hosts: 127.0.0.1 connection: local tasks: - pause: seconds: 30 - name: run cryptonice shell: "cryptonice f5cove.me" register: output - debug: var=output.stdout_lines My output from running the playbook looks like this: PLAY [Create a VIP, pool and pool members] ************************************************************************************************************************************************************************************************* TASK [Gathering Facts] ********************************************************************************************************************************************************************************************************************* ok: [1.2.3.4] TASK [Create a pool] 
*********************************************************************************************************************************************************************************************************************** ok: [1.2.3.4 -> localhost] TASK [Add members to pool] ***************************************************************************************************************************************************************************************************************** ok: [1.2.3.4 -> localhost] => (item={'host': '10.0.0.68', 'name': 'web01'}) ok: [1.2.3.4 -> localhost] => (item={'host': '10.0.0.67', 'name': 'web02'}) TASK [Create a VIP] ************************************************************************************************************************************************************************************************************************ changed: [1.2.3.4 -> localhost] TASK [Create Ridirect] ********************************************************************************************************************************************************************************************************************* ok: [1.2.3.4 -> localhost] PLAY [play cryptonice nicely] ************************************************************************************************************************************************************************************************************** TASK [Gathering Facts] ********************************************************************************************************************************************************************************************************************* ok: [localhost] TASK [pause] ******************************************************************************************************************************************************************************************************************************* Pausing for 30 seconds (ctrl+C then 'C' = continue early, ctrl+C then 'A' = abort) ok: [localhost] TASK 
[run cryptonice] ********************************************************************************************************************************************************************************************************************** changed: [localhost] TASK [debug] ******************************************************************************************************************************************************************************************************************************* ok: [localhost] => { "output.stdout_lines": [ "Pre-scan checks", "-------------------------------------", "Scanning f5cove.me on port 443...", "Analyzing DNS data for f5cove.me", "Fetching additional records for f5cove.me", "f5cove.me resolves to 34.217.132.104", "34.217.132.104:443: OPEN", "TLS is available: True", "Connecting to port 443 using HTTPS", "Queueing TLS scans (this might take a little while...)", "Looking for HTTP/2", "", "", "RESULTS", "-------------------------------------", "Hostname:\t\t\t f5cove.me", "", "Selected Cipher Suite:\t\t ECDHE-RSA-AES128-GCM-SHA256", "Selected TLS Version:\t\t TLS_1_2", "", "Supported protocols:", "TLS 1.2:\t\t\t Yes", "TLS 1.1:\t\t\t Yes", "TLS 1.0:\t\t\t Yes", "", "TLS fingerprint:\t\t 29d29d15d29d29d21c29d29d29d29d930c599f185259cdd20fafb488f63f34", "", "", "", "CERTIFICATE", "Common Name:\t\t\t f5cove.me", "Issuer Name:\t\t\t R3", "Public Key Algorithm:\t\t RSA", "Public Key Size:\t\t 2048", "Signature Algorithm:\t\t sha256", "", "Certificate is trusted:\t\t False (Mozilla not trusted)", "Hostname Validation:\t\t OK - Certificate matches server hostname", "Extended Validation:\t\t False", "Certificate is in date:\t\t True", "Days until expiry:\t\t 29", "Valid From:\t\t\t 2021-02-12 23:28:14", "Valid Until:\t\t\t 2021-05-13 23:28:14", "", "OCSP Response:\t\t\t Successful", "Must Staple Extension:\t\t False", "", "Subject Alternative Names:", "\t f5cove.me", "", "Vulnerability Tests:", "No vulnerability tests were run", "", "HTTP to 
HTTPS redirect:\t\t False", "None", "", "RECOMMENDATIONS", "-------------------------------------", "HIGH - TLSv1.0 Major browsers are disabling TLS 1.0 imminently. Carefully monitor if clients still use this protocol. ", "HIGH - TLSv1.1 Major browsers are disabling this TLS 1.1 immenently. Carefully monitor if clients still use this protocol. ", "Low - CAA Consider creating DNS CAA records to prevent accidental or malicious certificate issuance.", "", "Scans complete", "-------------------------------------", "Total run time: 0:00:05.688562" ] }

Note that after the playbook completes, CryptoNice tells me that I need to improve the quality of the SSL profile on my BIG-IP. As a result, I create a second SSL profile and make the following changes:
Disable TLS 1.0 and 1.1.
Upgrade the certificate from a 2048-bit key to a 4096-bit key.
Make sure that the certificate bundle is configured correctly so that the certificate is properly trusted.
Create a cipher rule and group, and use the following cipher string to improve the quality of the SSL connections:

    !EXPORT:!DHE+AES-GCM:!DHE+AES:ECDHE+AES-GCM:ECDHE+AES:RSA+AES-GCM:RSA+AES:-MD5:-SSLv3:-RC4:!3DES

I then alter the playbook to reference the new SSL profile and re-run it. Here is the relevant playbook snippet:

    - name: Create a VIP
      bigip_virtual_server:
        provider: "{{ provider }}"
        description: avip
        destination: 10.0.0.66
        name: vip-1
        irules:
          - responder
        pool: examplepool
        port: '443'
        snat: Automap
        profiles:
          - http
          - f5cove  # change this to the new SSL profile
      delegate_to: localhost

After re-running the playbook to switch the SSL profile, the resulting CryptoNice output shows that all of the HIGH recommendations have been addressed.
TASK [debug] ********************************************************************************************************************************************************************** ok: [localhost] => { "output.stdout_lines": [ "Pre-scan checks", "-------------------------------------", "Scanning f5cove.me on port 443...", "Analyzing DNS data for f5cove.me", "Fetching additional records for f5cove.me", "f5cove.me resolves to 34.217.132.104", "34.217.132.104:443: OPEN", "TLS is available: True", "Connecting to port 443 using HTTPS", "Queueing TLS scans (this might take a little while...)", "Looking for HTTP/2", "", "", "RESULTS", "-------------------------------------", "Hostname:\t\t\t f5cove.me", "", "Selected Cipher Suite:\t\t ECDHE-RSA-AES256-GCM-SHA384", "Selected TLS Version:\t\t TLS_1_2", "", "Supported protocols:", "TLS 1.2:\t\t\t Yes", "", "TLS fingerprint:\t\t 2ad2ad0002ad2ad0002ad2ad2ad2adcb09dd549309271837f87ac5dad15fa7", "", "", "HTTP/2 supported:\t\t False", "", "", "CERTIFICATE", "Common Name:\t\t\t f5cove.me", "Issuer Name:\t\t\t R3", "Public Key Algorithm:\t\t RSA", "Public Key Size:\t\t 4096", "Signature Algorithm:\t\t sha256", "", "Certificate is trusted:\t\t True (No errors)", "Hostname Validation:\t\t OK - Certificate matches server hostname", "Extended Validation:\t\t False", "Certificate is in date:\t\t True", "Days until expiry:\t\t 88", "Valid From:\t\t\t 2021-04-13 00:55:09", "Valid Until:\t\t\t 2021-07-12 00:55:09", "", "OCSP Response:\t\t\t Successful", "Must Staple Extension:\t\t False", "", "Subject Alternative Names:", "\t f5cove.me", "", "Vulnerability Tests:", "No vulnerability tests were run", "", "HTTP to HTTPS redirect:\t\t False", "None", "", "RECOMMENDATIONS", "-------------------------------------", "Low - CAA Consider creating DNS CAA records to prevent accidental or malicious certificate issuance.", "", "Scans complete", "-------------------------------------", "Total run time: 0:00:05.638186" ] } Now you can see in the 
CryptoNice output that:
There are no trust issues with the certificate/certificate bundle.
I have upgraded from a 2048-bit to a 4096-bit key length.
I have disabled TLS 1.0 and TLS 1.1.
The connection is handshaking with a more secure cryptographic algorithm.
I no longer have any HIGH recommendations for improving the quality of the TLS connection.

Conclusion

It is always best practice to perform a sanity check on the services that you create in your automation pipelines. This article describes using CryptoNice as part of a simple Ansible playbook to run an SSL/TLS sanity check on the application in order to improve the security of your application-delivery automation. Ultimately, you should not just be checking SSL/TLS; it would also be a good idea to introduce application-vulnerability scanners as part of the automation pipeline.

Manage Infrastructure and Services Lifecycle with Terraform and Ansible + Demo

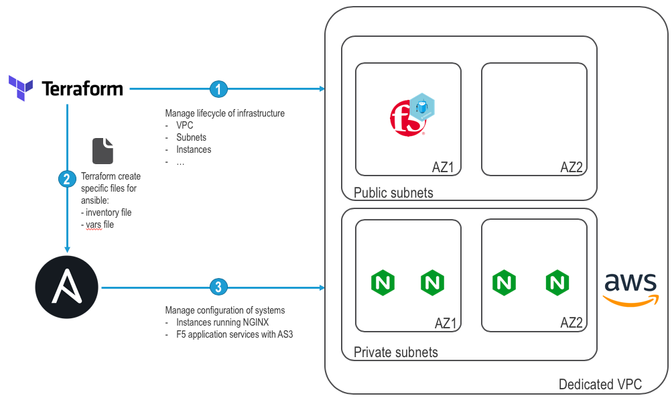

Working as a Solution Architect for F5, I often need access to a lab environment. Traditionally, the way to build a lab was to use tools like Vagrant, VMware, or others. A lab environment on a laptop is limited by its computing capacity (CPU, memory, disk, ...). Today we are often asked to show how our solutions integrate with many different tools (orchestration solutions, version control systems, CI servers, containerized environments, ...). Unless your laptop is a powerful one, it's difficult to build such an environment and have it run smoothly.

If the lab requirements are too demanding for my laptop, I would access one of our lab facilities to do my work. This approach is fine in itself but brings some challenges:
If you travel like I do, latency can become a frustrating hindrance.
Lab facilities rely on shared resources, which means you may face issues due to conflicting IP addresses, switch misconfiguration, maintenance operations, ...
Some resources may already be reserved or in use by a colleague and not be available.

You may also face other constraints that make both deployment models difficult:
Need to share access to the lab: this is not easy when it runs on your laptop or in a private cloud that is not always open to the outside world.
People may need to be able to replicate your lab in their own environment.
Stability and time needed for maintenance: using a lab over and over will make it messy. At some point, you'll want to create a "new" environment that is clean and "trustworthy" (until you play too much with it again).

I'm sure I've missed other constraints, but you get the idea: maintaining a lab and using it in a collaborative manner is challenging. Luckily, it's easier today to achieve those objectives: leverage Public Cloud! Public Cloud gives you access to "unlimited" computing services over the Internet that can be automated and orchestrated.
With Public Cloud, you have access to an API that lets you spin up a new environment with all the relevant tools deployed. This way, you can go straight to work (after enjoying a nice cup of coffee/tea while your infrastructure is being deployed!). Once your work is done, you can destroy the environment and save money. When you need a lab again, you can spin up a new, clean environment in a matter of minutes and be confident that it's a "healthy" lab.

When working on automation/orchestration of public cloud environments, I see two dominant tools: Terraform and Ansible.

https://www.terraform.io
Terraform is an open source command line tool that can be used to provision infrastructure on dozens of different platforms and services (AWS, Azure, ...). One of Terraform's strengths is that it is declarative: you specify the expected "state" of your infrastructure and Terraform takes care of the underlying complexity (Does it need to be provisioned? Should I update the settings of a component? Which components should be created first? Do we need to delete resources that are no longer required? ...). Terraform stores the "state" of your infrastructure and configuration to be more efficient in its work.

https://www.ansible.com
Ansible is a provisioning and configuration management tool designed to automate application deployments. One of Ansible's strengths is that it doesn't require any "agents" to run on the targeted systems. Ansible works by leveraging "modules", which are used to define the "state" of the targeted systems. They are usually executed over SSH (by default).

So how can we leverage those tools to have a lab available on demand?
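Before getting to the demo, the declarative idea described for Terraform can be illustrated with a small Python sketch. This is not how Terraform is implemented; it only shows the kind of comparison between a desired state and a recorded state that a declarative tool performs. The `plan` helper and the resource names and settings are hypothetical.

```python
def plan(desired, current):
    """Compare desired state with recorded state and list the actions needed to converge."""
    actions = []
    for name, settings in sorted(desired.items()):
        if name not in current:
            actions.append(("create", name))   # declared but not provisioned yet
        elif current[name] != settings:
            actions.append(("update", name))   # provisioned with different settings
    for name in sorted(current):
        if name not in desired:
            actions.append(("delete", name))   # provisioned but no longer declared
    return actions


# Hypothetical lab resources, for illustration only.
desired = {
    "vpc": {"cidr": "10.0.0.0/16"},
    "ubuntu-1": {"size": "t2.micro"},
    "bigip": {"size": "m5.xlarge"},
}
current = {
    "vpc": {"cidr": "10.0.0.0/16"},
    "ubuntu-1": {"size": "t2.nano"},
    "old-lab": {"size": "t2.micro"},
}

actions = plan(desired, current)
for action, name in actions:
    print(action, name)
```

Running the same comparison when the recorded state already matches the declaration yields no actions, which is why re-applying a declarative configuration is safe to repeat.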
In the following demo, we will:
Leverage Terraform to manage the lifecycle of a new AWS environment (a dedicated VPC with external/internal subnets, Ubuntu instances, and an F5 solution). In addition to deploying our infrastructure, it will generate the relevant files for Ansible (an inventory file listing the IPs of our systems, and Ansible variable files describing how to configure the F5 solution with AS3).
Use Ansible to manage the configuration of our systems: update our Ubuntu instances, install the NGINX web service on them, and deploy a standard F5 configuration with AS3 to load balance our web application.

Here is a summary for the demo:

Demo time!

By leveraging tools like Terraform or Ansible (you can achieve the same results with other tools), it is easy to handle the lifecycle of an infrastructure and the services running on top of it. This is what people call IaC (Infrastructure as Code).

Useful links:
- If you want to learn more about the setup of this demo, it is posted on GitHub: here
- F5 provides a list of templates to automate deployment in public cloud. It's available here: AWS Templates, Azure Templates, GCP Templates
- F5 Application Services 3 (AS3) documentation/examples: here
- If you want to learn more about our API and how to automate/orchestrate F5 solutions (free training): F5 A&O Training

How to Use BIG-IQ and Ansible to Build Advanced BIG-IP Automation Workflows

It’s no secret that automation of networking, security, and application development processes offers a laundry list of benefits—reduced deployment time, lowered cost, fewer errors, and more resilient systems, to name a few. One of the most popular tools for building automation workflows is Ansible. Ansible is a powerful, open-source tool that simplifies and automates many common tasks and enables infrastructure as code for creating, deploying, and managing F5 application delivery and security services. This is accomplished through playbooks and roles available on Ansible Galaxy. Another way to streamline working with BIG-IP is with BIG-IQ Centralized Management. BIG-IQ combines deep, app-centric visibility and dashboarding together with device, configuration, and policy management in a unified, intuitive user interface. From BIG-IQ, you can create new BIG-IP Virtual Editions (VEs), provision them with Declarative Onboarding, create advanced AS3 services, move deployments, upgrade software, and much more. Together Ansible and BIG-IQ make automation and management of your BIG-IP environment simple and straightforward—enabling an effective, intuitive, data-rich, and highly visual solution that offers value to networking/F5 gurus, security practitioners, and application owners/developers alike. To make things even easier, the F5 team has developed several community-supported Ansible roles that are designed to inject automation into workflows and make BIG-IQ’s simple app-centric management functionality even better. Please note that this workflow assumes that you already have a BIG-IQ Centralized Management deployment up and running. The end result will be a fully provisioned BIG-IP deployment that can be fully managed—client-to-server visibility, troubleshooting, object level configuration, etc.—from BIG-IQ’s intuitive, role-specific GUI. You can get started with these roles and workflows today by checking out F5’s repository on Ansible Galaxy. 
To use these Ansible roles and playbooks, you’ll need to download and install them to a local workstation that will be used for managing F5 deployments. Use the Ansible roles for BIG-IQ below to: Create new VEs Onboard VEs with DO Create and deploy common objects such as SSL certs and WAF policies Create AS3 application delivery and security services Move deployments across BIG-IPs For additional how-to-use resources, guidance, and labs for BIG-IQ, check out the video library and the BIG-IQ labs. Create a BIG-IP VE in AWS tasks: - name: Create a VE in AWS include_role: name: f5devcentral.bigiq_create_ve vars: cloud_environment: "BIG-IQ AWS US-East" ve_name: "bigipvm01" register: status - name: Get AWS BIG-IP VE IP address (port 8443) debug: msg: "{{ ve_ip_address }}" - name: Get AWS BIG-IP VE private Key Filename debug: msg: "{{ private_key_filename }}" Onboard the New BIG-IP VE with Declarative Onboarding tasks: - name: Onboard BIG-IP VE with DO include_role: name: f5devcentral.atc_deploy vars: atc_service: Device atc_method: POST atc_declaration: "{{ lookup('template','do_bigip_aws.j2') }}" atc_delay: 30 atc_retries: 15 register: atc_DO_status do_bigip_aws.j2: { "class": "DO", "declaration": { "schemaVersion": "1.5.0", "class": "Device", "async": true, "Common": { "class": "Tenant", "myLicense": { "class": "License", "licenseType": "licensePool", "licensePool": "byol-pool", "bigIpUsername": "admin", "bigIpPassword": "secret" }, "myProvision": { "class": "Provision", "ltm": "nominal", "avr": "nominal" }, "myNtp": { "class": "NTP", "servers": [ "169.254.169.123" ], "timezone": "UTC" }, "admin": { "class": "User", "shell": "bash", "userType": "regular", "partitionAccess": { "all-partitions": { "role": "admin" } }, "password": "secret" }, "hostname": "bigipvm01.example.com" } }, "targetUsername": "admin", "targetHost": "{{ ve_ip_address }}", "targetPort": 8443, "targetSshKey": { "path": "{{ private_key_filename }}" }, "bigIqSettings": { "conflictPolicy": "USE_BIGIQ", 
"deviceConflictPolicy": "USE_BIGIP", "failImportOnConflict": false, "versionedConflictPolicy": "KEEP_VERSION", "statsConfig": { "enabled": true } } } Create SSL Certificate and Key on BIG-IQ tasks: - name: Authenticate to BIG-IQ uri: url: https://{{ provider.server }}:{{ provider.server_port }}/mgmt/shared/authn/login method: POST headers: Content-Type: application/json body: username: "{{ provider.user }}" password: "{{ provider.password }}" loginProviderName: "{{ provider.auth_provider | default('tmos') }}" body_format: json timeout: 60 status_code: 200, 202 validate_certs: "{{ provider.validate_certs }}" register: auth - name: Create SSL Certificate and Key on BIG-IQ uri: url: https://{{ provider.server }}:{{ provider.server_port }}/mgmt/cm/adc-core/tasks/certificate-management method: POST headers: Content-Type: application/json X-F5-Auth-Token: "{{ auth.json.token.token }}" body: | { "issuer": "Self", "itemName": "mywebapp.crt", "itemPartition": "Common", "durationInDays": 365, "country": "US", "commonName": "mywebapp.example.com ", "division": "MyDiv", "organization": "MyOrg", "locality": "Seattle", "state": "WA", "subjectAlternativeName": "DNS: mywebapp.example.com", "securityType": "normal", "keyType": "RSA", "keySize": 2048, "command": "GENERATE_CERT" } body_format: json timeout: 60 status_code: 200, 202 validate_certs: "{{ provider.validate_certs }}" register: json_response Pin and Deploy SSL Certificates and Key to BIG-IP tasks: - name: Pin and deploy SSL certificate and key to BIG-IP include_role: name: f5devcentral.bigiq_pinning_deploy_objects vars: bigiq_task_name: "Deployment through Ansible/API - mywebapp" modules: - name: ltm pins: - { type: "sslCertReferences", name: "mywebapp.crt" } - { type: "sslKeyReferences", name: "mywebapp.key" } device_address: "{{ ve_ip_address }}" register: status Deploy an AS3 Service to BIG-IP tasks: - name: Deploy AS3 application services to BIG-IP include_role: name: f5devcentral.atc_deploy vars: atc_service: AS3 
atc_method: POST atc_declaration: "{{ lookup('template','as3_bigiq_https_app.j2') }}" atc_delay: 30 atc_retries: 15 register: atc_AS3_status

as3_bigiq_https_app.j2:

    {
      "class": "AS3",
      "action": "deploy",
      "declaration": {
        "class": "ADC",
        "schemaVersion": "3.12.0",
        "target": { "address": "{{ ve_ip_address }}" },
        "myorg": {
          "class": "Tenant",
          "mywebapp": {
            "class": "Application",
            "schemaOverlay": "AS3-F5-HTTPS-offload-lb-existing-cert-template-big-iq-default-v1",
            "template": "https",
            "serviceMain": {
              "class": "Service_HTTPS",
              "pool": "Pool",
              "enable": true,
              "serverTLS": "TLS_Server",
              "virtualPort": 443,
              "profileAnalytics": { "use": "Analytics_Profile" },
              "virtualAddresses": [ "0.0.0.0" ]
            },
            "Pool": {
              "class": "Pool",
              "members": [
                {
                  "adminState": "enable",
                  "servicePort": 80,
                  "serverAddresses": [ "10.1.3.23" ]
                }
              ]
            },
            "TLS_Server": {
              "class": "TLS_Server",
              "certificates": [ { "certificate": "Certificate" } ]
            },
            "Certificate": {
              "class": "Certificate",
              "privateKey": { "bigip": "/Common/mywebapp.key" },
              "certificate": { "bigip": "/Common/mywebapp.crt" }
            },
            "Analytics_Profile": {
              "class": "Analytics_Profile",
              "collectIp": false,
              "collectGeo": false,
              "collectUrl": false,
              "collectMethod": false,
              "collectUserAgent": false,
              "collectOsAndBrowser": false,
              "collectPageLoadTime": false,
              "collectResponseCode": true,
              "collectClientSideStatistics": true
            }
          }
        }
      }
    }

Move an AS3 Service Within BIG-IQ Dashboard

    tasks:
      - name: Move an AS3 application service in BIG-IQ dashboard.
        include_role:
          name: f5devcentral.bigiq_move_app_dashboard
        vars:
          apps:
            - name: myWebApp
              pins:
                - name: "myorg_mywebapp"
        register: status

F5 Automation with Ansible Tips and Tricks

Getting Started with Ansible and F5

In this article we provide a simple set of videos that demonstrate, step by step, how to implement automation with Ansible. In the last video, however, we demonstrate how telemetry and automation can be combined to address potential performance bottlenecks and ensure application availability. To start, here are details on how to get started with Ansible automation using the Ansible Automation Platform®:

Backing up your F5 device

Once Ansible Automation Platform is installed and configured, we move on to a basic maintenance function: an automated backup of a BIG-IP hardware device or Virtual Edition (VE). This is always recommended before major changes are made to BIG-IP devices:

Configuring a Virtual Server

Next, we use Ansible to configure a Virtual Server, a task that is most often performed manually on the BIG-IP. When changes to a BIG-IP are infrequent, manual intervention may not be so cumbersome. However, large enterprise customers may need to perform these tasks hundreds of times:

Replace an SSL Certificate

The next video demonstrates how to use Ansible to replace an SSL certificate on a BIG-IP. Note that the video shows the certificate being applied on a BIG-IP and then validated by browsing to the application website:

Configure and Deploy an iRule

The next video demonstrates how to configure and push an iRule onto a BIG-IP device using the Ansible Automation Platform®. Again, this is a standard administrative task that can easily be automated with Ansible:

Delete the Existing Virtual Server

Now we delete the above configuration to roll back to a steady state. This is a common administrative task when an application is retired.
We again demonstrate how Ansible automation can be used to perform these simple administrative tasks:

Telemetry and Automation: Using Threshold Triggers to Automate Tasks and Fix Performance Bottlenecks

You have now seen how to use Ansible automation to perform routine tasks on a BIG-IP platform. Once you are proficient with routine Ansible tasks, you can explore more sophisticated automation. In the demonstration below, we show how BIG-IP administrators using SSL Orchestrator® (SSLO) can combine telemetry with automation to address performance bottlenecks in an application environment:

Resources:

That concludes this short series of tutorials on performing routine tasks with automation, plus a preview of a more sophisticated use of automation based on telemetry and automatic thresholds. For more detail on our partnership, please visit our F5/Ansible page, or visit the Red Hat Automation Hub for information on the F5 Ansible certified collections.
https://www.f5.com/ansible
https://www.ansible.com/products/automation-hub
https://galaxy.ansible.com/f5networks/f5_modules

Parsing complex BIG-IP json structures made easy with Ansible filters like json_query

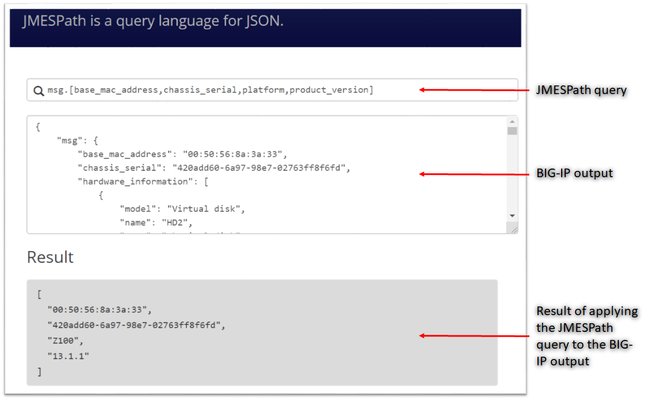

JMESPath and json_query

JMESPath (JSON Matching Expression paths) is a query language for searching JSON documents. It allows you to declaratively extract elements from a JSON document. Have a look at this tutorial to learn more.

The json_query filter lets you query a complex JSON structure and iterate over it using a loop structure. This filter is built upon jmespath, and you can use the same syntax as jmespath. Click here to learn more about the json_query filter and how it is used in Ansible.

In this article we use the bigip_device_info module to gather various facts from the BIG-IP and then use the json_query filter to parse the output and extract the relevant information.

Ansible bigip_device_info module

Playbook to query the BIG-IP and gather system information:

    - name: "Get BIG-IP Facts"
      hosts: bigip
      gather_facts: false
      connection: local
      tasks:
        - name: Query BIG-IP facts
          bigip_device_info:
            provider:
              validate_certs: False
              server: "xxx.xxx.xxx.xxx"
              user: "*****"
              password: "*****"
            gather_subset:
              - system-info
          register: bigip_facts
        - set_fact:
            facts: '{{bigip_facts.system_info}}'
        - name: debug
          debug: msg="{{facts}}"

To view the output for a different subset, change the gather_subset and set_fact values in the above playbook from gather_subset: system-info, facts: bigip_facts.system_info to any of the following:
gather_subset: vlans, facts: bigip_facts.vlans
gather_subset: self-ips, facts: bigip_facts.self_ips
gather_subset: nodes, facts: bigip_facts.nodes
gather_subset: software-volumes, facts: bigip_facts.software_volumes
gather_subset: virtual-servers, facts: bigip_facts.virtual_servers
gather_subset: system-info, facts: bigip_facts.system_info
gather_subset: ltm-pools, facts: bigip_facts.ltm_pools

Click here to view all the information that can be obtained from the BIG-IP using this module.

Parse the JSON output

Once we have the output, let's take a look at how to parse it.
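For readers new to JMESPath, it may help to see what a filter-and-select query computes. The sketch below uses plain Python (no jmespath library) to mirror the query `[?active=='yes'].[name,version]` used later in this article for software volumes. The field names come from that query; the sample values and the `active_volumes` helper are hypothetical.

```python
# Hypothetical sample shaped like the software-volumes subset.
volumes = [
    {"name": "HD1.1", "active": "yes", "version": "15.1.0"},
    {"name": "HD1.2", "active": "no", "version": "14.1.2"},
]


def active_volumes(items):
    """Plain-Python equivalent of the JMESPath query [?active=='yes'].[name,version]."""
    return [
        [item.get("name"), item.get("version")]   # the multi-select list [name,version]
        for item in items
        if item.get("active") == "yes"            # the filter [?active=='yes']
    ]


result = active_volumes(volumes)
print(result)
```

In a playbook the json_query filter does this work for you; the point here is only that a filter keeps matching elements and a multi-select list picks fields from each one.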
As mentioned above, the jmespath syntax can be used by the json_query filter.
Step 1: We will get the jmespath syntax for the information we want to extract.
Step 2: We will see how the jmespath syntax can then be used with json_query in an Ansible playbook.

The website used in this article to try out the syntax below: https://jmespath.org/

Some BIG-IP sample outputs are attached to this article as well (check the attachments section after the References). The attachment file is a combined output of a few configuration subsets. Copy and paste the relevant information from the attachment to test the examples below if you do not have a BIG-IP.

System information

The output for this section is obtained with the above playbook using parameters: gather_subset: system-info, facts: bigip_facts.system_info

Once the above playbook has been run against your BIG-IP, or if you are using the attached sample configuration, copy the output and paste it in the relevant text box. Try different queries by placing them in the text box next to the magnifying glass, as shown in the image below.

    # Get MAC address, serial number, version information
    msg.[base_mac_address,chassis_serial,platform,product_version]

    # Get MAC address, serial number, version information and hardware information
    msg.[base_mac_address,chassis_serial,platform,product_version,hardware_information[*].[name,type]]

Software volumes

The output for this section is obtained with the above playbook using parameters: gather_subset: software-volumes, facts: bigip_facts.software_volumes

    # Get the name and version of the software volumes installed and its status
    msg[*].[name,active,version]

    # Get the name and version only for the software volume that is active
    msg[?active=='yes'].[name,version]

VLANs and Self-IPs

The output for this section is obtained with the above playbook using parameters: gather_subset: vlans and self-ips, facts: bigip_facts

Look at the following example to define more than one subset in the playbook.

    # Get all the self-ip addresses and the vlans assigned to each self-ip
    # Also get all the vlans and the interfaces assigned to each vlan
    [msg.self_ips[*].[address,vlan], msg.vlans[*].[full_path,interfaces[*]]]

Nodes

The output for this section is obtained with the above playbook using parameters: gather_subset: nodes, facts: bigip_facts.nodes

    # Get the address and availability status of all the nodes
    msg[*].[address,availability_status]

    # Get availability status and reason for a particular node
    msg[?address=='192.0.1.101'].[full_path,availability_status,status_reason]

Pools

The output for this section is obtained with the above playbook using parameters: gather_subset: ltm-pools, facts: bigip_facts.ltm_pools

    # Get the name of all pools
    msg[*].name

    # Get the name of all pools and their associated members
    msg[*].[name,members[*]]

    # Get the name of all pools and only the address of their associated members
    msg[*].[name,members[*].address]

    # Get the name of all pools along with address and status of their associated members
    msg[*].[name,members[*].address,availability_status]

    # Get status of pool members of a particular pool
    msg[?name=='/Common/pool'].[members[*].address,availability_status]

    # Get status of pool
    # Get address, partition, state of pool members
    msg[*].[name,members[*].[address,partition,state],availability_status]

    # Get status of a particular pool and particular member (multiple entries on a member)
    msg[?full_name=='/Common/pool'].[members[?address=='192.0.1.101'].[address,partition],availability_status]

Virtual Servers

The output for this section is obtained with the above playbook using parameters: gather_subset: virtual-servers, facts: bigip_facts.virtual_servers

    # Get destination IP address of all virtual servers
    msg[*].destination

    # Get destination IP and default pool of all virtual servers
    msg[*].[destination,default_pool]

    # Get the destination IP of all virtual servers that have a particular pool as their default pool
    msg[?default_pool=='/Common/pool'].destination

    # Get all profiles assigned to all virtual servers
    msg[*].[destination,profiles[*].name]

Loop and display using Ansible

We have seen how to use the jmespath syntax to extract information; now let's see how to use it within an Ansible playbook:

    - name: Parse the output
      hosts: localhost
      connection: local
      gather_facts: false
      tasks:
        - name: Setup provider
          set_fact:
            provider:
              server: "xxx.xxx.xxx.xxx"
              user: "*****"
              password: "*****"
              server_port: "443"
              validate_certs: "no"

        - name: Query BIG-IP facts
          bigip_device_info:
            provider: "{{provider}}"
            gather_subset:
              - system-info
          register: bigip_facts

        - debug: msg="{{bigip_facts.system_info}}"

        # Use the json_query filter. The query_string is the jmespath syntax.
        # From the jmespath query, remove the 'msg' expression and use the rest as it is.
        - name: "Show relevant information"
          set_fact:
            result: "{{bigip_facts.system_info | json_query(query_string)}}"
          vars:
            query_string: "[base_mac_address,chassis_serial,platform,product_version,hardware_information[*].[name,type]]"

        - debug: msg="{{result}}"

Another example of what would change if you use a different query (only the tasks that need to change are shown, not the entire playbook):

        - name: Query BIG-IP facts
          bigip_device_info:
            provider: "{{provider}}"
            gather_subset:
              - ltm-pools
          register: bigip_facts

        - debug: msg="{{bigip_facts.ltm_pools}}"

        - name: "Show relevant information"
          set_fact:
            result: "{{bigip_facts.ltm_pools | json_query(query_string)}}"
          vars:
            query_string: "[*].[name,members[*].[address,partition,state],availability_status]"

The key is to get the jmespath syntax for the information you are looking for; it is then a simple step to incorporate it within your Ansible playbook.

References
Try the queries - https://jmespath.org/
Learn more jmespath syntax and examples - https://jmespath.org/tutorial.html
Ansible lab that can be used as a sandbox - https://clouddocs.f5.com/training/automation-sandbox/

Automate BIG-IP in customer environments using Ansible

There are a lot of technical resources on how Ansible can be used to automate the F5 BIG-IP. Here is a consolidated list of links to help you brush up on Ansible and understand the Ansible BIG-IP solution:
Getting started with Ansible
Ansible and F5 solution overview
F5 and Ansible Integration - Webinar
Dig deeper into F5 and Ansible Integration - Webinar

I am going to dive directly into how customers are using Ansible to automate their infrastructure today. If you are considering using Ansible, or have already gone through a POC, a few of these use cases may resonate with you and prove useful in your environment.

Use Case: Rolling application deployments

Problem

Consider a web application whose traffic is load balanced by the BIG-IP. When the web servers running this application need to be updated with a new software package, an organization would consider a rolling deployment rather than updating all servers or tiers simultaneously. This means the organization installs the updated software package on one server, or a subset of servers, at a time, bringing down one server at a time to minimize traffic disruption.

Solution

This is a repetitive and time-consuming task for an organization, considering that new updates come out every so often. Ansible's F5 modules can be used to automate operations like disabling a node (pool member), performing an update on that node, and then bringing that node back into service.
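The ordering of those three operations is the important part, so here is a minimal Python sketch of it. The `disable`, `update`, and `enable` callables are hypothetical stand-ins for the corresponding Ansible tasks, and the node names are illustrative only.

```python
def rolling_update(nodes, disable, update, enable):
    """Process one node at a time: take it out of service, update it, put it back."""
    for node in nodes:
        disable(node)   # stop sending traffic to this pool member
        update(node)    # install the new package; an exception here stops the rollout
        enable(node)    # return the pool member to service before touching the next one


# Record the order of operations instead of touching real devices.
log = []
rolling_update(
    ["web01", "web02"],
    disable=lambda node: log.append(("disable", node)),
    update=lambda node: log.append(("update", node)),
    enable=lambda node: log.append(("enable", node)),
)
print(log)
```

Because only one node is out of service at any moment, the remaining pool members keep serving traffic throughout the rollout.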
This workflow is applied to every node member of the pool sequentially, resulting in zero downtime for the application.

Modules
bigip_ltm_facts
bigip_node

Sample

    # Play 1: check the pool, then take the node out of service
    - name: Update software on Pool Member - "{{node_name}}"
      hosts: bigip
      gather_facts: false
      vars:
        pool_name: "{{pool_name}}"
        node_name: "{{node_name}}"
      tasks:
        - name: Query BIG-IP facts for Pool Status - "{{pool_name}}"
          bigip_facts:
            validate_certs: False
            server: "{{ bigip_ip_address }}"
            user: "{{ bigip_username}}"
            password: "{{ bigip_password}}"
            include: "pool"
          delegate_to: localhost
          register: bigip_facts

        - name: Assert that Pool - "{{pool_name}}" is available before disabling node
          assert:
            that:
              - "'AVAILABILITY_STATUS_GREEN' in bigip_facts['ansible_facts']['pool']['/Common/{{iapp_name}}/{{pool_name}}']['object_status']['availability_status']|string"
            msg: "Pool is NOT available. Do NOT disable any more nodes. Check your BIG-IP"

        - name: Disable Node - "{{node_name}}"
          bigip_node:
            server: "{{ bigip_ip_address }}"
            user: "{{ bigip_username}}"
            password: "{{ bigip_password}}"
            partition: "Common"
            name: "{{node_name}}"
            state: "present"
            session_state: "disabled"
            monitor_state: "disabled"
          delegate_to: localhost

        - name: Get Node facts - "{{node_name}}"
          bigip_facts:
            validate_certs: False
            server: "{{ bigip_ip_address }}"
            user: "{{ bigip_username}}"
            password: "{{ bigip_password}}"
            include: "node"
          delegate_to: localhost
          register: bigip_facts

        - name: Assert that node - "{{node_name}}" did get disabled
          assert:
            that:
              - "'AVAILABILITY_STATUS_RED' in bigip_facts['ansible_facts']['node']['/Common/{{node_name}}']['object_status']['availability_status']|string"
            msg: "Pool member did NOT get DISABLED"

    # Play 2: update the software on the node itself
    - name: Perform software update on node - "{{node_name}}"
      hosts: "{{node_name}}"
      gather_facts: false
      vars:
        pool_name: "{{pool_name}}"
        node_name: "{{node_name}}"
      tasks:
        - name: "Update 'curl' package"
          apt: name=curl state=present

    # Play 3: bring the node back into service
    - name: Enable Node
      hosts: bigip
      gather_facts: false
      vars:
        pool_name: "{{pool_name}}"
        node_name: "{{node_name}}"
      tasks:
        - name: Enable Node - "{{node_name}}"
          bigip_node:
            server: "{{ bigip_ip_address }}"
            user: "{{ bigip_username}}"
            password: "{{ bigip_password}}"
            partition: "Common"
            name: "{{node_name}}"
            state: "present"
            session_state: "enabled"
            monitor_state: "enabled"
          delegate_to: localhost

Use Case: Deploying consistent policies across different environments using F5 iApps

Problem

Consider an organization that has many different environments (development/QA/production). Each environment is a replica of the others and has around 100 BIG-IPs. When a new application is added to one environment, it needs to be added to the other environments in a fast, safe and secure manner. There is also a need to make sure that the virtual server brought online adheres to all the traffic rules and security policies.

Solution

To ensure that the virtual server is deployed correctly and efficiently, the entire application can be deployed on the BIG-IP using the iApps module.
iApps can also reference ASM policies, which helps to consistently deploy an application with the appropriate security policies.

Modules

bigip_iapp_template
bigip_iapp_service

Sample

- hosts: localhost
  tasks:
    - name: Get iApp from GitHub
      get_url:
        # Placeholder: replace with the URL the iApp is downloaded from
        url: "{{ Git URL from where to download the iApp }}"
        dest: /var/tmp
        validate_certs: False

    - name: Upload iApp template to BIG-IP
      bigip_iapp_template:
        server: "{{ bigip_ip_address }}"
        user: "{{ bigip_username }}"
        password: "{{ bigip_password }}"
        content: "{{ lookup('file', '/var/tmp/appsvcs_integration_v2.0.003.tmpl') }}"  # Name of iApp
        state: "present"
        validate_certs: False
      delegate_to: localhost

    - name: Deploy iApp
      bigip_iapp_service:
        name: "HTTP_VS_With_L7Firewall"
        template: "appsvcs_integration_v2.0.003"
        parameters: "{{ lookup('file', 'final_iapp_with_asm.json') }}"  # JSON blob for body content to the iApp
        server: "{{ bigip_ip_address }}"
        user: "{{ bigip_username }}"
        password: "{{ bigip_password }}"
        state: "present"
      delegate_to: localhost

Use Case: Disaster Recovery

Problem

All organizations should have a disaster recovery plan in place in case of a catastrophic failure resulting in loss of data, including BIG-IP configuration data. Re-configuring the entire infrastructure from scratch can be an administrative nightmare. The procedure in place for disaster recovery can also be used for migrating data from one BIG-IP to another, as well as for performing hardware refreshes and RMAs.

Solution

Use a BIG-IP user configuration set (UCS) file to restore the configuration on all the BIG-IPs and have your environment back to its original configuration in minutes.
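A restore presupposes that a UCS file was saved beforehand. As a hedged sketch of that backup step, the play below uses the bigip_ucs_fetch module, which is not mentioned in this article, so check that your Ansible release includes it before relying on it. The backup directory and the file naming scheme are hypothetical, and the connection parameters simply mirror the style of this article's samples.

```yaml
---
# Sketch only: bigip_ucs_fetch is not part of the article's samples, and the
# /var/backups path and per-host file names are illustrative assumptions.
- name: Back up the BIG-IP configuration as a UCS file
  hosts: bigip
  gather_facts: false
  tasks:
    - name: Create a UCS on the BIG-IP and download it
      bigip_ucs_fetch:
        server: "{{ bigip_ip_address }}"
        user: "{{ bigip_username }}"
        password: "{{ bigip_password }}"
        validate_certs: false
        src: "{{ inventory_hostname }}.ucs"                 # UCS file name on the BIG-IP
        dest: "/var/backups/{{ inventory_hostname }}.ucs"   # local copy on the control host
      delegate_to: localhost
```

Run on a schedule, a play along these lines would leave one recent UCS per device on the control host, ready to be installed during a recovery.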
Modules

bigip_ucs

Sample

---
- name: Restore BIG-IP configuration from a UCS file
  hosts: bigip
  gather_facts: false
  tasks:
    # state: installed uploads the UCS at the given path and installs it on the BIG-IP
    - name: Upload UCS file and install it
      bigip_ucs:
        server: "{{ bigip_ip_address }}"
        user: "{{ bigip_username }}"
        password: "{{ bigip_password }}"
        ucs: "/root/test.ucs"
        state: "installed"
      delegate_to: localhost

Use Case: Troubleshooting

Problem

Any problem on the BIG-IP, for example a backend server not receiving traffic, a virtual server dropping traffic unexpectedly or a monitor not responding correctly, needs extensive troubleshooting. These problems can be hard to pinpoint and need an expert to debug and look through the logs.

Solution

Qkview is a great utility on the BIG-IP that an administrator can use to automatically collect configuration and diagnostic information from BIG-IP and other F5 systems. Use this Ansible module, run it against all the BIG-IPs in your network and collect the diagnostic information to pass on to F5 support.

Modules

bigip_qkview

Sample

- name: Create qkview
  hosts: bigip
  gather_facts: false
  tasks:
    - name: Generate and store qkview file
      bigip_qkview:
        server: "{{ bigip_ip_address }}"
        user: "{{ bigip_username }}"
        password: "{{ bigip_password }}"
        asm_request_log: "no"
        dest: "/tmp/localhost.localdomain.qkview"
        validate_certs: "no"
      delegate_to: localhost

Conclusion

These are just some of the use cases that can be tackled using our BIG-IP Ansible modules. We have several more modules in the Ansible 2.4 release than the ones mentioned above, which can help in other scenarios. To view a complete list of BIG-IP modules available in the Ansible 2.4 release click here

Module overview at a glance

BIG-IP modules in Ansible release 2.3
New BIG-IP modules in Ansible release 2.4

To get started and save time, download a BIG-IP onboarding Ansible role from Ansible Galaxy and run the playbook against your BIG-IP.

F5 and Cisco ACI Essentials: Automate automate automate !!!