Run AI LLMs Centrally and Protect AI Inferencing with F5 Distributed Cloud API Security

Centralized AI LLM and F5 Distributed Cloud API Security to Secure Your AI Inferencing

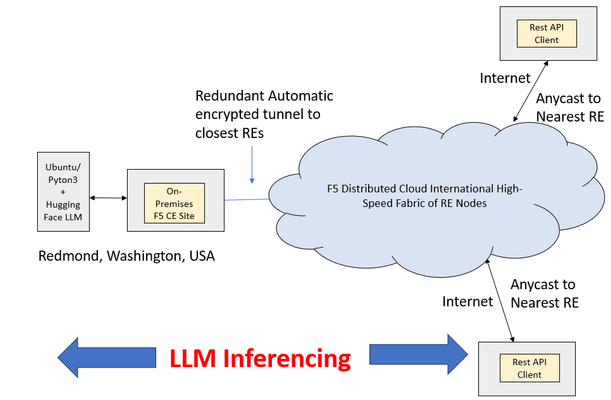

As artificial intelligence continues to evolve, especially around distributed large language models (LLMs), performance, security, and scalability become increasingly important. To address these concerns, the approach described here runs AI LLMs centrally and uses F5 Distributed Cloud API Security to enforce strong security on the inferencing stage.

Centralized Management of LLMs

One of the most distinctive aspects of F5's approach compared with its peers is that it centralizes AI LLM operations. An enterprise can simplify its AI workflows by running many models from a single management point. This has important implications: organizations can cut operational overhead, reduce latency, and operate their AI systems more efficiently. By combining optimized model deployment with resource consolidation, F5 removes much of the burden of managing distributed models so that teams can focus on innovation.
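To make the idea of a single management point concrete, here is a minimal sketch in Python. It is an illustration only, not F5's implementation: `ModelRegistry`, `register`, and `infer` are hypothetical names, and the handlers are stand-ins for real LLM runtimes.

```python
from dataclasses import dataclass, field
from typing import Callable, Dict


@dataclass
class ModelRegistry:
    """Hypothetical single management point: maps model names to inference handlers."""
    _models: Dict[str, Callable[[str], str]] = field(default_factory=dict)

    def register(self, name: str, handler: Callable[[str], str]) -> None:
        # Adding a model only touches the central registry.
        self._models[name] = handler

    def infer(self, name: str, prompt: str) -> str:
        # Every caller goes through the same entry point, whichever model it needs.
        if name not in self._models:
            raise KeyError(f"unknown model: {name}")
        return self._models[name](prompt)


registry = ModelRegistry()
# Stand-in handlers (assumptions); a real deployment would call an LLM runtime here.
registry.register("echo", lambda prompt: prompt)
registry.register("truncate", lambda prompt: prompt[:20])
```

Because every request passes through one entry point, security controls and monitoring can be attached in a single place rather than per model.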

Security at the Forefront

F5's Distributed Cloud API Security is powerful because it can guard against a broad range of AI inferencing threats. As companies rely more and more on AI for their mission-critical applications, the risk of unauthorized data access and other security issues grows. F5's platform applies hardened threat detection techniques to protect API endpoints.

F5's security features play a key role in the quality of output: automated threat intelligence, customized access controls, and anomaly detection all serve the main objective of preserving AI output integrity. Protecting the inferencing process also lets organizations assure stakeholders that the AI-powered insights they deliver are robust and secure.
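As a rough illustration of how access controls and anomaly detection can sit in front of an inference endpoint, the following Python sketch combines an API-key allowlist with a sliding-window request-rate check. It is a simplified stand-in, not how F5 Distributed Cloud implements these features: `InferenceGuard`, its parameters, and the key `team-a-key` are all hypothetical.

```python
from collections import deque
from typing import Deque, Dict, Set


class InferenceGuard:
    """Hypothetical pre-inference check: API-key allowlist plus a sliding-window rate flag."""

    def __init__(self, allowed_keys: Set[str], max_requests: int, window_seconds: float) -> None:
        self.allowed_keys = allowed_keys
        self.max_requests = max_requests
        self.window_seconds = window_seconds
        self._history: Dict[str, Deque[float]] = {}

    def check(self, api_key: str, now: float) -> str:
        """Return 'deny', 'flag', or 'allow' for a request arriving at time `now` (seconds)."""
        if api_key not in self.allowed_keys:
            return "deny"  # access control: caller is not on the allowlist
        history = self._history.setdefault(api_key, deque())
        # Discard timestamps that have aged out of the window.
        while history and now - history[0] >= self.window_seconds:
            history.popleft()
        history.append(now)
        if len(history) > self.max_requests:
            return "flag"  # anomaly: more requests than expected within the window
        return "allow"


guard = InferenceGuard(allowed_keys={"team-a-key"}, max_requests=3, window_seconds=10.0)
# Four requests in quick succession: the fourth exceeds the limit of three.
results = [guard.check("team-a-key", t) for t in (0.0, 1.0, 2.0, 3.0)]
```

A production system would rely on much richer signals (payload inspection, behavioral baselines, threat intelligence feeds), but the control flow is the same: decide whether to allow, flag, or deny before the request reaches the model.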

Performance and Scalability

Performance optimization is one of the best parts of F5 API Security. Using a globally distributed security component, F5 makes the performance of large-scale AI LLM workloads far less dependent on the user's location. This translates into better response times and user experience, especially when deploying AI applications at scale.

F5 is also attractive from a technology perspective because it integrates easily with existing tech stacks. Organizations can fold F5's security capabilities into their processes without far-reaching reconfiguration or reallocation of resources.