Programmability in the Network: Canary Deployments

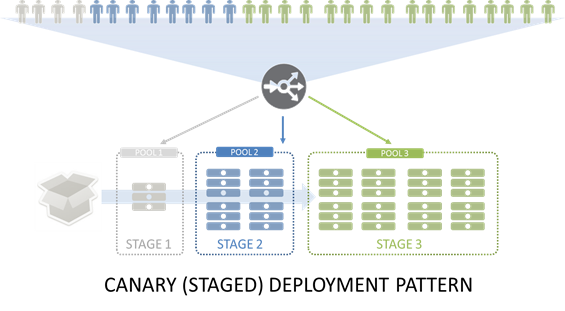

#devops The canary deployment pattern is another means of enabling continuous delivery.

Deployment patterns (or as I like to call them of late, devops patterns) are good examples of how devops can put into place systems and tools that enable continuous delivery to be, well, continuous. The goal of these patterns is, for the most part, to make sure operations can smoothly move features, functions, releases or applications into production. We've previously looked at the Blue Green deployment pattern and today we're going to look at a variation: Canary deployments.

Canary deployments are applicable when you're running a cluster of servers. In other words, you've got lots and lots of users (probably active right now, while you're considering pushing that next release). What you don't want is the traditional "we're sorry, we're down for maintenance, here's a picture of a funny squirrel to amuse you while you wait" maintenance page. You want to be able to roll out the new release without disruption. Yeah, that's quite the ask, isn't it?

The Canary deployment pattern is an incremental upgrade methodology. First, the build is pushed to a small set of servers to which only a select group of users are directed. If that goes well, the release is pushed to a larger set of servers with a limited set of users. Finally, if that goes well, the release is pushed out to all servers and all users. If issues occur at any stage, the release is halted - it goes no further. Hence the naming of the pattern - after the miner's canary, used because "its demise provided a warning of dangerous levels of toxic gases".

The trick to implementing this pattern is twofold: first, being able to group the servers used in each step into discrete pools, and second, being able to direct specific sets of users to the appropriate pools. Both capabilities require the ability to execute some logic to perform user-based load balancing.
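To make "user-based load balancing" a little more concrete, here is a minimal Python sketch of the kind of selection logic involved. It is not how any particular load balancer does it: the pool names, the stage names and the percentages are all made up for illustration, and a real deployment would express the same idea in whatever scripting the load balancing platform offers.

```python
import hashlib

# Hypothetical rollout stages: the percentage of users sent to the
# canary pool at each step. These numbers are illustrative only.
STAGES = {
    "pilot": 5,
    "limited": 25,
    "full": 100,
}

def bucket_for(user_id: str) -> int:
    """Map a user to a stable bucket in the range 0-99."""
    digest = hashlib.sha256(user_id.encode("utf-8")).digest()
    return int.from_bytes(digest[:4], "big") % 100

def select_pool(user_id: str, stage: str, halted: bool = False) -> str:
    """Return the (hypothetical) pool name that should serve this user."""
    if halted:
        # A halted release goes no further: everyone stays on the old build.
        return "stable_pool"
    if bucket_for(user_id) < STAGES[stage]:
        return "canary_pool"
    return "stable_pool"
```

Because the bucket is derived from the user name rather than chosen at random per request, a user who lands on the canary pool in an early stage stays there as the percentage grows, which keeps their experience consistent while you watch for trouble.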
Nolio, in its first Devops Best Practices video, implements Canary deployments by manipulating the pools of servers at the load balancing tier, removing them to upgrade and then reinserting them for testing before moving on to the next phase. If your load balancing solution is programmable, there's no need to actually remove them, as you can simply insert logic that keeps them from being selected until they've been upgraded. You can then also insert the logic that determines which users are directed to which pool of servers. If the load balancing platform is really programmable, you can even extend that determination to querying a database to determine user inclusion in certain groups, such as those you might use to perform AB testing.

Such logic might base the decision on IP address (not the best option, but an option) or later, when you're actually rolling out to a percentage of users, you can write logic that randomly selects users based on location or their user name - like sharding, only in reverse - or pretty much anything you can think of. You can even split that further if you're rolling out an update to an API that's used by both mobile and traditional clients, to catch both or neither or specific types in an orderly fashion so you can test methodically - because you want to test methodically when you're using live users as test subjects.

The beauty of this pattern is that it allows continuous delivery. Users are never disrupted (if you do it right) and the upgrade occurs in a safely staged, incremental fashion. That enables you to back out quickly if necessary, because you do have a back button plan, right? Right?

Back to Basics: Health Monitors and Load Balancing

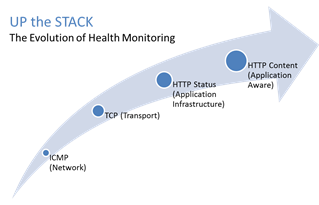

#webperf #ado Because every connection counts

One of the truisms of architecting highly available systems is that you never, ever want to load balance a request to a system that is down. Therefore, some sort of health (status) monitoring is required. For applications, that means not just pinging the network interface or opening a TCP connection; it means querying the application and verifying that the response is valid.

This, obviously, requires the application to respond. And respond often. Best practices suggest determining availability every 5 seconds or so. That means every few seconds the load balancing service is going to open up a connection to the application and make a request. Just like a user would do.

That adds load to the application. It consumes network, transport, application and (possibly) database resources. Resources that cannot be used to service customers. While the impact on a single application may appear trivial, it's not. Remember, as load increases performance decreases. And no matter how trivial it may appear, health monitoring is adding load to what may be an already heavily loaded application.

But Lori, you may be thinking, you expound on the importance of monitoring and visibility all the time! Are you saying we shouldn't be monitoring applications?

Nope, not at all. Visibility is paramount, providing the actionable data necessary to enable highly dynamic, automated operations such as elasticity. Visibility through health-monitoring is a critical means of ensuring availability at both the local and global level. What we may need to do, however, is move from active to passive monitoring.

PASSIVE MONITORING

Passive monitoring, as the modifier suggests, is not an active process. The load balancer does not open up connections nor query an application itself. Instead, it snoops on responses being returned to clients and from that infers the current status of the application.
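One way to picture that inference is as a small piece of bookkeeping fed by the responses already flowing back to clients. The Python sketch below is only an illustration under stated assumptions: the class name, the thresholds, and the choice to count HTTP 5xx responses and slow responses as failures are all hypothetical, not a description of any product's monitor.

```python
from collections import deque

class PassiveMonitor:
    """Infer service health from responses already being returned to clients."""

    def __init__(self, max_failures=3, interval=30.0, slow_after=2.0):
        self.max_failures = max_failures  # failures tolerated per interval
        self.interval = interval          # sliding window, in seconds
        self.slow_after = slow_after      # response time treated as a failure
        self.failures = deque()           # timestamps of recent failures
        self.up = True

    def observe(self, now, status, response_time):
        """Record one observed response; return True while the service is up."""
        if status >= 500 or response_time > self.slow_after:
            self.failures.append(now)
        # Drop failures that have aged out of the sliding window.
        while self.failures and now - self.failures[0] > self.interval:
            self.failures.popleft()
        if len(self.failures) >= self.max_failures:
            self.up = False
        return self.up
```

Note that this sketch never marks the service "up" again on its own. In practice that job would fall to something else, such as an active probe triggered once the passive monitor trips.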
For example, if a request for content results in an HTTP error message, the load balancer can determine whether or not the application is available and capable of processing subsequent requests. If the load balancer is a BIG-IP, it can mark the service as "down" and invoke an active monitor to probe the application status as well as retrying the request to another available instance – ensuring end-users do not see an error.

Passive (inband) monitors are not binary. That is, they aren't simply "on" or "off" based on HTTP status codes. Such monitors can be configured to track the number of failures and evaluate failure rates against a configurable failure interval. When such thresholds are exceeded, the application can then be marked as "down".

Passive monitors aren't restricted to availability status, either. They can also monitor for performance (response time). Failure to meet response time expectations counts as a failure, and the application continues to be watched for subsequent failures.

Passive monitors are, like most inline/inband technologies, transparent. They quietly monitor traffic and act upon that traffic without adding overhead to the process. Passive monitoring gives operations the visibility necessary to enable predictable performance and to meet or exceed user expectations with respect to uptime, without negatively impacting performance or capacity of the applications it is monitoring.

The Limits of Cloud: Gratuitous ARP and Failover

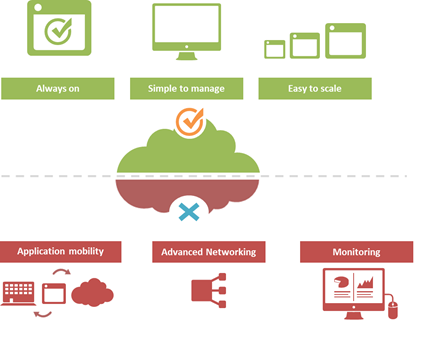

#Cloud is great at many things. At other things, not so much. Understanding the limitations of cloud will better enable a successful migration strategy.

One of the truisms of technology is that it takes a few years of adoption before folks really start figuring out what it excels at – and conversely what it doesn't. That's generally because early adoption is focused on lab-style experimentation that rarely extends beyond basic needs. It's when adoption reaches critical mass and folks start trying to use the technology to implement more advanced architectures that the "gotchas" start to be discovered. Cloud is no exception.

A few of the things we've learned over the past years of adoption are that cloud is always on, it's simple to manage, and it makes applications and infrastructure services easy to scale. Some of the things we're learning now are that cloud isn't so great at supporting application mobility, monitoring of deployed services and at providing advanced networking capabilities.

The reason that last part is so important is that a variety of enterprise-class capabilities we've come to rely upon are ultimately enabled by some of the advanced networking techniques cloud simply does not support. Take gratuitous ARP, for example. Most cloud providers do not allow or support this feature, which ultimately means an inability to take advantage of higher-level functions traditionally taken for granted in the enterprise – like failover.

GRATUITOUS ARP and ITS IMPLICATIONS

For those unfamiliar with gratuitous ARP, let's get you familiar with it quickly. A gratuitous ARP is an unsolicited ARP request made by a network element (host, switch, device, etc.) to resolve its own IP address. The source and destination IP addresses in the request are both the IP address assigned to the network element. The destination MAC is a broadcast address. Gratuitous ARP is used for a variety of reasons.
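For the byte-minded, the frame just described can be sketched in a few lines of Python. This only builds the bytes of a gratuitous ARP request for illustration and does not send anything. The function name is made up, and leaving the target MAC as zeros is one common convention rather than the only one.

```python
import socket
import struct

BROADCAST_MAC = b"\xff" * 6

def gratuitous_arp_request(sender_mac: bytes, ip: str) -> bytes:
    """Build an Ethernet frame carrying a gratuitous ARP request for `ip`."""
    ip_bytes = socket.inet_aton(ip)
    # Ethernet header: broadcast destination, our MAC, EtherType 0x0806 (ARP).
    ethernet = BROADCAST_MAC + sender_mac + struct.pack("!H", 0x0806)
    arp = struct.pack(
        "!HHBBH6s4s6s4s",
        1,            # hardware type: Ethernet
        0x0800,       # protocol type: IPv4
        6,            # hardware address length
        4,            # protocol address length
        1,            # opcode: request
        sender_mac,   # sender MAC
        ip_bytes,     # sender IP
        b"\x00" * 6,  # target MAC: left as zeros here
        ip_bytes,     # target IP: identical to the sender IP
    )
    return ethernet + arp
```

The telltale sign of a gratuitous ARP is visible in the last two address fields: the sender is asking the whole segment about its own IP address.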
For example, if there is an ARP reply to the request, it means there exists an IP conflict. When a system first boots up, it will often send a gratuitous ARP to indicate it is "up" and available. And finally, it is used as the basis for load balancing failover. To ensure availability of load balancing services, two load balancers will share an IP address (often referred to as a floating IP). Upstream devices recognize the "primary" device by means of a simple ARP entry associating the floating IP with the active device. If the active device fails, the secondary immediately notices (due to heartbeat monitoring between the two) and will send out a gratuitous ARP indicating it is now associated with the IP address and won't the rest of the network please send subsequent traffic to it rather than the failed primary. VRRP and HSRP may also use gratuitous ARP to implement router failover.

Most cloud environments do not allow broadcast traffic of this nature. After all, it's practically guaranteed that you are sharing a network segment with other tenants, and thus broadcasting traffic could certainly disrupt other tenants' traffic. Additionally, as security minded folks will be eager to remind us, it is fairly well-established that the default for accepting gratuitous ARPs on the network should be "don't do it".

The astute observer will realize the reason for this: there is no security, no ability to verify, no authentication, nothing. A network element configured to accept gratuitous ARPs does so at the risk of being tricked into trusting, explicitly, every gratuitous ARP – even those that may be attempting to fool the network into believing it is a device it is not supposed to be. That, in essence, is ARP poisoning, and it's one of the security risks associated with the use of gratuitous ARP.
Granted, someone needs to be physically on the network to pull this off, but in a cloud environment that's not nearly as difficult as it might be on a locked down corporate network. Gratuitous ARP can further be used to execute denial of service, man in the middle and MAC flooding attacks. None of which have particularly pleasant outcomes, especially in a cloud environment where such attacks would be against shared infrastructure, potentially impacting many tenants. Thus cloud providers are understandably leery about allowing network elements to willy-nilly announce their own IP addresses.

That said, most enterprise-class network elements have implemented protections against these attacks precisely because of the reliance on gratuitous ARP for various infrastructure services. Most of these protections use a technique that will tentatively accept a gratuitous ARP, but not enter it in the ARP cache unless it has a valid IP-to-MAC mapping, as defined by the device configuration. Validation can take the form of matching against DHCP-assigned addresses or existence in a trusted database. Obviously these techniques would put an undue burden on a cloud provider's network given that any IP address on a network segment might be assigned to a very large set of MAC addresses.

Simply put, gratuitous ARP is not cloud-friendly, and thus you will be hard pressed to find a cloud provider that supports it.

What does that mean? That means, ultimately, that failover mechanisms in the cloud cannot be based on traditional techniques unless a means to replicate gratuitous ARP functionality without its negative implications can be designed. Which means, unfortunately, that traditional failover architectures – even using enterprise-class load balancers in cloud environments – cannot really be implemented today.
What that means for IT preparing to migrate business critical applications and services to cloud environments is a careful review of their requirements and of the cloud environment's capabilities to determine whether availability and uptime goals can – or cannot – be met using a combination of cloud and traditional load balancing services.

IP::addr and IPv6

Did you know that all addresses internal to tmm are kept in IPv6 format? If you’ve written external monitors, I’m guessing you knew this. In the external monitors, for IPv4 networks the IPv6 “header” is removed with the line:

    IP=`echo $1 | sed 's/::ffff://'`

IPv4 addresses are stored in what’s called “IPv4-mapped” format. An IPv4-mapped address has its first 80 bits set to zero and the next 16 set to one, followed by the 32 bits of the IPv4 address. The prefix looks like this:

    0000:0000:0000:0000:0000:ffff:

(abbreviated as ::ffff:, which looks strikingly similar (ok, identical) to the pattern stripped above)

Notation of the IPv4 section of the IPv4-mapped address varies between implementations, from ::ffff:192.168.1.1 to ::ffff:c0a8:c8c8, but only the latter notation (in hex) is supported. If you need the decimal version, you can extract it like so:

    % puts $x
    ::ffff:c0a8:c8c8
    % if { [string range $x 0 6] == "::ffff:" } {
        scan [string range $x 7 end] "%2x%2x:%2x%2x" ip1 ip2 ip3 ip4
        set ipv4addr "$ip1.$ip2.$ip3.$ip4"
    }
    192.168.200.200

Address Comparisons

The text format is not what controls whether the IP::addr command (or the class command) does an IPv4 or IPv6 comparison. Whether or not the IP address is IPv4-mapped is what controls the comparison. The text format merely controls how the text is then translated into the internal IPv6 format (i.e., whether it becomes an IPv4-mapped address or not). Normally, this is not an issue. However, if you are trying to compare an IPv6 address against an IPv4 address, then you really need to understand this mapping business. Also, it is not recommended to use 0.0.0.0/0.0.0.0 for testing whether something is IPv4 versus IPv6, as that is not really a valid IP address. Using the 0.0.0.0 mask (technically the same as /0) is a loophole, and ultimately what you are doing is loading the equivalent form of an IPv4-mapped mask.
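If you want to poke at the mapping outside of a BIG-IP, Python's standard `ipaddress` module understands the same IPv4-mapped format. The sketch below is just a convenient way to experiment with the idea, and the helper name `to_dotted_quad` is made up for this example. It has nothing to do with how tmm or IP::addr are implemented.

```python
import ipaddress

# The IPv4-mapped range: 80 zero bits, 16 one bits, then the IPv4 address.
IPV4_MAPPED = ipaddress.ip_network("::ffff:0:0/96")

def to_dotted_quad(text: str):
    """Return the dotted-decimal form of an IPv4-mapped address, else None."""
    addr = ipaddress.IPv6Address(text)
    if addr in IPV4_MAPPED:
        return str(addr.ipv4_mapped)
    return None

print(to_dotted_quad("::ffff:c0a8:c8c8"))  # 192.168.200.200
print(to_dotted_quad("2001:db8::1"))       # None
```

Checking membership in the /96 network is the same test as comparing against ::ffff:0000:0000/96: only the first 96 bits matter.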
To test whether an address is IPv4-mapped, use the following instead:

    if { [IP::addr $IP1 equals ::ffff:0000:0000/96] } {
        log local0. "Yep, that's an IPv4 address"
    }

These notes are covered in the IP::addr wiki entry. Any updates to the command and/or supporting notes will exist there, so keep the links handy.

Fun with iRules: Haiku Error Responses

One of our stellar sales engineers, Rob Eberhardt, whipped up a fun iRule after one of his customers showed him some HTTP 404 errors returning haiku in BeOS. The class and iRule are below, followed by the result. Enjoy!

The Class

Stored as an external class in /var/class/haiku_int.class (integer type).

    1 := "The web site you seek Lies beyond our perception But others await.",
    2 := "Sites you are seeking From your path they are fleeing Their winter has come.",
    3 := "A truth found, be told You are far from the fold, Go Come back yet again.",
    4 := "Wind catches lily Scatt'ring petals to the wind: Your site is not found.",
    5 := "These three are certain: Death, taxes, and site not found. You, victim of one.",
    6 := "Ephemeral site. I am the Blue Screen of Death. No one hears your screams.",

This continues on through 38, but the remaining ones have been removed for brevity. Now the class reference:

    class haiku_class {
        type value
        filename "/var/class/haiku_int.class"
        separator ":="
    }

The iRule

    when RULE_INIT {
        # Page header; the haiku is appended to the end of this markup.
        set static::error_404 {
            <html>
            <body bgcolor="#E7E7E7">
            <div class=Section1>
            <p class=MsoNormal align=center>
            <table width=500 height=258>
            <tr><td style='background:#0078AD'>
            <span style='font-family:"Arial","sans-serif"; color: white; font-size: large'>
            Error 404: File Not Found</span></td></tr>
            <tr><td style='background:white'>
            <span style='font-family:"Arial","sans-serif"'>
        }
    }
    when HTTP_RESPONSE {
        if { [HTTP::status] == 404 } {
            # Class keys run from 1 to 38, so shift the random index up by one.
            set randomnumber [expr { int(38 * rand()) + 1 }]
            set haiku [class match -value $randomnumber equals haiku_class]
            set response [concat $static::error_404 $haiku]
            HTTP::respond 200 content [subst $response]
        }
    }

The Result

Here’s a couple of snapshots of the browser (I commented out the if clause to generate the error on every request).

BIG-IP Configuration Conversion Scripts

Kirk Bauer, John Alam, and Pete White created a handful of perl and/or python scripts aimed at easing your migration from some of the “other guys” to BIG-IP. While they aren’t going to map every nook and cranny of the configurations to a BIG-IP feature, they will get you well along the way, taking out as much of the human error element as possible. Links to the codeshare articles are below.

- Cisco ACE (perl)
- Cisco ACE via tmsh (perl)
- Cisco ACE (python)
- Cisco CSS (perl)
- Cisco CSS via tmsh (perl)
- Cisco CSM (perl)
- Citrix Netscaler (perl)
- Radware via tmsh (perl)
- Radware (python)

The Full-Proxy Data Center Architecture

Why a full-proxy architecture is important to both infrastructure and data centers.

In the early days of load balancing and application delivery there was a lot of confusion about proxy-based architectures and in particular the definition of a full-proxy architecture. Understanding what a full-proxy is will be increasingly important as we continue to re-architect the data center to support a more mobile, virtualized infrastructure in the quest to realize IT as a Service.

THE FULL-PROXY PLATFORM

The reason there is a distinction made between “proxy” and “full-proxy” stems from the handling of connections as they flow through the device. All proxies sit between two entities – in the Internet age almost always “client” and “server” – and mediate connections. While all full-proxies are proxies, the converse is not true. Not all proxies are full-proxies and it is this distinction that needs to be made when making decisions that will impact the data center architecture.

A full-proxy maintains two separate session tables – one on the client-side, one on the server-side. There is effectively an “air gap” isolation layer between the two internal to the proxy, one that enables focused profiles to be applied specifically to address issues peculiar to each “side” of the proxy. Clients often experience higher latency because of lower bandwidth connections while the servers are generally low latency because they’re connected via a high-speed LAN. The optimizations and acceleration techniques used on the client side are far different than those on the LAN side because the issues that give rise to performance and availability challenges are vastly different.

A full-proxy, with separate connection handling on either side of the “air gap”, can address these challenges. A proxy, which may be a full-proxy but more often than not simply uses a buffer-and-stitch methodology to perform connection management, cannot optimally do so.
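As a rough mental model only, the two session tables and their per-side profiles can be pictured with the toy Python below. It moves no real traffic. The class names, the profile fields and the `transform` hook are all invented for illustration, and the sketch says nothing about how any actual proxy is built.

```python
from dataclasses import dataclass, field

@dataclass(frozen=True)
class Profile:
    """Per-side connection tuning; the fields are illustrative only."""
    name: str
    nagle_enabled: bool
    idle_timeout: int  # seconds

@dataclass
class FullProxy:
    client_profile: Profile
    server_profile: Profile
    client_sessions: dict = field(default_factory=dict)
    server_sessions: dict = field(default_factory=dict)

    def accept(self, client, server):
        """Track the client-side and server-side connections separately."""
        self.client_sessions[client] = {"profile": self.client_profile}
        self.server_sessions[client] = {
            "profile": self.server_profile,
            "peer": server,
        }

    def relay(self, client, payload, transform=None):
        """Hand a payload across the gap, optionally changing it on the way."""
        if client not in self.client_sessions:
            raise KeyError(f"no client-side session for {client!r}")
        if transform is not None:
            payload = transform(payload)
        return self.server_sessions[client]["peer"], payload
```

The point of the model is simply that each side has its own table and its own profile, so tuning one side never forces a compromise on the other.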
A typical proxy buffers a connection, often through the TCP handshake process and potentially into the first few packets of application data, but then “stitches” a connection to a given server on the back-end using either layer 4 or layer 7 data, perhaps both. The connection is a single flow from end-to-end and must choose which characteristics of the connection to focus on – client or server – because it cannot simultaneously optimize for both.

The second advantage of a full-proxy is its ability to perform more tasks on the data being exchanged over the connection as it is flowing through the component. Because specific action must be taken to “match up” the connection as it’s flowing through the full-proxy, the component can inspect, manipulate, and otherwise modify the data before sending it on its way on the server-side. This is what enables termination of SSL, enforcement of security policies, and performance-related services to be applied on a per-client, per-application basis.

This capability translates to broader usage in data center architecture by enabling the implementation of an application delivery tier in which operational risk can be addressed through the enforcement of various policies. In effect, we’ve created a full-proxy data center architecture in which the application delivery tier as a whole serves as the “full proxy” that mediates between the clients and the applications.

THE FULL-PROXY DATA CENTER ARCHITECTURE

A full-proxy data center architecture installs a digital “air gap” between the client and applications by serving as the aggregation (and conversely disaggregation) point for services. Because all communication is funneled through virtualized applications and services at the application delivery tier, it serves as a strategic point of control at which delivery policies addressing operational risk (performance, availability, security) can be enforced.
A full-proxy data center architecture further has the advantage of isolating end-users from the volatility inherent in highly virtualized and dynamic environments such as cloud computing. It enables solutions that overcome limitations of virtualization technology, such as the pod-architecture constraints encountered in VMware View deployments. Traditional access management technologies, for example, are tightly coupled to host names and IP addresses. In a highly virtualized or cloud computing environment, this constraint may spell disaster for either performance or ability to function, or both. By implementing access management in the application delivery tier – on a full-proxy device – volatility is managed through virtualization of the resources, allowing the application delivery controller to worry about details such as IP address and VLAN segments, freeing the access management solution to concern itself with determining whether this user on this device from that location is allowed to access a given resource.

Basically, we’ve taken the concept of a full-proxy and expanded it outward to the architecture. Inserting an “application delivery tier” allows for an agile, flexible architecture more supportive of the rapid changes today’s IT organizations must deal with.

Such a tier also provides an effective means to combat modern attacks. Because of its ability to isolate applications, services, and even infrastructure resources, an application delivery tier improves an organization’s capability to withstand the onslaught of a concerted DDoS attack. The magnitude of difference between the connection capacity of an application delivery controller and most infrastructure (and all servers) gives the entire architecture a higher resiliency in the face of overwhelming connections.
This ensures better availability and, when coupled with virtual infrastructure that can scale on-demand when necessary, can also maintain performance levels required by business concerns.

A full-proxy data center architecture is an invaluable asset to IT organizations in meeting the challenges of volatility both inside and outside the data center.

DevCentral Announces Inaugural MVP Class

DevCentral as a community relies upon the talents and contributions of its users to help peers and those who are new to F5 products and technologies. Without users who are willing to take a moment from their busy day and help resolve the problems of complete strangers, DevCentral would be far less community, resembling more of a corporate news site. Due in large part to the contributions of a select few, the community continues to flourish. They are in the trenches facing challenges daily, and it is their expertise the community craves. Without their help, some of our members might still be struggling to get the most out of their F5 gear, or more likely, the core DevCentral members would be working much longer hours as we attempt to assist our ever-growing user base.

We recognize the time and effort put into the DevCentral community. To that end, we have created the DevCentral MVP program to honor those who, without incentive, contribute to the greater good of our community.

The 2010 DevCentral MVP Class (by username)

- hoolio: I have to quote Drago from Rocky 4 here: "He is not human, he is a piece of iron." Mr. forums has more posts than Joe, Colin, and me--combined.
- bhattman: 2009 iRules contest winner and ever-present in the forums and wiki.
- hamish: Contributor in the iControl and monitoring/management forums. Contributed several slick templates for the F5 host template.
- hwidjaja: Perl nut, which excites Colin. Active in several forums.
- smp: He's gotta change his username. I type snmp every time. Really--every time. Also an active contributor in several of the forums.
- naladar: Not only a member of our community, but carries the F5 love out to the world with his own TheF5Guy blog. Interview guest on podcast 107.
- mikejo: Unashamed Firepass specialist. Active contributor in said forum.

If you want to hear more about the MVPs, podcast 117 was a dedicated highlight show. Also, make sure to check out the MVP profile pages.
MVPs – we salute and thank you, and we know the community at large thanks you as well!

A read/write MySQL proxy for BIG-IP

Greetings everyone! It has been a very long time since I have blogged here, but this post should be worth the wait. It is with great excitement that I announce the availability of the MySQL Proxy iRule. This is a project I have been working on, on and off, for quite a while. I would have loved to have given this solution to the MySQL and iRule communities a long time ago, but the nature of the MySQL Protocol threw me a curveball.

MySQL Protocol Funny-business

Skip this part if you don't want to know the nitty-gritty protocol details. MySQL is a banner-based protocol, meaning that when a client connects, the server talks first. Unlike some other banner-based protocols (like SMTP), the server does not tolerate any chatter from the client prior to delivering its WELCOME message. As a result, if you want to proxy the protocol, you also have to be a silent client during that initial connect. The nature of BIG-IP does not allow this. At its most basic level, BIG-IP is still a full Layer 7 proxy and its job is to do just that: take a connection, flow, stream, whatever; make an intelligent decision as to where it should go, optionally make some changes, and send it on its way; not connect to a backend server without sending a payload.

To support more and varied protocols going forward, including banner-based ones, a new iRule command was added in version 11.1. This command, LB::connect, instructs the server-side of the proxy to establish a connection (provided a load-balancing decision has been made), but to wait until we are ready to send the payload. As a result, I was able to use this functionality to work with MySQL's banner-based protocol.

What does the MySQL Proxy iRule do?

The MySQL Proxy iRule implements a full Layer 7 MySQL proxy. In short, using BIG-IP's dual TCP/IP stack, it pretends to be a MySQL server to the client on the frontend and pretends to be a MySQL client to the MySQL server on the backend.
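To give a feel for what "the server talks first" means on the wire, here is a small Python sketch that picks apart the start of a server greeting. It is not taken from the iRule. The function name is made up, only the first few fields of the greeting are read, and any bytes you feed it in testing would be synthetic rather than captured from a real server.

```python
def parse_server_greeting(packet: bytes):
    """Parse the start of a MySQL initial handshake (greeting) packet."""
    if len(packet) < 6:
        raise ValueError("packet too short to be a greeting")
    # Every MySQL packet starts with a 3-byte little-endian payload length
    # followed by a 1-byte sequence number.
    payload_len = int.from_bytes(packet[0:3], "little")
    sequence = packet[3]
    payload = packet[4:4 + payload_len]
    # The greeting payload opens with a protocol version byte and a
    # NUL-terminated server version string.
    protocol_version = payload[0]
    end = payload.index(b"\x00", 1)
    server_version = payload[1:end].decode("ascii")
    return sequence, protocol_version, server_version
```

A proxy sitting in the middle has to wait for a packet like this from the backend before it has anything meaningful to relay to the client, which is exactly why staying silent during the initial connect matters.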
If a user sends a read-only query (such as SELECT * from data), the proxy will direct that query to your pool of MySQL worker servers. However, if the user sends a write query (such as one that uses INSERT, UPDATE, or DELETE), the proxy will direct that query to your MySQL controlling server. It will also keep subsequent read-only queries directed to the controlling server for a limited time to allow replication to your MySQL worker servers to complete.

Where to get the MySQL Proxy iRule / iApp?

The MySQL Proxy is available both as a stand-alone iRule and as an iApp. The iApp version allows simple creation and management of your MySQL Proxy, while the iRule allows you to make changes as you see fit. Note that the iRule version requires quite a bit of manual configuration. Both the iRule and the iApp require BIG-IP version 11.1.0 or later.

- MySQL Proxy iRule
- MySQL Proxy iApp

20 Lines or Less #1

Yesterday I got an idea for what I think will be a cool new series that I wanted to bring to the community via my blog. I call it "20 lines or less". My thought is to pose a simple question: "What can you do via an iRule in 20 lines or less?". Each week I'll find some cool examples of iRules doing fun things in less than 21 lines of code, not counting white spaces or comments, round them up, and post them here. Not only will this give the community lots of cool examples of what iRules can do with relative ease, but I'm hoping it will continue to show just how flexible and light-weight this technology is - not to mention just plain cool.

I invite you to follow along, learn what you can and please, if you have suggestions, contributions, or feedback of any kind, don't hesitate to comment, email, IM, whatever. You know how to get a hold of me...please do. ;) I'd love to have a member contributed version of this once a month or quarter or ... whatever if you guys start feeding me your cool, short iRules.

Ok, so without further ado, here we go. The inaugural edition of 20 Lines or Less. For this first edition I wanted to highlight some of the things that have already been contributed by the awesome community here at DevCentral. So I pulled up the Code Share and started reading. I was quite happy to see that I couldn't even get halfway through the list of awesome iRule contributions before I found 5 entries that were neat, and under 20 lines (these are actually almost all under 10 lines of code - wow!). Kudos to the contributors. I'll grab another bunch next week to keep highlighting what we've got already!

Cipher Strength Pool Selection

Ever want to check the type of encryption your users are using before allowing them into your most secure content? Here's your solution.

    when HTTP_REQUEST {
        log local0. "[IP::remote_addr]: SSL cipher strength is [SSL::cipher bits]"
        if { [SSL::cipher bits] < 128 } {
            pool weak_encryption_pool
        } else {
            pool strong_encryption_pool
        }
    }

Clone Pool Based On URI

Need to clone some of your traffic to a second pool, based on the incoming URI? Here you go...

    when HTTP_REQUEST {
        if { [HTTP::uri] starts_with "/clone_me" } {
            pool real_pool
            clone pool clone_pool
        } else {
            pool real_pool
        }
    }

Cache No POST

Have you been looking for a way to avoid sending those POST responses to your RAMCache module? You're welcome.

    when HTTP_REQUEST {
        if { [HTTP::method] equals "POST" } {
            CACHE::disable
        } else {
            CACHE::enable
        }
    }

Access Control Based on IP

Here's a great example of blocking unwelcome IP addresses from accessing your network and only allowing those Client-IPs that you have deemed trusted.

    when CLIENT_ACCEPTED {
        if { [matchclass [IP::client_addr] equals $::trustedAddresses] } {
            #Uncomment the line below to turn on logging.
            #log local0. "Valid client IP: [IP::client_addr] - forwarding traffic"
            forward
        } else {
            #Uncomment the line below to turn on logging.
            #log local0. "Invalid client IP: [IP::client_addr] - discarding"
            discard
        }
    }

Content Type Tracking

If you're looking to keep track of the different types of content you're serving, this iRule can help in a big way.

    # First, create statistics profile named "ContentType" with following entries:
    #   HTML
    #   Images
    #   Scripts
    #   Documents
    #   Stylesheets
    #   Other
    # Now associate this Statistics Profile to the virtual server. Then apply the following iRule.
    # To view the results, go to Statistics -> Profiles - Statistics
    when HTTP_RESPONSE {
        switch -glob [HTTP::header "Content-type"] {
            image/* { STATS::incr "ContentType" "Images" }
            text/html { STATS::incr "ContentType" "HTML" }
            text/css { STATS::incr "ContentType" "Stylesheets" }
            *javascript { STATS::incr "ContentType" "Scripts" }
            text/vbscript { STATS::incr "ContentType" "Scripts" }
            application/pdf { STATS::incr "ContentType" "Documents" }
            application/msword { STATS::incr "ContentType" "Documents" }
            application/*powerpoint { STATS::incr "ContentType" "Documents" }
            application/*excel { STATS::incr "ContentType" "Documents" }
            default { STATS::incr "ContentType" "Other" }
        }
    }

There you have it, the first edition of "20 Lines or Less"! I hope you enjoyed it...I sure did. If you've got feedback or examples to be featured in future editions, let me know.

#Colin