SANS 20 Critical Security Controls

A couple of days ago, the SANS Institute announced the release of a major update (Version 3.0) to the 20 Critical Controls, a prioritized baseline of information security measures designed to provide continuous monitoring to better protect government and commercial computers and networks from cyber attacks. The information security threat landscape is always changing, especially this year with its well-publicized breaches. The controls have been tested and provide an effective defense against cyber attacks. They focus on the critical technical areas that can help an organization prioritize efforts to protect against the most common and dangerous attacks. Automating security controls is another key area, helping an organization gauge and improve its security posture. The update takes into account information gleaned from law enforcement agencies, forensics experts, and penetration testers who have analyzed the various methods of attack, and SANS outlines the controls that would have prevented those attacks from succeeding.

Version 3.0 was developed to take the control framework to the next level. SANS has realigned the 20 controls and their associated sub-controls based on the current technology and threat environment, including new threat vectors. Sub-controls have been added to assist with rapid detection and prevention of attacks. The 20 Controls have been aligned to the NSA's Associated Manageable Network Plan Revision 2.0 Milestones. SANS has also added definitions, guidelines, and proposed scoring criteria to evaluate tools for their ability to satisfy the requirements of each of the 20 Controls. Lastly, SANS has mapped the findings of the Australian Government Department of Defence, which produced the Top 35 Key Mitigation Strategies, to the 20 Controls, providing measures to help reduce the impact of attacks.
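The update stresses automating security controls. The first control listed below is the inventory of authorized and unauthorized devices. Below is a minimal Python sketch of what automating it might look like: reconcile the devices discovered on the network against an authorized inventory. This is an illustration under stated assumptions, not anything SANS prescribes. The MAC addresses, the `AUTHORIZED` table, and the function names are hypothetical. A real deployment would feed the inventory from asset-management tools and the scan from discovery sources such as DHCP or ARP data.

```python
from __future__ import annotations

from dataclasses import dataclass

# Hypothetical authorized-device inventory, keyed by MAC address.
# In practice this would come from an asset-management database.
AUTHORIZED = {
    "00:1a:2b:3c:4d:5e": "finance-laptop-01",
    "00:1a:2b:3c:4d:5f": "web-server-01",
}


@dataclass
class InventoryReport:
    unauthorized: set[str]  # seen on the network but not in the inventory
    missing: set[str]       # in the inventory but not seen in this scan


def normalize_mac(mac: str) -> str:
    """Lower-case and use colons so '00-1A-...' and '00:1a:...' compare equal."""
    return mac.strip().lower().replace("-", ":")


def reconcile(discovered: list[str], authorized: dict[str, str]) -> InventoryReport:
    """Compare MACs found by a network scan against the authorized inventory."""
    seen = {normalize_mac(m) for m in discovered}
    known = {normalize_mac(m) for m in authorized}
    return InventoryReport(unauthorized=seen - known, missing=known - seen)


if __name__ == "__main__":
    # Hypothetical scan results, e.g. gathered from DHCP leases or ARP tables.
    scan = ["00-1A-2B-3C-4D-5E", "de:ad:be:ef:00:01"]
    report = reconcile(scan, AUTHORIZED)
    print(sorted(report.unauthorized))  # ['de:ad:be:ef:00:01']
    print(sorted(report.missing))       # ['00:1a:2b:3c:4d:5f']
```

Run on a schedule, a report like this gives the kind of continuous, automated view of the device inventory that the update emphasizes. Anything in `unauthorized` is a candidate for investigation or quarantine.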
The 20 Critical Security Controls are:

1. Inventory of Authorized and Unauthorized Devices
2. Inventory of Authorized and Unauthorized Software
3. Secure Configurations for Hardware and Software on Laptops, Workstations, and Servers
4. Secure Configurations for Network Devices such as Firewalls, Routers, and Switches
5. Boundary Defense
6. Maintenance, Monitoring, and Analysis of Security Audit Logs
7. Application Software Security
8. Controlled Use of Administrative Privileges
9. Controlled Access Based on the Need to Know
10. Continuous Vulnerability Assessment and Remediation
11. Account Monitoring and Control
12. Malware Defenses
13. Limitation and Control of Network Ports, Protocols, and Services
14. Wireless Device Control
15. Data Loss Prevention
16. Secure Network Engineering
17. Penetration Tests and Red Team Exercises
18. Incident Response Capability
19. Data Recovery Capability
20. Security Skills Assessment and Appropriate Training to Fill Gaps

And of course, F5 has solutions that can help with most, if not all, of the 20 Critical Controls.

ps

Resources:
SANS 20 Critical Controls
Top 35 Mitigation Strategies: DSD Defence Signals Directorate
NSA Manageable Network Plan (pdf)
Internet Storm Center
Google Report: How Web Attackers Evade Malware Detection
F5 Security Solutions

IPS or WAF Dilemma

As they endeavor to secure their systems from malicious intrusion attempts, many companies face the same decision: whether to use a web application firewall (WAF) or an intrusion detection or prevention system (IDS/IPS). But the notion that only one or the other is the solution is faulty. Attacks occur at different layers of the OSI model, and they often penetrate multiple layers of either the stack or the actual system infrastructure. Attacks are also evolving: what once was only a network layer attack has shifted into a multi-layer network and application attack. For example, malicious intruders may start with a network-based attack, like denial of service (DoS), and once that takes hold, quickly launch another wave of attacks targeted at layer 7 (the application). Ultimately, this should not be an either/or discussion. Sound security means providing the best security not only at one layer, but at all layers. Otherwise organizations have a closed gate with no fence around it.

Often, IDS and IPS devices are deployed as perimeter defense mechanisms, with an IPS placed inline to monitor network traffic as packets pass through. The IPS tries to match data in the packets to data in a signature database, and it may look for anomalies in the traffic. IPSs can also take action based on what they have detected, for instance by blocking or stopping the traffic. IPSs are designed to block the types of traffic that they identify as threatening, but they do not understand web application protocol logic and cannot determine whether a web application request is normal or malicious. So if the IPS does not have a signature for a new attack type, it could let that attack through without detection or prevention. With millions of websites and innumerable exploitable vulnerabilities available to attackers, IPSs fall short when web application protection is required. They may also generate false positives, which can delay response to actual attacks.
And actual attacks might be accepted as normal traffic if they happen frequently enough, since an analyst may not be able to review every anomaly.

WAFs have matured greatly since the early days. They can create a highly customized security policy for a specific web application. WAFs can not only reference signature databases but also combine rules that describe what good traffic should look like with generic attack signatures, giving web application firewalls the strongest mitigation possible. WAFs are designed to protect web applications and block the majority of the most common and dangerous web application attacks. They can be deployed inline as a proxy or bridge, out of band on a mirror port, or even on the web server itself. From there they can audit traffic to and from the web servers and applications and analyze web application logic. They can also manipulate requests and responses and hide the TCP stack of the web server. Instead of matching traffic only against a signature or anomaly file, they watch the behavior of web requests and responses. IPSs and WAFs are similar in that they both analyze traffic, but WAFs can protect against web-based threats like SQL injection, session hijacking, XSS, parameter tampering, and other threats identified in the OWASP Top 10. Some WAFs may contain signatures to block well-known attacks, but they also understand the web application logic. In addition to protecting the web application from known attacks, WAFs can also detect and potentially prevent unknown attacks. For instance, a WAF may observe an unusually large amount of traffic coming from the web application. The WAF can flag it as unusual or unexpected traffic and block that data.

A signature-based IPS has very little understanding of the underlying application. It cannot protect URLs or parameters. It does not know if an attacker is web-scraping, and it cannot mask sensitive information like credit card numbers and Social Security numbers.
It could protect against specific SQL injections, but the traffic would have to match the signatures exactly to trigger a response, and it does not normalize or decode obfuscated traffic. One advantage of IPSs is that they can protect the most commonly used Internet protocols, such as DNS, SMTP, SSH, Telnet, and FTP.

The best security implementation will likely involve both an IPS and a WAF, but organizations should also consider which attack vectors are getting traction in the malicious hacking community. An IDS or IPS has only one solution to those problems: signatures. Signatures alone cannot protect against zero-day attacks, for example. That task requires a proactive policy covering URLs, parameters, and allowed methods, plus deep application knowledge. If a zero-day attack does occur, an IPS's signatures can't offer any protection. However, if a zero-day attack occurs that a WAF doesn't detect, it can still be virtually patched using F5's iRules until there's a permanent fix.

A security conversation should be about how to provide the best layered defense. Web application firewalls like BIG-IP ASM protect traffic at multiple levels, using several techniques and mechanisms. An IPS just reads the stream of data, hoping that traffic matches its one technique: signatures. Web application firewalls are unique in that they can detect and prevent attacks against a web application. They provide in-depth inspection of web traffic and can protect against many of the same vulnerabilities that IPSs look for. They are not designed, however, to purely inspect network traffic like an IPS. If an organization already has an IPS as part of its infrastructure, the ideal secure infrastructure would include a WAF to enhance the capabilities offered by the IPS. This is a best practice of layered defenses. The WAF provides yet another layer of protection within an organization's infrastructure and can protect against many attacks that would sail through an IPS.
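To make the normalization and positive-security points concrete, here is a minimal Python sketch. It is not how BIG-IP ASM or any particular IPS works internally. The signature regex, the `/account` URL, and the `POLICY` table are hypothetical. It first contrasts a signature match on the raw parameter value with the same match after URL decoding. It then adds a toy allowlist of URLs, methods, and parameter formats.

```python
from __future__ import annotations

import re
from urllib.parse import unquote_plus

# A deliberately naive "signature" for one classic SQL injection pattern.
SQLI_SIGNATURE = re.compile(r"'\s*or\s+1\s*=\s*1", re.IGNORECASE)


def normalize(raw_value: str, max_rounds: int = 3) -> str:
    """URL-decode repeatedly until the value stops changing (catches double encoding)."""
    value = raw_value
    for _ in range(max_rounds):
        decoded = unquote_plus(value)
        if decoded == value:
            break
        value = decoded
    return value


def raw_signature_match(raw_value: str) -> bool:
    """Signature check on the still-encoded value, with no normalization."""
    return SQLI_SIGNATURE.search(raw_value) is not None


def normalized_signature_match(raw_value: str) -> bool:
    """Same signature, applied after decoding."""
    return SQLI_SIGNATURE.search(normalize(raw_value)) is not None


# Positive security model: describe what good requests look like.
# Hypothetical policy for one URL; a real WAF policy covers far more
# (headers, cookies, file types, response checks, and so on).
POLICY = {
    "/account": {"methods": {"GET"}, "params": {"id": re.compile(r"\d{1,10}")}},
}


def allowed_by_policy(method: str, path: str, params: dict[str, str]) -> bool:
    """Allow only known URLs, allowed methods, and expected, well-formed parameters."""
    rule = POLICY.get(path)
    if rule is None or method not in rule["methods"]:
        return False  # unknown URL or disallowed method
    for name, raw in params.items():
        pattern = rule["params"].get(name)
        if pattern is None or not pattern.fullmatch(normalize(raw)):
            return False  # unexpected parameter or malformed value
    return True


if __name__ == "__main__":
    payload = "%27%20OR%201%3D1--"  # URL-encoded form of: ' OR 1=1--
    print(raw_signature_match(payload))         # False: encoding hides the pattern
    print(normalized_signature_match(payload))  # True
    print(allowed_by_policy("GET", "/account", {"id": "42"}))     # True
    print(allowed_by_policy("GET", "/account", {"id": payload}))  # False
```

The raw signature fires only on an exact textual match. The policy check, by contrast, rejects anything it was not told to expect, including attacks nobody has written a signature for yet.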
If an organization has neither, the WAF would provide the best application protection overall.

ps

Related:
3 reasons you need a WAF even if your code is (you think) secure
Web App Attacks Rise, Disclosed Bugs Decline
Next-Gen Firewalls Make Old Arguments New Again
Why Developers Should Demand Web App Firewalls
Too Dangerous to Enter?
Asian IT security study finds enterprises revising strategy to accommodate new IT trends
Protecting the navigation layer from cyber attacks
OWASP Top Ten Project
F5 Case Study: WhiteHat Security

Identify the Most Probable Threats to an Organization

Two weeks ago I was in the cinema watching the movie Moneyball. The movie tells the story of Oakland A's general manager Billy Beane's successful attempt to put together a baseball club. He chose the players and the game plan not on the collected wisdom of baseball insiders (including players, managers, coaches, scouts, and the front office) but on player statistics such as on-base percentage and slugging percentage. I'm not a big expert in the game of baseball, but in the security arena it is well known that statistics should also be part of decision making. One of the steps in an organization's risk assessment should be identifying the most probable threats to the organization's assets. This assessment should result in a score that quantifies those risks and becomes part of the decision-making process. No security suite will give us 100% protection, but we need to minimize the risk waiting at our doorway, and a scoring mechanism helps us make the right decisions.

In the last decade the Internet has become a phenomenon that has a significant effect on most people around the world. Most of us store our personal information on Facebook, buy our clothes on ecommerce sites, and manage our bank accounts from the web. The Internet is available to all, and the same power that makes it so useful is the source of the risks inherent in its very existence. Organizations that have opened a door to this platform need to understand the risks that are out there and make sure the proper controls are in place. According to Symantec's 2010 report: “A growing proliferation of Web-attack toolkits drove a 93% increase in the volume of Web-based attacks in 2010 over the volume observed in 2009. Shortened URLs appear to be playing a role here too.
During a three-month observation period in 2010, 65% of the malicious URLs observed on social networks were shortened URLs.”

According to the Sophos Security Threat Report 2012: “According to SophosLabs more than 30,000 websites are infected every day and 80% of those infected sites are legitimate.”

According to the McAfee Threats Report, Third Quarter 2011: “Last quarter McAfee Labs recorded an average of 7,300 new bad sites per day; in this period that figure dropped a bit to 6,500 sites, which is comparable to the same time last year. In August we saw an average of more than 3.5 sites rated ‘red’ each minute.”

When numbers and statistics are part of the decision-making process, we should take the figures above into consideration. They underline how quickly threats on the Internet are growing. This obligates us to choose the right controls for our organization and to make sure our security filters are suited to the job.

Where Do You Wear Your Malware?

The London Stock Exchange, Android phones and even the impenetrable Mac have all been malware targets recently. If you're connected to the internet, you are at risk. It is no surprise that the crooks will go after whatever device people are using to conduct their lives (mobile, for example). They are also still chasing that great financial heist….‘if we can just get this one big score, then we can hang up our botnets and retire!’ Perhaps Homer Simpson said it best, ‘Ooh, Mama! This is finally really happening. After years of disappointment with get-rich-quick schemes, I know I'm gonna get Rich with this scheme...and quick!’ Maybe we call this the Malware Mantra!

Malware has been around for a while and has changed and evolved over the years, and we seem to have accepted it as one of the landmines we face when navigating the internet. I would guess that we might not even think about malware until it has hit us….which is typical when it comes to things like this. Out of sight, out of mind. I don't think ‘absence makes the heart grow fonder’ works with malware. We certainly take measures to guard ourselves (anti-virus, firewalls, anti-spoof toolbars, etc.). These give us a feeling of protection, and we click away thinking that our sentinels will destroy anything that comes our way. Not always so.

It was reported that the London Stock Exchange was delivering malvertising to its visitors. The LSE site itself was not infected, but the pop-up ads from the site delivered some nice fake warnings saying the computer was infected and in danger. This is huge business for cybercriminals, since they insert their code with the third-party advertiser and never need to directly attack the main site. Many sites rely on third-party ads, so this is yet another area to be cautious of. One of the things that Web 2.0 brought was the ability to deliver or feed other sites with content.
If you use NoScript with Firefox on your favorite news site (or any major site for that matter), you can see the amazing amount of content coming from other sources. Sometimes 8-10 or more domains are listed as content generators, so be very careful about which ones you allow.

With the success of the Android platform, it also becomes a target. This particular mobile malware looks and acts like the actual app. The problem is that it also installs a backdoor on the phone and asks for additional permissions. Once installed, it can connect to a command server and receive instructions. These include sending text messages, adding URLs, directing a browser to a site, and installing additional software. The phone becomes part of a botnet. Depending on your contract, all these texts can add up, leading to a bill that looks like you just bought a car. In fact, Google has just removed 21 free apps from the Android Market, saying they were malware designed to get root access to the user's device. They were all masquerading as legitimate games and utilities. If you got one of these, it's highly recommended that you simply take your phone back to the carrier and swap it for a new one, since there's no way of telling what has been compromised. As malware continues to evolve, the mobile threat is not going away. This RSA2011 recap predicts mobile device management as the theme for RSA2012. And in related news, F5 recently released our Edge Portal application for the Android Market, malware free.

Up front, I'm not a Mac user. I like them, have used them plenty over the years, and am not opposed to getting one in the future. I've just owned Windows devices most of my life, probably because my dad was an IBM'r for 30 years. Late last week, stories started to appear about some beta malware targeting Macs. It is called BlackHole RAT. It is derived from a Windows family of trojans and rewritten to target the Mac.
It is spreading through torrent sites and seems to be a proof of concept of what potentially can be accomplished. Reports say that it can remotely control an infected machine, open web pages, display messages, and force reboots. There is some disagreement around the web as to the seriousness of the threat, but despite that, criminals are trying.

Once we all get our IPv6 chips installed in our earlobes and are able to take calls by pulling on our ear, a la Carol Burnett, I wonder when the first computer-to-human virus will be reported. The wondering is over: it has already happened.

ps

Resources:
London Stock Exchange site shows malicious adverts
When malware messes with the markets
Android an emerging target for cyber criminals
Google pulls 21 apps in Android malware scare
More Android mobile malware surfaces in third-party app repositories
Infected Android app runs up big texting bills
Ignoring mobile hype? Don't overlook growing mobile device threats
"BlackHole" malware, in beta, aims for Mac users
Mac Trojan uses Windows backdoor code
I'll Believe Mac malware is a problem when I see it
BlackHole RAT is Really No Big Deal
20 years of innovative Windows malware
Edge Portal application on Android Market

Application Security is a Stack

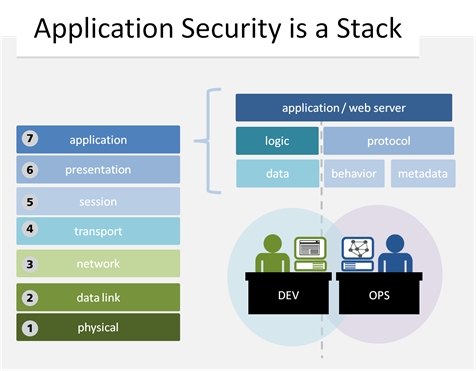

#infosec #web #devops There's the stuff you develop, and the stuff you don't. Both have to be secured.

On December 22, 1944, the German General von Lüttwitz sent an ultimatum to Gen. McAuliffe, whose forces (the Screaming Eagles, in case you were curious) were encircled in the city of Bastogne. McAuliffe's now famous reply was “Nuts!”, which so confounded the German general that it gave the 101st time to hold off the Germans until reinforcements arrived four days later. This little historical tidbit perfectly illustrates the issue with language, and how it can confuse two different groups of people who interpret even a simple word like “nuts” in different ways. In the case of information security, such a difference can have as profound an impact as McAuliffe's famous reply.

Application Security

It may have been noted by some that I am somewhat persnickety with respect to terminology. While of late this “word rage” has been focused (necessarily) on cloud and related topics, it is by no means constrained to that technology. In fact, when we look at application security we can see that the way in which groups in IT interpret the term “application” has an impact on how they view application security. When developers hear the term “application security” they of course focus on those pieces of an application over which they have control: the logic, the data, the “stuff” they developed. When operations hears the term “application security” they necessarily do (or should) view the term in a much broader sense. To operations the term “application” encompasses not only what developers tossed over the wall, but also its environment and the protocols being used. Operations must view application security in this light, because an increasing number of “application” layer attacks focus not on vulnerabilities that exist in code, but on manipulation of the protocol itself as well as the metadata that is inherently a part of the underlying protocol.
The result is that the “application layer” is really more of a stack than a singular entity, much in the same way the transport layer implies not just TCP but UDP as well, and all that goes along with both. Layer 7 comprises both the code tossed over the wall by developers and the operational components, which makes “application security” a much broader, and more difficult, term to interpret. Differences in interpretation are part of what causes a reluctance for dev and ops to cooperate. When operations starts talking about “application security” issues, developers hear what amounts to an attack on their coding skills and promptly ignore whatever ops has to say. Acknowledging that application security is a stack and not a single entity enables both dev and ops to cooperate on application layer security without egregiously (and unintentionally) offending one another.

Cooperation and Collaboration

But more than that, recognizing that “application” is really a stack ensures that a growing vector of attack does not go ignored. Protocol and metadata manipulation attacks are a dangerous source of DDoS and other disruptive attacks that can interrupt business and have a real impact on the bottom line. Developers do not necessarily have the means to address protocol or behavioral (and in many cases, metadata) based vulnerabilities. One reason is that application code is generally written within a framework that encapsulates (and abstracts away) the protocol stack. Another is that an individual application instance does not have the visibility into client-side behavior necessary to recognize many protocol-based attacks. Metadata-based attacks (such as those that manipulate HTTP headers) are also difficult if not impossible for developers to recognize. Even if developers could recognize them, addressing such attacks in code is not efficient from either a cost or a time perspective. But some protocol behavior-based attacks may be addressed by either group.
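One protocol behavior-based attack that either group can address is the slow HTTP POST. The client announces a request body and then trickles it in so slowly that it ties up server connections. Below is a minimal Python sketch, not any product's implementation, of the two configuration-style checks that blunt it: a cap on the request body size and a minimum transfer rate. The thresholds and the `UploadState` and `check_upload` names are hypothetical. In a real deployment the equivalent limits would normally live in the web server, proxy, or ADC configuration rather than in application code.

```python
from __future__ import annotations

from dataclasses import dataclass

# Hypothetical limits. Operations would set these from what developers say the
# application actually needs (largest legitimate upload, slowest real client).
MAX_BODY_BYTES = 2 * 1024 * 1024  # 2 MiB upload ceiling
MIN_BYTES_PER_SEC = 500           # sustained rate below this looks like a slow POST
GRACE_SECONDS = 10.0              # don't judge the rate before this much time has passed


@dataclass
class UploadState:
    declared_length: int  # value of the Content-Length header
    received: int = 0     # body bytes received so far
    elapsed: float = 0.0  # seconds since the body started arriving


def check_upload(state: UploadState) -> str:
    """Return 'ok', 'reject-too-large', or 'reject-too-slow' for an in-progress POST."""
    # Size limit: refuse if the client announces an oversized body,
    # and also if it sends more than the ceiling regardless of what it declared.
    if state.declared_length > MAX_BODY_BYTES or state.received > MAX_BODY_BYTES:
        return "reject-too-large"
    # Rate limit: a slow POST trickles the body to hold the connection open.
    if state.elapsed >= GRACE_SECONDS and state.received / state.elapsed < MIN_BYTES_PER_SEC:
        return "reject-too-slow"
    return "ok"


if __name__ == "__main__":
    print(check_upload(UploadState(declared_length=10 * 1024 * 1024)))                    # reject-too-large
    print(check_upload(UploadState(declared_length=1_000_000, received=600, elapsed=60)))  # reject-too-slow
    print(check_upload(UploadState(declared_length=50_000, received=50_000, elapsed=2)))   # ok
```

Neither threshold can be chosen well without knowing the application's largest legitimate upload and its slowest real clients. That knowledge usually sits with the developers.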
Limiting file-upload sizes, for example, can help to mitigate attacks such as slow HTTP POSTs. Configuration-based constraints on the amount of data that can be accepted reduce the overall impact of exploitation. But operations can't do that unless it understands the technical limitations (and needs) of the application, which is something that generally only the developers are going to know. Similarly, developers may learn about attacks and the constraints of mitigating solutions during the design and development phase of the application lifecycle. If they do, they may be able to work around those constraints or innovate other solutions that will ultimately make the application more secure. The language we use to communicate can help or hinder collaboration, and not just between IT and the business. Terminology differences within IT, such as those between development and operations, can affect how successfully security initiatives are implemented.

Custom Code for Targeted Attacks

Botnets? Old school. Spam? So yesterday. Phishing? Don't even bother…well, on second thought. Spaghetti hacking, like spaghetti marketing (toss it and see what sticks), is giving way to specific development of code (or theft of other code) to breach a particular entity. In the past few weeks, giants like Sony, Google, Citibank, Lockheed, and others have fallen victim to serious intrusions. The latest to be added to that list: the IMF, the International Monetary Fund. The IMF is an international, intergovernmental organization that oversees the global financial system. It was first created to help stabilize the global economic system. Today it oversees exchange rates and works to improve the economies of its 187 member countries, most of which are UN members.

In this latest intrusion, it has been reported that the breach might have been the result of ‘spear phishing,’ getting someone to click a malicious but valid-looking link to install malware. The malware, however, was apparently developed specifically for this attack. There was also a good amount of exploration prior to the attempt; call it spying. So once again, this breach resembles others where unsuspecting human involvement helped trigger the break, but this one seems to use purpose-built malware. As with any of these high-profile attacks, the techniques used to gain unauthorized access are slow to be divulged. Even so, insiders have said it was a significant breach, with emails and other documents taken in this heist. While a good portion of the recent attacks are digging for personal information, this certainly looks more like government espionage looking for sensitive information pertaining to nations. Without directly pointing, many are fingering groups backed by foreign governments in this latest encroachment.

A year (and longer) ago, most of these types of breaches would be kept under wraps for a while until someone leaked them. There was a hesitation to report them due to the media coverage and public scrutiny.
Now that many of these attacks are targeting large international organizations with very sophisticated methods, there seems to be a little more openness in exposing the invasion. Hopefully this can lead to more cooperation among different groups, organizations, and governments to help defend against these attacks. Exposing the exposure also informs the general public of the potential dangers, even though the attack might not be happening to them directly. An article, blog, or other story might help folks be a little more cautious with whatever they are doing online, even if it only stops someone from clicking an email/social media/IM/txt link. If so, hopefully fewer people will fall victim. Since we have Web 2.0 and Infrastructure 2.0, it might be time to adopt Hacking 2.0. The catch is that Noah Schiffman wrote about the misuse of all the two-dot-oh-ness, particularly Hacking 2.0, in an article 3 years ago. He mentions, ‘Security is a process,’ and I certainly agree. Plus I love, ‘If the term Hacking 2.0 is adopted, or even suggested, by anyone, their rights to free speech should be revoked.’ So how about Intrusion 2.0?

ps

Resources:
Inside The Terrifying IMF Hack: Who The Hackers Were And What They Took
IMF Hacked; No End in Sight to Security Horror Shows
Join the Club: International Monetary Fund Gets Hacked
IMF State-Backed Cyber-Attack Follows Hacks of Atomic Lab, G-20
IMF cyber attack boosts calls for global action
I.M.F. Reports Cyberattack Led to ‘Very Major Breach’
IMF Network Hit By Sophisticated Cyberattack
Where Do You Wear Your Malware?
The Big Attacks are Back…Not That They Ever Stopped
Technology Can Only Do So Much
3 Billion Malware Attacks and Counting
Unplug Everything!
And The Hits Keep Coming
Security Phreak: Web 2.0, Security 2.0 and Hacking 2.0
F5 Security Solutions

Is Your DNS Vulnerable?

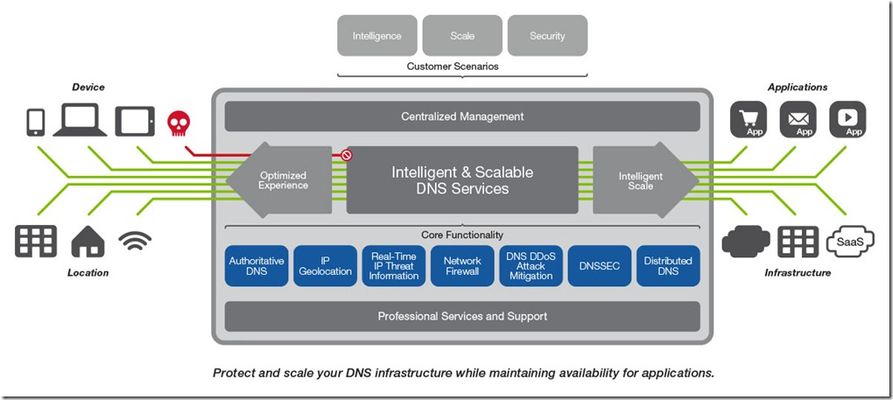

This article originally appeared on F5.com on 7.29.15. A recent report, the Infoblox DNS Threat Index (produced in conjunction with Internet Identity), shows that phishing attacks have raised the DNS threat level to a record high of 133 for the second quarter of 2015, up 58% from the same time last year. The biggest factor in the jump is the creation of malicious domains for phishing attacks. Malicious domains are all those very believable but fake sites that mimic real sites to get you to enter sensitive details. You get a phishing email, you click the link, and you are sent to a financial site that looks and operates just like your real bank's site. If you're fooled and enter your credentials or other personal information, you could be giving the bad guys direct access to your money. These sites can also pretend to be corporate portals to gather employee credentials for future attacks.

Along with the malicious domains, demand for exploit kits also helped propel the DNS threat. Exploit kits are those wonderful software packages that can run, hidden, on websites and load nasty controls and sniffers on your computer without you even knowing. The Infoblox DNS Threat Index has a baseline of 100, which is essentially the quarterly average over 2013 and 2014. In the first quarter of 2015, the threat index jumped to 122, and it gained another 11 points in Q2 2015 to hit the high mark. Phishing was up 74% in the second quarter, and Rod Rasmussen, CTO at IID, noted that they saw a lot of phishing domains put up in the second quarter. You'd think after all these years this old trick would die, but it is still very successful for criminals. With domain names costing less than $20 and available in minutes, it is a cheap investment for a potentially big score. DNS is what translates the names we type into a browser (or mobile app, etc.) into an IP address so that the resource can be found on the internet.
It is one of the most important components of a functioning internet and, as I've noted on several occasions, something you really do not think about until it isn't working...or is hacked. Second to HTTP, DNS is one of the most targeted protocols and is often the source of many attacks. This year alone, the St. Louis Federal Reserve suffered a DNS breach, and the DNS of Malaysia Airlines and Lenovo.com was hacked, to name a few. In addition, new exploits are surfacing that target vulnerable home network routers to divert people to fake websites, and DNS DDoS is always a favorite for riff-raff. Just yesterday 3 people were sent to prison in the DNS Changer case. With more insecure IoT devices coming online and relying on DNS for resolution, this could be the beginning of a wave of DNS-related incidents. But it doesn't have to be. DNS will become even more critical as additional IoT devices are connected and we want to find them by name. F5 DNS Solutions, especially DNSSEC solutions, can help you manage this rapid growth with complete solutions that increase speed, availability, scalability, and overall security and that intelligently manage global app traffic. At F5 we are so passionate about DNS hyperscale and security that we are now even more focused with our new BIG-IP DNS (formerly BIG-IP GTM) solution.

ps @psilvas

Related:
Phishing Attacks Drive Spike In DNS Threat
The growing threat of DDoS attacks on DNS
Infoblox DNS Threat Index Hits Record High in Second Quarter Due to Surge in Phishing Attacks
Infoblox DNS Threat Index
Eight Internet of Things Security Fails
Intelligent DNS Animated Whiteboard (Video)
CloudExpo 2014: The DNS of Things (Video)
DNS Doldrums

CSRF Prevention with F5's BIG-IP ASM v10.2

Watch how BIG-IP ASM v10.2 can prevent cross-site request forgery (CSRF). Shlomi Narkolayev demonstrates how to accomplish a CSRF attack and then shows how BIG-IP ASM stops it in its tracks. The configuration of CSRF protection is literally a checkbox.

Unplug Everything!

Just kidding…partially. Have you seen the latest 2011 Verizon Data Breach Investigations Report? It is chock full of data about breaches, vulnerabilities, industry demographics, threats and all the other internet security terms that make the headlines. It is an interesting view into cybercrime and, like last year, it also includes information and analysis from the US Secret Service, which arrested more than 1,200 cybercrime suspects in 2010. One very interesting note from the Executive Summary is that while the total number of records compromised has steadily gone down ('08: 361 million, '09: 144 million, '10: 4 million), the caseload for cybercrime is at an all-time high: from 141 breaches in 2009 to a whopping 760 in 2010. One reason may be that the criminals themselves are running the time-honored 'risk vs. reward' calculation when choosing their bounty. Hey, just like the security pros! Oh yeah…the crooks are pros too. Rather than going after the huge financial institutions in one fell swoop or mega-breach, they are attempting many more low-risk intrusions against restaurants, hotels and smaller retailers. Hospitality is back on the top of the list this year, followed by retail. Financial services round out the top three, but as noted, the criminals will always have their eye on our money. Riff-raff also focused more on grabbing intellectual property rather than credit card numbers.

The Highlights:
96% of breaches were avoidable through simple or intermediate controls, if only someone had decided to prevent them.
89% of breached companies are still not PCI compliant today, let alone when they were breached.
External attacks exploded in 2010 and now account for the vast majority of breaches (92%) and over 99% of the lost records.
83% of victims were targets of opportunity. Most attacks are opportunistic, with criminal rings relying on automation to discover susceptible systems for them.
Most breaches aren't discovered for weeks to months, and most breaches (86%) are discovered by third parties, not internal security teams.
Malware and 'hacking' are the top two threat actions by percentage of breaches (50% and 49% respectively) and also lead by percentage of records (89% and 79%).
Misuse, a strong contender last year, went down in 2010.
Within malware, sending data to an external source, installing backdoors and keylogger functions were the most common types, and all increased in 2010.
92% of the attacks were not that difficult.

You may ask, 'what about mobile devices?' since those are an often-touted avenue of data loss. The Data Breach Report says that data loss from mobile devices is rarely part of its caseload, since the investigators typically look into deliberate breaches and compromises rather than accidental data loss. Plus, they focus on confirmed incidents of data compromise. Another question might have to do with cloud computing breaches. Here they answer, 'No, not really,' to the question of whether the cloud factors into the breaches they investigate. They say that it is more about giving up control of the systems and the associated risk than about any cloud technology.

Now comes word that subscribers of Sony's PlayStation Network have had their personal information stolen. I wonder how this and the other high-profile attacks this year will alter the Data Breach Report next year. I've written about this type of exposure and felt it was only a matter of time before something like this occurred. Gamers are frantic about this latest intrusion, but if you are connected to the internet in any way, shape or form, there are risks involved. We used to joke years ago that the only way to be safe from attacks was to unplug the computers from the net. With the way things are going, the punch line is not so funny anymore.
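For a rough sense of what the Executive Summary numbers imply, here is a back-of-the-envelope calculation in Python. It is only a sketch: it uses the 2009 and 2010 figures quoted in this post (144 million and 4 million records; 141 and 760 breaches), the variable and function names are illustrative, and the per-breach average is a simple ratio computed here, not a statistic published in the report.

```python
# Back-of-the-envelope math using only the figures quoted in this post.
# Records compromised are in millions; breach counts are cases per year.
RECORDS_MILLIONS = {2009: 144, 2010: 4}
BREACHES = {2009: 141, 2010: 760}


def records_per_breach(year: int) -> float:
    """Average number of records compromised per breach (a simple ratio)."""
    return RECORDS_MILLIONS[year] * 1_000_000 / BREACHES[year]


# How many times larger the 2010 caseload was than the 2009 caseload.
growth = BREACHES[2010] / BREACHES[2009]

# Fractional decline in total records compromised from 2009 to 2010.
drop = 1 - RECORDS_MILLIONS[2010] / RECORDS_MILLIONS[2009]

print(f"Breaches grew {growth:.1f}x from 2009 to 2010")
print(f"Records compromised fell {drop:.1%}")
for year in (2009, 2010):
    print(f"{year}: ~{records_per_breach(year):,.0f} records per breach")
```

Run as-is, it prints a roughly 5.4x jump in breaches, a 97.2% drop in records, and an average of about 1,021,277 records per breach in 2009 versus about 5,263 in 2010, which lines up with the point above about criminals trading mega-breaches for many smaller, lower-risk intrusions.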
ps Resources:
2011 Verizon Data Breach Investigations Report
Verizon data breach report 2011: Hackers target more, smaller victims
Data Attacks Increase 81.5% in 2010
Verizon study: data breaches quintupled in 2010
Sony comes clean: Playstation Network user data was stolen
X marks the Games
Microsoft issues phishing alert for Xbox Live
Today's Target: Corporate Secrets
The Big Attacks are Back…Not That They Ever Stopped
Sony Playstation Network Security Breach: Credit Card Data At Risk
Breach Complicates Sony's Network Ambitions
Everything You Need to Know About Sony's PlayStation Network Fiasco

CloudFucius Tunes into Radio KCloud

Set the dial and rip it off: all the hits from the 70s, 80s, 90s and beyond. You're listening to the K-Cloud. We got The Puffy & Fluffy Show to get you going in the morning, Cumulus takes you through midday with lunchtime legion, Mist and Haze get you home with 5 o'clock funnies and drive-time traffic, while Vapor billows overnight for all you insomniacs. K-Cloud; Radio Everywhere. I came across this article, which discusses radio's analogue-to-digital transition and its slow but eventual move to cloud computing. It describes how 'embracing cloud computing requires a complete rethinking of the design, operation and planning of a station's data center.' According to Tom Vernon, a long-time contributor to Radio World, industries like utilities, technology, insurance, government and others are already using the cloud, while the broadcast community is just starting its exploration. Like many of you, I grew up listening to the radio (music, I'm not that old) and still have a bunch of hole-punched record albums for being the 94th caller. I listened to WHJY (94-HJY) in Providence and still remember the day in 1981 when it switched from JOY, a soft, classical station, to Album-Oriented Rock. Yes, I loved the hair metal, arena rock, new wave, pop and most of what they now call classic rock. It's weird remembering 'Emotional Rescue' and 'Love Rollercoaster' playing on the radio as Top 40 hits, and now they are considered 'classics.' Um, what am I then?!? That article prompted me to explore the industries that have not embraced the cloud, and why. Risk-averse industries immediately come to mind, like financial services and health care. There have been somewhat contradictory stories and surveys recently indicating both that these industries are hesitant to adopt the cloud and that they are ready to embrace it. A survey by LogLogic says that 60% of respondents in the financial services sector felt that cloud computing was not a priority or that they were risk-averse about cloud computing.
This is an industry that has historically been an early adopter of new technologies. The survey indicates that these firms will be spending IT dollars on 'essential' needs and that security questions and data governance concerns are what's holding them back from cloud adoption. About a week later, a survey by the Securities Industry and Financial Markets Association (SIFMA) and IBM reported that there is now strong interest in cloud computing after a couple of years of reluctance. The delay was due to the cost of implementing new technologies and the lack of talent needed to manage those systems. Security is not the barrier that it once was, since their cloud strategies now account for security ramifications. They better understand the security risks and factor them into their deployment models. This InformationWeek.com story says that the financial services industry is indeed interested in cloud computing, as long as it's a Private Cloud: the ones behind the corporate firewall, not Public floaters. It also says that security was not the real issue; regulations and compliance with international border laws were the real holdback. In the healthcare sector, yet another survey, this one from Accenture, found that 73% of respondents are planning cloud moves, while nearly one-third have already deployed cloud environments. This story also says that 'healthcare firms are beginning to realize that cloud providers actually may offer more robust security than is available in-house.' Is there a contradiction? Maybe. More than that, I think it shows natural human behavior and progression when facing fears. If we don't understand something and there is a significant risk involved, we'll generally say, 'no thanks' to preserve our safety and security. As the dilemma is better understood and some of the fears are either addressed or accounted for, the threat level is reduced and progress can be made.
This time around, while there are still concerns, we are more likely to give it a try since we know what to expect. A risk assessment exercise gives us the tools to manage the fears. Maybe the threat is high but the likelihood of it occurring is low, or the risk is medium but we now know how to handle it. It's almost like jumping out of a plane. If you've never done it, that first 3,000 ft tethered leap can be frightening: jumping at that height, hoping a huge piece of fabric will hold and glide you to a safe landing on the ground. But once you've been through training, practiced it a few times, understood how to deploy your backup 'chute and realized the odds are in your favor, it's not so daunting. This may be what's happening with risk-averse industries and cloud computing. Initially, the concerns, the lack of understanding, visibility, maturity, control and security mechanisms, and an overall fear kept these entities away, even with the lure of flexibility and potential cost savings. Now that there is a better understanding of what types of security solutions a cloud provider can and cannot offer, along with the knowledge of how to address specific security concerns, it's not so scary anymore. Incidentally, I had initially used KCLD and WCLD for my cloud stations until I realized that they were already taken by real radio stations out of Minnesota and Mississippi. And one from Confucius: Everything has its beauty but not everyone sees it.
ps The CloudFucius Series: Intro, 1, 2, 3, 4, 5, 6, 7, 8, 9, 10, 11, 12