F5 Distributed Cloud Services: API Security and Control

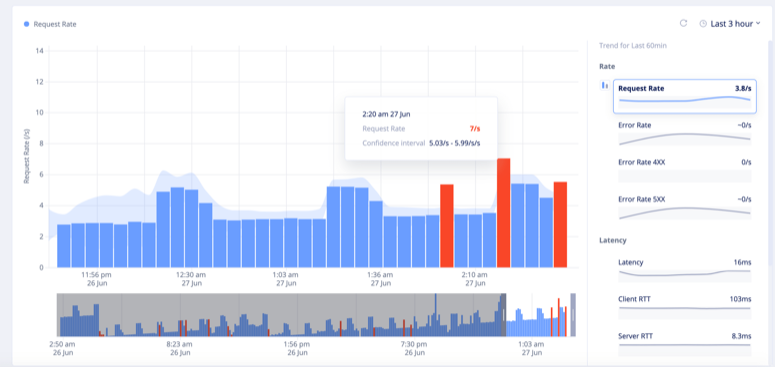

The previous article covered the API Discovery functionality of the F5 Distributed Cloud Services Web App and API Protection (XC WAAP) platform. This one gives an overview of the available API control features.

The XC WAAP platform provides a rich set of techniques to securely deliver applications and APIs. It goes beyond industry-standard features like WAF and DoS protection and introduces AI/ML technologies that significantly increase accuracy and decrease the number of false positives. The following paragraphs give an overview of the available technologies and provide links to get started.

Per API Request Analysis

As the name suggests, per-request analysis examines every single API call and extracts metrics from it, such as request/response size and latency. Based on the observed data, it builds a model that identifies a valid request structure for a particular API endpoint. Once the model is applied to the traffic, it automatically catches any request that deviates from the learned model and notifies the user. This approach is well suited to discovering per-request threats.

Getting started documentation

Time-Series Anomaly Detection

Time-series (TS) anomaly detection is similar to the previous mechanism, but instead of looking into each API request it monitors the traffic stream as a whole. The metrics of interest therefore relate to the entire stream: request rate, errors, latency, and throughput. Statistical algorithms then detect the following types of anomalies:

- Unexpected spikes/drops
- Unexpected traffic patterns

Such detection immediately notifies an administrator about any event that may indicate a service disruption, whether it is a traffic drop caused by a misconfiguration or a traffic spike due to a DoS attack.

Getting Started Documentation

User Behavior Analysis

Behavior analysis uses AI and ML to profile valid user behavior. It flags clearly suspicious behavior based on WAF events, login failures, L7 policy violations, and other anomalous activity derived from user behavior. Once it is enabled, an administrator can see the full list of suspicious users in the HTTP load balancer app firewall dashboard.

Getting started documentation

Web Application Firewall

As a platform with a mature security stack, XC WAAP matches and exceeds all industry-standard features. In addition, it significantly simplifies WAAP configuration: it only asks for the application language, CMS, and web server type to set up the best level of protection for an application.

Getting started documentation

Service Policies

A service policy is also known as an L7 policy. When applied to a load balancer, it lets you define granularly which traffic is allowed to reach a service and which is not, in terms of L7 properties such as hostname, method, path, or any other property available from a request. A service policy consists of rules that match a request by one or more properties and then apply one of the following actions: allow, deny, or check next.

Getting started documentation

Rate Limiting

Rate limiting protects an application or an API from excessive usage by limiting the rate of requests that a single user or client can execute in a period of time. There are several ways to identify a user:

- By IP address
- By cookie
- By HTTP header
- By query string parameter

The feature can limit either the rate or the total number of requests that an entity is allowed to execute.

Getting started documentation

DoS Protection

Like rate limiting, DoS protection helps to keep a service up and running under an excessive amount of traffic. Unlike rate limiting, however, XC WAAP's DoS protection operates at L3/4 and deals with much higher volumes of traffic.

Getting started documentation

Allow/Deny Lists

This feature is self-explanatory as well. It lets you set up lists of trusted and untrusted IP prefixes. Clients from trusted prefixes bypass all security checks. Clients from untrusted prefixes are blocked before any security check happens. Both lists support an expiration time setting: after the expiration time, the rule continues to exist but is no longer applied to the traffic. That is handy for setting up temporary rules, e.g. for testing or security purposes.

Getting started documentation

Conclusion

All security features above are available on top of the F5 Distributed Cloud Services HTTP load balancer. A combination of them provides reliable protection for an API and helps keep it up and running.

Cloud Template for App Protect WAF

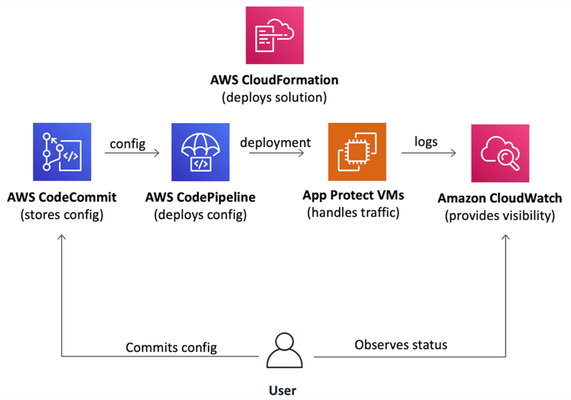

Introduction

Everybody needs a WAF. However, when it comes to the deployment stage, a team usually realizes that a production-grade deployment is going to be far more complex than a demo environment. In a cloud deployment, VPC networking, infrastructure security, VM images, auto-scaling, logging, visibility, automation, and many more topics require detailed analysis. It usually takes an average team at least a few weeks to design and implement a production-grade WAF in a cloud. That is one side of the problem.

Additionally, cloud deployment best practices are the same for everyone, so most well-made WAF deployments follow a similar path and end up looking alike. These two observations lead to an obvious conclusion: a proper WAF deployment can be templatized, so that a team doesn't spend time on deployment and maintenance but starts using a WAF from day zero. The following paragraphs introduce a project that implements a CloudFormation template to deploy a production-grade WAF in the AWS cloud in just a few clicks.

Project (GitHub)

At a high level, the project implements a CloudFormation template that automatically deploys a production-grade WAF to the AWS cloud. The template aims to follow cloud deployment best practices to set up a complete solution that is fully automated and requires little to no infrastructure management, which allows a team to focus on application security. The following picture represents the overall solution structure.

The solution defines three main components:

- Auto-scaling data plane based on official NGINX App Protect AWS AMI images.
- Git repository as the source of data plane and security configuration.
- Visibility dashboards displaying the WAF health and security data.

Together they form a complete and easy-to-use solution to protect applications, whether they run in AWS or in any other location.
Data Plane: The data plane auto-scales based on the amount of incoming traffic and uses official NGINX App Protect AWS AMIs to spin up new VM instances. That removes the operational headache and optimizes costs, since the WAF dynamically adjusts the amount of computing resources and charges the user on a pay-as-you-go basis.

Configuration Plane: Solution configuration follows GitOps principles. The template creates an AWS CodeCommit git repository as the source of forwarding and security configuration. An AWS CodeDeploy pipeline automatically delivers the configuration to all data plane VMs.

Visibility: Alongside the data plane and the configuration repository, the template sets up a set of visibility dashboards in AWS CloudWatch. Data plane VMs send logs and metrics to the CloudWatch service, which visualizes the incoming data as a set of charts and tables showing WAF health and security violations.

These three components form a complete WAF solution that is easy to deploy, doesn't impose any operational headache, and provides handy interfaces for WAF configuration and visibility right out of the box.

Demo

As mentioned above, one of the main advantages of this project is the ease of WAF deployment. It only requires downloading the AWS CloudFormation template file from the project repository and deploying it via either the AWS Console or the AWS CLI. The template requests a number of parameters; however, all of them are optional. As soon as stack creation is complete, the WAF is ready to use. The template outputs contain the WAF URL and a pointer to the configuration repository. By default, the WAF responds with a static page.

As a next step, I'll put this cloud WAF instance in front of a web application. As with any other NGINX instance, I'll configure it to forward traffic to the app and inspect all requests with App Protect WAF. As mentioned before, all configuration lives in a git repo that resides in the AWS CodeCommit service. I'm adjusting the NGINX configuration to forward traffic to the protected application.
Once the change is committed to the repo, a pipeline delivers it to all data plane VMs. As a result, all traffic is forwarded to the protected application (the screenshot below is not of a real company and is used for demo purposes only).

Like the NGINX configuration, the App Protect policy resides in the same repository, and changes to it are propagated to the running VMs in the same way.

Once the configuration is complete, a user can observe system health and security-related data via pre-configured AWS CloudWatch dashboards.

Outline

As you can see, using a template to deploy a cloud WAF significantly reduces the time spent on WAF deployment and maintenance. Handy interfaces for configuration and visibility turn this project into a boxed solution that lets a user easily operate a WAF and focus on application security.

Please comment if you would find it useful to have this kind of solution in the marketplaces of the major public clouds. It is a community project so far, and we need as much feedback as possible to steer it properly. Feel free to give it a try and leave feedback here or at the project's git repository.

P.S.: Take a look at another community project that contributes to the F5 WAF ecosystem: WAF Policy Editor

Declarative Advanced WAF policy lifecycle in a CI/CD pipeline

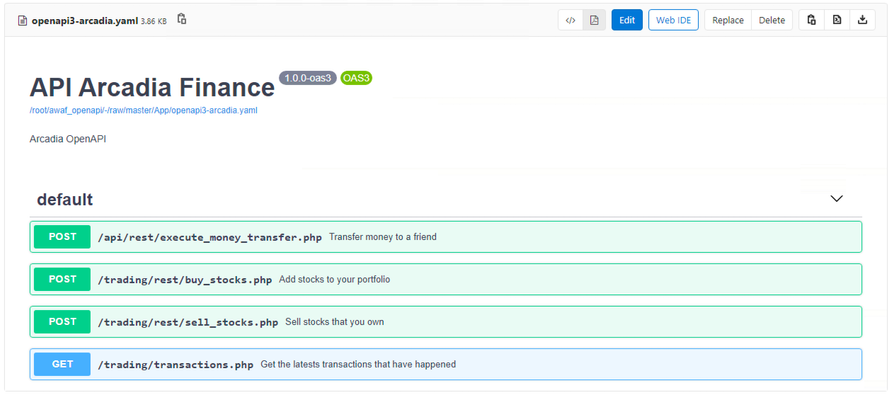

The purpose of this article is to show the configuration used to deploy a declarative Advanced WAF policy to a BIG-IP and automatically configure it to protect an API workload by consuming an OpenAPI file describing the application.

For this experiment, a GitLab CI/CD pipeline was used to deploy an API workload to Kubernetes, deploy a declarative Adv. WAF policy to a BIG-IP device, and tune it by incorporating learning suggestions exported from the BIG-IP. Lastly, the F5 WAF tester tool was used to determine and improve the defensive posture of the Adv. WAF policy.

Deploying the declarative Advanced WAF policy through a CI/CD pipeline

To deploy the Adv. WAF policy, the GitLab CI/CD pipeline calls an Ansible playbook that in turn deploys an AS3 application referencing the Adv. WAF policy from a separate JSON file. This allows the application definition and the WAF policy to be managed by two different groups, for example NetOps and SecOps, supporting separation of duties.

The following Ansible playbook was used:

    ---
    - hosts: bigip
      connection: local
      gather_facts: false
      vars:
        my_admin: "xxxx"
        my_password: "xxxx"
        bigip: "xxxx"
      tasks:
        - name: Deploy AS3 API AWAF policy
          uri:
            url: "https://{{ bigip }}/mgmt/shared/appsvcs/declare"
            method: POST
            headers:
              "Content-Type": "application/json"
              "Authorization": "Basic xxxxxxxxxx"
            body: "{{ lookup('file','as3_waf_openapi.json') }}"
            body_format: json
            validate_certs: no
            status_code: 200

The AS3 declaration 'as3_waf_openapi.json' was specified as follows:

    {
      "class": "AS3",
      "action": "deploy",
      "persist": true,
      "declaration": {
        "class": "ADC",
        "schemaVersion": "3.2.0",
        "id": "Prod_API_AS3",
        "API-Prod": {
          "class": "Tenant",
          "defaultRouteDomain": 0,
          "arcadia": {
            "class": "Application",
            "template": "generic",
            "VS_API": {
              "class": "Service_HTTPS",
              "remark": "Accepts HTTPS/TLS connections on port 443",
              "virtualAddresses": ["xxxxx"],
              "redirect80": false,
              "pool": "pool_NGINX_API",
              "policyWAF": { "use": "Arcadia_WAF_API_policy" },
              "securityLogProfiles": [{ "bigip": "/Common/Log all requests" }],
              "profileTCP": { "egress": "wan", "ingress": { "use": "TCP_Profile" } },
              "profileHTTP": { "use": "custom_http_profile" },
              "serverTLS": { "bigip": "/Common/arcadia_client_ssl" }
            },
            "Arcadia_WAF_API_policy": {
              "class": "WAF_Policy",
              "url": "http://xxxx/root/awaf_openapi/-/raw/master/WAF/ansible/bigip/policy-api.json",
              "ignoreChanges": true
            },
            "pool_NGINX_API": {
              "class": "Pool",
              "monitors": ["http"],
              "members": [{ "servicePort": 8080, "serverAddresses": ["xxxx"] }]
            },
            "custom_http_profile": { "class": "HTTP_Profile", "xForwardedFor": true },
            "TCP_Profile": { "class": "TCP_Profile", "idleTimeout": 60 }
          }
        }
      }
    }

The AS3 declaration provisions a separate Administrative Partition ('API-Prod') containing a Virtual Server ('VS_API'), an Adv. WAF policy ('Arcadia_WAF_API_policy') and a pool ('pool_NGINX_API').

The Adv. WAF policy being referenced ('policy-api.json') is stored in the same GitLab repository but can be downloaded from a separate location.

    {
      "policy": {
        "name": "policy-api-arcadia",
        "description": "Arcadia API",
        "template": { "name": "POLICY_TEMPLATE_API_SECURITY" },
        "enforcementMode": "transparent",
        "server-technologies": [
          { "serverTechnologyName": "MySQL" },
          { "serverTechnologyName": "Unix/Linux" },
          { "serverTechnologyName": "MongoDB" }
        ],
        "signature-settings": { "signatureStaging": false },
        "policy-builder": { "learnOnlyFromNonBotTraffic": false },
        "open-api-files": [
          { "link": "http://xxxx/root/awaf_openapi/-/raw/master/App/openapi3-arcadia.yaml" }
        ]
      },
      "modifications": []
    }

The declarative Adv. WAF policy in turn references the OpenAPI file ('openapi3-arcadia.yaml') that describes the application being protected.

Executing the Ansible playbook results in the AS3 application being deployed, along with the Adv. WAF policy, which is automatically configured according to the OpenAPI file.
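
The declaration above wires its objects together through `use` references (for example `policyWAF` pointing at `Arcadia_WAF_API_policy`), so a typo in one name only surfaces when the BIG-IP rejects the declaration. As a minimal sketch, a pipeline stage could check those references before posting. The helper name `unresolved_use_refs` is hypothetical, and the check assumes that relative `use` names resolve inside the same Application; it is an illustration, not part of AS3 or of the pipeline used in this article.

```python
def unresolved_use_refs(application):
    """Return relative `use` names that are not defined in the Application.

    Assumption: a relative name (no leading "/") must match an object
    declared directly under the same Application.
    """
    # Objects declared in the Application are the dict values that carry a "class".
    defined = {
        key for key, value in application.items()
        if isinstance(value, dict) and "class" in value
    }
    missing = []

    def walk(node):
        if isinstance(node, dict):
            ref = node.get("use")
            if isinstance(ref, str) and not ref.startswith("/") and ref not in defined:
                missing.append(ref)
            for value in node.values():
                walk(value)
        elif isinstance(node, list):
            for item in node:
                walk(item)

    walk(application)
    return missing


# Trimmed copy of the "arcadia" Application from the declaration above.
arcadia = {
    "class": "Application",
    "VS_API": {
        "class": "Service_HTTPS",
        "pool": "pool_NGINX_API",
        "policyWAF": {"use": "Arcadia_WAF_API_policy"},
        "profileTCP": {"egress": "wan", "ingress": {"use": "TCP_Profile"}},
        "profileHTTP": {"use": "custom_http_profile"},
    },
    "Arcadia_WAF_API_policy": {"class": "WAF_Policy"},
    "pool_NGINX_API": {"class": "Pool"},
    "custom_http_profile": {"class": "HTTP_Profile"},
    "TCP_Profile": {"class": "TCP_Profile"},
}

print(unresolved_use_refs(arcadia))
```

An empty list means every relative reference resolves; removing `TCP_Profile` from the Application would make the function report that name.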
Handling learning suggestions in a CI/CD pipeline

The next step in the CI/CD pipeline used for this experiment was to send legitimate traffic through the API and collect the learning suggestions generated by the Adv. WAF policy. These suggestions provide a simple way to customize the WAF policy further for the specific application being protected.

The following Ansible playbook was used to retrieve the learning suggestions:

    ---
    - hosts: bigip
      connection: local
      gather_facts: true
      vars:
        my_admin: "xxxx"
        my_password: "xxxx"
        bigip: "xxxxx"
      tasks:
        - name: Get all Policy_key/IDs for WAF policies
          uri:
            url: 'https://{{ bigip }}/mgmt/tm/asm/policies?$select=name,id'
            method: GET
            headers:
              "Authorization": "Basic xxxxxxxxxxx"
            validate_certs: no
            status_code: 200
            return_content: yes
          register: waf_policies

        - name: Extract Policy_key/ID of Arcadia_WAF_API_policy
          set_fact:
            Arcadia_WAF_API_policy_ID: "{{ item.id }}"
          loop: "{{ (waf_policies.content|from_json)['items'] }}"
          when: item.name == "Arcadia_WAF_API_policy"

        - name: Export learning suggestions
          uri:
            url: "https://{{ bigip }}/mgmt/tm/asm/tasks/export-suggestions"
            method: POST
            headers:
              "Content-Type": "application/json"
              "Authorization": "Basic xxxxxxxxxxx"
            body: "{ \"inline\": \"true\", \"policyReference\": { \"link\": \"https://{{ bigip }}/mgmt/tm/asm/policies/{{ Arcadia_WAF_API_policy_ID }}/\" } }"
            body_format: json
            validate_certs: no
            status_code:
              - 200
              - 201
              - 202

        - name: Get learning suggestions
          uri:
            url: "https://{{ bigip }}/mgmt/tm/asm/tasks/export-suggestions"
            method: GET
            headers:
              "Authorization": "Basic xxxxxxxxx"
            validate_certs: no
            status_code: 200
          register: result

        - name: Print learning suggestions
          debug: var=result

A sample of the learning suggestions output is shown below (truncated):

    "json": {
      "items": [
        {
          "endTime": "xxxxxxxxxxxxx",
          "id": "ZQDaRVecGeqHwAW1LDzZTQ",
          "inline": true,
          "kind": "tm:asm:tasks:export-suggestions:export-suggestions-taskstate",
          "lastUpdateMicros": 1599953296000000.0,
          "result": {
            "suggestions": [
              {
                "action": "add-or-update",
                "description": "Enable Evasion Technique",
                "entity": { "description": "Directory traversals" },
                "entityChanges": { "enabled": true },
                "entityType": "evasion"
              },
              {
                "action": "add-or-update",
                "description": "Enable HTTP Check",
                "entity": { "description": "Check maximum number of parameters" },
                "entityChanges": { "enabled": true },
                "entityType": "http-protocol"
              },
              {
                "action": "add-or-update",
                "description": "Enable HTTP Check",
                "entity": { "description": "No Host header in HTTP/1.1 request" },
                "entityChanges": { "enabled": true },
                "entityType": "http-protocol"
              },
              {
                "action": "add-or-update",
                "description": "Enable enforcement of policy violation",
                "entity": { "name": "VIOL_REQUEST_MAX_LENGTH" },
                "entityChanges": { "alarm": true, "block": true },
                "entityType": "violation"
              }

Incorporating the learning suggestions into the Adv. WAF policy can be done by simply copying and pasting the self-contained learning suggestion blocks into the "modifications" list of the Adv. WAF policy:

    {
      "policy": {
        "name": "policy-api-arcadia",
        "description": "Arcadia API",
        "template": { "name": "POLICY_TEMPLATE_API_SECURITY" },
        "enforcementMode": "transparent",
        "server-technologies": [
          { "serverTechnologyName": "MySQL" },
          { "serverTechnologyName": "Unix/Linux" },
          { "serverTechnologyName": "MongoDB" }
        ],
        "signature-settings": { "signatureStaging": false },
        "policy-builder": { "learnOnlyFromNonBotTraffic": false },
        "open-api-files": [
          { "link": "http://xxxxxx/root/awaf_openapi/-/raw/master/App/openapi3-arcadia.yaml" }
        ]
      },
      "modifications": [
        {
          "action": "add-or-update",
          "description": "Enable Evasion Technique",
          "entity": { "description": "Directory traversals" },
          "entityChanges": { "enabled": true },
          "entityType": "evasion"
        }
      ]
    }

Enhancing Advanced WAF policy posture by using the F5 WAF tester

The F5 WAF tester is a tool that generates known attacks and checks the response of the WAF policy.
For example, running the F5 WAF tester against a policy that has a "transparent" enforcement mode will cause the tests to fail, as the attacks will not be blocked. The F5 WAF tester can suggest possible enhancements of the policy, in this case a change of the enforcement mode.

An abbreviated sample output of the F5 WAF tester:

    ................................................................
        "100000023": {
          "CVE": "",
          "attack_type": "Server Side Request Forgery",
          "name": "SSRF attempt (AWS Metadata Server)",
          "results": {
            "parameter": {
              "expected_result": { "type": "signature", "value": "200018040" },
              "pass": false,
              "reason": "ASM Policy is not in blocking mode",
              "support_id": ""
            }
          },
          "system": "All systems"
        },
        "100000024": {
          "CVE": "",
          "attack_type": "Server Side Request Forgery",
          "name": "SSRF attempt - Local network IP range 10.x.x.x",
          "results": {
            "request": {
              "expected_result": { "type": "signature", "value": "200020201" },
              "pass": false,
              "reason": "ASM Policy is not in blocking mode",
              "support_id": ""
            }
          },
          "system": "All systems"
        }
      },
      "summary": { "fail": 48, "pass": 0 }

Changing the enforcement mode from "transparent" to "blocking" can easily be done by editing the same Adv. WAF policy file:

    {
      "policy": {
        "name": "policy-api-arcadia",
        "description": "Arcadia API",
        "template": { "name": "POLICY_TEMPLATE_API_SECURITY" },
        "enforcementMode": "blocking",
        "server-technologies": [
          { "serverTechnologyName": "MySQL" },
          { "serverTechnologyName": "Unix/Linux" },
          { "serverTechnologyName": "MongoDB" }
        ],
        "signature-settings": { "signatureStaging": false },
        "policy-builder": { "learnOnlyFromNonBotTraffic": false },
        "open-api-files": [
          { "link": "http://xxxxx/root/awaf_openapi/-/raw/master/App/openapi3-arcadia.yaml" }
        ]
      },
      "modifications": [
        {
          "action": "add-or-update",
          "description": "Enable Evasion Technique",
          "entity": { "description": "Directory traversals" },
          "entityChanges": { "enabled": true },
          "entityType": "evasion"
        }
      ]
    }

A run is successful when all the attacks are blocked:

    .........................................
        "100000023": {
          "CVE": "",
          "attack_type": "Server Side Request Forgery",
          "name": "SSRF attempt (AWS Metadata Server)",
          "results": {
            "parameter": {
              "expected_result": { "type": "signature", "value": "200018040" },
              "pass": true,
              "reason": "",
              "support_id": "17540898289451273964"
            }
          },
          "system": "All systems"
        },
        "100000024": {
          "CVE": "",
          "attack_type": "Server Side Request Forgery",
          "name": "SSRF attempt - Local network IP range 10.x.x.x",
          "results": {
            "request": {
              "expected_result": { "type": "signature", "value": "200020201" },
              "pass": true,
              "reason": "",
              "support_id": "17540898289451274344"
            }
          },
          "system": "All systems"
        }
      },
      "summary": { "fail": 0, "pass": 48 }

Conclusion

By adding the Advanced WAF policy to a CI/CD pipeline, the WAF policy can be integrated into the lifecycle of the application it is protecting, allowing for continuous testing and improvement of the security posture before it is deployed to production.
The flexible model of AS3 and declarative Advanced WAF allows the separation of roles and responsibilities between NetOps and SecOps, while providing an easy way to tune the policy to the specifics of the application being protected.

Links

- UDF lab environment link
- Short instructional video link

A basic OWASP 2017 Top 10-compliant declarative WAF policy

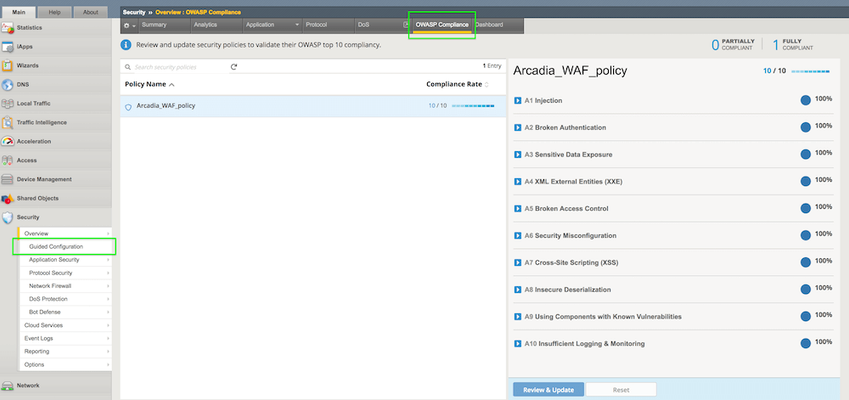

As the OWASP Application Security Risks Top 10 is the most recognized report outlining the top security concerns for web applications, it is important to see how to configure F5's declarative Advanced WAF policy to protect against those threats. This article describes an example of a basic declarative WAF policy that is OWASP 2017 Top 10-compliant. Note that some policy entities are customized for the application being protected, in this case a demo application named Arcadia Finance, so they will need to be adapted for each particular application.

The policy was configured following the pattern described in the K52596282: Securing against the OWASP Top 10 guide, and its conformance with the OWASP Top 10 was verified by consulting the OWASP Compliance Dashboard bundled with F5's Advanced WAF.

Introduction

As described in K52596282: Securing against the OWASP Top 10, the current OWASP Top 10 vulnerabilities are:

- Injection attacks (A1)
- Broken authentication attacks (A2)
- Sensitive data exposure attacks (A3)
- XML external entity attacks (A4)
- Broken access control attacks (A5)
- Security misconfiguration attacks (A6)
- Cross-site scripting attacks (A7)
- Insecure deserialization attacks (A8)
- Components with known vulnerabilities attacks (A9)
- Insufficient logging and monitoring attacks (A10)

Most of these vulnerabilities can be mitigated with a properly configured WAF policy. For the few that depend on security measures implemented in the application itself, there are recommended application security guidelines that help prevent the exploitation of OWASP Top 10 vulnerabilities.

Declarative Advanced WAF policies are security policies defined in a declarative JSON format, which facilitates integration with source control systems and CI/CD pipelines. The documentation of the declarative WAF policy (v16.0) can be found here while its schema can be consulted here.
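
Because a declarative policy is plain JSON kept in source control, a pipeline stage can run a few cheap checks on the file before it ever reaches a BIG-IP. The sketch below is only an illustration of that idea: `lint_policy` is a hypothetical helper, the checks are assumptions based on fields that appear in the policies in this article (`policy.name`, `policy.template.name`, `enforcementMode`), and it is not a substitute for validating against the official schema.

```python
import json

# Assumption: only the two enforcement modes used in this article are expected.
ALLOWED_MODES = {"transparent", "blocking"}


def lint_policy(text):
    """Return a list of human-readable problems found in a policy file."""
    try:
        doc = json.loads(text)
    except json.JSONDecodeError as exc:
        # Typical cause: an unbalanced brace after a manual edit.
        return [f"not valid JSON: {exc.msg} (line {exc.lineno})"]

    policy = doc.get("policy") if isinstance(doc, dict) else None
    if not isinstance(policy, dict):
        return ['missing top-level "policy" object']

    problems = []
    if not policy.get("name"):
        problems.append('"policy.name" is empty or missing')
    template = policy.get("template")
    if not (isinstance(template, dict) and template.get("name")):
        problems.append('"policy.template.name" is empty or missing')
    mode = policy.get("enforcementMode")
    if mode is not None and mode not in ALLOWED_MODES:
        problems.append(f"unexpected enforcementMode: {mode!r}")
    return problems


good = '{"policy": {"name": "demo", "template": {"name": "POLICY_TEMPLATE_RAPID_DEPLOYMENT"}}}'
truncated = good[:-1]  # simulate a missing closing brace

print(lint_policy(good))
print(lint_policy(truncated))
```

A CI job could fail the build whenever the returned list is non-empty, which catches malformed files earlier than a rejected deployment would.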
One of the most basic declarative Advanced WAF policies that can be applied is as follows:

    {
      "policy": {
        "name": "Complete_OWASP_Top_Ten",
        "description": "A basic, OWASP Top 10 protection items v1.0",
        "template": { "name": "POLICY_TEMPLATE_RAPID_DEPLOYMENT" }
      }
    }

As you can see from the OWASP Compliance Dashboard screenshot, this policy is far from being OWASP-compliant, but we will use it as a starting point to build a fully compliant configuration. This article goes through each vulnerability class and shows an example of a declarative WAF policy configuration that mitigates it.

Injection attacks (A1)

As K51836239: Securing against the OWASP Top 10 | Chapter 2: Injection attacks (A1) states:

"An injection attack occurs when:

- An attacker injects a command, query, or code into a vulnerable element of the application.
- The web application server executes the injection.
- The attacker gains unauthorized access, causes a denial of service, steals sensitive data, or causes a similarly nefarious outcome."

Securing against injection attacks entails configuring attack signatures and evasion techniques as well as restricting meta characters and parameters.
The following configuration, added to the above basic declarative policy, will meet those requirements:

    "signature-settings": {
      "signatureStaging": false,
      "minimumAccuracyForAutoAddedSignatures": "high"
    },
    "blocking-settings": {
      "evasions": [
        { "description": "Bad unescape", "enabled": true, "learn": true },
        { "description": "Apache whitespace", "enabled": true, "learn": true },
        { "description": "Bare byte decoding", "enabled": true, "learn": true },
        { "description": "IIS Unicode codepoints", "enabled": true, "learn": true },
        { "description": "IIS backslashes", "enabled": true, "learn": true },
        { "description": "%u decoding", "enabled": true, "learn": true },
        { "description": "Multiple decoding", "enabled": true, "learn": true, "maxDecodingPasses": 3 },
        { "description": "Directory traversals", "enabled": true, "learn": true }
      ]
    }

Broken authentication attacks (A2)

According to K35371357: Securing against the OWASP Top 10 | Chapter 3: Broken authentication (A2):

"The Open Web Application Security Project (OWASP) defines broken authentication as incorrect implementation of application functions related to authentication and session management. Using broken authentication, attackers can compromise passwords, keys, or session tokens, or exploit other implementation flaws to assume other users' identities, either temporarily or permanently."

Mitigations include session hijacking protection and tracking user sessions, brute force protection, credential stuffing protection and CSRF protection.
Here is an example of a configuration that enables those features:

    "blocking-settings": {
      "violations": [
        { "alarm": true, "block": true, "description": "ASM Cookie Hijacking", "learn": false, "name": "VIOL_ASM_COOKIE_HIJACKING" },
        { "alarm": true, "block": true, "description": "Access from disallowed User/Session/IP/Device ID", "name": "VIOL_SESSION_AWARENESS" },
        { "alarm": true, "block": true, "description": "Modified ASM cookie", "learn": true, "name": "VIOL_ASM_COOKIE_MODIFIED" },
        { "name": "VIOL_LOGIN_URL_BYPASSED", "alarm": true, "block": true, "learn": false }
      ],
      "evasions": [ <...> ]
    },
    "session-tracking": {
      "sessionTrackingConfiguration": { "enableTrackingSessionHijackingByDeviceId": true }
    },
    "urls": [
      { "name": "/trading/auth.php", "method": "POST", "protocol": "https", "type": "explicit" }
    ],
    "login-pages": [
      {
        "accessValidation": { "headerContains": "302 Found" },
        "authenticationType": "form",
        "passwordParameterName": "password",
        "usernameParameterName": "username",
        "url": { "name": "/trading/auth.php", "method": "POST", "protocol": "https", "type": "explicit" }
      }
    ],
    "login-enforcement": {
      "authenticatedUrls": [ "/trading/index.php" ]
    },
    "brute-force-attack-preventions": [
      {
        "bruteForceProtectionForAllLoginPages": true,
        "leakedCredentialsCriteria": { "action": "alarm-and-blocking-page", "enabled": true }
      }
    ],
    "csrf-protection": { "enabled": true },
    "csrf-urls": [
      { "enforcementAction": "verify-csrf-token", "method": "GET", "url": "/trading/index.php" }
    ]

Note: this is a configuration example customized for the Arcadia Finance demo application. The URLs specified in your configuration might be different.

Sensitive data exposure (A3)

According to K05354050: Securing against the OWASP Top 10 | Chapter 4: Sensitive data exposure (A3):

"A sensitive data exposure flaw can occur when you:

- Store or transit data in clear text (most common).
- Protect data with an old or weak encryption.
- Do not properly filter or mask data in transit."
BIG-IP Advanced WAF can protect sensitive data from being transmitted using Data Guard response scrubbing and from being logged with request log masking. Note: masking sensitive data in the request log is enabled by default. You can enable Data Guard by adding the following configuration to the policy: "data-guard": { "enabled": true } XML external entity attacks (A4) As per K50262217: Securing against the OWASP Top 10 | Chapter 5: XML external entity attacks (A4): "According to the Open Web Application Security Project (OWASP), an XXE attack is an attack against an application that parses XML input. The attack occurs when a weakly configured XML parser processes XML input containing a reference to an external entity. XXE attacks exploit Document Type Definitions (DTDs), which are considered obsolete; however, they are still enabled in many XML parsers." Securing against XXE can be done through disallowing DTDs and enabling XXE attack signatures. The following configuration achieves that: "blocking-settings": { "violations": [ <...> { "alarm": true, "block": true, "description": "XML data does not comply with format settings", "learn": true, "name": "VIOL_XML_FORMAT" } ], "evasions": [ <...> ] }, "xml-profiles": [ { "name": "Default", "defenseAttributes": { "allowDTDs": false, "allowExternalReferences": false } } ] Note: XXE attack signatures are enabled by default. Broken access control (A5) According to K36157617: Securing against the OWASP Top 10 | Chapter 6: Broken access control (A5): "Access control, also called authorization, allows or denies access to your application's features and resources. Misuse of access control enables: Unauthorized access to sensitive information. Inappropriate creation or deletion of resources. User impersonation. Privilege escalation." Preventing attacks exploiting broken access control can be done by login enforcement, enabling path traversal or authentication/authorization attack signatures and disallowing file types and URLs. 
For example, the following configuration will enable the disallowed file type and URL violations and add a disallowed URL: "blocking-settings": { <...> { "name": "VIOL_FILETYPE", "alarm": true, "block": true, "learn": true }, { "name": "VIOL_URL", "alarm": true, "block": true, "learn": true } ], "evasions": [ <...> ] }, "urls": [ <...> { "name": "/internal/test.php", "method": "GET", "protocol": "https", "type": "explicit", "isAllowed": false } ] Note: the policy already has a list of disallowed file types configured by default: Security misconfiguration attacks (A6) As per K10622005: Securing against the OWASP Top 10 | Chapter 7: Security misconfiguration attacks (A6): "Misconfiguration vulnerabilities are configuration weaknesses that may exist in software components or subsystems. For example, web server software may ship with default user accounts that an attacker can use to access the system, or the software may contain sample files, such as configuration files, and scripts that an attacker can exploit. In addition, software may have unneeded services enabled, such as remote administration functionality." Since these vulnerabilities depend on the way the application has been configured, F5 has a number of recommendations for the system administrators describing some techniques used to mitigate this class of attacks - please see the referenced article. You can then check the relevant items under the OWASP Compliance Dashboard: Click "Review & Update" to apply the changes. Cross-site scripting (A7) According to K22729008: Securing against the OWASP Top 10 | Chapter 8: Cross-site scripting (A7): "Cross-site scripting (XSS or CSS) occurs when a web application receives untrusted data and then sends that data, without validation, to an end user's web browser. 
This transaction enables an attacker to send executable code to the victim’s browser and, ultimately, manipulate the end user's session by doing one of the following: Session hijacking Defacing the website Redirecting the user to a site with malicious intent (most common)" Securing against XSS attacks can be done by enabling the relevant attack signatures and disallowing meta-characters in parameters - the following demonstrates this configuration: "blocking-settings": { "violations": [ <...> { "name": "VIOL_URL_METACHAR", "alarm": true, "block": true, "learn": true }, { "name": "VIOL_PARAMETER_VALUE_METACHAR", "alarm": true, "block": true, "learn": true }, { "name": "VIOL_PARAMETER_NAME_METACHAR", "alarm": true, "block": true, "learn": true } ] Insecure deserialization (A8) As per K01034237: Securing against the OWASP Top 10 | Chapter 9: Insecure deserialization (A8): "Serialization occurs when an application converts data structures and objects into a different format, such as binary or structured text like XML and JSON, so that it is suitable for other purposes, including the following: Data storage Messaging Remote procedure call (RPC)/inter-process communication (IPC) Caching HTTP cookies HTML form parameters API authentication tokens Deserialization is when an application reverts the serialized output into its original form. To exploit insecure deserialization, attackers typically tamper with the data in data structures or objects that modify an object's content or change an application's logic." Protecting against deserialization attacks can be done by performing HTTP header, XML or JSON validation and by enabling the insecure deserialization attack signatures - note that these signatures are enabled by default. 
An example of JSON validation configuration can be seen below: "urls": [ <...> { "name": "/trading/rest/portfolio.php", "method": "GET", "protocol": "https", "type": "explicit", "urlContentProfiles": [ { "headerName": "Content-Type", "headerValue": "text/html", "type": "json", "contentProfile": { "name": "portfolio" } }, { "headerName": "*", "headerValue": "*", "type": "do-nothing" } ] } ], "json-profiles": [ { "name": "portfolio" } ] Using components with known vulnerabilities (A9) According to K90977804: Securing against the OWASP Top 10 | Chapter 10: Using components with known vulnerabilities (A9): "Component-based vulnerabilities occur when a web application component is unsupported, out of date, or vulnerable to a known exploit. The BIG-IP ASM system provides security features, such as attack signatures, that protect your web application against component-based vulnerability attacks." As a way to customize the WAF policy for the server technologies relevant to your back-end, consider adding them to the policy by enabling, for example, server technologies detection: "policy-builder-server-technologies": { "enableServerTechnologiesDetection": true } For a list of recommended actions to mitigate this vulnerability class, please consult the referred article. Once the mitigation measures are in place, check the relevant entries in the OWASP Conformance Dashboard. Insufficient logging and monitoring (A10) K97756490: Securing against the OWASP Top 10 | Chapter 11: Insufficient logging and monitoring (A10) describes the recommended configuration to enable comprehensive logging on BIG-IP Advanced WAF. This configuration is not part of the declarative WAF policy so it will not be described here - please follow the instructions in the referred article. Once logging has been configured, check the relevant items in the OWASP Compliance Dashboard. 
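The policy fragments shown in the preceding sections are plain JSON, so they can be generated rather than hand-edited when the policy lives in a CI/CD pipeline. The following is a minimal sketch, assuming only the key names that appear in the listings above; the helper names (`violation`, `disallowed_url`, `build_fragment`) are hypothetical and not part of any F5 tooling, and the result is a fragment to merge into a full policy, not a complete policy.

```python
import json


def violation(name, alarm=True, block=True, learn=True):
    """Build one entry of the "violations" list under "blocking-settings"."""
    return {"name": name, "alarm": alarm, "block": block, "learn": learn}


def disallowed_url(name, method="GET", protocol="https"):
    """Build an explicit URL entry that the policy should reject."""
    return {
        "name": name,
        "method": method,
        "protocol": protocol,
        "type": "explicit",
        "isAllowed": False,
    }


def build_fragment(violation_names, disallowed_paths, detect_server_tech=True):
    """Assemble policy-level keys seen in the article into one dictionary.

    The returned dictionary is meant to be merged into an existing
    declarative policy; it is not a complete policy on its own.
    """
    return {
        "blocking-settings": {
            "violations": [violation(n) for n in violation_names],
        },
        "urls": [disallowed_url(p) for p in disallowed_paths],
        "policy-builder-server-technologies": {
            "enableServerTechnologiesDetection": detect_server_tech,
        },
    }


fragment = build_fragment(
    ["VIOL_URL_METACHAR", "VIOL_PARAMETER_VALUE_METACHAR", "VIOL_PARAMETER_NAME_METACHAR"],
    ["/internal/test.php"],
)
print(json.dumps(fragment, indent=2))
```

Generating the entries from a list keeps the three flags (`alarm`, `block`, `learn`) consistent across violations, which is easy to get wrong when copying blocks by hand.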
After updating the policy, the OWASP Compliance Dashboard should show full compliance.

Other recommended configurations

If client connections pass through a trusted reverse proxy that replaces the source IP address and places the client's IP address in the X-Forwarded-For HTTP header, it is recommended to enable the "Trust X-Forwarded-For" option in the WAF policy:

    "general": { "trustXff": true }

The enforcement mode can also be controlled through the policy file. The recommendation is to set it to "transparent" while running the CI/CD pipeline in Dev or QA environments (or, if the policy is built outside a CI/CD pipeline, in a similar Pre-Prod environment) and to configure it as "blocking" when running in a Production environment:

    "enforcementMode": "transparent"

Conclusion

This article has shown a very basic OWASP Top 10-compliant declarative WAF policy. Although this WAF policy is fully compliant with the OWASP Top 10 recommendations, it contains elements that need to be customized for each individual application. It should only be treated as a starting point for a production-ready WAF policy, which would most likely need additional elements.

The full configuration of the declarative policy used in this example can be found on CodeShare: Example OWASP Top 10-compliant declarative WAF policy

Securing GraphQL with Advanced WAF declarative policies

While REST has become the industry standard for designing Web APIs, GraphQL is rising in popularity as a more flexible and efficient alternative. Problem statement Similarly to REST, GraphQL is usually served over HTTP and is prone to the typical Web APIs security vulnerabilities, such as injection attacks, Denial of Service (DoS) attacks and abuse of flawed authorization. However, the mitigation strategies required to prevent a security breach of the GraphQL server must be specifically tailored to GraphQL. Unlike REST, where Web resources are identified by multiple URLs, GraphQL server operates on a single URL. Therefore, Web Application Firewalls (WAFs) configured to filter traffic based on URLs and query strings would not effectively protect GraphQL app. Instead, WAF policies for GraphQL must analyze and operate on the query level. In addition, GraphQL allows batching multiple queries in a single network call, which makes possible a batching attack specific to GraphQL. Proposed solution BIG-IP Advanced WAF has a number of features specifically designed for securing GraphQL APIs: A GraphQL Security Policy Template that enables quick deployment of GraphQL WAF policies A GraphQL Content Profile that groups all the relevant configurations relevant to GraphQL Support for the most common GraphQL use cases, where JSON payload is sent over POST (body) or GET (URL parameter) requests Native parsing of GraphQL enables the application of attack signature against each JSON field, with very low rate of false positives Protection against complexity-based Denial of Service attacks by allowing the configuration of a maximum depth of queries Support for enforcing the best practices of deployment GraphQL APIs with disabled introspection, which is the primary way for attackers to understand the API specification and tailor their attacks accordingly An option to control the number of allowed batched requests GraphQL-specific security violations allowing the fine tuning of the WAF 
policy Example configuration GraphQL configuration of the WAF policy can be done through the GUI or programatically, through the declarative policy model, allowing easy integration in automated environments that leverage, for example, CI/CD tools. As an example, below is a basic GraphQL declarative policy, demonstrating some of the features listed above: { "policy" : { "applicationLanguage" : "utf-8", "caseInsensitive" : false, "description" : "WAF Policy with GraphQL Profile", "enablePassiveMode" : false, "enforcementMode" : "blocking", "signature-settings": { "signatureStaging": false }, "filetypes" : [ { "allowed" : true, "checkPostDataLength" : true, "checkQueryStringLength" : true, "checkRequestLength" : true, "checkUrlLength" : true, "name" : "php", "performStaging" : true, "postDataLength" : 1000, "queryStringLength" : 1000, "requestLength" : 5000, "responseCheck" : false, "type" : "explicit", "urlLength" : 100 } ], "fullPath" : "/Common/waf_policy_withgraphql", "graphql-profiles" : [ { "attackSignaturesCheck" : true, "defenseAttributes" : { "allowIntrospectionQueries" : true, "maximumBatchedQueries" : 10, "maximumStructureDepth" : 10, "maximumTotalLength" : 100000, "maximumValueLength" : 10000, "tolerateParsingWarnings" : true }, "description" : "", "metacharElementCheck" : false, "name" : "graphql_profile" } ], "name" : "waf_policy_withgraphql", "protocolIndependent" : false, "softwareVersion" : "16.1.0", "template" : { "name" : "POLICY_TEMPLATE_GRAPHQL" }, "type" : "security", "urls" : [ { "attackSignaturesCheck" : true, "clickjackingProtection" : false, "description" : "", "disallowFileUploadOfExecutables" : false, "html5CrossOriginRequestsEnforcement" : { "enforcementMode" : "disabled" }, "isAllowed" : true, "mandatoryBody" : false, "method" : "*", "methodsOverrideOnUrlCheck" : false, "name" : "/graphql", "performStaging" : false, "protocol" : "https", "type" : "explicit", "urlContentProfiles" : [ { "contentProfile" : { "name" : "graphql_profile" }, 
"headerName" : "*", "headerOrder" : "default", "headerValue" : "*", "type" : "graphql" } ] } ] } }

Conclusion

As the adoption of GraphQL increases, so does the likelihood that security threats tailored to this (comparatively) new Web API technology will emerge. The features present in Advanced WAF enable a solid response against GraphQL-specific attacks, while allowing for integration in the most advanced CI/CD-driven environments.

Other resources

UDF lab: AWAF advanced security for GraphQL in CI/CD pipeline

Many thanks to Serge Levin for his contribution to this article.

F5 powered API security and management

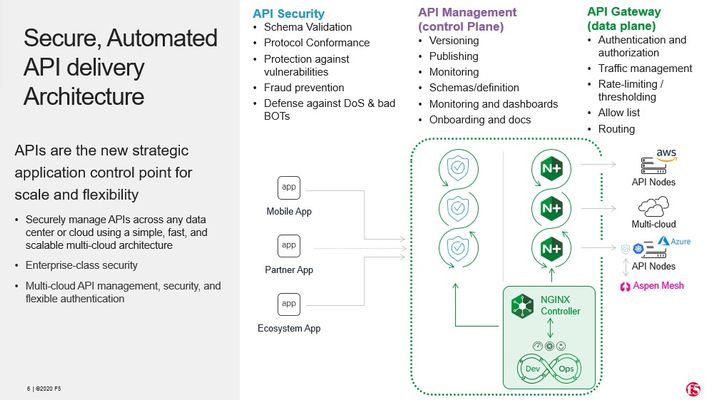

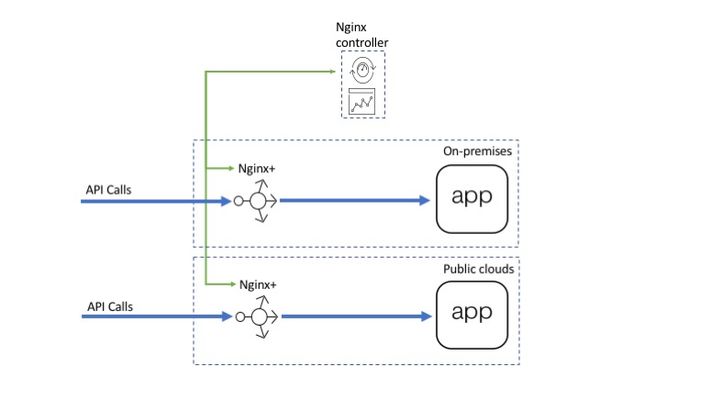

Editor's Note: The F5 Beacon capabilities referenced in this article, hosted on F5 Cloud Services, are planned to migrate to a new SaaS platform. Check out the latest here.

Introduction

Application Programming Interfaces (APIs) enable application delivery systems to communicate with each other. According to a survey conducted by IDC, security is the main impediment to delivery of API-based services. Research conducted by F5 Labs shows that APIs are highly susceptible to cyber-attacks. Access or injection attacks against the authentication surface of the API are launched first, followed by exploitation of excessive permissions to steal or alter data that is reachable via the API. Agile development practices, highly modular application architectures, and business pressures for rapid development contribute to security holes in both APIs exposed to the public and those used internally.

API delivery programs must include the following elements: (1) automated publishing of APIs using Swagger or OpenAPI files, (2) authentication and authorization of API calls, (3) routing and rate limiting of API calls, (4) security of API calls, and (5) metric collection and visualization of API calls. The reference architecture shown below offers a streamlined way of achieving each element of an API delivery program.

The F5 solution works with modern automation and orchestration tools, equipping developers with the ability to implement and verify security at strategic points within the API development pipeline. Security gets inserted into the CI/CD pipeline where it can be tested and attached to the runtime build, helping to reduce the attack surface of vulnerable APIs.

Common Patterns

Enterprises need to maintain and evolve their traditional APIs, while simultaneously developing new ones using modern architectures. These can be delivered with on-premises servers, from the cloud, or hybrid environments.
APIs are difficult to categorize as they are used in delivering a variety of user experiences, each one potentially requiring a different set of security and compliance controls. In all of the patterns outlined below, NGINX Controller is used for API Management functions such as publishing the APIs and setting up authentication and authorization, and NGINX API Gateway forms the data path. Security controls are addressed based on the security requirements of the data and API delivery platform.

1. APIs for highly regulated business

Business APIs that involve the exchange of sensitive or regulated information may require additional security controls to be in compliance with local regulations or industry mandates. Some examples are apps that deliver protected health information or sensitive financial information. Deep payload inspection at scale and custom WAF rules become an important mechanism for protecting this type of API. F5 Advanced WAF is recommended for providing security in this scenario.

2. Multi-cloud distributed API

Mobile app users who are dispersed around the world need to get a response from the API backend with low latency. This requires that the API endpoints be delivered from multiple geographies to optimize response time. F5 DNS Load Balancer Cloud Service (global server load balancing) is used to connect API clients to the endpoints closest to them. In this case, F5 Cloud Services Essential App Protect is recommended to offer baseline security, and NGINX App Protect, deployed closer to the API workload, should be used for granular security controls. Best practices for this pattern are described here.

3. API workload in Kubernetes

F5 service mesh technology helps API delivery teams deal with the challenges of visibility and security when API endpoints are deployed in a Kubernetes environment. NGINX Ingress Controller, running NGINX App Protect, offers seamless North-South connectivity for API calls.
F5 Aspen Mesh is used to provide East-West visibility and mTLS-based security for workloads. The Kubernetes cluster can be on-premises or deployed in any of the major cloud provider infrastructures including Google’s GKE, Amazon’s EKS/Fargate, and Microsoft’s AKS. An example for implementing this pattern with NGINX per pod proxy is described here, and more examples are forthcoming in the API Security series.

4. API as Serverless Functions

F5 Cloud Services Essential App Protect, offering SaaS-based security, or NGINX App Protect deployed in AWS Fargate can be used to inject protection in front of serverless API endpoints.

Summary

F5 solutions can be leveraged regardless of the architecture used to deliver APIs or the infrastructure used to host them. In all patterns described above, metrics and logs are sent to one or more of the following: (1) F5 Beacon, (2) the SIEM of choice, (3) an ELK stack. Best practices for customizing API-related views via any of these visibility solutions will be published in the following DevCentral series.

DevOps can automate F5 products for integration into the API CI/CD pipeline. As a result, security is no longer a roadblock to delivering APIs at the speed of business. F5 solutions are future-proof, enabling development teams to confidently pivot from one architecture to another.

To complement and extend the security of the above solutions, organizations can leverage the power of F5 Silverline Managed Services to protect their infrastructure against volumetric, DNS, and higher-level denial of service attacks. The Shape bot protection solutions can also be coupled to detect and thwart bots, including securing mobile access with its mobile SDK.

Securing APIs with BigIP

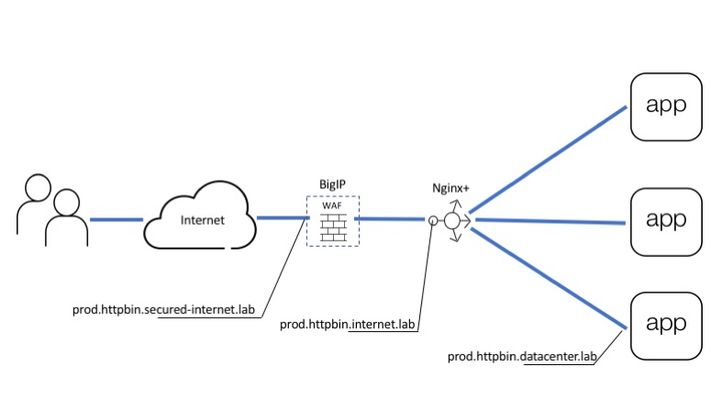

Introduction

API servers respond to requests using the HTTP protocol, much like Web Servers. Therefore, API servers are susceptible to HTTP attacks in ways similar to Web Servers. Previous articles covered how to publish an API using the NGINX platform as an API management gateway. These APIs are still exposed to web attacks, and defensive mechanisms are needed to defend the API against web attacks, denial of service, and bots. The diagram below shows all the layers needed to deliver and defend APIs. BIG-IP provides the protection and NGINX Plus provides API management.

Picture 1.

This article covers:

- Advanced Web Application Firewall (AWAF) to protect against HTTP vulnerabilities
- Unified Bot Defense to protect against bots
- Behavioral Anomaly DoS Defense to prevent DoS attacks

As shown in Picture 1 above, BIG-IP goes in front of the API management gateway as an additional security gateway. The beauty of this approach is that BIG-IP can be initially deployed on the side while the API is being delivered to users through the NGINX Plus gateway directly. Once BIG-IP is configured to forward good requests to NGINX Plus and security policies are in place, BIG-IP can be brought into the traffic flow by simply changing the DNS records for "prod.httpbin.internet.lab" to point to BIG-IP instead of NGINX Plus. From this point on, all calls automatically arrive at BIG-IP for inspection, and only those that pass all verifications are forwarded to the next layer.

Configuring Data Path

Data path configuration for this use case is a common one for BIG-IP, which is historically a load balancer. It includes:

- Virtual Server (listens for API calls)
- SSL profile (defines SSL settings)
- SSL certificate and key (cryptographically identifies the virtual server)
- Pool (destination for passed calls)

The picture below shows how all of the configuration pieces work together.

Picture 3.
First, upload the server certificate and key. Next, set up an SSL profile that uses the certificate from the previous step. Finally, create a virtual server and a pool to accept API calls and forward them to the backend.

From this point, BIG-IP accepts all requests that go to the "prod.httpbin.secured-internet.lab" hostname and forwards them to the API management gateway powered by NGINX Plus.

Setting up WAF policy

As you may already know, every API starts from the OpenAPI file, which describes all available endpoints, parameters, authentication methods, etc. This file contains all details related to the API definition, and it is widely used by most tools, including F5's WAF, for self-configuration. An imported OpenAPI file automatically configures the policy with all API-specific parameters, such as the list of allowed URLs, parameters, methods, and so on. WAF configuration therefore narrows down to importing the OpenAPI file and using the policy template for API security.

Create a policy: specify the policy name, template, Swagger file, virtual server, and logging profile.

The API security template pre-configures the WAF policy with all necessary violations and signatures to protect the API backend. The OpenAPI file introduces application-specific configuration to the policy as a list of allowed URLs, parameters, and methods.

That is it. The WAF policy is configured and assigned to the virtual server. Now we can test that only legitimate requests to allowed resources go through.
For example request to URL which does not exist in the policy will be blocked: ubuntu@ip-10-1-1-7:~$ http -v https://prod.httpbin.secured-internet.lab/urldoesntexist GET /urldoesntexist HTTP/1.1 Accept: */* Accept-Encoding: gzip, deflate Connection: keep-alive Host: prod.httpbin.secured-internet.lab User-Agent: HTTPie/0.9.2 HTTP/1.1 403 Forbidden Cache-Control: no-cache Connection: close Content-Length: 38 Content-Type: application/json; charset=utf-8 Pragma: no-cache { "supportID": "1656927099224588298" } Request with SQL injection also blocked: Configuring Bot Defense Starting from BIG-IP release 14.1 proactive bot defense, web scraping, and bot detection features are combined under Bot Defense profile. Therefore current bot defense forms a unified tool to prevent all types of bots from accessing your web asset. Bot detection and mitigation mechanisms heavily rely on signatures and javascript (JS) based challenges. JS challenges run in a client browser and help to identify client type/malicious activities or apply mitigation by injecting a CAPTCHA or slowing down a client by making a browser perform a heavy calculation. Since this article is focused on protecting APIs it is important to note that JS challenges need to be used with caution in this case. Keep in mind that robots might be legitimate users for an API. However, robots similar to bots can not execute javascript. So when a robot receives JS it considers JS as API response. Such response does not align with what a robot expects and the application may break. If you know an API is serving automated processes avoid the use of JS-based challenges or test every JS-based feature in the staging environment first. 
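An automated API client can protect itself against both outcomes described above: a WAF block page and an unexpected non-JSON body such as a JavaScript challenge. The following is a minimal sketch that works on values the client already has (status code, Content-Type header, body). The function name and the three return labels are hypothetical, and the `supportID` field is assumed from the sample blocked response shown earlier; a deployment with a customized blocking page may look different.

```python
import json


def classify_api_response(status, content_type, body):
    """Classify a response as seen by a code-based API client.

    Returns one of:
      "waf_blocked"        - 403 with a JSON body carrying a "supportID"
      "api_json"           - any other well-formed JSON response
      "unexpected_content" - non-JSON body (for example HTML or JavaScript)
    """
    # Ignore parameters such as "; charset=utf-8" when comparing media types.
    media_type = content_type.split(";")[0].strip().lower()
    if media_type != "application/json":
        return "unexpected_content"
    try:
        payload = json.loads(body)
    except ValueError:
        # Header claims JSON but the body does not parse.
        return "unexpected_content"
    if status == 403 and isinstance(payload, dict) and "supportID" in payload:
        return "waf_blocked"
    return "api_json"


blocked = classify_api_response(
    403, "application/json; charset=utf-8", '{ "supportID": "1656927099224588298" }'
)
challenge = classify_api_response(200, "text/html", "<html><script>/* ... */</script></html>")
print(blocked, challenge)
```

A client that sees "unexpected_content" can fail fast and log the event instead of trying to parse the body as an API response, which is the failure mode the paragraph above warns about.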
Configuration to detect and handle many different types of Bots can be simplified by using any of the three pre-configured security modes: Relaxed (Challenge free, mitigates only 100% bad bots based on signatures ) Balanced (Let suspicious clients prove good behavior by executing JS challenges or solving CAPTCHA) Strict (Blocks all kinds of bots, verifies browsers, and collects device id from all clients) It is best to start with the relaxed template and tighten up the configuration as familiarity grows with the traffic that the API endpoints see. Once the profile is created assign it to the same virtual server at "Local Traffic ›› Virtual Servers : Virtual Server List ›› prod.httpbin.secured-internet.lab ›› Security" page. Such configuration performs bot detection based on data that is available in requests such as URL, user-agent, or header order. This mode is safe for all kinds of API users (browser-based or code-based robots) and you can see transaction outcome on "Security ›› Event Logs : Bot Defense : Bot Requests" page. If there are false positives you can adjust bot status or create a new trusted one for your robots through "Security ›› Bot Defense : Bot Signatures : Bot Signatures List". Setting Up DDoS Defense WAF and BOT defenses can detect requests with attack signatures or requests that are generated by malicious clients. However, attackers can send attacks composed of legitimate requests at a high scale, that can bring down an API endpoint. The following features present in the BIG-IP can be used to defeat Denial of Service attacks against API endpoints. Transaction per second (TPS) Based DoS Defense Stress Based DoS Defense Behavioral Anomaly Based DoS Defense Eviction Policies TPS-based DoS defense is the most straightforward protection mechanism. In this mode, BigIP measures requests rate for parameters such as Source IP, URL, Site, etc. 
If the per minute rate becomes higher than the configured threshold, the attack is declared and the selected mitigation modes are applied to all requests identified by the parameter.

The stress-based mode works similarly to TPS, but instead of applying mitigation right after the threshold is crossed, it only mitigates when the protected asset is under stress. This approach significantly reduces false positives.

Behavioral anomaly detection (BADoS) mode offers the most advanced security and accuracy. This mode does not require the administrator to perform any configuration other than turning the feature on. One machine learning algorithm is used to detect whether the protected asset is under attack. Another machine learning algorithm is used to baseline the traffic in peacetime. When the attack detector algorithm identifies that the protected asset is under stress and non-responsive, the second algorithm stops learning and looks for anomalies. Signatures matching these anomalies are automatically created. Anomalies discovered during attack time are likely nefarious and are eliminated from the traffic by applying the dynamically discovered signatures. BADoS automatically builds a good traffic baseline, detects anomalies, and stops them if the API endpoint is under stress.

Conclusion

F5 offers a multi-layered solution for protecting APIs, which is easy to configure. Please connect with me via comments and keep an eye out for more articles in this series. Good luck!

Protecting gRPC based APIs with NGINX App Protect

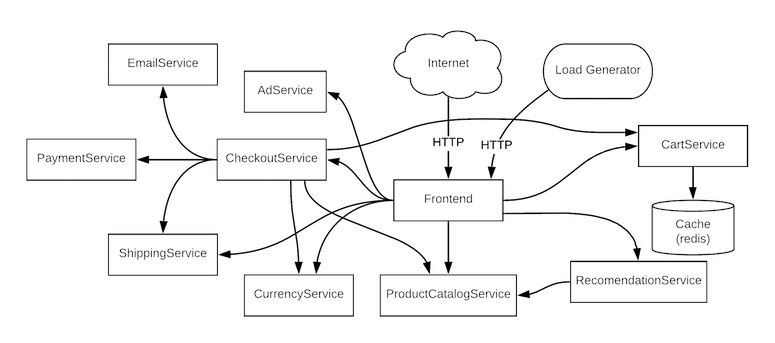

gRPC support on NGINX

Developed back in 2015, gRPC keeps attracting more and more adopters due to its use of HTTP/2.0 as an efficient transport, tight integration with an interface description language (IDL), bidirectional streaming, flow control, bandwidth-effective binary payload, and many other benefits. About two years ago NGINX started to support gRPC (link) as a gateway. However, the market quickly realized that, like any other gateway, it is subject to cyber-attacks and requires strong defense. In response to such challenges, App Protect WAF for NGINX just released a compelling set of security features to defend gRPC based services.

gRPC Security

It is a fact that App Protect for NGINX provides much more advanced security and performance than any ModSecurity based WAF (most of the WAF market). Hence, even before explicit gRPC support, the App Protect armory in conjunction with NGINX itself could protect web services from a wide variety of threats like:

- Injection attacks
- Sensitive data leakage
- OS Command execution
- Buffer Overflow
- Threat Campaigns
- Authentication attacks
- Denial-of-service and more (link)

With gRPC support, App Protect provides an even deeper level of security. The newly added gRPC content profile parses the binary payload, makes sure there is no malicious data, and ensures its structure conforms to the interface definition (protocol buffers) (link).

gRPC Profile

Similar to JSON and XML profiles, a gRPC profile attaches to a subset of URLs and serves to define and enforce a payload structure. The gRPC profile extracts application URLs and request/response structures from an Interface Definition Language (IDL) file. The IDL file is a mandatory part of every gRPC based application. The following policy listing shows an example of referencing the IDL file from a gRPC profile.
{ "policy": { "name": "online-boutique-policy", "grpc-profiles": [ { "name": "hipstershop-grpc-profile", "defenseAttributes": { "maximumDataLength": 100, "allowUnknownFields": true }, "idlFiles": [ { "idlFile": { "$ref": "file:///hipstershop/demo.proto" }, "isPrimary": true } ] ...omitted... } ], ...omitted... } } gRPC profile references the IDL file to extract all required data to instantiate a positive security model. This means that all URLs and payload formats from it will be considered as valid and pass. To catch anomalies in the gRPC traffic, App Protect introduces three kinds of violations. Requests that don't match IDL trigger "VIOL_GRPC_MALFORMED" or "VIOL_GRPC_METHOD". Requests with unknown or longer than allowed fields cause "VIOL_GRPC_FORMAT" violation. In addition to the above checkups, App Protect looks up signatures or disallowed meta characters in gRPC data. Because of this, it protects applications from a wide variety of attacks that worked for plain HTTP traffic. The following listing gives an example of signatures and meta characters enforcement in gRPS profile (docs). "grpc-profiles": [ { "name": "online-boutique-profile", "attackSignaturesCheck": true, "signatureOverrides": [ { "signatureId": 200001213, "enabled": false }, { "signatureId": 200089779, "enabled": false } ], "metacharCheck": true, ...omitted... } ], Example Sandbox Here is an example of how to configure NGINX as a gRPC gateway and defend it with the App Protect. As a demo application, I use "Online Boutique" (link). This application consists of multiple micro-services that talk to each other in gRPC. The picture below represents the structure of entire application. Picture 1. I slightly modified the application deployment such that the frontend doesn't talk to micro-services directly but through NGINX gateway that proxies all calls. Picture 2. Before I jump to App Protect configuration, here is how NGINX config looks like for proxying gRPC services. 

    http {
        include /etc/nginx/mime.types;
        default_type application/octet-stream;
        sendfile on;
        keepalive_timeout 65;
        include /etc/nginx/conf.d/upstreams.conf;

        server {
            server_name boutique.online;
            listen 443 http2 ssl;
            ssl_certificate /etc/nginx/ssl/nginx.crt;
            ssl_certificate_key /etc/nginx/ssl/nginx.key;
            ssl_protocols TLSv1.2 TLSv1.3;

            include conf.d/errors.grpc_conf;
            default_type application/grpc;

            app_protect_enable on;
            app_protect_policy_file "/etc/app_protect/conf/policies/online-boutique-policy.json";
            app_protect_security_log_enable on;
            app_protect_security_log "/opt/app_protect/share/defaults/log_grpc_all.json" stderr;

            include conf.d/locations.conf;
        }
    }

The listing above is self-explanatory. The NGINX virtual server "boutique.online" listens on port 443 for HTTP/2 requests. Requests are routed to one of the micro-services from "upstreams.conf" based on the map defined in "locations.conf". Below are examples of these config files.

    $ cat conf.d/locations.conf
    location /hipstershop.AdService/ {
        grpc_pass grpc://adservice;
    }
    location /hipstershop.CartService/ {
        grpc_pass grpc://cartservice;
    }
    ...omitted...

    $ cat conf.d/upstreams.conf
    upstream adservice {
        server 10.101.11.63:9555;
    }
    upstream cartservice {
        server 10.99.75.80:7070;
    }
    ...omitted...

For instance, any call with a URL starting with "/hipstershop.AdService" is routed to the "adservice" upstream, and so on. For more details on NGINX configuration for gRPC, refer to this blog article or the official documentation.

App Protect is enabled on the virtual server. Hence, all requests are subject to inspection. Let's take a closer look at the security policy applied to the virtual server above.

    {
        "policy": {
            "name": "online-boutique-policy",
            "grpc-profiles": [
                {
                    "name": "online-boutique-profile",
                    "idlFiles": [
                        {
                            "idlFile": {
                                "$ref": "https://raw.githubusercontent.com/GoogleCloudPlatform/microservices-demo/master/pb/demo.proto"
                            },
                            "isPrimary": true
                        }
                    ],
                    "associateUrls": true,
                    "defenseAttributes": {
                        "maximumDataLength": 10000,
                        "allowUnknownFields": false
                    },
                    "attackSignaturesCheck": true,
                    "signatureOverrides": [
                        { "signatureId": 200001213, "enabled": true },
                        { "signatureId": 200089779, "enabled": true }
                    ],
                    "metacharCheck": true
                }
            ],
            "urls": [
                { "name": "*", "type": "wildcard", "method": "*", "$action": "delete" }
            ]
        }
    }

The policy has one gRPC profile called "online-boutique-profile". The profile references the IDL file for the demo application (similar to an OpenAPI file reference) as the source of the application structure. The "associateUrls: true" directive instructs App Protect to extract all possible URLs from the IDL file and enforce the parent profile on them. Notice that the "urls" section removes the wildcard URL "*" from the policy so that only URLs present in the IDL are allowed, which establishes a positive security model. The "defenseAttributes" directive enforces payload length and tolerance to unknown parameters. The "attackSignaturesCheck" and "metacharCheck" directives look for malicious patterns in the entire request. Now let's see what this policy blocks and what it passes.

Experiments

First of all, let's make sure that valid traffic passes. As an example, I construct a valid call to the "Ads" micro-service based on the IDL content below.

    syntax = "proto3";
    package hipstershop;

    service AdService {
        rpc GetAds(AdRequest) returns (AdResponse) {}
    }

    message AdRequest {
        repeated string context_keys = 1;
    }

Based on the definition above, a valid call to the service should go to the "/hipstershop.AdService/GetAds" URL and contain "context_keys" identifiers in the payload. I use the "grpcurl" tool to construct and send the call that passes.
$ grpcurl -proto ../microservices-demo/pb/demo.proto -d '{"context_keys": "example"}' boutique.online:8443 hipstershop.AdService/GetAds { "ads": [ { "redirectUrl": "/product/2ZYFJ3GM2N", "text": "Film camera for sale. 50% off." }, { "redirectUrl": "/product/0PUK6V6EV0", "text": "Vintage record player for sale. 30% off." } ] } As you may note, the URL constructs out of package name, service name, and method name from IDL. Therefore it is expected that all calls which don't comply IDL definition will be blocked. A call to invalid service. $ curl -X POST -k --http2 -H "Content-Type: application/grpc" -H "TE: trailers" https://boutique.online:8443/hipstershop.DoesNotExist/GetAds <html><head><title>Request Rejected</title></head><body>The requested URL was rejected. Please consult with your administrator.<br><br>Your support ID is: 16472380185462165521<br><br><a href='javascript:history.back();'>[Go Back]</a></body></html> A call to an unknown service. Notice that the previous call got "html" response page when this one got a special "grpc" response. This happened because only valid URLs considered as type gRPC others are "html" by default. A call to an invalid method. $ curl -v -X POST -k --http2 -H "Content-Type: application/grpc" -H "TE: trailers" https://boutique.online:8443/hipstershop.AdService/DoesNotExist < HTTP/2 200 < content-type: application/grpc; charset=utf-8 < cache-control: no-cache < grpc-message: Operation does not comply with the service requirements. Please contact you administrator with the following number: 16472380185462166031 < grpc-status: 3 < pragma: no-cache < content-length: 0 A call with junk in the payload. curl -v -X POST -k --http2 -H "Content-Type: application/grpc" -H "TE: trailers" https://boutique.online:8443/hipstershop.AdService/GetAds -d@trash_payload.bin < HTTP/2 200 < content-type: application/grpc; charset=utf-8 < cache-control: no-cache < grpc-message: Operation does not comply with the service requirements. 
Please contact you administrator with the following number: 13966876727165538516 < grpc-status: 3 < pragma: no-cache < content-length: 0

All calls that are invalid from the gRPC point of view are blocked. In the same way, attack signatures are caught in the gRPC payload ("alert() (Parameter)" signature).

    $ grpcurl -proto ../microservices-demo/pb/demo.proto -d '{"context_keys": "example"}' boutique.online:8443 hipstershop.AdService/GetAds
    {
      "ads": [
        { "redirectUrl": "/product/2ZYFJ3GM2N", "text": "Film camera for sale. 50% off." },
        { "redirectUrl": "/product/0PUK6V6EV0", "text": "Vintage record player for sale. 30% off." }
      ]
    }

Conclusion

With gRPC support, App Protect provides even deeper controls for gRPC traffic along with all the existing security inventory available for plain HTTP traffic. Keep in mind that this release only supports Unary gRPC traffic and doesn't support the server reflection feature. Refer to the official documentation for detailed information on gRPC support (link).

API Discovery and Auto Generation of Swagger Schema

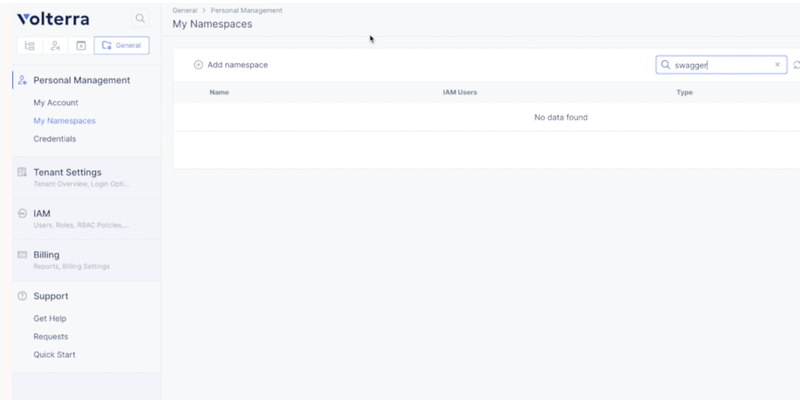

API Discovery

API discovery helps in learning, searching, and identifying loopholes when apps communicate via APIs, especially in a large microservices ecosystem. After discovery, the system analyzes the APIs and then knows how to secure them from malicious requests.

Customer Challenges

With large ecosystems, integrations, and distributed microservices, there is a huge increase in the number of API attacks. Most of these API attacks come from authenticated users and are very hard to detect. Also, security controls for web apps are very different from security controls for APIs.

Customers' increased adoption of microservice architecture has increased the complexity of managing, debugging, and securing applications. With microservices, all the traffic between apps uses REST/gRPC APIs that are multiplexed on the same network port (e.g., HTTP 443), and as a result, network-level security and connectivity are not very useful anymore.

With customers adopting a multi-cloud strategy, services might reside on different clouds/VPCs, so customers need a uniform way to apply app-level policies that enforce zero-trust API security across service-to-service communication. Also, the SecOps team has the administrative overhead of manually tracking changes to APIs in a multi-cloud environment, and the Dev/DevOps team needs an easier tool to debug the apps.

Solution Description

Volterra's AI/ML learning engine uniquely discovers API endpoints used during service-to-service communication. This feature provides users with debugging capabilities for distributed apps that are deployed on a single cloud or across multiple clouds. The discovered API endpoints can be used to create an API-level security policy to enforce tighter service-to-service communication. Also, the swagger schema helps in analyzing and debugging the request logs. SecOps can download and use it for audit purposes. It also serves as an input to third-party tools.
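To make the idea of deriving endpoints from observed traffic more concrete, here is a minimal Python sketch. It is not Volterra's learning engine, whose internals the article does not describe; it only illustrates the general technique of collapsing variable-looking path segments into placeholders and grouping requests by method and path template. The function names, the regular-expression heuristics, the `x-observed-count` key, and the sample request paths are all assumptions made for this illustration, and the result is a swagger-style `paths` fragment rather than a complete, valid Swagger document.

```python
import re
from collections import defaultdict

# Heuristics (assumptions for this sketch): purely numeric segments and
# UUID-shaped segments are treated as variable path parameters.
_NUMERIC = re.compile(r"^\d+$")
_UUID = re.compile(r"^[0-9a-fA-F]{8}(-[0-9a-fA-F]{4}){3}-[0-9a-fA-F]{12}$")


def to_template(path: str) -> str:
    """Drop the query string and collapse variable-looking segments into '{id}'."""
    segments = []
    for seg in path.split("?")[0].strip("/").split("/"):
        if _NUMERIC.match(seg) or _UUID.match(seg):
            segments.append("{id}")
        else:
            segments.append(seg)
    return "/" + "/".join(segments)


def discover_endpoints(requests):
    """Group (method, path) pairs into a swagger-style 'paths' mapping."""
    paths = defaultdict(dict)
    for method, path in requests:
        template = to_template(path)
        # 'x-observed-count' is a made-up extension key used only in this sketch.
        entry = paths[template].setdefault(method.lower(), {"x-observed-count": 0})
        entry["x-observed-count"] += 1
    return dict(paths)


# Hypothetical request log entries (method, path).
observed = [
    ("GET", "/api/cart/42"),
    ("GET", "/api/cart/97"),
    ("POST", "/api/cart/42/items"),
    ("GET", "/api/products?page=2"),
]
discovered_paths = discover_endpoints(observed)
```

In this toy example the two `GET /api/cart/<number>` requests end up under a single `/api/cart/{id}` template. A mapping of this kind is the sort of input from which an API-level allow-list policy could be built.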
Step by step process to enable API discovery and auto-generation of swagger schema

There are three main steps in enabling API discovery and downloading the swagger schema:

Step 1. Enabling API discovery, which means creating an app-type config.

Step 2. Deploying an application on Kubernetes and creating the HTTP load balancer.

Step 3. Observing the app through the service mesh graph and the HTTP load balancer dashboard, which includes downloading the swagger schema.

Let us see how to configure this on VoltConsole:

Step 1

Create an application namespace under the general namespace tab: General > My Namespaces > Add namespace.

Step 2

Next, we need to create an app type, which is under the shared tab: Shared > AI&ML > Add app type. Here, while creating the new app type, we need to enable the API discovery feature and set the markup setting to learn from direct traffic. Save and exit.

Step 3

Next, let's go to the app tab to deploy the app: App > Applications > Virtual K8s > Add virtual K8s. While creating the new virtual K8s, give it a name and select ves-io-all-res as the virtual site. Save and exit. We have to wait until the cluster status is ready. Next, we download the kubeconfig file for the virtual K8s cluster. Now, we use kubectl to deploy the application using the command below:

    kubectl --kubeconfig $YOURKUBECONFIGFILE apply -f kubenetes-manifest.yml --namespace $YOURNAMESPACE

Step 4

Now, we can go to VoltConsole and check the status of this app. We wait for the pods to become ready, and once all the pods are ready, we can create the load balancer (LB) for the frontend service. Next, we give the LB a name and label the HTTP LB with the key ves-io/app_type and the value default (which is the name of the app type). Provide the domain name under basic config. This guide provides instructions on how to delegate your DNS domain to Volterra using VoltConsole. Delegating the domain to Volterra enables Volterra to manage the domain and be the authoritative domain name server for the domain.
Volterra checks the DNS domain configured in the virtual host and verifies that the tenant owns that domain.

Step 5

Now select the origin pool, and create a new pool with the origin server type and name as shown below. Also, select the network on the site as vK8s network on site. Select the correct port number for the service, then save and exit the configuration.

Step 6

Now, let's navigate to HTTP LB dashboard > security monitoring > API endpoints. Here you will see all the API endpoints that are discovered on the HTTP LB, and you can download the swagger right from the HTTP LB dashboard page.

Step 7

Now, let's navigate to the service mesh graph, where we can view all of the application's microservices, how they are connected, and how the east-west and north-south traffic is flowing: App Namespace -> Mesh -> Service Mesh -> API endpoints tab.

Step 8

Now, let's click on the API endpoints tab, and we will see all the API endpoints discovered for the default app type. You can click on the Schema, go to the PII & learned API data, and click on the download button to download the swagger schema for each specific API endpoint. If we want to download the swagger schema for the entire application, we go back to the API endpoints tab and download the swagger as seen below:

Conclusion

With the attack surface increased by distributed microservices and other large ecosystems, having a feature such as API discovery and being able to auto-generate the swagger file really helps in mitigating malicious API attacks. This feature provides users with debugging capabilities for distributed apps that are deployed on a single cloud or across multiple clouds. In addition, the discovered API endpoints can be used to create an API-level security policy to enforce tighter service-to-service communication. The request logs are continuously analyzed to learn the schema for every learned API endpoint.
The generated swagger schema can be downloaded through the UI or the Volterra API. SecOps can download and use it for audit purposes, and the Dev/DevOps team can use it to see the delta in variables and paths between different versions. It can also be used as input to other third-party tools, and it reduces the administrative overhead of managing the documents.

Publishing an API using NGINX Controller

Overview

API management is a complex process of governing the design and implementation of APIs. This article introduces a solution for implementing an API management system based on the market-leading NGINX Plus platform. The NGINX solution contains two main components: NGINX Plus and NGINX Controller. NGINX Plus is the data processing unit that handles the API traffic. NGINX Controller manages NGINX Plus instances and provides a human-consumable interface to handle the API lifecycle.

Managing a single NGINX Plus instance and its configuration is relatively straightforward. However, managing multiple NGINX Plus instances requires a management system. NGINX Controller allows administrators to centrally configure, monitor, and analyze telemetry from multiple NGINX Plus instances regardless of their location. Instances can be deployed on-premises or in any public cloud infrastructure.

Architecture and Network Topology

NGINX Controller manages multiple NGINX Plus instances, which act as API gateways. In the diagram below, data plane communication flows are shown in blue and control plane communications are shown in green.

Picture 1. "Controller to Nginx Plus interactions"

The NGINX Plus instances run the Controller agent, which registers the instances with NGINX Controller. The agent uses an API key issued by the Controller to register itself. The key is used to authenticate control-plane data in transit between the NGINX Plus instance and the Controller. Once NGINX Plus is registered with the Controller, the latter fully controls the instance. Subsequently, the Controller pushes the configuration to the NGINX Plus instance and monitors telemetry.

IP connectivity is provided by the networking stack of the underlying operating systems where the NGINX Plus and NGINX Controller instances run. Those systems need to be able to reach each other over a network.
Note: The following ports need to be open to allow communication between NGINX Plus, NGINX Controller, and the database:

- DB: port 5432 TCP (incoming to DB from NGINX Controller host)
- NGINX Controller: 80 TCP (incoming from NGINX Plus instances)
- NGINX Controller: 443 TCP (incoming from where you are accessing from a browser, for example, an internal network)
- NGINX Controller: 8443 TCP (incoming from NGINX Plus instances)
- NGINX Controller: 6443 TCP (incoming requests to the Kubernetes master node; used for the Kubernetes API server)
- NGINX Controller: 10250 TCP (incoming requests to the Kubernetes worker node; used for the Kubelet API)

NGINX Plus uses the underlying operating system's networking stack to accept and forward data plane traffic. As a daemon on a Linux system, it listens on the IP addresses and ports (sockets) specified in its configuration. NGINX Plus can reuse a socket to deliver data to many different applications that sit behind it.

As an example, assume NGINX Plus listens on network socket 192.168.1.1:80 and receives requests for multiple applications such as api.xyz.com, www.xyz.com, api.pqr.com, www.pqr.com, etc. NGINX Plus is configured with a virtual server for each of the applications that it serves. When a request arrives, NGINX Plus examines the Host header in the request and matches it to the appropriate virtual server. This makes it possible to host multiple applications behind the single socket 192.168.1.1:80 on the same machine, rather than allocating a separate, non-standard port to each application.

Publishing an API

Once the NGINX Plus and NGINX Controller instances are deployed and installed on the target systems, they can be configured to handle API traffic.
This article doesn't contain step-by-step instructions for registering NGINX Plus instances on the Controller. Administrators are welcome to use the official documentation, which is available online: link. Once the registration process is complete, an administrator can access a list of all registered instances and review graphs created from the telemetry data sent back to the Controller.

Picture 2. Controller dashboard lists managed NGINX Plus instances

The system is now ready to define APIs and publish them through selected NGINX Plus instances regardless of their location. The diagram below describes a scenario where a company publishes and maintains both a 'test' and a 'production' API deployment.

Picture 3. Deployment layout

As an example API, I use the Httpbin app. It provides a number of API endpoints that generate all kinds of responses depending on the request. The following steps describe how to publish the 'test' version of the API using NGINX Controller.

1) Create an environment. An environment is a logical container that aggregates all kinds of resources (certificates, gateways, apps, etc.) for a particular deployment. For example, all resources that belong to the testing deployment go to the 'test' environment, and resources for production use go to the 'prod' environment. Such segregation helps to make configuration less error-prone.
2) Add a certificate to publish an API via a secure channel.
3) Create a gateway. It is similar to the virtual server concept and defines HTTP listener properties.
4) Create an application. It provides a logical abstraction for an application. An application may include multiple components, including an API.
5) Create an API definition. This is a logical container for an API.
6) Create an API version. An API version enumerates all endpoints of an API.
7) Create a published API. A published API represents an API version deployed to a particular gateway and forwarding API calls to a backend.
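The relationships between the objects created in the seven steps above can be pictured with a small Python sketch. This is only an illustrative data model and not the NGINX Controller API: the class names, field names, generated object names, the `publish` helper, the production hostname, and the backend URLs are all hypothetical. The point it tries to show is that an API version carries no reference to an environment, so the same version object can be published through gateways in different environments.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Environment:
    name: str  # e.g. "test" or "prod"


@dataclass(frozen=True)
class Gateway:
    name: str
    environment: Environment
    hostname: str
    certificate: str  # name of a certificate kept in the same environment


@dataclass(frozen=True)
class App:
    name: str
    environment: Environment


@dataclass(frozen=True)
class ApiVersion:
    definition: str   # name of the API definition this version belongs to
    version: str
    endpoints: tuple  # (method, path) pairs; note: no environment field


@dataclass(frozen=True)
class PublishedApi:
    name: str
    app: App
    gateway: Gateway
    api_version: ApiVersion
    backend_url: str


def publish(api_version, env_name, hostname, backend_url):
    """Hypothetical helper that walks through steps 1-4 and 7 for one environment."""
    env = Environment(env_name)                                              # step 1
    gateway = Gateway(f"{env_name}-gw", env, hostname, f"{env_name}-cert")   # steps 2-3
    app = App("httpbin", env)                                                # step 4
    return PublishedApi(f"httpbin-{env_name}", app, gateway, api_version, backend_url)


# Steps 5-6: one definition and one version, created independently of any environment.
httpbin_v1 = ApiVersion("httpbin", "v1", (("GET", "/uuid"),))

# Step 7, done once per environment with the very same version object.
test_api = publish(httpbin_v1, "test", "test.httpbin.internet.lab",
                   "http://backend.test.example:8080")
prod_api = publish(httpbin_v1, "prod", "httpbin.example.com",
                   "http://backend.prod.example:8080")
```

Both `test_api` and `prod_api` point at the same `httpbin_v1` object, while their gateways, apps, and certificates stay separated by environment.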
Once the API is published, NGINX Controller pushes the configuration to the corresponding NGINX Plus instances.

    user@nginx-plus-2$ cat /etc/nginx/nginx.conf | grep -ie "server {" -A 7
    server {
        listen 80;
        listen 443 ssl;
        server_name test.httpbin.internet.lab;
        status_zone test.httpbin.internet.lab;
        set $apimgmt_entry_point 3;
        ssl_certificate /etc/controller-agent/configurator/auxfiles/cert.crt;
        ssl_certificate_key /etc/controller-agent/configurator/auxfiles/cert.key;

Now NGINX Plus is ready to process API calls and forward them to the backend.

    user@client-vm$ http -v https://test.httpbin.internet.lab/uuid
    GET /uuid HTTP/1.1
    Accept: */*
    Accept-Encoding: gzip, deflate
    Connection: keep-alive
    Host: test.httpbin.internet.lab
    User-Agent: HTTPie/0.9.2

    HTTP/1.1 200 OK
    Access-Control-Allow-Credentials: true
    Access-Control-Allow-Origin: *
    Connection: keep-alive
    Content-Length: 53
    Content-Type: application/json
    Date: Thu, 12 Dec 2019 22:27:59 GMT
    Server: nginx/1.17.6

    {
        "uuid": "08232fcb-1e41-4433-adc3-2818a971647f"
    }

As you may have noticed, the API definition and API version abstractions don't belong to an environment. This means that exactly the same definition and version of an API may be published to any environment. For example, once all tests are complete in the 'test' environment, it is easy to re-publish to production by creating another published API in the 'prod' environment. In this way, NGINX Controller significantly simplifies API lifecycle management.