How to onboard F5 BIG-IP VE in Cisco CSP 2100/5000 for NFV solutions deployment

Are you considering Network Functions Virtualization (NFV) solutions for your data center? Are you wondering how your current F5 BIG-IP solutions can be translated into an NFV environment? What NFV platform can be used with F5 NFV solutions in your data center?

Good news! F5 has certified its BIG-IP NFV solutions with Cisco Cloud Services Platform (CSP). Click here for a complete list of versions validated.

Cisco CSP is an open x86 Linux Kernel-based virtual machine (KVM) software and hardware platform that is ideal for colocation and data center network functions virtualization (NFV). F5 has a broad portfolio of VNFs available on BIG-IP which include Virtual Firewall (vFW), Virtual Application Delivery Controllers (vADC), Virtual Policy Manager (vPEM), Virtual DNS (vDNS) and other BIG-IP products.

F5 VNF + Cisco CSP 2100: together they provide a joint solution that allows network administrators to quickly and easily deploy F5 VNFs through a simple, built-in, native web user interface (WebUI), command-line interface (CLI), or REST API.

BIG-IP VE Key Features in CSP

- 10G throughput with SR-IOV PCIE or SR-IOV passthrough
- Intel X710 NIC - Quad 10G port supported
- All BIG-IP modules can run in CSP 2100

Follow the steps below to onboard F5 BIG-IP VE in Cisco CSP with a Day0 file.

Day0 file contents and creation

Sample user_data

    #cloud-config
    write_files:
      - path: /config/onboarding/waitForF5Ready.sh
        permissions: 0755
        owner: root:root
        content: |
          #!/bin/bash
          # This script checks the prompt while the device is
          # booting up, waiting until it is ready to accept
          # the provisioning commands.
          echo `date` -- Waiting for F5 to be ready
          sleep 5
          while [[ ! -e '/var/prompt/ps1' ]]; do
            echo -n '.'
            sleep 5
          done
          sleep 5
          STATUS=`cat /var/prompt/ps1`
          while [[ ${STATUS}x != 'NO LICENSE'x ]]; do
            echo -n '.'
            sleep 5
            STATUS=`cat /var/prompt/ps1`
          done
          echo -n ' '
          while [[ ! -e '/var/prompt/cmiSyncStatus' ]]; do
            echo -n '.'
            sleep 5
          done
          STATUS=`cat /var/prompt/cmiSyncStatus`
          while [[ ${STATUS}x != 'Standalone'x ]]; do
            echo -n '.'
            sleep 5
            STATUS=`cat /var/prompt/cmiSyncStatus`
          done
          echo
          echo `date` -- F5 is ready...
      - path: /config/onboarding/setupLogging.sh
        permissions: 0755
        owner: root:root
        content: |
          #!/bin/bash
          # This script creates a file to collect the output
          # of the provisioning commands for debugging.
          FILE=/var/log/onboard.log
          if [ ! -e $FILE ]
          then
            touch $FILE
            nohup $0 0<&- &>/dev/null &
            exit
          fi
          exec 1<&-
          exec 2<&-
          exec 1<>$FILE
          exec 2>&1
      - path: /config/onboarding/onboard.sh
        permissions: 0755
        owner: root:root
        content: |
          #!/bin/bash
          # This script sets up the logging, waits until the device
          # is ready to provision and then executes the commands
          # to set up networking, users and register with F5.
          . /config/onboarding/setupLogging.sh
          if [ -e /config/onboarding/waitForF5Ready.sh ]
          then
            echo "/config/onboarding/waitForF5Ready.sh exists"
            /config/onboarding/waitForF5Ready.sh
          else
            echo "/config/onboarding/waitForF5Ready.sh is missing"
            echo "Failsafe sleep for 5 minutes..."
            sleep 5m
          fi
          echo "Configure access"
          tmsh modify sys global-settings hostname <<hostname>>
          tmsh modify auth user admin shell bash password <<admin_password>>
          tmsh modify sys db systemauth.disablerootlogin value true
          tmsh save /sys config
          echo "Disable mgmt-dhcp..."
          tmsh modify sys global-settings mgmt-dhcp disabled
          echo "Set Management IP..."
          # Example value for <<mgmt_ip/mask>>: 10.192.74.46/24
          tmsh create /sys management-ip <<mgmt_ip/mask>>
          tmsh create /sys management-route default gateway <<gateway_ip>>
          echo "Save changes..."
          tmsh save /sys config partitions all
          echo "Set NTP..."
          tmsh modify sys ntp servers add { 0.pool.ntp.org 1.pool.ntp.org }
          tmsh modify sys ntp timezone America/Los_Angeles
          echo "Add DNS server..."
          # Despite its name, this placeholder takes the DNS server IP.
          tmsh modify sys dns name-servers add { <<ntp_ip>> }
          tmsh modify sys httpd ssl-port 8443
          tmsh modify net self-allow defaults add { tcp:8443 }
          if [[ "8443" != "443" ]]
          then
            tmsh modify net self-allow defaults delete { tcp:443 }
          fi
          tmsh mv cm device bigip1 <<hostname>>
          tmsh save /sys config
          echo "Register F5..."
          tmsh install /sys license registration-key <<license_key>>
          tmsh show sys license
          date
    runcmd: [nohup sh -c '/config/onboarding/onboard.sh' &]

Sample meta_data.json

    {
      "uuid": "1d9d6d3a-1d36-4db7-8d7c-63963d4d6f20",
      "hostname": "<<hostname>>"
    }

Preparation

This assumes the contents are in a directory named ‘example_files/iso_contents/openstack/2012-08-10’. Once the values above are entered into the user_data file, create the ISO file:

    genisoimage -volid config-2 -rock -joliet -input-charset utf-8 -output f5.iso example_files/iso_contents/

or (depending on your OS)

    mkisofs -R -V config-2 -o f5.iso example_files/iso_contents/

Process on CSP

1. Download the F5 BIG-IP VE (release 12.1.2 or later) qcow image from http://downloads.f5.com
2. Log into Cisco CSP 2100.
3. Go to "Configuration" -> "Repository" -> "+".
4. Click on "Browse" and locate the F5 BIG-IP VE qcow image, then click "Upload".
5. Go back to "Configuration" -> "Repository" and follow the same upload process for the Day0 ISO file. At this point you should be able to view both the qcow and Day0 ISO image in the repository tab.
6. To create an F5 BIG-IP virtual function, go to "Configuration" -> "Services" -> "+". A wizard will pop up.

After deployment

Once the F5 BIG-IP VE virtual function deployment in Cisco CSP 2100 is completed, you can monitor the BIG-IP VE boot-up progress by clicking "Console".

Since the BIG-IP is being booted with a Day0 file, NTP/DNS configurations are already present on the BIG-IP. The BIG-IP will be licensed and ready to be configured. The MGMT IP and default username/password were specified in the Day0 file.
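The preparation step described earlier (staging user_data and meta_data.json under the config-drive directory, then building the ISO) can be sketched as a small script. This is only an illustration under stated assumptions: the `WORKDIR` variable, the stand-in file contents, the placeholder check, and the fallback when no ISO tool is installed are not part of the original article; the directory layout and the genisoimage/mkisofs commands are the ones given above.

```shell
# Sketch only: WORKDIR, the stand-in files, and the placeholder check are assumptions.
set -eu

WORKDIR="${WORKDIR:-$(mktemp -d)}"
ISO_ROOT="$WORKDIR/example_files/iso_contents"
CONTENT_DIR="$ISO_ROOT/openstack/2012-08-10"
ISO_OUT="$WORKDIR/f5.iso"

mkdir -p "$CONTENT_DIR"

# Stand-in files so the sketch runs on its own; copy the real Day0 files here instead.
[ -e "$CONTENT_DIR/user_data" ] || printf '#cloud-config\n' > "$CONTENT_DIR/user_data"
[ -e "$CONTENT_DIR/meta_data.json" ] || \
    printf '{ "uuid": "1d9d6d3a-1d36-4db7-8d7c-63963d4d6f20", "hostname": "<<hostname>>" }\n' \
        > "$CONTENT_DIR/meta_data.json"

# Warn if any <<placeholder>> from the samples has not been filled in yet.
if grep -l '<<[^<>]*>>' "$CONTENT_DIR/user_data" "$CONTENT_DIR/meta_data.json" >/dev/null; then
    echo "Warning: unfilled <<...>> placeholders remain in the Day0 files" >&2
fi

# Build the ISO with whichever tool is available, using the article's options.
if command -v genisoimage >/dev/null 2>&1; then
    genisoimage -volid config-2 -rock -joliet -input-charset utf-8 \
        -output "$ISO_OUT" "$ISO_ROOT"
elif command -v mkisofs >/dev/null 2>&1; then
    mkisofs -R -V config-2 -o "$ISO_OUT" "$ISO_ROOT"
else
    echo "Neither genisoimage nor mkisofs found; ISO not built" >&2
fi
```

Running it with `WORKDIR` pointing at a directory that already holds the real files leaves them untouched and only builds the ISO; the placeholder warning is a convenience for catching values that were never filled in before upload.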
The Day0 file can be enhanced to add more networking and other configuration parameters if needed by specifying the appropriate tmsh commands.

Make sure the BIG-IP interface mapping to the CSP 2100 VNIC is correct by verifying the MAC address assignment. Consult the CSP 2100 guide to obtain the CSP 2100 VF VNIC MAC address information.

- To check the BIG-IP MAC address, go to "Network" -> "Interfaces".
- To check on the CSP, click on the deployed service, scroll to the bottom, and expand the VNIC information tab.

Configure VLANs consistent with the CSP 2100 VLAN tag configuration, and make sure VLANs are untagged at the BIG-IP level.

After BIG-IP VE connectivity is established in the network, the rest of the configuration, such as Self-IPs, default gateway, and virtual servers, is the same as for any BIG-IP VE.

To learn more about the F5 and Cisco partnership and joint solutions, visit https://f5.com/solutions/technology-alliances/cisco

For more information about Cisco CSP, visit http://www.cisco.com/go/csp

Click here for a complete list of BIG-IP and CSP versions validated.

SDN: An architecture for operationalizing networks

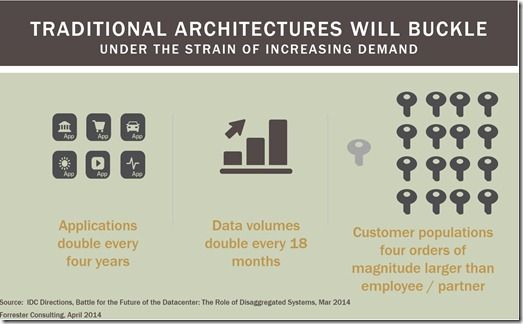

As we heard at last week’s Open Networking Summit 2014, managing change and complexity in data centers is becoming increasingly difficult as IT and operations are constantly being pressured to deliver more features, more services, and more applications at ever increasing rates. The solution is to design the network for rapid evolution in a controlled and repeatable manner similar to how modern web applications are deployed.

This is happening because it is no longer sufficient for businesses to deliver a consistent set of services to their customers. Instead, the world has become hyper-competitive and it has become necessary to constantly deliver new features, not only to capture new customers but also to retain existing ones. This new world order poses a significant conflict for the operations side of the business: their charter is to ensure continuity of service, and they have traditionally relied on careful (often expensive) planning efforts to preserve it when changes are needed. The underlying problem is that the network is not operationalized, and changes are instead accomplished through manual and scripted management. The solution for operations is to move towards architectures that are designed for rapid evolution and away from manual and scripted processes.

Software Defined Networking addresses these challenges by defining a family of architectures for solving these types of operational challenges, and operations teams are latching on with a rarely seen appetite. The key to the success of SDN architectures is the focus on abstraction of both the control and data planes for the entire network via open APIs (not just the stateless Layer 0-4 portions).
The first layer of abstraction allows for a centralized control plane system called an SDN Controller, or just Controller, that understands the entire configuration of the network and programmatically configures the network, increasing the velocity and fidelity of configurations by removing humans from the configuration loop – humans are still needed to teach the Controller what to do. These Controllers allow for introspection on the configuration and allow for automated deployments. As Controllers mature, I expect them to gain the capabilities of a configuration management system (CMS), allowing network architects to revert changes virtually instantaneously.

The second layer of abstraction allows network architects or third parties to programmatically extend the capabilities of a data path element. This can be as seemingly simple as adding a match-and-forward rule to a switch (e.g., OpenFlow) or as seemingly complex as intercepting a fully parsed HTTP request, parsing an XML application object contained within, and then interrogating a database for a forwarding decision (e.g., LineRate and F5) based on the parsed application data.

However, realizing the full operational benefits of SDN architectures requires that the entire network be designed with SDN architectural principles, including both the stateless components (e.g., switching, routing, and virtual networking) and the stateful components (e.g., L4 firewalls, L7 application firewalls, and advanced traffic management). Early on, as SDN evolved from a university research project, SDN proponents proposed pure stateless Layer 2-3 networking, ignoring the complexities of managing modern networks that call for stateful L4-7 services. The trouble with this approach is that every additional operational domain disproportionately increases operational complexities, as the domains need to be “manually” kept in sync.
Recognizing this need, major Layer 2-4 vendors, including Cisco, have formed partnerships with F5 and other stateful Layer 4-7 vendors to complement their portfolios.

With the goal of helping customers operationalize their networks, I offer the following unifying definition of SDN for discussion: “SDN is a family of architectures (not technologies) for operationalizing networks with reduced operating expenses, reduced risks, and improved time to market by centralizing control into a control plane that programmatically configures and extends all network data path elements and services via open APIs.”

Over the next few months I’ll dig deeper into different aspects of SDN – stay tuned!

IoT Ready Infrastructure

IoT applications will come in all shapes and sizes but no matter the size, availability is paramount to support both customers and the business. The most basic high-availability architecture is the typical three-tier design. A pair of ADCs in the DMZ terminates the connection. They in turn intelligently distribute the client request to a pool (multiple) of IoT application servers which then query the database servers for the appropriate content. Each tier has redundant servers so in the event of a server outage, the others take the load and the system stays available.

This is a tried and true design for most operations and provides resilient application availability, IoT or not, within a typical data center. But fault tolerance between two data centers is even more reliable than multiple servers in a single location, simply because that one data center is a single point of failure.

Cloud: The Enabler of IoT

The cloud has become one of the primary enablers for IoT. Within the next five years, more than 90% of all IoT data will be hosted on service provider platforms as cloud computing reduces the complexity of supporting IoT “Data Blending”.

In order to achieve or even maintain continuous IoT application availability and keep up with the pace of new IoT application rollouts, organizations must explore expanding their data center options to the cloud, to ensure IoT applications are always available. Having access to cloud resources provides organizations with the agility and flexibility to quickly provision IoT services. The Cloud offers organizations a way to manage IoT services rather than boxes along with just-in-time provisioning.

Cloud enables IT as a Service, just as IoT is a service, along with the flexibility to scale when needed. Integrating cloud-based IoT resources into the architecture requires only a couple of pieces: connectivity, along with awareness of how those resources are being used.
The connectivity between a data center and the cloud is generally referred to as a cloud bridge. The cloud bridge connects the two data center worlds securely and provides a network compatibility layer that “bridges” the two networks. This provides a transparency that allows resources in either environment to communicate without concern for the underlying network topology. Once a connection is established and network bridging capabilities are in place, resources provisioned in the cloud can be non-disruptively added to the data center-hosted pools. From there, load is distributed per the ADC platform’s configuration for the resource, such as an IoT application.

By integrating your enterprise data center to external clouds, you make the cloud a secure extension of the enterprise’s IoT network. This enterprise-to-cloud network connection should be encrypted and optimized for performance and bandwidth, thereby reducing the risks and lowering the effort involved in migrating your IoT workloads to cloud.

Maintain seamless delivery

This hybrid infrastructure approach, including cloud resources, for IoT deployments not only allows organizations to distribute their IoT applications and services when it makes sense but also provides global fault tolerance to the overall system. Depending on how an organization’s disaster recovery infrastructure is designed, this can be an active site, a hot standby, a leased hosting space, a cloud provider, or some other contained compute location. As soon as that IoT server, application, or even location starts to have trouble, an organization can seamlessly maneuver around the issue and continue to deliver its services to the devices.

Advantages for a range of industries

The various combinations of hybrid infrastructure types can be as diverse as the IoT situations that use them. Enterprises probably already have some level of hybrid, even if it is a mix of owned space plus SaaS.
They typically prefer to keep sensitive assets in-house but have started to migrate workloads to hybrid data centers. Financial industries have different requirements than retail. Retail will certainly need a boost to their infrastructure as more customers will want to test IoT devices in the store.

The Service Provider industry is also well on its way to building out IoT ready infrastructures and services. A major service provider we are working with is in the process of deploying BIG-IP Virtual Editions to provide ADC functionality needed for the scale and flexibility of the carrier’s connected car project. Virtualized solutions are required for Network Functions Virtualization (NFV) to enable the agility and elasticity necessary to support the IoT infrastructure demands.

ps

Related:

- The Digital Dress Code
- Is IoT Hype For Real?
- What are These "Things”?
- IoT Influence on Society
- IoT Effect on Applications
- CloudExpo 2014: The DNS of Things
- Intelligent DNS Animated Whiteboard
- The Internet of Me, Myself & I

F5 Synthesis: The Real Value of Consolidation Revealed

#SDAS #SDN #NFV #CSP

It's not about a "god box", it's about the platform

It's often the case that upon hearing the word "consolidation" in conjunction with the network people conjure up visions of what's commonly known as a "god box." This mythical manifestation of data center legend allegedly enables every network-related service to be deployed on a single box and gloriously performs without any impact on performance, reliability or security.

You caught the "mythical" qualifier, didn't you? There is no such thing.

Consolidation in the network, like that of server and application infrastructure, isn't about the goal of deploying every application or service on a single "box", rather it's about taking advantage of the benefits of standardizing on a common, shared platform. Consolidation through server virtualization lowered costs and increased service velocity through standardization on a common platform. Efficiency gains resulting in lower costs were realized by the ability to consistently repeat deployment processes and, ultimately, to automate them through an open API. Cloud is really about the same thing: commoditization is the result of standardization at the platform layer, and rapid provisioning and deployment of applications and services is enabled by a well-defined set of APIs.

Service providers - and large enterprises as well - suffer from network service sprawl. As pressures mount from daily increases in subscribers and consumers demanding resources, services must scale higher and higher, resulting in what really isn't all that different than "throwing more hardware at the problem." We've just virtualized the hardware is all.

Unfortunately, the breadth of services needed to deliver applications reliably, securely and with attention to performance, are often just point solutions. Each has its own set of APIs (if you're lucky) and management systems, and each comes with a price tag attached to management, maintenance, and integration.
It doesn't matter if they're all virtualized. That standardizes only the server and deployment platforms, the hypervisor and the hardware, and does nothing to address the disparity at the service platform layer. That's the layer where the actual services are deployed - and managed, and automated and integrated. It's at the platform layer where opportunities to realize significant cost and efficiency savings exist because that's where the overhead of an extremely heterogeneous service fabric is greatest. Consider that the administrative cost of ownership of a solution is pegged at about 20% of the cost (8% administrative overhead, including training, and 12% operational costs such as maintenance and management). So if you spent $100,000 on a firewall, for example, the cost of administration for that solution is approximately $20,000. Some of that cost is a one-time investment, so let's agree for this discussion it's actually closer to 10% over time. So each $100,000 solution you deploy for a specific purpose - a functional approach - is going to cost you $10,000. If you need four different functions, that's a total of $40,000. Now, consider a platform approach, where much of the administrative and operational costs can be collapsed. There's only one management console, one API style, one taxonomy, one terminology set, one core set of technologies to learn. That knowledge is shared across every solution. Once you invest in the training and know the platform, you know the platform for every other solution you might deploy atop it - whether it's on the same physical box or not. The same core technologies, the same terminology, the same management paradigm. That translates into lower costs. If 10% of administrative cost of the solution is shared because of a common (standardized) platform, then you're saving a lot of money every time you deploy a new function. 
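To make the arithmetic concrete, the made-up figures above can be tallied as follows. This is a sketch under one simplifying assumption that the text implies but does not state outright: on a shared platform the roughly 10% administrative cost is paid once, rather than once per function.

```latex
\begin{align*}
C_{\text{functional}} &= 4 \times (0.10 \times \$100{,}000) = \$40{,}000 \\
C_{\text{platform}}   &= 1 \times (0.10 \times \$100{,}000) = \$10{,}000 \\
\text{Savings}        &= C_{\text{functional}} - C_{\text{platform}} = \$30{,}000
\end{align*}
```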
In this hypothetical example, you're saving $30,000 by taking a platform approach to deploying network functions. The point is not the numbers, which I totally made up, but that there are significant advantages to standardizing on a platform-based approach in the network. It is this premise upon which emerging technologies like SDN are based. It's the commonality of the platform which results in standardized management and administration that produces significant cost (and time) savings for organizations.

Consolidation is not about buying the biggest box you can find and deploying everything on that one box. Sure, that is probably more cost-effective but we all know that's not realistic - performance and capacity are constrained by operational axiom #2: as load increases, performance decreases.

Consolidation is about adopting a strategic, platform approach. Not a one box to rule them all approach.

Additional Resources:

- F5 Synthesis: The Time is Right
- F5 and Cisco: Application-Centric from Top to Bottom and End to End
- F5 Synthesis: Software Defined Application Services
- F5 Synthesis: Integration and Interoperability
- F5 Synthesis: High-Performance Services Fabric
- F5 Synthesis: Leave no application behind

F5 Synthesis for Service Providers: Scaling in Three Dimensions

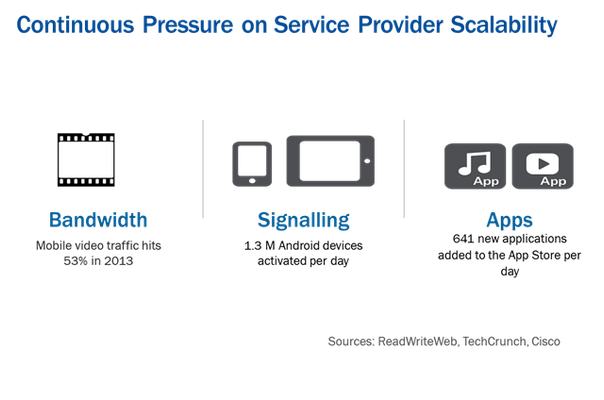

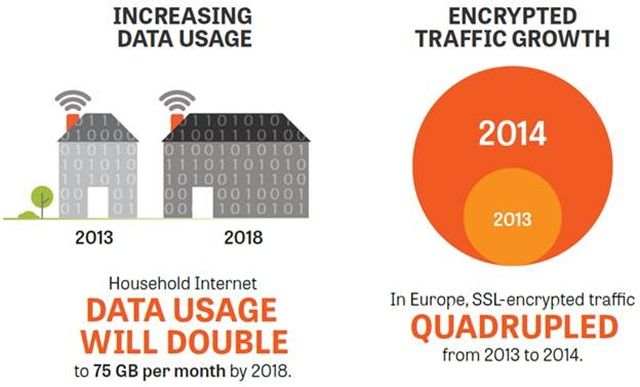

#MWC14 #SDAS #NFV #SDN

It's not just about changing the economy of service scale, it's about operations, too.

Estimates based on reports from Google put the number of daily activations of new Android phones at 1.3 million. Based on reported data from Apple, there are 641 new applications per day added to the App Store. According to Cisco's Visual Networking Index, mobile video now accounts for more than 50% of mobile data traffic.

Put them all together and consider the impact on the data, application and control planes of a network. Of a mobile network. Now consider how a service provider might scale to meet the demands imposed on their networks by continued growth, but make sure to factor in the need to maintain a low cost per subscriber and the ability to create new revenue streams through service creation.

Scaling service provider networks in all three dimensions is no trivial effort, but adding on the requirement to maintain or lower the cost-per-subscriber and enable new service creation? Sounds impossible - but it's not. That's exactly what F5 Synthesis for Service Providers is designed to do: enable mobile network operators to optimize, secure and monetize their networks.

F5 Synthesis for Service Providers

F5 Synthesis for Service Providers is an architectural framework enabling mobile network operators to optimize, secure and monetize their networks. F5 Synthesis achieves this by changing the service economy of scale by taking advantage of a common, shared platform to reduce operational overhead and improve service provisioning velocity while addressing key security concerns across the network. F5 Synthesis for Service Providers enables mobile network operators to scale in three dimensions: control, data and application planes.

Control Plane

The control plane is the heart of a service provider network.
Tasked with the responsibility for managing subscriber use and ensuring the appropriate services are applied to traffic, it can easily become overwhelmed by signaling storms that occur due to spikes in activations or an Internet-wide gaming addiction that causes millions of concurrent players to join in.

The control plane is driven by Diameter, and F5 Synthesis for Service Providers includes F5's Traffix Signaling Delivery Controller, nominated this year for Best Mobile Infrastructure at Mobile World Congress. With unparalleled performance, flexibility and programmability, F5 Traffix SDC helps mobile network operators scale the control plane while enabling the creation of new control plane services.

Less often considered but no less important in the control plane are DNS services. A scalable, highly resilient and secure DNS service is critical to both the performance and security of service provider networks. F5 Synthesis for Service Providers includes DNS services. F5 Synthesis is capable of scaling to 418 million response queries per second (RQPS) and includes comprehensive protection against DNS-targeting DDoS attacks.

Data Plane

The service provider data plane serves as the backbone between the mobile network and the Internet, and must be able to support millions of consumer requests for applications. Banking, browsing, shopping, watching video and sharing via social media are among the most popular activities, many of which are nearly continuous for some subscribers. Bandwidth-hungry applications like video can become problematic for the data plane and cause degradations in performance that hamper the subscriber experience and send them off looking for a new provider.

To combat performance, security and reliability challenges, service providers have invested in a variety of targeted solutions that has led to a complex, hyper-heterogeneous infrastructure comprising the Gi network.
This complexity increases the cost per subscriber by introducing operational overhead and can degrade performance by adding latency due to the number of disparate devices through which data must traverse.

F5 Synthesis for Service Providers includes a high-performance service fabric comprised of any combination of hardware or virtual appliances capable of supporting over 20 Tbps. Hardware and appliances from F5 are enabled with its unique vCMP technology, which allows the creation of right-sized service instances that can be scaled up and down dynamically and ultimately reduce the cost per subscriber of the services delivered.

The F5 Synthesis High Performance Service Fabric is built on a common, shared and highly optimized platform on which key service provider functions can be consolidated. By consolidating services in the Gi network on a single, unified platform like F5 Synthesis service fabric, providers can eliminate the operational overhead incurred by the need to manage multiple point products, each with its own unique management paradigm. Consolidation also means services deployed on F5 Synthesis High Performance Service Fabric gain the performance and scale advantages of a network stack highly optimized for mobile networking.

Application Plane

Value added services are a key differentiator and key revenue opportunity for service providers, but can also be the source of poor performance due to the requirement to route all data traffic through all services, regardless of their applicability. Sending text through a video optimization service, or video through an ad insertion service does not add value, but it does consume resources and time that impact the overall subscriber experience.

F5 Synthesis services include policy enforcement management capable of selectively routing data through only the value added services that make sense for a given subscriber and application combination.
Using Dynamic Service Chaining, F5 Synthesis optimizes service chains to ensure more efficient resource utilization and improved performance for subscribers. This in turn allows service providers to selectively scale highly utilized value added services, which saves time and money and reduces the cost to deliver.

F5 Synthesis for Service Providers works in concert with virtual machine provisioning systems to enable service providers to move toward NFV-based architectures. Intelligent monitoring of value added services combined with awareness of load and demand enables F5 Synthesis for Service Providers to ensure VAS can be scaled up and down individually, resulting in significant cost savings across the VAS infrastructure by eliminating VAS silos and the need to scale the entire VAS infrastructure at the same time.

F5 Synthesis for Service Providers also offers the most flexible set of programmability features in the industry. Control plane, data plane, management plane. APIs for integration, scripting languages for service creation, iApps and a cloud-ready, multi-tenant services fabric that can be combined with a self-servicing service management platform (BIG-IQ). This level of programmability changes the operational economy of scale through automation and orchestration opportunities.

With F5 Synthesis for Service Providers, mobile network operators can simplify their Gi Network while laying the foundation for rapid service creation and deployment on a highly flexible, manageable virtualized service fabric that helps providers execute on NFV initiatives.

Service Chaining is Business Process Orchestration in the Network

#SDN #NFV #devops #interop

Service chaining allows the network to grow up and really participate in business processes

The term "service chaining" hasn't quite yet made it into the mainstream IT vernacular. It's currently viewed as a technical mechanism for directing packets, flows or messages (depending on where you sit in the network stack) around the network. Service chaining is the answer to "how do we orchestrate the flow of data across the great divide that exists between L2-3 and L4-7?" There are already multiple implementations, some that take advantage of virtual overlay protocols like VXLAN and NVGRE, others that use proprietary tags, and some that even operate at layer 7 and take advantage of HTTP's natural redirection capabilities to move data from one service to another.

What's important is not only the mechanism (a variety of IETF proposals already exist discussing this) but recognizing that what we're really trying to do is orchestrate a business process across application and network services.

The one thing SDN and NFV have been unable to do is break the belief that the network is just a pipe and the services hosted in the network are just packet pushing variations on a theme. To many, "network services" still means "a VLAN" or "QoS policies" or "bandwidth limitations". Even higher order stack services like load balancing and application acceleration are viewed not as services but as discrete network functions. This fails to recognize what a "service" is in the context of infrastructure and emerging technologies like SDN, cloud and NFV, which in turn keeps their relationship to business processes from becoming readily apparent.

Service providers are likely closer to recognizing that technologies like service chaining are as much about orchestrating a business process as they are about how to direct traffic around a network.
Consider a few of the more common "value added services (VAS)" offered by service providers to both application providers and subscribers (consumers) alike. Consider, now, that these services are applied only when they are appropriate to the subscriber (and, one hopes, to that traffic). If I have not subscribed to the "parental controls" service, my data should not be wasting cycles by being processed by said service. This is true for all VAS in a service provider's network - cycles wasted on processing are wasted money. So there must be a way for operators to designate the appropriate "chain of services" through which a given subscriber's traffic should flow based on whether or not they are customers of that service (i.e. they've paid for it). That's a business problem and a business process being orchestrated, not a technical exercise in determining out which port a packet should be tossed.

In the enterprise, there are similar services that must be orchestrated as part of a larger process. As applications decompose into APIs and micro-services, the need to orchestrate application flows across services becomes imperative. But this is not just about the applications, but also the application and network services that are part of those flows; those processes. There are a variety of application services that live in the network and application infrastructure that are part of larger, orchestrated processes. Not every response to a consumer request needs authentication, nor does every response or request need to be examined by the fraud system. What determines the need to evaluate, analyze or further process inbound or outbound traffic in both data centers and service provider networks is a business process. The ability to orchestrate the network and application services based on that business process is in part what emerging technologies like SDN and NFV are offering.
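The idea of designating a chain of services per subscriber can be sketched in a few lines of shell. This is purely illustrative and not how any F5 or SDN product implements service chaining: the subscriber names, the subscription table, and the traffic types are hypothetical, and the applicability rules simply mirror the examples in the text (parental controls apply only to those who subscribed, and video optimization is only useful for video).

```shell
# Hypothetical subscriber table: which value added services each subscriber pays for.
subscribed_services() {
    case "$1" in
        alice) echo "parental_controls video_optimization" ;;
        bob)   echo "ad_insertion" ;;
        *)     echo "" ;;
    esac
}

# Succeeds when a service is worth applying to a given traffic type.
service_applies() {
    service="$1"; traffic="$2"
    case "$service:$traffic" in
        parental_controls:*)      return 0 ;;
        video_optimization:video) return 0 ;;
        ad_insertion:web)         return 0 ;;
        *)                        return 1 ;;
    esac
}

# Builds the chain: only services that are both paid for and applicable.
build_chain() {
    subscriber="$1"; traffic="$2"; chain=""
    for service in $(subscribed_services "$subscriber"); do
        if service_applies "$service" "$traffic"; then
            chain="${chain:+$chain }$service"
        fi
    done
    echo "$chain"
}

build_chain alice video   # prints: parental_controls video_optimization
build_chain alice web     # prints: parental_controls
build_chain bob video     # prints an empty line: nothing applies
```

The point of the sketch is that the inputs to `build_chain` are business facts (who paid for what), while the output is a network decision (which services the traffic traverses).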
We can call it flexibility or agility or some other "ity" if you like or even start saying "policy-driven" again, but the reality is that technologies that enable orchestration of services do so in order to implement a business process - whether that's across applications or networks or both. Thus, service chaining is likely to be a critical and foundational technology, as it answers the question of "how are we going to orchestrate in the network" to enable and support the orchestration of the business processes that run the business and impact the bottom line. Service chaining is business process orchestration in the network. The organizations that figure this out and use it to their advantage will have, well, a significant advantage.

NFV is Much More than Virtualization

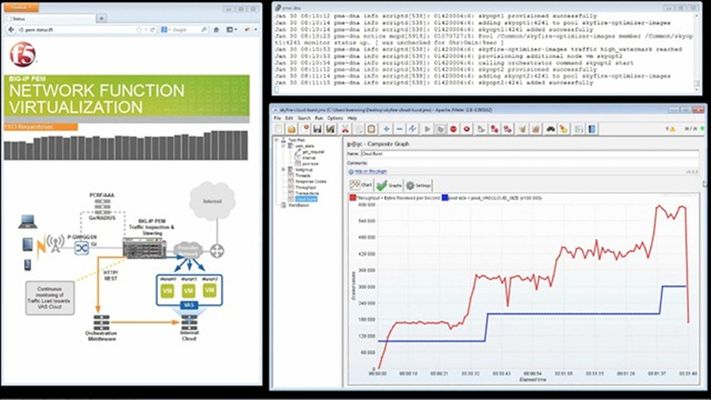

There has been a lot of attention given to Network Functions Virtualization (NFV) and how it is driving a paradigm shift in the architecture of today’s communications service provider (CSP) networks. NFV is a popular topic and has been getting a lot of visibility in recent months. Recently, I have been seeing announcements from vendors stating that they are delivering a software version of their application or solution, eliminating the need for proprietary hardware. These announcements always mention that since the product is now available as a ‘virtualized’ solution, it is NFV compliant or based on NFV standards.

Let’s get something straight. First, there are no standards. There is an ETSI NFV working group that is defining the requirements for NFV. They have produced an initial white paper describing the benefits, enablers, and challenges for NFV in the CSP environment. Most of the industry is using this white paper as the basis for their concept of NFV. The working group is continuing to meet and determine what NFV consists of, with a goal of having the concept fully defined by the end of 2014.

Second, and more importantly, just because your solution can be installed and run on commoditized hardware, it does not mean that it is ‘NFV enabled’. In a recent interview, Margaret Chiosi from AT&T said, ‘Orchestration and management is a key part in realizing NFV’. While running on commoditized hardware is part of the NFV story, it is not the complete story. Virtualization does not achieve anything except reduced capital costs due to the use of commercial off-the-shelf (COTS) hardware, and flexibility in the deployment of services since there is no proprietary hardware dependency.

We, at F5 Networks, believe that the orchestration and management of these virtualized services is critical to making NFV successful. We have been delivering virtualized versions of our solutions since 2010.
Because we have experience delivering virtualized solutions, we understand how important it is to deliver a framework that allows these solutions to be integrated with other key components of the architecture. Even though services such as DPI, Carrier Grade NAT, PGW, and other Evolved Packet Core (EPC) functions have been virtualized, they are not automatically part of a flexible and dynamic architecture. Orchestration and management are necessary to enable the potential of the virtualized service. As I mentioned in a previous blog post, orchestration is where NFV can really shine because it allows the CSPs to automate service delivery and create an ecosystem where the infrastructure can react and respond to changing conditions. The infrastructure can monitor itself, determining the health of the services and initiating changes based on policies defined by the architects and operators.

An Orchestra needs a Conductor

F5 has been providing the foundation for orchestration services ever since the company’s inception. The concept of load balancing and providing application delivery controller (ADC) services is all about managing connections and sessions to pools of services. This functionality inherently provides a level of security, since the ADC is providing proxy services to the applications, as well as availability, since the ADC is also monitoring the health of the service through extensive application health checks.

As Tom Nolle states in one of his blog posts, he likes the idea of ‘creating a set of abstract service models that are instantiated by linking them to an API’. This sounds like a great template for delivering orchestration services. Orchestration lies in linking these service models to the services via APIs. Service models are defined via operator policies, and APIs allow for bi-directional communication between service components.
The application delivery controller (ADC) is a key component for this orchestration to occur. It is the ADC that has insight into the resources and their availability. It is the ADC that manages the forwarding of sessions to these resources. In the CSP network architecture, especially as we evolve to LTE, this includes much more than the traditional load balancers and content management solutions that sit in front of server farms in data centers that we typically think of when discussing ADCs. The high-value content is in the packet data network (Gi and SGi) and the control plane messaging (DNS, Diameter, SIP). With this critical role in the real-time traffic engineering of the services being virtualized, along with the visibility into the health and availability of the resources the service can access, it makes sense that the ADC plays a pivotal role in the management and orchestration of the virtualized infrastructure.

It takes more than content inspection, load balancing, and subscriber policy control via PCRF/PCEF to enable the full orchestration required for the NFV vision to come to fruition. There needs to be an intelligence that can correlate the data from these services and technologies to determine the health and state of the infrastructure. Based on this information, along with policies that have been defined by the operator and programmed into this intelligence, it becomes possible to 1) create a dynamic orchestration ecosystem that can monitor the different services and functions within the EPC, 2) collect data and provide analytics, and 3) proactively and reactively adjust the configuration and availability of resources based on the operator-defined policies. Along with the intelligence, it is necessary to have open APIs for inbound and outbound communications, both to share the data collected and to serve as the conduit for policy and configuration changes as determined by the orchestration system.
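The monitor, analyze and adjust cycle described above can be illustrated with a small decision function. This is a minimal sketch under stated assumptions, not F5's or any orchestrator's actual logic: `ScalingPolicy`, `desired_servers` and `reconcile` are hypothetical names, the only metric considered is concurrent connections, and the thresholds are made up for the example.

```python
from dataclasses import dataclass


@dataclass
class ScalingPolicy:
    """Hypothetical operator-defined policy for one pool of virtualized servers."""
    max_connections_per_server: int
    min_servers: int
    max_servers: int


def desired_servers(concurrent_connections, policy):
    """Servers needed for the observed load, clamped to the policy bounds."""
    # Ceiling division without importing math.
    needed = -(-concurrent_connections // policy.max_connections_per_server)
    return max(policy.min_servers, min(policy.max_servers, needed))


def reconcile(current_servers, concurrent_connections, policy):
    """Return the (action, count) an orchestrator would be asked to perform."""
    target = desired_servers(concurrent_connections, policy)
    if target > current_servers:
        return ("scale_out", target - current_servers)
    if target < current_servers:
        return ("scale_in", current_servers - target)
    return ("hold", 0)


policy = ScalingPolicy(max_connections_per_server=1000, min_servers=2, max_servers=10)
print(reconcile(2, 4500, policy))  # ('scale_out', 3)
print(reconcile(5, 800, policy))   # ('scale_in', 3)
print(reconcile(2, 1500, policy))  # ('hold', 0)
```

In a real system the returned action would be sent over an open API to whatever manages the virtual machines, and the observed load would come from the component that actually sees the sessions.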
It is critical for the orchestration of an NFV architecture in the EPC to be open and to allow multiple, potentially disparate vendors and technologies to work together to create a dynamic environment that provides the flexibility and scalability that NFV is looking to achieve.

As an example to demonstrate this orchestration functionality and how it is able to take advantage of virtualization within the NFV architecture, I am reposting this video of value-added services (VAS) bursting in a virtual EPC (vEPC) environment. In this scenario, the F5 BIG-IP Policy Enforcement Manager (PEM) is identifying and tracking the connections being delivered to the VAS solution, video optimization in this case. Based on the data received, such as the number of concurrent connections, BIG-IP PEM is able to signal a virtual orchestration client, such as one of the many varieties of virtual machine hypervisors, to enable additional servers for the video optimization solution and have them added to the available resources within the traffic steering policy.

This demonstration shows the initial potential of a virtualized infrastructure when one is able to deliver on the promise of the orchestration and management of the entire infrastructure as an ecosystem, not a pool of different technologies and vendor-specific implementations. It is critical for this collaboration and orchestration development to continue if we expect this NFV architecture to be successful. It is important for everyone, the CSPs, vendors, and technologists, to see and understand that NFV is much more than virtualization and the ability to deliver services as software. NFV requires a sound management and orchestration framework to become a proper success.

Trying to Envision the Future through Eyes of Today

#MWC15

What an incredible week it was at Mobile World Congress this year! With 1,900+ exhibitors and around 100,000 attendees, it is hard to focus on any single topic or find a common theme among all of us. But through the many pitches and discussions, we are able to bring the concepts together and find a couple of ideas that were repeated throughout the show.

Does IoT require a new Internet?

The new and trendy hot topic of the congress was the Internet of Things (IoT). Everyone is talking about IoT and how it is going to change how service providers and consumers manage their lives. It is estimated that there could be anywhere from 20 billion to 200 billion connected devices by 2020. Keeping in mind that reality often exceeds expectations in technology, this is a bit overwhelming. IoT is still in the upward phase of the hype cycle, and it is hard to nail down any specific direction or framework around the IoT concept. There are companies talking about connected cars, which will provide Internet access and functional capabilities within the vehicles. There are other companies looking to add more devices for the average human to carry around, from connected health devices, to watches, to shoes.

Everyone wants to be involved with the IoT movement, but few understand what is necessary for IoT to be successful. Many conversations revolve around security for IoT. Some are talking about the security of the devices and their capabilities, such as Internet-enabled home surveillance. Others are talking about the security and integrity of the data for healthcare devices that may be online, such as pacemakers or CPAP machines. But IoT is at such an early stage of development that it is hard to form a central vision of what else we need to be thinking about to ensure a smooth and successful evolution.
I had many discussions last week about an important issue that we may not be focusing our attention on, but that is essential for IoT to become mature and accepted. The number of IoT devices connecting to the service provider network on a regular basis will be daunting and could overwhelm the mobile network’s infrastructure. The influx of connected devices does not only impact the consumers and the various application providers; there is also a very direct impact on the mobile service providers beyond the data growth. These devices behave differently from human-enabled devices, whose activity is initiated pseudo-randomly: they will automatically send updates at regular, periodic intervals. As the number of devices increases over time, the number of simultaneous connections occurring at any given interval point will eventually overwhelm the mobile service provider’s connection and registration infrastructure. This problem and others need to be investigated and addressed, along with the potential security issues, before we embrace the IoT vision of the future.

Virtualization is not a fad, it is a reality

Continuing its popularity from last year, virtualization, and specifically NFV, remains an extremely strong item of interest. Vendors are starting to progress beyond the first step of virtualization and are now asking each other how these virtualized functions will work together within the NFV architecture framework. NFV is proving itself to be more than a passing fad as vendors and service providers work to establish functional multi-vendor NFV deployments. As scenarios are being built in the labs and trialed in the field, everyone is coming to the realization that the true benefit of NFV is not the CapEx or even the OpEx savings that were initially outlined over 2½ years ago. The value of NFV is the delivery of cloud-like technologies to the service provider’s core network environment.
It is the agility and elasticity - the bringing of services to bear efficiently, and the on-demand resourcing - through abstraction and orchestration that delivers the value of the NFV architecture. There are more vendors discussing and demonstrating the requirements for the management and orchestration of NFV this year.

There is a consensus that the virtualization vision of the future is not one where the entire network is virtualized. It is unrealistic to believe that existing hardware will be tossed aside in favor of virtualized functions in every use case. There are situations where proprietary, vendor-delivered hardware is still the best choice due to cost, performance, or functionality. The network of the future will be a hybrid architecture with both proprietary hardware and virtualized solutions. What is important in this hybrid architecture is that the management and orchestration of this network be consistent and unified when working with physical and virtualized components. In an ideal scenario, the management and orchestration solution need not know whether a component is physical or virtualized. From a functional perspective, both versions of the service should look the same and be configured and managed in the same way.

Not so stodgy after all

Service providers are known for being old school, slow moving, and slow to adopt new technologies and concepts. This year, MWC has proven that this is not the case. NFV is less than three years old and we are already seeing mature architectures being built and tested. And while there have been Internet-enabled devices almost since the development of the Internet, there is an explosive trend to bring IoT to bear in every way imaginable. It will be interesting to see these technologies, along with others, continue to transform the mobile service provider networks as the world continues to get more interconnected.
These life-changing and network-altering technologies are the beginning of a new voyage towards the visions of our interconnected future. With a positive perspective, I look forward to seeing what the networks of the future hold for us as a global community, but only after a voyage of my own to recover from this enormous and insightful event! Bon Voyage!

NFV is Emerging, but Here are 4 Key Challenges to Success

Nobody would dispute that Network Functions Virtualization (NFV) has made significant progress in the past two and a half years. There are dozens of proofs of concept being run by operators in conjunction with vendors working together in communities and consortiums. Some service providers and vendors have announced that they have functional NFV architectures in their production networks. But everyone needs to take a step back and look at some of the realities associated with the NFV architectural model as it stands today. There are success stories, but there are still significant challenges and hurdles to the smooth implementation of an NFV-based solution. Here are four key challenges to the success of NFV as an architectural standard today.

Challenge 1 is the fact that there are no standards today for communications between the NFV components. We have interfaces such as the Ve-Vnfm, Nf-Vi, or Vn-Nf, but these interfaces have no definition beyond their name and which components are communicating with each other. Standards bodies and various communities are working on creating communications models for the named interfaces, but it will take time for consensus standards to be created. There are competing bodies and competing standards. In the meantime, we need to get NFV working.

Challenge 2 is that NFV is a multivendor landscape by the nature of the architecture. Different components of the NFVI, different VNFs, and MANO will need to be delivered by multiple vendors, and the interconnectivity needs to be open and standardized. As mentioned, these standards are still being developed. In the meantime, we need open standards today. This does not necessarily mean open source, but open in the sense that everyone in this multivendor architecture can view, program to, and interact with the different components as necessary.

Challenge 3 is using a single orchestration system to bridge the existing hardware solutions with the virtualized solutions.
For legacy, performance, and functionality reasons, the foreseeable future is a hybrid network with both proprietary hardware and virtualized services. Management and orchestration systems should be able to support and interact with physical, proprietary solutions as much as with logical and virtualized technologies. If the function is identical, then there is no reason for a management and orchestration system to treat the two systems differently except for their physical footprint.

Challenge 4 is the fact that true orchestration, the kind that delivers the desired automation for agility and elasticity, requires analytics and heuristics that combine different technologies and vendors. These systems are being developed, but they do not fully exist today. It will take time for vendors, service providers and organizations to work together to fully develop these systems. It is essential for the system to be ‘carrier class’ and support the needs of a typical service provider while being able to manage multiple vendors, multiple disparate technologies, and customer-specific policies, all with a holistic view of the entire core network as an ecosystem.

These are challenges that need to be viewed and addressed. There are expected benefits of the NFV architecture based on cost, operational efficiencies, and business agility. The service provider industry will find ways to resolve these challenges and make NFV successful. Let us just hope that this happens sooner, rather than later.

NFV Is Spreading, and the Key to Success Is ‘Operationalization’

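This post is an adaptation of the original blog entry in English here.

Network Functions Virtualization (NFV) is advancing, slowly but surely. A small number of operators have already deployed NFV commercially, while most are at the proof-of-concept (PoC) stage. Some of them are qualifying NFV in a limited scope within specific parts of their internal networks, while others are pursuing NFV at a broader scope as part of an extended end-to-end network ecosystem. The main drivers behind the various service provider-led NFV initiatives may differ, but the most important reasons are the same: flexible capacity scaling, CAPEX predictability, and fast, flexible deployment of applications and services, all of which draw on open source software and general-purpose hardware technologies.

The key to NFV success: ‘operationalization’

The main drivers for NFV remain valid. Among service providers there appears to be little disagreement that the key to NFV success is ‘operationalization’:

· Successfully integrating Virtual Network Functions (VNFs) from multiple vendors in different parts of the network
· Managing and orchestrating the integrated network functions in an open, standardized way
· Delivering the same level of reliability and availability as today on the new NFV-based infrastructure
· Compatibility between the NFV-based infrastructure and the legacy hardware infrastructure already in operation

What can service providers do to operate NFV while meeting these requirements? Let's look at the challenges to successful NFV and the ways to address them.

Open source technology: a double-edged sword?

Operationalization is very hard to achieve in practice, because there is a nearly endless variety of higher-level technologies and approaches, and each vendor has its own proprietary mechanism for implementing them, so not all of them are interoperable. To gain the real operational efficiencies NFV offers, orchestration methods must be unified, and standards play a major role in that. In fact, even though open source standards are open, development of core features will be slow, and significant customization will need to be worked out before deployment.

Open source technologies are an attractive proposition for service providers because features and functions are available at relatively low cost and without lock-in to a particular vendor. The downside is that making rapid progress and resolving support issues related to open standards depends heavily on the open source community. In other words, service providers must still rely on individual vendors to adopt open source functionality and customize it to their specific needs.

NFV: the benefits of a hybrid network architecture

The most important factor to consider when deploying NFV is that operators currently have legacy network architectures, along with purpose-built hardware, that enable the voice, data, and multimedia services they deliver to millions of customers. When operators consider introducing NFV into their networks, they want assurance that the new NFV-based virtualized infrastructure can coexist with the existing legacy infrastructure and integrate smoothly with management and provisioning processes. Service providers also prefer to manage the new virtual infrastructure with the same mechanisms and tools they already have, so the standards and vendor communities will need to address this requirement quickly for NFV solutions to be deployed successfully.

In addition, certain network functions that must handle large traffic volumes and perform compute-intensive work, such as SSL, IPSec, and video compression, will need scalable solutions in which purpose-built solutions and evolving virtual network functions can coexist. Ultimately, the ‘hybrid’ network architecture concept is essential for easy adoption of NFV and seamless integration with the legacy hardware in the network.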
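NFV orchestration: essential for converging diverse technologies and approaches

One of the key measures of NFV success is orchestration and management. The ETSI/NFV-ISG standards group has published clear guidelines and requirements for NFV management and orchestration (MANO). However, those guidelines do not cover the convergence or unification of orchestration technologies for NFV, and the alternative solutions adopted by various vendors and communities operate at different OSI layers of the network. Some are suited to Layers 4-7, while others are optimized for managing and configuring Layers 1-3. In some cases there are single-stack technologies that can be used across all seven layers. Because efforts to converge and unify orchestration technologies for NFV are so clearly fragmented, service providers and vendors are waiting to see whether, and when, converged and standardized orchestration technologies will become available for efficiently deploying and operating NFV-based network infrastructure.

What is the future of NFV?

Today, the many service providers hoping to adopt NFV and increase its benefits are exploring innovative and efficient ways to introduce it. One principle for service providers is to deploy NFV in non-critical parts of their internal network and service infrastructure, so that its introduction does not affect the operation of the existing network. Several large service providers are introducing NFV network infrastructure to support the emerging Internet of Things (IoT) business model. This is because IoT infrastructure does not affect the legacy network equipment that supports existing human paying subscriber traffic.

Another important point is that many service providers consider SDN alongside NFV when they trial and deploy it. They believe that NFV and SDN are complementary and that deploying the two together will yield greater benefits. They expect NFV to reduce CAPEX and speed the delivery of new services, and SDN to reduce OPEX and optimize the use of network resources.

NFV is clearly on the rise. With its full range of Layer 4-7 solutions and broad hybrid network architecture support that enables NFV and SDN deployments, F5 helps service providers address these challenges. F5 is contributing to the spread of NFV and looks forward to seeing its strengths, including high availability, minimized operating costs, and fast, flexible application and service deployment, used to the fullest.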