iapp

What is an iApp?

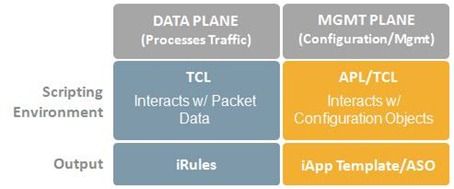

iApp is a seriously cool, game-changing technology that was released in F5’s v11. This tool offers our customers so many benefits that I am going to break it down over a series of posts. Today we will focus on what it is.

Hopefully you are already familiar with the power of F5’s iRules technology. If not, here is a quick background. F5 products support a scripting language based on TCL. This language allows an administrator to tell their BIG-IP to intercept, inspect, transform, direct and track inbound or outbound application traffic. An iRule is the bit of code that contains the set of instructions the system uses to process data flowing through it, either in the header or payload of a packet. This technology allows our customers to solve real-time application issues, security vulnerabilities and similar problems that are unique to their environment or are time sensitive.

An iApp is like iRules, but for the management plane. Again, there is a scripting language that administrators can use to build instructions for the system. But instead of describing how to process traffic, an iApp describes the user interface and how the system will act on information gathered from the user. The bit of code that contains these instructions is referred to as an iApp or iApp template.

A system administrator can use F5-provided iApp templates installed on their BIG-IP to configure a service for a new application. They will be presented with the text and input fields defined by the iApp author. Once complete, their answers are submitted, and the template implements the configuration. First, an application service object (ASO) is created that ties together all the configuration objects that are created, like virtual servers and profiles. Each object created by the iApp is then marked with the ASO to identify its membership in the application for future management and reporting.
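The relationship between a template, the user's answers and the ASO can be pictured with a small Python sketch. This is only a conceptual model, not BIG-IP code: the class names, the `deploy` function, the template name and the object naming scheme are all hypothetical and chosen purely for illustration.

```python
from dataclasses import dataclass, field
from typing import Dict, List, Optional


@dataclass
class ConfigObject:
    """A configuration object such as a virtual server or profile."""
    kind: str
    name: str
    app_service: Optional[str] = None  # name of the owning ASO, if any


@dataclass
class ApplicationService:
    """Hypothetical model of an application service object (ASO)."""
    name: str
    template: str
    objects: List[ConfigObject] = field(default_factory=list)

    def add(self, kind: str, name: str) -> ConfigObject:
        # Every object created through the ASO is marked with its name.
        obj = ConfigObject(kind=kind, name=name, app_service=self.name)
        self.objects.append(obj)
        return obj


def deploy(template: str, answers: Dict[str, str]) -> ApplicationService:
    """Hypothetical stand-in for a template's implementation step."""
    aso = ApplicationService(name=answers["app_name"], template=template)
    aso.add("virtual_server", f"{aso.name}_vs")
    aso.add("http_profile", f"{aso.name}_http")
    aso.add("pool", f"{aso.name}_pool")
    return aso


aso = deploy("example_http_template", {"app_name": "intranet"})
# List everything that belongs to the "intranet" application.
members = [obj.name for obj in aso.objects if obj.app_service == "intranet"]
print(members)
```

Because each object carries the name of its ASO, finding everything that belongs to one application becomes a simple filter, which is the property the management and reporting features rely on.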
That about does it for what an iApp is. Next up: how they can work for you.

VMware Horizon and F5 iApp Deployments Backed by Ansible Automation

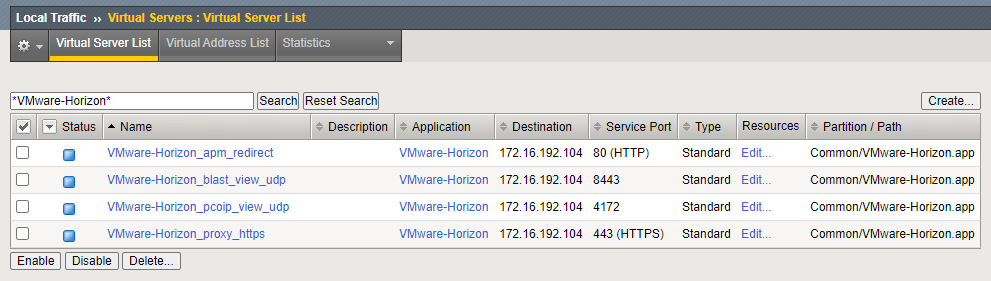

The Intro:

A little over a year ago I knew barely anything about automation and nothing about Ansible, and I never thought it would become as tied to my life as it is now. I spend all my moments thinking about how automation can make my life easier, and being in Business Development I spend a lot of time testing F5 solutions and integrations between vendors (specifically between F5 and VMware as well as F5 and Red Hat Ansible). I figured, why not bring them a little closer together? It takes forever to build labs and set up environments, and with automation I can do this in mere minutes compared to the hours it used to take (we are talking fresh builds, clean environments).

I plan on sharing more of my VMware and Ansible automation integrations down the road (like Horizon labs that can be built from scratch and ready to test in 30 minutes or less). But I wanted to start out with something that I get a lot of questions about: is it possible to automate iApp deployments? Specifically the VMware Horizon iApp? The answer is YOU CAN NOW! Granted, this, like all automation, is a work in progress. If you have a use case you want to build using what I have started, I encourage it! TAKE, FORK and Expand!

The Code:

All of the code I am using is completely accessible via the F5 DevCentral Git repository, so feel free to use it! What does it do? Well, if you are an F5 guru then you might think it looks similar to how our AS3 code works. If you aren't a guru, it's basically taking one set of variables and sending off a single command to the F5 to build the application (I tell it the things that make it work and how I want it deployed, and it does all the work for me). Keep in mind this isn't using F5 AS3 code; it just mimics the same method by taking a JSON declaration of how I want things to be, and the F5 does all of the imperative commands for me.
    ---
    - name: Build JSON payload
      ansible.builtin.template: src=f5.horizon.{{deployment_type |lower }}.j2 dest=/tmp/f5.horizon.json

    - name: Deploy F5 Horizon iApp
      f5networks.f5_modules.bigip_iapp_service: # Using collections; if not, use bigip_iapp_service:
        name: "VMware-Horizon"
        template: "{{iapp_template_name}}"
        parameters: "{{ lookup('template', '/tmp/f5.horizon.json') }}"
        provider:
          server: "{{f5_ip}}"
          user: "{{f5_user}}"
          password: "{{f5_pass}}"
          validate_certs: no
      delegate_to: localhost

All of this code can be found at https://github.com/f5devcentral/f5-bd-horizon-iapp-deploy/

Deployments:

The F5 iApp for Horizon provides many deployment options, but they all fall into 3 buckets:

- F5 APM with VMware Horizon: the F5 acts as the gateway for all VMware Horizon connections (proxying PCoIP/Blast)
- F5 LTM with VMware Horizon: internal connections to an environment from a LAN, with the F5 securing and load balancing the Connection Servers
- F5 LTM with VMware Unified Access Gateway: the F5 load balances the VMware Unified Access Gateways (UAGs) and lets the UAGs proxy the connections

The deployments offer the ability to use pre-imported certificates, set the virtual IP, add additional Connection Servers, create the iRule for internal connections (origin header check) and much more. All of this depends on your deployment and the way you need it set up. The current code imports neither the iApp template nor the certificates; this could be done with other code but is not currently part of this code.

All three of these deployment models are covered by the code, and how it is deployed is based on the variables file "{{code_directory}}/vars/horizon_iapp_vars.yml" as shown below. Keep in mind that some variables are in clear text (i.e. the username/password for AD); you can secure your passwords in other ways, such as Ansible Vault.
    #F5 Authentication
    f5_ip: 192.168.1.10
    f5_user: admin
    f5_pass: "my_password"
    f5_admin_port: 443

    #All Deployment Types
    deployment_type: "apm" #option can be APM, LTM or UAG

    #iApp Variables
    iapp_vip_address: "172.16.192.100"
    iapp_template_name: "f5.vmware_view.v1.5.9"

    #SSL Info
    iapp_ssl_cert: "/Common/Wildcard-2022" # If want to use F5 Default Cert for Testing use "/Common/default.crt"
    iapp_ssl_key: "/Common/Wildcard-2022" # If want to use F5 Default Cert for Testing use "/Common/default.key"
    iapp_ssl_chain: "/#do_not_use#"

    #Horizon Info
    iapp_horizon_fqdn: "horizon.mycorp.com"
    iapp_horizon_netbios: "My-Corp"
    iapp_horizon_domainname: "My-Corp.com"
    iapp_horizon_nat_addresss: "" #enter NAT address or leave empty for none

    # LTM Deployment Type
    iapp_irule_origin:
      - "/Common/Horizon-Origin-Header"

    # APM and LTM Deployment Types
    iapp_horizon_connection_servers:
      - { ip: "192.168.1.50", port: "443" } # to add Connection Servers just add additional line
      - { ip: "192.168.1.51", port: "443" }

    #APM Deployment Type
    iapp_active_directory_username: "my_ad_user"
    iapp_active_directory_password: "my_ad_password"
    iapp_active_directory_password_encrypted: "no" # This is still being validated but requires the encrypted password from the BIG-IP
    iapp_active_directory_servers:
      - { name: "ad_server_1.mycorp.com", ip: "192.168.1.20" } # to add Active Directory Servers just add additional lines
      - { name: "ad_server_2.mycorp.com", ip: "192.168.1.21" }

    # UAG Deployment Type
    iapp_horizon_uag_servers:
      - { ip: "192.168.199.50", port: "443" } # to add UAG Servers Just add additional lines
      - { ip: "192.168.199.51", port: "443" }

How do the Variables integrate with the Templates?

The templates are JSON-based code into which Ansible injects the variables, depending on the deployment method called. This makes it easier to tailor the templates to specific deployments because we don't hard-code specific values that aren't necessary or are part of the default deployments.
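The idea of picking a template by deployment type and injecting variables into it can be sketched in a few lines of Python. This is a rough illustration under stated assumptions, not the repository's implementation: the real project uses Ansible with Jinja2 (`.j2`) templates, whereas this sketch swaps in `string.Template` from the standard library, and the JSON field names (`mode`, `vip`, `servers`, `irules`) are hypothetical. Only the variable names are taken from the vars file above.

```python
import json
from string import Template

# Hypothetical, heavily simplified templates keyed by deployment type.
# The real repository uses Jinja2 (.j2) files with many more fields.
TEMPLATES = {
    "apm": Template('{"mode": "apm", "vip": "$vip", "servers": $servers}'),
    "ltm": Template('{"mode": "ltm", "vip": "$vip", "servers": $servers, "irules": $irules}'),
    "uag": Template('{"mode": "uag", "vip": "$vip", "servers": $servers}'),
}


def build_payload(variables: dict) -> dict:
    """Pick a template by deployment type and inject the variables."""
    kind = variables["deployment_type"].lower()
    # UAG deployments balance UAG servers; the others balance Connection Servers.
    if kind == "uag":
        server_key = "iapp_horizon_uag_servers"
    else:
        server_key = "iapp_horizon_connection_servers"
    rendered = TEMPLATES[kind].substitute(
        vip=variables["iapp_vip_address"],
        servers=json.dumps(variables[server_key]),
        irules=json.dumps(variables.get("iapp_irule_origin", [])),
    )
    # Parsing the result fails loudly if the rendered text is not valid JSON.
    return json.loads(rendered)


variables = {
    "deployment_type": "APM",
    "iapp_vip_address": "172.16.192.100",
    "iapp_horizon_connection_servers": [
        {"ip": "192.168.1.50", "port": "443"},
        {"ip": "192.168.1.51", "port": "443"},
    ],
}
payload = build_payload(variables)
print(payload["mode"], len(payload["servers"]))
```

Keeping one template per deployment type means a value such as the origin-header iRule only appears in the payload where it is relevant, which mirrors the reason given above for not hard-coding values.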
Advanced deployments would require modification of the JSON code to apply specialized settings that aren't part of the default. If you want to see more about the templates for each operation (APM/LTM/UAG), check out the JSON code at the link below:

https://github.com/f5devcentral/f5-bd-horizon-iapp-deploy/tree/main/roles/ansible-deploy-iapp/templates

The Results:

Within seconds I can deploy, configure and make changes to my deployments or even change my deployment type. Could I do this in the GUI? Absolutely, but the point is to Automate ALL THE THINGS, and being able to integrate this with solutions like Lab in a box (built from scratch including the F5) saves massive amounts of time.

Example of a VMware Horizon iApp deployment with F5 APM done in ~12 seconds:

    [root@Elysium f5-bd-horizon-iapp-deploy]# time ansible-playbook horizon_iapp_deploy.yaml

    PLAY [localhost] ****************************************

    TASK [bypass-variables : ansible.builtin.stat] ****************************************
    ok: [localhost]

    TASK [bypass-variables : ansible.builtin.include_vars] ****************************************
    ok: [localhost]

    TASK [create-irule : Create F5 iRule] ****************************************
    skipping: [localhost]

    TASK [ansible-deploy-iapp : Build JSON payload] ****************************************
    ok: [localhost]

    TASK [ansible-deploy-iapp : Deploy F5 Horizon iApp] ****************************************
    changed: [localhost]

    PLAY RECAP ****************************************
    localhost : ok=4 changed=1 unreachable=0 failed=0 skipped=1 rescued=0 ignored=0

    real 0m11.954s
    user 0m6.114s
    sys 0m0.542s

Links:

All of this code can be found at https://github.com/f5devcentral/f5-bd-horizon-iapp-deploy/

Support for NIST 800-53?

#f5friday There’s an iApp for that!

NIST publication 800-53 is a standard defined to help government agencies (and increasingly enterprises) rein in sprawling security requirements while maintaining a solid grip on the lockdown lever. It defines the concept of a “security control” that ranges from physical security to AAA, and then subdivides controls into “common” (those used frequently across an organization), “custom” (those defined explicitly for use by a single application or device), and “hybrid” (those that start with a common control and then customize it for the needs of a specific application or device). The standard lists literally hundreds of controls, their purpose, and when they’re needed. When these controls are “common”, they can be reused by multiple applications or devices.

For government entities and their contractors, this standard is not optional, but there is a lot of good in here for everyone else too. Of course external access to applications should be considered, allowed or not, and if allowed, locked down to only those who absolutely need it. That is the type of thing you’ll find in the common controls, and any enterprise could benefit from studying and understanding the standard.

For applications, using this standard and the ones it is based on, IT can develop a security checklist. The thing is that for hardware devices, support is very difficult from the outside. It is far better if the device, particularly a networking device that runs outside of the application context, implements the information security portions internally. And F5 BIG-IP does. With an iApp.

No doubt you’ve heard us talk about how great we think iApps are. Well, now we have a solid example to point out, where we use one to configure the objects that allow access to our own device.
Since iApps are excellent at manipulating data heading for an application, the fact that the BIG-IP UI is a web application should make it easy to understand how we quickly built support for 800-53 through development of an iApp that configures all of the right objects/settings for you, if you but answer a few questions.

800-53 is a big standard, and iApps were written with the intent that they configure objects that BIG-IP manipulates more than the BIG-IP configuration objects themselves, so there are a couple of caveats in the free downloadable iApp. Check the help section after you install and before you configure the iApp. But even if the caveats affect you, they are not show-stoppers that invalidate compliance with the standard, so the iApp is still useful for you.

One of my co-workers was kind enough to give me a couple of enhanced screenshots to share with you. If you already know you need to support this standard in your organization, these will show you the type of support we’ve built. If you’re not sure whether you’ll be supporting 800-53, they’re still pretty information-packed and you’ll get why I say this stuff is useful for any organization.

The thing is that this iApp is not designed as a “Yes, now you can check that box” solution. It aims to actually give you control over who has access to the BIG-IP system, how they have access, and from where, while utilizing the language and format of the standard. All of these things can be done without the iApp, but this tool makes it far easier to implement and maintain compliance because under the covers it changes several necessary settings for you, and you do not have to hunt down each individual object and configure it by hand.

The iApp is free. iApp support is built into BIG-IP. If you need to comply with the standard for regulatory reasons, or have decided as an organization to comply, download it and install. Then run the iApp and off you go to configuration land.
Note that this iApp makes changes to the configuration of your BIG-IP. It should be used by knowledgeable staff who are aware of how their choices will impact the existing BIG-IP configuration.

F5 Friday: Applications aren't protocols. They're Opportunities.

Applications are as integral to F5 technologies as they are to your business.

An old adage holds that an individual can be judged by the company he keeps. If that holds true for organizations, then F5 would do well to be judged by the vast array of individual contributors, partners, and customers in its ecosystem. Its long history of partnering with companies like Microsoft, IBM, HP, Dell, VMware, Oracle, and SAP, together with its astounding community of over 160,000 engineers, administrators and developers, speaks volumes about its commitment to and ability to develop joint and custom solutions.

F5 is committed to delivering applications no matter where they might reside or what architecture they might be using. Because of its full proxy architecture, F5’s ADC platform is able to intercept, inspect and interact with applications at every layer of the network. That means tuning TCP stacks for mobile apps, protecting web applications from malicious code whether they’re talking JSON or XML, and optimizing delivery via HTTP (or HTTP 2.0 or SPDY) by understanding the myriad types of content that make up a web application: CSS, images, JavaScript and HTML.

But being application-driven goes beyond delivery optimization and must cover the broad spectrum of technologies needed not only to deliver an app to a consumer or employee, but also to manage its availability, scale and security. Every application requires a supporting cast of services to meet a specific set of business and user expectations, such as logging, monitoring and failover. Over the 18 years in which F5 has been delivering applications, it has developed technologies specifically geared to making sure these supporting services are driven by applications, imbuing each of them with the application awareness and intelligence necessary to efficiently scale, secure and keep them available.
With the increasing adoption of hybrid cloud architectures and the need to operationally scale the data center, it is important to consider the depth and breadth to which ADC automation and orchestration support an application focus. Whether looking at APIs or management capabilities, an ADC should provide the means by which the services applications need can be holistically provisioned and managed from the perspective of the application, not the individual services.

Technology that is application-driven, giving app owners and administrators the ability to programmatically define provisioning and management of all the application services needed to deliver the application, is critical to ensuring success moving forward. F5 iApps and F5 BIG-IQ Cloud do just that, enabling app owners and operations to rapidly provision services that improve the security, availability and performance of the applications that are the future of the business.

That programmability is important, especially as it relates to applications. In our recent survey (results forthcoming), a plurality of respondents indicated that application templates are "somewhat or very important" to the provisioning of their applications, along with other forms of programmability associated with software-defined architectures, including cloud computing.

Applications increasingly represent opportunity, whether it's to improve productivity or increase profit. Capabilities that improve the success rate of those applications are imperative and require a deeper understanding of an application and its unique delivery needs than a protocol and a port. F5 not only partners with application providers, it encapsulates the expertise and knowledge of how best to deliver those applications in its technologies and offers that same capability to each and every organization to tailor the delivery of their applications to meet and exceed security, reliability and performance goals.
Because applications aren't just a set of protocols and ports, they're opportunities. And how you respond to opportunity is as important as opening the door in the first place.

F5 BIG-IP Platform Security

When creating any security-enabled network device, development teams must fully investigate security of the device itself to ensure it cannot be compromised. A gate provides no security to a house if the gap between the bars is large enough to drive a truck through. Many highly effective exploits have breached the very software and hardware that are designed to protect against them. If an attacker can breach the guards, then they don’t need to worry about being stealthy, meaning if one can compromise the box, then they probably can compromise the code. F5 BIG-IP Application Delivery Controllers are positioned at strategic points of control to manage an organization’s critical information flow. In the BIG-IP product family and the TMOS operating system, F5 has built and maintained a secure and robust application delivery platform, and has implemented many different checks and counter-checks to ensure a totally secure network environment. Application delivery security includes providing protection to the customer’s Application Delivery Network (ADN), and mandatory and routine checks against the stack source code to provide internal security—and it starts with a secure Application Delivery Controller. The BIG-IP system and TMOS are designed so that the hardware and software work together to provide the highest level of security. While there are many factors in a truly secure system, two of the most important are design and coding. Sound security starts early in the product development process. Before writing a single line of code, F5 Product Development goes through a process called threat modeling. Engineers evaluate each new feature to determine what vulnerabilities it might create or introduce to the system. F5’s rule of thumb is a vulnerability that takes one hour to fix at the design phase, will take ten hours to fix in the coding phase and one thousand hours to fix after the product is shipped—so it’s critical to catch vulnerabilities during the design phase. 
The sum of all these vulnerabilities is called the threat surface, which F5 strives to minimize. F5, like many companies that develop software, has invested heavily in training internal development staff on writing secure code. Security testing is time-consuming and a huge undertaking; but it’s a critical part of meeting F5’s stringent standards and its commitment to customers.

This is by no means an exhaustive list, but the BIG-IP system has a number of features that provide heightened and hardened security: Appliance mode, iApp Templates, FIPS and Secure Vault.

Appliance Mode

Beginning with version 10.2.1-HF3, the BIG-IP system can run in Appliance mode. Appliance mode is designed to meet the needs of customers in industries with especially sensitive data, such as healthcare and financial services, by limiting BIG-IP system administrative access to match that of a typical network appliance rather than a multi-user UNIX device. The optional Appliance mode “hardens” BIG-IP devices by removing advanced shell (Bash) and root-level access. Administrative access is available through the TMSH (TMOS Shell) command-line interface and GUI.

When Appliance mode is licensed, any user that previously had access to the Bash shell will now only have access to the TMSH. The root account home directory (/root) file permissions have been tightened for numerous files and directories. By default, new files are now only user readable and writeable and all directories are better secured.

iApp Templates

Introduced in BIG-IP v11, F5 iApps is a powerful new set of features in the BIG-IP system. It provides a new way to architect application delivery in the data center, and it includes a holistic, application-centric view of how applications are managed and delivered inside, outside, and beyond the data center.
iApps provide a framework that application, security, network, systems, and operations personnel can use to unify, simplify, and control the entire ADN with a contextual view and advanced statistics about the application services that support business. iApps are designed to abstract the many individual components required to deliver an application by grouping these resources together in templates associated with applications; this alleviates the need for administrators to manage discrete components on the network.

F5’s new NIST 800-53 iApp Template helps organizations become NIST-compliant. F5 has distilled the 240-plus pages of guidance from NIST into a template with the relevant BIG-IP configuration settings, saving organizations hours of management time and resources.

Federal Information Processing Standards (FIPS)

Developed by the National Institute of Standards and Technology (NIST), Federal Information Processing Standards are used by United States government agencies and government contractors in non-military computer systems. FIPS 140 series are U.S. government computer security standards that define requirements for cryptography modules, including both hardware and software components, for use by departments and agencies of the United States federal government. The requirements cover not only the cryptographic modules themselves but also their documentation. As of December 2006, the current version of the standard is FIPS 140-2.

A hardware security module (HSM) is a secure physical device designed to generate, store, and protect digital, high-value cryptographic keys. It is a secure crypto-processor that often comes in the form of a plug-in card (or other hardware) with tamper protection built in. HSMs also provide the infrastructure for finance, government, healthcare, and others to conform to industry-specific regulatory standards.
FIPS 140 enforces stronger cryptographic algorithms, provides good physical security, and requires power-on self tests to ensure a device is still in compliance before operating. FIPS 140-2 evaluation is required to sell products implementing cryptography to the federal government, and the financial industry is increasingly specifying FIPS 140-2 as a procurement requirement.

The BIG-IP system includes a FIPS cryptographic/SSL accelerator, an HSM option specifically designed for processing SSL traffic in environments that require FIPS 140-1 Level 2–compliant solutions. Many BIG-IP devices are FIPS 140-2 Level 2–compliant. This security rating indicates that once sensitive data is imported into the HSM, it incorporates cryptographic techniques to ensure the data is not extractable in a plain-text format. It provides tamper-evident coatings or seals to deter physical tampering.

The BIG-IP system includes the option to install a FIPS HSM (BIG-IP 6900, 8900, 11000, and 11050 devices). BIG-IP devices can be customized to include an integrated FIPS 140-2 Level 2–certified SSL accelerator. Other solutions require a separate system or a FIPS-certified card for each web server; but the BIG-IP system’s unique key management framework enables a highly scalable secure infrastructure that can handle higher traffic levels and to which organizations can easily add new services. Additionally, the FIPS cryptographic/SSL accelerator uses smart cards to authenticate administrators, grant access rights, and share administrative responsibilities to provide a flexible and secure means for enforcing key management security.

Secure Vault

It is generally a good idea to protect SSL private keys with passphrases. With a passphrase, private key files are stored encrypted on non-volatile storage. If an attacker obtains an encrypted private key file, it will be useless without the passphrase.
In PKI (public key infrastructure), the public key enables a client to validate the integrity of something signed with the private key, and the hashing enables the client to validate that the content was not tampered with. Since the private key of the public/private key pair could be used to impersonate a valid signer, it is critical to keep those keys secure. Secure Vault, a super-secure SSL-encrypted storage system introduced in BIG-IP version 9.4.5, allows passphrases to be stored in an encrypted form on the file system. In BIG-IP version 11, companies now have the option of securing their cryptographic keys in hardware, such as a FIPS card, rather than encrypted on the BIG-IP hard drive. Secure Vault can also encrypt certificate passwords for enhanced certificate and key protection in environments where FIPS 140-2 hardware support is not required, but additional physical and role-based protection is preferred. In the absence of hardware support like FIPS/SEEPROM (Serial (PC) Electrically Erasable Programmable Read-Only Memory), Secure Vault will be implemented in software. Even if an attacker removed the hard disk from the system and painstakingly searched it, it would be nearly impossible to recover the contents due to Secure Vault AES encryption. Each BIG-IP device comes with a unit key and a master key. Upon first boot, the BIG-IP system automatically creates a master key for the purpose of encrypting, and therefore protecting, key passphrases. The master key encrypts SSL private keys, decrypts SSL key files, and synchronizes certificates between BIG-IP devices. Further increasing security, the master key is also encrypted by the unit key, which is an AES 256 symmetric key. When stored on the system, the master key is always encrypted with a hardware key, and never in the form of plain text. Master keys follow the configuration in an HA (high-availability) configuration so all units would share the same master key but still have their own unit key. 
The master key gets synchronized using the secure channel established by the CMI Infrastructure as of BIG-IP v11. The master key encrypted passphrases cannot be used on systems other than the units for which the master key was generated. Secure Vault support has also been extended for vCMP guests. vCMP (Virtual Clustered Multiprocessing) enables multiple instances of BIG-IP software to run on one device. Each guest gets their own unit key and master key. The guest unit key is generated and stored at the host, thus enforcing the hardware support, and it’s protected by the host master key, which is in turn protected by the host unit key in hardware. Finally F5 provides Application Delivery Network security to protect the most valuable application assets. To provide organizations with reliable and secure access to corporate applications, F5 must carry the secure application paradigm all the way down to the core elements of the BIG-IP system. It’s not enough to provide security to application transport; the transporting appliance must also provide a secure environment. F5 ensures BIG-IP device security through various features and a rigorous development process. It is a comprehensive process designed to keep customers’ applications and data secure. The BIG-IP system can be run in Appliance mode to lock down configuration within the code itself, limiting access to certain shell functions; Secure Vault secures precious keys from tampering; and optional FIPS cards ensure organizations can meet or exceed particular security requirements. An ADN is only as secure as its weakest link. F5 ensures that BIG-IP Application Delivery Controllers use an extremely secure link in the ADN chain. 
ps

To Err is Human

#devops Automating incomplete or ineffective processes will only enable you to make mistakes faster, and more often.

Most folks probably remember the play on the "to err is human…" proverb from when computers first began to take over, well, everything. The saying was only partially tongue-in-cheek, because as we've long since learned, the reality is that computers allow us to make mistakes faster and more often and with greater reach.

One of the statistics used to justify a devops initiative is the rate at which human error contributes to a variety of operational badness: downtime, performance, and deployment life-cycle time. Human error is a non-trivial cause of downtime and other operational interruptions. A recent Paragon Software survey found that human error was cited as a cause of downtime by 13.2% of respondents. Other surveys have indicated rates much higher. Gartner analysts Ronni J. Colville and George Spafford in "Configuration Management for Virtual and Cloud Infrastructures" predict as much as 80% of outages through 2015 impacting mission-critical services will be caused by "people and process" issues.

Regardless of the actual rates at which human error causes downtime or other operational disruptions, the reality is that it is a factor. One of the ways in which we hope to remediate the problem is through automation and devops. While certainly an appropriate course of action, adopters need to exercise caution when embarking on such an initiative, lest they codify incomplete or inefficient processes that simply promulgate errors faster and more often.

DISCOVER, REMEDIATE, REFINE, DEPLOY

Something that all too often seems to be falling by the wayside is the relationship between agile development and agile operations. Agile isn't just about fast(er) development cycles, it's about employing a rapid, iterative process to the development cycle.
Similarly, operations must remember that it is unlikely they will "get it right" the first time and, following agile methodology, are not expected to. Process iteration assists in discovering errors, missing steps, and other potential sources of misconfiguration that are ultimately the source of outages or operational disruption. An organization that has experienced outages due to human error is practically assured of codifying those errors into its automation frameworks if it does not take the time to iteratively execute those processes and find out where errors or missing steps may lie. It is process that drives continuous delivery in development, and process that must drive continuous delivery in devops: process that must first be perfected through practice, by applying iterative models of development to devops automation and orchestration. What may appear to be tedious repetition is also an opportunity to refine the process, and to discover and eliminate the inefficiencies that slow deployment and delay time to market. Such inefficiencies are generally only discovered when someone takes the time to clearly document all steps in the process, from beginning (build) to end (production). Cross-functional responsibilities are often the source of such inefficiencies, because of the overlap between development, operations, and administration. The outage of Microsoft’s cloud service for some customers in Western Europe on 26 July happened because the company’s engineers had expanded capacity of one compute cluster but forgot to make all the necessary configuration adjustments in the network infrastructure. 
-- Microsoft: error during capacity expansion led to Azure cloud outage

Applying an agile methodology to the process of defining and refining devops processes around continuous delivery automation enables discovery of the errors, missing steps, and duplicated tasks that bog down or disrupt the entire chain of deployment tasks. We all know that automation is a boon for operations, particularly in organizations employing virtualization and cloud computing to enable elasticity and improved provisioning. But what we need to remember is that if that automation simply encodes poor processes or errors, then automation just enables us to make mistakes a whole lot faster. Take care to pay attention to process, and to test early and test often.

How to Prevent Cascading Error From Causing Outage Storm In Your Cloud Environment? DOWNTIME, OUTAGES AND FAILURES - UNDERSTANDING THEIR TRUE COSTS Devops Proverb: Process Practice Makes Perfect 1024 Words: The Devops Butterfly Effect Devops is a Verb BMC DevOps Leadership Series

It is All About Repeatability and Consistency.

#f5 It is often riskier to skip upgrading than to upgrade. Know the risks and benefits of both. Not that I need to tell you, but there are several things in your network that you could have better control of. Whether it is consistent application of security policy or consistent configuration of servers, or even the setup of network devices, they’re in there, being non-standard. And they’re costing you resources in the long run. Sure, the staff today knows exactly how to tweak settings on each box to make things perform better, and knows how to improve security on this given device for this given use, but eventually, it won’t be your current staff responsible for these things, and that new staff will have one heck of a learning curve unless you’re far better at documentation of exceptions than most organizations. Sometimes, exceptions are inevitable. This device has a specific use that requires specific settings you would not want to apply across the data center. That’s one of the reasons IT exists: to figure that stuff out so the business runs smoothly, no? But sometimes it is just technology holding you back from standardizing. Since I’m not slapping around anyone else by doing so, I’ll use my employer as an example of technology and how changes to it can help or hinder you. Version 9.X of TMOS – our base operating system – was hugely popular, and is still in use in a lot of environments, even though we’re on version 11.X and have a lot of new and improved things in the system. The reason is change limitation (note: not change control, but limitation). Do you upgrade a network device that is doing what it is supposed to simply because there’s a newer version of firmware? It is incumbent upon vendors to give you a solid reason why you should. 
I’ve had reason to look into an array of cloud based accounting services of late, and frankly, there is not a compelling reason offered by the major software vendors to switch to their cloud model and become even more dependent upon the vendor (who would now be not only providing software but storing your data also). I feel that F5 has offered plenty of solid reasons to upgrade, but if you’re in a highly complex or highly regulated environment, solid reasons to upgrade do not always equate to upgrades being undertaken. Again, the risk/reward ratio has to be addressed at some point. And I think there is a reluctance in many enterprises to consider the benefits of upgrading. I was at a large enterprise that was using Windows 95 as a desktop standard in 2002. Why? Because they believed the risks inherent to moving to a new version of Windows corporate wide were greater than the risks of staying. Frankly, by the time it was 2002, there was PLENTY of evidence that Windows 98 was stable and a viable replacement for Windows 95. You see the same phenomenon today. Lots of enterprises are still limping along with Windows XP, even though by-and-large, Windows 7 is a solid OS. In the case of F5, there is a feature in the 11.X series of updates to TMOS that should, by itself, offer driving reason to upgrade. I think that it has not been seriously considered by some of our customers for the same reason as the Windows upgrades are slow – if you don’t look at what benefits it can bring, the risk of upgrading can scare you. But BIG-IP running TMOS 11.X has an astounding set of functionality called iApps that allow you to standardize how network objects – for load balancing, security, DNS services, WAN Optimization, Web App Firewalling, and a host of other network services – are deployed for a given type of application. Need to deploy, load balance, and protect Microsoft Exchange? Just run the iApp in the web UI. 
It asks you a few questions, and then creates everything needed, based upon your licensing options and your answers to the questions. Given that you can further implement your own iApps, you can guarantee that every instance of a given application has the exact same network objects deployed to make it secure, fast, and available. From an auditing perspective, it gives a single location (the iApp) for information about all applications of the same type. There are pre-generated iApps for a whole host of applications, and a group here on DevCentral that is dedicated to user developed iApps. There is even a repository of iApps on DevCentral. And what risk is perceived from upgrading is more than mitigated by the risk reduction in standardizing the deployment and configuration of network objects to support applications. IIS has specific needs, but all IIS deployments can be configured the same way using the IIS iApp, reducing the risk of operator error or auditing gotchas. I believe that Microsoft did a good job of putting out info about Windows 7, and that organizations were working on risk avoidance and cost containment. The same is true of F5 and TMOS 11.X. I believe that happens a lot in the enterprise, and it’s not always the best solution in the long run. You cannot know which is more risky – upgrading or not – until you know what the options are. I don’t think there are very many professional IT staff that would say staying with Windows 95 for years after Windows 98 was out was a good choice, hindsight being 20/20 and all. Look around your datacenter. Consider the upgrade options. Do some research, and make sure you understand the risks of not upgrading a device, server, desktop, or whatever as well as you understand the risks of performing the upgrade. And yeah, I know you’re crazy busy. I also know that many upgrades offer overall time savings, with an upfront cost. If you don’t invest in time-saving, you’ll never reap time savings. 
Rocking it every day, like most of you do, is only enough as long as there are enough hours in the day. And there are never enough hours in the IT day. As I mentioned at #EnergySec2012 last week, there are certainly never enough hours in the InfoSec day.

F5 ... Wednesday: Bye Bye Branch Office Blues

#virtualization #VDI Unifying desktop management across multiple branch offices is good for performance – and operational sanity. When you walk into your local bank, or local retail outlet, or one of the Starbucks in Chicago O'Hare, it's easy to forget that these are more than your "local" outlets for that triple grande dry cappuccino or the latest in leg-warmer fashion (either I just dated myself or I'm incredibly aware of current fashion trends, you decide which). For IT, these branch offices are one of many end nodes on a corporate network diagram located at HQ (or the mother-ship, as some of us known as 'remote workers' like to call it) that require care and feeding – remotely. The number of branch offices continues to expand and, regardless of how they're counted, number in the millions. In a 2010 report, the Internet Research Group (IRG) noted: Over the past ten years the number of branch office locations in the US has increased by over 21% from a base of about 1.4M branch locations to about 1.7M at the end of 2009. While back in 2004, IDC research showed four million branch offices, as cited by Jim Metzler: The fact that there are now roughly four million branch offices supported by US businesses gives evidence to the fact that branch offices are not going away. However, while many business leaders, including those in the banking industry, were wrong in their belief that branch offices were unnecessary, they were clearly right in their belief that branch offices are expensive. One of the reasons that branch offices are expensive is the sheer number of branch offices that need to be supported. For example, while a typical company may have only one or two central sites, they may well have tens, hundreds or even thousands of branch offices. -- The New Branch Office Network - Ashton, Metzler & Associates Discrepancies appear to derive from the definition of "branch office" – is it geographic or regional location that counts? 
Do all five Starbucks at O'Hare count as five separate branch offices or one? Regardless how they're counted, the numbers are big and growth rates say it's just going to get bigger. From an IT perspective, which has trouble scaling to keep up with corporate data center growth let alone branch office growth, this spells trouble. Compliance, data protection, patches, upgrades, performance, even routine troubleshooting are all complicated enough without the added burden of accomplishing it all remotely. Maintaining data security, too, is a challenge when remote offices are involved. It is just these challenges that VMware seeks to address with its latest Branch Office Desktop solution set, which lays out two models for distributing and managing virtual desktops (based on VMware View, of course) to help IT mitigate if not all then most of the obstacles IT finds most troubling when it comes to branch office anything. But as with any distributed architecture constrained by bandwidth and technological limitations, there are areas that benefit from a boost from VMware partners. As long-time strategic and technology partners, F5 brings its expertise in improving performance and solving unique architectural challenges to the VMware Branch Office Desktop (BOD) solution, resulting in LAN-like convenience and a unified namespace with consistent access policy enforcement from HQ to wherever branch offices might be located. F5 Streamlines Deployments of Branch Office Desktops KEY BENEFITS · Local and global intelligent traffic management with single namespace and username persistence support · Architectural freedom of combining Virtual Editions with Physical Appliances · Optimized WAN connectivity between branches and primary data centers Using BIG-IP Global Traffic Manager (GTM), a single namespace (for example, https://desktop.example.com) can be provided to all end users. 
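As a rough illustration of the single-namespace idea, here is a minimal Python sketch. It is not how BIG-IP GTM is implemented or configured; the `resolve` function, the data center names, and the addresses (taken from documentation-only IP ranges) are all hypothetical. It only shows the behavior being described: every user asks for the same hostname, and the answer depends on which data center is preferred and currently healthy.

```python
NAMESPACE = "desktop.example.com"  # the one name every user types

# Hypothetical data centers and virtual server addresses (documentation IP ranges).
DATA_CENTERS = {"hq": "192.0.2.10", "dr": "198.51.100.10"}


def resolve(hostname, preferred, healthy):
    """Pick an address for the shared hostname.

    preferred: name of the user's preferred data center (assumed to be known)
    healthy:   set of data center names currently passing health checks
    """
    if hostname != NAMESPACE:
        raise LookupError(f"unknown name: {hostname}")
    # Send the user to the preferred data center when it is available.
    if preferred in healthy and preferred in DATA_CENTERS:
        return DATA_CENTERS[preferred]
    # Otherwise fall back to any other healthy data center (sorted for determinism).
    for name in sorted(healthy):
        if name in DATA_CENTERS:
            return DATA_CENTERS[name]
    raise LookupError("no healthy data center available")
```

With this sketch, `resolve("desktop.example.com", "dr", {"hq", "dr"})` returns the `dr` address, and the same call with only `hq` healthy falls back to the `hq` address, without the user ever changing the URL.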
BIG-IP GTM and BIG-IP Local Traffic Manager (LTM) work together to ensure that requests are sent to a user’s preferred data center, regardless of the user’s current location. BIG-IP Access Policy Manager (APM) validates the login information against the existing authentication and authorization mechanisms such as Active Directory, RADIUS, HTTP, or LDAP. In addition, BIG-IP LTM works with the F5 iRules scripting language, which allows administrators to configure custom traffic rules. F5 Networks has tested and published an innovative iRule that maintains connection persistence based on the username, irrespective of the device or location. This means that a user can change devices or locations and log back in to be reconnected to a desktop identical to the one last used. By taking advantage of BIG-IP LTM to securely connect branch offices with corporate headquarters, users benefit from optimized WAN services that dramatically reduce transfer times and improve the performance of applications relying on data center-hosted resources. Together, F5 and VMware can provide more efficient delivery of virtual desktops to the branch office without sacrificing performance, security, or the end-user experience.

In the Cloud, It's the Little Things That Get You. Here are nine of them.

#F5 Nine things you need to consider very carefully when moving apps to the cloud. Moving to a model that utilizes the cloud is a huge proposition. You can throw some applications out there without looking back – if they have no ties to the corporate datacenter and light security requirements, for example – but most applications require quite a bit of work to make them both mobile and stable. Just connections to the database raise all sorts of questions, and most enterprise level applications require connections to DC databases. But these are all problems people are talking about. There are ways to resolve them, ugly though some may be. The problems that will get you are the ones no one is talking about. So of course, I’m happy to dive into the conversation with some things that would be keeping me awake were I still running a datacenter with a lot of interconnections and getting beat up with demands for cloudy applications.

1. The last year has proven that cloud services WILL go down. You can’t plan like they won’t, regardless of the hype.
2. When they do, your databases must be 100% in synch, or business will be lost. 100%.
3. Your DNS infrastructure will need attention, possibly for the first time since you installed it. Serving up addresses from both local and cloud providers isn’t so simple. Particularly during downtimes.
4. Security – both network and app – will have to be centralized. You can implement separate security procedures for each deployment environment, but you are only as strong as your weakest link, and your staff will have to remember which policies apply where if you go that route.
5. Failure plans will have to be flexible. What if part of your app goes down? What if the database is down, but the web pages are fine – except for that “failed to connect to database” error? No matter what the hype says, the more places you deploy, the more likelihood that you’ll have an outage. The IT Managers’ role is to minimize that increase.
6. After a failure, recovery plans will also need to be flexible. What if part of your app comes up before the rest? What if the database spins up, but is now out of synch with your backup or alternate database?
7. When (not if) a security breach occurs on a cloud hosted server, how much responsibility does the cloud provider have to help you clean up? Sometimes it takes more than spinning down your server to clean up a mess, after all.
8. If you move mission-critical data to the cloud, how are you protecting it? Contrary to the wild claims of the clouderati, your data is in a location you do not have 100% visibility into, so you’re going to have to take extra steps to protect it.
9. If you’re opening connections back to the datacenter from the cloud, how are you protecting those connections? They’re trusted server to trusted server, but “trusted” is now relative.

Of course there are solutions brewing for most of these problems. Here are the ones I am aware of. Since I do not “read all of the Internets” each day (Lori does), I guarantee I’m missing some, but this can get you started.

1. Just include cloud in your DR plans: what will you do if service X disappears? Is the information on X available somewhere else? Can you move the app elsewhere and update DNS quickly enough? Global Server Load Balancing (GSLB) will help with this problem and others on the list – it will eliminate the DNS propagation lag at least. But beware, for many cloud vendors it is harder to do DR. Check what capabilities your provider supports.
2. There are tools available that just don’t get their fair share of thunder, IMO – like Oracle GoldenGate – that replicate each SQL command to a remote database. These systems create a backup that exactly mirrors the original. As long as you don’t get a database modifying attack that looks valid to your security systems, these architectures and products are amazing.
3. People generally don’t care where you host apps, as long as when they type in the URL or click on the URL, it takes them to the correct location. Global DNS and GSLB will take care of this problem for you.
4. Get policy-based security that can be deployed anywhere, including the cloud, or less attractively (and sometimes impractically), code security into the app so the security moves with it.
5. Application availability will have to go through another round like it did when we went distributed and then SOA. Apps will have to be developed with an eye to “is critical service X up?” where service X might well be in a completely different location from the app. If not, remedial steps will have to occur before the App can claim to be up. Or local Load Balancing can buffer you by making service X several different servers/virtuals.
6. What goes down (hopefully) must come back up. But the same safety steps implemented in #5 will cover #6 nicely, for the most part. Database consistency checks are the big exception; do those on recovery.
7. Negotiate this point if you can. Lots of cloud providers don’t feel the need to negotiate anything, but asking the questions will give you more information. Perhaps take your business to someone who will guarantee full cooperation in fixing your problems.
8. If you actually move critical databases to the cloud, encrypt them. Yeah, I do know it’s expensive in processing power, but they’re outside the area you can 100% protect. So take the necessary step.
9. Secure tunnels are your friend. Really. Don’t just open a hole in your firewall and let “trusted” servers in, because it is possible to masquerade as a trusted server. Create secure tunnels, and protect the keys.

That’s it for now. The cloud has a lot of promise, but like everything else in mid hype cycle, you need to approach the soaring commentary with realistic expectations. Protect your data as if it is your personal charge, because it is. 
The cloud provider is not the one (or not the only one) who will be held accountable when things go awry. So use it to keep doing what you do – making your organization hum with daily business – and avoid the pitfalls wherever possible. In my next installment I’ll be trying out the new footer Lori is using; I’m looking forward to your feedback. And yes, I did put nine in the title to test the “put an odd number list in, people love that” theory. I think y’all read my stuff because I’m hitting relatively close to the mark, but we’ll see now, won’t we?

F5 Friday: Automating Operations with F5 and VMware

#cloud #virtualization #vmworld #devops Integrating F5 and VMware with the vCloud Ecosystem Framework to achieve automated operations A third of IT professionals, when asked about the status of their IT cross-collaboration efforts 1 (you know, networking and server virtualization groups working together) indicate that sure, it's a high priority, but a lack of tools makes it difficult to share information and collaborate proactively. Whether we're talking private cloud or dynamic data center efforts, that collaboration is essential to realizing the efficiency promised by these modern models in part by the ability to automate scalability, i.e. elasticity. While virtualization vendors have invested a lot of effort in developing APIs that provide extensibility and control, automating those infrastructures is simply not a part of the core virtualization feature set. And yet, controlling a virtualized infrastructure is going to be a key point of any automation strategy, because virtualization is where your resource pools and elasticity live. -- Information Week reports, "Automating the Private Cloud", Jake McTigue Consider that in a recent sampling of more than 2003 BIG-IPs the majority of resource pools comprised either 10 to 50 members or anywhere from 100 to 999 members, with the average across all BIG-IPs being about 102 members. The member of a pool, in the load balancing vernacular by the way, is an application service: the combination of an IP address and a port, such as defines a web or e-mail or other application service. Such services might be traditional (physical) or hosted in a virtual machine. That's a lot of individual services that need to be managed and, more importantly, at some point deployed. And as we know, deploying an application isn't just launching a VM – it's managing the network components that may go along with it, as well. 
While leveraging an application delivery controller as a strategic point of control insulates organizations from the impact of such voluminous change on delivery services such as security, access control, and capacity, that doesn't mean the controller is itself immune to the impact of such change. After all, for elasticity to occur the load balancing service must be aware of changes in its pool of resources. Members must be added or removed, and the appropriate health monitoring enabled or disabled to ensure real-time visibility into status. A lack of tools to automate the infrastructure collaboration necessary to deploy and subsequently manage changes to applications is a part of the perception that IT is sluggish to respond, and why many cite lengthy application deployment times as problematic for their organization.

THE TOOLS to COLLABORATE and ENABLE AUTOMATION

VMware and F5 both seek to provide technologies that make software defined data centers a reality. A key component is the ability to integrate application services into data center operations and thus enable the automation of the application deployment lifecycle. One way we're enabling that is through the VMware vCloud Ecosystem Framework (vCEF). vCEF is designed to allow third parties to integrate with VMware vShield Manager, which can then integrate with VMware vCloud Director, enabling private or public cloud or dynamic data center deployments. The integrated solution takes advantage of F5's northbound API as well as vShield Manager's REST-based API to enable bi-directional collaboration between vShield Manager and F5 management solutions. Through this collaboration, a VMware vApp as well as an F5 iApp can be deployed. Together, these two packages describe an application – from end-to-end. Deployment of required application delivery services occurs when F5's management solution uses its southbound API to instruct appropriate F5 BIG-IP devices to execute the appropriate iApp. 
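The pool bookkeeping that elasticity requires (add or remove members, and switch health monitoring on or off to match) can be sketched in a few lines of Python. This is only an illustration under stated assumptions: the `Member` and `Pool` classes and the `reconcile` method are hypothetical names, and none of this represents the F5 or VMware APIs mentioned above.

```python
from dataclasses import dataclass, field


@dataclass(frozen=True)
class Member:
    """A pool member: an application service identified by IP address and port."""
    ip: str
    port: int


@dataclass
class Pool:
    """Hypothetical load-balancing pool that tracks members and monitored members."""
    name: str
    members: set = field(default_factory=set)
    monitored: set = field(default_factory=set)

    def reconcile(self, desired):
        """Make the pool match `desired`; return the (added, removed) member sets."""
        desired = set(desired)
        added = desired - self.members
        removed = self.members - desired
        for member in added:
            self.members.add(member)
            self.monitored.add(member)      # enable health monitoring for new members
        for member in removed:
            self.monitored.discard(member)  # disable monitoring before removal
            self.members.discard(member)
        return added, removed
```

Calling `reconcile` with the services that are currently running, each time a virtual machine is launched or de-provisioned, keeps the pool and its monitoring in step with the environment, which is the manual work the automation is meant to remove.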
The iApp is automatically executed again upon any change in resource pool make-up, i.e. when a virtual machine is launched or de-provisioned. This enables the elasticity needed to manage volatility automatically, without requiring lengthy manual processes to add or remove resources from a pool. It also enables newly deployed applications to be delivered with the appropriate set of application delivery settings, such as those encapsulated in F5 developed iApps that define the optimal TCP, HTTP, and network parameters for specific applications. The business and operational benefits are fairly straightforward – you're automating a process that spans IT groups and infrastructure, and gaining the ability to create repeatable, successful application deployments that can be provisioned in minutes rather than days. This is just one of the many joint solutions F5 and VMware have developed over the past few years. Whether it's VDI or server virtualization, intra or inter-data center, we've got a solution for VMware technology that will enhance the security, performance, and reliability of not just the delivery of applications, but their deployment.

1 Enterprise Management Associates' 2012 Network Automation Survey Results

Additional Resources for F5 and VMware Solutions Related blogs and articles Enabling IT Agility with the BIG-IP System and VMware vCloud Operationalizing Elastic Applications F5 and vCloud Solutions Username Persistence for VMware View Deployments Enable Single Namespace for VMware View Deployments F5 BIG-IP Enhances VMware View 5.0 on FlexPod How to Have Your (VDI) Cake and Deliver it Too F5 Solutions for VMware View Mobile Secure Desktop The Cloud’s Hidden Costs Hype Cycles, VDI, and BYOD Devops Proverb: Process Practice Makes Perfect F5 Friday: Programmability and Infrastructure as Code

Lori MacVittie is a Senior Technical Marketing Manager, responsible for education and evangelism across F5’s entire product suite. 
Prior to joining F5, MacVittie was an award-winning technology editor at Network Computing Magazine. She holds a B.S. in Information and Computing Science from the University of Wisconsin at Green Bay, and an M.S. in Computer Science from Nova Southeastern University. She is the author of XAML in a Nutshell and a co-author of The Cloud Security Rules.