Getting Started with iRules LX, Part 4: NPM & Best Practices

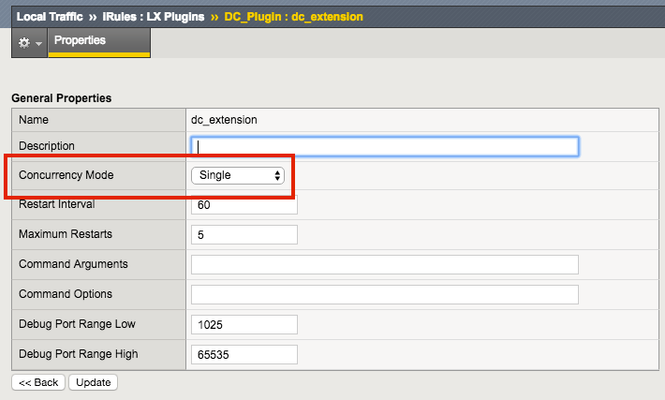

So far in this series we've covered basic nomenclature and concepts, and in the last article we dug into the code that makes it all work. At this point, I'm sure the wheels of possibility are turning in your minds, cooking up all the interesting ways to extend your iRules repertoire. The great thing about iRules LX, as we'll discuss at the outset of this article, is that a lot of the heavy lifting has probably already been done for you. The Node.js package manager, or NPM, is a living, breathing repository of 280,000+ modules you won't have to write yourself should you need them! Sooner or later you will find a desire, or maybe even a need, to install packages from NPM to help fulfill a use case.

Installing packages with NPM

NPM on BIG-IP works much the same way as it does on a server. We recommend that you not install modules globally, because when you export the workspace to another BIG-IP, a module installed globally won't be included in the workspace package.

To install an NPM module, you will need to access the Bash shell of your BIG-IP. First, change directory to the extension directory in which you need to install the module.

Note: F5 Development DOES NOT host any packages or provide any support for NPM packages in any way, nor do they provide security verification, code reviews, functionality checks, or installation guarantees for specific packages. They provide ONLY core Node.js, which is currently confined to versions 0.12.15 and 6.9.1.

The extension directory will be at /var/ilx/workspaces/<partition_name>/<workspace_name>/extensions/<extension_name>/ .
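As a small illustration of that path layout, the sketch below assembles the directory from its three parts. The function name `extensionDir` and its argument names are made up for this example and are not part of any F5 tooling; the sample values are simply placeholders for your own partition, workspace, and extension names.

```javascript
// Sketch only: builds the extension directory path described above.
// extensionDir and its argument names are illustrative, not an F5 API.
var path = require('path');

function extensionDir(partition, workspace, extension) {
  // path.posix keeps forward slashes regardless of where this runs
  return path.posix.join('/var/ilx/workspaces', partition, workspace,
                         'extensions', extension) + '/';
}

console.log(extensionDir('Common', 'DevCentralRocks', 'dc_extension'));
// prints /var/ilx/workspaces/Common/DevCentralRocks/extensions/dc_extension/
```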
Once there, you can run NPM commands to install the modules, as shown by this example (with a few ls commands to make it clearer) -

    [root@test-ve:Active:Standalone] config # cd /var/ilx/workspaces/Common/DevCentralRocks/extensions/dc_extension/
    [root@test-ve:Active:Standalone] dc_extension # ls
    index.js  node_modules  package.json
    [root@test-ve:Active:Standalone] dc_extension # npm install validator --save
    validator@5.3.0 node_modules/validator
    [root@test-ve:Active:Standalone] dc_extension # ls node_modules/
    f5-nodejs  validator

The one caveat to installing NPM modules on the BIG-IP is that you cannot install native modules. These are modules written in C++ that need to be compiled. For obvious security reasons, TMOS does not have a compiler.

Best Practices

Node Processes

It would be great if you could spin up an unlimited number of Node.js processes, but in reality there is a limit to what we want to run on the control plane of our BIG-IP. We recommend that you run no more than 50 active Node processes on your BIG-IP at one time (per appliance or per blade). Therefore you should size the usage of Node.js accordingly.

In the settings for an extension of an LX plugin, you will notice one called concurrency. There are 2 possible concurrency settings that we will go over.

Dedicated Mode

This is the default mode for all extensions running in an LX plugin. In this mode there is one Node.js process per TMM per extension in the plugin. Each process will be "dedicated" to a TMM. To know how many TMMs your BIG-IP has, you can run the following TMSH command -

    root@(test-ve)(cfg-sync Standalone)(Active)(/Common)(tmos) # show sys tmm-info | grep Sys::TMM
    Sys::TMM: 0.0
    Sys::TMM: 0.1

This shows us we have 2 TMMs. As an example, if this BIG-IP had an LX plugin with 3 extensions, I would have a total of 6 Node.js processes.
This mode is best for any type of CPU-intensive operation, such as heavy data parsing or doing some type of lookup on every request, or for an application with massive traffic.

Single Mode

In this mode, there is one Node.js process per extension in the plugin and all TMMs share this "single" process. For example, one LX plugin with 3 extensions will be 3 Node.js processes. This mode is ideal for lightweight processes, for example where you have a low-traffic application or only do a data lookup on the first connection and cache the result.

Node.js Process Information

The best way to find out information about the Node.js processes on your BIG-IP is with the TMSH command show ilx plugin . Using this command you should be able to choose the best mode for your extension based upon the resource usage. Here is an example of the output -

    root@(test-ve)(cfg-sync Standalone)(Active)(/Common)(tmos) # show ilx plugin DC_Plugin
    ---------------------------------
    ILX::Plugin: DC_Plugin
    ---------------------------------
    State                       enabled
    Log Publisher               local-db-publisher
    -------------------------------
    | Extension: dc_extension
    -------------------------------
    | Status                    running
    | CPU Utilization (%)       0
    | Memory (bytes)
    |   Total Virtual Size      1.1G
    |   Resident Set Size       7.7K
    | Connections
    |   Active                  0
    |   Total                   0
    | RPC Info
    |   Total                   0
    |   Notifies                0
    |   Timeouts                0
    |   Errors                  0
    |   Octets In               0
    |   Octets Out              0
    |   Average Latency         0
    |   Max Latency             0
    | Restarts                  0
    | Failures                  0
    ---------------------------------
    | Extension Process: dc_extension
    ---------------------------------
    | Status                    running
    | PID                       16139
    | TMM                       0
    | CPU Utilization (%)       0
    | Debug Port                1025
    | Memory (bytes)
    |   Total Virtual Size      607.1M
    |   Resident Set Size       3.8K
    | Connections
    |   Active                  0
    |   Total                   0
    | RPC Info
    |   Total                   0
    |   Notifies                0
    |   Timeouts                0
    |   Errors                  0
    |   Octets In               0
    |   Octets Out              0
    |   Average Latency         0
    |   Max Latency             0

From this you can get quite a bit of information, including which TMM the process is assigned to, the PID, and CPU, memory and connection stats.
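The sizing arithmetic behind the two concurrency modes can be sketched in a few lines. This is only a planning aid under the rules stated in this article (one process per TMM per extension in dedicated mode, one per extension in single mode); the function name, the `concurrency` field, and the extension names are assumptions made up for the example, not an F5 API.

```javascript
// Sketch: estimate how many Node.js processes a set of extensions will start.
// The function and field names are illustrative, not part of any F5 API.
var RECOMMENDED_MAX = 50; // ceiling recommended in this article, per appliance or blade

function estimateProcesses(tmmCount, extensions) {
  return extensions.reduce(function (total, ext) {
    // single: one shared process; dedicated (the default): one process per TMM
    return total + (ext.concurrency === 'single' ? 1 : tmmCount);
  }, 0);
}

var extensions = [
  { name: 'ext1', concurrency: 'dedicated' },
  { name: 'ext2', concurrency: 'dedicated' },
  { name: 'ext3', concurrency: 'dedicated' }
];

var count = estimateProcesses(2, extensions);
console.log(count);                    // 6, matching the 2-TMM example above
console.log(count <= RECOMMENDED_MAX); // true
```

Switching all three extensions to single mode in this sketch drops the estimate to 3, which is the trade-off the two mode descriptions outline.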
If you want to know the total number of Node.js processes, that same command will show you every process, and the output could get quite long. You can use this quick one-liner from the bash shell (not TMSH) to count the Node.js processes -

    [root@test-ve:Active:Standalone] config # tmsh show ilx plugin | grep PID | wc -l
    16

File System Read/Writes

Since Node.js on BIG-IP is pretty much stock Node, file system reads and writes are possible but not recommended. If you would like to know more about this and other properties of Node.js on BIG-IP, please see AskF5 Solution Article SOL16221101.

Note: NPM packages with symlinks will no longer work in 14.1.0+ due to SELinux changes.

In the next article in this series we will cover troubleshooting and debugging.

The PingIntelligence and F5 BIG-IP Solution for Securing APIs

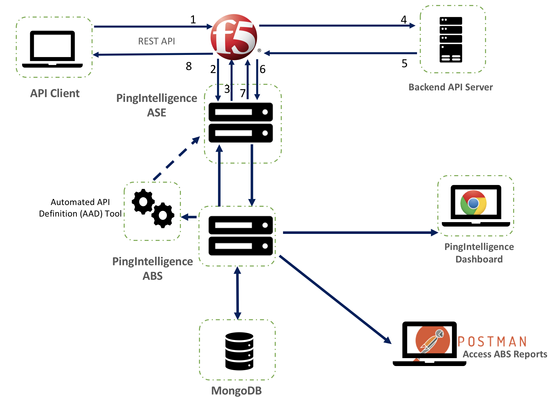

This article describes the PingIntelligence and F5 BIG-IP solution deployment for securing APIs. The integration identifies and automatically blocks cyber attacks on APIs, exposes active APIs, and provides detailed reporting on all API activity.

Solution Overview

PingIntelligence is deployed in a side-band configuration with F5 BIG-IP. A PingIntelligence policy is installed in F5 BIG-IP and passes API metadata to PingIntelligence for detailed API activity reporting and attack detection with optional client blocking. PingIntelligence software includes support for reporting and attack detection based on usernames captured from JSON Web Tokens (JWT).

Following is a description of the traffic flow through F5 BIG-IP and PingIntelligence API Security Enforcer (ASE):

1. The client sends an incoming request to F5 BIG-IP.
2. F5 BIG-IP makes an API call to send the request metadata to ASE.
3. ASE checks the request against a registered set of APIs and looks for the origin IP, cookie, OAuth2 token, or API key in the Blacklist generated by the PingIntelligence AI engine. If all checks pass, ASE returns a 200-OK response to F5 BIG-IP. If not, a different response code is sent to F5 BIG-IP. The request information is also logged by ASE and sent to the AI engine for processing.
4. If F5 BIG-IP receives a 200-OK response from ASE, it forwards the request to the backend server pool. A request is blocked only when ASE sends a 403 error code.
5. The response from the backend server pool is received by F5 BIG-IP.
6. F5 BIG-IP makes a second API call to pass the response information to ASE, which sends the information to the AI engine for processing.
7. ASE receives the response information and sends a 200-OK to F5 BIG-IP.
8. F5 BIG-IP sends the response received from the backend server to the client.

Pre-requisites

- The BIG-IP system must be running TMOS v13.1.0.8 or higher.
- Sideband authentication is enabled on PingIntelligence for secure communication with the BIG-IP system.
- Download the PingIntelligence policy from the download site.

Solution Deployment

Step-1: Import and Configure PingIntelligence Policy

1. Log in to your F5 BIG-IP web UI and navigate to Local Traffic > iRules > LX Workspaces.
2. On the LX Workspaces page, click on the Import button.
3. Enter a Name and choose the PingIntelligence policy that you downloaded from the Ping Identity download site. Then, click on the Import button. This creates the LX workspace.
4. Open the workspace by clicking on its name. The policy is pre-loaded with an extension named oi_ext .
5. Edit the ASE configuration by clicking on the ASEConfig.js file. This opens the PingIntelligence policy in the editor. See the PingIntelligence documentation to understand the various ASE variables.

Step-2: Create LX Plugin

1. Navigate to Local Traffic > iRules > LX Plugins. On the New Plugin page, click on the Create button to create a new plugin with the name pi_plugin.
2. Select the workspace that you created earlier from the Workspace drop-down list and click on the Finished button.

Step-3: Create a Backend Server Pool and Frontend Virtual Server (Optional)

If you have already created the virtual server, skip this step.

Create a Backend Server Pool

1. Navigate to Local Traffic > Pools > Pool List and click on the Create button.
2. In the configuration page, configure the fields and add a new node to the pool. When done, click on the Finished button. This creates a backend server pool that is accessed from clients connecting to the frontend virtual server.

Create a Frontend Virtual Server

1. Navigate to Local Traffic > Virtual Servers > Virtual Server List and click on the Create button.
2. Configure the virtual server details. At a minimum, configure the Destination Address, Client SSL Profile and Server SSL Profile. When done, click on the Finished button.
3. Under the Resources tab, add the backend server pool to the virtual server and click on the Update button.

Step-4: Add PingIntelligence Policy

The imported PingIntelligence policy must be tied to a virtual server.
Add the PingIntelligence policy to the virtual server:

1. Navigate to Local Traffic > Virtual Servers > Virtual Server List.
2. Select the virtual server to which you want to add the PingIntelligence policy.
3. Click on the Resources tab.
4. In the iRules section, click on the Manage button.
5. Choose the iRule under the pi_plugin that you want to attach to the virtual server. Move the pi_irule to the Enabled window and click on the Finished button.

Once the solution is deployed, you can gain insights into user activity, attack information, blocked connections, forensic data, and much more from the PingIntelligence dashboard.

Getting Started with iRules LX, Part 1: Introduction & Conceptual Overview

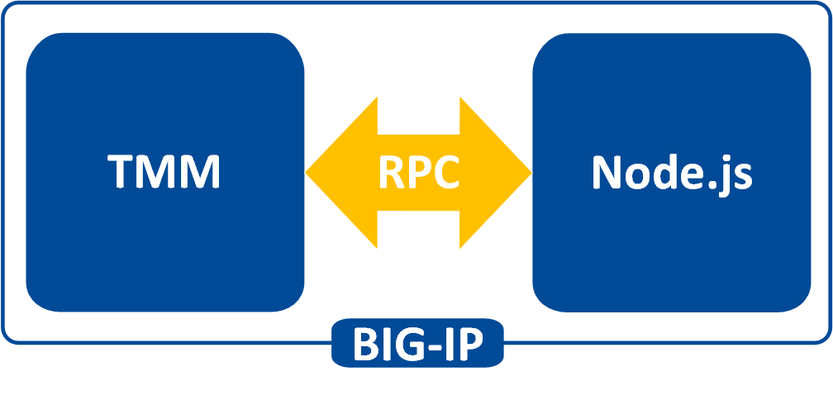

What is iRules LX?

When BIG-IP TMOS 12.1.0 was released a couple of weeks ago, Danny wrote an intro piece on a new feature called iRules LX. iRules LX is the next generation of iRules that allows you to programmatically extend the functionality of BIG-IP with Node.js. In this article series, you will learn the basics of what iRules LX is, some use cases, how the components fit together, troubleshooting techniques, and some introductory code solutions.

What is Node.js?

Node.js provides an environment for running JavaScript independent of a web browser. With Node.js you can write both clients and servers, but it is mostly used for writing servers. There are 2 main components to Node.js -

Google v8 JavaScript Engine

Node.js starts with the same open source JavaScript engine used in the Google Chrome web browser. v8 has a JIT compiler that optimizes JavaScript code to allow it to run incredibly fast. It also has an optimizing compiler that, during run time, further optimizes the code to increase the performance up to 200 times.

LibUV Asynchronous I/O Library

A JavaScript engine by itself would not allow the full set of I/O operations that are needed for a server (you don't want your web browser accessing the file system of your computer, after all). LibUV gives Node.js the capability to have those I/O operations. Because v8 is a single-threaded process, I/O (which has the most latency) would normally be blocking; that is, the CPU would be doing nothing while waiting for a response from the I/O operation. LibUV allows v8 to continue running JavaScript while waiting for the response, maximizing the use of CPU cycles and providing great concurrency.

Why Node.js?

Some might ask "Why was Node.js chosen?" While there is more than one reason, the biggest factor is that you can use JavaScript. JavaScript has been a staple of web development for almost 2 decades and is a common skillset amongst developers.
Also, Node.js has a vibrant community that not only contributes to the Node.js core itself, but also includes a plethora of people writing libraries (called modules). As of May 2016 there are over 280,000 Node.js modules available in the Node Package Manager (NPM) registry, so chances are you can find a library for almost any use case. An NPM client is included with distributions of Node.js. For more information about the specifics of Node.js on BIG-IP, please refer to AskF5 Solution Article SOL16221101.

iRules LX Conceptual Operation

Unlike iRules, which has a TCL interpreter running inside the Traffic Management Microkernel (TMM), Node.js runs as a standalone process outside of TMM. Having Node.js outside of TMM is an advantage in this case because it allows F5 to keep the Node.js version more current than if it were integrated into TMM. In order to get data from TMM to the Node.js processes, a Remote Procedure Call (RPC) is used so that TMM can send data to Node.js and tell it what "procedure" to run.

To make this call to Node.js, we still need iRules TCL. We will cover the coding much more extensively in a few days, so don't get too wrapped up in understanding all the new commands just yet. Here is an example of TCL code for doing RPC to Node.js -

    when HTTP_REQUEST {
        set rpc_hdl [ILX::init my_plugin my_extension]
        set result [ILX::call $rpc_hdl my_method $arg1]

        if { $result eq "yes" } {
            # Do something
        } else {
            # Do something else
        }
    }

In line 2 you can see with the ILX::init command that we establish an RPC handle to a specific Node.js plugin and extension (more about those tomorrow). Then in line 3 we use the ILX::call command with our handle to call the method my_method (our "remote procedure") in our Node.js process and we send it the data in variable arg1 . The result of the call will be stored in the variable result . With that we can then evaluate the result as we do in lines 5-9.
Here we have an example of Node.js code using the iRules LX API we provide -

    ilx.addMethod('my_method', function(req, res){
      var data = req.params()[0];

      <…ADDITIONAL_PROCESSING…>

      res.reply('<some_reply>');
    });

You can see the first argument my_method in addMethod is the name of our method that we called from TCL. The second argument is our callback function that will get executed when we call my_method . On line 2 we get the data we sent over from TCL and in lines 3-5 we perform whatever actions we wish with JavaScript. Then with line 6 we send our result back to TCL.

Use Cases

While TCL programming is still needed for performing many of the tasks, you can offload the most complicated portions to Node.js using any number of the available modules. Some of the things that are great candidates for this are -

- Sideband connections - Anyone that has used sideband in iRules TCL knows how difficult it is to implement a protocol from scratch, even if it is only part of the protocol. Simply by downloading the appropriate module with NPM, you can accomplish many of the following with ease -
  - Database lookups - SQL, LDAP, Memcached, Redis
  - HTTP and API calls - RESTful APIs, CAPTCHA, SSO protocols such as SAML, etc.
- Data parsing -
  - JSON - Parser built natively into JavaScript
  - XML
  - Binary protocols

In the next installment we will be covering iRules LX configuration and workflow.

Getting Started with iRules LX, Part 3: Coding & Exception Handling

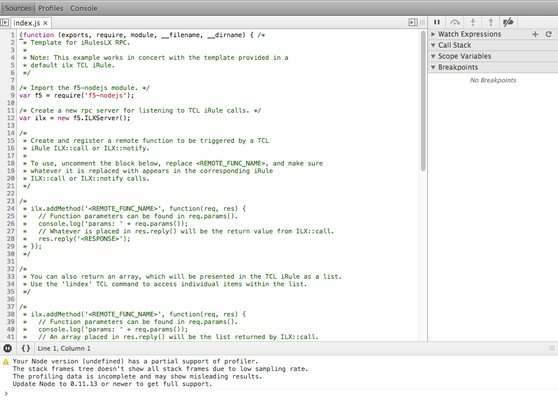

So far in this series, we have covered the conceptual overview, components, workflows, licensing and provisioning of iRules LX. In this article, we will actually get our hands dirty with some iRules LX code!

TCL Commands

As mentioned in the first article, we still need iRules TCL to make an RPC to Node.js. iRules LX introduces three new iRules TCL commands -

- ILX::init - Creates an RPC handle to the plugin's extension. We will use the variable from this in our other commands.
- ILX::call - Does the RPC to the Node.js process to send data and receive the result (2 way communication).
- ILX::notify - Does an RPC to the Node.js process to send data only (1 way communication, no response).

There is one caveat to the ILX::call and ILX::notify commands: you can only send 64KB of data. This includes the overhead used to encapsulate the RPC, so you will need to allow about 500B-1KB for the overhead. If you need to send more data you will need to create code that will send the data over in chunks.

TCL Coding

Here is a TCL template for how we would use iRules LX.

    when HTTP_REQUEST {
        set ilx_handle [ILX::init "my_plugin" "ext1"]
        if {[catch {ILX::call $ilx_handle "my_method" $user $passwd} result]} {
            log local0.error "Client - [IP::client_addr], ILX failure: $result"
            # Send user graceful error message, then exit event
            return
        }

        # If one value is returned, it becomes TCL string
        if {$result eq "yes"} {
            # Some action
        }

        # If multiple values are returned, it becomes TCL list
        set x [lindex $result 0]
        set y [lindex $result 1]
    }

So as not to overwhelm you, we'll break this down into bite-size chunks. Taking line 2 from above, we will use the ILX::init command to create our RPC handle to extension ext1 of our LX plugin my_plugin and store it in the variable ilx_handle . This is why the names of the extensions and plugin matter.
Here is that line on its own -

    set ilx_handle [ILX::init "my_plugin" "ext1"]

Next, we can take the handle and do the RPC -

    if {[catch {ILX::call $ilx_handle "my_method" $user $passwd} result]} {
        log local0.error "Client - [IP::client_addr], ILX failure: $result"
        # Send user graceful error message with whatever code, then exit event
        return
    }

You can see on line 3 of the template that we make the ILX::call to the extension by using our RPC handle, specifying the method my_method (our "remote procedure") and sending it one or more args (in this case user and passwd , which we got somewhere else). In this example we have wrapped ILX::call in a catch command because it can throw an exception if there is something wrong with the RPC (Note: catch should actually be used on any command that could throw an exception, b64decode for example). This allows us to gracefully handle errors and respond back to the client in a more controlled manner.

If ILX::call is successful, then the return of catch will be a 0 and the result of the command will be stored in the variable result . If ILX::call fails, then the return of catch will be a 1 and the variable result will contain the error message. This will cause the code in the if block to execute, which would be our error handling.

Assuming everything went well, we could now start working with the data in the variable result . If we returned a single value from our RPC, then we could process this data as a string like so -

    # If one value is returned, it becomes TCL string
    if {$result eq "yes"} {
        # Some action
    }

But if we return multiple values from our RPC, these would be in TCL list format (we will talk more about how to return multiple values in the Node.js coding section). You could use lindex or any suitable TCL list command on the variable result -

    # If multiple values are returned, it becomes TCL list
    set x [lindex $result 0]
    set y [lindex $result 1]

Node.js Coding

On the Node.js side, we would write code in our index.js file of the extension in the workspace.
A code template will load when the extension is created to give you a starting point, so you don't have to write it from scratch. To use Node.js in iRules LX, we provide an API for receiving and sending data from the TCL RPC. Here is an example -

    var f5 = require('f5-nodejs');

    var ilx = new f5.ILXServer();

    ilx.addMethod('my_method', function (req, res) {
      // req.params() function is used to get values from TCL. Returns JS Array
      var user = req.params()[0];
      var passwd = req.params()[1];

      <DO SOMETHING HERE>

      res.reply('<value>'); // Return one value as string or number
      res.reply(['value1','value2','value3']); // Return multiple values with an Array
    });

    ilx.listen();

Now we will run through this step-by-step. On line 1, you see we import the f5-nodejs module which provides our API to the ILX server.

    var f5 = require('f5-nodejs');

On line 3 we instantiate a new instance of the ILXServer class and store the object in the variable ilx .

    var ilx = new f5.ILXServer();

On line 5, we have our addMethod method which stores methods (our remote procedures) in our ILX server.

    ilx.addMethod('my_method', function (req, res) {
      // req.params() function is used to get values from TCL. Returns JS Array
      var user = req.params()[0];
      var passwd = req.params()[1];

      <DO SOMETHING HERE>

      res.reply('<value>'); // Return one value as string or number
      res.reply(['value1','value2','value3']); // Return multiple values with an Array
    });

This is where we would write our custom Node.js code for our use case. This method takes 2 arguments -

- Method name - This would be the name that we call from TCL. If you remember from our TCL code above, in line 3 we call the method my_method . This matches the name we put here.
- Callback function - This is the function that will get executed when we make the RPC to this method. This function gets 2 arguments, the req and res objects, which follow standard Node.js conventions for the request and response objects.
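To make the relationship between the method name and the callback concrete, here is a small stand-in that can be run in any Node.js shell away from a BIG-IP. It is only a sketch: `FakeILXServer` and `simulateCall` are hypothetical names invented for this example, the stub mimics just the shape of the calls shown above (addMethod, req.params, res.reply), and it assumes the callback replies synchronously. It is not F5's implementation and does not talk to TMM.

```javascript
// Hypothetical stand-in for the ILX server, for illustration only.
// It mimics just the shape of the API shown above; it is not F5's code.
function FakeILXServer() {
  this.methods = {};
}

FakeILXServer.prototype.addMethod = function (name, callback) {
  this.methods[name] = callback; // store the "remote procedure" under its name
};

// Simulate what an ILX::call from TCL would trigger: look up the method by
// name, hand the arguments over as an array, and capture the reply.
FakeILXServer.prototype.simulateCall = function (name, args) {
  var reply;
  var req = { params: function () { return args; } };
  var res = { reply: function (value) { reply = value; } };
  this.methods[name](req, res);
  return reply; // assumes the callback replied synchronously
};

var ilx = new FakeILXServer();

ilx.addMethod('my_method', function (req, res) {
  var user = req.params()[0];
  var passwd = req.params()[1];
  res.reply([user, passwd.length]); // an array reply carries multiple values
});

console.log(ilx.simulateCall('my_method', ['alice', 'secret']));
// prints [ 'alice', 6 ]
```

A stub like this can be handy for exercising handler logic before loading it into a workspace, though behavior on the box is defined by the real f5-nodejs module, not by this sketch.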
In order to get the data that we sent from TCL, we must call the req.params method. This will return an array, and the number of elements in that array will match the number of arguments we sent in ILX::call . In our TCL example on line 3, we sent the variables user and passwd , which we got somewhere else. That means that the array from req.params will have 2 elements, which we assign to variables in lines 7-8. Now that we have our data, we can use any valid JavaScript to process it.

Once we have a result and want to return something to TCL, we would use the res.reply method. We have both ways of using res.reply shown on lines 12-13, but you would only use one of these depending on how many values you wish to return. On line 12, you would put a string or number as the argument for res.reply if you wanted to return a single value. If we wished to return multiple values, then we would use an array with strings or numbers. These are the only valid data types for res.reply .

We mentioned in the TCL result that we could get one value that would be a string or multiple values that would be a TCL list. The argument type you use in res.reply is how you would determine that. Then on line 16 we start our ILX server so that it will be ready to listen for RPCs.

    ilx.listen();

That was a quick overview of the F5 API for Node.js in iRules LX. It is important to note that F5 will only support using Node.js in iRules LX within the provided API.

A Real Use Case

Now we can take what we just learned and actually do something useful.
In our example, we will take POST data from a standard HTML form and convert it to JSON. In TCL we would intercept the data, send it to Node.js to transform it to JSON, then return it to TCL to replace the POST data with the JSON and change the Content-Type header -

    when HTTP_REQUEST {
        # Collect POST data
        if { [HTTP::method] eq "POST" } {
            set cl [HTTP::header "Content-Length"]
            HTTP::collect $cl
        }
    }

    when HTTP_REQUEST_DATA {
        # Send data to Node.js
        set handle [ILX::init "json_plugin" "json_ext"]
        if {[catch {ILX::call $handle "post_transform" [HTTP::payload]} json]} {
            log local0.error "Client - [IP::client_addr], ILX failure: $json"
            HTTP::respond 400 content "<html>Some error page to client</html>"
            return
        }

        # Replace Content-Type header and POST payload
        HTTP::header replace "Content-Type" "application/json"
        HTTP::payload replace 0 $cl $json
    }

In Node.js, we would only need to load the built-in module querystring to parse the POST data and then use JSON.stringify to turn it into JSON.

    'use strict';

    var f5 = require('f5-nodejs');
    var qs = require('querystring');

    var ilx = new f5.ILXServer();

    ilx.addMethod('post_transform', function (req, res) {
      // Get POST data from TCL and parse query into a JS object
      var postData = qs.parse(req.params()[0]);

      // Turn postData into JSON and return to TCL
      res.reply(JSON.stringify(postData));
    });

    ilx.listen();

Note: Keep in mind that this is only a basic example. This would not handle a POST that used 100 Continue or multipart POSTs.

Exception Handling

iRules TCL is very forgiving when there is an unhandled exception. When you run into an unhandled runtime exception (such as an invalid base64 string you tried to decode), you only reset that connection. However, Node.js (like most other programming languages) will crash if you have an unhandled runtime exception, so you will need to put some guard rails in your code to avoid this. Let's say, for example, you are doing JSON.parse of some JSON you get from the client.
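As a quick illustration of that failure mode, the sketch below can be run in any Node.js shell; nothing in it is BIG-IP specific. JSON.parse throws a SyntaxError when its input is malformed. The helper name `tryParse` is made up for this demo, and the try/catch is there only to keep the demo itself running.

```javascript
// Sketch: show that JSON.parse throws on malformed input.
// tryParse is a made-up helper name; the try/catch only keeps this demo running.
function tryParse(text) {
  try {
    return { ok: true, value: JSON.parse(text) };
  } catch (e) {
    return { ok: false, error: e.name };
  }
}

console.log(tryParse('{"myProperty":"hello"}').ok); // true
console.log(tryParse('{"myProperty":').error);      // SyntaxError
```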
Without proper exception handling any client could crash your Node.js process by sending invalid JSON. In iRules LX, if a Node.js process crashes 5 times in 60 seconds, BIG-IP will not attempt to restart it, which opens up a DoS attack vector on your application (max restarts is user configurable, but good code eliminates the need to change it). You would have to manually restart it via the Web UI or TMSH.

In order to catch errors in JavaScript, you would use the try/catch statement. There is one caveat to this: code inside a try/catch statement is not optimized by the v8 compiler and will cause a significant decrease in performance. Therefore, we should keep our code in try/catch to a minimum by putting only the functions that throw exceptions in the statement. Usually, any function that takes user-provided input can throw.

Note: The subject of code optimization with v8 is quite extensive so we will only talk about this one point. There are many blog articles about v8 optimization written by people much smarter than me. Use your favorite search engine with the keywords v8 optimization to find them.

Here is an example of try/catch with JSON.parse -

    ilx.addMethod('my_function', function (req, res) {
      try {
        var myJson = JSON.parse(req.params()[0]); // This function can throw
      } catch (e) {
        // Log message and stop processing function
        console.error('Error with JSON parse:', e.message);
        console.error('Stack trace:', e.stack);
        return;
      }

      // All my other code is outside try/catch
      // (the object check matters: `in` throws on non-objects such as null or 42)
      var isObject = (myJson !== null && typeof myJson === 'object');
      var result = (isObject && 'myProperty' in myJson) ? true : false;
      res.reply(result);
    });

We can also work around the optimization caveat by hoisting a custom function outside try/catch and calling it inside the statement -

    ilx.addMethod('my_function', function (req, res) {
      try {
        var answer = someFunction(req.params()[0]); // Call function from here that is defined on line 16
      } catch (e) {
        // Log message and stop processing function
        console.error('Error with someFunction:', e.message);
        console.error('Stack trace:', e.stack);
        return;
      }

      // All my other code is outside try/catch
      var result = (answer === 'hello') ? true : false;
      res.reply(result);
    });

    function someFunction (arg) {
      // Some code in here that can throw
      return result;
    }

RPC Status Return Value

In our examples above, we simply stopped the function call if we had an error but never let TCL know that we encountered a problem. TCL would not know there was a problem until the ILX::call command reached its timeout value (3 seconds by default). The client connection would be held open until it reached the timeout and then reset.

While it is not required, it would be a good idea for TCL to get a return value on the status of the RPC immediately. The specifics of this are pretty open to any method you can think of, but we will give an example here. One way we can accomplish this is by returning multiple values from Node.js. Our first value could be some type of RPC status value (say, an RPC error value) and the rest of the value(s) could be our result from the RPC. It is quite common in programming for an error value to be 0 if everything was okay and a positive integer to indicate a specific error code.

In this example, we will demonstrate that concept. The code will verify that the property myProperty is present in the JSON data and put its value into a header, or send a 400 response back to the client if not.
The Node.js code -

    ilx.addMethod('check_value', function (req, res) {
      try {
        var myJson = JSON.parse(req.params()[0]); // This function can throw
      } catch (e) {
        res.reply(1); //<--- The RPC error value is 1 indicating invalid JSON
        return;
      }

      // `in` throws on non-objects such as null or 42, so check the type first
      var isObject = (myJson !== null && typeof myJson === 'object');
      if (isObject && 'myProperty' in myJson){
        // The myProperty property was present in the JSON data, evaluate its value
        var result = (myJson.myProperty === 'hello') ? true : false;
        res.reply([0, result]); //<--- The RPC error value is 0 indicating success
      } else {
        res.reply(2); //<--- The RPC error value is 2 indicating myProperty was not present
      }
    });

In the code above, the first value we return to TCL is our RPC error code. We have defined 3 possible values for this -

- 0 - RPC success
- 1 - Invalid JSON
- 2 - Property "myProperty" not present in JSON

On our TCL side we would need to add logic to handle this value -

    when HTTP_REQUEST {
        # Collect POST data
        if { [HTTP::method] eq "POST" } {
            set cl [HTTP::header "Content-Length"]
            HTTP::collect $cl
        }
    }

    when HTTP_REQUEST_DATA {
        # Send data to Node.js
        set handle [ILX::init "json_plugin" "json_checker"]
        if {[catch {ILX::call $handle "check_value" [HTTP::payload]} json]} {
            log local0.error "Client - [IP::client_addr], ILX failure: $json"
            HTTP::respond 400 content "<html>Some error page to client</html>"
            return
        }

        # Check the RPC error value
        if {[lindex $json 0] > 0} {
            # RPC error value was not 0, there is a problem
            switch [lindex $json 0] {
                1 { set error_msg "Invalid JSON" }
                2 { set error_msg "myProperty property not present" }
            }
            HTTP::respond 400 content "<html>The following error occurred: $error_msg</html>"
        } else {
            # If JSON was okay, insert header with myProperty value
            HTTP::header insert "X-myproperty" [lindex $json 1]
        }
    }

As you can see on line 19, we check the value of the first element in our TCL list. If it is greater than 0 then we know we have a problem.
We then move on to the switch statement inside that block to determine the problem and set a variable that will become part of our error message back to the client.

Note: Keep in mind that this is only a basic example. This would not handle a POST that used 100 Continue or multipart POSTs.

In the next article in this series, we will cover how to install a module with NPM and some best practices.

Getting Started with iRules LX, Part 5: Troubleshooting

When you start writing code, you will eventually run into some issue and need to troubleshoot. We have a few tools available for you.

Logging

One of the most common tools used for troubleshooting is logging. STDOUT and STDERR for the Node.js processes end up in the file /var/log/ltm . By temporarily inserting functions such as console.log , console.error , etc. you can easily check the state of different objects in your code at run time.

There is one caveat to logging in iRules LX: logging by most processes on BIG-IP is throttled by default. Basically, if 5 messages from a process come within 1 second, all other messages will be truncated. For Node.js, every line is a separate message, so something like a stack trace will get automatically truncated. To remove this limit we can change the DB variable log.sdmd.level to the value debug in TMSH as such -

    root@(test-ve)(cfg-sync Standalone)(Active)(/Common)(tmos) # modify sys db log.sdmd.level value debug

SDMD is the process that manages the Node.js instances. This turns on verbose logging for SDMD and all the Node.js processes, so you will want to turn this back to info when you are finished troubleshooting.

Once that is done you can start adding logging statements to your code. Here, I added a line to tell me when the process starts (or restarts) -

    var f5 = require('f5-nodejs');

    console.log('Node.js process starting.');

    var ilx = new f5.ILXServer();

The logs would then show me this -

    Jun 7 13:26:08 test-ve info sdmd[12187]: 018e0017:6: pid[21194] plugin[/Common/test_pl1.ext1] Node.js process starting.

Node Inspector

BIG-IP comes packaged with Node Inspector for advanced debugging. We won't cover how to use Node Inspector because most developers skilled in Node.js already know how to use it, and there are already many online tutorials for those that don't.

Note: We highly recommend that you do not use Node Inspector in a production environment.
Debuggers lock the execution of code at breakpoints, pausing the entire application, which does not work well for a real-time network device. Ideally, debugging should only be done on a dedicated BIG-IP (such as a lab VE instance) in an isolated development environment.

Turn on Debugging

The first thing we want to do is put our extension into single concurrency mode so that we are only working with one Node.js process while debugging. While we are doing that, we can also put the extension into debugging mode. This can be done from TMSH like so:

    root@(test-ve)(cfg-sync Standalone)(Active)(/Common)(tmos) # list ilx plugin DC_Plugin
    ilx plugin DC_Plugin {
        disk-space 156
        extensions {
            dc_extension { }
            dc_extension2 { }
        }
        from-workspace DevCentralRocks
        staged-directory /var/ilx/workspaces/Common/DevCentralRocks
    }
    root@(test-ve)(cfg-sync Standalone)(Active)(/Common)(tmos) # modify ilx plugin DC_Plugin extensions { dc_extension { concurrency-mode single command-options add { --debug } }}
    root@(test-ve)(cfg-sync Standalone)(Active)(/Common)(tmos) # list ilx plugin DC_Plugin
    ilx plugin DC_Plugin {
        disk-space 156
        extensions {
            dc_extension {
                command-options { --debug }
                concurrency-mode single
            }
            dc_extension2 { }
        }
        from-workspace DevCentralRocks
        staged-directory /var/ilx/workspaces/Common/DevCentralRocks
    }
    root@(test-ve)(cfg-sync Standalone)(Active)(/Common)(tmos) # restart ilx plugin DC_Plugin immediate

Increase ILX::call Timeout

Also, if you recall from part 3 of this series, the TCL command ILX::call has a 3 second timeout by default; if there is no response to the RPC within that time, the command throws an exception. We don't need to worry about this for the command ILX::notify because it does not expect a response from the RPC. While the debugger is paused on a breakpoint you are pretty much guaranteed to hit this timeout, so we need to increase it to allow our whole method to finish execution.
To do this, you will need to add the -timeout argument to ILX::call:

    ILX::call $ilx_handle -timeout big_number_in_msec method arg1

This number should be big enough to walk through the entire method call while debugging. If it will take you 2 minutes, then the value should be 120000. Once you update this, make sure to reload the workspace.

Launching Node Inspector

Once you have put Node.js into debugging mode and increased the timeout, you can fire up Node Inspector. We will need to find the debugging port that our Node.js process has been assigned via TMSH:

    root@(eflores-ve3)(cfg-sync Standalone)(Active)(/Common)(tmos) # show ilx plugin DC_Plugin
    <----------------snipped ----------------->
    ---------------------------------
    | Extension Process: dc_extension
    ---------------------------------
    | Status               running
    | PID                  25975
    | TMM                  0
    | CPU Utilization (%)  0
    | Debug Port           1033
    | Memory (bytes)
    |   Total Virtual Size 1.2G
    |   Resident Set Size  5.8K

Now that we have the debugging port, we can put that and the IP of our management interface into the Node Inspector arguments:

    [root@test-ve:Active:Standalone] config # /usr/lib/node_modules/.bin/node-inspector --debug-port 1033 --web-host 192.168.0.245 --no-inject
    Node Inspector v0.8.1
    Visit http://192.168.0.245:8080/debug?port=1033 to start debugging.

We simply need to take the URL given to us and put it in our web browser. Then we can start debugging away!

Conclusion

We hope you have found the Getting Started with iRules LX series informative, and we look forward to hearing about how iRules LX has helped you solve problems. If you need any help with using iRules LX, please don't hesitate to ask a question on DevCentral.

iRules LX Logger Class

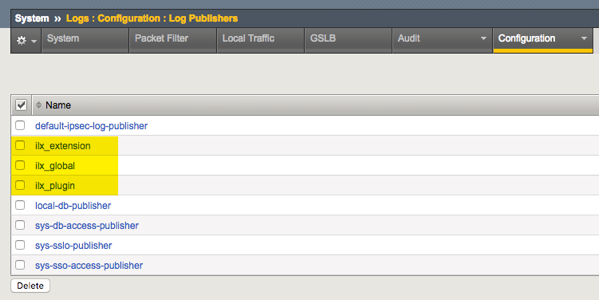

In version 13.1, the ILXLogger class was added to expand the ability to log from iRules LX. The API details have been added to the wiki, but in this article I’ll add context and an example to boot. Let’s get to it!

The first step is to create a log publisher. For test purposes any destination for the publisher will do, so I’ll just choose local syslog for each of the three publishers I’ll create here for the three tiers of logging you can do within iRules LX: the global level, the plugin level, and the extension level. In the GUI, you can apply the plugin and extension publishers as shown in the two images below:

The log publishers can also be created via tmsh with the sys log-config publisher command. Once you have a log publisher defined, you can also use tmsh to apply the publishers to any/all levels of your iRules LX environments.

    # Global
    tmsh modify ilx global-settings log-publisher ilx_global
    # Plugin
    tmsh modify ilx plugin a_plugin log-publisher ilx_plugin
    # Extension
    tmsh modify ilx plugin b_plugin extensions { b_extension { log-publisher ilx_extension } }

This works similarly to profile parent/child inheritance, with some caveats.

Scenario #1
The global publisher is ilx_global
The plugin publisher is ilx_plugin
The extension publisher is ilx_extension
Result: Calling logger.send() will send to the ilx_extension publisher.

Scenario #2
The global publisher is ilx_global
The plugin publisher is ilx_plugin
The extension publisher is none
Result: Calling logger.send() will send to the ilx_plugin publisher.

Scenario #3
The global publisher is ilx_global
The plugin publisher is none
The extension publisher is ilx_extension
Result: Calling logger.send() will send to the ilx_extension publisher.

Scenario #4
The global publisher is ilx_global
The plugin publisher is none
The extension publisher is none
Result: Calling logger.send() will send to the ilx_global publisher.
Scenario #5
The global publisher is none
The plugin publisher is none
The extension publisher is none
Result: Calling logger.send() will cause an error, as there is no configured publisher.

Note: You can alternatively use console.log() to send log data. If a global publisher is configured it will log there, and if not it will log to /var/log/ltm by default.

Example

This is a bare-bones example from a Q&A post by F5’s own Satoshi Toyosawa. I created an iRules LX workspace (myworkspace), an iRule (ilxtest), a plugin (ILXLoggerTestRpcPlugin), and an extension (ILXLoggerTestRpcExt). Configuration details for each:

The Workspace

    ilx workspace myworkspace {
        extensions {
            ILXLoggerTestRpcExt {
                files {
                    index.js { }
                    node_modules { }
                    package.json { }
                }
            }
        }
        node-version 6.9.1
        partition none
        rules {
            ilxtest { }
        }
        staged-directory /var/ilx/workspaces/Common/myworkspace
        version 14.0.0
    }

The iRule

    when HTTP_REQUEST {
        log local0. "I WANT THE TRUTH!"
        set RPC_HANDLE [ILX::init ILXLoggerTestRpcPlugin "ILXLoggerTestRpcExt"]
        ILX::call $RPC_HANDLE func
    }

The Plugin

    ilx plugin ILXLoggerTestRpcPlugin {
        extensions {
            ILXLoggerTestRpcExt {
                log-publisher ilx_extension
            }
        }
        from-workspace myworkspace
        log-publisher ilx_plugin
        node-version 6.9.1
        staged-directory /var/ilx/workspaces/Common/myworkspace
    }

The Extension (all defaults except the updated index.js contents shown below)

    var f5 = require('f5-nodejs');
    var ilx = new f5.ILXServer();
    var logger = new f5.ILXLogger();
    ilx.addMethod('func', function(req, res) {
        logger.send('YOU CAN\'T HANDLE THE TRUTH!');
        res.reply('done');
    });
    ilx.listen();

Applying this iRules LX package against one of my test virtual servers with an HTTP profile, I anticipate Lt. Daniel Kaffee and Col. Nathan R. Jessup (from A Few Good Men) going at it in the log file:

    Aug 30 19:33:20 ltm3 info sdmd[25253]: 018e0017:6: pid[35416] plugin[/Common/ILXLoggerTestRpcPlugin.ILXLoggerTestRpcExt] YOU CAN'T HANDLE THE TRUTH!
    Aug 30 19:33:20 ltm3 info tmm1[29992]: Rule /Common/ILXLoggerTestRpcPlugin/ilxtest : I WANT THE TRUTH!

It is interesting that the Node.js process dumps the log statement faster than Tcl, though that is not consistent and not guaranteed, as another run shows a different (and more appropriate according to the movie dialog) order:

    Aug 30 22:47:35 ltm3 info tmm1[29992]: Rule /Common/ILXLoggerTestRpcPlugin/ilxtest : I WANT THE TRUTH!
    Aug 30 22:47:35 ltm3 info sdmd[25253]: 018e0017:6: pid[35416] plugin[/Common/ILXLoggerTestRpcPlugin.ILXLoggerTestRpcExt] YOU CAN'T HANDLE THE TRUTH!

This is by no means a thorough detour into the possibilities of your log publisher options with iRules LX on version 13.1+, but hopefully this gets you started with greater logging flexibility and, if you are new to iRules LX, gives you some sample code to play with. Happy coding!

BIG-IP iRulesLX FakeADFS - WS-Federation/SAML11

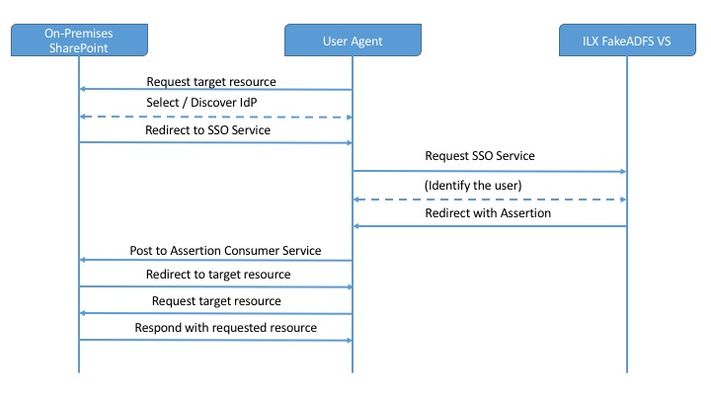

Details

This was created as a solution to replace the need for AD FS to tie APM into SharePoint. The goal was originally to demonstrate the flexibility of iRulesLX and also to find a way to add WS-Federation support very quickly. This solution is currently SP-initiated, but it wouldn't take much to handle IdP-initiated as well. An example of the process flow is detailed in the following image.

All that's needed for this solution is to create or import the iRulesLX workspace and configure SharePoint as if you were connecting to AD FS as a trusted Identity Provider, but pointing to a virtual server on the BIG-IP.

Instructions

First, ensure that you have a certificate/key for the IdP, as well as the CA chain/root CA. These do not have to be from the same domain CA that SharePoint lives on, if one exists. We are going to add these to SharePoint as trusted. On one of the SharePoint servers, open the SharePoint Management PowerShell and issue the following.

To import the trusted root CA that issued the signing cert:

    $root = New-Object System.Security.Cryptography.X509Certificates.X509Certificate2("")
    New-SPTrustedRootAuthority -Name "Token Signing Cert Parent" -Certificate $root

To import the trusted certificate:

    $cert = New-Object System.Security.Cryptography.X509Certificates.X509Certificate2("")

Next, create the claim mappings for SharePoint:

    $emailClaimMap = New-SPClaimTypeMapping -IncomingClaimType "http://schemas.xmlsoap.org/ws/2005/05/identity/claims/emailaddress" -IncomingClaimTypeDisplayName "EmailAddress" -SameAsIncoming
    $upnClaimMap = New-SPClaimTypeMapping -IncomingClaimType "http://schemas.xmlsoap.org/ws/2005/05/identity/claims/upn" -IncomingClaimTypeDisplayName "UPN" -SameAsIncoming
    $roleClaimMap = New-SPClaimTypeMapping -IncomingClaimType "http://schemas.microsoft.com/ws/2008/06/identity/claims/role" -IncomingClaimTypeDisplayName "Role" -SameAsIncoming
    $sidClaimMap = New-SPClaimTypeMapping -IncomingClaimType "http://schemas.microsoft.com/ws/2008/06/identity/claims/primarysid" -IncomingClaimTypeDisplayName "SID" -SameAsIncoming

Note: Additional claim options here.

Next, create the Trusted Identity Provider.

    $realm = "urn:sharepoint:"
    $signInURL = "https:///adfs/ls"
    $ap = New-SPTrustedIdentityTokenIssuer -Name (ProviderName) -Description (ProviderDescription) -realm $realm -ImportTrustCertificate $cert -ClaimsMappings $emailClaimMap,$upnClaimMap,$roleClaimMap,$sidClaimMap -SignInUrl $signInURL -IdentifierClaim $emailClaimmap.InputClaimType

To associate a SharePoint web application with the new Identity Provider

To configure an existing web application to use the FakeADFS identity provider:

1. Verify that the user account that is performing this procedure is a member of the Farm Administrators SharePoint group.
2. In Central Administration, on the home page, click Application Management.
3. On the Application Management page, in the Web Applications section, click Manage web applications.
4. Click the appropriate web application.
5. From the ribbon, click Authentication Providers.
6. Under Zone, click the name of the zone. For example, Default.
7. On the Edit Authentication page, in the Claims Authentication Types section, select Trusted Identity provider, and then click the name of your SAML provider (<ProviderName> from the New-SPTrustedIdentityTokenIssuer command).
8. Click OK.
9. Next, you must enable SSL for this web application. You can do this by adding an alternate access mapping for the “https://” version of the web application’s URL and then configuring the web site in the Internet Information Services (IIS) Manager console for an HTTPS binding.

APM Policy Flow

The current workflow works as follows:

1. A user opens a link to SharePoint.
2. SharePoint presents authentication options for the WebApp.
3. The user selects the authentication source (FakeADFS) and enters credentials, which then redirects the user to the virtual server configured with the FakeADFS iRulesLX solution.
4.
FakeADFS Generates a SAML11 assertion, then wraps it in the WS-Federation elements. <t:RequestSecurityTokenResponse xmlns:t="http://schemas.xmlsoap.org/ws/2005/02/trust"> <t:Lifetime> <wsu:Created xmlns:wsu="http://docs.oasis-open.org/wss/2004/01/oasis-200401-wss-wssecurity-utility-1.0.xsd">2016-12-20T13:56:01.349Z</wsu:Created> <wsu:Expires xmlns:wsu="http://docs.oasis-open.org/wss/2004/01/oasis-200401-wss-wssecurity-utility-1.0.xsd">2016-12-20T14:56:01.349Z</wsu:Expires> </t:Lifetime> <wsp:AppliesTo xmlns:wsp="http://schemas.xmlsoap.org/ws/2004/09/policy"> <wsa:EndpointReference xmlns:wsa="http://www.w3.org/2005/08/addressing"> <wsa:Address>urn:sharepoint:f5test</wsa:Address> </wsa:EndpointReference> </wsp:AppliesTo> <t:RequestedSecurityToken> <saml:Assertion xmlns:saml="urn:oasis:names:tc:SAML:1.0:assertion" MajorVersion="1" MinorVersion="1" AssertionID="_8Ed1lOeqLhnGTETTOn9BHJn72TZ8lE25" IssueInstant="2016-12-20T13:56:01.363Z" Issuer="http://fakeadfs.f5ttest.com/adfs/services/trust"> <saml:Conditions NotBefore="2016-12-20T13:56:01.363Z" NotOnOrAfter="2016-12-20T14:56:01.363Z"> <saml:AudienceRestrictionCondition> <saml:Audience>urn:sharepoint:f5test</saml:Audience> </saml:AudienceRestrictionCondition> </saml:Conditions> <saml:AttributeStatement> <saml:Subject> <saml:SubjectConfirmation> <saml:ConfirmationMethod>urn:oasis:names:tc:SAML:1.0:cm:bearer</saml:ConfirmationMethod> </saml:SubjectConfirmation> </saml:Subject> <saml:Attribute AttributeNamespace="http://schemas.xmlsoap.org/ws/2005/05/identity/claims" AttributeName="emailaddress"> <saml:AttributeValue>flip@f5test.com</saml:AttributeValue> </saml:Attribute> <saml:Attribute AttributeNamespace="http://schemas.xmlsoap.org/ws/2005/05/identity/claims" AttributeName="upn"> <saml:AttributeValue>0867530901@f5test.com</saml:AttributeValue> </saml:Attribute> </saml:AttributeStatement> <saml:AuthenticationStatement AuthenticationMethod="urn:oasis:names:tc:SAML:1.0:am:password" 
AuthenticationInstant="2016-12-20T14:56:01.363Z"> <saml:Subject> <saml:ConfirmationMethod>urn:oasis:names:tc:SAML:1.0:cm:bearer</saml:ConfirmationMethod> </saml:SubjectConfirmation> </saml:Subject> </saml:AuthenticationStatement> <Signature xmlns="http://www.w3.org/2000/09/xmldsig#"> <SignedInfo> <CanonicalizationMethod Algorithm="http://www.w3.org/2001/10/xml-exc-c14n#"/> <SignatureMethod Algorithm="http://www.w3.org/2001/04/xmldsig-more#rsa-sha256"/> <Reference URI="#_8Ed1lOeqLhnGTETTOn9BHJn72TZ8lE25"> <Transforms> <Transform Algorithm="http://www.w3.org/2000/09/xmldsig#enveloped-signature"/> <Transform Algorithm="http://www.w3.org/2001/10/xml-exc-c14n#"/> </Transforms> <DigestMethod Algorithm="http://www.w3.org/2001/04/xmlenc#sha256"/> <DigestValue>fqLP9yVFDNveaOWwyEVl2Bc9M6bEzKb7KMZ2x33VgUc=</DigestValue> </Reference> </SignedInfo> <SignatureValue>tSLsHUu5m1Mc7qNmdfa5daEK2+noAgbuZ5faGaXQw7qCPEvNihXFUjGuwT4qgeIWFsiFcinin 6Q42DwjRZBL1jcYpAYxP3WQFc+JvRlOaaWecklLmlLGBp9qjyzNzNhgT374T1YkgWJLTm4Jky7bW6HAMWJzT2vCpbSWLbtU=</SignatureValue> <KeyInfo> <X509Data> <X509Certificate>MIIGgjCCBGqgAwIBAgITFQAAAAVVlNqAr99awAAAAAAABTANBgkqhkiG9w0BAQsFADBJMRMwEQYKCZImiZ PyLGQBGRYDY29tMRUwEwYKCZImiZPyLGQBGRZjVsYWIxGzAZBgNVBAMTEkY1IExhYiBDQSAoMksxNlIyKTAeFw0xNjEyMTYxN TE1MjNaFw0xODEyMTYxNTE1MjNaMG8xCzAJBgNVBAYTAlVTMQswCQYDVQQIEwJQQTEUMBIGA1UEBxMLRG93bmluZ3Rvd24xDzAN BgNVBAoTBkY1IExhYjEPMA0GA1UECxMGRjUgTGFiMRswGQYDVQQDExJmYWtlYWRmcy5mNWxhYi5jb20wgZ8wDQYJKoZIhvcNAQE BBQADgY0AMIGJAoGBALgtr7ROiet3GPUg0yWa2dGPoirQ9dI8XiA7BsUwjTUG5yhAysKm0ZsCstKN92a2e8HxoHxiEZL39XzTxg 5+3fY4A8hWttlqkKoWutnUS3GpPhfoVdufr8bTcr/vhLPCkuy9GsiDqAMwuiX/B3r0EHqFk3utfL3KDxZ5V94ArwqJAgMBAAGjg gK/MIICuzAOBgNVHQ8BAf8EBAMCBaAwEwYDVR0AwwCgYIKwYBBQUHAwEweAYJKoZIhvcNAQkPBGswaTAOBggqhkiG9w0DAgIC AIAwDgYIKoZIhvcNAwQCAgCAMAsGCWCGSAFlAwQBKjALBglghkgBZQMEAS0wCwYJYIZIAWUDBAECMAsGCWCGSAFlAwQBBTAHBgU rDgMCBzAKBggqhkiG9w0DBzAdBgNVHQ4EFgQUL8AJuPouaekEIK8JuQAthnBS8wHwYDVR0jBBgwFoAUeyV8LWPBfaUCaLG/UR 
cYpOrjK48wgeMGA1UdHwSB2zCB2DCB1aCB0qCBz4aBzGxkYXA6Ly8vQ049RjUlMjBMYWIlMjBDQSUyMCEwMDI4MksxNlIyITAwM jksQ049V0lOMksxMlIyREMwMDEsQ049Q0RQLENOPVB1YmxpYyUyMEtleSUyMFNlcnZpY2VzLENOPVNlcnZpY2VzLENOPUNvbmZp Z3VyYXRpb24sREM9ZjVsYWrIsREM9Y29tP2NlcnRpZmljYXRl2b2NhdGlvbkxpc3Q/YmFzZT9vYmplY3RDbGFzcz1jUkxEaXN 0cmlidXRpb25Qb2ludDCB0AYIKwYBBQUHAQEEgcMwgcAwgb0GCCsGAQxUFBzAChoGwbGRhcDovLy9DTj1GNSUyMExhYiUyMENBJT IwITAwMjgySzE2UjIhMDAyOSxDTj1BSUEsQ049UHVibGljJTIwS2V5JTIwU2VydmljZXMsQ049U2VydmljZXMsQ049Q29uZmlnd XJhdGlvbixEQz1mNWxhYixEQz1jb20/Y0FDZXJ0aWZpY2F0ZT9iYXNlP29iamVjdENsYXNzPWNlcnRpZmljYXRpb25BdXRob3Jp dHkwIQYJKwYBBAGCNxQCBBQeEgBXAGUAYgBTAGUAcgB2AGUAcjANBgkqhkiG9w0BAQsFAAOCAgEAdom2Hvlw9MTKZbr6Ic3MLDR I10QGnflAq9w0/t6H8HN1jnEW8RTikIEpp2nOK7GknFq2161mJ4l5cRGroCyWsHN8to6VqhxqnESYHRyxwZDpS6a8JO4AYc111G fRWpB4nOIqs86aboUJDU+vRzrJHeuI1NzsI502i7fjlYqQVtE3FO2VIbekqx9zjHnliAX6l+VbDMFX8P8lP40U9rAIzHUPF+j3p 34i+4tPtv1/bwTco2EZE8hy2XvJ4xHXzpXYytchRhv+8glYNKK229vhML0micJfnCJQ3xaiJ2e08/GSVoBF9x4J+z4V+XS9aZSn P2+N3tZESVVBA8U4kk9u6syfmDc4+ryoIw5XGcBIyitaH7FbKbYyUHY0XuWPHx6FOWWnCe2kIA/Zfs9IDCP/z07egIJabLymLC vRhOMyd1s5lajnTFfoFaDd7LlL1ipz94OdhxJ5/Aga7sTEtFPbjnfcSZ8lFglQUOkaKuZt6D/LQ/TW6TyDqPC3RDCoaqkY4MXgnm P0dUk9ql1y2qFU2l692ZDZQPB4Tiaa3yXDKwDwCWITQ0YBvIiCcSWoMKXXea96Q2lB3R9n7v9Y6I7eSniZjGqlYYQ3Bdi3FMVz+ HGPeMOFq6HbzgnNtjFKwjqokUbwpwA7vZOQmFwEahEEaCPTpK25h4LSpLPtYa3fjfQ=</X509Certificate> </X509Data> </KeyInfo> </Signature> </saml:Assertion> </t:RequestedSecurityToken> <t:TokenType>urn:oasis:names:tc:SAML:1.0:assertion</t:TokenType> <t:RequestType>http://schemas.xmlsoap.org/ws/2005/02/trust/Issue</t:RequestType> <t:KeyType>http://schemas.xmlsoap.org/ws/2005/05/identity/NoProofKey</t:KeyType> </t:RequestSecurityTokenResponse> 5. The results are injected into a form embedded in an HTTP::respond 200 content with javascript that will automatically POST the form data to SharePoint. SharePoint accepts the assertion from its Trusted Identity Provider. 
The current solution pulls attributes from LDAP, but this isn't required; you can basically enter anything as the username and SharePoint accepts it without question.

iRule/TCL Portion

    when HTTP_REQUEST {
        # Wctx: This is some session data that the application wants sent back to
        # it after the user authenticates.
        set wctx [URI::decode [URI::query [HTTP::uri] wctx]]

        # wa=wsignin1.0: This tells the ADFS server to invoke a login for the user.
        set wa [URI::decode [URI::query [HTTP::uri] wa]]

        # Wtrealm: This tells ADFS what application I was trying to get to.
        # This has to match the identifier of one of the relying party trusts
        # listed in ADFS. wtrealm is used in the Node.JS side, but we don't need it
        # here.

        # Kept getting errors from APM, this fixed it.
        node 127.0.0.1

        # Make sure that the user has authenticated and APM has created a session.
        if {[HTTP::cookie exists MRHSession]} {
            #log local0. "Generate POST form and Autopost "

            # tmpresponse is the WS-Fed Assertion data, unencoded, so straight XML
            set tmpresponse [ACCESS::session data get session.custom.idam.response]

            # This was the pain to figure out. The assertion has to be POSTed to
            # SharePoint, this was the easiest way to solve that issue. Set timeout
            # to half a second, but can be adjusted as needed.
            set htmltop "<html><script type='text/javascript'>window.onload=function(){ window.setTimeout(document.wsFedAuth.submit.bind(document.wsFedAuth), 500);};</script><body>"
            set htmlform "<form name='wsFedAuth' method=POST action='https://sharepoint.f5test.com/_trust/'><input type=hidden name=wa value=$wa><input type=hidden name=wresult value='$tmpresponse'><input type=hidden name=wctx value=$wctx><input type='submit' value='Continue'></form>"
            set htmlbottom "</body></html>"
            set page "$htmltop $htmlform $htmlbottom"
            HTTP::respond 200 content $page
        }
    }

    when ACCESS_POLICY_AGENT_EVENT {
        # Create the ILX RPC Handler
        set fakeadfs_handle [ILX::init fakeadfs_extension]

        # Payload is just the incoming Querystring
        set payload [ACCESS::session data get session.server.landinguri]

        # Currently, the mapped attributes are Email & UPN. In some environments
        # these may match; in my use case they do not, so there is an LDAP AAA
        # which is queried based on the logon name (email), and the UPN is retrieved
        # from LDAP.
        set AttrUserName [ACCESS::session data get session.logon.last.username]
        set AttrUserPrin [ACCESS::session data get session.ldap.last.attr.userPrincipalName ]

        # Current solution uses Node.JS SAML module and can support SAML11, as well
        # as SAML20. The APM policy calls the iRule event ADFS, which generates the token
        # based on the submitted QueryString and the logon attributes.
        switch [ACCESS::policy agent_id] {
            "ADFS" {
                log local0. "Received Process request for FakeADFS, $payload"
                set fakeadfs_response [ILX::call $fakeadfs_handle Generate-WSFedToken $payload $AttrUserName $AttrUserPrin]
                ACCESS::session data set session.custom.idam.response $fakeadfs_response
            }
        }
    }

    # This may or may not be needed, they aren't populated with actual values, but
    # have not tested WITHOUT yet.
    #
    # MSISAuth and MSISAuth1 are the encrypted cookies used to validate the SAML
    # assertion produced for the client. These are what we call the "authentication
    # cookies", and you will see these cookies ONLY when AD FS 2.0 is the IDP.
    # Without these, the client will not experience SSO when AD FS 2.0 is the IDP.
    #
    # MSISAuthenticated contains a base64-encoded timestamp value for when the client
    # was authenticated. You will see this cookie set whether AD FS 2.0 is the IDP
    # or not.
    #
    # MSISSignout is used to keep track of the IDP and all RPs visited for the SSO
    # session. This cookie is utilized when a WS-Federation sign-out is invoked.
    # You can see the contents of this cookie using a base64 decoder.
    #
    # MSISLoopDetectionCookie is used by the AD FS 2.0 infinite loop detection
    # mechanism to stop clients who have ended up in an infinite redirection loop
    # to the Federation Server. For example, if an RP is having an issue where it
    # cannot consume the SAML assertion from AD FS, the RP may continuously redirect
    # the client to the AD FS 2.0 server. When the redirect loop hits a certain
    # threshold, AD FS 2.0 uses this cookie to detect that threshold being met,
    # and will throw an exception which lands the user on the AD FS 2.0 error page
    # rather than leaving them in the loop. The cookie data is a timestamp that is
    # base64 encoded.
    when ACCESS_ACL_ALLOWED {
        HTTP::cookie insert name "MSISAuth" value "ABCD" path "/adfs"
        HTTP::cookie insert name "MSISSignOut" value "ABCD" path "/adfs"
        HTTP::cookie insert name "MSISAuthenticated" value "ABCD" path "/adfs"
        HTTP::cookie insert name "MSISLoopDetectionCookie" value "ABCD" path "/adfs"
    }

iRulesLX / Node.JS Portion

If you are starting from scratch, the npm install xxx --save option below is required. If you are importing the workspace or the GitHub solution, npm update will pull in the latest version of all the referenced modules.

    /* Import the f5-nodejs module. */
    var f5 = require('f5-nodejs');

    /* Import the additional Node.JS Modules
       npm install saml
       npm install querystring
       npm install fs
       npm install moment
       npm install https
    */
    var saml11 = require('saml').Saml11;
    var queryString = require('querystring');
    var fs = require('fs');
    var moment = require('moment');
    var https = require('https');

    /* timeout is the length of time for the assertion validity
       wsfedIssuer is, believe it or not, the Issuer
       SigningCert, SigningKey are the required certificate and key pair for
       signing the assertion and specifically the DigestValue.
    */
    var timeout = 3600;
    var wsfedIssuer = "http://fakeadfs.f5test.com/adfs/services/trust";
    var SigningCert = "/fakeadfs.f5test.com.crt";
    var SigningKey = "/fakeadfs.f5test.com.key";

    /* Create a new rpc server for listening to TCL iRule calls. */
    var ilx = new f5.ILXServer();

    ilx.addMethod('Generate-WSFedToken', function(req,res) {
        /* Extract the ILX parameters to add to the Assertion data
           req.params()[0] is the first passed argument
           req.params()[1] is the second passed argument, and so on.
        */
        var query = queryString.unescape(req.params()[0]);
        var queryOptions = queryString.parse(query);
        var AttrUserName = req.params()[1];
        var AttrUserPrincipal = req.params()[2];
        var wa = queryOptions.wa;
        var wtrealm = queryOptions.wtrealm;
        var wctx = queryOptions.wctx;

        /* This is where the WS-Fed gibberish is assembled. Moment is required to
           insert the properly formatted time stamps. */
        var now = moment.utc();
        var wsfed_wrapper_head = "<t:RequestSecurityTokenResponse xmlns:t=\"http://schemas.xmlsoap.org/ws/2005/02/trust\">";
        wsfed_wrapper_head += "<t:Lifetime><wsu:Created xmlns:wsu=\"http://docs.oasis-open.org/wss/2004/01/oasis-200401-wss-wssecurity-utility-1.0.xsd\">" + now.format('YYYY-MM-DDTHH:mm:ss.SSS[Z]') +"</wsu:Created>";
        wsfed_wrapper_head += "<wsu:Expires xmlns:wsu=\"http://docs.oasis-open.org/wss/2004/01/oasis-200401-wss-wssecurity-utility-1.0.xsd\">" + now.add(timeout, 'seconds').format('YYYY-MM-DDTHH:mm:ss.SSS[Z]') + "</wsu:Expires>";
        wsfed_wrapper_head += "</t:Lifetime><wsp:AppliesTo xmlns:wsp=\"http://schemas.xmlsoap.org/ws/2004/09/policy\"><wsa:EndpointReference xmlns:wsa=\"http://www.w3.org/2005/08/addressing\">";
        wsfed_wrapper_head += "<wsa:Address>" + wtrealm + "</wsa:Address>";
        wsfed_wrapper_head += "</wsa:EndpointReference></wsp:AppliesTo><t:RequestedSecurityToken>";

        /* Generate and insert the SAML11 Assertion. These attributes are configured
           previously in the code.
           cert: this is the cert used for signing
           key: this is the key used for the cert
           issuer: the assertion issuer
           lifetimeInSeconds: timeout
           audiences: this is the application ID for sharepoint, urn:sharepoint:webapp
           attributes: these should map to the mappings created for the IDP in SharePoint
        */
        var saml11_options = {
            cert: fs.readFileSync(__dirname + SigningCert),
            key: fs.readFileSync(__dirname + SigningKey),
            issuer: wsfedIssuer,
            lifetimeInSeconds: timeout,
            audiences: wtrealm,
            attributes: {
                'http://schemas.xmlsoap.org/ws/2005/05/identity/claims/emailaddress': AttrUserName ,
                'http://schemas.xmlsoap.org/ws/2005/05/identity/claims/upn': AttrUserPrincipal
            }
        };

        /* Sign the Assertion */
        var signedAssertion = saml11.create(saml11_options);

        /* Add the WS-Fed footer */
        var wsfed_wrapper_foot = "</t:RequestedSecurityToken><t:TokenType>urn:oasis:names:tc:SAML:1.0:assertion</t:TokenType><t:RequestType>http://schemas.xmlsoap.org/ws/2005/02/trust/Issue</t:RequestType><t:KeyType>http://schemas.xmlsoap.org/ws/2005/05/identity/NoProofKey</t:KeyType></t:RequestSecurityTokenResponse>";

        /* Put them all together */
        var wresult = wsfed_wrapper_head + signedAssertion + wsfed_wrapper_foot;

        /* respond back to TCL with the complete assertion */
        res.reply(wresult);
    });

    /* Start listening for ILX::call and ILX::notify events. */
    ilx.listen();

And that's it. You can now get away with WS-Fed and SAML1.1 assertions with a quick import to your BIG-IP.

APM Cookbook: Modify LDAP Attribute Values using iRulesLX

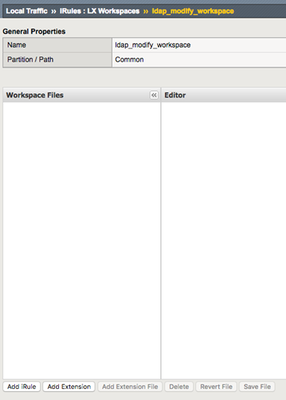

Introduction

Access Policy Manager (APM) does not have the ability to modify LDAP attribute values using the native features of the product. In the past I’ve used some creative, unsupported solutions to modify LDAP attribute values, but with the release of BIG-IP 12.1 and iRulesLX, you can now modify LDAP attribute values in a safe and supported manner.

Before we get started: I have a pre-configured Active Directory 2012 R2 server, which I will be using as my LDAP server, with an IP address of 10.1.30.101. My BIG-IP is running TMOS 12.1 and the iRules Language eXtension has been licensed and provisioned. Make sure your BIG-IP has internet access to download the required Node.JS packages. For this solution I’ve opted to use the ldapjs package.

The iRulesLX requires the following session variables to be set for the LDAP Modify to execute:

Distinguished Name (DN): session.ad.last.attr.dn
Attribute Name (e.g. carLicense): session.ldap.modify.attribute
Attribute Value: session.ldap.modify.value

This guide also assumes you have a basic level of understanding and troubleshooting at a Local Traffic Manager (LTM) level and that your BIG-IP Self IPs, VLANs, Routes, etc. are all configured and working as expected.

Step 1 – iRule and iRulesLX Configuration

1.1 Create a new iRulesLX workspace

Local Traffic >> iRules >> LX Workspaces >> “Create”

Supply the following:

Name: ldap_modify_workspace

Select “Finished” to save. You will now have an empty workspace, ready to cut/paste the TCL iRule and Node.JS code.

1.2 Add the iRule

Select “Add iRule” and supply the following:

Name: ldap_modify_apm_event

Select OK. Cut/paste the following iRule into the workspace editor on the right-hand side. Select “Save File” to save.

    # Author: Brett Smith @f5
    when RULE_INIT {
        # Debug logging control.
        # 0 = debug logging off, 1 = debug logging on.
        set static::ldap_debug 0
    }

    when ACCESS_POLICY_AGENT_EVENT {
        if { [ACCESS::policy agent_id] eq "ldap_modify" } {
            # Get the APM session data
            set dn [ACCESS::session data get session.ad.last.attr.dn]
            set ldap_attribute [ACCESS::session data get session.ldap.modify.attribute]
            set ldap_value [ACCESS::session data get session.ldap.modify.value]

            # Basic Error Handling - Don't execute Node.JS if LDAP attribute name or value is null
            if { (([string trim $ldap_attribute] eq "") or ([string trim $ldap_value] eq "")) } {
                ACCESS::session data set session.ldap.modify.result 255
            } else {
                # Initialise the iRulesLX extension
                set rpc_handle [ILX::init ldap_modify_extension]
                if { $static::ldap_debug == 1 } { log local0. "rpc_handle: $rpc_handle" }

                # Pass the LDAP Attribute and Value to Node.JS and save the iRulesLX response
                set rpc_response [ILX::call $rpc_handle ldap_modify $dn $ldap_attribute $ldap_value]
                if { $static::ldap_debug == 1 } { log local0. "rpc_response: $rpc_response" }
                ACCESS::session data set session.ldap.modify.result $rpc_response
            }
        }
    }

1.3 Add an Extension

Select “Add extension” and supply the following:

Name: ldap_modify_extension

Select OK. Cut/paste the following Node.JS code to replace the default index.js. Select “Save File” to save.

    // Author: Brett Smith @f5
    // index.js for ldap_modify_apm_events

    // Debug logging control.
    // 0 = debug off, 1 = debug level 1, 2 = debug level 2
    var debug = 1;

    // Includes
    var f5 = require('f5-nodejs');
    var ldap = require('ldapjs');

    // Create a new rpc server for listening to TCL iRule calls.
    var ilx = new f5.ILXServer();

    // Start listening for ILX::call and ILX::notify events.
    ilx.listen();

    // Unbind LDAP Connection
    function ldap_unbind(client){
        client.unbind(function(err) {
            if (err) {
                if (debug >= 1) { console.log('Error Unbinding.'); }
            } else {
                if (debug >= 1) { console.log('Unbind Successful.'); }
            }
        });
    }

    // LDAP Modify method, requires DN, LDAP Attribute Name and Value
    ilx.addMethod('ldap_modify', function(ldap_data, response) {

        // LDAP Server Settings
        var bind_url = 'ldaps://10.1.30.101:636';
        var bind_dn = 'CN=LDAP Admin,CN=Users,DC=f5,DC=demo';
        var bind_pw = 'Password123';

        // DN, LDAP Attribute Name and Value from iRule
        var ldap_dn = ldap_data.params()[0];
        var ldap_attribute = ldap_data.params()[1];
        var ldap_value = ldap_data.params()[2];

        if (debug >= 2) { console.log('dn: ' + ldap_dn + ',attr: ' + ldap_attribute + ',val: ' + ldap_value); }

        var ldap_modification = {};
        ldap_modification[ldap_attribute] = ldap_value;

        var ldap_change = new ldap.Change({
            operation: 'replace',
            modification: ldap_modification
        });

        if (debug >= 1) { console.log('Creating LDAP Client.'); }

        // Create LDAP Client
        var ldap_client = ldap.createClient({
            url: bind_url,
            tlsOptions: { 'rejectUnauthorized': false } // Ignore Invalid Certificate - Self Signed etc..
        });

        // Bind to the LDAP Server
        ldap_client.bind(bind_dn, bind_pw, function(err) {
            if (err) {
                if (debug >= 1) { console.log('Error Binding to: ' + bind_url); }
                response.reply('1'); // Bind Failed
                return;
            } else {
                if (debug >= 1) { console.log('LDAP Bind Successful.'); }

                // LDAP Modify
                ldap_client.modify(ldap_dn, ldap_change, function(err) {
                    if (err) {
                        if (debug >= 1) { console.log('LDAP Modify Failed.'); }
                        ldap_unbind(ldap_client);
                        response.reply('2'); // Modify Failed
                    } else {
                        if (debug >= 1) { console.log('LDAP Modify Successful.'); }
                        ldap_unbind(ldap_client);
                        response.reply('0'); // No Error
                    }
                });
            }
        });
    });

You will need to modify the bind_url, bind_dn, and bind_pw variables to match your LDAP server settings.
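The iRule stores whatever the extension replies in session.ldap.modify.result, so it can help to keep the meaning of each value in one place. The following is a minimal sketch and not part of the original solution: the helper name describeLdapModifyResult and the lookup table are hypothetical, and the codes are only those visible in the code above (0, 1 and 2 returned by the extension, and 255 set by the iRule when the attribute name or value is empty).

```javascript
// Hypothetical helper (not part of the original extension): maps the result
// codes used in this article to human-readable descriptions.
var LDAP_MODIFY_RESULTS = {
    '0': 'No error: LDAP modify succeeded',
    '1': 'Bind to the LDAP server failed',
    '2': 'LDAP modify failed',
    '255': 'Attribute name or value was empty; extension was not called'
};

// Accepts the code as a string or number and returns a description.
// Unknown codes are reported rather than silently ignored.
function describeLdapModifyResult(code) {
    var key = String(code).trim();
    if (Object.prototype.hasOwnProperty.call(LDAP_MODIFY_RESULTS, key)) {
        return LDAP_MODIFY_RESULTS[key];
    }
    return 'Unknown result code: ' + key;
}

console.log(describeLdapModifyResult('0'));
console.log(describeLdapModifyResult(255));
```

A table like this could be used when logging from the extension or when deciding which message to show on an APM ending page; where it lives is a design choice left to you.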
1.4 Install the ldapjs package

SSH to the BIG-IP as root and run:

cd /var/ilx/workspaces/Common/ldap_modify_workspace/extensions/ldap_modify_extension
npm install ldapjs -save

You should expect the following output from the above command:

[root@big-ip1:Active:Standalone] ldap_modify_extension # npm install ldapjs -save
ldapjs@1.0.0 node_modules/ldapjs
├── assert-plus@0.1.5
├── dashdash@1.10.1
├── asn1@0.2.3
├── ldap-filter@0.2.2
├── once@1.3.2 (wrappy@1.0.2)
├── vasync@1.6.3
├── backoff@2.4.1 (precond@0.2.3)
├── verror@1.6.0 (extsprintf@1.2.0)
├── dtrace-provider@0.6.0 (nan@2.4.0)
└── bunyan@1.5.1 (safe-json-stringify@1.0.3, mv@2.1.1)

1.5 Create a new iRulesLX plugin

Local Traffic >> iRules >> LX Plugin >> “Create”

Supply the following:

Name: ldap_modify_plugin
From Workspace: ldap_modify_workspace

Select “Finished” to save.

If you look in /var/log/ltm, you will see the extension start a process per TMM for the iRulesLX plugin.

big-ip1 info sdmd[6415]: 018e000b:6: Extension /Common/ldap_modify_plugin:ldap_modify_extension started, pid:24396
big-ip1 info sdmd[6415]: 018e000b:6: Extension /Common/ldap_modify_plugin:ldap_modify_extension started, pid:24397
big-ip1 info sdmd[6415]: 018e000b:6: Extension /Common/ldap_modify_plugin:ldap_modify_extension started, pid:24398
big-ip1 info sdmd[6415]: 018e000b:6: Extension /Common/ldap_modify_plugin:ldap_modify_extension started, pid:24399

Step 2 – Create a test Access Policy

2.1 Create an Access Profile and Policy

We can now bring it all together using the Visual Policy Editor (VPE). In this test example, I will not be using a password just for simplicity.

Access Policy >> Access Profiles >> Access Profile List >> “Create”

Supply the following:

Name: ldap_modify_ap
Profile Type: LTM-APM
Profile Scope: Profile
Languages: English (en)

Use the default settings for all other settings. Select “Finished” to save.

2.2 Edit the Access Policy in the VPE

Access Policy >> Access Profiles >> Access Profile List >> “Edit” (ldap_modify_ap)

On the fallback branch after the Start object, add a Logon Page object.

Change the second field to:

Type: text
Post Variable Name: attribute
Session Variable Name: attribute
Read Only: No

Add a third field:

Type: text
Post Variable Name: value
Session Variable Name: value
Read Only: No

In the “Customization” section further down the page, set the “Form Header Text” to whatever you like and change “Logon Page Input Field #2” and “Logon Page Input Field #3” to something meaningful; see my example below for inspiration. Leave the “Branch Rules” as the default. Don’t forget to “Save”.

On the fallback branch after the Logon Page object, add an AD Query object. This step verifies the username against Active Directory/LDAP, returns the Distinguished Name (DN), and stores the value in session.ad.last.attr.dn, which will be used by the iRulesLX.

Supply the following:

Server: Select your LDAP or AD Server
SearchFilter: sAMAccountName=%{session.logon.last.username}

Select Add new entry:

Required Attributes: dn

Under Branch Rules, delete the default and add a new one by selecting Add Branch Rule. Update the Branch Rule settings:

Name: AD Query Passed
Expression (Advanced): expr { [mcget {session.ad.last.queryresult}] == 1 }

Select “Finished”, then “Save” when you're done.

On the AD Query Passed branch after the AD Query object, add a Variable Assign object. This step assigns the Attribute Name entered on the Logon Page to session.ldap.modify.attribute and the Attribute Value entered on the Logon Page to session.ldap.modify.value.

Supply the following:

Name: Assign LDAP Variables

Add the Variable assignments by selecting Add new entry >> change.

Variable Assign 1:

Custom Variable (Unsecure): session.ldap.modify.attribute
Session Variable: session.logon.last.attribute

Variable Assign 2:

Custom Variable (Secure): session.ldap.modify.value
Session Variable: session.logon.last.value

Select “Finished”, then “Save” when you're done. Leave the “Branch Rules” as the default.

On the fallback branch after the Assign LDAP Variables object, add an iRule object.

Supply the following:

Name: LDAP Modify
ID: ldap_modify

Under Branch Rules, add a new one by selecting Add Branch Rule. Update the Branch Rule settings:

Name: LDAP Modify Successful
Expression (Advanced): expr { [mcget {session.ldap.modify.result}] == "0" }

Select “Finished”, then “Save” when you're done.

The finished policy should look similar to this:

As this is just a test policy I used to test my Node.JS and to show how the LDAP Modify works, I will not have a pool member attached to the virtual server; I have just left the branch endings as Deny. In a real-world scenario, you would not allow a user to change arbitrary LDAP attributes and values.

Apply the test Access Policy (ldap_modify_ap) to an HTTPS virtual server for testing, and add the iRulesLX under the Resources section.

Step 3 - OK, let’s give this a test!

To test, just open a browser to the HTTPS virtual server you created, and supply a Username, Attribute and Value to be modified. In my example, I want to change the value of the carLicense attribute to test456.

Before hitting the Logon button, I ran an ldapsearch from the command line of the BIG-IP:

ldapsearch -x -h 10.1.30.101 -D "cn=LDAP Admin,cn=users,dc=f5,dc=demo" -b "dc=f5,dc=demo" -w 'Password123' '(sAMAccountName=test.user)' | grep carLicense
carLicense: abc123

After submitting, I ran the same ldapsearch and the carLicense value had changed. It works!

ldapsearch -x -h 10.1.30.101 -D "cn=LDAP Admin,cn=users,dc=f5,dc=demo" -b "dc=f5,dc=demo" -w 'Password123' '(sAMAccountName=test.user)' | grep carLicense
carLicense: test456

Below is some basic debug log output from the Node.JS:

big-ip1 info sdmd[6415]: 018e0017:6: pid[24399] plugin[/Common/ldap_modify_plugin.ldap_modify_extension] Creating LDAP Client.
big-ip1 info sdmd[6415]: 018e0017:6: pid[24399] plugin[/Common/ldap_modify_plugin.ldap_modify_extension] LDAP Bind Successful.
big-ip1 info sdmd[6415]: 018e0017:6: pid[24399] plugin[/Common/ldap_modify_plugin.ldap_modify_extension] LDAP Modify Successful.
big-ip1 info sdmd[6415]: 018e0017:6: pid[24399] plugin[/Common/ldap_modify_plugin.ldap_modify_extension] Unbind Successful.

Conclusion

You can now modify LDAP attribute values in a safe and supported manner with iRulesLX. Think of the possibilities!

For an added bonus, you can add additional branch rules to the iRule Event - LDAP Modify, as the Node.JS returns the following error codes:

1 - LDAP Bind Failed
2 - LDAP Modify Failed

I would also recommend using Macros.

Please note, this is my own work and has not been formally tested by F5 Networks.

Configuring Smart Card Authentication to the BIG-IP Traffic Management User Interface (TMUI) using F5's Privileged User Access Solution

As promised in my last article, which discussed configuring the BIG-IP as an SSH Jump Server using smart card authentication, I wanted to continue the discussion of F5's privileged user access with additional use cases. The first follow-on article is really dedicated to all those customers who ask, "how do I use a smart card to authenticate to the BIG-IP TMUI?" While yes, I did provide a guide on how to do this natively, I'm here to tell you I think this is a bit easier, but don't take my word for it. Try them both! To avoid duplicating content, I am going to begin with the final configuration deployed in the previous article, which has been published at https://devcentral.f5.com/s/articles/configuring-the-big-ip-as-an-ssh-jump-server-using-smart-card-authentication-and-webssh-client-31586. If you have not completed that guide, please do so prior to continuing with the Traffic Management User Interface (TMUI). With that, let's begin.

Prerequisites

LTM Licensed and Provisioned
APM Licensed and Provisioned
iRulesLX Provisioned
8 GB of Memory
Completed the PUA deployment based on my previous guide: https://devcentral.f5.com/s/articles/configuring-the-big-ip-as-an-ssh-jump-server-using-smart-card-authentication-and-webssh-client-31586

Create a Virtual Server and BIG-IP Pool

Now you may be asking yourself, why would I need this? Well, if any of you have attempted this in the past, you will have noticed that you receive an ACL error when trying to access the management IP directly from a portal access resource. Because of this, we will need to complete this step and point our portal access resource to the IP of our virtual server.

Navigate to Local Traffic >> Virtual Servers >> Click Create

Name: BIG-IPMgmtInt
Destination Address: This IP is arbitrary, select anything
Service Port: 443
SSL Profile (Client): clientssl
SSL Profile (Server): serverssl
Source Address Translation: Automap

Scroll until you reach the Default Pool option and click the + button to create a new pool.

Name: BIG-IP
Health Monitors: HTTP
Address: Management IP address
Service Port: 443

Click Add and Finished. The pool should be selected for you after creation. Click Finished.

Creating a Single Sign On Profile for TMUI

Navigate to Access >> Single Sign On >> Forms Based >> Click Create

Name: f5mgmtgui_SSO
SSO Template: None
Headers Name: Referer
Headers Value: https://IPofBIGIPVS/tmui/login.jsp
Username Source: session.logon.last.username
Password Source: session.custom.ephemeral.last.password
Start URI: /tmui/login.jsp
Form Action: /tmui/logmein.html
Form Parameter For User Name: username
Form Parameter For Password: passwd

Click Finished.

Creating a Portal Access List and Resource

Navigate to Access >> Connectivity / VPN >> Portal Access >> Portal Access Lists >> Click Create

Name: BIG-IPMgmtIntPA
Link Type: Application URI
Application URI: https://IPofBIGIPVS/tmui/login.jsp

Click Create.

After the Portal Access List is created, click the Add button to add a resource.

Link Type: Paths
Destination Type: IP
Destination IP Address: IP of the BIG-IP virtual server
Paths: /*
Scheme: https
Port: 443
SSO Configuration: Select the SSO profile created previously in this article

Click Finished.

Assign the new Portal Access Resource

Navigate to Access >> Profiles / Policies >> Click the Edit button in the row of the PUA Policy created using the previous guide.
From the Admin Access Macro, click Advanced Resource Assign.
Click the Add / Delete button on the Resource Assignment page.
Select the Portal Access tab and place a check mark next to the portal access resource created in the previous steps.
Click Update.
Click Apply Access Policy.

Validation Testing

From a web browser, navigate to webtop.demo.lab.
Click OK, Proceed to Application.
Select your user certificate when prompted and click OK.
From the Webtop, select the portal access resource you created in previous steps.

If successful, you will be redirected to the BIG-IP TMUI as shown below.

Now you have successfully configured SSO to the BIG-IP TMUI using forms-based authentication. I'm sure many of you are wondering how it is possible to perform forms-based authentication when I provided no password in this entire article. This is possible because the F5 PUA solution can generate a one-time password on behalf of the user and present it to the application. Thanks for following, and I will continue with additional use cases and capabilities of the F5 BIG-IP.

Appendix

If for any reason you attempt to log out of TMUI and are logged back in immediately, it is likely because of middleware you have in place on your workstation. No need to worry, though: there's an iRule for that! Simply add the following iRule to the Webtop virtual server and you will be good to go.

when HTTP_REQUEST {
    #log local0. "[HTTP::uri]"
    switch -glob [HTTP::uri] {
        "*/tmui/logout.html" {
            HTTP::respond 200 content "Logged Out. Close Browser."
        }
        default { }
    }
}

Creating iRules LX via iControl REST