F5 BIG-IP as a Terminating Gateway for HashiCorp Consul

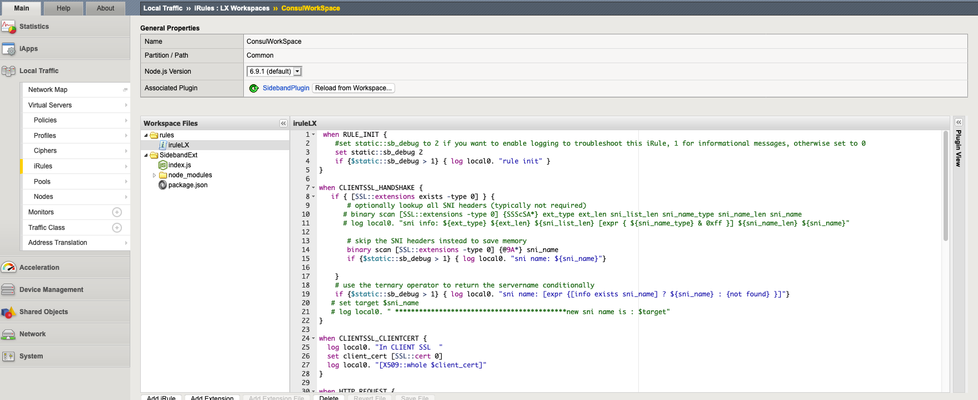

Our joint customers have asked for it, so the HashiCorp Consul team and the F5 BIG-IP teams have been working to provide this early look at the technology and configurations needed to have BIG-IP perform the Terminating Gateway functionality for HashiCorp Consul.

A Bit of an Introduction

HashiCorp Consul is a multi-cloud platform used to secure service-to-service communication. You have all heard about microservices and how, within an environment like Consul, there is a need to secure and control microservice-to-microservice communications. But what happens when you want a similar level of security and control when a microservice inside the environment needs to communicate with a service outside of the environment? Enter F5 BIG-IP, the Enterprise Terminating Gateway solution for HashiCorp. HashiCorp has announced the GA of their Terminating Gateway functionality, and we here at F5 want to show our support for this milestone by showing the progress we have made to date in answering the requests of our joint customers. One of the requirements of a Terminating Gateway is that it must respect the security policies defined within Consul. HashiCorp calls these policies Intentions.

What Should You Get Out of this Article

This article is focused on how BIG-IP, when acting as a Terminating Gateway, can understand those Intentions and securely apply them to either allow or disallow microservice-to-microservice communications.

Update

We have been hard at work on this solution, and have created a method to automate the manual processes that I had detailed below. You can skip executing the steps below and jump directly to the new DevCentral Git repository for this solution. Feel free to read the below to get an understanding of the workflows we have automated using the code in the new DevCentral repo.
And you can also check out this webinar to hear more about the solution and to see the developer, Shaun Empie, demo the automation of the solution.

First Steps

Before we dive into the iRulesLX that makes this possible, one must configure the BIG-IP Virtual Server to secure the connectivity with mTLS, and configure the pool, profiles, and other configuration options necessary for one's environment. Many here on DevCentral have shown how F5 can perform mTLS with various solutions. Eric Chen has shown how to configure the BIG-IP to use mTLS with Slack. What I want to focus on is how to use an iRuleLX to extract the info necessary to respect the HashiCorp Intentions and allow or disallow a connection based on the HashiCorp Consul Intention.

I have to give credit where credit is due. Sanjay Shitole is the one behind the scenes here at F5, along with Dan Callao and Blake Covarrubias from HashiCorp, who have worked through the various API touch points and designed the workflow and the F5-specific iRules and iRulesLX needed to make this function.

Now for the Fun Part

Once you get your Virtual Server and Pool created the way you would like them with the mTLS certificates etc., you can focus on creating the iLX Workspace where you will write the node.js code and iRules. You can follow the instructions here to create the iLX workspace, add an extension, and an LX plugin.

Below is the tcl-based iRule that you will have to add to this workspace. To do this, go to Local Traffic > iRules > LX Workspaces and find the workspace you created in the steps above. In our example, we used "ConsulWorkSpace". Paste the text of the rule listed below into the text editor and click save file. There is one variable (sb_debug) you can change in this file depending on the level of logging you want done to the /var/log/ltm logs.

The rest of the iRule grabs the full SNI value from the handshake. This will be parsed later on in the node.js code to populate one of the variables needed for checking the intention of this connection in Consul. The next section grabs the certificate and stores it as a variable so we can later extract the serial_id and the spiffe, which are the other two variables needed to check the Consul Intention. The next step in the iRule is to pass these three variables via an RPC_HANDLE function to the Node.js code we will discuss below. The last section uses that same RPC_HANDLE to get responses back from the node code and either allows or disallows the connection based on the value of the Consul Intention.

    when RULE_INIT {
        # Set static::sb_debug to 2 if you want to enable logging to troubleshoot this iRule,
        # 1 for informational messages, otherwise set to 0
        set static::sb_debug 0
        if {$static::sb_debug > 1} { log local0. "rule init" }
    }

    when CLIENTSSL_HANDSHAKE {
        if { [SSL::extensions exists -type 0] } {
            binary scan [SSL::extensions -type 0] {@9A*} sni_name
            if {$static::sb_debug > 1} { log local0. "sni name: ${sni_name}" }
        }
        # use the ternary operator to return the servername conditionally
        if {$static::sb_debug > 1} { log local0. "sni name: [expr {[info exists sni_name] ? ${sni_name} : {not found} }]" }
    }

    when CLIENTSSL_CLIENTCERT {
        if {$static::sb_debug > 1} { log local0. "In CLIENTSSL_CLIENTCERT" }
        set client_cert [SSL::cert 0]
    }

    when HTTP_REQUEST {
        set serial_id ""
        set spiffe ""
        set log_prefix "[IP::remote_addr]:[TCP::remote_port clientside] [IP::local_addr]:[TCP::local_port clientside]"
        if { [SSL::cert count] > 0 } {
            HTTP::header insert "X-ENV-SSL_CLIENT_CERTIFICATE" [X509::whole [SSL::cert 0]]
            set spiffe [findstr [X509::extensions [SSL::cert 0]] "Subject Alternative Name" 39 ","]
            if {$static::sb_debug > 1} { log local0. "<$log_prefix>: SAN: $spiffe" }
            set serial_id [X509::serial_number $client_cert]
            if {$static::sb_debug > 1} { log local0. "<$log_prefix>: Serial_ID: $serial_id" }
        }
        if {$static::sb_debug > 1} { log local0.info "here is spiffe:$spiffe" }

        set RPC_HANDLE [ILX::init "SidebandPlugin" "SidebandExt"]
        if {[catch {ILX::call $RPC_HANDLE "func" $sni_name $spiffe $serial_id} result]} {
            if {$static::sb_debug > 1} { log local0.error "Client - [IP::client_addr], ILX failure: $result" }
            HTTP::respond 500 content "Internal server error: Backend server did not respond."
            return
        }

        ## return proxy result
        if { $result eq 1 } {
            if {$static::sb_debug > 1} { log local0. "Is the connection authorized: $result" }
        } else {
            if {$static::sb_debug > 1} { log local0. "Connection is not authorized: $result" }
            HTTP::respond 400 content {{"status":"Not_Authorized"}} "Content-Type" "application/json"
        }
    }

Next, copy the text of the node.js code below and paste it into the index.js file using the GUI. Here, though, there are two lines you will have to edit that are unique to your environment. Those two lines are the hostname and the port in the "const options =" section. These values will be the IP and port on which your Consul Server is listening for API calls.

This node.js code takes the three values the tcl-based iRule passed to it and does some regex magic on the sni_name value to get the target variable that is used to check the Consul Intention. It does this by crafting an API call to the Consul server API endpoint that includes the Target, the ClientCertURI, and the ClientCertSerial values. The Consul Server responds, and the node.js code captures that response and passes a value back to the tcl-based iRule that indicates whether the communication is allowed or disallowed.

    const http = require("http");
    const f5 = require("f5-nodejs");

    // Initialize ILX Server
    var ilx = new f5.ILXServer();

    ilx.addMethod('func', function(req, res) {
        var retstr = "";
        var sni_name = req.params()[0];
        var spiffe = req.params()[1];
        var serial_id = req.params()[2];

        // The target is everything in the SNI value before the first dot
        const regex = /[^.]*/;
        let targetarr = sni_name.match(regex);
        const target = targetarr.toString();
        console.log('My Spiffe ID is: ', spiffe);
        console.log('My Serial ID is: ', serial_id);

        // Construct request payload
        var data = JSON.stringify({
            "Target": target,
            "ClientCertURI": spiffe,
            "ClientCertSerial": serial_id
        });
        // Strip off newline character(s)
        data = data.replace(/\\n/g, '');

        // Construct connection settings
        const options = {
            hostname: '10.0.0.100',
            port: 8500,
            path: '/v1/agent/connect/authorize',
            method: 'POST',
            headers: {
                'Content-Type': 'application/json',
                // Byte length, not character count, in case of non-ASCII data
                'Content-Length': Buffer.byteLength(data)
            }
        };

        // Construct Consul sideband HTTP call
        const myreq = http.request(options, res2 => {
            console.log(`Posting Json to Consul -------> statusCode: ${res2.statusCode}`);
            res2.on('data', d => {
                // Capture response payload
                process.stdout.write(d);
                retstr += d;
            });
            res2.on('end', d => {
                // Check response for valid authorization and return back to TCL iRule
                var isVal = retstr.includes(":true");
                res.reply(isVal);
            });
        });

        myreq.on('error', error => {
            console.error(error);
        });

        // Initiate Consul call
        myreq.write(data);
        myreq.end();
    });

    // Start ILX listener
    ilx.listen();

This iRulesLX solution will allow multiple sources to connect to the BIG-IP Virtual Server and exchange mTLS info, but will only allow the connection once the Consul Intentions are verified. If your Intentions looked something similar to the ones below, the Client microservice would be allowed to communicate with the services behind the BIG-IP, whereas the socialapp microservice would be blocked at the BIG-IP since we are capturing and respecting the Consul Intentions.
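As a rough illustration of the check the Node.js extension performs (not of the Intentions themselves), the target extraction, request body, and response handling can be sketched as three small pure functions. This is a minimal sketch, not the code from the article: the function names are hypothetical, the sample SNI value in the usage note is made up, and it assumes the authorize endpoint answers with a JSON object carrying a boolean `Authorized` field, which it parses instead of searching the raw text for ":true".

```javascript
// Hypothetical helpers; the names are illustrative and not part of the original extension.

// Take everything before the first dot of the SNI value as the target service name.
function targetFromSni(sniName) {
  const match = String(sniName).match(/^[^.]*/);
  return match ? match[0] : "";
}

// Build the JSON body for the /v1/agent/connect/authorize call.
function buildAuthorizeBody(sniName, spiffe, serialId) {
  return JSON.stringify({
    Target: targetFromSni(sniName),
    ClientCertURI: spiffe,
    ClientCertSerial: serialId,
  });
}

// Decide from the raw response body (assumed to be JSON with an "Authorized" boolean).
// Anything that cannot be parsed is treated as "not authorized".
function isAuthorized(responseBody) {
  try {
    return JSON.parse(responseBody).Authorized === true;
  } catch (err) {
    return false;
  }
}
```

For example, `targetFromSni("billing.default.dc1.internal.example.consul")` yields the service name used as `Target`, and `isAuthorized(retstr)` could stand in for the string match before calling `res.reply`. Failing closed on unparsable responses is a design choice of this sketch, not something the original code does.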
So now that we have shown how BIG-IP acts as a Terminating Gateway for HashiCorp Consul, all the while respecting the Consul Intentions, what's next? Well, next is for F5 and HashiCorp to continue working together on this solution. We intend to take this a level further by creating a prototype that automates the process. The automated prototype will have a mechanism to listen to changes within the Consul API server, and when a new service is defined behind the BIG-IP acting as the Terminating Gateway, the Virtual Server, the pools, the SSL profiles, and the iRulesLX workspace can be automatically configured via an AS3 declaration.

What Can You Do Next

You can find all of the iRules used in this solution in our DevCentral GitHub repo. Please reach out to me and the F5 HashiCorp Business Development team here if you have any questions, feature requests, or any feedback to make this solution better.

Unbreaking the Internet and Converting Protocols

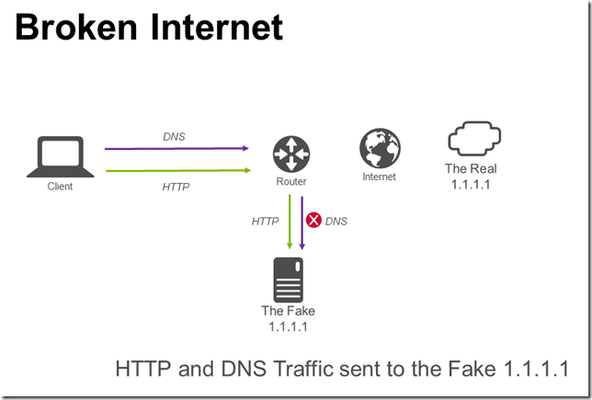

When Cloudflare took over 1.1.1.1 for their DNS service, it got me thinking about a couple of issues:

- A. What do you do if you've been using 1.1.1.1 on your network, how do you unbreak the Internet?
- B. How can you enable use of DNS over TLS for clients that don't have native support?
- C. How can you enable use of DNS over HTTPS for clients that don't have native support?

A. Unbreaking the Internet

RFC1918 lays out "private" addresses that you should use for internal use, i.e. my home network is 192.168.1.0/24. But we all have issues where sometimes we "forget" about the RFC and use routable IP space on our internal network. 1.1.1.1 is a good example of this; it's just so nice looking and easy to type.

In my home network I created a contrived situation to simulate breaking the Internet: a network route on my home router that sends all traffic for 1.1.1.1/32 to a Linux host on my internal network. I imagined a situation where I wanted to continue to send HTTP traffic to my own server, but send DNS traffic to the "real" 1.1.1.1.

In the picture above, I have broken the Internet. It is now impossible for me to route traffic to 1.1.1.1/32. To "fix" the Internet (image below), I used an F5 BIG-IP to send HTTP traffic to my internal server and DNS traffic to 1.1.1.1. The BIG-IP configuration is straightforward: 1.1.1.1:80 points to the local server and 1.1.1.1:53 points to 1.0.0.1. Note that I have used 1.0.0.1 since I broke routing to 1.1.1.1 (1.0.0.1 works the same as 1.1.1.1).

B. DNS over TLS

After fixing the Internet, I started to think about the challenge of how to use DNS over TLS. RFC7858 is a proposed standard for wrapping DNS traffic in TLS. Many clients do not support DNS over TLS unless you install additional software. I started to wonder, is it possible to have a BIG-IP "upgrade" a standard DNS request to DNS over TLS? There are two issues.

- DNS over TLS uses TLS (encryption)
- DNS over TLS uses TCP (most clients default to UDP unless handling large DNS requests)

For the first issue, the BIG-IP can already wrap a TCP connection with TLS (often used in providing SSL visibility to security devices that cannot inspect SSL traffic: BIG-IP terminates the SSL connection, passes traffic to the security device, and re-encrypts traffic to the final destination). The second issue can be solved by configuring BIG-IP DNS as a caching DNS resolver that accepts UDP and TCP DNS requests and only forwards TCP DNS requests.

This results in an architecture that looks like the following. The virtual server is configured with a TCP profile and a serverssl profile. The serverssl profile is configured to verify the authenticity of the server certificate. The DNS cache is configured to use the virtual server that performs the UDP to TCP over TLS connection.

A bonus of using the DNS cache is that subsequent DNS queries are extremely fast!

C. DNS over HTTPS

I felt pretty good after solving these two issues (A and B), but there was a third nagging issue (C) of how to support DNS over HTTPS. If you have ever looked at DNS traffic, it is a binary format over UDP or TCP that is visible on the network. HTTPS traffic is very different in comparison: TCP traffic that is encrypted. The draft RFC (draft-ietf-doh-dns-over-https-05) has a handy specification for "DNS Wire Format". Take a DNS packet, shove it into an HTTPS message, and get back a DNS packet in an HTTPS response. Hmm, I bet an iRule could solve this problem of converting DNS to HTTPS.

Using iRulesLX, I created a process where a TCL iRule takes a UDP payload off the network from a DNS client and sends it to a Node.JS iRuleLX extension that invokes the HTTPS API and returns the payload to the TCL iRule, which sends the result back to the DNS client on the network. The solution looks like the following. This works on my home network, but I did notice occasional 405 errors from the HTTPS services while testing.

The TCL iRule is simple: grab a UDP payload.

    when CLIENT_DATA {
        set payload [UDP::payload]
        set encoded_payload [b64encode $payload]
        set RPC_HANDLE [ILX::init dns_over_https_plugin dns_over_https]
        set rpc_response [ILX::call $RPC_HANDLE query_dns $encoded_payload]
        UDP::respond [b64decode $rpc_response]
    }

The Node.JS iRuleLX Extension makes the API call to the HTTPS service.

    ...
    ilx.addMethod('query_dns', function(req, res) {
        // atob/btoa are presumably provided by the elided code above
        var dns_query = atob(req.params()[0]);
        var options = {
            host: '192.168.1.254',
            port: 80,
            path: '/dns-query?ct=application/dns-udpwireformat&dns=' + req.params()[0],
            method: 'GET',
            headers: {
                'Host': 'cloudflare-dns.com'
            }
        };
        var cfreq = http.request(options, function(cfres) {
            var output = "";
            cfres.setEncoding('binary');
            cfres.on('data', function (chunk) {
                output += chunk;
            });
            cfres.on('end', () => {
                res.reply(btoa(output));
            });
        });
        cfreq.on('error', function(e) {
            console.log('problem with request: ' + e.message);
        });
        cfreq.write(dns_query);
        cfreq.end();
    });
    ...

After deploying the iRule, you can update your DNS cache to point to the Virtual Server hosting the iRule. Note that the Node.JS code connects back to another Virtual Server on the BIG-IP that does a similar upgrade from HTTP to HTTPS and uses an F5 OneConnect profile. You could also have it connect directly to the HTTPS server and create a new connection on each call.

All done?

Before I started, I wasn't sure whether I could unbreak the Internet or upgrade old DNS protocols into more secure methods, but F5 BIG-IP made it possible. Until the next time that the Internet breaks.

iRules LX Sideband Connection - Handling timeouts

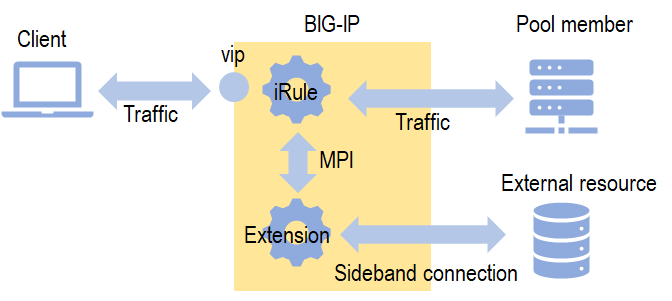

Introduction

You often find external information necessary for traffic handling. It could be additional user information for authentication. It could be a white list to determine if the source IP addresses are legitimate. It could be whois data from Regional Internet Registries. Such information can be stored locally on the BIG-IP (e.g., in a datagroup); however, it is often easier to obtain it from elsewhere by accessing external entities such as a web server or database. This type of implementation is called a sideband connection.

On BIG-IP, a sideband connection can be implemented using iRules or iRules LX. The former has been there since BIG-IP Version 11, so you might have tried it before (see also Advanced iRules: Sideband Connections). The latter became available from v12, and has been gaining popularity recently thanks to its easy-to-use built-in modules.

In the simplest form, the only thing you need in an iRules LX implementation is a single call to the external entity: for example, an HTTP call to the web server using the Node.js http module (see also iRules LX Sideband Connection). It does work most of the time, but you would want to add robustness to cater for corner cases. Otherwise, your production traffic will be disrupted.

ILX::call timeout

One of the most common errors in iRules LX sideband connection implementations is a timeout. It occurs when the sideband connection does not complete in time. An iRules LX RPC implementation (plugin) consists of two types of code: iRule (TCL) and extension (Node.js), as depicted below. The iRule handles events and data of traffic just like usual iRules. When external data is required, the iRule sends a request to the extension over a separate channel called MPI (Message Passing Interface) using ILX::call, and waits for the response. The extension establishes the connection to the external server, waits for the response, processes the response if required, and sends it back to the iRule.
When the extension cannot complete the sideband task within a predefined period, ILX::call times out. The default timeout is 3s. The following list shows a number of common reasons why ILX::call times out:

- High latency to the external server
- Large response data
- Heavy computation (e.g., cryptographic operations)
- High system load which makes the extension execution slow

In any case, when a timeout occurs, ILX::call raises an error, e.g.:

    Aug 31 07:15:00 bigip err tmm1[24040]: 01220001:3: TCL error: /Common/Plugin/rule <HTTP_REQUEST> - ILX timeout.
        invoked from within "ILX::call $rpc_handle function [IP::client_addr]"

You may consider increasing the timeout to give the extension time to complete the task. You can do so with the -timeout option of ILX::call. This may work, but it is not ideal in some cases.

Firstly, a longer timeout means a longer wait for the user. If you set the timeout to 20s, the end user may need to wait up to 20s to see the initial response, which is typically just an error.

Secondly, even after the iRule times out, the extension keeps running to the end. Upon completion, the extension sends back the data requested, but it is ignored because the iRule is no longer waiting for it. This is not only unnecessary but also consumes resources. Typically, the default timeout of the Node.js HTTP get request on a typical Unix box is 120s. Under a burst of incoming connections, the resources for the sideband connections would accumulate and stay for 120s, which is not negligible.

To avoid unnecessary delay and resource misuse, it is recommended to set an appropriate timeout on the extension side and return gracefully to the iRule. If you are using the Node.js bundled http module, it can be done with its setTimeout function. If the modules or classes that your sideband connection utilizes do not have a ready-made timeout mechanism, you can use the generic setTimeout function.
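The generic setTimeout approach mentioned above can be sketched as a small wrapper. This is only a sketch under stated assumptions: `withTimeout` is a hypothetical name, the task is assumed to accept a completion callback, and the string "timeout" is just one possible marker value to hand back to the caller.

```javascript
// Hypothetical helper; not part of f5-nodejs or the Node.js standard library.
// Runs task(done) and guarantees that callback fires exactly once:
// with the task's result, or with "timeout" if the task takes longer than ms.
function withTimeout(task, ms, callback) {
  let finished = false;
  const finish = (result) => {
    if (finished) return;   // ignore late or duplicate completions
    finished = true;
    clearTimeout(timer);    // release the timer once a result is known
    callback(result);
  };
  const timer = setTimeout(() => finish("timeout"), ms);
  task(finish);
}
```

In an extension, `callback` could be something like `(result) => res.reply(result)`, so that the iRule always receives a single reply before its own ILX::call timeout expires. Note that the wrapper only stops waiting; releasing whatever resources the slow task holds is still up to the task itself.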

Sample HTTP sideband connection implementation with timeout handling

The specification of a sample HTTP sideband connection with timeout handling on the extension side is as follows:

- iRule sends its timeout value to the extension.
- Extension sets its timeout to the sideband server. Here, it is set to the iRule's timeout minus 300 ms. For example, if the iRule's timeout is 3s, it is set to 2,700 ms. The 300 is empirical. You may want to try different values to come up with the most appropriate one for your environment.
- Extension catches any error on the sideband connection and replies with an error to the iRule. Note that you need to send only one return message for each ILX::call, irrespective of the number of events it catches.

Let's move on to a sample implementation.

iRule (TCL) code

     1 when HTTP_REQUEST {
     2   set timeout 3000 ;# Default
     3   set start [clock seconds]
     4
     5   set RPC_HANDLE [ILX::init "SidebandPlugin" "SidebandExt"]
     6   set response [ILX::call $RPC_HANDLE -timeout $timeout func $timeout]
     7   set duration [expr {[clock seconds] - $start}]
     8   if { $response == "timeout" } {
     9     HTTP::respond 500 content "Internal server error: Backend server did not respond within $timeout ms."
    10     return
    11   } elseif { $response == "error" || $response == "abort" } {
    12     HTTP::respond 500 content "Internal server error: Backend server was not available."
    13     return
    14   } else {
    15     # Sideband call succeeded
    16     log local0. "Response in ${duration}s: $response"
    17   }
    18 }

The iRule kicks off when the HTTP_REQUEST event is raised. It then sends a request to the extension (ILX::call). If an error occurs, it processes it accordingly. Otherwise, it just logs the duration of the extension execution.

- Line #6 sets the timeout of ILX::call to 3s (3,000 ms), which is the default value (change Line #2 if necessary). The timeout value is also sent to the extension (the last argument of the ILX::call).
- Lines #8 to #9 capture the 'timeout' string that the extension returns when it times out (after 3000 - 300 = 2,700 ms). In this case, it returns the 500 response back to the client.
- Lines #11 to #12 capture the 'error' or 'abort' string that the extension returns. It returns the 500 response too.
- Line #16 only reports the time it took for the sideband processing to complete. Change this line to whatever you want to perform.

Extension (Node.js) code

     1 const http = require("http");
     2 const f5 = require("f5-nodejs");
     3
     4 function httpRequest (req, res) {
     5   let tclTimeout = (req.params() || [3000])[0];
     6   let thisTimeout = tclTimeout - 300; // Exit 300ms prior to the TCL timeout
     7   let start = (new Date()).getTime();
     8
     9   let request = http.get('http://192.168.184.10:8080/', function(response) {
    10     let data = [];
    11     response.on('data', function(chunk) {
    12       data.push(chunk);
    13     });
    14     response.on('end', function() {
    15       console.log(`Sideband success: ${(new Date()).getTime() - start} ms.`);
    16       res.reply(data.join('').toString());
    17       return;
    18     });
    19   });
    20
    21   // Something might have happened to the sideband connection
    22   let events = ['error', 'abort', 'timeout']; // Possible erroneous events
    23   let resSent = false; // Send reply only once
    24   for(let e of events) {
    25     request.on(e, function(err) {
    26       let eMessage = err ? err.toString() : '';
    27       console.log(`Sideband ${e}: ${(new Date()).getTime() - start} ms. ${eMessage}`);
    28       if (!resSent)
    29         res.reply(e); // Send just once.
    30       resSent = true;
    31       if (! request.aborted)
    32         request.abort();
    33       return;
    34     });
    35   }
    36   request.setTimeout(thisTimeout);
    37   request.end();
    38 }
    39
    40 var ilx = new f5.ILXServer();
    41 ilx.addMethod('func', httpRequest);
    42 ilx.listen();

The overall composition is the same as boilerplate iRules LX extension code: require the necessary modules (Lines #1 and #2), create a method for processing the request from the iRule (Lines #4 to #38), and register it to the event listener (Line #41). The differences are:

- Lines #5 and #6 are for determining the timeout on the sideband connection. As shown in the iRule code, the iRule sends its ILX::call timeout value to the extension. The code here receives it and subtracts 300 ms from it. Line #5 looks unnecessarily complex because it checks that it has received the parameters properly. If req.params() returns nothing, it falls back to the default 3,000 ms. It is advisable to check the data received before accessing it: indexing into a value that is undefined raises a TypeError, which subsequently kills the Node.js process (unless the error is caught).
- Line #9 sends an HTTP request to the sideband server. Lines #10 to #19 are the usual routines for receiving the response. The message in Line #15 is for monitoring the latency between the BIG-IP and the sideband server. You may want to adjust the timeout value depending on statistics gathered from the messages. Obviously, you may want to remove this line for performance's sake (disk I/O is not exactly cheap).
- Lines #21 to #35 are for handling the error events: error, abort and timeout. Line #28 sends the reply to the iRule only once (the if statement is necessary as multiple events may be raised from a single error incident). Line #32 aborts the sideband connection for any of the error events to make sure the connection resources are freed.
- Line #36 sets the timeout on the sideband connection: the iRule timeout value minus 300 ms.

Conclusion

A sideband connection implementation is one of the most popular iRules LX use cases. In the simplest form, the implementation is as easy as a few lines of HTTP request handling; however, the iRule side sometimes times out due to latency on the extension side, and the traffic is subsequently disturbed. It is important to control the timeout and handle the errors properly in the extension code.

References

- F5: iRules Home - ILX, Clouddocs.
- F5: iRules Home - Sideband, Clouddocs.
- F5: iRules LX Home, Clouddocs.
- Flores: Getting Started with iRules LX, DevCentral.
- Node.js Foundation: Node.js v6.17.1 Documentation.
- Sutcliff: iRules LX Sideband Connection, DevCentral.
- Walker: Advanced iRules: Sideband Connections, DevCentral.

Where you Rate-Limit APIs Matters

Seriously, let's talk about this because architecture is a pretty important piece of the scalability puzzle.

Rate limiting is not a new concept. We used to call it "quality of service" to make it sound nicer, but the reality is that when you limited bandwidth availability based on application port or protocol, you were rate limiting. Today we have to apply that principle to APIs (which are almost always RESTful HTTP requests) because just as there was limited bandwidth in the network back in the day, there are limited resources available to any given server.

This is not computer science 301, so I won't dive into the system-level details as to why TCP sockets are tied to file handles and thus limit the number of concurrent connections available. Nor will I digress into the gory details of memory management and CPU scheduling algorithms that ultimately support the truth of operational axiom #2: as load increases, performance decreases. Anyone who has tried to do anything on a very loaded system has experienced this truth.

So, now we've got modern app architectures that rely primarily on APIs. Whether invoked from a native mobile or web-based client, APIs are the way we exchange data these days. We scale APIs like we scale most HTTP-based resources. We stick a load balancer in front of two or more servers and algorithmically determine how to distribute requests. It works, after all. That can be seen every day across the Internet. Chances are if you're doing anything with an app, it's been touched by a load balancer.

Now, I mention that because it'll be important later. Right now, let's look a bit closer at API rate limiting.

The way API rate limiting works in general is that each client is allowed X requests per time_interval. The time interval might be minutes, hours, or days. It might even be seconds.
The reason for this is to prevent any given client (user) from consuming so many resources (memory, CPU, database) as to prevent the system from responding to other users. It's an attempt to keep the server from being overwhelmed and falling over. That's why we scale.

The way API rate limiting is often implemented is that the app, upon receiving a request, checks with a service (or directly with a data source) to figure out whether this request should be fulfilled based on user-defined quotas and current usage.

This is the part where I let awkward silence fill the room while you consider the implication of the statement.

In an attempt to keep from overwhelming servers with API requests, that same server is tasked with determining whether the request should be fulfilled.

Now, I know that many API rate limiting strategies are used solely to keep data sources from being overwhelmed. Servers, after all, scale much more easily than their database counterparts. Still, you're consuming resources on a server unnecessarily. You're also incurring some pretty heavy architectural debt by coupling metering and processing logic together (part of the argument for microservices and decomposition, but that's another post) and making it very difficult to change how that rate limiting is enforced in the future. Because it's coupled with the app.

If you recall back to the beginning of this post, I mentioned there is almost always (I'd be willing to bet on it) a load balancer upstream from the servers in question. It is upstream logically and often physically, too, and it is, by its nature, capable of managing many, many, many more connections (sockets) than a web server. Because that's what they're designed to do.

So if you moved the rate limiting logic from the server to the load balancer… you get back resources and reduce architectural debt and ensure some agility in case you want to rapidly change rate limiting logic in the future.
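To make the "X requests per time interval" idea concrete, here is a minimal fixed-window counter of the kind such logic could be built on, wherever it ends up running. It is only a sketch: the class and field names are hypothetical, state is kept in memory for a single process, and a real deployment would need shared storage and eviction of idle clients.

```javascript
// Hypothetical fixed-window limiter; names are illustrative assumptions.
class FixedWindowLimiter {
  constructor(limit, windowMs) {
    this.limit = limit;        // X requests allowed ...
    this.windowMs = windowMs;  // ... per time interval (in milliseconds)
    this.clients = new Map();  // clientId -> { windowStart, count }
  }

  // Record one request for clientId at time now (ms) and report the outcome.
  check(clientId, now = Date.now()) {
    let entry = this.clients.get(clientId);
    if (!entry || now - entry.windowStart >= this.windowMs) {
      entry = { windowStart: now, count: 0 };  // start a fresh window
      this.clients.set(clientId, entry);
    }
    const allowed = entry.count < this.limit;
    if (allowed) entry.count += 1;             // only count requests that are served
    return {
      allowed,
      remaining: this.limit - entry.count,
      resetSeconds: Math.ceil((entry.windowStart + this.windowMs - now) / 1000),
    };
  }
}
```

A caller would create one limiter, for example `new FixedWindowLimiter(100, 60000)`, call `check(clientId)` per request, and refuse the request whenever `allowed` is false. A fixed window is the simplest variant; sliding windows or token buckets smooth out bursts at window boundaries at the cost of more bookkeeping.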
After all, changing that logic in 1 or 2 instances of a load balancer is far less disruptive than making code changes to the app (and all the testing and verification and scheduling that may require). Now, as noted in this article laying out “Best Practices for a Pragmatic RESTful API” there are no standards for API rate limiting. There are, however, suggested best practices and conventions that revolve around the use of custom HTTP headers: At a minimum, include the following headers (using Twitter's naming conventions as headers typically don't have mid-word capitalization): X-Rate-Limit-Limit - The number of allowed requests in the current period X-Rate-Limit-Remaining - The number of remaining requests in the current period X-Rate-Limit-Reset - The number of seconds left in the current period And of course when a client has reached the limit, be sure to respond with HTTP status code 429 Too Many Requests, which was introduced in RFC 6585. Now, if you’ve got a smart load balancer; one that is capable of actually interacting with requests and responses (not just URIs or pre-defined headers, but one that can actually reach all the way into the TCP payload, if you want) and is enabled with some sort of scripting language (like TCL or node.js) then you can move API rate limiting logic to a load balancer-hosted service and stop consuming valuable compute. Inserting custom headers using node.js (as we might if we were using iRules LX on a BIG-IP load balancing service) is pretty simple. The following is not actual code (I mean it is, but it’s not something I’ve tested). This is just an example of how you can grab limits (from a database, a file, another service) and then insert those into custom headers. 

```javascript
// Untested illustration: api_user_limit_lookup() stands in for whatever
// lookup (database, file, another service) returns the caller's limits.
var limits = api_user_limit_lookup();
req.headers["X-Rate-Limit-Limit"] = limits.limit;
req.headers["X-Rate-Limit-Remaining"] = limits.remaining;
req.headers["X-Rate-Limit-Reset"] = limits.resettime;
```

You can also simply refuse to fulfill the request and return the suggested HTTP status code (or any other, if your app is expecting something else). You can also send back a response with a JSON payload that contains the same information. As long as you’ve got an agreed-upon method of informing the client, you can pretty much make this API rate limiting service do what you want.

Why in the network?

There are three good reasons why you should move API rate limiting logic upstream, into the load balancing proxy:

1. Eliminates technical debt
   If you’ve got rate limiting logic coupled in with app logic, you’ve got technical debt you don’t need. You can lift and shift that debt upstream without having to worry about how changes in rate limiting strategy will impact the app.

2. Efficiency gains
   You’re offloading logic upstream, which means all your compute resources are dedicated to compute. You can better predict capacity needs and scale without having to compensate for requests that are unequal consumers.

3. Security
   It’s well understood that application layer (request-response) attacks are on the rise, including denial of service. By leveraging an upstream proxy with greater capacity for connections you can stop those attacks in their tracks, because they never get anywhere near the actual server.

Like almost all things app and API today, architecture matters more than algorithms. Where you execute logic matters in the bigger scheme of performance, security, and scale.

Getting Started with iRules LX, Part 4: NPM & Best Practices

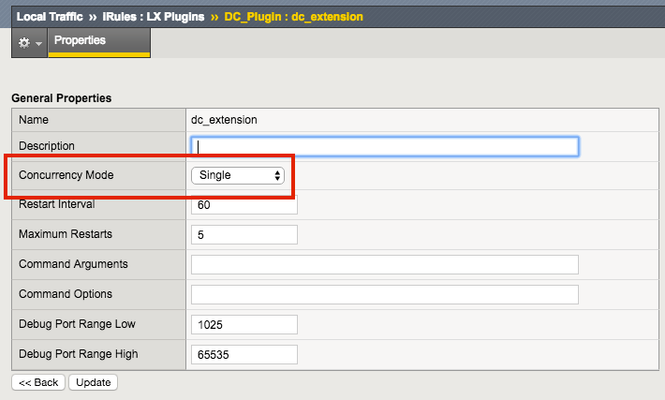

So far in this series we've covered basic nomenclature and concepts, and in the last article we actually dug into the code that makes it all work. At this point, I'm sure the wheels of possibilities are turning in your minds, cooking up all the ~~nefarious~~ interesting ways to extend your iRules repertoire. The great thing about iRules LX, as we'll discuss at the outset of this article, is that a lot of the heavy lifting has probably already been done for you. The Node.js package manager, or NPM, is a living, breathing repository of 280,000+ modules you won't have to write yourself should you need them! Sooner or later you will find a deep desire, or maybe even a need, to install packages from NPM to help fulfill a use case.

Installing packages with NPM

NPM on BIG-IP works much the same way as it does on a server. We recommend that you not install modules globally, because when you export the workspace to another BIG-IP, a module installed globally won't be included in the workspace package. To install an NPM module, you will need to access the Bash shell of your BIG-IP. First, change directory to the extension directory in which you need to install the module.

Note: F5 Development DOES NOT host any packages or provide any support for NPM packages in any way, nor does it provide security verification, code reviews, functionality checks, or installation guarantees for specific packages. F5 provides ONLY core Node.js, which is currently confined to versions 0.12.15 and 6.9.1.

The extension directory will be at /var/ilx/workspaces/<partition_name>/<workspace_name>/extensions/<extension_name>/ .
Once there you can run NPM commands to install the modules, as shown in this example (with a few ls commands to help make it clearer):

```
[root@test-ve:Active:Standalone] config # cd /var/ilx/workspaces/Common/DevCentralRocks/extensions/dc_extension/
[root@test-ve:Active:Standalone] dc_extension # ls
index.js  node_modules  package.json
[root@test-ve:Active:Standalone] dc_extension # npm install validator --save
validator@5.3.0 node_modules/validator
[root@test-ve:Active:Standalone] dc_extension # ls node_modules/
f5-nodejs  validator
```

The one caveat to installing NPM modules on the BIG-IP is that you cannot install native modules. These are modules written in C++ that need to be compiled. For obvious security reasons, TMOS does not have a compiler.

Best Practices

Node Processes

It would be great if you could spin up an unlimited number of Node.js processes, but in reality there is a limit to what we want to run on the control plane of our BIG-IP. We recommend that you run no more than 50 active Node processes on your BIG-IP at one time (per appliance or per blade). Therefore you should size the usage of Node.js accordingly.

In the settings for an extension of an LX plugin, you will notice there is one called concurrency. There are 2 possible concurrency settings that we will go over.

Dedicated Mode

This is the default mode for all extensions running in an LX plugin. In this mode there is one Node.js process per TMM per extension in the plugin. Each process will be "dedicated" to a TMM. To know how many TMMs your BIG-IP has, you can run the following TMSH command:

```
root@(test-ve)(cfg-sync Standalone)(Active)(/Common)(tmos) # show sys tmm-info | grep Sys::TMM
Sys::TMM: 0.0
Sys::TMM: 0.1
```

This shows us we have 2 TMMs. As an example, if this BIG-IP had an LX plugin with 3 extensions, I would have a total of 6 Node.js processes.

Dedicated mode is best for any type of CPU-intensive operation, such as heavy data parsing, a lookup on every request, or an application with massive traffic.

Single Mode

In this mode, there is one Node.js process per extension in the plugin and all TMMs share this "single" process. For example, one LX plugin with 3 extensions will be 3 Node.js processes. Single mode is ideal for lightweight processes, for example a low-traffic application, or an extension that only does a data lookup on the first connection and caches the result.

Node.js Process Information

The best way to find out information about the Node.js processes on your BIG-IP is with the TMSH command show ilx plugin. Using this command you should be able to choose the best mode for your extension based upon the resource usage. Here is an example of the output:

```
root@(test-ve)(cfg-sync Standalone)(Active)(/Common)(tmos) # show ilx plugin DC_Plugin
---------------------------------
ILX::Plugin: DC_Plugin
---------------------------------
State          enabled
Log Publisher  local-db-publisher

  -------------------------------
  | Extension: dc_extension
  -------------------------------
  | Status                 running
  | CPU Utilization (%)    0
  | Memory (bytes)
  |   Total Virtual Size   1.1G
  |   Resident Set Size    7.7K
  | Connections
  |   Active               0
  |   Total                0
  | RPC Info
  |   Total                0
  |   Notifies             0
  |   Timeouts             0
  |   Errors               0
  |   Octets In            0
  |   Octets Out           0
  |   Average Latency      0
  |   Max Latency          0
  | Restarts               0
  | Failures               0

    ---------------------------------
    | Extension Process: dc_extension
    ---------------------------------
    | Status                 running
    | PID                    16139
    | TMM                    0
    | CPU Utilization (%)    0
    | Debug Port             1025
    | Memory (bytes)
    |   Total Virtual Size   607.1M
    |   Resident Set Size    3.8K
    | Connections
    |   Active               0
    |   Total                0
    | RPC Info
    |   Total                0
    |   Notifies             0
    |   Timeouts             0
    |   Errors               0
    |   Octets In            0
    |   Octets Out           0
    |   Average Latency      0
    |   Max Latency          0
```

From this you can get quite a bit of information, including which TMM the process is assigned to, PID, CPU, memory and connection stats.
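
Before picking a mode, it can help to estimate how many processes a plugin will spawn. The arithmetic for the two concurrency modes described above can be sketched as follows. The function name, the `concurrency` field, and the `'dedicated'`/`'single'` labels are made up for illustration and are not part of any F5 API; only the counting rule and the 50-process recommendation come from this article.

```javascript
// Hypothetical helper: estimate how many Node.js processes a set of
// LX plugin extensions will run, given the number of TMMs on the BIG-IP.
var MAX_RECOMMENDED_PROCESSES = 50; // recommendation quoted in this article

function countNodeProcesses(tmmCount, extensions) {
  return extensions.reduce(function (total, ext) {
    // single: one process shared by all TMMs
    // dedicated (the default): one process per TMM
    return total + (ext.concurrency === 'single' ? 1 : tmmCount);
  }, 0);
}

// The article's example: 2 TMMs and one plugin with 3 extensions.
var dedicatedPlugin = [
  { name: 'ext_a', concurrency: 'dedicated' },
  { name: 'ext_b', concurrency: 'dedicated' },
  { name: 'ext_c', concurrency: 'dedicated' }
];
var singlePlugin = dedicatedPlugin.map(function (ext) {
  return { name: ext.name, concurrency: 'single' };
});

var dedicatedTotal = countNodeProcesses(2, dedicatedPlugin);
var singleTotal = countNodeProcesses(2, singlePlugin);
console.log('dedicated:', dedicatedTotal, 'single:', singleTotal);
console.log('within recommendation:', dedicatedTotal <= MAX_RECOMMENDED_PROCESSES);
```

On a platform with many TMMs, dedicated mode multiplies quickly, which is why the per-extension mode is worth deciding deliberately.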

If you want to know the total number of Node.js processes, that same command will show you every process, and the output could get quite long. You can use this quick one-liner from the bash shell (not TMSH) to count the Node.js processes:

```
[root@test-ve:Active:Standalone] config # tmsh show ilx plugin | grep PID | wc -l
16
```

File System Read/Writes

Since Node.js on BIG-IP is pretty much stock Node, file system reads and writes are possible but not recommended. If you would like to know more about this and other properties of Node.js on BIG-IP, please see AskF5 Solution Article SOL16221101.

Note: NPM packages with symlinks will no longer work in 14.1.0+ due to SELinux changes.

In the next article in this series we will cover troubleshooting and debugging.

Introducing iRules LX

iRules is a powerful scripting language that lets you control network traffic in real time: you can route, redirect, modify, drop, log, or do just about anything else with network traffic passing through a BIG-IP proxy. iRules enables network programmability to consolidate functions across applications and services.

iRules LX: The Next Evolution of Network Programmability

iRules LX is the next stage of evolution for network programmability, bringing Node.js language support to the BIG-IP with version 12.1. Node.js gives JavaScript developers access to over 250,000 npm packages that make code easier to write and maintain. Development teams can access and work on code with the new iRules LX Workspace environment and the new plug-in available for the Eclipse IDE, which can also be used for continuous integration builds.

Extend with Node.js Support

iRules Language eXtensions (LX) enables Node.js capabilities on the BIG-IP platform. By using iRules LX (Node.js) extensions you can program iRules LX using JavaScript to control network traffic in real time.

There are only three simple Tcl commands that you need to extend iRules to Node.js:

- ILX::init – create Tcl handle, bind extension
- ILX::call – send/receive data to/from extension method
- ILX::notify – send data one-way to method, no response

There is only one Node.js command to know:

- ILX.addMethod – create method, for use by above

Share and Reuse Code

iRules LX makes it easy for JavaScript developers to share and reuse Node.js code, and it makes it easy to update the code. These bits of reusable code are called packages, or sometimes modules. This makes it possible for you to compose complex business logic in Node.js without sophisticated developers who know Tcl in depth. There are over 250,000 npm packages that make code easier to write and maintain.
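
To make the Node.js side of the commands listed above a little more concrete, here is a minimal sketch of an extension that registers one method. The method name `normalize_host` and the helper `normalizeHost` are hypothetical, chosen only for illustration; the `f5-nodejs` calls (`ILXServer`, `addMethod`, `listen`, `params`, `reply`) are the ones used in the MySQL example in this article. The `require` is wrapped in a try/catch, an assumption made so that the pure helper can also be exercised off-box where the module is not installed.

```javascript
// Pure helper, kept separate from the RPC plumbing so it can be tested anywhere.
// Lower-cases a host value and strips a trailing ":port" if present.
function normalizeHost(host) {
  return String(host).toLowerCase().replace(/:\d+$/, '');
}

// f5-nodejs only exists on a BIG-IP; fall back to null elsewhere.
var f5 = null;
try {
  f5 = require('f5-nodejs');
} catch (err) {
  console.log('f5-nodejs not available; skipping ILX registration');
}

if (f5) {
  var ilx = new f5.ILXServer();

  // Reached from the Tcl iRule through ILX::call with the method name
  // 'normalize_host' and the host value as its first parameter.
  ilx.addMethod('normalize_host', function (req, res) {
    res.reply(normalizeHost(req.params()[0]));
  });

  // Start listening for ILX::call and ILX::notify events.
  ilx.listen();
}
```

Keeping the logic in a plain function and the registration in a thin wrapper is one way to make an extension easier to unit test before it is published to a plugin.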

iRules LX Workspaces

LX Workspaces provide a portable development package environment with an NPM-like directory structure that acts as the source for creation and modification of plugins and Node.js extensions. LX Workspaces are developer-friendly, with Git-like staging and publishing that simplifies export and import to keep your files in sync for continuous integration and development.

iRules IDE Integration / Eclipse Plugin

Available soon, F5 is delivering a free downloadable plugin to enable our customers to develop iRules & iRules LX scripts via their own Eclipse IDE. The plugin provides modern IDE editing capabilities including syntax highlighting & validation, code completion, and code formatting for both Tcl and JavaScript. This plugin will enable authenticated push and pull of iRules code files to and from the BIG-IP platform. This simplifies development workflows by enabling our customers to develop iRules scripts in a familiar development environment.

iRules LX Example: Use Node.js to Make an Off Box MySQL Lookup

This iRules example shows a connection to a MySQL database via Node.js. It uses a Tcl iRule and JavaScript code to make a MySQL call. The iRule prompts the user for a basic auth username and password, then looks up the username in a MySQL database table and returns the user's groups.

MySQL iRule (Tcl) Example:

```tcl
###################################
# mysql_irulelx
# 2015-05-17 - Aaron Hooley - First draft example showing iRulesLX querying MySQL database
###################################
when RULE_INIT {
    # Enable logging to /var/log/ltm?
    # 0=none, 1=error, 2=verbose
    set static::mysql_debug 2
}
when HTTP_REQUEST {

    if {$static::mysql_debug >= 2} {log local0. "New HTTP request. \[HTTP::username\]=[HTTP::username]"}

    # If the client does not supply an HTTP basic auth username, prompt for one.
    # Else send the HTTP username to node.js and respond to client with result
    if {[HTTP::username] eq ""} {

        HTTP::respond 401 content "Username was not included in your request.\nSend an HTTP basic auth username to test" WWW-Authenticate {Basic realm="iRulesLX example server"}
        if {$static::mysql_debug >= 2} {log local0. "No basic auth username was supplied. Sending 401 to prompt client for username"}

    } else {

        # Do some basic validation of the HTTP basic auth username
        # First fully URI decode it using https://devcentral.f5.com/s/articles?sid=523
        set tmp_username [HTTP::username]
        set username [URI::decode $tmp_username]

        # Repeat decoding until the decoded version equals the previous value
        while { $username ne $tmp_username } {
            set tmp_username $username
            set username [URI::decode $tmp_username]
        }

        # Check that username only contains valid characters using https://devcentral.f5.com/s/articles?sid=726
        if {$username ne [set invalid_chars [scan $username {%[-a-zA-Z0-9_]}]]} {
            HTTP::respond 401 content "\nA valid username was not included in your request.\nSend an HTTP basic auth username to test\n" WWW-Authenticate {Basic realm="iRulesLX example server"}
            if {$static::mysql_debug >= 1} {log local0. "Invalid characters in $username. First invalid character was [string range $username [string length $invalid_chars] [string length $invalid_chars]]"}

            # Exit this iRule event
            return
        }

        # Client supplied a valid username, so initialize the iRulesLX extension
        set RPC_HANDLE [ILX::init mysql_extension]
        if {$static::mysql_debug >= 2} {log local0. "\$RPC_HANDLE: $RPC_HANDLE"}

        # Make the call and save the iRulesLX response
        # Pass the username and debug level as parameters
        #set rpc_response [ILX::call $RPC_HANDLE myql_nodejs $username $static::mysql_debug]
        set rpc_response [ILX::call $RPC_HANDLE myql_nodejs $username]
        if {$static::mysql_debug >= 2} {log local0. "\$rpc_response: $rpc_response"}

        # The iRulesLX rule will return -1 if the query succeeded but no matching username was found
        if {$rpc_response == -1} {
            HTTP::respond 401 content "\nYour username was not found in MySQL.\nSend an HTTP basic auth username to test\n" WWW-Authenticate {Basic realm="iRulesLX example server"}
            if {$static::mysql_debug >= 1} {log local0. "Username was not found in MySQL"}
        } elseif {$rpc_response eq ""} {
            HTTP::respond 401 content "\nDatabase connection failed.\nPlease try again\n" WWW-Authenticate {Basic realm="iRulesLX example server"}
            if {$static::mysql_debug >= 1} {log local0. "MySQL query failed"}
        } else {
            # Send an HTTP 200 response with the groups retrieved from the iRulesLX plugin
            HTTP::respond 200 content "\nGroup(s) for '$username' are '$rpc_response'\n"
            if {$static::mysql_debug >= 1} {log local0. "Looked up \$username=$username and matched group(s): $rpc_response"}
        }
    }
}
```

MySQL iRule LX (Node.js) Example:

```javascript
/* ###################################### */
/* index.js counterpart for mysql_irulelx */
/* Log debug to /var/log/ltm? 0=none, 1=errors only, 2=verbose */
var debug = 2;
if (debug >= 2) {console.log('Running extension1 index.js');}

/* Import the f5-nodejs module. */
var f5 = require('f5-nodejs');

/* Create a new rpc server for listening to TCL iRule calls. */
var ilx = new f5.ILXServer();

/* Start listening for ILX::call and ILX::notify events. */
ilx.listen();

/* Add a method and expect a username parameter and reply with response */
ilx.addMethod('myql_nodejs', function(username, response) {

    if (debug >= 1) {console.log('myql_nodejs' + ' ' + typeof(username.params()) + ' = ' + username.params());}

    var mysql = require('mysql');
    var connection = mysql.createConnection({
        host     : '10.0.0.110',
        user     : 'bigip',
        password : 'bigip'
    });

    // Connect to the MySQL server
    connection.connect(function(err) {
        if (err) {
            if (debug >= 1) {console.error('Error connecting to MySQL: ' + err.stack);}
            return;
        }
        if (debug >= 2) {console.log('Connected to MySQL as ID ' + connection.threadId);}
    });

    // Perform the query. Escape the user-input using mysql.escape: https://www.npmjs.com/package/mysql#escaping-query-values
    connection.query('SELECT * from users_db.users_table where name = ' + mysql.escape(username.params(0)), function(err, rows, fields) {
        if (err) {
            // MySQL query failed for some reason, so send an empty response back to the Tcl iRule
            if (debug >= 1) {console.error('Error with query: ' + err.stack);}
            response.reply('');
            return;
        } else {
            // Check for no result from MySQL (rows is an array, so test its length)
            if (rows.length < 1) {
                if (debug >= 1) {console.log('No matching records from MySQL');}

                // Return -1 to the Tcl iRule to show no matching records from MySQL
                response.reply('-1');
            } else {
                if (debug >= 2) {console.log('First row from MySQL is: ', rows[0]);}

                // Return the last matching row to the Tcl iRule
                response.reply(rows.pop());
            }
        }
    });

    // Close the MySQL connection
    connection.end();
});
```

Getting Started

To get started with iRules LX, download a BIG-IP trial and follow the upcoming tutorials available on the DevCentral community.