The days of IP-based management are numbered

The focus of cloud and virtualization discussions today revolves primarily around hypervisors, virtual machines, automation, and network and application network infrastructure: the dynamic infrastructure necessary to enable a truly dynamic data center. In all the hype we’ve lost sight of the impact these changes will have on other critical IT systems such as network management systems (NMS) and application performance management (APM). You know their names: IBM, CA, Compuware, BMC, HP. One or more of their systems are likely monitoring and managing applications and systems in your data center right now. They provide the alerts, notifications, and reports IT managers demand on a monthly or weekly basis to prove IT is meeting the service-level agreements for performance and availability made with business stakeholders.

In a truly dynamic data center, one in which resources are shared in order to provide the scalability and capacity needed to meet those service-level agreements, IP addresses are likely to become as mobile as the applications and infrastructure that need them. An application may or may not use the same IP address when it moves from one location to another; an application will use multiple IP addresses when it scales automatically, and those IP addresses may or may not be static. It is already apparent that DHCP will play a larger role in the dynamic data center than it does in a classic data center architecture. DHCP is not often used within the core data center precisely because its assignments are not guaranteed. Oh, you can designate that *this* MAC address is always assigned *that* IP address, but essentially what you’re doing is creating a static map that in practice is no different from a statically bound IP address. And in a dynamic data center, even the MAC address is not guaranteed, precisely because virtual instances of applications may move from hardware to hardware based on current performance, availability, and capacity needs.
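To make the reservation point concrete, here is a minimal Python sketch of how a simplified DHCP server might choose an address. The reservation table, the pool, and the `assign` helper are hypothetical illustrations, not any real DHCP server's configuration or API. The reservation is nothing more than a lookup keyed on MAC address, so an instance that reappears with a different MAC falls through to the dynamic pool and receives whatever address happens to be free.

```python
import ipaddress

# Hypothetical reservation table: MAC address -> fixed IP address.
RESERVATIONS = {
    "00:50:56:aa:bb:01": ipaddress.IPv4Address("10.0.0.10"),
}

# Hypothetical dynamic pool for clients without a reservation.
POOL = [ipaddress.IPv4Address("10.0.0.100") + i for i in range(5)]


def assign(mac: str, leases: dict) -> ipaddress.IPv4Address:
    """Return the address a simplified DHCP server would hand to `mac`."""
    mac = mac.lower()
    if mac in RESERVATIONS:
        # A reservation is just a static binding keyed on the MAC address.
        return RESERVATIONS[mac]
    if mac in leases:
        # Renewal: the same MAC keeps its existing dynamic lease.
        return leases[mac]
    in_use = set(leases.values())
    for addr in POOL:
        if addr not in in_use:
            leases[mac] = addr
            return addr
    raise RuntimeError("address pool exhausted")
```

Called with the reserved MAC, `assign` returns 10.0.0.10. Called with a different MAC, such as one a moved instance might receive, it returns the first free pool address (10.0.0.100 on an empty lease table), and anything that was watching 10.0.0.10 is now watching the wrong address.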
The problem, then, is that NMS and APM are often tied to IP addresses. They use aging standards like SNMP to monitor infrastructure, and agents installed at the OS or application server layer to collect the performance data that is ultimately used to generate those eye-candy charts and reports for management. These systems can also generate dependency maps, tying applications to servers to network segments and their supporting infrastructure, such that if any one dependent component fails, an administrator is notified. And it’s almost all monitored based on IP address. When those IP addresses change, as more and more infrastructure is virtualized and applications become more mobile within the data center, the APM and NMS systems will either fail to recognize the change or, more likely, “cry wolf” with alerts and notifications stating an application is down when in truth it is running just fine. Collecting erroneous data undermines IT’s ability to show its value to the business, to prove its adherence to agreed-upon service-level agreements, and to accurately forecast growth.

NMS and APM will be affected by the dynamic data center; they will need to alter the basic premise upon which they have always acted: that every application, network device, and application network infrastructure solution is tied to an IP address. The bonds between IP address and … everything are slowly being dissolved as we move into an architectural model that abstracts the very network foundations upon which data centers have always been built and then ignores them. While in many cases the bond between a device or application and an IP address will remain, it cannot be assumed to be true. The days of IP-based management are numbered, necessarily, and while that sounds ominous it is really a blessing in disguise. Perhaps the “silver lining in the cloud”, even. All the monitoring and management that goes on in IT is centered around one thing: the application.
How well is it performing? How much bandwidth does it need, and how much is it using? Is it available, is it secure, is it running? By forcing IP address management to the forefront, effectively dismissing the IP address as a primary method of identification, the cloud and virtualization have done the IT industry in general a huge favor. The dismissal of the IP address as an integral means by which an application is identified, managed, and monitored means there must be another way to do it: one that provides more information, better information, and increased visibility into the behavior and needs of that application.

NMS and APM, like so many other IT systems management and monitoring solutions, will need to adjust the way in which they monitor, correlate, and manage the infrastructure and applications in the new, dynamic data center. They will need to integrate with whatever means is used to orchestrate and manage the ebb and flow of infrastructure and applications within the data center. The coming network and data center revolution (the move to a dynamic infrastructure and a dynamic data center) will have long-term effects on the systems and applications traditionally used to manage and monitor them. We need to start considering the ramifications now in order to be ready before it becomes an urgent need.

F5 Friday: HP Cloud Maps Help Navigate Server Flexing with BIG-IP

The economy of scale realized in enterprise cloud computing deployments is as much (if not more) about process as it is about products. HP Cloud Maps simplify the former by automating the latter.

When the notion of “private” or “enterprise” cloud computing first appeared, it was dismissed as a non-viable model because the economy of scale necessary to realize the true benefits was simply not present in the data center. What those arguments ignored was that the economy of scale desired by enterprises large and small was not necessarily that of technical resources, but of people. The widening gap between people and budgets on one hand and data center components on the other was a primary cause of data center inefficiency. Enterprise cloud computing promised to relieve the increasing burden on people by moving it back to technology through automation and orchestration.

Achieving such a feat – and it is a non-trivial feat – required an ecosystem. No single vendor could hope to achieve the automation necessary to relieve the administrative and operational burden on enterprise IT staff, because no data center is ever composed entirely of components provided by a single vendor. Partnerships – technological and practical partnerships – were necessary to enable the automation of processes spanning multiple data center components and achieve the economy of scale promised by enterprise cloud computing models. HP, while providing a wide variety of data center components itself, has nurtured such an ecosystem of partners. Combined with HP Operations Orchestration, these technologically focused partnerships have built out an ecosystem enabling the automation of common operational processes, effectively shifting the burden from people to technology and resulting in a more responsive IT organization.

HP CLOUD MAPS

One of the ways in which HP enables customers to take advantage of such automation capabilities is through Cloud Maps.
Cloud Maps are similar in nature to F5’s Application Ready Solutions: a package of configuration templates, guides, and scripts that enable repeatable architectures and deployments. According to HP’s description:

HP Cloud Maps are an easy-to-use navigation system which can save you days or weeks of time architecting infrastructure for applications and services. HP Cloud Maps accelerate automation of business applications on the BladeSystem Matrix so you can reliably and consistently fast-track the implementation of service catalogs.

HP Cloud Maps enable practitioners to navigate the complex operational tasks that must be accomplished to achieve even what seems like the simplest of tasks: server provisioning. They enable automation of incident resolution, change orchestration, and routine maintenance tasks in the data center, providing the consistency necessary for more predictable and repeatable deployments and responses to data center incidents. Key components of HP Cloud Maps include:

- Templates for hardware and software configuration that can be imported directly into BladeSystem Matrix
- Tools to help guide planning
- Workflows and scripts designed to automate installation more quickly and in a repeatable fashion
- Reference whitepapers to help customize Cloud Maps for specific implementations

HP CLOUD MAPS for F5 NETWORKS

The partnership between F5 and HP has resulted in many data center solutions and architectures. HP’s Cloud Maps for F5 Networks today focuses on what HP calls server flexing: the automation of server provisioning and de-provisioning on demand in the data center. It is designed specifically to work with F5 BIG-IP Local Traffic Manager (LTM) and provides the configuration and deployment templates, scripts, and guides necessary to implement server flexing in the data center.
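At its core, server flexing is a provision/de-provision decision driven by capacity. The Python sketch below shows one way such a decision might be expressed; the `FlexPolicy` thresholds and the `flex_decision` function are hypothetical and are not taken from HP's Cloud Maps workflows or the BIG-IP configuration script. In a real deployment, the orchestration workflow would act on the result by provisioning a server and adding it to the BIG-IP LTM pool, or by removing one.

```python
from dataclasses import dataclass


@dataclass
class FlexPolicy:
    """Hypothetical flexing thresholds; real values would come from SLAs."""
    scale_out_above: float = 0.80  # average utilization that triggers provisioning
    scale_in_below: float = 0.30   # average utilization that triggers de-provisioning
    min_members: int = 2
    max_members: int = 10


def flex_decision(utilization: list[float], policy: FlexPolicy) -> int:
    """Return +1 (provision a server), -1 (de-provision one) or 0 (hold).

    `utilization` holds the current load of each pool member, 0.0 to 1.0.
    """
    members = len(utilization)
    if members == 0:
        # An empty pool always needs capacity.
        return 1
    avg = sum(utilization) / members
    if avg > policy.scale_out_above and members < policy.max_members:
        return 1
    if avg < policy.scale_in_below and members > policy.min_members:
        return -1
    return 0
```

For example, `flex_decision([0.9, 0.85], FlexPolicy())` returns 1, while `flex_decision([0.1, 0.2], FlexPolicy())` returns 0 because the pool is already at its two-member minimum. Keeping the thresholds in a policy object rather than in the workflow logic means they can be tuned without touching the automation itself.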
The Cloud Map for F5 Networks can be downloaded free of charge from HP and comprises:

- The F5 Networks BIG-IP reference template to be imported into HP Matrix infrastructure orchestration
- A workflow to be imported into HP Operations Orchestration (OO)
- An XSL file to be installed on the Matrix CMS (Central Management Server)
- A Perl configuration script for BIG-IP

White papers with specific instructions on importing reference templates and workflows and on configuring BIG-IP LTM are also available from the same site. The result is an automated server flexing capability that greatly reduces the manual intervention necessary to auto-scale and respond to capacity-induced events within the data center.

Happy Flexing!

A Storage (Capacity) Optimization Buying Spree!

Remember when Beanie Babies were free in Happy Meals, and tons of people ran out to buy the Happy Meals but only really wanted the Beanie Babies? Yeah, that’s what the storage compression/dedupe market is starting to look like these days. Lots of big names are out snatching up at-rest de-duplication and compression vendors to get the products onto their sales sheets. We’ll have to see whether they wanted the real value of such an acquisition – the bright staff that brought these products to fruition – or they’re buying for the product and going to give or throw away the meat of the transaction. Yeah, that sentence is so pun laden that I think I’ll leave it like that. Except there is no actual meat in a Happy Meal; I’m pretty certain of that.

Today IBM announced that it is formally purchasing Storwize, a file compression tool designed to compress data on NAS devices. That leaves few enough players in the storage optimization space, and only one – Permabit – whose name I readily recognize. Since I wrote the blog about Dell picking up Ocarina, and this is happening while that blog is still being read pretty avidly, I figured I’d weigh in on this one also.

Storwize is a pretty smart purchase for IBM on the surface. The products support NAS at the protocol level. They claim “storage agnostic”, but my personal experience in the space is that there’s no such thing… CIFS and NFS tend to require tweaks from vendor A to vendor B, meaning that to be “agnostic” you have to “write to the device”. An interesting conundrum. Regardless, they support CIFS and NFS, are stand-alone appliances that the vendors claim are simple to set up and require little or no downtime, and offer straight-up compression. Again, Storwize and IBM are both claiming zero performance impact. I cannot imagine how that is possible in a compression engine, but that’s their claim. The key here is that they work on everyone’s NAS devices. If IBM is smart, the products will still work on everyone’s devices in a year.
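The two capacity optimization techniques being bought up work differently: de-duplication stores each repeated block once, while compression shrinks the blocks that do get stored. The Python sketch below combines fixed-size-chunk de-duplication (SHA-256 digests from `hashlib`) with `zlib` compression. It is a simplified illustration under those assumptions, not how Storwize, Ocarina, or Permabit products actually work. Every write hashes its data, every new chunk is compressed, and every read decompresses; that per-byte work is why a claim of zero performance impact invites skepticism.

```python
import hashlib
import zlib


def store(data: bytes, chunk_size: int, chunks: dict[str, bytes]) -> list[str]:
    """Split `data` into fixed-size chunks and keep one compressed copy of each.

    Returns the list of chunk digests (the "recipe") needed to rebuild `data`.
    """
    recipe = []
    for offset in range(0, len(data), chunk_size):
        chunk = data[offset:offset + chunk_size]
        digest = hashlib.sha256(chunk).hexdigest()  # hashing cost on every write
        if digest not in chunks:
            # De-duplication: only unseen chunks are stored (and compressed).
            chunks[digest] = zlib.compress(chunk)
        recipe.append(digest)
    return recipe


def load(recipe: list[str], chunks: dict[str, bytes]) -> bytes:
    """Rebuild the original bytes; every read pays a decompression cost."""
    return b"".join(zlib.decompress(chunks[d]) for d in recipe)
```

Storing `b"A" * 4096 * 3 + b"B" * 4096` with a 4096-byte chunk size produces a four-entry recipe but only two stored chunks, each compressed to a small fraction of its original size.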
Making Infrastructure 2.0 reality may require new standards

Managing a heterogeneous infrastructure is difficult enough, but managing a dynamic, ever-changing heterogeneous infrastructure that must be stable enough to deliver dynamic applications makes the former look like a walk in the park. Part of the problem is certainly the inability to manage heterogeneous network infrastructure devices from a single management system. SNMP (Simple Network Management Protocol), the only truly interoperable network management standard used by infrastructure vendors for over a decade, is not robust enough to deal with the management nightmare rapidly emerging for cloud computing vendors. It's called "Simple" for a reason, after all.

And even if it weren't, SNMP, while interoperable with network management systems like HP OpenView and IBM's Tivoli, is not standardized at the configuration level. Each vendor generally provides its own customized MIB (Management Information Base). Customized, which roughly translates to "proprietary", if not in theory then in practice. MIBs are not interchangeable, they aren't interoperable, and they aren't very robust. Generally they're used to share information rather than to modify device configuration. In other words, SNMP and customized MIBs are just not enough to support efficient management of a very large heterogeneous data center.

As Greg Ness pointed out in his latest blog post on Infrastructure 2.0, the diseconomies of scale in the IP address management space apply more generally to the network management space. There's just no good way today to efficiently manage the kind of large, heterogeneous environment required of cloud computing vendors. SNMP wasn't designed for this kind of management any more than TCP/IP was designed to handle the scaling needs of today's applications.
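The split between the standard and the proprietary parts of SNMP is visible in the OID tree itself. Standard MIB-2 objects such as `sysDescr.0` (1.3.6.1.2.1.1.1.0) live under 1.3.6.1.2.1, while each vendor's MIB hangs off its own IANA Private Enterprise Number under 1.3.6.1.4.1 (for example, 9 for Cisco and 3375 for F5). The small helper below is a hypothetical illustration, not part of any SNMP library; it simply classifies an OID by prefix. A management system has to understand every vendor subtree it encounters before that data means anything.

```python
MIB2_PREFIX = (1, 3, 6, 1, 2, 1)         # iso.org.dod.internet.mgmt.mib-2
ENTERPRISES_PREFIX = (1, 3, 6, 1, 4, 1)  # iso.org.dod.internet.private.enterprises


def classify_oid(oid: str) -> tuple:
    """Return ("mib-2", None), ("enterprise", <number>) or ("other", None)."""
    parts = tuple(int(p) for p in oid.strip(".").split("."))
    if parts[:6] == MIB2_PREFIX:
        # Standard objects every compliant agent should understand.
        return ("mib-2", None)
    if parts[:6] == ENTERPRISES_PREFIX and len(parts) > 6:
        # The next arc identifies the vendor owning the (proprietary) subtree.
        return ("enterprise", parts[6])
    return ("other", None)
```

`classify_oid("1.3.6.1.2.1.1.1.0")` returns `("mib-2", None)`, while `classify_oid("1.3.6.1.4.1.3375.2")` returns `("enterprise", 3375)`.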
While some infrastructure vendors, F5 among them, have seen fit to provide a standards-based management and configuration framework, none of us are really compatible with each other in terms of methodology. The way in which we, for example, represent a pool, a VIP (Virtual IP address), or a VLAN (Virtual LAN) is not the same way Cisco or Citrix or Juniper represent the same network objects. Indeed, our terminology may even be different; we use "pool", while other ADC vendors use "farm" or "cluster" to represent the same concept. Add virtualization to the mix and yet another set of terms appears, often conflicting with those used by network infrastructure vendors. "Virtual server" means something completely different when used by an application delivery vendor than it does when used by a virtualization vendor like VMware or Microsoft.

And the same tasks must be accomplished regardless of which piece of the infrastructure is being configured. VLANs, IP addresses, gateways, routes, pools, nodes, and other common infrastructure objects must be managed and configured across a variety of implementations. Scaling the management of these disparate devices and solutions is quickly becoming a nightmare for organizations trying to build out large-scale data centers, whether those are large enterprises, cloud computing vendors, or service providers.

In a response to Cloud Computing and Infrastructure 2.0, "johnar" points out:

Companies are forced to either roll the dice on single-vendor solutions for simplicity, or fill the voids with their own home-brew solutions and therefore assume responsibility for a lot of very complex code that is tightly coupled with ever-changing vendor APIs and technology. The same technology that vendors tout as their differentiator is what is causing the integrators grey hair.
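The home-brew solutions "johnar" describes often start as a translation layer between vendor vocabularies. A minimal Python sketch of such a layer follows; the vendor labels are hypothetical placeholders (not real product schemas) and `ServerGroup` is a neutral model invented for illustration. The terms "pool", "farm", and "cluster" come from the text. Every new vendor or renamed object means another table entry to maintain, which is precisely the cost a shared management model would remove.

```python
from dataclasses import dataclass

# Hypothetical mapping from (vendor, vendor term) to one neutral object type.
TERM_MAP = {
    ("vendor_a", "pool"): "server_group",
    ("vendor_b", "farm"): "server_group",
    ("vendor_c", "cluster"): "server_group",
}


@dataclass(frozen=True)
class ServerGroup:
    """Neutral representation of a load-balanced group of servers."""
    name: str
    members: tuple[tuple[str, int], ...]  # (ip, port) pairs, sorted


def normalize(vendor: str, obj_type: str, name: str, members) -> ServerGroup:
    """Translate a vendor-specific object into the neutral model."""
    kind = TERM_MAP.get((vendor, obj_type.lower()))
    if kind != "server_group":
        raise ValueError(f"no mapping for {vendor}/{obj_type}")
    # Sort members so equivalent objects compare equal regardless of order.
    return ServerGroup(name=name, members=tuple(sorted(members)))
```

With this in place, a "pool" from one vendor and a "farm" from another that contain the same members normalize to equal `ServerGroup` values.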
Because we all "do it different" with our modern-day equivalents of customized MIBs, it is difficult to integrate all the disparate nodes that make up a full application delivery network and infrastructure into a single, cohesive, efficient management mechanism. We're standards-based, but we aren't based on a single management standard. And as "johnar" points out, it seems unlikely that we'll "unite for data center peace" any time soon:

"Unlike ratifying a new Ethernet standard, there's little motivation for ADC vendors to play nice with each other."

I think there is motivation and reason for us to play nice with each other in this regard. Disparate competitive vendors came together in the past to ratify Ethernet standards, which led to interoperability and simpler management as we built out the infrastructure that makes the web work today. If we can all agree that application delivery controllers (ADCs) are an integral part of Infrastructure 2.0 (and I'm betting we all can), then in order to further the adoption of ADCs in general and make it possible for customers to choose based on features and functionality, we must make an effort to come together and consider standardizing a management model across the industry. And if we're really going to do it right, we need to encourage other infrastructure vendors to agree on a common base network management model to further simplify management of large heterogeneous network infrastructures. A VLAN is a VLAN regardless of whether it's implemented in a switch, an ADC, or on a server. If a lack of standards might hold back adoption or prevent vendors from competing for business, then that's a damn good motivating factor right there for us to unite for data center peace.
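The "a VLAN is a VLAN" argument maps naturally onto one shared interface with per-device implementations. The Python sketch below uses `typing.Protocol` to express a hypothetical vendor-neutral `create_vlan` operation; no such industry standard exists today, and the two drivers and the strings they return are placeholders, not any vendor's actual configuration syntax. The orchestration function is written once against the interface, which is the payoff a common management model would offer.

```python
from typing import Protocol


class VlanManager(Protocol):
    """Hypothetical vendor-neutral management interface."""

    def create_vlan(self, vlan_id: int, name: str) -> str: ...


class SwitchDriver:
    def create_vlan(self, vlan_id: int, name: str) -> str:
        # Placeholder for a switch's native configuration call.
        return f"switch: vlan {vlan_id} name {name}"


class AdcDriver:
    def create_vlan(self, vlan_id: int, name: str) -> str:
        # Placeholder for an ADC's native configuration call.
        return f"adc: vlan {name} tag {vlan_id}"


def provision_everywhere(devices: list[VlanManager], vlan_id: int, name: str) -> list[str]:
    """Create the same VLAN on every device through the shared interface."""
    if not 1 <= vlan_id <= 4094:
        raise ValueError("usable 802.1Q VLAN IDs range from 1 to 4094")
    return [device.create_vlan(vlan_id, name) for device in devices]
```

Adding a server, a firewall, or another vendor's ADC would mean writing one more driver, not changing `provision_everywhere`.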
If Microsoft, IBM, BEA, and Oracle were able to unite and agree upon a single web services interoperability standard (which they were, the result of which is WS-I) then it is not crazy to think that F5 and its competitors can come together and agree upon a single, standards-based management interface that will drive Infrastructure 2.0 to be reality. Major shifts in architectural paradigms often require new standards. That's where we got all the WS-* specifications and that's where we got all the 802.x standards: major architectural paradigm shifts. Cloud computing and the pervasive webification of, well, everything is driving yet another major architectural paradigm shift. And that may very well mean we need new standards to move forward and make the shift as painless as possible for everyone.

IT Chaos Theory: The PeopleSoft Effect

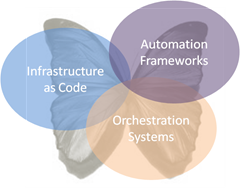

#cloud #devops A robust integration ecosystem is critical to prevent the PeopleSoft effect within the network

In chaos theory, the butterfly effect is the sensitive dependence on initial conditions, where a small change at one place in a nonlinear system can result in large differences to a later state. The name of the effect, coined by Edward Lorenz, is derived from the theoretical example of a hurricane's formation being contingent on whether or not a distant butterfly had flapped its wings several weeks before. -- Wikipedia, Butterfly Effect

Many may not recognize the field of IT chaos theory (because technically I made it up for this post), but its premise is similar in nature to that of chaos theory. The big difference is that while Chaos Theory has a butterfly effect, IT Chaos Theory has a PeopleSoft effect. In IT Chaos Theory, the PeopleSoft effect is the sensitive dependence on initial integrations between operational components, where a small change in one place results in large amounts of technical debt when any single component is upgraded. The name was chosen because of the history of PeopleSoft implementations, in which even small customizations of one version generally led to increasing amounts of time and effort being expended to reproduce them after upgrades that obliterated the original customizations.

Lest you think I jest with respect to the heartache caused by PeopleSoft in the past, consider this excerpt from an article on PeopleSoft Planet regarding customization of the software:

Although you are currently succeeding in resisting the temptation to customize the software, your excuse of “let’s get the routine established first” is losing ground. Experienced users can imagine countless ways to “tweak” the system to do everything the previous solution did, plus take advantage of all the features that were why you purchased this software originally. In truth, you have already made a few customizations, but those you had to do.
Nagging you is the persistent worry that once you customize, that your options to upgrade will be seriously jeopardized, or at least the prospect of a relatively simple, smooth, even seamless upgrade is reduced to a myth. How can you guarantee that when you upgrade, your customizations will not be lost? That days of productivity will not be compromised? Yet, if you do not make a few more concessions, how many man hours will be spent, attempting to recreate the solutions you had previously?

There is a reason there is an entire sub-market of PeopleSoft developers within the larger development community. There are legions of folks out there who focus solely on PeopleSoft and whose daily grind is, in fact, to maintain and continually update new versions of PeopleSoft. If you’ve worked within an enterprise you will recognize these dedicated teams as a reality.

These are the kinds of situations and realities we want to – nay, must – avoid within operations. While integration of infrastructure with automation frameworks and orchestration systems is critical to the successful implementation of cloud computing models, we must be sensitive to the impact of customization and integration downstream. The reason is that the technical debt incurred by each small change grows non-linearly over time. As this becomes reality, rigidity begins to take hold and agility begins to rapidly decline as operations becomes increasingly averse to changes in the underlying integrations. Rigidity of the systems takes root in the slowness, or outright refusal, to enact change by those reluctant to take on the task of identifying its impact across an ever broadening set of integrated systems.

AVOIDING the PEOPLESOFT EFFECT: A ROBUST INTEGRATION ECOSYSTEM

One of the ways in which IT can avoid the PeopleSoft effect is to take advantage of existing integration ecosystems whenever possible so as to minimize the number of custom integrations that must be managed.
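Each custom integration adds another edge to a dependency graph, and identifying the impact of a change across an ever broadening set of integrated systems is, at its core, a reachability question over that graph. The minimal Python sketch below assumes a hypothetical graph in which each edge points from a component to the things built on top of it, and uses a breadth-first walk to find everything downstream of one change. The component names are invented for illustration.

```python
from collections import deque

# Hypothetical integration graph: component -> components that depend on it.
DEPENDENTS = {
    "vendor_api_v2": ["provisioning_script"],
    "provisioning_script": ["orchestration_workflow", "billing_export"],
    "orchestration_workflow": ["self_service_portal"],
    "billing_export": [],
    "self_service_portal": [],
}


def impacted_by(change: str, graph: dict[str, list[str]]) -> set[str]:
    """Breadth-first walk returning every component downstream of `change`."""
    seen: set[str] = set()
    queue = deque([change])
    while queue:
        node = queue.popleft()
        for dependent in graph.get(node, []):
            if dependent not in seen:
                seen.add(dependent)
                queue.append(dependent)
    return seen
```

Here a single upstream API change (`vendor_api_v2`) touches all four downstream components; every additional custom integration can only make that set larger.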
One of the benefits of automation and orchestration – of cloud computing, really – is to reduce the reliance on manual procedures and processes, which in turn reduces the already high burden on IT operations – on admins. A recent GFI stress survey found that IT admins are a particularly stressed-out lot already, and anything that can be done to reduce this burden should be viewed as a positive step. Automation and orchestration enhance the scalability of the processes as well as the speed with which they can be executed, and have additional benefits in the form of reducing the potential for human error to cause delays or outages. And perhaps they’re the thing that ensures the 67% of admins considering switching careers due to job stress don’t actually follow through.

A mixture of pre-packaged integrations for automation purposes affords operations the ability to focus on process codification via orchestration engines, and not on writing or tweaking code to fit APIs and SDKs. Codification of customization should occur as much as possible in the processes and policies that govern automation, not in the integration layer that interconnects the systems and environments being controlled. Taking advantage of pre-existing integrations with automation frameworks and provisioning systems enables IT to alleviate the potential PeopleSoft effect that occurs when APIs, SDKs or frameworks invariably change. Cloud is ultimately built on an ecosystem: a robust integration ecosystem wherein the focus lies on process engineering and policy development as a means to create repeatable deployment processes and automation objects that form the foundation for IT as a Service.
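The distinction between customizing the process and customizing the integration can be sketched in a few lines of Python. Everything here is hypothetical: `LoadBalancerAdapter` stands in for a pre-packaged integration maintained by a vendor, and `POLICY` holds the site-specific rules. When the vendor ships a new adapter version, only the adapter changes; the customizations captured in `POLICY` and the `deploy` step do not have to be rewritten, as long as the adapter keeps its interface.

```python
class LoadBalancerAdapter:
    """Stand-in for a pre-packaged, vendor-maintained integration (unmodified)."""

    def __init__(self) -> None:
        self.members: dict[str, set[str]] = {}

    def add_member(self, pool: str, address: str) -> None:
        self.members.setdefault(pool, set()).add(address)


# Site-specific customization lives here, as data, not in the adapter.
POLICY = {
    "pool_for_tier": {"web": "web-pool", "api": "api-pool"},
    "require_approval": {"api"},
}


def deploy(tier: str, address: str, adapter: LoadBalancerAdapter,
           policy: dict, approved: bool = False) -> bool:
    """Orchestration step: apply policy, then call the adapter as shipped."""
    if tier in policy["require_approval"] and not approved:
        return False  # policy, not integration code, blocks the change
    adapter.add_member(policy["pool_for_tier"][tier], address)
    return True
```

Deploying a web-tier server succeeds immediately, while an api-tier deployment is held until `approved=True` is passed; changing that rule means editing `POLICY`, not the integration.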
When evaluating infrastructure, pay careful attention to the integration available with frameworks and orchestration engines, in particular those upon which you have standardized or may be considering standardizing. Popular frameworks and orchestration managers include:

Some customization is always necessary, but application integration nightmares have taught us that minimizing the amount of customization is the best strategy for minimizing the potential impact of changes later on. This is especially true for cloud computing environments, where integration and the processes orchestrated atop it may start out simple but rapidly grow more complex in terms of interdependencies and interrelationships. The more intertwined these systems become, the more likely it is that a small change in one part of the system will have a dramatic impact on another later on.

F5 Friday: BIG DDoS Umbrella powered by the HP VAN SDN Controller

#SDN #DDoS #infosec Integration and collaboration is the secret sauce to breaking down barriers between security and networking

Most of the focus of SDN apps has been, to date, on taking layer 4-7 services and making them into extensions of the SDN controller. But HP is taking a different approach, and the results are tantalizing. HP's approach, as indicated by the recent announcement of its HP SDN App Store, focuses more on the use of SDN apps as a way to enable the sharing of data across IT silos to create a more robust architecture. These apps are capable of analyzing events and information that enable the HP VAN SDN Controller to prescriptively modify network behavior to address issues and concerns that impact networks and the applications that traverse them. One such concern is security (rarely mentioned in the context of SDN): for example, how the network might respond more rapidly to threat events, such as an in-progress DDoS attack. Which is where the F5 BIG DDoS Umbrella for HP's VAN (Virtual Application Network) comes into play.

The focus of F5 BIG DDoS Umbrella is on mitigating in-progress attacks, and the implementation depends on a collaboration between two disparate devices: the HP VAN SDN Controller and F5 BIG-IP. The two devices communicate via an F5 SDN app deployed on the HP VAN SDN Controller. The controller is all about the network, while the F5 SDN app is focused on processing and acting on information obtained from F5 security services deployed on the BIG-IP. This is collaboration and integration at work, breaking down barriers between groups (security and network operations) by sharing data and automating processes*.

F5 BIG DDoS Umbrella

The BIG DDoS Umbrella relies upon the ability of F5 BIG-IP to intelligently intercept, inspect and identify DDoS attacks in flight. BIG-IP is able to identify DDoS events targeting the network, application layers, DNS or SSL.
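The division of labor described above, with BIG-IP detecting and classifying the attack and the SDN app on the controller acting on the network, can be sketched in Python. The message format, the ingress table, and the rule string are all hypothetical; they are not the actual interface between BIG-IP, the F5 SDN app, and the HP VAN SDN Controller. The sketch only shows the shape of the hand-off: detection supplies the attack category and a prescribed action, and the controller side decides where on the network to apply it.

```python
import ipaddress
from dataclasses import dataclass

# Attack categories the post says BIG-IP can identify.
CATEGORIES = {"network", "application", "dns", "ssl"}


@dataclass
class AttackEvent:
    """Hypothetical message from BIG-IP (detection) to the controller app."""
    category: str
    source_ip: str
    action: str  # the prescribed action, e.g. "drop"


# Hypothetical controller knowledge: which edge device each source network enters on.
INGRESS = {
    ipaddress.ip_network("203.0.113.0/24"): "edge-switch-3",
    ipaddress.ip_network("198.51.100.0/24"): "edge-switch-7",
}


def handle(event: AttackEvent, ingress: dict) -> tuple:
    """Controller-app side: find the entry point and build a rule for it."""
    if event.category not in CATEGORIES:
        raise ValueError(f"unknown attack category: {event.category}")
    src = ipaddress.ip_address(event.source_ip)
    for network, device in ingress.items():
        if src in network:
            return device, f"match src={src} action={event.action}"
    return None, "entry point unknown; no rule pushed"
```

A DNS attack from 203.0.113.9 with a prescribed "drop" action yields a rule for `edge-switch-3`; a source the controller cannot place yields no rule at all.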
Configuration (available as an iApp upon request) is flexible, enabling the trigger to be one, a combination, or all of the event types BIG-IP can identify. This is where collaboration between security and network operations is critical to ensure the response to a DDoS event meets defined business and operational goals. When BIG-IP identifies a threat, it sends the relevant information with a prescribed action to the HP VAN SDN Controller. The BIG DDoS Umbrella agent (the SDN "app") on the HP VAN SDN Controller processes the information, and once the attacker's point of entry is isolated, the prescribed action is implemented on the device closest to the attacker. The BIG DDoS Umbrella App is free, and designed to extend the existing DDoS protection capabilities of BIG-IP to the edge of the network. It is a community framework which users may use, enhance or improve.

Additional Resources:

- DDoS Umbrella for HP SDN AppStore - Configuration Guide
- HP SDN App Store - F5 BIG DDoS Umbrella App Community

* If that sounds more like DevOps than SDN, you're right. It's kind of both, isn't it? Interesting, that...

Dell Buys Ocarina Networks. Dedupe For All?

Storage at-rest de-duplication has been a growing point of interest for most IT staffs over the last year or so, largely because de-duplication allows you to purchase less hardware over time, and if that hardware is a big old storage array sucking a ton of power and costing a not-insignificant amount to install and maintain, well, it’s appealing. Most of the recent buzz has been about primary storage de-duplication, but that is merely a reflection of where the market is. Backup de-duplication has existed for a good long while, and secondary storage de-duplication is not new. Only recently have people decided that at-rest de-dupe was stable enough to give it a go on their primary storage – where all the most important and/or active information is kept. I don’t think I’d call it a “movement” yet, but it does seem that the market’s resistance to anything that obfuscates data storage is eroding rapidly due to the cost of the hardware (and attendant maintenance) needed to keep up with storage growth.

Why IT Needs to Take Control of Public Cloud Computing

IT organizations that fail to provide guidance for and governance over public cloud computing usage will be unhappy with the results…

While it is highly unlikely that business users will “control their own destiny” by provisioning servers in cloud computing environments, that doesn’t mean they won’t be involved. In fact, it’s likely that IaaS (Infrastructure as a Service) cloud computing environments will be leveraged by business users to avoid the hassles they perceive (and that oft times actually do exist) in their quest to deploy a given business application. It’s just that they won’t themselves be pushing the buttons.

Many experts have expounded upon the ways in which cloud computing is forcing a shift within IT and in the way assets are provisioned, acquired, and managed. One of those shifts is likely to also occur “outside” of IT with external IT-focused services, such as system integrators like CSC and EDS (now HP Enterprise Services).

Standardized Cloud APIs? Yes.

Mike Fratto over at Network Computing has a blog post that declares the need for standards in Cloud Management APIs is non-existent, or at least premature. Now Mike is a smart guy and has enough experience to have a clue what he’s writing about, unlike many cloud pundits out there, but as with all the smart people I like to read, I reserve the right to completely disagree. And in this case I am going to have to.

He’s right that Cloud Management is immature, and he’s right that it is not a simple topic. Neither was the conquering of standardized APIs for graphical monitors back in the day, or the adoption of XML standards for a zillion things. And he’s right that the point of standards is interoperability. But in the case of cloud, there’s more to it than that. Cloud is infrastructure. Imagine if you couldn’t pull out a Cisco switch and drop in the equivalent HP switch. That’s what we’re talking about here: infrastructure. There’s a reason that storage, networks, servers, etc. all have interoperability standards, and those reasons apply to cloud also.

If you’re a regular reader, you no doubt have heard my disdain for Cloud Storage vendors who implemented CLOUD storage and thereby guaranteed that enterprises would need cloud storage gateways just to make use of the cloud storage offerings. At least in the short term, while standards-compliant cloud interfaces or drivers for servers are implemented. The same is true of all cloud services, and for many of the same reasons. Do not tell an enterprise that they should put their applications out in your space by using a proprietary API that locks them into your solutions. Tell them they should put their applications out in your cloud space because it is competitively the best available. And the way to do that is through standards. Mike gets 20 or so steps ahead of himself by listing the problems without considering the minimum cost of entry.
To start, you don’t need an API for every single option that might ever be considered when bringing up a VM. How about “startVM ImageName Priority IPAddress Netmask” or something similar? That tells the cloud provider to start a VM using the named image file, giving it a certain priority (priority is a placeholder for number of CPUs, memory, etc.), and assigning it the given IP address and network mask. That way clones can be started with unique network addresses. Is it all-encompassing? No. Is it the last API we’ll ever need? No. Does it mean that I can be with Amazon today and move to Rackspace tomorrow? Yes. And that’s all the industry needs: the ability for an enterprise to keep its options open. There’s another huge benefit to standardization: employee reusability and mobility. Once you know how to implement the standard for your enterprise, you can implement it on any provider, rather than having to gain experience with each new provider. That makes employees more productive, and keeps the pool of available cloud developers and devops people large enough to meet staffing needs without having to train or retrain everyone. The burden on IT training budgets is minimized, and the choices when hiring are broadened. That doesn’t mean these people will come cheap (it’s still going to be a small, in-demand crowd), but it does mean you won’t have to say “must have experience programming for Rackspace”. Given the way standards work, there will still be benefits to finding someone who specializes in the vendor you’re using, but that will be a “nice to have”, not a “requirement”, which broadens the pool of prospective employees. And as long as users are involved in the standards process, it is never too early to standardize something that is already out there being used. Indeed, the longer you wait to standardize, the more inertia builds against standardization, because each vendor’s customers have a ton of stuff built in the pre-standards manner.
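To make that “minimum cost of entry” concrete, here is a minimal Python sketch of what a single standardized startVM call might look like. Every name in it (`StartVMRequest`, `CloudProvider`, `start_vm`, `start_clones`, `InMemoryProvider`) is hypothetical; it does not reflect Amazon’s, Rackspace’s, or any proposed standard’s actual API, and the in-memory provider merely stands in for a real provider adapter. What it illustrates is the argument above: code written against one small, shared interface runs unchanged when you swap one provider’s adapter for another’s.

```python
"""Sketch of a minimal, provider-neutral "startVM" interface.

All names are hypothetical; no real cloud provider exposes this exact API.
"""
from abc import ABC, abstractmethod
from dataclasses import dataclass
from ipaddress import IPv4Interface


@dataclass(frozen=True)
class StartVMRequest:
    image_name: str   # image file to boot
    priority: int     # stand-in for CPU count, memory size, etc.
    ip_address: str   # address to assign to the new VM
    netmask: str      # dotted netmask, e.g. "255.255.255.0"

    def interface(self) -> IPv4Interface:
        # Raises ValueError if the address or netmask is malformed.
        return IPv4Interface(f"{self.ip_address}/{self.netmask}")


class CloudProvider(ABC):
    """The one call every provider would agree to implement."""

    @abstractmethod
    def start_vm(self, request: StartVMRequest) -> str:
        """Start a VM and return a provider-specific instance ID."""


class InMemoryProvider(CloudProvider):
    """Hypothetical stand-in for a real provider's adapter."""

    def __init__(self, name: str) -> None:
        self.name = name
        self.running: dict[str, StartVMRequest] = {}

    def start_vm(self, request: StartVMRequest) -> str:
        request.interface()  # validate the address before "provisioning"
        instance_id = f"{self.name}-{len(self.running) + 1}"
        self.running[instance_id] = request
        return instance_id


def start_clones(provider: CloudProvider, image: str, priority: int,
                 first_ip: str, netmask: str, count: int) -> list[str]:
    """Start `count` clones of one image, each with a unique address."""
    base = IPv4Interface(f"{first_ip}/{netmask}")
    ids = []
    for offset in range(count):
        addr = base.ip + offset  # next sequential address
        if addr not in base.network:
            raise ValueError(f"{addr} falls outside {base.network}")
        ids.append(provider.start_vm(
            StartVMRequest(image, priority, str(addr), netmask)))
    return ids


# The same deployment code runs against "today's" and "tomorrow's" provider.
for provider in (InMemoryProvider("provider-a"), InMemoryProvider("provider-b")):
    print(start_clones(provider, "web-frontend.img", 2,
                       "192.0.2.10", "255.255.255.0", 3))
# prints ['provider-a-1', 'provider-a-2', 'provider-a-3']
# then   ['provider-b-1', 'provider-b-2', 'provider-b-3']
```

The design choice is deliberately narrow: the shared interface carries only what the one-line `startVM` proposal carries, and anything provider-specific stays inside each adapter, which is what makes moving between providers a matter of swapping one object.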
Until you start the standardization process and get user input on what’s working and what’s not, you can’t move the ball down the court, so to speak, and standards written in the absence of those who have to use them do not have a great track record of success. The ones written that way tend to have tiny communities where it’s a badge of honor to overcome the idiosyncrasies of the standard (NAS standards spring to mind here). So do we need standardized cloud APIs? I’ll say yes. Customers need mobility not just for their apps but for their developers, to keep the cost of cloud out of the clouds. It’s not simple, but the first step is. Let’s take it, and get this infrastructure choice closer to being an actual option that can be laid on the table next to “buy more hardware” and considered equally.

Get out your dice! It’s time for a game of Datacenters & Dragons

It’s the all-new, revised fifth edition of the popular real-life fantasy game we call Datacenters and Dragons. DM (Datacenter Manager): “Through the increasingly cloudy windows of the datacenter you see empty racks and abandoned servers where once there were rumored to be blinking lights and application consoles. Only a few brave and stalwart applications remain, somehow immune to the siren-like call of the Cloud Empire through the ancient and long-forgotten secret rituals found only in the now-lost COBOL copybook. As you stand, awestruck at the destructive power of the Empire, a shadow falls across the remaining rack, dimming the few remaining fluorescent lights. It is…a cloud dragon. As you stand, powerless to move in your abject terror, the cloud dragon breathes on another rack and its case dissolves. A huge claw lifts the application server and clutches it to its breast, another treasure to add to its growing hoard. And then, just as you are finally able to move, it reaches out with the other claw and bats aside the operators with a powerful blow, scattering them beyond the now-ethereal walls of the datacenter. Then it turns its cloudy eye on you and rears back, drawing in its breath as it prepares to breathe on you. Roll initiative.” The cries of “Change or die” and “IT is dead” and “cloud is a threat to IT” are becoming more and more common across the greater kingdoms of IT, casting cloud as the evil dragon: either you agree to serve as part of a much larger, nebulous empire known as ‘the cloud’, or you’ll find yourself asking “would you like fries with that?” According to some industry pundits, cloud computing has already passed from the realm of hype into a technology that is seriously impacting the business of IT. Such claims point to small organizations, for whom cloud computing makes the most sense (at least early on), and to large organizations like HP that are reducing the size of their IT staff based on their cloud computing efforts.
IT as we know it, some say, is doomed*. Yet surveys and research conducted in the past year tell a very different story: cloud computing is an intriguing option, more interesting as a way to transform IT into a more efficient business resource than as an off-premise, wash-your-hands-of-the-problem outsourcing option. In fact, a Vanson Bourne survey conducted on behalf of cloud provider Rackspace found that at the beginning of 2009 fewer than one third of small businesses were even considering cloud computing, and only 11 percent of UK mid-sized businesses were using cloud as part of their strategy, though more than half indicated cloud would be incorporated in the future.