Scrubbing away DDoS attacks

Bigger and badder than ever

DDoS attacks are an IT professional's nightmare: they can knock out applications that generate revenue and facilitate communications or, even more fundamentally, take down entire networks. What's worse, they are becoming larger, more frequent and more sophisticated as cybercriminals get more aggressive and creative. All businesses must now expect DDoS attacks, rather than consider them remote possibilities, and prepare accordingly.

One recent study found that in just one year, a whopping 38% of companies providing online services (such as ecommerce and online media) had been on the receiving end of an attack. Such attacks have moved up the network stack over time, climbing from network attacks in the 1990s to session attacks and application layer attacks today. Application attacks at layer 7 now represent approximately half of all attacks. We're also seeing attacks go even further, into business logic, which often exists as a layer above the OSI model.

Protecting your business

So how do we protect our content and applications and keep them running in the face of attacks? With attack traffic reaching new heights (the record set in 2014 was 500Gbps), few organizations have sufficient bandwidth to withstand attacks of that magnitude. Increasingly, businesses are turning to scrubbing services to handle such volumetric attacks.

You can think of a scrubbing service as a first line of defense against DDoS attacks. Such services process your incoming traffic and detect, identify and mitigate threats in real time. The clean traffic is then returned to your site, keeping attacks from reaching your network and enabling your business to stay online and available. Your users are left blissfully unaware.

How scrubbing works

For more detail, let's take a look at how this process works at the new F5 scrubbing center here in Singapore, which is a key piece of our Silverline DDoS Protection hybrid solution.
Part of F5's fully redundant and globally distributed security operations center (SOC), the facility is built with advanced systems and tools engineered to deal with the increasing threat, scale and complexity of DDoS attacks. F5 determines the best scrubbing routes for each segment of traffic and automatically directs traffic through the cloud scrubbing centers for real-time mitigation. As traffic enters a scrubbing center, it is triaged based on various traffic characteristics and possible attack methodologies. Traffic continues to be checked as it traverses the scrubbing center to confirm that the malicious traffic has been fully removed. Clean traffic is then returned to your website with little to no impact on the end user.

Silverline DDoS Protection provides attack mitigation bandwidth capacity of over 2.0 Tbps and scrubbing capacity of over 1.0 Tbps to protect your business from even the largest DDoS attacks. It can run continuously to monitor all traffic and stop attacks from ever reaching your network, or it can be initiated on demand when your site is under DDoS attack.

Active-standby failover for SSL traffic: availability greatly enhanced!

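F5 is the first in the industry to solve a little-known challenge in SSL redundancy

Most customers who use an application delivery controller (ADC) deploy it as a pair, with one unit acting as a backup. Redundant configurations are now the norm for network equipment: when a communication fault occurs, traffic switches from the active unit to the standby unit. What matters is that users feel no impact from that switchover.

One technique for achieving this is connection mirroring. This connection synchronization technology replicates (mirrors) session information to the standby unit, so that sessions established before the switchover survive it and users are not affected. For HTTP, the most widely used protocol on the web, TCP retransmission usually keeps the impact on users minimal even without mirroring. Connection mirroring is instead used for long-lived sessions such as FTP and Telnet. Recently, however, long-lived HTTP connections have become more common, so mirroring is increasingly needed there as well.

Until now, providing this mirroring for SSL, a secure communication protocol, has been extremely difficult and has been a challenge in L4-L7 design. Yet SSL is now so common, in services such as Google and Facebook as well as online shopping, video and online banking, that it is hard to find a service that does not use it. What happens if SSL connections cannot be mirrored when traffic fails over to the backup? In banking, for example, an online stock trade could be interrupted mid-operation and the user asked to log in again. Depending on the trade, the loss to the user could be severe. The same applies to online games: imagine a closely fought match in an online fighting game being cut off and having to be restarted. It is only a game, but a drop in user satisfaction is unavoidable.

F5 will soon release a new version that makes connection mirroring for SSL traffic possible. At present, F5 is the only ADC vendor to offer it. We are developing it with the aim of bringing high availability, easily deployed, to service platforms built on SSL.

For specific availability dates, please contact your F5 sales representative or reseller.

F5 Predicts: Education gets personal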

The topic of education is taking centre stage today like never before. I think we can all agree that education has come a long way from the days when students and teachers were confined to a classroom with a chalkboard. Technology now underpins virtually every sector and education is no exception. The Internet is now the principal mechanism by which students assemble, spread ideas and sow economic opportunities. Education data has become a hot topic in the quest to transform the manner in which students learn. According to Steven Ross, a professor at the Centre for Research and Reform in Education at Johns Hopkins University, the use of data to customise education for students will be the key driver for learning in the future [1].

This technological revolution has resulted in a surge of online learning courses accessible to anyone with a smart device. A two-year assessment of the massive open online courses (MOOCs) created by HarvardX and MITx revealed that there were 1.7 million course entries in the 68 MOOCs [2]. This translates to about 1 million unique participants, who on average engaged with 1.7 courses each. This equity of education is undoubtedly providing vast opportunities for students around the globe and improving their access to education. With more than half a million apps to choose from on platforms such as iOS and Android, both teachers and students can obtain digital resources on any subject. As education progresses in the digital era, here are some points for educational institutions to consider:

Scale and security

The emergence of a smorgasbord of MOOC providers, such as Coursera and edX, has challenged the traditional geographical and technological boundaries of education. Digital learning will continue to grow, driving the demand for seamless and user-friendly learning environments. In addition, technological advancements in education offer new opportunities for government and enterprises.
These will be most effective provided the organisations have the ability to rapidly scale and adapt to an all-new digital world, with information services easily available, accessible and secured. Many educational institutions have just as many users as large multinational corporations and face the issue of scale when delivering applications. The aim is no longer how to get a fast connection for students, but how quickly content can be provisioned and served and how seamless the user experience can be. Traditional methods can no longer provide our customers with the horizontal scaling they need. They require an intelligent and flexible framework to deploy and manage applications and resources. Hence, having an application-centric infrastructure in place to accelerate the roll-out of curriculum to the user base is critical, in addition to securing user access and traffic in the overall environment.

Ensuring connectivity

We live in a Gen-Y world that demands a high level of convenience and speed from practically everyone and everything. This demand for convenience has brought about reform and revolutionised the way education is delivered to students. Furthermore, the Internet of Things (IoT) has introduced a whole new raft of ways in which teachers can educate their students. Whether teaching and learning happens via connected devices such as a Smart Board or an iPad, seamless access to data and content has never been more pertinent. With the increasing reliance on Internet bandwidth, textbooks are no longer the primary means of educating, given that students are becoming more web oriented. The shift helps educational institutes to better personalise the curriculum based on data garnered from students and their work.

Duty of care

As the cloud continues to test and transform the realms of education around the world, educational institutions are opting for a centralised services model, where they can easily select the services they want delivered to students to enhance their learning experience. Educational institutions therefore have a duty of care around the type of content accessed and how students obtain it. They can enforce acceptable use policies by delivering only content that is useful to the curriculum, with strong user identification and access policies in place. Securing the app helps keep malware and viruses out of the institute's environment. From an outbound perspective, educators can be assured that students are only getting the content they are meant to access.

F5 has the answer

BIG-IP LTM acts as the bedrock for educational organisations to provision, optimise and deliver their services. It provides the ability to publish applications out to the Internet quickly and in a timely manner within a controlled and secured environment. F5 crucially provides both the performance and the horizontal scaling required to meet the highest levels of throughput. At the same time, BIG-IP APM gives schools the ability to leverage virtual desktop infrastructure (VDI) applications downstream and to scale up and down without installing costly VDI gateways on site, whilst centralising the security decisions that come with it. As part of this, custom iApps can be developed to rapidly and consistently deliver, as well as reconfigure, the applications that are published out to the Internet in a secure, seamless and manageable way. BIG-IP Application Security Manager (ASM) provides application-layer security to protect vital educational assets, as well as the applications and content being continuously published. ASM allows educational institutes to tailor security profiles that fit like a glove around every application.
It also gives a level of assurance that all applications are delivered in a secure manner.

Education tomorrow

It is hard not to feel the profound impact that technology has on education. Technology in the digital era has created a new level of personalised learning. The time is ripe for the digitisation of education, but the integrity of the process demands that technology be at the forefront, so as to ensure the security, scalability and delivery of content and data. The equity of education that technology offers helps to address factors such as access to education, language, affordability, distance and equality. Furthermore, with the right policies in place, it eliminates geographical boundaries by enabling the mass delivery of quality education.

[1] http://www.wsj.com/articles/SB10001424052702304756104579451241225610478
[2] http://papers.ssrn.com/sol3/papers.cfm?abstract_id=2586847

Three Key Business Drivers in Cloud Adoption

Surprisingly, business owners and line-of-business C-level executives are increasingly becoming the driving force behind cloud adoption in their organizations.

In the last couple of years, businesses around the world have witnessed the shift toward cloud computing. They have seen cloud technology advance from simple uses, such as storing personal data, to more complex ones, such as governments storing public data (healthcare and social security data) and businesses extending the functionality of their existing IT systems. In Asia Pacific, according to The New Language of Cloud Computing, a Frost & Sullivan study in collaboration with F5 Networks, only 9% of enterprises have no plans to use cloud technology at the moment. Of the rest, 23.8% are at the planning stage, 32.6% are in the process of implementing cloud technology, and 34.5% are currently using cloud services.

From a business standpoint, beyond cost savings alone, three key factors are driving the growing adoption of cloud technology:

Business owners: They are becoming the main driving force of cloud adoption as they start to roll out more services through applications to both customers and employees. The New Language of Cloud Computing also reveals the growing involvement of C-level executives other than the CTO or IT director in the cloud service purchase lifecycle. Business-line executives are heavily involved at every stage of that lifecycle, at rates close to those of IT-line executives.

The transformation of IT consumption in business: As enterprises begin to embrace outsourcing of IT workloads as well as cloud computing, the everything-as-a-service IT environment will "disrupt" the way technology is consumed and inspire business model innovation.
Today's businesses are looking to implement new services and functionality using an as-a-service model rather than paying for new infrastructure (hardware and software) and the experts to operate it. They extend their IT functionality through cloud platforms and combine it with their existing on-premises infrastructure.

Internet of things: As adoption of smartphones and other smart devices (such as smart wearables and even smart toothbrushes) proliferates, users demand that the services and data offered through these platforms be highly available and fast to access. For these reasons, many businesses are deploying their applications and storing their data in the cloud.

With the growing popularity and adoption of cloud computing in business, applications are becoming increasingly critical in IT infrastructure. For users, application accessibility has become the one factor that counts, and it can massively affect their experience. To tackle that challenge, enterprises need the means to deliver applications to users in a fast, secure and highly available manner regardless of the deployment model the business has chosen, whether on-premises, hybrid or as-a-service. Specifically for cloud and hybrid deployment models, F5 provides application delivery capabilities through F5 Silverline™ and F5® BIG-IQ™ Cloud, which were developed to help businesses seamlessly configure and automate application delivery services in cloud and hybrid infrastructures.

Opflex: Cisco Flexing its SDN Open Credentials [End of Life]

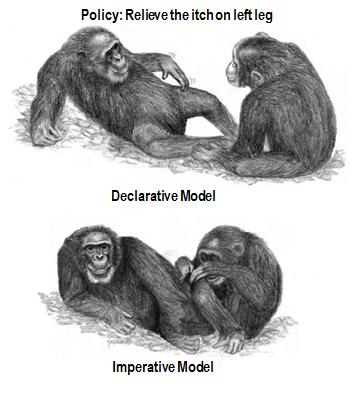

The F5 and Cisco APIC integration based on the device package and iWorkflow is End Of Life. The latest integration is based on the Cisco AppCenter named 'F5 ACI ServiceCenter'. Visit https://f5.com/cisco for updated information on the integration.

There has been a lot of buzz these past few months about OpFlex. As an avid weight lifter, it sounded to me like Cisco was branching out into fitness products with its Internet of Things marketing drive. But nope... OpFlex is actually a new policy protocol for its Application Centric Infrastructure (ACI) architecture. Just as Cisco engineered its own SDN implementation with ACI and the Application Policy Infrastructure Controller (APIC), it engineered OpFlex as its open southbound protocol to relay the application policy, in XML or JSON, from a network policy controller such as the APIC to any end points such as switches, hypervisors and Application Delivery Controllers (ADCs). It is important to note that Cisco is pushing OpFlex as a standard in the IETF in partnership with Microsoft, IBM, Red Hat, F5, Canonical and Citrix.

Cisco is also contributing to the OpenDaylight (ODL) project by delivering a completely open-sourced OpFlex architecture for the APIC and other SDN controllers with the ODL Group Policy plugin. Simply put, this ODL group policy states only the application policy intent instead of communicating every policy implementation detail and command.

Quoting the IETF draft:

"The OpFlex architecture provides a distributed control system based on a declarative policy information model. The policies are defined at a logically centralized policy repository (PR) and enforced within a set of distributed policy elements (PE). The PR communicates with the subordinate PEs using the OpFlex Control protocol. This protocol allows for bidirectional communication of policy, events, statistics, and faults. This document defines the OpFlex Control Protocol."

This was a smart move from Cisco to silence competitors who label its ACI SDN architecture as yet another closed and proprietary architecture. The APIC exposes its northbound interfaces through open APIs for orchestrators such as OpenStack and for applications. The APIC also supports plugins built on open formats and languages such as JSON and Python. However, the APIC pushes its application policy southbound to the ACI fabric end points via a device package. Cisco affirms the openness of the device package, as it is composed of files written in open languages such as XML and Python that anyone can program.

As discussed in my previous ACI blogs, the APIC pushes the Application Network Profile and its policy to the F5 Synthesis fabric and its BIG-IP devices via an F5 device package. This worked well for F5, as it allowed F5 to preserve its Synthesis fabric programmable model with iApps/iRules and the richness of its L4-L7 features.

SDN controllers have been using OpenFlow and OVSDB as open southbound protocols to push the controller policy onto the network devices. OpenFlow is specified by the Open Networking Foundation and the OVSDB management protocol is documented in IETF RFC 7047; both are supported by the OpenDaylight project as southbound interfaces. However, OpenFlow has targeted L2 and L3 flow programmability, which has limited its use for L4-L7 network service insertion with SDN controllers. OpenFlow and OVSDB are used by Cisco ACI's nemesis, VMware NSX: OpenFlow for flow programmability and OVSDB for device configuration.

One of the big differences between OpFlex and OpenFlow lies in their respective SDN models. OpFlex uses a declarative SDN model while OpenFlow uses an imperative SDN model. As the name implies, an imperative OpenFlow SDN controller takes a dictatorial approach: a centralized controller (or cluster of controllers) delivers detailed and complex instructions downstream to the network devices' control plane to show them how to deploy the application policy onto their data plane.
With OpFlex, the APIC or network policy controller takes a more collaborative approach by distributing the intelligence to the network devices. The APIC declares its intent via its defined application policy and relays the instructions downstream to the network devices, trusting them to deploy the application policy requirements onto their data plane based on their own control plane intelligence. With the OpFlex declarative model, the network devices cooperate with the APIC while retaining control of their control plane; with OpenFlow and the imperative SDN model, the network devices are mere extensions of the SDN controller.

According to Cisco, OpFlex and its declarative model have the following merits:

1- The APIC declarative model would be more resilient and less dependent on APIC availability, as an APIC or APIC cluster failure will not impact the operation of the ACI switch fabric. Traffic will still be forwarded and F5 L4-L7 services applied until the APIC comes back online and a new application policy is pushed. If an OpenFlow SDN controller or controller cluster were to fail, the network would no longer be able to forward traffic and apply services based on the application policy requirements.

2- A declarative model would be a more scalable and distributed architecture, as it allows the network devices to determine how to implement the application policy and to extend the list of their supported features without requiring additional resources from the controller.

On a light note, it is safe to assume that the rise of the planet of network devices against their SDN controller overlords is not likely to happen any time soon, and that the controller instructions will be implemented in both cases in order to meet the application policy requirements. Ultimately, this is not an OpFlex vs OpenFlow battle, and the choice between the OpenFlow and OpFlex protocols will be in the hands of customers and their application policies.
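The difference between the two models can be sketched in a few lines of Python. This is only an illustration under stated assumptions, not OpFlex or OpenFlow themselves: the `Policy` fields, the rule strings and all class and function names are hypothetical. What it tries to show is where the "how" lives. In the imperative case the controller renders every command and the device just executes it; in the declarative case the device receives the intent, keeps it, and renders it locally.

```python
from dataclasses import dataclass, field
from typing import List, Optional


@dataclass(frozen=True)
class Policy:
    """Declared intent: what the application needs, not how to configure it."""
    app: str
    port: int
    load_balance: bool


def render(policy: Policy) -> List[str]:
    """Turn intent into device rules. The rule syntax here is made up."""
    rules = [f"permit tcp any any eq {policy.port}"]
    if policy.load_balance:
        rules.append(f"virtual-server {policy.app} port {policy.port}")
    return rules


@dataclass
class ImperativeDevice:
    """Executes only the explicit commands the controller sends."""
    rules: List[str] = field(default_factory=list)

    def run(self, command: str) -> None:
        self.rules.append(command)


@dataclass
class DeclarativeDevice:
    """Receives intent and decides locally how to implement it."""
    policy: Optional[Policy] = None
    rules: List[str] = field(default_factory=list)

    def apply(self, policy: Policy) -> None:
        self.policy = policy         # the intent is retained on the device
        self.rules = render(policy)  # rendering happens on the device


def imperative_push(device: ImperativeDevice, policy: Policy) -> None:
    """Imperative model: the controller renders and ships each command."""
    for command in render(policy):
        device.run(command)


if __name__ == "__main__":
    web = Policy(app="web", port=443, load_balance=True)
    imperative, declarative = ImperativeDevice(), DeclarativeDevice()
    imperative_push(imperative, web)
    declarative.apply(web)
    # Both devices end up with the same rules; only the division of labour differs.
    print(imperative.rules == declarative.rules)
```

In this toy version both paths share one `render` function for brevity. In a real deployment the point of the declarative model is that each device type would carry its own rendering logic, which the controller never needs to know about.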
We all just need to keep in mind that the goal of SDN is to keep a centralized and open control plane for deploying applications.

F5 welcomes OpFlex and is working to implement it as an agent for its BIG-IP physical and virtual devices. With OpFlex, the F5 BIG-IP physical or virtual devices will be responsible for implementing the L4-L7 network services defined by the APIC Application Network Profile onto the ACI switch fabric. The OpFlex protocol will enable F5 to extend its Synthesis stateful L4-L7 fabric architecture to the Cisco ACI stateless network fabric.

To quote Soni Jiandani, a founding member of Cisco ACI:

"The declarative model assumes the controller is not the centralized brain of the entire system. It assumes the centralized policy manager will help you in the definition of policy, then push out the intelligence to the edges of the network and within the infrastructure so you can continue to innovate at the endpoint. Let's take an example. If I am an F5 or a Citrix or a Palo Alto Networks or a hypervisor company, I want to continue to add value. I don't want a centralized controller to limit innovation at the endpoint. So a declarative model basically says that, using a centralized policy controller, you can define the policy centrally and push it out and the endpoint will have the intelligence to abide by that policy. They don't become dumb devices that stop functioning the normal way because the intelligence solely resides in the controller."

A DNS DDoS Attack Happened

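Adapted from Lori MacVittie's "F5 Friday: So That DNS DDoS Thing Happened"

Like every other public service, DNS is vulnerable. That does not mean DNS itself has vulnerabilities; it means that, by its purpose and nature, the service must be open and accessible to the public. DNS exists to provide a way to locate you and your services by whatever digital access method is used, so it cannot hide behind access control or other traditional security mechanisms. It is therefore no surprise that DNS appears in the news as a prime target of the damaging DDoS attacks occurring around the world.

Even so, as has been widely reported, the technical investment these attacks require is very small, while the scale of the damage is astonishing. About 30 friends with 30 Mbps internet connections would be enough to replicate such an attack.

Anyone who has spent some time in security knows that the imbalance between request size and response size inherent in many protocols lets attackers get the result they want with little effort, and DNS is one such protocol. In security taxonomy these are called "amplification" attacks. They are not new: the "Smurf attack", which used ICMP (Internet Control Message Protocol, which carries status and error information on behalf of unreliable IP), first appeared in the 1990s and did its damage using broadcast addresses. DNS amplification works on the same premise: queries are small and responses are large. Both ICMP and DNS amplification attacks are highly effective from the attacker's point of view because they rely on connectionless transport (DNS typically runs over UDP, and ICMP rides directly on IP), which requires no handshake (a method in which each side confirms the signals it receives before proceeding) and makes no attempt to verify that the source IP address on a request is the address that actually sent it. That makes them ideal for spoofing, with far less effort than a connection-oriented protocol such as TCP would require.

To understand how severe the imbalance between request and response traffic is in this attack: if the request was "dig ANY ripe.net @ <OpenDNSResolverIP> +edns0=0 +bufsize=4096", the request is only 36 bytes, while the response typically runs to about 3 kilobytes, on the order of 100 times the size of the request. In the DNS DDoS attack on CloudFlare, a US cloud services company, about 30,000 DNS resolvers were used, each sending 2.5 Mbps of traffic at the target. What makes this attack remarkable, and different from others, is that the targeted servers were sent a huge volume of DNS responses (not queries), none of which they had ever asked for.

The problem is DNS, and the fact that DNS is open to everyone. Restricting responses would, on its face, unintentionally block legitimate client resolvers and so amount to a self-inflicted denial of service (DoS), which is unacceptable. Switching requests to TCP, to use the handshake to detect and block attack attempts, is certainly an option, but it comes with a serious performance penalty.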
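The figures quoted above can be checked with a few lines of arithmetic. The sketch below uses only numbers from the text (36-byte query, roughly 3-kilobyte response, 30,000 resolvers at 2.5 Mbps, 30 friends at 30 Mbps); the function and variable names are illustrative, and treating 3 kilobytes as 3,000 bytes and every friend's uplink as fully saturated with spoofed queries are simplifying assumptions.

```python
def amplification_factor(request_bytes: int, response_bytes: int) -> float:
    """Bytes delivered to the victim per byte the attacker sends."""
    return response_bytes / request_bytes


def aggregate_gbps(sources: int, mbps_each: float) -> float:
    """Total traffic in Gbps from `sources` senders at `mbps_each` Mbps."""
    return sources * mbps_each / 1000.0


# 36-byte query, about 3 KB response (assumed to be 3000 bytes here).
factor = amplification_factor(36, 3000)

# 30,000 open resolvers, each sending 2.5 Mbps of responses to the target.
resolver_gbps = aggregate_gbps(30_000, 2.5)

# 30 friends, each saturating a 30 Mbps uplink with spoofed queries.
friends_query_gbps = aggregate_gbps(30, 30)
friends_reflected_gbps = friends_query_gbps * factor

if __name__ == "__main__":
    print(f"amplification factor: about {factor:.0f}x")
    print(f"traffic from resolvers: {resolver_gbps:.1f} Gbps")
    print(f"spoofed queries sent: {friends_query_gbps:.1f} Gbps")
    print(f"reflected at the victim: about {friends_reflected_gbps:.0f} Gbps")
```

Under these assumptions the two routes agree: under 1 Gbps of spoofed queries, amplified roughly 83 times, lands in the same range as 30,000 resolvers each emitting 2.5 Mbps, which is why such a small investment can produce so large an attack.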
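F5's BIG-IP DNS solutions are optimized to avoid this trade-off, but most DNS infrastructure is not, and so it would slow down a process that many users already perceive as "too slow", especially those browsing from mobile devices.

So we have no choice but to use UDP, and as a result we will be attacked. That does not mean we have to sit back and take it. There are ways to defend against this type of attack, and against others still lurking in the shadows.

1. Drop invalid query requests (and inspect responses too)

It is important to verify that the queries clients send are ones the DNS servers are willing and able to answer. A DNS firewall or other security product can validate DNS queries and ensure the server responds only to the queries it is configured to answer. Because the internet keeps evolving, the DNS protocol as designed includes many features that are no longer used: various query types, flags and other settings. You might be surprised at the kinds of parameters that can appear in a DNS request and how they can be manipulated. For example, DNS type 17 = RP is the person responsible for the record. There are also ways to put malicious data into many of these fields to disrupt DNS communication. A DNS firewall can inspect these queries and drop requests that do not conform to DNS standards or that the server is not configured to answer.

But as the attack above demonstrated, queries are not the only thing to watch; responses need attention too. F5's DNS firewall includes stateful inspection of responses, which means unsolicited DNS responses are dropped immediately. This does not reduce the load on bandwidth, but it does keep servers from being overwhelmed by processing unnecessary responses.

F5's DNS Services include industry-leading DNS Firewall services

2. Ensure sufficient capacity

Maintaining a resilient, always-available DNS infrastructure requires considering the capacity to handle DNS queries. Most organizations recognize this and have adopted solutions that provide DNS availability and scalability. Often these are simply caching DNS load-balancing solutions, which carry their own risks, including vulnerability to attacks that use random, unknown host names. A caching DNS solution only caches responses returned from the authoritative source, so for unknown host names it must still query the origin server. When the number of queries is enormous, the origin server is overloaded regardless of the cache's capacity.

A high-performance, authoritative, in-memory DNS server such as F5 DNS Express (part of F5 BIG-IP Global Traffic Manager) shields the origin server from overload.

3. Defend against hijacking

The vulnerability of DNS to hijacking and poisoning is very real. In 2008, a researcher, Evgeniy Polyakov, showed that it was possible to poison the cache of a patched DNS server running current code in under 10 hours. That is simply unacceptable in today's internet-centric world, which ultimately depends on the validity and integrity of DNS.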
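Returning briefly to the stateful response inspection described under point 1: the idea of dropping unsolicited responses can be sketched as a table of outstanding queries. This is a minimal illustration only, not how F5's DNS firewall is implemented; the class name, the match key (server address, transaction ID, query name) and the five-second timeout are all assumptions made for the example.

```python
from typing import Dict, Tuple

# (server_ip, transaction_id, lowercased query name)
QueryKey = Tuple[str, int, str]


class ResponseTracker:
    """Accepts a DNS response only if it answers a query we actually sent."""

    def __init__(self, ttl_seconds: float = 5.0) -> None:
        self.ttl = ttl_seconds
        self._pending: Dict[QueryKey, float] = {}  # key -> expiry time

    def note_query(self, server_ip: str, txid: int, qname: str, now: float) -> None:
        """Record an outbound query so its response can be matched later."""
        self._pending[(server_ip, txid, qname.lower())] = now + self.ttl

    def accept_response(self, server_ip: str, txid: int, qname: str, now: float) -> bool:
        """True only for a timely response to a recorded query; each query matches once."""
        expires = self._pending.pop((server_ip, txid, qname.lower()), None)
        return expires is not None and now <= expires


if __name__ == "__main__":
    tracker = ResponseTracker()
    tracker.note_query("192.0.2.53", 4242, "example.com", now=0.0)
    print(tracker.accept_response("192.0.2.53", 4242, "example.com", now=0.1))   # solicited
    print(tracker.accept_response("198.51.100.7", 1, "ripe.net", now=0.2))       # unsolicited
```

The clock is passed in as `now` so the behaviour is easy to test. As the text notes, a check like this spares the server the cost of processing junk responses but does nothing for the bandwidth the flood consumes on the way in.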
The best solution for this and other vulnerabilities that undermine the integrity of DNS information is DNSSEC. DNSSEC was introduced specifically to correct the open, trusting nature of the original DNS protocol. DNS answers are signed with keys that prove the answer was not tampered with and that it came from a trusted DNS server. F5 BIG-IP Global Traffic Manager (GTM) not only supports DNSSEC but does so without breaking global server load balancing techniques.

As a general rule, make sure you are not unintentionally running an open resolver.

The bottom line is that DNS should be implemented with a stronger, ICSA-certified solution that does not act as an open resolver, and for that F5 is the best choice.

The "gateway" approach to adopting the new web protocol HTTP 2.0 early

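From HTTP to SPDY, and now to HTTP 2.0: a range of efforts is under way in anticipation of the latest web protocol, optimized for evolving web services.

Applications, devices and cybersecurity are evolving at a dizzying pace, yet HTTP, the protocol that carries most internet traffic, has changed little since its first version. This is a surprisingly little-known fact.

According to the IETF, the internet standards body, the original HTTP 1.0 was standardized in 1996, and its successor, HTTP 1.1, in 1997. That was before the company called Google was born, and before the launch of the i-mode service that took Japan by storm. It gives a sense of how long the specification has gone without major change. Since then, as everyone knows, the internet has spread explosively and traffic volumes have grown steadily.

The HTTP 2.0 specification is scheduled to be standardized by the IETF in 2015. It is unlikely that many enterprises will deploy it immediately, but infrastructure for applications and services with sustained, very high access volumes stands to benefit most, and that is expected to motivate serious evaluation.

A few years ago, when deployment of SPDY, the predecessor of HTTP 2.0, began, F5 Networks was the first to offer a migration solution. F5's secure, stable ADC services and the gateway function of BIG-IP made a smooth transition possible by consolidating the work of making servers SPDY-capable, which is the biggest barrier to bringing a service infrastructure to SPDY.

This SPDY gateway approach is a good example of how ADCs contribute in many situations to building infrastructure that adapts flexibly to a rapidly changing business environment. From terminating SSL connections on the ADC and improving performance, to SPDY, and now HTTP 2.0, the technologies differ but the underlying approach is consistent. When migration to a new system becomes urgent, large-scale changes to IT equipment tend to be costly in money, time and people. With the added value of a BIG-IP HTTP 2.0 gateway, infrastructure can be made HTTP 2.0-capable quickly and with little investment, with control over settings such as the maximum number of requests and request prioritization.

F5 will continue to build on this ADC-specific value together with its customers. Customers using F5 solutions may find that they are already prepared for new technologies yet to come, without even noticing.

F5 BIG-IP is available through an early access program that supports the latest HTTP 2.0 draft, and general availability is planned for when the standard is finalized.

For the English version of this article, please go here.

The full proxy makes a comeback!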

Proxies are hardware or software solutions that sit between an End-user and an Organization's server and mediate communication between the two. The term "proxy" is most often heard in conjunction with surfing the Internet. For example, an End-user's requests are sent to the Organization's server, and its responses are sent back to the End-user, via the proxy. The End-user and the web server are never connected directly.

There are different kinds of proxies, but they fall mainly into one of two categories: half proxies and full proxies.

There are two types of half proxy. The deployment-focused half proxy is associated with a direct server return (DSR) configuration. In this case, requests are forwarded through the device; the responses, however, do not return through the device but are sent directly to the client. With just one direction of the connection (incoming) passing through the proxy, this configuration improves performance, particularly with streaming protocols. The delayed-binding half proxy, on the other hand, examines a request before determining where to send it. Once the proxy determines where to route the request, the connection between the client and the server is "stitched" together. Because the initial TCP handshake and first requests pass through the proxy before traffic is forwarded without interception, this configuration provides additional functionality.

Full proxies terminate and maintain two separate connections: one with the End-user client and a completely separate one with the server. Because the full proxy is an actual protocol endpoint, it must fully implement the protocols as both a client and a server (a packet-based proxy design does not). Full proxies can look at incoming requests and outbound responses and can manipulate both if the solution allows it. Intelligent as this configuration may be, performance can be compromised.
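To make the "two separate connections" idea concrete, here is a minimal sketch of full-proxy behaviour in Python. It deliberately avoids real sockets: the server side is modelled as a callable, and the header names it strips or adds (`X-Internal-*`, `Via: 1.1 sketch-proxy`, `Server`) are assumptions chosen for illustration. The point is that the request the server sees is one the proxy built itself, and the response the client sees is one the proxy has inspected and rewritten.

```python
from typing import Callable, List

# The server side of the proxy, modelled as "send bytes, get bytes back".
Backend = Callable[[bytes], bytes]

CRLF = b"\r\n"


def full_proxy(client_request: bytes, backend: Backend) -> bytes:
    """Terminate the client request, issue a new one to the server, filter the reply."""
    # Client side: the proxy is the protocol endpoint and parses the request.
    head, _, body = client_request.partition(CRLF + CRLF)
    lines: List[bytes] = head.split(CRLF)
    request_line, headers = lines[0], lines[1:]

    # Inbound manipulation: drop headers the client should not be able to set.
    headers = [h for h in headers if not h.lower().startswith(b"x-internal-")]

    # Server side: a separate request, authored by the proxy acting as a client.
    headers.append(b"Via: 1.1 sketch-proxy")
    server_request = CRLF.join([request_line, *headers]) + CRLF + CRLF + body
    server_response = backend(server_request)

    # Outbound manipulation: hide details of the server from the client.
    r_head, _, r_body = server_response.partition(CRLF + CRLF)
    r_lines = [l for l in r_head.split(CRLF) if not l.lower().startswith(b"server:")]
    return CRLF.join(r_lines) + CRLF + CRLF + r_body


if __name__ == "__main__":
    def demo_backend(request: bytes) -> bytes:
        return b"HTTP/1.1 200 OK\r\nServer: secret/1.0\r\nContent-Length: 2\r\n\r\nok"

    reply = full_proxy(b"GET / HTTP/1.1\r\nHost: example.com\r\n\r\n", demo_backend)
    print(reply.decode())
```

A half proxy in a DSR configuration would correspond to skipping the outbound half entirely: the response would travel from the server to the client without ever passing through this function, which is where its performance advantage comes from.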
In the early 1990s, the very first firewall was a full proxy. However, the firewall industry, enticed by the faster technology and greater flexibility of packet-filtering firewalls, soon cast the full proxy to the back room for various reasons, including having too many protocols to support. Each time any of the protocols changed, the proxy had to change too, and maintaining the different proxies proved too difficult.

The much slower CPUs of the 90s were another factor. Performance became an issue when the Internet exploded with End-users and full proxy firewalls were not able to keep up. Eventually firewall vendors had to switch to other technologies such as the stateless firewall, which uses high-speed hardware like ASICs and FPGAs. These could process individual packets faster, even though they were inherently less secure.

Thankfully, technology has evolved since then, and today's modern firewalls are built atop a full proxy architecture, enabling them to be active security agents. A digital Security Air Gap in the middle allows the full proxy to inspect the data flow instead of just funneling it, which provides the opportunity to apply security to the data in many ways, including protocol sanitization, resource obfuscation and signature-based scanning.

Previously, security and performance were usually mutually exclusive: implementing security capabilities meant lower performance. With today's technology, full proxies are moving towards tightened security at a good performance level. The Security Air Gap aids that, and it has proven itself to be a secure and viable solution for organizations to consider. With this inherent Security Air Gap tier, a full proxy now provides a secure and flexible architecture that supports organizations in dealing with today's volatility.

F5 IoT Ready Platform

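This blog is adapted from the original post here.

Internet of Things (IoT) applications, and the applications that support IoT services, are changing the way we live. Research shows the sports and health segment growing at 41% a year through 2017, to nearly 1.7 million devices. As more and more devices, garments and sensors become commonplace over the next few years, the resulting changes in how we work will be a major challenge.

Because the IoT era needs more applications to run, and that traffic must be served and services provisioned more quickly, a robust, scalable and intelligent infrastructure becomes essential. IT professionals are tasked with designing and building infrastructure that is ready for the challenges ahead, including IoT. To meet the needs of security, intelligent routing and analytics, the networking layers need to be fluent in the language the devices use. Understanding these protocols within the network allows traffic to be secured, prioritized and routed accordingly, which in turn enables better scale and better management of device traffic and data.

Because F5 application services share a common control plane (the F5 platform), we have simplified the deployment and optimization of IoT application delivery services. With the flexibility that Software Defined Application Services (SDAS) provide, we can rapidly provision IoT application services across data center and cloud computing environments, saving the time and cost of deploying new applications and architectures.

The appeal of SDAS is that it can provide holistic services, steering IoT traffic to the most appropriate data center or hybrid cloud environment based on the IoT device's service request, its context and the health of the application. As a result, customers, employees and the IoT devices themselves all get the most secure and fastest service experience.

F5's high-performance services fabric supports traditional and emerging underlay networks. It can be deployed on top of traditional IP and VLAN-based networks, works with SDN overlay networks using NVGRE or VXLAN (as well as other less well-known overlay protocols), and integrates with SDN network fabrics from vendors such as Cisco/Insieme, Arista and BigSwitch.

Hardware, software or cloud

The services fabric model supports consolidating many services onto a common platform that can be deployed in hardware, software or the cloud. This lets us establish standardized management and deployment processes that support continuous service delivery and reduce the operational burden. By sharing service resources and using fine-grained multi-tenancy, we can dramatically lower the cost of individual services, so that all IoT applications, regardless of size, can benefit from services that improve security, reliability and performance.

The F5 platform:

- Provides network security to protect against inbound attacks
- Offloads SSL to improve application server performance
- Not only understands the application but also knows when it is having problems
- Not only ensures the best end-user experience but also provides fast and efficient data replication

F5 cloud solutions can automate the deployment and orchestration of IoT application delivery services across traditional and cloud infrastructures, and dynamically redirect workloads to the most appropriate location. These application delivery services ensure a predictable IoT experience, replication of security policies and workload agility.

F5 BIG-IQ™ Cloud can federate the management of F5 BIG-IP® solutions across traditional and cloud infrastructures, helping organizations deploy and manage IoT delivery services in a fast, consistent and repeatable manner regardless of the underlying infrastructure. In addition, BIG-IQ Cloud can integrate or interact with existing cloud orchestration engines (such as VMware vCloud Director) to streamline the overall application deployment process.

Extend, scale and secure

F5 cloud solutions offer rapid Application Delivery Network provisioning, allowing organizations to expand IoT delivery capability across data centers, private or public, in far less time. As a result, organizations can effectively:

- Extend the data center to the cloud to support IoT deployments.
- Scale IoT applications beyond the data center when needed.
- Secure and accelerate the connection between IoT and the cloud.

When maintenance is needed, organizations no longer have to configure applications manually to redirect traffic. Instead, IoT applications can be proactively redirected to an alternate data center before the maintenance takes place.

For continuous DDoS protection, the F5 Silverline DDoS Protection service, delivered through the F5 Silverline cloud platform, can detect and mitigate even the largest volumetric DDoS attacks before they endanger your IoT network.

The BIG-IP platform is independent of application and location, which means it does not matter what type of application it is or where the application resides. You simply tell the BIG-IP platform where to find the IoT application, and the BIG-IP platform takes care of delivery.

F5 Synthesis brings all of this together, giving cloud and application providers and mobile network operators the architecture they need to ensure IoT application performance, reliability and security.

Connected devices will keep developing, moving us into a new world in which almost anything generates a data stream. Although there is still much to consider, organizations that proactively take on the challenge and adopt new approaches to enable an IoT-ready network will open a clear path to success.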
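An IoT-ready environment lets IT begin to capture the benefits of this shift without a wholesale replacement of existing technology. It also gives IT the breathing room it needs to ensure that the continuing surge of connected devices does not cripple the infrastructure. This process ensures that the benefits can be realized without having to compromise on operational governance to keep IoT network, data and application resources available and secure. It also means IT can manage IoT services rather than boxes.

However an IoT-ready infrastructure is built, it is a transformational journey for both IT and the business. It is not a change to be taken lightly or made without a long-term strategy. Deployed properly, F5’s IoT-ready infrastructure can bring significant benefits to an organization and its employees.

Ready or not, HTTP 2.0 is here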

From HTTP to SPDY, to HTTP 2.0. The introduction of a new protocol optimized for today’s evolving web services opens the door to a world of possibilities. But are you ready to make this change?

While the breakneck speed with which applications, devices and servers are evolving is common knowledge, few are aware that the HTTP protocol, such a key part of the modern internet, has seen only a few changes of significance since its inception.

HTTP – behind the times?

According to the Internet Engineering Task Force (IETF), HTTP 1.0 was first officially introduced in 1996. The following version, HTTP 1.1, which is still the version most commonly used today, was officially released in 1997. 1997! And we’re still using it! To give you some idea of how old this makes HTTP 1.1 in terms of the development of the internet: in 1997 there was no Google! There was no PayPal! It goes without saying, of course, that since 1997 both the internet and the amount of traffic it has to handle have grown enormously.

Standardization of HTTP 2.0 by the IETF is scheduled for 2015. It’s unrealistic to expect most businesses to deploy HTTP 2.0 immediately. However, given the clear benefit of HTTP 2.0, which is to enable infrastructure to handle huge volumes of traffic to applications and services, many organizations will soon implement the new protocol.

F5: Ready for SPDY, ready for HTTP 2.0

The initial draft of HTTP 2.0 was based on the SPDY protocol. When SPDY was first introduced some years ago, F5 was the first company to provide a solution that enabled businesses to “switch over” to SPDY: the SPDY Gateway. With the stable and secure ADC and gateway services of BIG-IP, SPDY Gateway allowed our enterprise customers to overcome the biggest hurdle in the transition, which is adopting the SPDY protocol on the server end. Organizations have the option to upgrade their servers to support the protocol, or to use the gateway to handle the connection.
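To make the gateway option concrete, here is a minimal sketch of the idea, not F5's implementation: the gateway picks the newest protocol a client offers, while the connection to the unchanged origin server stays on HTTP 1.1. The names `GATEWAY_PREFERENCE`, `BACKEND_PROTOCOL`, `Route`, `negotiate` and `plan_route` are hypothetical, and the protocol strings (`h2`, `spdy/3.1`, `http/1.1`) are assumed here to be the identifiers exchanged during TLS protocol negotiation; the post itself does not specify them.

```python
from dataclasses import dataclass
from typing import Optional, Sequence

# Protocols this hypothetical gateway accepts from clients, most preferred first.
GATEWAY_PREFERENCE = ("h2", "spdy/3.1", "http/1.1")

# Protocol the unchanged origin servers are assumed to speak.
BACKEND_PROTOCOL = "http/1.1"


@dataclass(frozen=True)
class Route:
    client_protocol: str   # spoken between client and gateway
    backend_protocol: str  # spoken between gateway and origin server


def negotiate(client_offers: Sequence[str],
              preference: Sequence[str] = GATEWAY_PREFERENCE) -> Optional[str]:
    """Return the first gateway-preferred protocol that the client also offers."""
    for protocol in preference:
        if protocol in client_offers:
            return protocol
    return None


def plan_route(client_offers: Sequence[str]) -> Optional[Route]:
    """Choose the client-side protocol; the backend side stays on HTTP 1.1."""
    chosen = negotiate(client_offers)
    if chosen is None:
        return None  # no protocol in common, so the connection is refused
    return Route(client_protocol=chosen, backend_protocol=BACKEND_PROTOCOL)


if __name__ == "__main__":
    for offers in (["h2", "http/1.1"], ["spdy/3.1", "http/1.1"], ["http/1.1"]):
        print(offers, "->", plan_route(offers))
```

Because the backend protocol is fixed in one place, the servers behind the gateway can be upgraded later without any change on the client side.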
The gateway option gives businesses the time necessary to make a smooth and gradual transition from HTTP 1.1 to SPDY on the server side. SPDY Gateway is just one example of the many ways an ADC can contribute to a flexible and agile infrastructure for businesses. One well-known ADC use case with the SPDY protocol is SSL termination. Similarly, in the transition to HTTP 2.0, an HTTP 2.0 gateway will help organizations plan their adoption and migration to accommodate the demands of HTTP 2.0.

Thinking ahead

F5 is always at the forefront of technology evolution. HTTP 2.0 Gateway is just one example of F5’s dedication to the evolution of Application Delivery technology, guiding our customers and making transitions to new technology as simple as possible. Currently, F5’s BIG-IP supports HTTP 2.0 under our early access program. We expect general availability after HTTP 2.0 standardization is completed in 2015.

For a Japanese version of this post, please go here.