Enabling Azure Active Directory Tenant Restrictions with F5

Microsoft's Azure Active Directory (Azure AD) is the largest cloud-based enterprise directory in the world. According to data presented at the Microsoft Ignite conference, it has more than 750 million user accounts and handles more than 1.3 billion authentications per day. Azure AD powers access to Microsoft's Office 365 application suite, so every customer that uses Office 365 or the Azure cloud is using Azure AD.

Of course, with the adoption of SaaS apps such as Office 365, enterprises face challenges with data security and access restrictions. For example, many customers in compliance-intensive verticals need stricter controls over which Azure AD identities can access Office 365 from within the boundaries of the corporate network (or even outside of it, from corporate-owned assets). For many years customers struggled with that challenge, as Microsoft did not have a native solution to address it. For example, take a look at how one Office 365 customer frames the question about their need to restrict access to Office 365 from their network:

Fortunately, Microsoft has listened to its customers and has recently released the Tenant Restrictions option for Azure AD. Microsoft says it developed this feature with extensive input from customers, especially those in the financial, healthcare, and pharmaceutical industries.

From the description that Microsoft provides, the implementation is similar to Google's, but it requires two headers: Restrict-Access-To-Tenants and Restrict-Access-Context. This approach appears to be more sophisticated, because it not only lets the list of permitted tenants be customized to meet the organization's access needs, but also specifies the Azure AD anchor: the tenant that is setting these restrictions.
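To make the two-header idea concrete, here is a minimal Python sketch of what a header-inserting proxy does. It is not the F5 configuration from this article: the function name, the tenant values, and the list of sign-in hostnames are assumptions for illustration, and the hostnames should be checked against Microsoft's current Tenant Restrictions documentation.

```python
from typing import Dict, List

# Assumed hostnames of Microsoft's sign-in service; verify against
# Microsoft's documentation before relying on this list.
AUTH_HOSTS = {
    "login.microsoftonline.com",
    "login.microsoft.com",
    "login.windows.net",
}


def add_tenant_restriction_headers(
    host: str,
    headers: Dict[str, str],
    permitted_tenants: List[str],
    directory_id: str,
) -> Dict[str, str]:
    """Return a copy of headers, adding both restriction headers for sign-in traffic only."""
    result = dict(headers)
    hostname = host.split(":")[0].lower()  # drop an optional :port suffix
    if hostname in AUTH_HOSTS:
        # Comma-separated list of tenants that users may sign in to.
        result["Restrict-Access-To-Tenants"] = ",".join(permitted_tenants)
        # Directory ID of the tenant that is setting the restriction (the anchor).
        result["Restrict-Access-Context"] = directory_id
    return result


if __name__ == "__main__":
    # Hypothetical tenant name and directory ID.
    print(add_tenant_restriction_headers(
        "login.microsoftonline.com:443",
        {"User-Agent": "demo"},
        ["contoso.onmicrosoft.com"],
        "00000000-0000-0000-0000-000000000000",
    ))
```

Requests to any other host pass through unchanged, which keeps the restriction scoped to authentication traffic.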
Since the directory ID is not commonly accessible to anyone but the tenant admin, this feature provides greater security against abuse or misuse by unauthorized parties.

Below, you can find a sample Microsoft diagram and flow of how the Tenant Restrictions option works, where I took the liberty of placing an F5 device in place of the generic proxy that handles header insertion. Of course, your deployment of proxies or F5 devices on your network might differ, but this is a starting point for explaining how F5 helps facilitate the implementation of this feature.

F5 already provides a broad range of unique solutions for enhancing security for Office 365. In addition, the need for overall SSL visibility and dynamic service chaining of outbound traffic is driving rapid adoption of new F5 solutions such as SSL Orchestrator and Secure Web Gateway. All of this aligns well with enabling customers to implement the new Azure AD Tenant Restrictions using their F5 investment by making a small change to their existing configuration.

For example, to implement Azure AD Tenant Restrictions in my Secure Web Gateway demo environment, I added a simple macro that identifies traffic destined for Microsoft's authentication service and inserts the required headers.

And here's how I am inserting the required headers:

Of course, if you're running SSL Orchestrator, you can implement similar functionality within that configuration. I'm really excited about Microsoft's release of the Tenant Restrictions feature, as it will drive increased adoption and better security for enterprises using Office 365, and I hope that many of our existing and future customers will leverage the appropriate F5 product to help them easily achieve a better security posture with Office 365.

SSL Orchestrator Use Case: SWGaaS

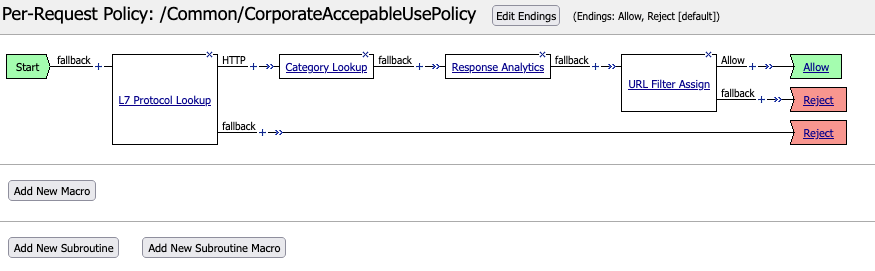

Introduction

BIG-IP 16.0 with SSL Orchestrator 9.0 supports running Secure Web Gateway (SWG) "as a Service" inside the Service Chain. This allows you to take an existing F5 SWG solution and migrate or move it to the same BIG-IP as SSL Orchestrator.

Typical SWG features include:

- User authentication (not covered here)
- Enforcement of an Acceptable Use Policy (AUP)
- Website category database (google.com = Search Engines)
- Logging and Reporting (not covered here)

A typical SWG deployment will have a Per-Session Policy that handles authentication, then a Per-Request Policy that enforces the AUP.

User authentication (not covered here)

Refer to this DevCentral article for more information on this topic.

Enforcement of an Acceptable Use Policy (AUP)

A Per-Request Policy is used to enforce the AUP. You can find this in the Configuration Utility under Access > Profiles / Policies > Per-Request Policies. Click Edit for the Per-Request Policy and a new window like this should open:

This policy does a Protocol Lookup to determine if the content is HTTP, then performs a Category Lookup based on the host header in the URI. Response Analytics will check for malicious content and pass that information on to the URL Lookup Agent. The Category is compared to the URL Filter, which maps URL categories to Allow/Deny actions. As a final result the request is either Allowed or Denied (Reject).

Note: In a per-request SWG policy you would typically have a Protocol Lookup for both HTTP and HTTPS. In this case, however, SSL Orchestrator performs the SSL decryption, so the SWG Service receives plain-text HTTP content. Therefore, this SWG policy is ready to be used with SSL Orchestrator.

Website category database (google.com = Search Engines)

The URL Filter is configured from Access > Secure Web Gateway > URL Filters. Select CorporateURLFilter in this example.
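The Category Lookup and URL Filter steps described above can be modelled in a few lines of Python. This is only a sketch of the decision logic, not how APM implements it: the tiny category table, the "Uncategorized" default, and the rule that unlisted categories are allowed are all assumptions for illustration.

```python
from typing import Dict

# Hypothetical stand-in for the website category database.
CATEGORY_DB: Dict[str, str] = {
    "google.com": "Search Engines",
    "amazon.com": "Shopping",
    "walmart.com": "Shopping",
}

# Hypothetical URL filter: categories mapped to actions.
# Anything not listed is allowed (an assumption of this sketch).
URL_FILTER: Dict[str, str] = {
    "Games": "Deny",
    "Shopping": "Deny",
}


def category_lookup(host: str) -> str:
    """Map a Host header value to a category, trying parent domains in turn."""
    name = host.split(":")[0].lower()
    labels = name.split(".")
    for i in range(len(labels) - 1):
        candidate = ".".join(labels[i:])
        if candidate in CATEGORY_DB:
            return CATEGORY_DB[candidate]
    return "Uncategorized"


def evaluate_request(host: str) -> str:
    """Return 'Allow' or 'Reject' for a request, as the per-request policy would."""
    action = URL_FILTER.get(category_lookup(host), "Allow")
    return "Allow" if action == "Allow" else "Reject"


if __name__ == "__main__":
    for site in ("google.com", "www.amazon.com"):
        print(site, category_lookup(site), evaluate_request(site))
```

Keeping the category database separate from the filter mirrors the split in the product: the database classifies sites, while the filter holds the organization's policy.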
Selecting the filter opens the Category editor. Different Categories and sub-categories are available to make Allow or Deny decisions. In this example the Games and Shopping categories have been set to Deny.

Logging and Reporting (not covered here)

Refer to the AskF5 Knowledge Center for more information.

Configuration

Export / Import the SWG Per-Request Policy

The SWG Per-Request Policy is easy to export from one BIG-IP to another. From the Configuration Utility select Access > Profiles / Policies > Per-Request Policies. Click Export then OK to save the policy.

The policy file can be directly imported into another BIG-IP device. On the Per-Request Policies screen click Import. Give the Policy a name, click Browse to select the policy file, then click Import.

This policy is ready for SSL Orchestrator to use with SWGaaS. You can click Edit to verify the policy is correct.

Configure the F5 SWGaaS

From the SSL Orchestrator Configuration page select Services then click Add. F5 Secure Web Gateway is available on the F5 tab. Double-click the icon to configure it.

Give it a name. Set the Access Profile Scope to Profile. Set the Per Request Policy to the policy imported previously. Click Save and Next.

Add the newly created SWGaaS to an existing Service Chain or create a new one. Select the F5_SWG Service on the left and click the right arrow to move it to the Selected column. Click Save, then Save & Next, then Deploy.

Test SWG Functionality

Note: be sure that a Security Policy has the Service Chain applied.

Go to a client computer and test access to various web sites. News sites are allowed, but Shopping is set to Deny, so sites like amazon.com and walmart.com should be blocked.

Details from espn.com: the padlock indicates the connection is encrypted, and the Issued By field indicates that the connection was intercepted and signed by SSL Orchestrator.

Any attempts to visit a site categorized as Shopping or Games will be blocked.

The configuration is now complete.

Intelligent Proxy Steering - Office365

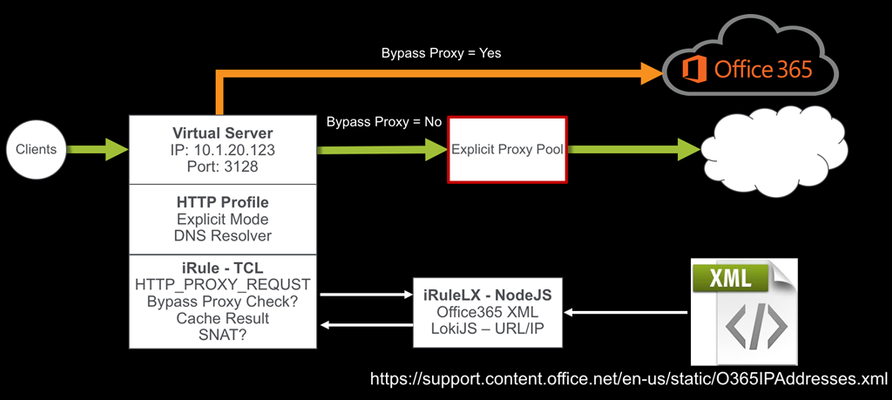

Introduction

This solution started back in May 2015 when I was helping a customer bypass their forward proxy servers due to the significant increase in the number of client connections after moving to Office365. Luckily they had a BIG-IP in front of their forward proxy servers load balancing the traffic, and F5 had introduced a new "Proxy Mode" feature in the HTTP profile in TMOS 11.5. This allowed the BIG-IP to terminate Explicit Proxy connections instead of passing them through to the pool members. The original solution was a simple iRule that referenced a data-group to determine whether the connection should bypass the forward proxy pool, or be reverse proxied and load balanced as normal.

Original iRule:

    when HTTP_PROXY_REQUEST {
        # Strip off the port number
        set hostname [lindex [split [HTTP::host] ":"] 0]

        # If the hostname matches an MS Office365 domain, enable the Forward Proxy on BIG-IP.
        # BIG-IP will then perform a DNS lookup and act as a Forward Proxy, bypassing the
        # Forward Proxy Server Pool (BlueCoat/Squid/IronPort etc.)
        if { [class match $hostname ends_with o365_datagroup] } {
            # Use a SNAT pool - recommended
            snatpool o365_snatpool
            HTTP::proxy
        } else {
            # Load balance/reverse proxy to the Forward Proxy Server Pool (BlueCoat/Squid/IronPort etc.)
            HTTP::proxy disable
            pool proxy_pool
        }
    }

As more organisations move to Office365, they have been facing similar problems, with firewalls and other security devices unable to handle the volume of outbound connections as they move to the SaaS world. The easiest solution may have been just to create a proxy PAC file and send the traffic direct, but this would have involved allowing clients to route directly via the firewall to those IP address ranges. How secure is that? I decided to revisit my original solution and look at a way to dynamically update the Office365 URL list.
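For readers less familiar with data-groups, the suffix match that the original iRule relies on can be modelled in Python as below. This is a sketch of the matching logic only; the suffix entries are hypothetical examples, not the contents of the customer's o365_datagroup.

```python
# Hypothetical data-group entries; a real deployment would list the
# Office365 domains it wants to steer around the proxy pool.
O365_SUFFIXES = (".outlook.com", ".office365.com")


def strip_port(host: str) -> str:
    """Drop an optional :port suffix, as the iRule does with split/lindex."""
    return host.split(":")[0]


def should_bypass(host: str) -> bool:
    """True when the hostname ends with any configured suffix."""
    hostname = strip_port(host).lower()
    return hostname.endswith(O365_SUFFIXES)


if __name__ == "__main__":
    for h in ("www.outlook.com:443", "www.f5.com:443"):
        print(h, should_bypass(h))
```

One design point worth noting: a plain suffix comparison treats "notoutlook.com" as matching "outlook.com", so this sketch stores entries with a leading dot. The trade-off is that the bare domain "outlook.com" then needs its own entry.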
Before I started, I did a quick search on DevCentral and found that DevCentral MVP Niels van Sluis had already written an iRule to download the Microsoft Office365 IP and URL database. Perfect starting point. I've since made some modifications to his iRulesLX and written a new TCL iRule to support the forward proxy use case.

How the solution works

- The iRuleLX is configured to pull O365IPAddresses.xml every hour, reformat it into JSON, and store it in a LokiJS DB.
- The BIG-IP is configured as an Explicit Proxy in the client's network or browser settings.
- The Virtual Server has an HTTP profile attached with the Proxy Mode set to Explicit, along with a few other settings. I will go into the detail later.
- An iRule is attached that executes on the HTTP_PROXY_REQUEST event to check whether the FQDN should bypass the Explicit Proxy Pool.
- If the result is not in the cache, a lookup is performed in the iRuleLX LokiJS DB.
- The result is returned to the iRule, which decides whether to bypass or not.
- The bypass result is cached in a table with a specified timeout.
- A different SNAT pool can be enabled or disabled when bypassing the Explicit Proxy Pool.

Configuration

My BIG-IP is running TMOS 13.1 and the iRules Language eXtension has been licensed and provisioned. Make sure your BIG-IP has internet access to download the required Node.JS packages. This guide also assumes you have a basic level of understanding and troubleshooting at a Local Traffic Manager (LTM) level and that your BIG-IP Self IPs, VLANs, Routes, etc. are all configured and working as expected.

Before we get started

The iRule/iRuleLX for this solution can be found on DevCentral Code Share.
- Download and install my Explicit Proxy iApp
- Copy the Intelligent Proxy Steering - Office365 iRule
- Copy the Microsoft Office 365 IP Intelligence - V0.2 iRuleLX

Step 1 – Create the Explicit Proxy

1.1 Run the iApp

iApps >> Application Services >> Applications >> "Create"

Supply the following:

- Name: o365proxy
- Template: f5.explicit_proxy

Explicit Proxy Configuration

- IP Address: 10.1.20.100
- FQDN of this Proxy: o365proxy.f5.demo
- VLAN Configuration - Selected: bigip_int_vlan
- SNAT Mode: Automap

DNS Configuration

- External DNS Resolvers: 1.1.1.1
- Do you need to resolve any Internal DNS zones: Yes or No

Select "Finished" to save.

1.2 Test the forward proxy

    $ curl -I https://www.f5.com --proxy http://10.1.20.100:3128
    HTTP/1.1 200 Connected
    HTTP/1.0 301 Moved Permanently
    location: https://f5.com
    Server: BigIP
    Connection: Keep-Alive
    Content-Length: 0

Yep, it works!

1.3 Disable Strict Updates

iApps >> Application Services >> Applications >> o365proxy

Select the Properties tab and change the Application Service to Advanced. Uncheck Strict Updates. Select "Update" to save.

1.4 Add an Explicit Proxy server pool

In my test environment I have a Squid Proxy installed on a Linux host listening on port 3128.

Local Traffic >> Pools >> Pool List >> "Create"

Supply the following:

- Name: squid_proxy_3128_pool
- Node Name: squid_node
- Address: 10.1.30.105
- Service Port: 3128

Select "Add" and "Finished" to save.

Step 2 – iRule and iRuleLX Configuration

2.1 Create a new iRulesLX workspace

Local Traffic >> iRules >> LX Workspaces >> "Create"

Supply the following:

- Name: office365_ipi_workspace

Select "Finished" to save. You will now have an empty workspace, ready to cut/paste the TCL iRule and Node.JS code.

2.2 Add the iRule

Select "Add iRule" and supply the following:

- Name: office365_proxy_bypass_irule

Select OK. Cut / Paste the following Intelligent Proxy Steering - Office365 iRule into the workspace editor on the right hand side. Select "Save File" when done.
2.3 Add an Extension

Select "Add extension" and supply the following:

- Name: office365_ipi_extension

Select OK. Cut / Paste the following Microsoft Office 365 IP Intelligence - V0.2 iRuleLX and replace the default index.js. Select "Save File" when done.

2.4 Install the NPM packages

SSH to the BIG-IP as root:

    cd /var/ilx/workspaces/Common/office365_ipi_workspace/extensions/office365_ipi_extension/
    npm install xml2js https repeat lokijs ip-range-check --save

2.5 Create a new iRulesLX plugin

Local Traffic >> iRules >> LX Plugin >> "Create"

Supply the following:

- Name: office365_ipi_plugin
- From Workspace: office365_ipi_workspace

Select "Finished" to save.

2.6 Verify the Office365 XML downloaded

SSH to the BIG-IP and tail -f /var/log/ltm

The Office365 XML has been downloaded, parsed and stored in the LokiJS DB:

    big-ip1 info sdmd[5782]: 018e0017:6: pid[9603] plugin[/Common/office365_ipi_plugin.office365_ipi_extension] Info: update finished; 20 product records in database.

2.7 Add the iRule and the Explicit Proxy pool to the Explicit Proxy virtual server

Local Traffic >> Virtual Servers >> Virtual Server List >> o365proxy_3128_vs >> Resources

Edit the following:

- Default Pool: squid_proxy_3128_pool

Select "Update" to save. Select "Manage…" and move office365_proxy_bypass_irule to the Enabled section. Select "Finished" to save.
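Before testing, it may help to see the cache-then-lookup flow from "How the solution works" as a small model. The Python sketch below is not the iRule or the iRuleLX code from Code Share: the class name, the injected lookup function standing in for the LokiJS DB query, and the timeout value are all assumptions for illustration.

```python
import time
from typing import Callable, Dict, Tuple


class BypassCache:
    """Cache bypass decisions per FQDN, falling back to a slower lookup on a miss."""

    def __init__(self, lookup: Callable[[str], bool], ttl_seconds: float,
                 clock: Callable[[], float] = time.monotonic) -> None:
        self._lookup = lookup      # stand-in for the LokiJS DB query
        self._ttl = ttl_seconds    # how long a cached decision stays valid
        self._clock = clock        # injectable so expiry can be exercised
        self._entries: Dict[str, Tuple[bool, float]] = {}

    def should_bypass(self, fqdn: str) -> Tuple[bool, bool]:
        """Return (bypass, served_from_cache) for the given FQDN."""
        now = self._clock()
        entry = self._entries.get(fqdn)
        if entry is not None and entry[1] > now:
            return entry[0], True
        bypass = self._lookup(fqdn)
        self._entries[fqdn] = (bypass, now + self._ttl)
        return bypass, False


if __name__ == "__main__":
    # Hypothetical lookup: treat anything under outlook.com as Office365.
    cache = BypassCache(lambda name: name.endswith(".outlook.com"), ttl_seconds=300)
    print(cache.should_bypass("www.f5.com"))       # first request: DB lookup
    print(cache.should_bypass("www.f5.com"))       # repeat request: cache hit
    print(cache.should_bypass("www.outlook.com"))  # Office365 name: bypass
```

The two return values correspond to what the log messages in the tests report: the bypass decision (0 or 1) and whether it was found in the cache or required a DB lookup. Caching negative results as well as positive ones keeps non-Office365 traffic from hitting the lookup on every request.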
Step 3 – Test the solution

SSH to the BIG-IP and tail -f /var/log/ltm

3.1 Test a non-Office365 URL first

    $ curl -I https://www.f5.com --proxy http://10.1.20.100:3128
    HTTP/1.1 200 Connected
    HTTP/1.0 301 Moved Permanently
    location: https://f5.com
    Server: BigIP
    Connection: Keep-Alive
    Content-Length: 0

Output from /var/log/ltm:

    big-ip1 info tmm2[12384]: Rule /Common/office365_ipi_plugin/office365_proxy_bypass_irule : 10.10.99.31:58190 --> 10.1.20.100:3128
    big-ip1 info tmm2[12384]: Rule /Common/office365_ipi_plugin/office365_proxy_bypass_irule : ## HTTP Proxy Request ##
    big-ip1 info tmm2[12384]: Rule /Common/office365_ipi_plugin/office365_proxy_bypass_irule : CONNECT www.f5.com:443 HTTP/1.1
    big-ip1 info tmm2[12384]: Rule /Common/office365_ipi_plugin/office365_proxy_bypass_irule : Host: www.f5.com:443
    big-ip1 info tmm2[12384]: Rule /Common/office365_ipi_plugin/office365_proxy_bypass_irule : User-Agent: curl/7.54.0
    big-ip1 info tmm2[12384]: Rule /Common/office365_ipi_plugin/office365_proxy_bypass_irule : Proxy-Connection: Keep-Alive
    big-ip1 info tmm2[12384]: Rule /Common/office365_ipi_plugin/office365_proxy_bypass_irule : www.f5.com not in cache - perform DB lookup
    big-ip1 info tmm2[12384]: Rule /Common/office365_ipi_plugin/office365_proxy_bypass_irule : www.f5.com - bypass: 0
    big-ip1 info tmm2[12384]: Rule /Common/office365_ipi_plugin/office365_proxy_bypass_irule : 10.10.99.31:58190 (10.1.30.245:24363) --> 10.1.30.105:3128

3.2 Test the same non-Office365 URL again to confirm the cache works

Output from /var/log/ltm:

    big-ip1 info tmm1[12384]: Rule /Common/office365_ipi_plugin/office365_proxy_bypass_irule : 10.10.99.31:58487 --> 10.1.20.100:3128
    big-ip1 info tmm1[12384]: Rule /Common/office365_ipi_plugin/office365_proxy_bypass_irule : ## HTTP Proxy Request ##
    big-ip1 info tmm1[12384]: Rule /Common/office365_ipi_plugin/office365_proxy_bypass_irule : CONNECT www.f5.com:443 HTTP/1.1
    big-ip1 info tmm1[12384]: Rule /Common/office365_ipi_plugin/office365_proxy_bypass_irule : Host: www.f5.com:443
    big-ip1 info tmm1[12384]: Rule /Common/office365_ipi_plugin/office365_proxy_bypass_irule : User-Agent: curl/7.54.0
    big-ip1 info tmm1[12384]: Rule /Common/office365_ipi_plugin/office365_proxy_bypass_irule : Proxy-Connection: Keep-Alive
    big-ip1 info tmm1[12384]: Rule /Common/office365_ipi_plugin/office365_proxy_bypass_irule : www.f5.com found in cache
    big-ip1 info tmm1[12384]: Rule /Common/office365_ipi_plugin/office365_proxy_bypass_irule : www.f5.com - bypass: 0
    big-ip1 info tmm1[12384]: Rule /Common/office365_ipi_plugin/office365_proxy_bypass_irule : 10.10.99.31:58487 (10.1.30.245:25112) --> 10.1.30.105:3128

3.3 Test an Office365 URL and check it bypasses the Explicit Proxy pool

    $ curl -I https://www.outlook.com --proxy http://10.1.20.100:3128
    HTTP/1.1 200 Connected
    HTTP/1.1 301 Moved Permanently
    Cache-Control: no-cache
    Pragma: no-cache
    Content-Length: 0
    Location: https://outlook.live.com/
    Server: Microsoft-IIS/10.0
    Connection: close

Output from /var/log/ltm:

    big-ip1 info tmm3[12384]: Rule /Common/office365_ipi_plugin/office365_proxy_bypass_irule : 10.10.99.31:58692 --> 10.1.20.100:3128
    big-ip1 info tmm3[12384]: Rule /Common/office365_ipi_plugin/office365_proxy_bypass_irule : ## HTTP Proxy Request ##
    big-ip1 info tmm3[12384]: Rule /Common/office365_ipi_plugin/office365_proxy_bypass_irule : CONNECT www.outlook.com:443 HTTP/1.1
    big-ip1 info tmm3[12384]: Rule /Common/office365_ipi_plugin/office365_proxy_bypass_irule : Host: www.outlook.com:443
    big-ip1 info tmm3[12384]: Rule /Common/office365_ipi_plugin/office365_proxy_bypass_irule : User-Agent: curl/7.54.0
    big-ip1 info tmm3[12384]: Rule /Common/office365_ipi_plugin/office365_proxy_bypass_irule : Proxy-Connection: Keep-Alive
    big-ip1 info tmm3[12384]: Rule /Common/office365_ipi_plugin/office365_proxy_bypass_irule : www.outlook.com not in cache - perform DB lookup
    big-ip1 info tmm3[12384]: Rule /Common/office365_ipi_plugin/office365_proxy_bypass_irule : www.outlook.com - bypass: 1
    big-ip1 info tmm3[12384]: Rule /Common/office365_ipi_plugin/office365_proxy_bypass_irule : 10.10.99.31:58692 (10.1.10.245:21666) --> 40.100.144.226:443

It works!

Conclusion

Combining Microsoft Office365 IP and URL intelligence with LTM produces a simple and effective method to steer around overloaded Forward Proxy servers without the hassle of messy proxy PAC files.

Configuring the F5 BIG-IP as an Explicit Forward Web Proxy Using Secure Web Gateway (SWG)

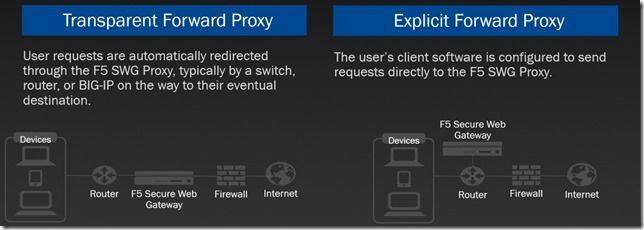

In previous articles, we have discussed the use of F5 BIG-IP as an SSL VPN and other use cases for external or inbound access. I now want to take some time to discuss an outbound access use case using F5 BIG-IP as an explicit forward web proxy. In layman's terms, this use case allows you to control end user web access with malware prevention, URL filtering and content filtering. This is made possible by a great partnership between F5 and Forcepoint, previously known as Websense. The BIG-IP can also be used as a transparent forward proxy, though that is outside the scope of this article. Below is a diagram and description of each.

OK, so now that we've discussed the intent of the article, let's go over the requirements before getting started. The customer requirement is to identify a forward web proxy solution that provides URL filtering and content filtering, as well as the ability to export logs and statistics on end user browsing. They also require single sign-on using Kerberos authentication. As the integrator, you're wondering how much it would cost to bring in a new vendor and appliances to meet this requirement. Then you remember hearing that F5 is somewhat of a Swiss Army knife; can they do this? So, as many of us do, we go back to our handy dandy search engine and type in web proxy site:f5.com. What do you know, you see BIG-IP APM Secure Web Gateway Overview. After reading the overview you can identify the requirements to successfully deploy this solution. They include:

- BIG-IP LTM Licensed
- APM Licensed
- SWG Licensed (Note: SWG is a subscription-based license which includes Forcepoint (Websense DB updates))
- Obtain a signing cert and private key
- Keytab generated using KTPass
- Latest SWG iApp from https://downloads.f5.com
- DNS configured on BIG-IP to resolve external web addresses
- Downloading the IP Intelligence database
- Configure browser with explicit web proxy

Now, looking at this, it seems like it must include much, much more than F5, but let's go deeper.
Running on the F5 BIG-IP are LTM, APM and SWG. From SWG you will download the IP intelligence database, which is stored on the local BIG-IP and, if the BIG-IP is connected to the internet, can download updates on a recurring basis. With all of that covered, and now that you have provided a project timeline and requirements to your local PM, let's get started!

We will begin by validating that the required modules have been provisioned on the BIG-IP.

- Navigate to System > Resource Provisioning.
- Validate that LTM, APM and Secure Web Gateway are provisioned as you see in the screenshot below.
- If any of these modules is not provisioned, select the appropriate resources and click Submit.

Note: Additional details regarding resource provisioning can be found here: https://support.f5.com/kb/en-us/products/big-ip_ltm/manuals/product/bigip-system-essentials-12-1-1/7.html

Now that you have provisioned the necessary F5 modules, you must obtain a signing cert and key, which you will import into the BIG-IP for use later in the article. For this use case, I used a Windows 2012 box to submit a custom certificate request to my CA. For the sake of time, I am not going to walk through the certificate request process, though I will provide one very important detail: when submitting the custom request, you must enable basic constraints, allowing the subject to issue certificates on behalf of the BIG-IP.

Import the cert and key into the BIG-IP.

- Navigate to System > Certificate Management > Traffic Certificate Management and click SSL Certificate List.
- Click Import.
- Specify PKCS 12 as the import type.
- Specify Demo_SWG_CA as the certificate name.
- Browse to the location that the PFX file was exported to and select it for import.
- Provide the password created when exporting the cert and key.
- Click Import.

Before deploying the SWG iApp, we are going to configure our Active Directory AAA server, create a Keytab file, configure a Kerberos AAA server, and create an explicit access policy, a custom URL filter and a per-request access policy.

Create an Active Directory AAA

- Navigate to Access > Authentication > Active Directory.
- Click Create.
- Specify an AAA Server Name.
- Specify a Domain Name.
- Select the Direct radio button.
- Specify the IP Address of your domain controller.
- Provide an Admin username.
- Provide the Admin Password.
- Click Finished.

Create a Keytab for Kerberos Authentication

- From Active Directory Users and Computers, create a new user account that will be used to generate a Keytab file.
- From a command prompt run the following ktpass command.

    ktpass /princ HTTP/demouser.demo.lab@DEMO.LAB /mapuser demo\demouser /ptype KRB5_NT_PRINCIPAL /pass Password#1 /out c:\demo.keytab

- After running the command above, navigate back to Active Directory Users and Computers and notice the changes made to the AD user account.

Create a Kerberos AAA Server

- Create the AAA object by navigating to Access > Authentication > Kerberos.
- Specify a Kerberos AAA server Name.
- Specify the Active Directory domain as the Auth Realm.
- Specify HTTP as the Service Name.
- Browse for the Keytab File.
- Click Finished.

Create an explicit access policy

- Navigate to Access > Profiles / Policies > Access Profiles (Per-Session Policies).
- Click Create.
- Specify a name for the Per-Session access policy.
- Specify SWG-Explicit as the policy type.
- Accepted Languages: English or any that applies to you.
- Click Finished.
- Once redirected back to Profiles / Policies: Access Profiles (Per-Session Policies), click Edit on the policy that was created in the previous step.
- Select the + between Start and Deny and add a 407 Response.
- From the HTTP Auth Level drop down select negotiate and click Save.
- Navigating back to the VPE, following the Basic branch, select the + between the 407 Response created in the previous step and Deny.
- From the Authentication tab select AD Auth and click Add.
- You will then be presented with the AD Auth configuration item. Select the AAA AD server we created in previous steps by using the drop down next to Server.
- Click Save.
- Navigate back to the VPE. Following the Negotiate branch, select the + between the HTTP 407 Response and Deny.
- From the Assignment tab select Variable Assign and click Add Item.
- From the Variable Assign configuration item, select Add new entry.
- Select change from the Assignment created in the previous step.
- Within the Custom Variable text field, type session.server.network.name .
- Within the Custom Expression text field, type expr { "demo.lab" } .
- Click Finished.
- Click Save.
- Following the same workflow, select the + between Variable Assign and Deny.
- Select the Authentication tab.
- Select Kerberos Auth and click Add Item.
- From the Kerberos Auth configuration item, select the Kerberos AAA server created in previous steps using the drop down next to AAA Server.
- Click Save.
- From the VPE, modify the endings following Kerberos Auth and AD Auth to Allow.

Create a Custom URL Filter

- Navigate to Access > Secure Web Gateway > URL Filter and click Create.
- Specify a URL Filter name.
- Click Finished.
- Once the page refreshes, you will be presented with a page to Allow or Deny URL categories.
- For demo purposes only, select the check in the first column as shown in the screenshot below.
- Click Allow at the bottom left of the screen.
- Next place a single check mark next to Social Web - YouTube and click Block.

Create a Per-Request Access Policy

With a Per-Request Access Policy, we can identify the category of each website a user browses to.

- Navigate to Access > Profiles / Policies > Per-Request Policies and click Create.
- Specify a Per-Request Policy name.
- Click Finished.
- Click on Edit for the Per-Request Policy created in the previous step and you will be redirected to a visual policy editor.
- Click on the + symbol between Start and Allow.
- Select the General Purpose tab and add Category Lookup.
- Click Add Item.
- Leave all default settings and click Save.
- Following Category Lookup, click the +, select the General Purpose tab and add URL Filter Assign.
- Click Add Item.
- From the URL Filter drop down select the URL filter created in previous steps.
- Click Save.

Review of Completed Steps

- Provisioned the required BIG-IP modules.
- Created and exported a signing certificate and key into a pfx file format.
- Created a Keytab for Kerberos Auth.
- Created an AAA for AD authentication.
- Created an AAA for Kerberos authentication using the Keytab.
- Created a URL filter to restrict access to YouTube.
- Created a per-session access policy.
- Created a per-request access policy.

iApp Download

After completing each of the steps above, we can now move on to creating an explicit proxy configuration using the latest SWG iApp.

- From a browser navigate to downloads.f5.com and log in. Note: This is a no-cost account. If you have not done so, register using a valid email address and you will have access to F5 downloads.
- Select Find a Download.
- From the BIG-IP Product Line, select iApp Templates.
- You will then be redirected to the iApps download page. Select iApp-Templates.
- Accept the End User Software License Agreement.
- Select the iapps-1.x.x.x.x.zip file.
- Select the link to your nearest location.
- Save the file.
- Return to the BIG-IP TMUI.
- Navigate to iApps > Templates and click Import.
- Click Choose File or Browse to the iApp tmpl file located within the compressed zip file.
- Click Open and then Upload.
- Navigate to Application Services > Applications.
- Click Create.
- Specify a name for the application.
- From the Template drop down, change the template to "f5.secure_web_gateway.x.x".
- Use the following responses to complete the iApp configuration.
- Click Finished. Once the page is refreshed you will see all objects created by the iApp.

Configure Trusted CA in Windows Group Policy

Before we begin testing, it is recommended to configure the local workstation to trust the CA that will be forging new certificates on behalf of the origin servers. To ensure all domain-joined workstations receive the certificate and that it is placed in the Trusted Root Certification Authorities store, I used group policy to deploy the certificate.

- Click Start, point to Administrative Tools, and then click Group Policy Management.
- In the console tree, double-click Group Policy Objects in the forest and domain containing the Default Domain Policy Group Policy object (GPO) that you want to edit.
- Right-click the Default Domain Policy GPO, and then click Edit.
- In the Group Policy Management Console (GPMC), go to Computer Configuration, Windows Settings, Security Settings, and then click Public Key Policies.
- Right-click the Trusted Root Certification Authorities store.
- Click Import and follow the steps in the Certificate Import Wizard to import the certificates.
- From the workstation you will be testing from, perform a group policy update using gpupdate /force or reboot the machine.
- Launch iexplore.exe.
- Navigate to the Content tab and click Certificates.
- Click on the Trusted Root Certification Authorities tab, scroll down, and validate that the certificate chosen in the previous step is present.
- To configure your browser with a proxy server, within Internet Explorer Settings navigate to the Connections tab and click LAN settings.
- Enable the checkbox for Use a proxy server for your LAN.
- Address: swgkrb.demo.lab
- Port: 3128
- Click OK twice.

Testing our Solution

Now the time you've been waiting for. F5 said it has the ability, but the only way to validate that is to test it yourself. So let's get to the bottom of this explicit web proxy claim by F5 and test for ourselves.
- From the browser configured in the step above, navigate to https://nfl.com.
- Once the page is rendered, you should not receive a certificate error.
- Click the Lock next to the refresh button and validate that the certificate was issued from the signing certificate configured in previous steps.
- Once validated, navigate back to the TMUI > Access > Overview > Active Sessions.
- Validate that an access session is present for the request for https://nfl.com.
- Next we will validate that users are not able to access YouTube.com, as defined in our URL filter.
- From the client browser, attempt to navigate to youtube.com, where you should be presented with a blocked page from the BIG-IP.

Validate and View Reporting

Now that we have successfully validated functionality, we must ensure that we can meet the customer's final requirement, which is reporting.

- From the TMUI navigate to Access > Overview > SWG Reports > Overview.
- Navigate to Access > Overview > SWG Reports > All Requests.
- Navigate to Access > Overview > SWG Reports > Blocked Requests.

As a review, the customer requirements were to provide the following:

- URL filtering
- Content filtering
- Logs and statistics on end user browsing
- Single sign-on using Kerberos authentication

Well, there you have it. You have successfully deployed a forward web proxy solution using something you may already have in your data center. No time to celebrate though, you've got 10 more priority one projects that came into your queue in the hour it took you to deploy SWG! Until next time.
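As a recap of the per-session policy built earlier, the 407 Response item effectively sorts each proxy request into one of three paths: challenge the client, follow the Negotiate branch to Kerberos Auth, or follow the Basic branch to AD Auth. The Python sketch below models only that branching, based on the standard Proxy-Authorization request header. The function and return values are hypothetical names for illustration, not APM internals.

```python
from typing import Mapping


def select_auth_branch(headers: Mapping[str, str]) -> str:
    """Pick the policy branch implied by the client's Proxy-Authorization header.

    Assumes header names are already normalized to the casing used here.
    """
    value = headers.get("Proxy-Authorization", "")
    parts = value.split(None, 1)  # "<scheme> <credentials>"
    scheme = parts[0].lower() if parts else ""
    if scheme == "negotiate":
        return "kerberos_auth"   # Negotiate branch in the walkthrough
    if scheme == "basic":
        return "ad_auth"         # Basic branch in the walkthrough
    return "send_407"            # no usable credentials yet: challenge the client


if __name__ == "__main__":
    print(select_auth_branch({}))
    print(select_auth_branch({"Proxy-Authorization": "Negotiate <token>"}))
    print(select_auth_branch({"Proxy-Authorization": "Basic <credentials>"}))
```

A domain-joined browser that trusts the proxy will normally answer the challenge with a Negotiate token without prompting, which is what gives the single sign-on behaviour the customer asked for.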
Reference Documentation

- https://www.f5.com/pdf/solution-center/websense-overview.pdf
- https://support.f5.com/kb/en-us/products/big-ip_apm/manuals/product/apm-secure-web-gateway-implementations-12-1-0/14.html
- https://support.f5.com/kb/en-us/products/big-ip_apm/manuals/product/apm-secure-web-gateway-implementations-12-1-0/13.html
- https://support.f5.com/content/kb/en-us/products/big-ip_apm/manuals/product/apm-secure-web-gateway-implementations-12-1-0/_jcr_content/pdfAttach/download/file.res/BIG-IP%C2%AE_Access_Policy_Manager%C2%AE__Secure_Web_Gateway.pdf
- https://support.f5.com/kb/en-us/products/big-ip_ltm/manuals/product/bigip-system-essentials-12-1-1/7.html
- http://clouddocs.f5.com/training/community/iam/html/class1/kerberos.html
- https://docs.microsoft.com/en-us/previous-versions/windows/it-pro/windows-server-2008-R2-and-2008/cc772491(v=ws.11)

APM Cookbook: Dynamic APM Variables

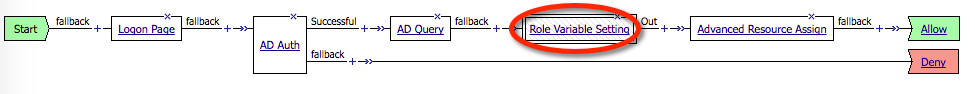

Introduction

In this article we’ll discuss how to set a variable dynamically. The most common use case is setting something like a role attribute to use in SAML. We’ll use the example of setting a SAML attribute named “role” based on group membership. You need to set the value of “role” to either “managers”, “finance”, or “users”, depending on group membership. The trick is that you can only send one value, even if the user is a member of multiple groups. Preference is hierarchical: first “managers”, then “finance”, and everyone else gets the role “users”.

You could do this in an iRule or in a TCL expression in the Variable Assign object. However, I like to leverage the Visual Policy Editor GUI wherever possible so that I can quickly examine a policy flow and determine what’s happening without reading code and, most importantly, so that those who come after me don’t have to decipher what I was doing.

Macros Are Your Friend

We’ll assume you’ve already got a policy with a logon page, AD Auth, AD Query, and resource assignment for the SAML resource. We will create a macro named “Role Variable Setting” to perform this action after the AD Query. It goes after the AD Query because first we need to collect the group information. Here’s what the policy will look like with the piece we’re adding.

We want to create our flow in a macro because otherwise it could clutter up the policy itself; imagine twenty different conditions and all the branches you'd have. The macro can have all those branches exit to one place, making it all much cleaner and simpler to maintain. To create the macro, click “Add New Macro” inside the Visual Policy Editor (VPE). Use the “empty template” and name it something relevant to you; I’ve named mine “Role Variable Setting”. After building it out, here’s what my macro looks like when completed.
Setting the Conditions

We will start by building this section of the macro. First I added an “empty” object from the general purpose tab. I named it “Empty (Group Check)”. Go to the branch rules tab and add rules as appropriate. Below is my complete macro. Notice the arrows on the right hand side of the branch rules list; you can reorder the rules for preference, with the most preferred at the top. Remember that preference goes to the managers role, then finance, and finally everyone else (fallback). Your branch rules could be based on conditions like geolocation, landing URI, and many more. You can also go to the Advanced tab and modify the TCL expression if the simple GUI builder doesn’t meet your needs. The branch rule will be used if it resolves to “true”, and you can create complex logical statements with AND/OR even using the GUI.

To build those branch rules I went into the Empty object and selected the Branch Rules tab. Then I chose Add Branch Rule, selected the Simple tab, clicked Add Expression, then AD Query, then User is a Member Of, and entered the full DN path as you can see here, followed by Add Expression and Finished. This uses the data from our AD Query earlier in the policy flow.

Setting the Variable

Now we have three branches out of the Empty object and need to do something on them. We'll be building this section of the macro now. On each branch I added a Variable Assign from the Assignment tab to set my custom variable. Here’s what it looks like inside the Variable Assign objects. I got that by clicking Add New Entry and then inputting the values as you can see below. Now that my macro is complete, all I need to do is add it from the Macros tab into the policy after the AD Query.
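For readers who find it easier to reason about the flow in code, here is a minimal Python sketch of the logic the macro implements. It is only a model of the behavior described above (ordered branch rules, first match wins, fallback to “users”), not something APM runs. The group DNs and the `assign_role` helper are hypothetical names chosen for illustration.

```python
from typing import Callable, Iterable

# Hypothetical group DNs; substitute the full DNs from your own directory.
MANAGERS_DN = "CN=managers,OU=Groups,DC=example,DC=com"
FINANCE_DN = "CN=finance,OU=Groups,DC=example,DC=com"

# Ordered like the branch rules list: the most preferred rule is at the top.
BRANCH_RULES: list[tuple[str, Callable[[set[str]], bool]]] = [
    ("managers", lambda groups: MANAGERS_DN in groups),
    ("finance", lambda groups: FINANCE_DN in groups),
]


def assign_role(member_of: Iterable[str]) -> str:
    """Return the role of the first branch rule that resolves to true."""
    groups = set(member_of)
    for role, rule in BRANCH_RULES:
        if rule(groups):
            return role
    return "users"  # fallback branch: everyone else


# Model of the Variable Assign step: one value, even for multiple groups.
session = {"session.custom.role": assign_role([FINANCE_DN, MANAGERS_DN])}
print(session["session.custom.role"])  # member of both groups, prints "managers"
```

Because the rules are evaluated top to bottom, reordering `BRANCH_RULES` changes the outcome for users in several groups, which mirrors reordering the branch rules with the arrows in the GUI.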
Using the Variable

Now, to use that dynamically set variable, I simply call %{session.custom.role} within APM. If I need it in an iRule I can use [ACCESS::session data get session.custom.role], and if I need it in a TCL expression such as a branch rule I can use [mcget {session.custom.role}]. Here is an example where I’m using it as the value for a SAML attribute named “role”.

Security Sidebar: Your Device Just Attacked Someone…And You Didn’t Even Know It

Large-scale Distributed Denial of Service (DDoS) attacks are no joke, and attackers can use them to inflict substantial damage on just about any target they want. Depending on the target, the result of a DDoS attack could run the gamut from a simple pain in the neck because your website just went down to a significant financial loss for major corporations and customers. But regardless, these attacks are serious business, and the bad news is that they are becoming both easier to launch and more devastating to endure.

Large-scale DDoS attacks utilize a network of unsuspecting devices to inflict pain on their target. This network of unsuspecting devices is known as a Botnet. In order to build a Botnet, an attacker needs to gain access to many different Internet-connected devices. Back in the day, that was a tough job because there weren’t very many Internet-connected devices to go around. But today? No problem. Just think about all the devices that are Internet-connected. You probably own at least 5 of them yourself (smart phone, smart TV, computer, tablet, etc). With the proliferation of these Internet-connected devices, building a Botnet is easier now than ever before.

Botnets are created by scanning the Internet for vulnerable devices in order to install malware that will be used later to help launch the attack. The scanning typically happens in one of two ways. The first is to port scan for specific servers and attempt to gain access by brute-force guessing the username and password of the device. The second uses external scanners to find new bots and, in some cases, botnet servers that already control a multitude of bots. If you can gain control of a botnet server, then you gain control of all the bots it controls. Alternatively, if you don’t want to go through the hassle of building your own botnet, you can always rent one from one of many DDoS providers who will DDoS a target for you. Either way, it’s a powerful weapon.
So, when these botnets are created or expanded, which vulnerable devices should they look for? I guess it doesn’t significantly matter what the device is as long as it has the capability to help launch the attack. That said, you have to wonder how many vulnerable devices are out there to be used in one of these botnets. No one knows the exact number of vulnerable devices (and which device is vulnerable depends on the vulnerability being exploited), but suffice it to say, the explosion of Internet-connected devices has made it extremely easy to find millions of vulnerable devices. The truth is, attackers don’t need a desktop or laptop computer to launch an attack anymore. Now, they can go after devices like your home router, DVR, or IP camera to launch an attack. How often do you change the default username/password on your home router? Or your IP camera? Or what about another device that gets shipped from the manufacturer with preloaded credentials that you don’t even have the ability to change? You can see how easy it is to find vulnerable devices.

Security researcher and advocate Brian Krebs knows all too well about attacks from botnets. Last month, his site KrebsOnSecurity.com was hit by a DDoS attack that launched over 620 Gbps at his site. The site was taken down for the better part of a week. He had a DDoS protection provider in place, but when 620 Gbps of traffic is hurled at one target, it’s extremely difficult for a DDoS protection provider to keep up. In the end, the provider said they couldn’t handle it and told him he had to find another provider to protect his site. This attack was almost double the size of the largest attack they had ever seen…and they are a big, capable DDoS protection provider. Krebs has since turned to Google and their new Project Shield program for protection.
As for the attack, Krebs said “the huge assault this week on my site appears to have been launched almost exclusively by a very large botnet of hacked devices.” Brian Krebs is certainly not the only target of a massive DDoS attack. I could spend hours listing known DDoS attacks and still not cover them all. These things are real, and they are serious. To add insult to injury, many experts believe that people are actively researching ways to use these massive botnets to take down the Internet itself. Once upon a time, only well-funded nation states had the resources to launch massive attacks against a given enemy. That’s no longer the case. Certainly, a well-funded nation state could launch a devastating attack against a target…but so could the lowly owner of the massive botnet. Could someone literally take down the Internet? And, will your unsuspecting device help do it?

Lightboard Lessons: SSL Outbound Visibility

You’ve been having trouble sleeping because of the SSL visibility problem with all the fancy security tools that don’t do decryption. Put down that Ambien, because this Lightboard Lesson solves it. In this episode, David Holmes diagrams the Right Way (tm) to decrypt and orchestrate outbound SSL traffic, improving SSL visibility, decreasing failures and improving network performance.

New Elliptic Curve X25519 Trips Up ProxySG

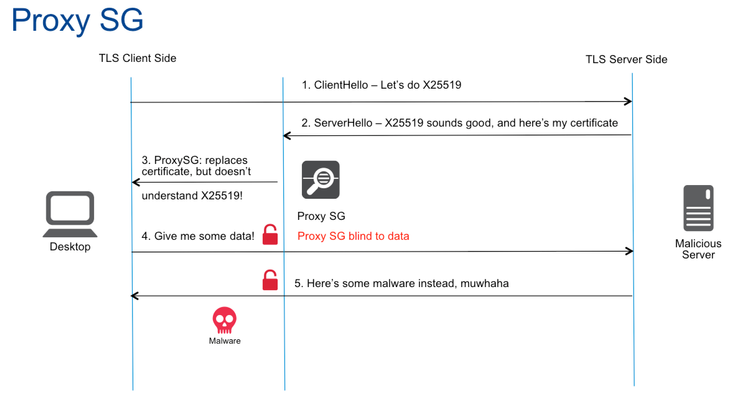

I happened to be with a customer in Oslo last month when Google threw them for a loop by upgrading the main encryption cipher used between the Chrome browser and Google services such as Google.com and Gmail. I wrote with more specifics about this in my SecurityWeek article, but for those of you who somehow missed it, let me recap the salient points.

In 2005, Daniel J. Bernstein introduced a high-performance elliptic curve cipher, Curve 25519 (which we are calling X25519 now). X25519 avoids many side-channel attacks and weaknesses in deterministic random number generators. In the decade since, X25519 has withstood enough scrutiny and gained enough market share to be supported by many clients and is now OpenSSH’s default cipher. In May of 2016, Google switched X25519 on for their servers and their client software such as Chrome. Since this affected only communications between Google clients and Google services it should be no problem, right? Wrong.

The Oslo customer, like many customers, must intercept outbound SSL connections from within their data centers and headquarters for scanning purposes. Specifically, this customer’s security policy requires the scanning of email services. Their policy works to prevent phishing, malware infection, and data loss. When Google switched on X25519, the customer’s Blue Coat ProxySG solution was no longer able to “tap” the communications between Google client software and Google services, meaning the customer was no longer able to decrypt and scan Gmail.

Let me pause the conversation here to address this point. Perhaps you, dear reader, are thinking “Hey, maybe IT shouldn’t be reading my Gmail in the first place!” That sentiment is understandable, but if you really feel that way, don’t be checking your personal email at work. It’s that simple. I try never to spread fear, uncertainty, or doubt, but let me be clear about this: the Internet is hard enough to secure even when everyone in the organization follows all the rules.
When users go around the rules, creating holes in the security policy, it becomes impossible. How do you think ransomware, business email fraud attacks, and APT get into organizations? Because, users. IT departments are, as a whole, actually quite respectful of privacy where possible and go out of their way not to intercept your banking or healthcare information. But email? Yes, it must be scanned. Most corporate users understand that by using corporate equipment and corporate networks they are consenting to their email being scanned for malware for the protection of the organization.

So, back to our story. Once the ProxySG was blind to Google traffic, the Oslo customer was faced with a difficult choice: disable Google services or stop scanning. Their security policy dictated the former, but they were loath to do that. It didn’t have to be this way. If ProxySG were a full-proxy it could have survived the Google cipher upgrade. The problem with ProxySG is that it snoops just enough of the SSL connection to auto-generate an intercept certificate and retrieve the SSL session key that it will need to decrypt the session. It’s more of a tap than a proxy. Since ProxySG didn’t support X25519, it wasn’t able to retrieve the session key and decrypt the session.

A full-proxy, like F5’s SSL Orchestrator, does not have this problem. A full-proxy establishes two connections: one from the client to the proxy, and a second from the proxy to the end-server. By using two connections, a true proxy can fully control the parameters of the conversation and enforce ciphers and policies that it understands. In this example, the SSL Orchestrator would have negotiated a cipher that it understood (such as AES256-SHA256) with the client and a different one with the server (perhaps ECDHE-AES256-GCM-SHA256). No X25519.

Lori MacVittie wrote an excellent piece, “Three things your proxy can’t do unless it’s a full-proxy,” on DevCentral last year.
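The tap-versus-full-proxy difference described above can be sketched as a toy model in Python. This is an illustration of the idea only, not how ProxySG or SSL Orchestrator are implemented. The capability lists, the "P-256" fallback option, and the function names are all assumptions made for the example.

```python
from typing import Optional, Sequence, Tuple


def negotiate(offered: Sequence[str], supported: Sequence[str]) -> Optional[str]:
    """Pick the first offered option that the other side also supports."""
    for option in offered:
        if option in supported:
            return option
    return None


# Illustrative capability lists (assumptions, in order of preference).
CLIENT = ["X25519", "P-256"]
SERVER = ["X25519", "P-256"]
DEVICE = ["P-256"]  # inspection device that does not understand X25519


def tap_can_decrypt(client: Sequence[str], server: Sequence[str],
                    device: Sequence[str]) -> bool:
    """A tap only observes: client and server negotiate with each other,
    and the tap can decrypt only if it understands what they chose."""
    chosen = negotiate(client, server)
    return chosen is not None and chosen in device


def full_proxy_legs(client: Sequence[str], server: Sequence[str],
                    device: Sequence[str]) -> Tuple[Optional[str], Optional[str]]:
    """A full proxy terminates both connections, so each leg is negotiated
    against the proxy's own list and never lands on an unknown option."""
    client_leg = negotiate(client, device)
    server_leg = negotiate(device, server)
    return client_leg, server_leg


print(tap_can_decrypt(CLIENT, SERVER, DEVICE))  # False: endpoints chose X25519
print(full_proxy_legs(CLIENT, SERVER, DEVICE))  # ('P-256', 'P-256')
```

In the tap case the endpoints settle on something the device cannot follow, which is the blind spot the Oslo customer hit. In the full-proxy case the device is a party to both negotiations, so the newer option is simply never selected.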
In that article, she explains the fundamental difference between half-proxies like ProxySG and full-proxies like the F5 SSL Orchestrator. She also (as telegraphed by the title) showcases three things you can’t do with just a half-proxy. I won’t spoil the whole article, but number three is terminate SSL/TLS. See, it’s not just me saying this.

Getting back to our Oslo customer: if they had been using the F5 SSL Orchestrator when Google flipped on X25519, they would have been able to continue their scanning and not suffered from the SSL blind spot. Ultimately, both Blue Coat ProxySG and the F5 SSL Orchestrator are being upgraded to support X25519, but F5 customers won’t be blind to SSL traffic during the transition. Remember that when something like this happens again.

You Don’t Own Anything Anymore Including Your Privacy

You purchased a Tesla Model S and live the 1% eco-warrior dream. Now imagine if GM purchased Tesla and sent an over-the-air update to disable all Tesla cars because they’re working on their own electric substitute. Literally even if you were on the freeway to pick up your fajita skillet from Chili's To Go. You’d be pretty sassed, right? Google is doing just that and setting a new precedent of poor choices.

Arlo Gilbert recently reported his discovery of Nest’s decision to permanently disable the Revolv home automation tool that parent company Google acquired 17 months ago. To clarify, if you bought the Revolv device, not only will it no longer be supported with future updates, but Google is going to disable existing models already purchased and in use. The app will stop functioning and the hub will cease operating. There’s no logical business reasoning stated on Revolv’s FAQ, but Google's very visible middle finger is another recent example of exercising the terms and conditions of the rarely read or understood EULA. The End User License Agreement or EULA is a masterful contract, washing away ethical and legal responsibilities of the issuing body. In this example, it allows Google to disable a service you purchased even before Google acquired the company.

You'd think this is just another tin-foil hat security rant... Coverage of the acceptable use and ownership terms in various EULAs is increasing, specifically where they relate to corporate disclosure of personal data. What's more disturbing is the growth in language that permits actively tracking you beyond metadata analytics. Oculus’s new RIFT VR headset has some rather unsettling EULA conditions, made more creepy due to the very nature of the device’s intended use. U.S. Senator Al Franken recently wrote an open letter to Brendan Iribe, CEO of Oculus VR (owned by Facebook, masters of the EULA) questioning the depth of “collection, storage, and sharing of users’ personal data”.
Given the intended use of Oculus as an immersive input device, their EULA is akin to a keyboard manufacturer being allowed to record your keystrokes, for the purpose of “making sure heavily used keys are strengthened for future clientele”. The exhausted cries over NSA intrusion into our lives are muffled by the petabytes of voluntary metrics we provide private companies to share and sell, all decided by clicking “I Agree” to the EULA.

So where’s the backlash? It’s taken some time, but as Fortune noted this week, people are finally realizing that sharing their most intimate secrets on the internet may not be such a wise thing to do (I'd say stupid or dumb but that’s insensitive). People are now recognizing that everything posted on the internet comes at a cost of privacy, and privacy isn’t such a bad thing. Yet now we have corporations disabling products, justified by esoteric clauses defined in amended EULAs, so maybe it’s time to reevaluate where we stand as end users. You don’t have to update iTunes or use Apple’s App Store, but that makes owning an Apple device pretty difficult. You don’t have to agree to Windows default telemetry settings, but it’ll be difficult to disable everything in Windows. Yeah, I can run Bastille Linux and wear a trilby in my dark cave of knowledge, but I’ll miss out on some pretty neat advances in technology, and life in general.

So what do we do? Read the fine print and make educated and logical choices based on our desired needs for the product or service’s intended use. Yeah right.... I need my Instagram filters more than my privacy. Ok, maybe making sure people don’t use iTunes to produce biological weapons is a good idea, but “how” would be something interesting to see. That person should receive a grant or something.

Does the Silo Structure in Enterprises Prevent a Comprehensive Security Concept?

Buchbinder Wanninger: you surely know the routine. Nobody feels responsible, and everyone passes the request from one person to the next. Things work much the same way in companies when it comes to security. Is the applications department responsible, does the topic belong with the network team, or does it sit in a silo of its own as application security? As a result, application security is unfortunately often ignored until an attack occurs, one that may cost money, reputation, or in the worst case customers, and thus becomes the ultimate worst-case scenario (the “Super-GAU”): the loss of business.

IT, and with it every department involved in IT, is no longer merely an “outfitter” but has become a business enabler. These departments determine whether and how a company achieves business success, because we all need hardware, software, and above all security to generate revenue. Isn't it worth considering moving security, and with it a comprehensive concept, to a higher level? A level that sits above the individual departments and is part of executive management? That would have the advantage of an overarching view and, above all, a focus on the business. This staff position, let's call it the CDO (Chief Digital Officer), does not report to the head of finance as a CIO usually does, but has freedom to act and revenue targets of its own, so it has a personal interest in smooth operations and in the IT departments working hand in hand.

I would like to invite you to read my blog post on this topic at http://www.silicon.de/blog/ist-ein-cdo-wirklich-noetig/ and to discuss it with me. What does it look like in your company: how are security concerns and related questions resolved, who is responsible, and do you have a holistic approach to security?