SSL Profiles Part 8: Client Authentication

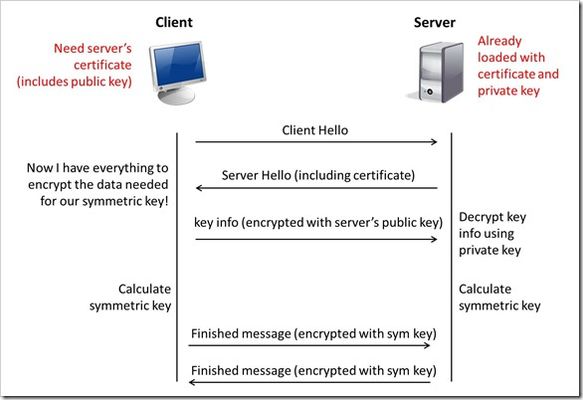

This is the eighth article in a series of Tech Tips that highlight SSL Profiles on the BIG-IP LTM. SSL Overview and Handshake SSL Certificates Certificate Chain Implementation Cipher Suites SSL Options SSL Renegotiation Server Name Indication Client Authentication Server Authentication All the "Little" Options This article will discuss the concept of Client Authentication, how it works, and how the BIG-IP system allows you to configure it for your environment. Client Authentication In a TLS handshake, the client and the server exchange several messages that ultimately result in an encrypted channel for secure communication. During this handshake, the client authenticates the server's identity by verifying the server certificate (for more on the TLS handshake, see SSL Overview and Handshake - Article 1in this series). Although the client always authenticates the server's identity, the server is not required to authenticate the client's identity. However, there are some situations that call for the server to authenticate the client. Client authentication is a feature that lets you authenticate users that are accessing a server. In client authentication, a certificate is passed from the client to the server and is verified by the server. Client authentication allow you to rest assured that the person represented by the certificate is the person you expect. Many companies want to ensure that only authorized users can gain access to the services and content they provide. As more personal and access-controlled information moves online, client authentication becomes more of a reality and a necessity. How Does Client Authentication Work? Before we jump into client authentication, let's make sure we understand server authentication. During the TLS handshake, the client authenticates the identity of the server by verifying the server's certificate and using the server's public key to encrypt data that will be used to compute the shared symmetric key. The server can only generate the symmetric key used in the TLS session if it can decrypt that data with its private key. The following diagram shows an abbreviated version of the TLS handshake that highlights some of these concepts. Ultimately, the client and server need to use a symmetric key to encrypt all communication during their TLS session. In order to calculate that key, the server shares its certificate with the client (the certificate includes the server's public key), and the client sends a random string of data to the server (encrypted with the server's public key). Now that the client and server each have the random string of data, they can each calculate (independently) the symmetric key that will be used to encrypt all remaining communication for the duration of that specific TLS session. In fact, the client and server both send a "Finished' message at the end of the handshake...and that message is encrypted with the symmetric key that they have both calculated on their own. So, if all that stuff works and they can both read each other's "Finished" message, then the server has been authenticated by the client and they proceed along with smiles on their collective faces (encrypted smiles, of course). You'll notice in the diagram above that the server sent its certificate to the client, but the client never sent its certificate to the server. When client authentication is used, the server still sends its certificate to the client, but it also sends a "Certificate Request" message to the client. This lets the client know that it needs to get its certificate ready because the next message from the client to the server (during the handshake) will need to include the client certificate. The following diagram shows the added steps needed during the TLS handshake for client authentication. So, you can see that when client authentication is enabled, the public and private keys are still used to encrypt and decrypt critical information that leads to the shared symmetric key. In addition to the public and private keys being used for authentication, the client and server both send certificates and each verifies the certificate of the other. This certificate verification is also part of the authentication process for both the client and the server. The certificate verification process includes four important checks. If any of these checks do not return a valid response, the certificate verification fails (which makes the TLS handshake fail) and the session will terminate. These checks are as follows: Check digital signature Check certificate chain Check expiration date and validity period Check certificate revocation status Here's how the client and server accomplish each of the checks for client authentication: Digital Signature: The client sends a "Certificate Verify" message that contains a digitally signed copy of the previous handshake message. This message is signed using the client certificate's private key. The server can validate the message digest of the digital signature by using the client's public key (which is found in the client certificate). Once the digital signature is validated, the server knows that public key belonging to the client matches the private key used to create the signature. Certificate Chain: The server maintains a list of trusted CAs, and this list determines which certificates the server will accept. The server will use the public key from the CA certificate (which it has in its list of trusted CAs) to validate the CA's digital signature on the certificate being presented. If the message digest has changed or if the public key doesn't correspond to the CA's private key used to sign the certificate, the verification fails and the handshake terminates. Expiration Date and Validity Period: The server compares the current date to the validity period listed in the certificate. If the expiration date has not passed and the current date is within the period, everything is good. If it's not, then the verification fails and the handshake terminates. Certificate Revocation Status: The server compares the client certificate to the list of revoked certificates on the system. If the client certificate is on the list, the verification fails and the handshake terminates. As you can see, a bunch of stuff has to happen in just the right way for the Client-Authenticated TLS handshake to finalize correctly. But, all this is in place for your own protection. After all, you want to make sure that no one else can steal your identity and impersonate you on a critically important website! BIG-IP Configuration Now that we've established the foundation for client authentication in a TLS handshake, let's figure out how the BIG-IP is set up to handle this feature. The following screenshot shows the user interface for configuring Client Authentication. To get here, navigate to Local Traffic > Profiles > SSL > Client. The Client Certificate drop down menu has three settings: Ignore (default), Require, and Request. The "Ignore" setting specifies that the system will ignore any certificate presented and will not authenticate the client before establishing the SSL session. This effectively turns off client authentication. The "Require" setting enforces client authentication. When this setting is enabled, the BIG-IP will request a client certificate and attempt to verify it. An SSL session is established only if a valid client certificate from a trusted CA is presented. Finally, the "Request" setting enables optional client authentication. When this setting is enabled, the BIG-IP will request a client certificate and attempt to verify it. However, an SSL session will be established regardless of whether or not a valid client certificate from a trusted CA is presented. The Request option is often used in conjunction with iRules in order to provide selective access depending on the certificate that is presented. For example: let's say you would like to allow clients who present a certificate from a trusted CA to gain access to the application while clients who do not provide the required certificate be redirected to a page detailing the access requirements. If you are not using iRules to enforce a different outcome based on the certificate details, there is no significant benefit to using the "Request" setting versus the default "Ignore" setting. In both cases, an SSL session will be established regardless of the certificate presented. Frequency specifies the frequency of client authentication for an SSL session. This menu offers two options: Once (default) and Always. The "Once" setting specifies that the system will authenticate the client only once for an SSL session. The "Always"setting specifies that the system will authenticate the client once when the SSL session is established as well as each time that session is reused. The Retain Certificate box is checked by default. When checked, the client certificate is retained for the SSL session. Certificate Chain Traversal Depth specifies the maximum number of certificates that can be traversed in a client certificate chain. The default for this setting is 9. Remember that "Certificate Chain" part of the verification checks? This setting is where you configure the depth that you allow the server to dig for a trusted CA. For more on certificate chains, see article 2 of this SSL series. Trusted Certificate Authorities setting is used to specify the BIG-IP's Trusted Certificate Authorities store. These are the CAs that the BIG-IP trusts when it verifies a client certificate that is presented during client authentication. The default value for the Trusted Certificate Authorities setting is None, indicating that no CAs are trusted. Don't forget...if the BIG-IP Client Certificate menu is set to Require but the Trusted Certificate Authorities is set to None, clients will not be able to establish SSL sessions with the virtual server. The drop down list in this setting includes the name of all the SSL certificates installed in the BIG-IP's /config/ssl/ssl.crt directory. A newly-installed BIG-IP system will include the following certificates: default certificate and ca-bundle certificate. The default certificate is a self-signed server certificate used when testing SSL profiles. This certificate is not appropriate for use as a Trusted Certificate Authorities certificate bundle. The ca-bundle certificate is a bundle of CA certificates from most of the well-known PKIs around the world. This certificate may be appropriate for use as a Trusted Certificate Authorities certificate bundle. However, if this bundle is specified as the Trusted Certificate Authorities certificate store, any valid client certificate that is signed by one of the popular Root CAs included in the default ca-bundle.crt will be authenticated. This provides some level of identification, but it provides very little access control since almost any valid client certificate could be authenticated. If you want to trust only certificates signed by a specific CA or set of CAs, you should create and install a bundle containing the certificates of the CAs whose certificates you trust. The bundle must also include the entire chain of CA certificates necessary to establish a chain of trust. Once you create this new certificate bundle, you can select it in the Trusted Certificate Authorities drop down menu. The Advertised Certificate Authorities setting is used to specify the CAs that the BIG-IP advertises as trusted when soliciting a client certificate for client authentication. The default value for the Advertised Certificate Authorities setting is None, indicating that no CAs are advertised. When set to None, no list of trusted CAs is sent to a client with the certificate request. If the Client Certificate menu is set to Require or Request, you can configure the Advertised Certificate Authorities setting to send clients a list of CAs that the server is likely to trust. Like the Trusted Certificate Authorities list, the Advertised Certificate Authorities drop down list includes the name of all the SSL certificates installed in the BIG-IP /config/ssl/ssl.crt directory. A newly-installed BIG-IP system includes the following certificates: default certificate and ca-bundle certificate. The default certificate is a self-signed server certificate used for testing SSL profiles. This certificate is not appropriate for use as an Advertised Certificate Authorities certificate bundle. The ca-bundle certificate is a bundle of CA certificates from most of the well-known PKIs around the world. This certificate may be appropriate for use as an Advertised Certificate Authorities certificate bundle. If you want to advertise only a specific CA or set of CAs, you should create and install a bundle containing the certificates of the CA to advertise. Once you create this new certificate bundle, you can select it in the Advertised Certificate Authorities setting drop down menu. You are allowed to configure the Advertised Certificate Authorities setting to send a different list of CAs than that specified for the Trusted Certificate Authorities. This allows greater control over the configuration information shared with unknown clients. You might not want to reveal the entire list of trusted CAs to a client that does not automatically present a valid client certificate from a trusted CA. Finally, you should avoid specifying a bundle that contains a large number of certificates when you configure the Advertised Certificate Authorities setting. This will cut down on the number of certificates exchanged during a client SSL handshake. The maximum size allowed by the BIG-IP for native SSL handshake messages is 14,304 bytes. Most handshakes don't result in large message lengths, but if the SSL handshake is negotiating a native cipher and the total length of all messages in the handshake exceeds the 14,304 byte threshold, the handshake will fail. The Certificate Revocation List (CRL) setting allows you to specify a CRL that the BIG-IP will use to check revocation status of a certificate prior to authenticating a client. If you want to use a CRL, you must upload it to the /config/ssl/ssl.crl directory on the BIG-IP. The name of the CRL file may then be entered in the CRL setting dialog box. Note that this box will offer no drop down menu options until you upload a CRL file to the BIG-IP. Since CRLs can quickly become outdated, you should use either OCSP or CRLDP profiles for more robust and current verification functionality. Conclusion Well, that wraps up our discussion on Client Authentication. I hope the information helped, and I hope you can use this to configure your BIG-IP to meet the needs of your specific network environment. Be sure to come back for our next article in the SSL series. As always, if you have any other questions, feel free to post a question here or Contact Us directly. See you next time!23KViews1like21CommentsSNI Routing with BIG-IP

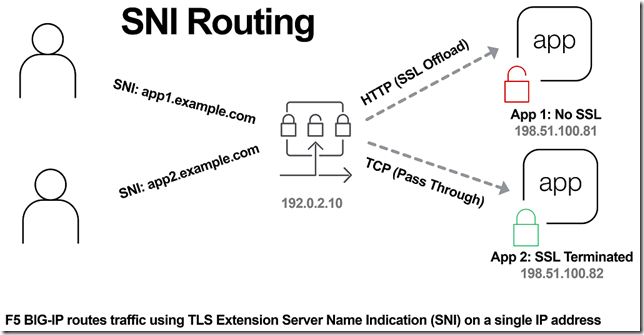

In the previous article, The Three HTTP Routing Patterns, Lori MacVittie covers 3 methods of routing. Today we will look at Server Name Indication (SNI) routing as an additional method of routing HTTPS or any protocol that uses TLS and SNI. Using SNI we can route traffic to a destination without having to terminate the SSL connection. This enables several benefits including: Reduced number of Public IPs Simplified configuration More intelligent routing of TLS traffic Terminating SSL Connections When you havea SSL certificate and key you can perform the cryptographic actions required to encrypt traffic using TLS. This is what I refer to as “terminating the SSL connection” throughout this article. When you want to route traffic this is a chickenand an egg problem, becausefor TLS traffic you want to be able to route the traffic by being able to inspect the contents, but this normally requires being able to “terminate the SSL connection”. The goal of this article is to layer in traffic routing for TLS traffic without having to require having/knowing the original SSL certificate and key. Server Name Indication (SNI) SNI is a TLS extension that makes it possible to "share" certificates on a single IP address. This is possible due to a client using a TLS extension that requests a specific name before the server responds with a SSL certificate. Prior to SNI, the other options would be a wildcard certificate or Subject Alternative Name (SAN) that allows you to specify multiple names with a single certificate. SNI with Virtual Servers It has been possible to use SNI on F5 BIG-IP since TMOS 11.3.0. The following KB13452 outlines how it can be configured. In this scenario (from the KB article) the BIG-IP is terminating the SSL connection. Not all clients support SNI and you will always need to specify a “fallback” profile that will be used if a SNI name is not used or matched. The next example will look at how to use SNI without terminating the SSL connection. SNI Routing Occasionally you may have the need to have a hybrid configuration of terminating SSL connections on the BIG-IP and sending connections without terminating SSL. One method is to create two separate virtual servers, one for SSL connections that the BIG-IP will handle (using clientssl profile) and one that it will not handle SSL (just TCP). This works OK for a small number of backends, but does not scale well if you have many backends (run out of Public IP addresses). Using SNI Routing we can handle everything on a single virtual server / Public IP address. There are 3 methods for performing SNI Routing with BIG-IP iRule with binary scan a. Article by Colin Walker code attribute to Joel Moses b. Code Share by Stanislas Piron iRule with SSL::extensions Local Traffic Policy Option #1 is for folks that prefer complete control of the TLS protocol. It only requires the use of a TCP profile. Options #2 and #3 only require the use of a SSL persistence profile and TCP profile. SNI Routing with Local Traffic Policy We will skip option #1 and #2 in this article and look at using a Local Traffic Policy for SNI Routing. For a review of Local Traffic Policies check out the following DevCentral articles: LTM Policy Jan 2015 Simplifying Local Traffic Policies in BIG-IP 12.1 June 2016 In previous articles about Local Traffic Policiesthe focus was on routing HTTP traffic, but today we will use it to route SSL connections using SNI. In the following example, using a Local Traffic Policy named “sni_routing”, we are setting a condition on the SSL Extension “servername” and sending the traffic to a pool without terminating the SSL connection. The pool member could be another server or another BIG-IP device. The next example will forward the traffic to another virtual server that is configured with a clientssl profile. This uses VIP targeting to send traffic to another virtual server on the same device. In both examples it is important to note that the “condition”/“action” has been changed from occurring on “request” (that maps to a HTTP L7 request) to “ssl client hello”. By performing the action prior to any L7 functions occurring, we can forward the traffic without terminating the SSL connection. The previous example policy, “sni_routing”, can be attached to a Virtual Server that only has a TCP profile and SSL persistence profile. No HTTP or clientssl profile is required! This method can also be used to solve the issue of how to consolidate multiple SSL virtual servers behind a single virtual server that have different APM and/or ASM policies. This is similar to the architecture that is used by the Container Connector for Cloud Foundry; in creating a two-tier load balancing solution on a single device. Routed Correctly? TLS 1.3 has interesting proposals on how to obscure the servername (TLS in TLS?), but for now this is a useful and practical method of handling multiple SSL certs on a single IP. In the future this may still be possible as well with TLS 1.3. For example the use of a HTTP Fronting service could be a tier 1 virtual server (this is just my personal speculation, I have not tried, at the time of publishing this was still a draft proposal). In other news it has been demonstrated that a combination of using SNI and a different host header can be used for “domain fronting”. A method to enforce consistent policy (prevent domain fronting) would be to layer in additional conditions that match requested SNI servername (TLS extension) with requested HOST header (L7 HTTP header). This would help enforce that a tenant is using a certificate that is associated with their application and not “borrowing” the name and certificate that is being used by an adjacent service. We don’t think of a TLS extension as an attribute that can be used to route application traffic, but it is useful and possible on BIG-IP.21KViews0likes16CommentsBIG-IP Configuration Conversion Scripts

Kirk Bauer, John Alam, and Pete White created a handful of perl and/or python scripts aimed at easing your migration from some of the “other guys” to BIG-IP.While they aren’t going to map every nook and cranny of the configurations to a BIG-IP feature, they will get you well along the way, taking out as much of the human error element as possible.Links to the codeshare articles below. Cisco ACE (perl) Cisco ACE via tmsh (perl) Cisco ACE (python) Cisco CSS (perl) Cisco CSS via tmsh (perl) Cisco CSM (perl) Citrix Netscaler (perl) Radware via tmsh (perl) Radware (python)1.6KViews1like13CommentsSMTP Smugglers Blues

The SMTP protocol has been vulnerable to email smuggling for decades. Many of the mail servers out there have mitigations in place to handle this vulnerability but not all of them, especially the quick libraries and add-ons you can find on web sites. Protecting your server from these attacks is simple with F5 BIG-IP Advanced WAF and our SMTP Protocol Security profiles. Read to learn how to give those bad actors the “Smugglers Blues”188Views2likes2CommentsHow I did it - "Integrating Fortanix SDKMS with the BIG-IP"

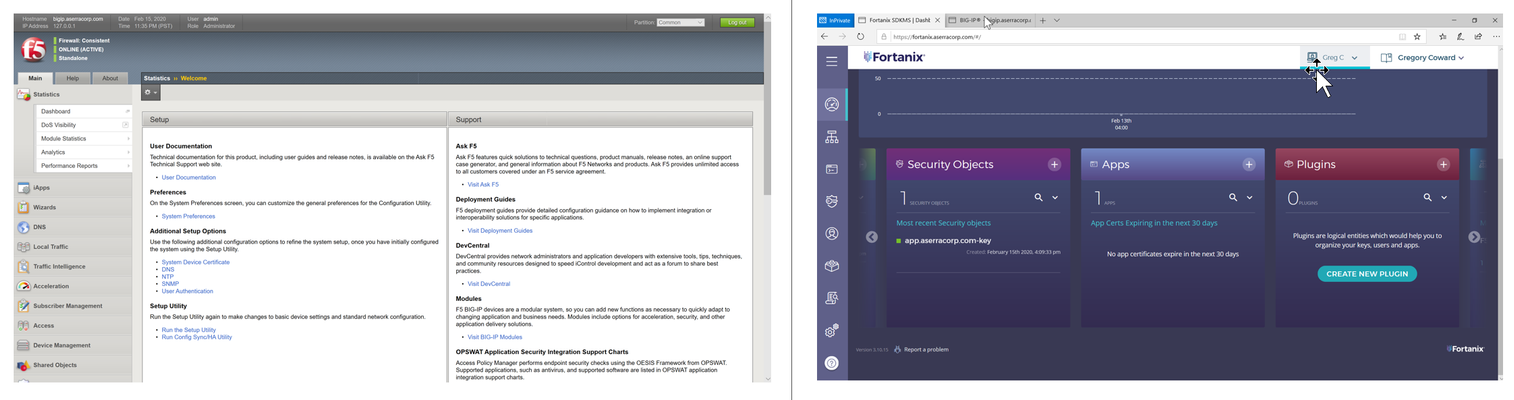

Let Me Ask You a Question I need to implement a key management service (KMS) to manage my organization’s TLS keys.The KMS/HSM needs to be cloud-agnostic, secure, scalable, and available to handle crypto operations offloaded from web applications deployed on a variety of platforms across the globe.What’s more, there is a requirement that the organization maintains full control and ownership of the KMS and it’s operations so using a SaaS offering is not an option. Here’s the question, what can I use? Why Fortanix? Answering the above question isn’t an easy one.When I hear phrases like “scalable and highly available” and “...across the globe”, I immediately start looking at the public cloud.But, I still need to be cloud agnostic and maintain full control so cloud HSMs and SaaS offerings don’t fit the bill. To address the above requirements, I turned to one of F5’s partners and deployed the Fortanix Self-Defending Key Management Service (SDKMS).The Fortanix SDKMS system checks all the boxes, including: Cloud Agnostic - I am able to make use of KMS services across all public cloud platforms as well as on-premises. Secure/Scalable/Highly-Available - Deploys in the public cloud on hardware that utilizes Intel SGX technology and runs every operation in HSM-grade security. Full Control - Deploys in your infrastructure and provides web-based UI for centralized management. Solution Overview In this article, we’ll walk through configuring the BIG-IP to offload TLS crypto operations to a Fortanix SDKMS.The deployment process is quite similar to F5’s integration with Equinix SmartKey, (Fortanix SDKMS SaaS offering).The following steps are based upon F5’s guidance for implementing a network HSM. Okay, let’s take a look at how I did it. Prerequisites F5 BIG-IP LTM 14.1.0 or later - Virtual Edition (VE) utilized for this article.Both hardware and virtual edition platforms support network HSM integration. Additionally, you will need to provide a license covering the network HSM module. Fortanix SDKMS Cluster - We’re going to go right to the source, (Fortanix installation guidance). Setting up the SDKMS cluster is relatively straightforward and runs on Azure’s new DC-series instances, (currently in preview).Currently, the DC-series is available for public preview in the US East region only.The cool thing about the DC-series is that it makes use of Intel SGX technology.A better way to put it is it allows Azure infrastructure customers to make use of SGX. Fortanix SDKMS makes use of the underlying SGX technology to providesecure scalable data at rest. Step 1 - Create SDKMS Application Assuming the prerequisites have been met, (i.e. I have a Fortanix SDKMS stood up), the first thing I need to do is create an application object in SDKMS. The application object can access certificates, keys, and secrets that will be used by my application, (delivered via the BIG-IP).. A. Login to the Fortanix SDKMS UI.Select the ‘Apps’ icon from the left blade and then the ‘+’ to open a new application form. B. Provide the name of the application and select ‘API Key’ for the authentication method. C. Create a group and assign the application to the group.The group represents a collection of security objects, (applications, keys, certificates, etc.) that are available to members of the group. D. Select ‘Save’ to create the application, (see below left). With the application created, select ‘COPY API KEY’, (see above right) to capture the api key and store for later use. The key will be used by the BIG-IP as the password to authenticate calls to SDKMS. Step 2 - Install Fortanix Plugin Now that we have SDKMS prepared, I need to turn my attention to the BIG-IP.In this step, I will use my favorite ssh client to log into the BIG-IP as root. From there I will use the following commands to download and install the Fortanix plugin onto the BIG-IP.The plugin, (RPM) is available for download from here. cd /shared/ mkdir nethsm cd nethsm curl -O https://d2bzqwib4mjc49.cloudfront.net/3.11.1281/fortanix-pkcs11-4.25.2353-0.x86_64.rpm rpm -ivh ./fortanix-pkcs11-4.25.2353-0.x86_64.rpm Step 3 - Configure BIG-IP netHSM Integration A. Add the Fortanix HSM library to the BIG-IP tmsh create sys crypto fips external-hsm vendor auto pkcs11-lib-path /opt/fortanix/pkcs11/fortanix_pkcs11.sofortanix-pkcs11-3.11.1281-0.x86_64.rpm B. Configure the netHSM partition tmsh create sys crypto fips nethsm-partition auto password <copied API key> C. Restart thepkcs11d service bigstart restart pkcs11d tmm D. Testing Connectivity I will now use the BIG-IP management GUI to test connectivity between the BIG-IP and SDKMS. After logging into the BIG-IP GUI navigate to System --> Certificate Management --> HSM Management --> External HSM. Under the 'Partitions' section check the checkbox next to the partition in the partition list and select 'Test'. Below is example output of a successful connectivity test. Step 4 - Import SSL Certificate/key to BIG-IP and SDKMS A. Import private key into SDKMS Now that we have our external HSM, (Fortanix), https://fortanix.aserracorp.com integrated with our BIG-IP let’s put it to use. To start with, I will import a private key into SDKMS. Login to the Fortanix SDKMS UI and select the ‘Security Objects’ icon from the left blade and then the ‘+’ to open a new security object form. Provide the name for the key and select ‘Import’. Select 'RSA' for the object type Select 'Base64' and upload the my key. Associate the key to previously created group. Select ‘Import' to create the security object, (see below). B. Import SSL Certificate and netHSM Key Pointer into BIG-IP With the SDKMS now hostng the private key, I now import the corresponding certificate into the BIG-IP. Additionally, I must create a key resource pointing to the Fortanix SDKMS-hosted key. Login to the BIG-IP management GUI and navigate to System --> Certificate Management --> SSL Certificate List --> Import Select 'Certificate' for import type and provide a name. Browse to and upload certificate, select 'Import', (see below left). navigate to System --> Certificate Management --> SSL Certificate List --> Import Select 'Key' for import type and provide a name. The name must match the security object name of the SDKMS-stored key. Select 'From NetHSM' for 'Key Source', select 'Import', (see below right). C. Create SSL Profile and Attach to Virtual Server The last thing I need to do is create a Client SSL profile and associate it with my virtual server. Login to the BIG-IP management GUI and navigate to Local Traffic --> Profiles --> SSL --> CLIENT --> + Provide a name and check the 'Custom' checkbox. In the 'Certificate Key Chain' section select 'Add' Select the previously imported certificate and key from the drop-down menus Select 'Finished' to create the profile, (see below left) navigate to Local Traffic --> Virtual Servers and select the appropriate virtual server Under the 'SSLProfile (Client)' section select the previously create SSL profile, (see below right). Select 'Update' to save the modified virtual server. Well, that's how I did it. Now with the setup and configuration completed, my application, (https://app.aserracorp.com) is now secured with the BIG-IP offloading the crypto workload to Fortanix SDKMS. Additional Links Fortanix Self-Defending Key Management Service Setting Up the Network HSM BIG-IP Local Traffic Manager Azure Confidential Computing1.3KViews0likes0CommentsTACACS+ Remote Role Configuration for BIG-IP

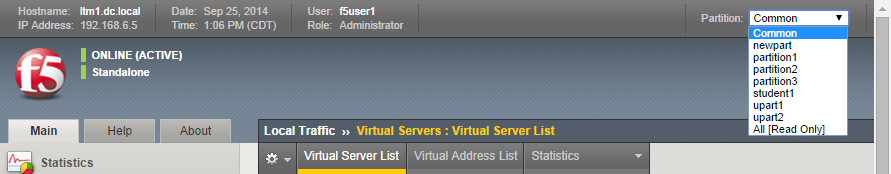

Several years ago (can it really have been 2009?) I wrote up a solution for using tacacs+ as the authentication and authorization source for BIG-IP user management. Much has changed in five years: new roles have been added to the system, tmsh has replaced bigpipe, and unrelated to our end of the solution, my favorite flavor of the free tacacs daemon, tac_plus, is no longer available! This article will cover all the steps necessary to get a tacacs+ installation established on a Ubuntu server, configure tacacs+, configure the BIG-IP to utilize that tacacs+ server, and test the installation. Before that, however, I'll address the role information necessary to make it all work. The tacacs config in this article is dependent on a version that I am no longer able to get installed on a modern linux flavor. Instead, try this Dockerized tacacs+ server for your testing. The details in the rest of the article are still appropriate. BIG-IP Remote Role Details There are quite a few more roles than previously. The table below shows all the roles available as of TMOS version 11.5.1. Role Role Value admin 0 resource-admin 20 user-manager 40 auditor 80 manager 100 application-editor 300 operator 400 certificate-manager 500 irule-manager 510 guest 700 web-application-security-administrator 800 web-application-security-editor 810 acceleration-policy-editor 850 no-access 900 In addition to the role, the console (tmsh or disabled) and partition (all, Common (default) or specified partition) settings need to be addressed. Installing tac_plus First, download the tac_plus package from pro-bono to /var/tmp. I'm assuming you already have gcc installed, if you don't, please check google for installing gcc on your Ubuntu installation. Change directory to /var/tmp and extract the package. cd /var/tmp/ #current file is DEVEL.201407301604.tar.bz2 tar xvf DEVEL.201407301604.tar.bz2 Change directory into PROJECTS, configure the package for tacacs, then compile and install it. Do these steps one at a time (don't copy and paste the group.) cd PROJECTS ./configure tac_plus make sudo make install After a successful installation, copy the sample configuration to the config directory, and copy the init script over to the system init script directory, modify the file attributes and permissions, then apply the init script to the system. sudo cp /usr/local/etc/mavis/sample/tac_plus.cfg /usr/local/etc/ sudo cp /var/tmp/PROJECTS/tac_plus/extra/etc_init.d_tac_plus /etc/init.d/tac_plus sudo chmod 755 /etc/init.d/tac_plus sudo update-rc.d tac_plus defaults Configuring tac_plus Now that the installation is complete, the configuration file needs to be cleaned up and configured. There are many options that can extend the power of the tac_plus daemon, but this article will focus on authentication and authorization specific to the BIG-IP role information described above. Starting with the daemon listener itself, this is contained in the spawnd id. I changed the port to the default tacacs port, which is 49 (tcp). id = spawnd { listen = { port = 49 } spawn = { instances min = 1 instances max = 10 } background = no } Next, the logging locations and host information need to be set. I left the debug values alone, as well as the binding address. Assume all the remaining code snippets from the tac_plus configuration are wrapped in the id = tac_plus { } section. debug = PACKET AUTHEN AUTHOR access log = /var/log/access.log accounting log = /var/log/acct.log host = world { address = ::/0 prompt = "\nAuthorized access only!\nTACACS+ Login\n" key = f5networks } After the host data is configured, the groups need to be configured. For this exercise, the groups will be aligned to the administrator, application editor, user manager, and ops roles, with admins and ops getting console access. Admins will have access to all partitions, ops will have access only to partition1, and the remaining groups will have access to the Common partition. group = adm { service = ppp { protocol = ip { set F5-LTM-User-Info-1 = adm set F5-LTM-User-Console = 1 set F5-LTM-User-Role = 0 set F5-LTM-User-Partition = all } } } group = appEd { service = ppp { protocol = ip { set F5-LTM-User-Info-1 = appEd set F5-LTM-User-Console = 0 set F5-LTM-User-Role = 300 set F5-LTM-User-Partition = Common } } } group = userMgr { service = ppp { protocol = ip { set F5-LTM-User-Info-1 = userMgr set F5-LTM-User-Console = 0 set F5-LTM-User-Role = 40 set F5-LTM-User-Partition = Common } } } group = ops { service = ppp { protocol = ip { set F5-LTM-User-Info-1 = ops set F5-LTM-User-Console = 1 set F5-LTM-User-Role = 400 set F5-LTM-User-Partition = partition1 } } } Finally, map a user to each of those groups for testing the solution. I would not recommend using a clear key (host configuration) or clear passwords in production, these are shown here for demonstration purposes only. Mapping to /etc/password, or even a centralized ldap/ad solution would be far better for operational considerations. user = f5user1 { password = clear letmein member = adm } user = f5user2 { password = clear letmein member = appEd } user = f5user3 { password = clear letmein member = userMgr } user = f5user4 { password = clear letmein member = ops } Save the file, and then start the tac_plus daemon by typing service tac_plus start. Configuring BIG-IP Now that the tacacs configuration is complete and the service is available, the BIG-IP needs to be configured to use it! The remote role configuration is pretty straight forward in tmsh, and note that the role info aligns with the groups configured in tac_plus. auth remote-role { role-info { adm { attribute F5-LTM-User-Info-1=adm console %F5-LTM-User-Console line-order 1 role %F5-LTM-User-Role user-partition %F5-LTM-User-Partition } appEd { attribute F5-LTM-User-Info-1=appEd console %F5-LTM-User-Console line-order 2 role %F5-LTM-User-Role user-partition %F5-LTM-User-Partition } ops { attribute F5-LTM-User-Info-1=ops console %F5-LTM-User-Console line-order 4 role %F5-LTM-User-Role user-partition %F5-LTM-User-Partition } userMgr { attribute F5-LTM-User-Info-1=userMgr console %F5-LTM-User-Console line-order 3 role %F5-LTM-User-Role user-partition %F5-LTM-User-Partition } } } Note: Because we defined the behaviors for each role in tac_plus, they don't need to be redefined here, which is why the % syntax is used in this configuration for the console, role, and user-partition. However, if it is preferred to define the behaviors on box, that can be done instead and then you can just define the F5-LTM-User-Info-1 attribute on tac_plus. Either way is supported. Here's an example of the alternative on the BIG-IP side for the admin role. adm { attribute F5-LTM-User-Info-1=adm console enabled line-order 1 role administrator user-partition All } Final step is to set the authentication source to tacacs and set the host parameters. auth source { type tacacs } auth tacacs system-auth { debug enabled protocol ip secret $M$2w$jT3pHxY6dqGF1tHKgl4mWw== servers { 192.168.6.10 } service ppp } Testing the Solution It wouldn't be much of a solution if it didn't work, so the following screenshots show the functionality as expected in the GUI and the CLI. F5user1 This user is in the admin group, and should have access to all the partitions, be an administrator, and be able to not only connect to the console, but jump out of tmsh to the advanced shell. You can do this with the run util bash command in tmsh. F5user2 This user is an application editor, and should have access only to the common partition with no access to the console. Notice the failed logins at the CLI, and the partition is firm with no drop down. F5user3 This user has the user manager role and like the application editor has no access to the console. The partition is hard-coded to common as well. F5user4 Finally, the last user is mapped to the ops group, so they will be bound to partition1, and whereas they have console access, they do not have access to the advanced shell as they are not an admin user.4.6KViews1like5CommentsUsing BIG-IP GTM to Integrate with Amazon Web Services

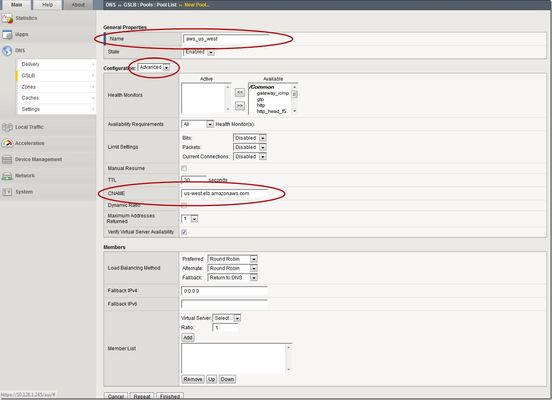

This is the latest in a series of DNS articles that I've been writing over the past couple of months. This article is taken from a fantastic solution that Joe Cassidy developed. So, thanks to Joe for developing this solution, and thanks for the opportunity to write about it here on DevCentral. As a quick reminder, my first six articles are: Let's Talk DNS on DevCentral DNS The F5 Way: A Paradigm Shift DNS Express and Zone Transfers The BIG-IP GTM: Configuring DNSSEC DNS on the BIG-IP: IPv6 to IPv4 Translation DNS Caching The Scenario Let's say you are an F5 customer who has external GTMs and LTMs in your environment, but you are not leveraging them for your main website (example.com). Your website is a zone sitting on your windows DNS servers in your DMZ that round robin load balance to some backend webservers. You've heard all about the benefits of the cloud (and rightfully so), and you want to move your web content to the Amazon Cloud. Nice choice! As you were making the move to Amazon, you were given instructions by Amazon to just CNAME your domain to two unique Amazon Elastic Load Balanced (ELB) domains. Amazon’s requests were not feasible for a few reasons...one of which is that it breaks the RFC. So, you engage in a series of architecture meetings to figure all this stuff out. Amazon told your Active Directory/DNS team to CNAME www.example.com and example.com to two AWS clusters: us-east.elb.amazonaws.com and us-west.elb.amazonaws.com. You couldn't use Microsoft DNS to perform a basic CNAME of these records because of the BIND limitation of CNAME'ing a single A record to multiple aliases. Additionally, you couldn't point to IPs because Amazon said they will be using dynamic IPs for your platform. So, what to do, right? The Solution The good news is that you can use the functionality and flexibility of your F5 technology to easily solve this problem. Here are a few steps that will guide you through this specific scenario: Redirect requests for http://example.com to http://www.example.com and apply it to your Virtual Server (1.2.3.4:80). You can redirect using HTTP Class profiles (v11.3 and prior) or using a policy with Centralized Policy Matching (v11.4 and newer) or you can always write an iRule to redirect! Make www.example.com a CNAME record to example.lb.example.com; where *.lb.example.com is a sub-delegated zone of example.com that resides on your BIG-IP GTM. Create a global traffic pool “aws_us_east” that contains no members but rather a CNAME to us-east.elb.amazonaws.com. Create another global traffic pool “aws_us_west” that contains no members but rather a CNAME to us-west.elb.amazonaws.com. The following screenshot shows the details of creating the global traffic pools (using v11.5). Notice you have to select the "Advanced" configuration to add the CNAME. Create a global traffic Wide IP example.lb.example.com with two pool members “aws_us_east” and “aws_us_west”. The following screenshot shows the details. Create two global traffic regions: “eastern” and “western”. The screenshot below shows the details of creating the traffic regions. Create global traffic topology records using "Request Source: Region is eastern" and "Destination Pool is aws_us_east". Repeat this for the western region using the aws_us_west pool. The screenshot below shows the details of creating these records. Modify Pool settings under Wide IP www.example.com to use "Topology" as load balancing method. See the screenshot below for details. How it all works... Here's the flow of events that take place as a user types in the web address and ultimately receives the correct IP address. External client types http://example.com into their web browser Internet DNS resolution takes place and maps example.com to your Virtual Server address: IN A 1.2.3.4 An HTTP request is directed to 1.2.3.4:80 Your LTM checks for a profile, the HTTP profile is enabled, the redirect request is applied, and redirect user request with 301 response code is executed External client receives 301 response code and their browser makes a new request to http://www.example.com Internet DNS resolution takes place and maps www.example.com to IN CNAME example.lb.example.com Internet DNS resolution continues mapping example.lb.example.com to your GTM configured Wide IP The Wide IP load balances the request to one of the pools based on the configured logic: Round Robin, Global Availability, Topology or Ratio (we chose "Topology" for our solution) The GTM-configured pool contains a CNAME to either us_east or us_west AWS data centers Internet DNS resolution takes place mapping the request to the ELB hostname (i.e. us-west.elb.amazonaws.com) and gives two A records External client http request is mapped to one of the returned IP addresses And, there you have it. With this solution, you can integrate AWS using your existing LTM and GTM technology! I hope this helps, and I hope you can implement this and other solutions using all the flexibility and power of your F5 technology.2.7KViews1like11CommentsSSL Orchestrator Advanced Use Cases: Outbound SNAT Persistence

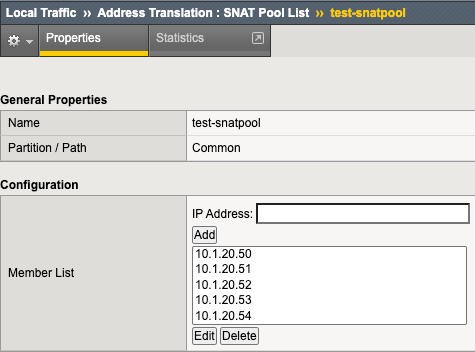

Introduction F5 BIG-IP is synonymous with "flexibility". You likely have few other devices in your architecture that provide the breadth of capabilities that come native with the BIG-IP platform. And for each and every BIG-IP product module, the opportunities to expand functionality are almost limitless. In this article series we examine the flexibility options of the F5 SSL Orchestrator in a set of "advanced" use cases. If you haven't noticed, the world has been steadily moving toward encrypted communications. Everything from web, email, voice, video, chat, and IoT is now wrapped in TLS, and that's a good thing. The problem is, malware - that thing that creates havoc in your organization, that exfiltrates personnel records to the Dark Web - isn't stopped by encryption. TLS 1.3 and multi-factor authentication don't eradicate malware. The only reasonable way to defend against it is to catch it in the act, and an entire industry of security products are designed for just this task. But ironically, encryption makes this hard. You can't protect against what you can't see. F5 SSL Orchestrator simplifies traffic decryption and malware inspection, and dynamically orchestrates traffic to your security stack. But it does much more than that. SSL Orchestrator is built on top of F5's BIG-IP platform, and as stated earlier, is abound with flexibility. SSL Orchestrator Use Case: Outbound SNAT Persistence It may not be the most obvious thing to think about persistence in the vein of outbound traffic. We are all groomed to accept that any given load balancer can handle persistence (or "affinity", or "stickiness") to backend servers. This is an important characteristic for sure. But in an outbound scenario, you don't load balance remote servers, so why on Earth would you need persistence? Well, I'm glad you asked. There indeed happens to be a somewhat unique, albeit infrequent use case where two different servers need to persist on YOUR IP address. The classic example is a site that requires federated authentication, where the service provider (SP) generates a token (perhaps a SAML auth request) and inside of that request the SP has embedded the client IP. The client receives this message and is redirected to the IdP to authenticate. But in this case the client is talking to the outside world through a forward proxy, and outbound source NAT (SNAT) could be required in this environment. That means there's a potential that the client IP address as seen from the two remote servers could be different. So if the IdP needs to verify the client IP based on what's embedded in the authentication request token, that could possibly fail. The good news here is that federated authentication doesn't normally require client IP verification, and there aren't many other similar use cases, but it can happen. The F5 BIG-IP, as with ANY proxy server, load balancer, or ADC device, clearly supports server affinity, and in a highly flexible way. But, as with ANY proxy server, load balancer, or ADC device, that doesn't apply to SNAT addresses. Nevertheless, the F5 BIG-IP can be configured to do this, which is exactly what this article is about. We're going to flex some BIG-IP muscle to derive a unique and innovative way to enable outbound SNAT persistence. What we're basically talking about is ensuring that a single internal client persists a single outbound SNAT IP address, when and where needed, and as long as possible. It's important to note here that we're not really talking about persistence in the same way you think about load balanced server affinity. With affinity, you're stapling a single (remote) client "session" to a single load balanced server. With SNAT persistence, you're stapling a single outbound SNAT IP to a single internal client so that all remote servers see that same source address. Same-same but different-different. To do this we'll need a SNAT pool and an iRule. We need the SNAT pool to define the SNAT addresses we can use. And since SNAT pools don't provide a persistence option like regular pools do, we'll use an iRule to provide the stickiness. It's also worth noting here, again since we're not really talking about load balancing stickiness, that the IP persistence mechanism in the iRule may not (likely will not) evenly distribute the IPs in the SNAT pool. Your best bet is to provide as many SNAT pool IPs as possible and reasonable. The good news here is that, because you're using a BIG-IP, you can define exactly how you assert that IP stickiness. In most cases, you'll probably just want to persist on the internal client IP, but you could also persist on: Client source address and remote server port Client source address and remote destination addresses Client source, day of the week, the year+month+day % mod 2, a hash of the word-of-the-day...and hopefully you get the idea. Lot's of options. To make this work, let's start with the SNAT pool. Navigate to Local Traffic -> Address Translation -> SNAT Pool List in the BIG-IP and click Create. In the Member List section, add as many SNAT IPs as you can afford. Remember, these are going to be IPs on your outbound VLAN, so in the same subnet as your outbound VLAN self-IP. Figure: SNAT pool list You don't need to assign the SNAT pool to anything directly. The iRule will handle that. And now onto the iRule. Navigate to Local Traffic -> iRules -> iRule List in the BIG-IP, and click Create. Copy the following into the iRule editor: when RULE_INIT { ## This iRule should be applied to your SSLO intercaption rule ending with in-t-4. catch { unset -nocomplain static::snat_ips } ## For each SNAT IP needed define the IP versus dynamically looking it up. ## These need to be in the real SNAT pool as well so ARP works. set static::snat_ips(1) 10.1.20.50 set static::snat_ips(2) 10.1.20.51 set static::snat_ips(3) 10.1.20.52 set static::snat_ips(4) 10.1.20.53 set static::snat_ips(5) 10.1.20.54 ## Set to how many SNAT IPs were added set static::array_size 5 } when CLIENT_ACCEPTED priority 100 { ## Select and uncomment only ONE of the below SNAT persistence options ## Persist SNAT based on client address only snat $static::snat_ips([expr {[crc32 [IP::client_addr]] % $static::array_size}]) ## Persist SNAT based on client address and remote port #snat $static::snat_ips([expr {[crc32 [IP::client_addr] [TCP::remote_port]] % $static::array_size}]) ## Persist SNAT based on client address and remote address #snat $static::snat_ips([expr {[crc32 [IP::client_addr] [IP::local_addr]] % $static::array_size}]) } Let's take a moment to explain what this iRule is actually doing, and it is fairly straightforward. In RULE_INIT, which fires ONCE when you update the iRule, the members of the defined SNAT pool are read into an array. Then a second static variable is created to store the size of the array. These values are stored as static, global variables. In CLIENT_ACCEPTED we set a priority of 100 to control the order of execution under SSL Orchestrator as there is already a CLIENT_ACCEPTED iRule event on the topology (we want our new event to run first). Below that you're provided with three choices for persistence: persist on source IP only, source IP and destination port, or source IP and destination IP. You'll want to uncomment only ONE of these. Each basically performs a quick CRC hash on the selected value, then calculates a modulus based on the array size. This returns a number within the size of the array, that is then applied as the index to the array to extract one of the array values. This calculation is always the same for the same input value(s), so effectively persisting on that value. The selected SNAT IP is then fed to the 'snat' command, and there you have it. As stated, you're probably only going to need the source-only persistence option. Using either of the others will pin a SNAT IP to a client IP and protocol port (ex. client IP:443 or client IP:80), or pin a SNAT IP to a specific host (ex. client IP:www.example.com), respectively. At the end of the day, you can insert any reasonable expression that will result in the selection of one of the values in the SNAT pool array, so the sky is really the limit here. The last step is easiest of all. You need to attach this iRule to your SSL Orchestrator topology. To do that. navigate to SSL Orchestrator -> Configuration in the UI, select the Interception Rules tab, and click to edit the respective outbound interception rule. Scroll to the bottom of this page, and under Resources, add the new iRule to the Selected column. The order doesn't matter. Click Deploy to complete the change, and you're done. You can do a packet capture on your outbound VLAN to see what is happening. tcpdump -lnni [outbound vlan] host 93.184.216.34 And then access https://www.example.com to test. For your IP address you should see a consistent outgoing SNAT IP. If you have access to a Linux client, you can add multiple IP addresses to an interface and test with each: ifconfig eth0:1 10.1.10.51 ifconfig eth0:2 10.1.10.52 ifconfig eth0:3 10.1.10.53 ifconfig eth0:4 10.1.10.54 ifconfig eth0:5 10.1.10.55 curl -vk https://www.example.com --interface 10.1.10.51 curl -vk https://www.example.com --interface 10.1.10.52 curl -vk https://www.example.com --interface 10.1.10.53 curl -vk https://www.example.com --interface 10.1.10.54 curl -vk https://www.example.com --interface 10.1.10.55 And again there you have it. In just a few steps you've been able to enable outbound SNAT persistence, and along the way you have hopefully recognized the immense flexibility at your command.1.7KViews1like5CommentsConfigure the F5 BIG-IP as an Explicit Forward Web Proxy Using LTM

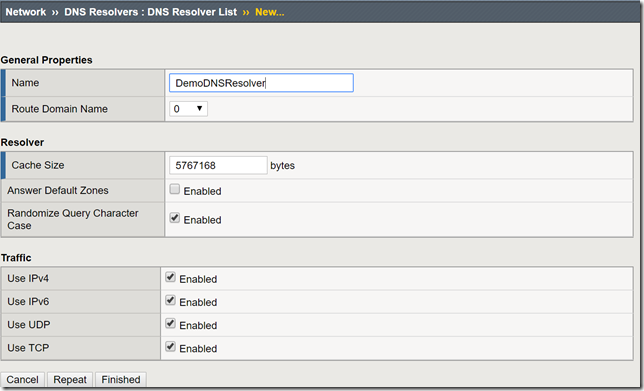

In a previous article, I provided a guide on using F5's Access Policy Manager (APM) and Secure Web Gateway (SWG) to provide forward web proxy services. While that guide was for organizations that are looking to provide secure internet access for their internal users, URL filtering as well as securing against both inbound and outbound malware, this guide will use only F5's Local Traffic Manager to allow internal clients external internet access. This week I was working with F5's very talented professional services team and we were presented with a requirement to allow workstation agents internet access to known secure sites to provide logs and analytics. Of course, this capability can be used to meet a number of other use cases, this was a real-world use case I wanted to share. So with that, let's get to it! Creating a DNS Resolver Navigate to Network > DNS Resolvers > click Create Name: DemoDNSResolver Leave all other settings at their defaults and click Finished Click the newly created DNS resolver object Click Forward Zones Click Add In this use case, we will be forwarding all requests to this DNS resolver. Name: . Address: 8.8.8.8 Note: Please use the correct DNS server for your use case. Service Port: 53 Click Add and Finished Creating a Network Tunnel Navigate to Network > Tunnels > Tunnel List > click Create Name: DemoTunnel Profile: tcp-forward Leave all other settings default and click Finished Create an http Profile Navigate to Local Traffic > Profiles > Services > HTTP > click Create Name: DemoExplicitHTTP Proxy Mode: Explicit Parent Profile: http-explict Scroll until you reach Explicit Proxy settings. DNS Resolver: DemoDNSResolver Tunnel Name: DemoTunnel Leave all other settings default and click Finish Create an Explicit Proxy Virtual Server Navigate to Local Traffic > Virtual Servers > click Create Name: explicit_proxy_vs Type: Standard Destination Address/Mask: 10.1.20.254 Note: This must be an IP address the internal clients can reach. Service Port: 8080 Protocol: TCP Note: This use case was for TCP traffic directed at known hosts on the internet. If you require other protocols or all, select the correct option for your use case from the drop-down menu. Protocol Profile (Client): f5-tcp-progressive Protocol Profile (Server): f5-tcp-wan HTTP Profile: DemoExplicitHTTP VLAN and Tunnel Traffic Enabled on: Internal Source Address Translation: Auto Map Leave all other settings at their defaults and click Finish. Create a Fast L4 Profile Navigate to Local Traffic > Profiles: Protocol: Fast L4 > click Create Name: demo_fastl4 Parent Profile: fastL4 Enable Loose Initiation and Loose Close as shown in the screenshot below. Click Finished Create a Wild Card Virtual Server In order to catch and forward all traffic to the BIG-IP's default gateway, we will create a virtual server to accept traffic from our explicit proxy virtual server created in the previous steps. Navigate to Local Traffic > Virtual Servers > Virtual Server List > click Create Name: wildcard_VS Type: Forwarding (IP) Source Address: 0.0.0.0/0 Destination Address: 0.0.0.0/0 Protocol: *All Protocols Service Port: 0 *All Ports Protocol Profile: demo_fastl4 VLAN and Tunnel Traffic: Enabled on...DemoTunnel Source Address Translation: Auto Map Leave all other settings at their defaults and click Finished. Testing and Validation Navigate to a workstation on your internal network. Launch Internet Explorer or the browser of your preference. Modify the proxy settings to reflect the explicit_proxy_VS created in previous steps. Attempt to access several sites and validate you are able to reach them. Whether successful or unsuccessful, navigate to Local Traffic > Virtual Servers > Virtual Server List > click the Statistics tab. Validate traffic is hitting both of the virtual servers created above. If it is not, for troubleshooting purposes only configure to the virtual servers to accept traffic on All VLANs and Tunnels as well as useful tools such as curl and tcpdump. You have now successfully configured your F5 BIG-IP to act as an explicit forward web proxy using LTM only. As stated above, this use case is not meant to fulfill all forward proxy use cases. If URL filtering and malware protection are required, APM and SWG integration should be considered. Until next time!33KViews6likes34CommentsF5 and Cisco ACI Essentials - Design guide for a Single Pod APIC cluster

Deployment considerations It is usually an easy decision to have BIG-IP as part of your ACI deployment as BIG-IP is a mature feature rich ADC solution. Where time is spent is nailing down the design and the deployment options for the BIG-IP in the environment. Below we will discuss a few of the most commonly asked questions: SNAT or no SNAT There are various options you can use to insert the BIG-IP into the ACI environment. One way is to use the BIG-IP as a gateway for servers or as a routing next hop for routing instances. Another option is to use Source Network Address Translation (SNAT) on the BIG-IP, however with enabling SNAT the visibility into the real source IP address is lost. If preserving the source IP is a requirement then ACI's Policy-Based Redirect (PBR) can be used to make sure the return traffic goes back to the BIG-IP. BIG-IP redundancy F5 BIG-IP can be deployed in different high-availability modes. The two common BIG-IP deployment modes: active-active and active-standby. Various design considerations, such as endpoint movement during fail-overs, MAC masquerade, source MAC-based forwarding, Link Layer Discovery Protocol (LLDP), and IP aging should also be taken into account for each of the deployment modes. Multi-tenancy Multi-tenancy is supported by both Cisco ACI and F5 BIG-IP in different ways. There are a few ways that multi-tenancy constructs on ACI can be mapped to multi-tenancy on BIG-IP. The constructs revolve around tenants, virtual routing and forwarding (VRF), route domains, and partitions. Multi-tenancy can also be based on the BIG-IP form factor (appliance, virtual edition and/or virtual clustered multiprocessor (vCMP)). Tighter integration Once a design option is selected there are questions around what more can be done from an operational or automation perspective now that we have a BIG-IP and ACI deployment? The F5 ACI ServiceCenter is an application developed on the Cisco ACI App Center platform built for exactly that purpose. It is an integration point between the F5 BIG-IP and Cisco ACI. The application provides an APIC administrator a unified way to manage both L2-L3 and L4-L7 infrastructure. Once day-0 activities are performed and BIG-IP is deployed within the ACI fabric using any of the design options selected for your environment, then the F5 ACI ServiceCenter can be used to handle day-1 and day-2 operations. The day-1 and day-2 operations provided by the application are well suited for both new/greenfield and existing/brownfield deployments of BIG-IP and ACI deployments. The integration is loosely coupled, which allows the F5 ACI ServiceCenter to be installed or uninstalled with no disruption to traffic flow, as well as no effect on the F5 BIG-IP and Cisco ACI configuration. Check here to find out more. All of the above topics and more are discussed in detail here in the single pod white paper.1.6KViews3likes0Comments