Declarative Advanced WAF policy lifecycle in a CI/CD pipeline

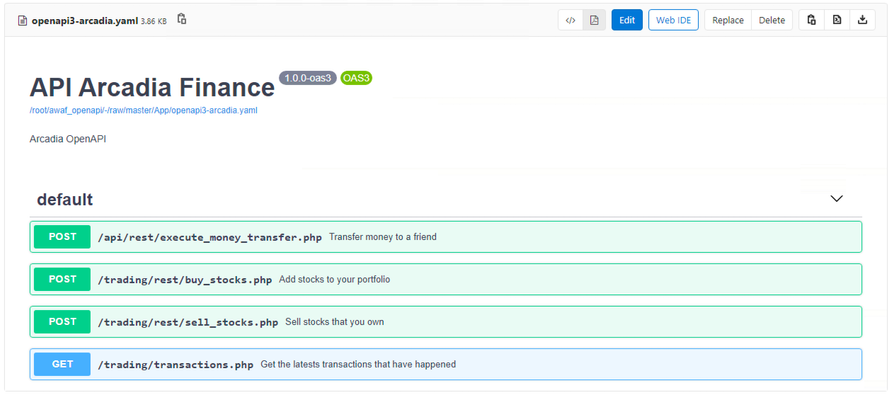

The purpose of this article is to show the configuration used to deploy a declarative Advanced WAF policy to a BIG-IP and to configure it automatically to protect an API workload by consuming an OpenAPI file that describes the application. For this experiment, a Gitlab CI/CD pipeline was used to deploy an API workload to Kubernetes, deploy a declarative Adv. WAF policy to a BIG-IP device, and tune the policy by incorporating learning suggestions exported from the BIG-IP. Lastly, the F5 WAF tester tool was used to assess and improve the defensive posture of the Adv. WAF policy.

Deploying the declarative Advanced WAF policy through a CI/CD pipeline

To deploy the Adv. WAF policy, the Gitlab CI/CD pipeline calls an Ansible playbook that in turn deploys an AS3 application referencing the Adv. WAF policy from a separate JSON file. This allows the application definition and the WAF policy to be managed by two different groups, for example NetOps and SecOps, supporting separation of duties. The following Ansible playbook was used:

    ---
    - hosts: bigip
      connection: local
      gather_facts: false
      vars:
        my_admin: "xxxx"
        my_password: "xxxx"
        bigip: "xxxx"
      tasks:
        - name: Deploy AS3 API AWAF policy
          uri:
            url: "https://{{ bigip }}/mgmt/shared/appsvcs/declare"
            method: POST
            headers:
              "Content-Type": "application/json"
              "Authorization": "Basic xxxxxxxxxx"
            body: "{{ lookup('file','as3_waf_openapi.json') }}"
            body_format: json
            validate_certs: no
            status_code: 200

The AS3 declaration 'as3_waf_openapi.json' was specified as follows:

    {
      "class": "AS3",
      "action": "deploy",
      "persist": true,
      "declaration": {
        "class": "ADC",
        "schemaVersion": "3.2.0",
        "id": "Prod_API_AS3",
        "API-Prod": {
          "class": "Tenant",
          "defaultRouteDomain": 0,
          "arcadia": {
            "class": "Application",
            "template": "generic",
            "VS_API": {
              "class": "Service_HTTPS",
              "remark": "Accepts HTTPS/TLS connections on port 443",
              "virtualAddresses": ["xxxxx"],
              "redirect80": false,
              "pool": "pool_NGINX_API",
              "policyWAF": { "use": "Arcadia_WAF_API_policy" },
              "securityLogProfiles": [{ "bigip": "/Common/Log all requests" }],
              "profileTCP": {
                "egress": "wan",
                "ingress": { "use": "TCP_Profile" }
              },
              "profileHTTP": { "use": "custom_http_profile" },
              "serverTLS": { "bigip": "/Common/arcadia_client_ssl" }
            },
            "Arcadia_WAF_API_policy": {
              "class": "WAF_Policy",
              "url": "http://xxxx/root/awaf_openapi/-/raw/master/WAF/ansible/bigip/policy-api.json",
              "ignoreChanges": true
            },
            "pool_NGINX_API": {
              "class": "Pool",
              "monitors": ["http"],
              "members": [{
                "servicePort": 8080,
                "serverAddresses": ["xxxx"]
              }]
            },
            "custom_http_profile": {
              "class": "HTTP_Profile",
              "xForwardedFor": true
            },
            "TCP_Profile": {
              "class": "TCP_Profile",
              "idleTimeout": 60
            }
          }
        }
      }
    }

The AS3 declaration provisions a separate administrative partition ('API-Prod') containing a virtual server ('VS_API'), an Adv. WAF policy ('Arcadia_WAF_API_policy') and a pool ('pool_NGINX_API'). The Adv. WAF policy being referenced ('policy-api.json') is stored in the same Gitlab repository, but it could equally be downloaded from a separate location.

    {
      "policy": {
        "name": "policy-api-arcadia",
        "description": "Arcadia API",
        "template": { "name": "POLICY_TEMPLATE_API_SECURITY" },
        "enforcementMode": "transparent",
        "server-technologies": [
          { "serverTechnologyName": "MySQL" },
          { "serverTechnologyName": "Unix/Linux" },
          { "serverTechnologyName": "MongoDB" }
        ],
        "signature-settings": { "signatureStaging": false },
        "policy-builder": { "learnOnlyFromNonBotTraffic": false },
        "open-api-files": [
          { "link": "http://xxxx/root/awaf_openapi/-/raw/master/App/openapi3-arcadia.yaml" }
        ]
      },
      "modifications": [ ]
    }

The declarative Adv. WAF policy in turn references the OpenAPI file ('openapi3-arcadia.yaml') that describes the application being protected. Executing the Ansible playbook deploys the AS3 application along with the Adv. WAF policy, which is automatically configured according to the OpenAPI file.
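Because the virtual server refers to its pool, profiles and WAF policy by name, a typo in one of those references only surfaces when AS3 rejects the declaration on the BIG-IP. The Python below is a minimal, hypothetical sketch of a pre-flight check that a pipeline stage could run before calling the playbook: it walks the declaration and reports any 'use' pointer, or any 'pool' string on a Service, that does not name an object in the same Application. It assumes, as in the declaration above, that bare names point to objects in the same Application; 'find_broken_pointers' and the 'check_as3.py' file name are illustrative and are not part of AS3 or of the original pipeline.

```python
import json
import sys

# Hypothetical pre-flight check (not part of AS3). Assumption: bare names in
# "use" pointers and in a Service's "pool" string refer to objects in the
# same Application, as in the 'arcadia' Application of this declaration.


def _use_targets(value):
    """Recursively yield every string value stored under a "use" key."""
    if isinstance(value, dict):
        for key, item in value.items():
            if key == "use" and isinstance(item, str):
                yield item
            else:
                yield from _use_targets(item)
    elif isinstance(value, list):
        for item in value:
            yield from _use_targets(item)


def find_broken_pointers(as3_doc):
    """Return (tenant/app/object, target) pairs whose target does not exist."""
    broken = []
    for tenant_name, tenant in as3_doc["declaration"].items():
        if not isinstance(tenant, dict) or tenant.get("class") != "Tenant":
            continue
        for app_name, app in tenant.items():
            if not isinstance(app, dict) or app.get("class") != "Application":
                continue
            for obj_name, obj in app.items():
                if not isinstance(obj, dict):
                    continue
                targets = list(_use_targets(obj))
                if isinstance(obj.get("pool"), str):
                    targets.append(obj["pool"])
                for target in targets:
                    if target not in app:
                        broken.append((f"{tenant_name}/{app_name}/{obj_name}", target))
    return broken


# Trimmed copy of the declaration, with a deliberately misspelled pool name.
EXAMPLE_DECLARATION = {
    "class": "AS3",
    "declaration": {
        "class": "ADC",
        "API-Prod": {
            "class": "Tenant",
            "arcadia": {
                "class": "Application",
                "VS_API": {
                    "class": "Service_HTTPS",
                    "pool": "pool_NGINX",
                    "policyWAF": {"use": "Arcadia_WAF_API_policy"},
                },
                "Arcadia_WAF_API_policy": {"class": "WAF_Policy"},
                "pool_NGINX_API": {"class": "Pool"},
            },
        },
    },
}

if __name__ == "__main__":
    # e.g. `python check_as3.py as3_waf_openapi.json`; with no argument the
    # trimmed example above is checked instead.
    if len(sys.argv) > 1:
        with open(sys.argv[1]) as fh:
            doc = json.load(fh)
    else:
        doc = EXAMPLE_DECLARATION
    print(find_broken_pointers(doc))
```

For the trimmed example this prints [('API-Prod/arcadia/VS_API', 'pool_NGINX')]; a pipeline job would treat any non-empty list as a failure before the declaration is ever sent to the BIG-IP.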
Handling learning suggestions in a CI/CD pipeline

The next step in the CI/CD pipeline used for this experiment was to send legitimate traffic to the API and collect the learning suggestions generated by the Adv. WAF policy, which provides a simple way to further customize the WAF policy for the specific application being protected. The following Ansible playbook was used to retrieve the learning suggestions:

    ---
    - hosts: bigip
      connection: local
      gather_facts: true
      vars:
        my_admin: "xxxx"
        my_password: "xxxx"
        bigip: "xxxxx"
      tasks:
        - name: Get all Policy_key/IDs for WAF policies
          uri:
            url: 'https://{{ bigip }}/mgmt/tm/asm/policies?$select=name,id'
            method: GET
            headers:
              "Authorization": "Basic xxxxxxxxxxx"
            validate_certs: no
            status_code: 200
            return_content: yes
          register: waf_policies

        - name: Extract Policy_key/ID of Arcadia_WAF_API_policy
          set_fact:
            Arcadia_WAF_API_policy_ID: "{{ item.id }}"
          loop: "{{ (waf_policies.content | from_json)['items'] }}"
          when: item.name == "Arcadia_WAF_API_policy"

        - name: Export learning suggestions
          uri:
            url: "https://{{ bigip }}/mgmt/tm/asm/tasks/export-suggestions"
            method: POST
            headers:
              "Content-Type": "application/json"
              "Authorization": "Basic xxxxxxxxxxx"
            body: "{ \"inline\": \"true\", \"policyReference\": { \"link\": \"https://{{ bigip }}/mgmt/tm/asm/policies/{{ Arcadia_WAF_API_policy_ID }}/\" } }"
            body_format: json
            validate_certs: no
            status_code:
              - 200
              - 201
              - 202

        - name: Get learning suggestions
          uri:
            url: "https://{{ bigip }}/mgmt/tm/asm/tasks/export-suggestions"
            method: GET
            headers:
              "Authorization": "Basic xxxxxxxxx"
            validate_certs: no
            status_code: 200
          register: result

        - name: Print learning suggestions
          debug: var=result

A sample learning suggestions output is shown below:

    "json": {
      "items": [
        {
          "endTime": "xxxxxxxxxxxxx",
          "id": "ZQDaRVecGeqHwAW1LDzZTQ",
          "inline": true,
          "kind": "tm:asm:tasks:export-suggestions:export-suggestions-taskstate",
          "lastUpdateMicros": 1599953296000000.0,
          "result": {
            "suggestions": [
              {
                "action": "add-or-update",
                "description": "Enable Evasion Technique",
                "entity": { "description": "Directory traversals" },
                "entityChanges": { "enabled": true },
                "entityType": "evasion"
              },
              {
                "action": "add-or-update",
                "description": "Enable HTTP Check",
                "entity": { "description": "Check maximum number of parameters" },
                "entityChanges": { "enabled": true },
                "entityType": "http-protocol"
              },
              {
                "action": "add-or-update",
                "description": "Enable HTTP Check",
                "entity": { "description": "No Host header in HTTP/1.1 request" },
                "entityChanges": { "enabled": true },
                "entityType": "http-protocol"
              },
              {
                "action": "add-or-update",
                "description": "Enable enforcement of policy violation",
                "entity": { "name": "VIOL_REQUEST_MAX_LENGTH" },
                "entityChanges": { "alarm": true, "block": true },
                "entityType": "violation"
              }

Incorporating the learning suggestions into the Adv. WAF policy can be done by simply copying and pasting the self-contained learning suggestion blocks into the "modifications" list of the Adv. WAF policy:

    {
      "policy": {
        "name": "policy-api-arcadia",
        "description": "Arcadia API",
        "template": { "name": "POLICY_TEMPLATE_API_SECURITY" },
        "enforcementMode": "transparent",
        "server-technologies": [
          { "serverTechnologyName": "MySQL" },
          { "serverTechnologyName": "Unix/Linux" },
          { "serverTechnologyName": "MongoDB" }
        ],
        "signature-settings": { "signatureStaging": false },
        "policy-builder": { "learnOnlyFromNonBotTraffic": false },
        "open-api-files": [
          { "link": "http://xxxxxx/root/awaf_openapi/-/raw/master/App/openapi3-arcadia.yaml" }
        ]
      },
      "modifications": [
        {
          "action": "add-or-update",
          "description": "Enable Evasion Technique",
          "entity": { "description": "Directory traversals" },
          "entityChanges": { "enabled": true },
          "entityType": "evasion"
        }
      ]
    }

Enhancing Advanced WAF policy posture by using the F5 WAF tester

The F5 WAF tester is a tool that generates known attacks and checks the response of the WAF policy.
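Conceptually, each test sends a known attack and decides from the response whether it was blocked. The Python below is a hypothetical illustration of that decision, and of how a pipeline stage could gate on the resulting pass/fail tally; it is not the F5 WAF tester's own code. It assumes the policy answers blocked requests with the default ASM blocking page, which contains "The requested URL was rejected" and a support ID; 'classify_response', 'summarize' and 'gate_exit_code' are illustrative names.

```python
import re

# Assumption: blocked requests receive the default ASM blocking page, which
# includes "The requested URL was rejected" and "Your support ID is: <digits>".
BLOCK_MARKER = "The requested URL was rejected"
SUPPORT_ID_RE = re.compile(r"support ID is:\s*(\d+)", re.IGNORECASE)


def classify_response(body):
    """Hypothetical check: did the WAF block this attack request?"""
    if BLOCK_MARKER in body:
        match = SUPPORT_ID_RE.search(body)
        return {"pass": True, "support_id": match.group(1) if match else ""}
    # No blocking page: the attack reached the application, which is what
    # happens while the policy is in transparent mode.
    return {"pass": False, "support_id": ""}


def summarize(results):
    """Tally per-test results into a {"fail": n, "pass": m} summary."""
    passed = sum(1 for result in results if result["pass"])
    return {"fail": len(results) - passed, "pass": passed}


def gate_exit_code(summary):
    """Exit code for a CI job: non-zero while any attack gets through."""
    return 0 if summary["fail"] == 0 else 1


if __name__ == "__main__":
    blocked_page = (
        "<html><body>The requested URL was rejected. Please consult with your "
        "administrator.<br><br>Your support ID is: 17540898289451273964"
        "</body></html>"
    )
    results = [classify_response(blocked_page), classify_response('{"status": "ok"}')]
    summary = summarize(results)
    print(summary)                  # {'fail': 1, 'pass': 1}
    print(gate_exit_code(summary))  # 1
```

Returning a non-zero code from the test stage is what lets the pipeline stop promotion until every attack in the run is blocked.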
For example, running the F5 WAF tester against a policy in "transparent" enforcement mode will cause the tests to fail, as the attacks will not be blocked. The F5 WAF tester can suggest possible enhancements to the policy, in this case changing the enforcement mode. An abbreviated sample output of the F5 WAF tester:

    ...
        "100000023": {
          "CVE": "",
          "attack_type": "Server Side Request Forgery",
          "name": "SSRF attempt (AWS Metadata Server)",
          "results": {
            "parameter": {
              "expected_result": { "type": "signature", "value": "200018040" },
              "pass": false,
              "reason": "ASM Policy is not in blocking mode",
              "support_id": ""
            }
          },
          "system": "All systems"
        },
        "100000024": {
          "CVE": "",
          "attack_type": "Server Side Request Forgery",
          "name": "SSRF attempt - Local network IP range 10.x.x.x",
          "results": {
            "request": {
              "expected_result": { "type": "signature", "value": "200020201" },
              "pass": false,
              "reason": "ASM Policy is not in blocking mode",
              "support_id": ""
            }
          },
          "system": "All systems"
        }
      },
      "summary": {
        "fail": 48,
        "pass": 0
      }

Changing the enforcement mode from "transparent" to "blocking" can easily be done by editing the same Adv. WAF policy file:

    {
      "policy": {
        "name": "policy-api-arcadia",
        "description": "Arcadia API",
        "template": { "name": "POLICY_TEMPLATE_API_SECURITY" },
        "enforcementMode": "blocking",
        "server-technologies": [
          { "serverTechnologyName": "MySQL" },
          { "serverTechnologyName": "Unix/Linux" },
          { "serverTechnologyName": "MongoDB" }
        ],
        "signature-settings": { "signatureStaging": false },
        "policy-builder": { "learnOnlyFromNonBotTraffic": false },
        "open-api-files": [
          { "link": "http://xxxxx/root/awaf_openapi/-/raw/master/App/openapi3-arcadia.yaml" }
        ]
      },
      "modifications": [
        {
          "action": "add-or-update",
          "description": "Enable Evasion Technique",
          "entity": { "description": "Directory traversals" },
          "entityChanges": { "enabled": true },
          "entityType": "evasion"
        }
      ]
    }

A successful run is achieved when all the attacks are blocked:

    ...
        "100000023": {
          "CVE": "",
          "attack_type": "Server Side Request Forgery",
          "name": "SSRF attempt (AWS Metadata Server)",
          "results": {
            "parameter": {
              "expected_result": { "type": "signature", "value": "200018040" },
              "pass": true,
              "reason": "",
              "support_id": "17540898289451273964"
            }
          },
          "system": "All systems"
        },
        "100000024": {
          "CVE": "",
          "attack_type": "Server Side Request Forgery",
          "name": "SSRF attempt - Local network IP range 10.x.x.x",
          "results": {
            "request": {
              "expected_result": { "type": "signature", "value": "200020201" },
              "pass": true,
              "reason": "",
              "support_id": "17540898289451274344"
            }
          },
          "system": "All systems"
        }
      },
      "summary": {
        "fail": 0,
        "pass": 48
      }

Conclusion

By adding the Advanced WAF policy to a CI/CD pipeline, the WAF policy can be integrated into the lifecycle of the application it protects, allowing for continuous testing and improvement of the security posture before it is deployed to production.
The flexible model of AS3 and declarative Advanced WAF allows roles and responsibilities to be separated between NetOps and SecOps, while providing an easy way to tune the policy to the specifics of the application being protected.

Links

UDF lab environment link.

Short instructional video link.

Integrating NGINX Controller API Management with PingFederate to secure financial services API transactions

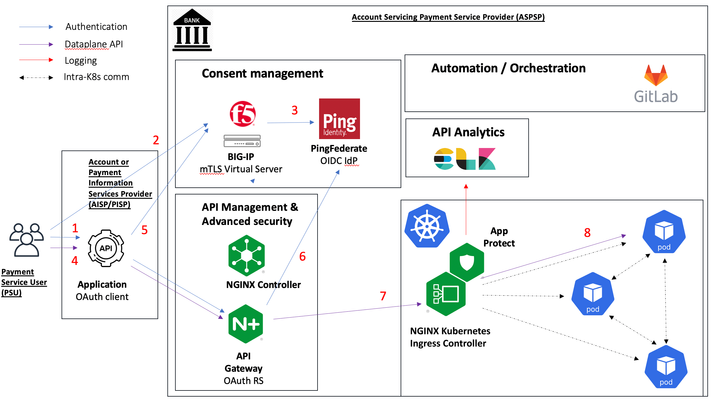

Introduction

The previous article in the "Securing financial services APIs" series, "Using NGINX Controller API Management Module and NGINX App Protect to secure financial services API transactions", described a setup where NGINX Controller APIm, acting as an OAuth Resource Server, used F5's APM as an OIDC IdP / OAuth Authorization Server in an OAuth/OIDC authentication flow. The current article explores the integration of NGINX Controller APIm with PingFederate, one of the market-leading identity management solutions, in a similar setup.

Ping Identity has partnered with OBIE (Open Banking Implementation Entity), the body responsible for the UK Open Banking implementation in response to the EU's PSD2 directive, and as such it has had a front seat in the development of the Open Banking initiative, one of the most mature examples of financial services APIs. Ping Identity technology is also Financial-Grade API (FAPI) compliant, supporting the features critical to ensuring higher security for financial API transactions while maintaining a seamless user experience and ease of configuration. Ping Identity's PSD2 & Open Banking technical solution guide can be found here, while this article focuses primarily on how easily NGINX Controller APIm can be configured to interact with PingFederate in a basic financial services API scenario.

Setup

For demo purposes, the backend banking application was a server stub generated from UK Open Banking's OpenAPI spec and deployed in a Kubernetes environment, with NGINX App Protect deployed on the Kubernetes Ingress Controller as an API WAF. The API Gateway and API Management functions are implemented by NGINX API Gateway and NGINX Controller APIm, placed in front of the Kubernetes environment. The configuration of all of the above (backend server, NAP/KIC and NGINX APIm) is managed through a CI/CD pipeline configured in Gitlab, simulating a modern application development environment.
Authentication and API flow

This demo implements the Authorization Code flow to enable a "domestic payment" transaction. The steps of the authentication and API flow are summarised below (refer to the setup diagram above):

1. The user logs into the Third Party Provider (TPP) application ("client") and creates a new funds transfer.
2. The TPP application redirects the user to the OAuth Authorization Server / OIDC IdP (PingFederate).
3. The user provides their credentials to PingFederate and gets access to the consent management screen, where the required "payments" scope is listed.
4. If the user consents to the TPP client making payments out of his/her account, PingFederate generates an authorization code (and an ID Token) and redirects the user back to the TPP client.
5. The TPP client exchanges the authorization code for an access token and attaches it as a bearer token to the /domestic-payments call sent to the API gateway.
6. The API gateway validates the access token using the JSON Web Keys downloaded from PingFederate and grants conditional access to the backend application.
7. The Kubernetes Ingress receives the API call and performs WAF security checks via NGINX App Protect.
8. The API call is forwarded to the backend server pod.

NGINX APIm configuration

In this scenario, NGINX APIm performs the OAuth Resource Server role: it downloads the JWKs from the OAuth Authorization Server / OIDC IdP (PingFederate) and checks the authenticity of the access token presented in the API call. Additionally, it may apply further checks to conditionally grant access to the application; in this demo it checks for the presence of the "payments" scope.

The NGINX APIm configuration is straightforward and consists of two steps:

1. Configuring the IdP: go to Services => Identity Providers and click on Create identity Providers. Fill in the mandatory parameters Name, Environment and Type (JWT). Enter the JWKs URL location and the caching duration.
2. Configuring the OAuth authorization and conditional access criteria: go to Services => APIs, select an API Definition and edit the associated Published API. Navigate to Routing, edit the Component to be protected and navigate to Security/Authentication. Select the previously created Identity Provider and optionally enable conditional access. As an example, access is granted if "payments" is one of the scopes found in the access token.

Conclusion

NGINX APIm offers a very simple yet granular way of configuring NGINX API Gateway as an OAuth Resource Server and allows integration with an industry-leading IAM solution, PingFederate, to protect financial services API transactions.

Links

UDF lab environment link.

GitOps: Declarative Infrastructure and Application Delivery with NGINX App Protect

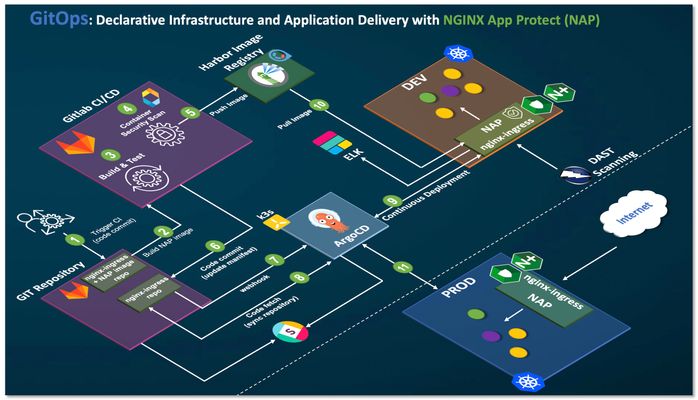

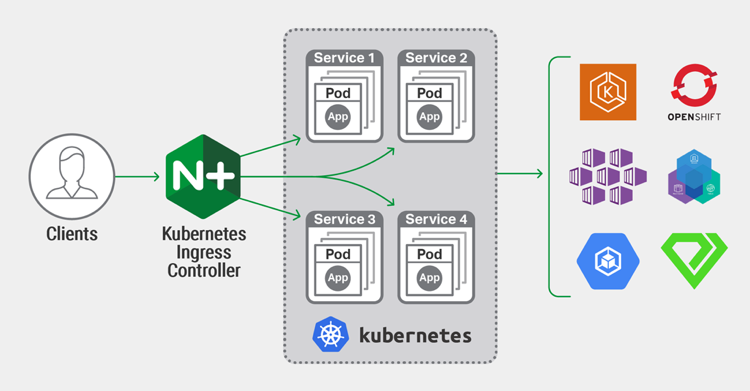

First things first: what is GitOps? In a nutshell, GitOps (Git Operations) is a practice that uses Git and a code repository as the configuration source of truth (declarative Infrastructure as Code and Application Delivery as Code), coupled with various supporting tools. The state of your Git repository is synced with the state of your infrastructure and applications. As the operations team runs daily operations (CRUD: Create, Read, Update and Delete) following the philosophy of DevOps, they no longer need to store configuration manifests on various configuration systems: the Git repo is the source of truth. Typically, the target systems or infrastructure run on a Kubernetes-based platform. For further details and a better explanation of GitOps, please refer to the links below or a Google search.

https://www.weave.works/technologies/gitops/

https://www.atlassian.com/git/tutorials/gitops

Why practice GitOps?

I have been managing my lab environment for many years. I use my lab for technology research, customer demos/Proofs-of-Concept, application testing and code development. Due to the constant changes to my environment (its agile and dynamic nature), especially with multiple versions of the Kubernetes platform, I had been spending too much time updating, changing, building, deploying and testing various cloud-native apps. Commands like docker build, kubectl, istioctl and git were used constantly and repetitively to operate the environments. Hence, I practice GitOps for my Kubernetes infrastructure. Of course, tasks and operations can be automated and orchestrated with tools such as Ansible, Terraform, Chef and Puppet, and you do not necessarily need GitOps to achieve a similar outcome. I adopted the GitOps practice partly to learn the new "language" and to experience first-hand the full benefit of GitOps.
Here are some of the learnings and operational experience I gained managing F5's NGINX App Protect and the many demo apps it protects, which may benefit you and give you some insight into how you can run your own GitOps. You may build your own GitOps workflow from here. For details and a description of F5's NGINX App Protect, please refer to https://www.nginx.com/products/nginx-app-protect/

Key architecture decisions of my GitOps workflow

- Modular architecture: allows me to swap technologies in and out without rework ("Lego blocks").
- Must reduce my operational work: saves time, no repetitive tasks, write once and deploy many.
- Centralize all my configuration manifests in a single source of truth. Currently, my configuration exists everywhere (jump hosts, local laptop, cloud storage, etc.), and I had lost track of which configuration was the latest.
- Must be simple, modern, easy to understand and as native as possible.

Use case and desirable outcome

- Build and keep up to date the NGINX Plus Ingress Controller with NGINX App Protect in my Kubernetes environment.
- Build and keep up to date NGINX App Protect's attack signatures and Threat Campaign signatures.
- Zero downtime/impact to the apps protected by NGINX App Protect across frequent release, update and patch cycles for NGINX App Protect.

My problem statement

I need to ensure that my infrastructure (Kubernetes Ingress Controller) and web application firewall (NGINX App Protect) are kept up to date with ease. For example, when there are new NGINX Ingress and NGINX App Protect updates (e.g., a new version, attack signatures and threat campaign signatures), I would like to seamlessly push the changes out to NGINX Ingress and NGINX App Protect (as it protects my backend apps) without impacting the applications protected by NGINX App Protect.

GitOps workflow

Start small, and start with a clear workflow. Below is a depiction of the overall GitOps workflow. NGINX App Protect is the target application.
Hence, before describing the full GitOps workflow, let us understand the deployment options for NGINX App Protect.

NGINX App Protect deployment models

There are four common deployment models for NGINX App Protect:

1. Edge: external load balancer and proxies (global enforcement)
2. Dynamic module inside the Ingress Controller (per service/URI/Ingress resource enforcement)
3. Per-service proxy model: Kubernetes service tier (per-service enforcement)
4. Per-pod proxy model: proxy embedded in the pod (per-endpoint enforcement)

The pipeline demonstrated in this article works with NGINX App Protect deployed either in the Ingress Controller (2) or in the per-service proxy model (3). For the purposes of this article, NGINX App Protect is deployed in the Ingress Controller at the entry point to Kubernetes (Kubernetes edge proxy).

For those who prefer video:

Video Demonstration

- Part 1/3 – GitOps with NGINX Plus Ingress and NGINX App Protect – Overview
- Part 2/3 – GitOps with NGINX Plus Ingress and NGINX App Protect – Demo in Action
- Part 3/3 – GitOps with NGINX Plus Ingress and NGINX App Protect – WAF Security Policy Management

Description of GitOps workflow

- Operations (myself) updates the nginx-ingress + NAP image repo (e.g. .gitlab-ci.yml in nginx-plus-ingress) via VSCode, then merges and commits the changes to the repo stored in Gitlab.
- The Gitlab CI/CD pipeline is triggered and the build, test and deploy job starts.
- The pipeline clones the kubernetes-ingress repo from https://github.com/nginxinc/kubernetes-ingress/, checks out the latest kubernetes-ingress version and builds a new image with the DockerfilewithAppProtectForPlus Dockerfile. [Ensure you have an appropriate NGINX App Protect license in place.]
- As part of the build process, the pipeline triggers Trivy container security scanning for vulnerabilities (open source, from Aqua Security). Upon completion of the static binary scan, the pipeline uploads the scan report back to the repo for continuous security improvement.
- The pipeline pushes the image to the private repository. The private image repo has been configured to perform a nightly container security scan (Clair Scanner); leveraging multiple scanning tools provides checks and balances.
- The pipeline clones the nginx-ingress deployment repo (my-kubernetes-apps) and updates the nginx-ingress deployment manifest with the latest image tag (refer to the manifest excerpt below).
- Gitlab triggers a webhook to ArgoCD to refresh/sync the desired application state in ArgoCD with the deployed state in Kubernetes. By default, ArgoCD syncs with Kubernetes every 3 minutes; the webhook triggers an instant sync.
- ArgoCD (deployed on an independent K3S cluster) fetches the new code from the repo and detects the changes.
- ArgoCD automates the deployment of the desired application state in the specified target environment. It can track updates to Git branches or tags, or be pinned to a specific version of the manifest at a Git commit.
- Kubernetes triggers an image pull from the private repo and performs a rolling update, ensuring zero interruption to existing traffic: new nginx-ingress pods spin up and traffic moves to them before the old pods are terminated.
- Depending on the environment and organisational maturity, a successful build, test and deployment onto the DEV environment can then be promoted to the production environment.

Note: DAST scanning (ZAP Scanner) is not shown in this demo; it currently runs offline and is not automated.

ArgoCD and Gitlab are integrated with Slack notifications; events are reported to a Slack channel via webhook. NGINX App Protect events are sent to an ELK stack for visibility and analytics.

Snippet showing where Gitlab CI updates the nginx-plus-ingress deployment manifest (Flow#6). Each new image build is tagged with `<branch>-hash-<version>`:

    ...
    spec:
      imagePullSecrets:
      - name: regcred
      serviceAccountName: nginx-ingress
      containers:
      - image: reg.foobz.com.au/apps/nginx-plus-ingress:master-f660306d-1.9.1
        imagePullPolicy: IfNotPresent
        name: nginx-plus-ingress
    ...
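The article does not show the script that rewrites the manifest in Flow#6, so the following is only a minimal Python sketch of that step under stated assumptions: the tag is assembled as branch, short commit hash and kubernetes-ingress version (GitLab's predefined CI_COMMIT_REF_NAME and CI_COMMIT_SHORT_SHA variables are one plausible source for the first two), and only the nginx-plus-ingress image reference is rewritten. 'build_tag' and 'bump_image_tag' are hypothetical helpers, and "0a1b2c3d" is a placeholder hash.

```python
import re

# Hypothetical helpers illustrating Flow#6; the real pipeline script is not
# shown in the article.


def build_tag(branch, short_sha, version):
    """Compose an image tag in the <branch>-<hash>-<version> form."""
    return f"{branch}-{short_sha}-{version}"


# Captures everything up to and including "nginx-plus-ingress:" so that only
# the tag after the colon is rewritten.
IMAGE_RE = re.compile(r"(image:\s*\S+/nginx-plus-ingress:)(\S+)")


def bump_image_tag(manifest, new_tag):
    """Return the manifest text with the nginx-plus-ingress image tag replaced."""
    # A function replacement avoids "\1" + tag being misread as a group
    # reference (e.g. "\11.9.1") when a tag starts with a digit.
    updated, count = IMAGE_RE.subn(lambda m: m.group(1) + new_tag, manifest)
    if count == 0:
        raise ValueError("no nginx-plus-ingress image reference found")
    return updated


if __name__ == "__main__":
    manifest = (
        "      containers:\n"
        "      - image: reg.foobz.com.au/apps/nginx-plus-ingress:master-f660306d-1.9.1\n"
        "        imagePullPolicy: IfNotPresent\n"
    )
    print(bump_image_tag(manifest, build_tag("master", "0a1b2c3d", "1.9.1")))
```

Because every build produces a new, unique tag, imagePullPolicy: IfNotPresent still pulls the new image on each node, since that tag is not yet cached there; the committed manifest change is then what ArgoCD picks up.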
Gitlab CI/CD Pipeline

A successful run of the CI/CD pipeline builds, tests, scans and pushes the container image to the private repository, then commits the manifest change to the nginx-plus-ingress repo.

Note: The Trivy scanning report is uploaded or committed back to the same repo. To prevent Gitlab CI from triggering another build process (a "pipeline loop"), the commit message includes [skip ci].

ArgoCD continuous deployment

ArgoCD constantly (by default every 3 minutes) syncs the desired application state with my Kubernetes cluster. It ensures that the configuration manifests stored in the Git repository are always synchronised with the target environment.

My train-dev apps are protected by nginx-ingress + NGINX App Protect with specific (per service/URI) enforcement: the NGINX App Protect policy is applied to this service.

nginx-ingress + NGINX App Protect is deployed as an Ingress Controller in Kubernetes. The pod-template-hash=xxxx label is tracked by ArgoCD.

    $ kubectl -n nginx-ingress get pod --show-labels
    NAME                             READY   STATUS    RESTARTS   AGE   LABELS
    nginx-ingress-776b64dc89-pdtv7   1/1     Running   0          8h    app=nginx-ingress,pod-template-hash=776b64dc89
    nginx-ingress-776b64dc89-rwk8w   1/1     Running   0          8h    app=nginx-ingress,pod-template-hash=776b64dc89

Please refer to the video links above for the full demo in action.
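During a rolling update, pods from the old and new ReplicaSets coexist and carry different pod-template-hash values, so one way to tell that the rollout has settled is that every pod is Running with a single hash. The sketch below is a hypothetical Python helper that parses the plain-text --show-labels output shown above; it assumes the header row comes first and the labels are the last column. In practice, 'kubectl rollout status deployment/nginx-ingress -n nginx-ingress' is the built-in way to wait for a rollout; the parser only illustrates what the label tracking means. The 5d9f7c6b48 pod is a made-up example of a new ReplicaSet pod.

```python
# Hypothetical helpers: parse `kubectl -n nginx-ingress get pod --show-labels`
# text output (header row first, labels in the last column).


def parse_pods(kubectl_output):
    pods = []
    for line in kubectl_output.strip().splitlines()[1:]:  # skip header row
        columns = line.split()
        labels = dict(
            pair.split("=", 1) for pair in columns[-1].split(",") if "=" in pair
        )
        pods.append({
            "name": columns[0],
            "status": columns[2],
            "hash": labels.get("pod-template-hash"),
        })
    return pods


def rollout_settled(kubectl_output):
    """True when every pod is Running and all share one pod-template-hash."""
    pods = parse_pods(kubectl_output)
    return (
        bool(pods)
        and all(p["status"] == "Running" for p in pods)
        and len({p["hash"] for p in pods}) == 1
    )


SAMPLE_OUTPUT = """\
NAME                             READY   STATUS    RESTARTS   AGE   LABELS
nginx-ingress-776b64dc89-pdtv7   1/1     Running   0          8h    app=nginx-ingress,pod-template-hash=776b64dc89
nginx-ingress-776b64dc89-rwk8w   1/1     Running   0          8h    app=nginx-ingress,pod-template-hash=776b64dc89
"""

# Hypothetical pod from a new ReplicaSet, still starting mid-rollout.
NEW_POD_LINE = (
    "nginx-ingress-5d9f7c6b48-abcde   0/1     ContainerCreating   0   5s    "
    "app=nginx-ingress,pod-template-hash=5d9f7c6b48"
)

if __name__ == "__main__":
    print(rollout_settled(SAMPLE_OUTPUT))                        # True
    print(rollout_settled(SAMPLE_OUTPUT + NEW_POD_LINE + "\n"))  # False
```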
References

Tools involved

- NGINX Plus Ingress Controller - https://www.nginx.com/products/nginx-ingress-controller/
- NGINX App Protect - https://www.nginx.com/products/nginx-app-protect/
- Gitlab - https://gitlab.com
- Trivy Scanner - https://github.com/aquasecurity/trivy
- ArgoCD - https://argoproj.github.io/argo-cd/
- Harbor Private Repository - https://goharbor.io/
- Clair Scanner - https://github.com/quay/clair
- Slack - https://slack.com
- DAST Scanner - https://www.zaproxy.org/
- Elasticsearch, Logstash and Kibana (ELK) - https://www.elastic.co/what-is/elk-stack, https://github.com/464d41/f5-waf-elk-dashboards
- K3S - https://k3s.io/

Source repos used for this demonstration

- Repo for building the nginx-ingress + NGINX App Protect image: https://github.com/fbchan/nginx-plus-ingress.git
- Repo used for the deployment manifest of the nginx-ingress controller with the NGINX App Protect policy: https://github.com/fbchan/my-kubernetes-apps.git

Summary

GitOps is perhaps a new buzzword. It may or may not make sense in your environment, but it definitely makes sense for me. It integrates well with NGINX App Protect and allows me to constantly update and push new code changes into my environment with ease. A few months down the road, when I need to update nginx-ingress and NGINX App Protect, I just need to trigger a CI job, and then everything works like magic. Your mileage may vary. Experience leads me to think along the lines of: start small, start simple by "GitOps-ing" one of your apps that requires frequent changes, then learn, revise and continuously improve from there. The outcome that GitOps provides will ease your operational burden, letting you "do more with less". Integrating nginx-ingress and NGINX App Protect into your declarative infrastructure and application delivery with GitOps, together with F5's industry-leading web application firewall protection, will definitely reduce your organisation's exposure to threats against external and internal applications.

Shift-left Security Visibility

Applications evolve rapidly, whether your organization runs off-the-shelf applications or builds its own applications and frameworks. Increasingly, workloads are developed in a matter of hours, then packaged, instantiated and torn down in timespans measured in minutes. This is the nature of continuous deployment pipelines.

Securing highly dynamic applications and workloads, possibly living across different colocation facilities (Colos) and cloud infrastructures (public and private), can only be achieved by following continuous integration and continuous deployment (CI/CD) methodologies. In practice, this has resulted in integrating security into the software development and deployment lifecycles with the automation of:

- Code scanning, using static application security testing (SAST), when code is checked in to software version management tools (Git repositories)
- Integrating a web application firewall (WAF) in the CI/CD pipeline, as can be seen with BIG-IP Advanced WAF or NGINX App Protect and Kubernetes
- Verifying artifacts used for application instantiation, such as packages and containers, during creation and before they are stored, to ensure that they are free of malware
- Scanning applications/workloads at runtime as part of the pipeline before deployment to production, leveraging dynamic application security testing (DAST) tools
- Securing runtime environments by enforcing admission control, using least privilege during spin-up, and implementing monitoring
- Building in compliance at every stage of the development and deployment process as needed (HIPAA, PCI, etc.)
- Building automated and orchestrated
- Monitoring Kubernetes clusters and other cloud and physical infrastructure where workloads run, however ephemerally, tracking things like container health, container orchestration and ownership across the cluster
- Monitoring workloads and cloud services following site reliability engineering (SRE) guidelines, i.e. the "Golden Signals" (Source: SRE Book)
- Performing regular vulnerability scanning of applications in production

Building security into the early stages of the software development lifecycle is part of a "shift-left" strategy, and is discussed here or here. In order to support specific service level objectives (SLOs), monitoring at the application and infrastructure level is built into the infrastructure from the perspective of latency, traffic, errors and saturation. More information on adopting SRE with F5 can be found here.

What about security visibility?

A holistic view of the application and its components is essential to being able to mitigate threats. You need to visualize your applications and possible threats in order to assess risk, identify threat vectors before attackers do, and investigate in the event of an issue. In a previous article, we focused on posture assessment (static view) and monitoring (dynamic view) as the fundamental axes of security visibility. Implementing this visibility entails building and deploying security and security visibility within the pipeline alongside the application, workload or infrastructure.
The above figure shows the insertion of the WAF policy and logging into the software build pipeline. In a shift-left effort, the logging, telemetry and security policy configuration are integrated alongside the application's SDLC. The intent is to leverage the same pipeline infrastructure and integrate the security and visibility aspects into the project together with the workload code. WAF provides only one aspect of the application security suite and is the focus of this exercise. Other infrastructure-centric security configurations, such as mTLS within a Kubernetes service mesh or authentication/authorization framework configurations, can also be inserted in the pipeline.

In order to simplify testing and deployment, the security infrastructure needs to be defined as code for consistent deployment in testing and production environments. Within an infrastructure-as-code and security-as-code framework, this article is meant to outline the need for visibility-as-code, and more precisely security-visibility-as-code. All F5 WAF products and their associated logging, monitoring and telemetry capabilities are easily automated and defined as code. They can be inserted programmatically anywhere, in any environment, for testing as well as production.

Advanced API security for Kubernetes containers running in AWS - NGINX App Protect per-service deployment through a CI/CD pipeline

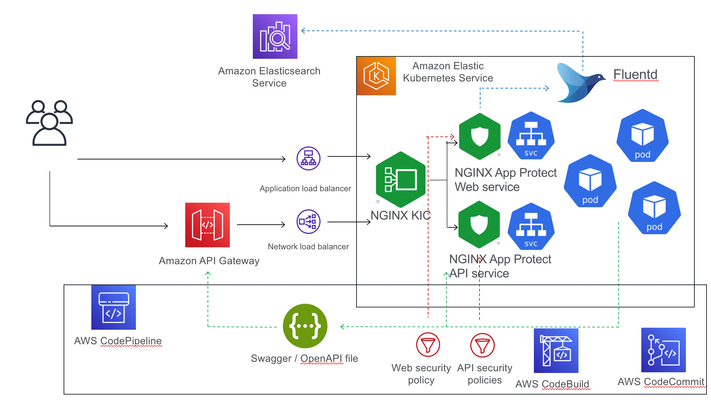

Introduction

When the design objective for Kubernetes security is the separate management of WAF security policies, the solution is NGINX App Protect deployed per service. This article describes such a deployment, with NGINX App Protect augmenting AWS API Gateway to provide advanced security for API workloads, deployed through a CI/CD pipeline. The advantage of deploying NGINX App Protect per service in a Kubernetes environment is the separation of security policies between services, allowing for better customisation of the policies and easier portability to different environments.

In this particular instance, I used a demo application, Arcadia Finance, that has a web interface and also exposes an API allowing users to make financial transactions. The API is described in an OpenAPI 3.0 file, which allows the positive security policy elements (allow-list elements) to be built automatically, whereas for the web security policy this configuration is done manually. The difference in configuration methods between the API and web policies, together with the additional requirement for policy separation and independent portability between environments, prompted the use of two separate NGINX App Protect instances, each securing its respective service (Web and API).

The deployment used AWS EKS as the Kubernetes environment where, besides the Arcadia Finance application components and the NGINX App Protect instances, there is also a Fluentd deployment configured as a Syslog server for the security logs sent by the NGINX App Protect instances. The logs are then sent to AWS Elasticsearch and displayed via Kibana NGINX App Protect dashboards. Access to the EKS cluster is provided by an NGINX Ingress Controller instance. The web interface is exposed externally through an AWS Application Load Balancer, which handles SSL offloading, while the API is exposed through a Network Load Balancer.
The API is published externally through AWS API Gateway, which provides basic security and uses a VPC link to connect to the Network Load Balancer.

The configuration

The configuration (Arcadia Finance deployment, NGINX KIC, NGINX App Protect configuration, OpenAPI file) is stored in AWS CodeCommit and deployed through AWS CodePipeline and AWS CodeBuild. The API Gateway configuration is described in an OpenAPI file annotated with AWS API Gateway-specific elements:

```json
{
  "openapi": "3.0.1",
  "info": {
    "title": "API Arcadia Finance",
    "description": "Arcadia OpenAPI",
    "version": "1.0.0-oas3"
  },
  "servers": [ { "url": "https://api.cloud-app.uk" } ],
  "paths": {
    "/api/rest/execute_money_transfer.php": {
      "post": {
        "requestBody": {
          "content": {
            "application/json": { "schema": { "$ref": "#/components/schemas/MODEL9e8bc4" } }
          },
          "required": true
        },
        "responses": { "200": { "description": "200 response", "content": {} } },
        "x-amazon-apigateway-request-validator": "Validate body, query string parameters, and headers",
        "x-amazon-apigateway-gateway-responses": {
          "BAD_REQUEST_BODY": {
            "responseTemplates": { "application/json": "{\"message\": \"Bla Bla\"}" }
          }
        },
        "x-amazon-apigateway-integration": {
          "type": "http_proxy",
          "uri": "http://api.cloud-app.uk/api/rest/execute_money_transfer.php",
          "responses": { "default": { "statusCode": "200" } },
          "passthroughBehavior": "when_no_match",
          "connectionType": "VPC_LINK",
          "connectionId": "emda4d",
          "httpMethod": "POST"
        }
      }
    },
    "/trading/transactions.php": {
      "get": {
        "responses": { "200": { "description": "200 response", "content": {} } },
        "x-amazon-apigateway-request-validator": "Validate body, query string parameters, and headers",
        "x-amazon-apigateway-gateway-responses": {
          "BAD_REQUEST_BODY": {
            "responseTemplates": { "application/json": "{\"message\": \"$context.error.validationErrorString\"}" }
          }
        },
        "x-amazon-apigateway-integration": {
          "type": "http_proxy",
          "uri": "http://www.cloud-app.uk/trading/transactions.php",
          "responses": { "default": { "statusCode": "200" } },
          "passthroughBehavior": "when_no_match",
          "connectionType": "VPC_LINK",
          "connectionId": "emda4d",
          "httpMethod": "GET"
        }
      }
    },
    "/trading/rest/sell_stocks.php": {
      "post": {
        "requestBody": {
          "content": {
            "application/json": { "schema": { "$ref": "#/components/schemas/MODEL1ed7ad" } }
          },
          "required": true
        },
        "responses": { "200": { "description": "200 response", "content": {} } },
        "x-amazon-apigateway-request-validator": "Validate body, query string parameters, and headers",
        "x-amazon-apigateway-gateway-responses": {
          "BAD_REQUEST_BODY": {
            "responseTemplates": { "application/json": "{\"message\": \"$context.error.validationErrorString\"}" }
          }
        },
        "x-amazon-apigateway-integration": {
          "type": "http_proxy",
          "uri": "http://api.cloud-app.uk/trading/rest/sell_stocks.php",
          "responses": { "default": { "statusCode": "200" } },
          "passthroughBehavior": "when_no_match",
          "connectionType": "VPC_LINK",
          "connectionId": "emda4d",
          "httpMethod": "POST"
        }
      }
    },
    "/trading/rest/buy_stocks.php": {
      "post": {
        "requestBody": {
          "content": {
            "application/json": { "schema": { "$ref": "#/components/schemas/MODEL94f81c" } }
          },
          "required": true
        },
        "responses": { "200": { "description": "200 response", "content": {} } },
        "x-amazon-apigateway-request-validator": "Validate body, query string parameters, and headers",
        "x-amazon-apigateway-gateway-responses": {
          "BAD_REQUEST_BODY": {
            "responseTemplates": { "application/json": "{\"message\": \"$context.error.validationErrorString\"}" }
          }
        },
        "x-amazon-apigateway-integration": {
          "type": "http_proxy",
          "uri": "http://api.cloud-app.uk/trading/rest/buy_stocks.php",
          "responses": { "default": { "statusCode": "200" } },
          "passthroughBehavior": "when_no_match",
          "connectionType": "VPC_LINK",
          "connectionId": "emda4d",
          "httpMethod": "POST"
        }
      }
    }
  },
  "components": {
    "schemas": {
      "MODEL94f81c": {
        "required": [ "action", "company", "qty", "stock_price", "trans_value" ],
        "type": "object",
        "properties": {
          "trans_value": { "minimum": 0, "type": "number" },
          "qty": { "minimum": 0, "type": "integer", "format": "int32" },
          "company": { "type": "string" },
          "action": { "type": "string", "enum": [ "buy" ] },
          "stock_price": { "minimum": 0, "type": "number" }
        },
        "additionalProperties": false
      },
      "MODEL1ed7ad": {
        "required": [ "action", "company", "qty", "stock_price", "trans_value" ],
        "type": "object",
        "properties": {
          "trans_value": { "minimum": 0, "type": "number" },
          "qty": { "minimum": 0, "type": "integer", "format": "int32" },
          "company": { "type": "string" },
          "action": { "type": "string", "enum": [ "sell" ] },
          "stock_price": { "minimum": 0, "type": "number" }
        },
        "additionalProperties": false
      },
      "MODEL9e8bc4": {
        "required": [ "account", "amount", "currency", "friend" ],
        "type": "object",
        "properties": {
          "amount": { "minimum": 0, "type": "number" },
          "account": { "type": "number" },
          "currency": { "type": "string" },
          "friend": { "type": "string" }
        },
        "additionalProperties": false
      }
    }
  },
  "x-amazon-apigateway-policy": {
    "Version": "2012-10-17",
    "Statement": [
      {
        "Effect": "Allow",
        "Principal": "*",
        "Action": "execute-api:Invoke",
        "Resource": "arn:aws:execute-api:us-west-2:856265587682:7g8sbh9zs6/*"
      }
    ]
  },
  "x-amazon-apigateway-request-validators": {
    "Validate body, query string parameters, and headers": {
      "validateRequestParameters": true,
      "validateRequestBody": true
    }
  }
}
```

The Ingress objects controlled by NGINX KIC steer the traffic to either the Web or the API instance of NGINX App Protect:

```yaml
apiVersion: extensions/v1beta1
kind: Ingress
metadata:
  annotations:
    kubernetes.io/ingress.class: nginx
  name: arcadia-nginx-kic
  namespace: default
spec:
  rules:
  - host: "*.cloud-app.uk"
    http:
      paths:
      - backend:
          serviceName: api-nap
          servicePort: 80
        path: /api/rest/execute_money_transfer.php
      - backend:
          serviceName: api-nap
          servicePort: 80
        path: /trading/rest/buy_stocks.php
      - backend:
          serviceName: api-nap
          servicePort: 80
        path: /trading/rest/sell_stocks.php
      - backend:
          serviceName: web-nap
          servicePort: 80
        path: /trading/transactions.php
      - backend:
          serviceName: web-nap
          servicePort: 80
        path: /
      - backend:
          serviceName: web-nap
          servicePort: 80
        path: /files
      - backend:
          serviceName: web-nap
          servicePort: 80
        path: /api
      - backend:
          serviceName: web-nap
          servicePort: 80
        path: /app3
```

The NGINX App Protect configuration is also controlled by AWS CodePipeline. It is deployed as a ConfigMap and mounted as a volume on the NGINX instance:

```yaml
apiVersion: v1
kind: ConfigMap
metadata:
  name: nginx-conf-map-api
  namespace: default
data:
  nginx.conf: |+
    user nginx;
    worker_processes auto;
    load_module modules/ngx_http_app_protect_module.so;
    error_log /var/log/nginx/error.log debug;

    events {
        worker_connections 10240;
    }

    http {
        include /etc/nginx/mime.types;
        default_type application/octet-stream;
        sendfile on;
        keepalive_timeout 65;

        upstream main_DNS_name {
            server main;
        }
        upstream app2_DNS_name {
            server app2;
        }

        server {
            listen 80;
            server_name *.cloud-app.uk;
            proxy_http_version 1.1;

            app_protect_enable on;
            app_protect_policy_file "/etc/nginx/NAP_API_Policy.json";
            app_protect_security_log_enable on;
            app_protect_security_log "/etc/nginx/custom_log_format.json" syslog:server=fluentd.logging.svc.cluster.local:24224;

            location /trading {
                client_max_body_size 0;
                default_type text/html;
                # set your backend here
                proxy_pass http://main_DNS_name;
                proxy_set_header Host $host;
            }

            location /api {
                client_max_body_size 0;
                default_type text/html;
                # set your backend here
                proxy_pass http://app2_DNS_name;
                proxy_set_header Host $host;
            }
        }
    }
---
apiVersion: apps/v1
kind: Deployment
metadata:
  name: api-nap
  labels:
    app: api-nap
spec:
  selector:
    matchLabels:
      app: api-nap
  replicas: 1
  template:
    metadata:
      labels:
        app: api-nap
    spec:
      containers:
      - name: api-nap
        image: 856265587682.dkr.ecr.us-west-2.amazonaws.com/per-service-nginx-app-protect:latest
        imagePullPolicy: IfNotPresent
        ports:
        - containerPort: 80
        volumeMounts:
        - name: nginx-conf-map-api-volume
          mountPath: "/etc/nginx/nginx.conf"
          subPath: "nginx.conf"
          readOnly: true
        - name: nap-api-policy-volume
          mountPath: "/etc/nginx/NAP_API_Policy.json"
          subPath: "NAP_API_Policy.json"
          readOnly: true
      volumes:
      - name: nginx-conf-map-api-volume
        configMap:
          name: nginx-conf-map-api
      - name: nap-api-policy-volume
        configMap:
          name: nap-api-policy
```

For the API instance, the NGINX App Protect policy references the OpenAPI file, which the CI/CD pipeline places in an S3 bucket:

```yaml
apiVersion: v1
kind: ConfigMap
metadata:
  name: nap-api-policy
  namespace: default
data:
  NAP_API_Policy.json: |+
    {
      "policy": {
        "name": "policy_name",
        "template": { "name": "POLICY_TEMPLATE_NGINX_BASE" },
        "applicationLanguage": "utf-8",
        "enforcementMode": "blocking",
        "signature-sets": [
          { "name": "High Accuracy Signatures", "block": true, "alarm": true }
        ],
        "bot-defense": {
          "settings": { "isEnabled": true },
          "mitigations": {
            "classes": [
              { "name": "trusted-bot", "action": "alarm" },
              { "name": "untrusted-bot", "action": "block" },
              { "name": "malicious-bot", "action": "block" }
            ]
          }
        },
        "open-api-files": [
          { "link": "https://per-service-nginx-app-protect.s3.us-west-2.amazonaws.com/arcadia-openapi3-aws.json" }
        ],
        "blocking-settings": {
          "violations": [
            { "name": "VIOL_JSON_FORMAT", "alarm": true, "block": true },
            { "name": "VIOL_PARAMETER_VALUE_METACHAR", "alarm": false, "block": false },
            { "name": "VIOL_HTTP_PROTOCOL", "alarm": true, "block": true },
            { "name": "VIOL_EVASION", "alarm": true, "block": true },
            { "name": "VIOL_FILETYPE", "alarm": true, "block": true },
            { "name": "VIOL_METHOD", "alarm": true, "block": true },
            { "block": true, "description": "Disallowed file upload content detected in body", "name": "VIOL_FILE_UPLOAD_IN_BODY" },
            { "block": true, "description": "Mandatory request body is missing", "name": "VIOL_MANDATORY_REQUEST_BODY" },
            { "block": true, "description": "Illegal parameter location", "name": "VIOL_PARAMETER_LOCATION" },
            { "block": true, "description": "Mandatory parameter is missing", "name": "VIOL_MANDATORY_PARAMETER" },
            { "block": true, "description": "JSON data does not comply with JSON schema", "name": "VIOL_JSON_SCHEMA" },
            { "block": true, "description": "Illegal parameter array value", "name": "VIOL_PARAMETER_ARRAY_VALUE" },
            { "block": true, "description": "Illegal Base64 value", "name": "VIOL_PARAMETER_VALUE_BASE64" },
            { "block": true, "description": "Disallowed file upload content detected", "name": "VIOL_FILE_UPLOAD" },
            { "block": true, "description": "Illegal request content type", "name": "VIOL_URL_CONTENT_TYPE" },
            { "block": true, "description": "Illegal static parameter value", "name": "VIOL_PARAMETER_STATIC_VALUE" },
            { "block": true, "description": "Illegal parameter value length", "name": "VIOL_PARAMETER_VALUE_LENGTH" },
            { "block": true, "description": "Illegal parameter data type", "name": "VIOL_PARAMETER_DATA_TYPE" },
            { "block": true, "description": "Illegal parameter numeric value", "name": "VIOL_PARAMETER_NUMERIC_VALUE" },
            { "block": true, "description": "Parameter value does not comply with regular expression", "name": "VIOL_PARAMETER_VALUE_REGEXP" },
            { "block": true, "description": "Illegal URL", "name": "VIOL_URL" },
            { "block": true, "description": "Illegal parameter", "name": "VIOL_PARAMETER" },
            { "block": true, "description": "Illegal empty parameter value", "name": "VIOL_PARAMETER_EMPTY_VALUE" },
            { "block": true, "description": "Illegal repeated parameter name", "name": "VIOL_PARAMETER_REPEATED" }
          ],
          "http-protocols": [
            { "description": "Header name with no header value", "enabled": true },
            { "description": "Chunked request with Content-Length header", "enabled": true },
            { "description": "Check maximum number of parameters", "enabled": true, "maxParams": 5 },
            { "description": "Check maximum number of headers", "enabled": true, "maxHeaders": 30 },
            { "description": "Body in GET or HEAD requests", "enabled": true },
            { "description": "Bad multipart/form-data request parsing", "enabled": true },
            { "description": "Bad multipart parameters parsing", "enabled": true },
            { "description": "Unescaped space in URL", "enabled": true }
          ],
          "evasions": [
            { "description": "Bad unescape", "enabled": true },
            { "description": "Directory traversals", "enabled": true },
            { "description": "Bare byte decoding", "enabled": true },
            { "description": "Apache whitespace", "enabled": true },
            { "description": "Multiple decoding", "enabled": true, "maxDecodingPasses": 2 },
            { "description": "IIS Unicode codepoints", "enabled": true },
            { "description": "%u decoding", "enabled": true }
          ]
        },
        "methodReference": {
          "link": "https://per-service-nginx-app-protect.s3.us-west-2.amazonaws.com/methods.txt"
        },
        "filetypeReference": {
          "link": "https://per-service-nginx-app-protect.s3.us-west-2.amazonaws.com/filetypes.txt"
        }
      }
    }
```

In this case, the same OpenAPI file is pushed to AWS API Gateway and, through the S3 bucket, to the NGINX App Protect API instance.

NGINX App Protect augmenting the security provided by AWS API Gateway

The NGINX App Protect API instance enhances the security posture of API Gateway. It adds negative security (attack signature matching) and advanced security features such as bot detection. To demonstrate this functionality, a SQLi attack is simulated against the API.

This valid API call sent by Postman completes successfully:

A similar call that contains an attack pattern (SQL injection) is blocked by the NGINX App Protect instance:

To demonstrate the bot detection capability, the same valid call is sent again, this time from curl instead of Postman. It now matches an untrusted bot signature and is blocked:

```shell
curl -vvvk https://api.cloud-app.uk/api/rest/execute_money_transfer.php -d '{"account": 2075894, "amount": 1, "currency": "GBP", "friend": "Vincent"}' -H "Content-Type: application/json" -X POST
```

```
Note: Unnecessary use of -X or --request, POST is already inferred.
*   Trying 143.204.170.103...
* TCP_NODELAY set
* Connected to api.cloud-app.uk (143.204.170.103) port 443 (#0)
* WARNING: disabling hostname validation also disables SNI.
* TLS 1.2 connection using TLS_ECDHE_RSA_WITH_AES_128_GCM_SHA256
* Server certificate: *.cloudfront.net
* Server certificate: DigiCert Global CA G2
* Server certificate: DigiCert Global Root G2
> POST /api/rest/execute_money_transfer.php HTTP/1.1
> Host: api.cloud-app.uk
> User-Agent: curl/7.54.0
> Accept: */*
> Content-Type: application/json
> Content-Length: 73
>
* upload completely sent off: 73 out of 73 bytes
< HTTP/1.1 403 Forbidden
< Content-Type: application/json; charset=utf-8
< Content-Length: 37
< Connection: keep-alive
< Date: Sat, 28 Nov 2020 20:59:01 GMT
< x-amzn-RequestId: 213ca523-173b-42a9-ab41-e3420602bef9
< x-amzn-Remapped-Content-Length: 37
< x-amzn-Remapped-Connection: keep-alive
< x-amz-apigw-id: WvIDaFu0PHcFZOg=
< Cache-Control: no-cache
< x-amzn-Remapped-Server: nginx/1.19.3
< Pragma: no-cache
< x-amzn-Remapped-Date: Sat, 28 Nov 2020 20:59:01 GMT
< X-Cache: Error from cloudfront
< Via: 1.1 9a0d5427f47351631cdee4d5e38248d8.cloudfront.net (CloudFront)
< X-Amz-Cf-Pop: LHR50-C1
< X-Amz-Cf-Id: _VYxM43hCyrzj5hJNitbxLkzjww7iygpoWXT7sege-5eySEaKmcRxQ==
<
{"supportID": "4099392564272510395"}
* Connection #0 to host api.cloud-app.uk left intact
```

The block can be confirmed in the NGINX App Protect security logs. These are displayed in the Kibana NGINX App Protect dashboards running in AWS Elasticsearch:

The bot defense behavior can be controlled from the policy file in the CodeCommit repository.
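The mitigation classes in the `bot-defense` section are plain JSON, so a change to them can also be scripted, for example as a pipeline step. The sketch below is hypothetical: `set_bot_class_action` is my own helper, not part of any F5 tooling. It assumes the `NAP_API_Policy.json` content has already been parsed into a Python dict with the same structure as the `nap-api-policy` ConfigMap above.

```python
import copy
import json
from typing import Any, Dict

# Only the two actions used in this article are accepted; this is a
# deliberately narrow check, not the full set the product may support.
ALLOWED_ACTIONS = ("alarm", "block")


def set_bot_class_action(policy: Dict[str, Any], class_name: str, action: str) -> Dict[str, Any]:
    """Return a copy of an NGINX App Protect policy with one bot class's action changed."""
    if action not in ALLOWED_ACTIONS:
        raise ValueError(f"unsupported action: {action!r}")
    updated = copy.deepcopy(policy)  # never mutate the caller's policy
    classes = updated["policy"]["bot-defense"]["mitigations"]["classes"]
    for entry in classes:
        if entry["name"] == class_name:
            entry["action"] = action
            return updated
    raise KeyError(f"bot class not found: {class_name!r}")


if __name__ == "__main__":
    # In-memory excerpt of the policy's bot-defense section.
    sample = {"policy": {"bot-defense": {"mitigations": {"classes": [
        {"name": "trusted-bot", "action": "alarm"},
        {"name": "untrusted-bot", "action": "block"},
        {"name": "malicious-bot", "action": "block"},
    ]}}}}
    print(json.dumps(set_bot_class_action(sample, "untrusted-bot", "alarm"), indent=2))
```

In a pipeline, a job could `json.load` the policy, apply `set_bot_class_action(policy, "untrusted-bot", "alarm")`, and `json.dump` the result back before the ConfigMap is regenerated. Returning a copy keeps the original policy available for a diff or review step.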
Changing the action for "untrusted-bot" from "block" to "alarm" and committing the change triggers the pipeline to redeploy the NGINX App Protect policy:

```yaml
apiVersion: v1
kind: ConfigMap
metadata:
  name: nap-api-policy
  namespace: default
data:
  NAP_API_Policy.json: |+
    {
      "policy": {
        "name": "policy_name",
        "template": { "name": "POLICY_TEMPLATE_NGINX_BASE" },
        "applicationLanguage": "utf-8",
        "enforcementMode": "blocking",
        "signature-sets": [
          { "name": "High Accuracy Signatures", "block": true, "alarm": true }
        ],
        "bot-defense": {
          "settings": { "isEnabled": true },
          "mitigations": {
            "classes": [
              { "name": "trusted-bot", "action": "alarm" },
              { "name": "untrusted-bot", "action": "alarm" },
              { "name": "malicious-bot", "action": "block" }
            ]
          }
        },
    ...............................................................................................................
```

The same curl call is now allowed:

```shell
curl -vvvk https://api.cloud-app.uk/api/rest/execute_money_transfer.php -d '{"account": 2075894, "amount": 1, "currency": "GBP", "friend": "Vincent"}' -H "Content-Type: application/json" -X POST
```

```
Note: Unnecessary use of -X or --request, POST is already inferred.
*   Trying 143.204.192.29...
* TCP_NODELAY set
* Connected to api.cloud-app.uk (143.204.192.29) port 443 (#0)
* ALPN, offering h2
* ALPN, offering http/1.1
* successfully set certificate verify locations:
*   CAfile: /etc/ssl/cert.pem
  CApath: none
* TLSv1.2 (OUT), TLS handshake, Client hello (1):
* TLSv1.2 (IN), TLS handshake, Server hello (2):
* TLSv1.2 (IN), TLS handshake, Certificate (11):
* TLSv1.2 (IN), TLS handshake, Server key exchange (12):
* TLSv1.2 (IN), TLS handshake, Server finished (14):
* TLSv1.2 (OUT), TLS handshake, Client key exchange (16):
* TLSv1.2 (OUT), TLS change cipher, Change cipher spec (1):
* TLSv1.2 (OUT), TLS handshake, Finished (20):
* TLSv1.2 (IN), TLS change cipher, Change cipher spec (1):
* TLSv1.2 (IN), TLS handshake, Finished (20):
* SSL connection using TLSv1.2 / ECDHE-RSA-AES128-GCM-SHA256
* ALPN, server accepted to use h2
* Server certificate:
*  subject: CN=*.cloud-app.uk
*  start date: Nov 18 00:00:00 2020 GMT
*  expire date: Dec 17 23:59:59 2021 GMT
*  issuer: C=US; O=Amazon; OU=Server CA 1B; CN=Amazon
*  SSL certificate verify ok.
* Using HTTP2, server supports multi-use
* Connection state changed (HTTP/2 confirmed)
* Copying HTTP/2 data in stream buffer to connection buffer after upgrade: len=0
* Using Stream ID: 1 (easy handle 0x7fc877008200)
> POST /api/rest/execute_money_transfer.php HTTP/2
> Host: api.cloud-app.uk
> User-Agent: curl/7.64.1
> Accept: */*
> Content-Type: application/json
> Content-Length: 75
>
* Connection state changed (MAX_CONCURRENT_STREAMS == 128)!
* We are completely uploaded and fine
< HTTP/2 200
< content-type: text/html; charset=UTF-8
< content-length: 155
< date: Mon, 30 Nov 2020 11:02:19 GMT
< x-amzn-requestid: 9bdb2379-06c4-4970-a528-cd8de9cb75b3
< x-amzn-remapped-content-length: 155
< x-amzn-remapped-connection: keep-alive
< x-amz-apigw-id: W0WhMH_TvHcFndQ=
< x-amzn-remapped-server: nginx/1.19.3
< vary: Accept-Encoding
< x-amzn-remapped-date: Mon, 30 Nov 2020 11:02:19 GMT
< x-cache: Miss from cloudfront
< via: 1.1 bb501579906725a97059c817430425cf.cloudfront.net (CloudFront)
< x-amz-cf-pop: LHR3-C1
< x-amz-cf-id: xo4zfKVqUeejkHzLPArADi1rxRxTJkg61YgfhruAR4KjdpMSnYymkQ==
<
* Connection #0 to host api.cloud-app.uk left intact
{"name":"Vincent", "status":"success","amount":"1", "currency":"GBP", "transid":"753910682", "msg":"The money transfer has been successfully completed "}* Closing connection 0
```

The new bot defense behavior can be checked in the NGINX App Protect Kibana dashboard:

Conclusion

To recap, this article has demonstrated the per-service deployment model for NGINX App Protect in a Kubernetes environment. The main advantages of this model are independent management of security policies and the portability of each policy. NGINX App Protect raises the security level provided by the API Gateway by adding negative security and advanced security features such as bot detection. The configuration is controlled by a CI/CD pipeline, in this case AWS CodePipeline. The same OpenAPI file used to configure AWS API Gateway is also ingested by the NGINX App Protect API instance. Lastly, the security logs sent by NGINX App Protect through Fluentd to AWS Elasticsearch are displayed in Kibana dashboards.

NGINX App Protect deployment in Kubernetes integrated in CI/CD pipeline

This article describes the configuration used to insert an NGINX Plus with App Protect container into a pod, protecting the application deployed in the pod. This implements the 'per-pod proxy' model, where each pod is augmented with a dedicated, embedded proxy that handles and secures ingress traffic to the pod. Other deployment patterns are also possible:

- NGINX App Protect may be deployed as a load-balancing proxy tier within Kubernetes, in front of the services that require App Protect security and behind the Ingress Controller.
- NGINX App Protect may be deployed externally to the Kubernetes environment.

The advantage of deploying NGINX App Protect within the application pod is that it is very easy to integrate into a Gitlab CI/CD pipeline. For this demo, the Kubernetes Ingress Controller is F5 BIG-IP, together with the F5 BIG-IP controller (k8s-bigip-ctlr), which pushes the configuration using the AS3 declarative model. You could also use NGINX Plus Ingress Controller to load-balance traffic to the application pods:

Embedding the WAF within the application pod also extends protection to internal (East-West) traffic in addition to external (North-South) traffic. It also ensures that the WAF is packaged alongside the application in an easily relocatable format.
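In the per-pod proxy model, the pod has two containers that share a network namespace, which is why the proxy can reach the application on 127.0.0.1. The minimal Python sketch below builds such a Deployment manifest as a dict and prints it as JSON, which `kubectl apply -f -` accepts. Several parts are assumptions:

- the container names;
- the NGINX App Protect image reference, which is a placeholder;
- the JuiceShop image `bkimminich/juice-shop`, assumed to be the public Docker Hub image.

The ConfigMap volume mounts used in the real deployment are omitted for brevity.

```python
import json
from typing import Any, Dict


def per_pod_proxy_deployment(name: str, app_image: str, nap_image: str,
                             replicas: int = 2) -> Dict[str, Any]:
    """Build a Deployment with the application and NGINX App Protect in the same pod."""
    labels = {"app": name}
    return {
        "apiVersion": "apps/v1",
        "kind": "Deployment",
        "metadata": {"name": name, "labels": labels},
        "spec": {
            "replicas": replicas,
            "selector": {"matchLabels": labels},
            "template": {
                "metadata": {"labels": labels},
                "spec": {
                    "containers": [
                        # Application container; the proxy reaches it on
                        # 127.0.0.1:3000 because containers in a pod share
                        # one network namespace.
                        {
                            "name": "juiceshop",
                            "image": app_image,
                            "ports": [{"containerPort": 3000}],
                        },
                        # Embedded proxy on port 80; a Service whose targetPort
                        # is 80 sends ingress traffic through App Protect first.
                        {
                            "name": "nginx-app-protect",
                            "image": nap_image,
                            "ports": [{"containerPort": 80}],
                        },
                    ]
                },
            },
        },
    }


if __name__ == "__main__":
    manifest = per_pod_proxy_deployment(
        "juiceshop",
        "bkimminich/juice-shop",
        "registry.example.com/nginx-plus-app-protect:latest",  # placeholder image
    )
    print(json.dumps(manifest, indent=2))
```

The default of two replicas mirrors the two-member pool described at the end of this article. Each replica carries its own proxy, so scaling the Deployment scales the WAF with it.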
The demo setup referenced in this article uses the following components:

- Gitlab, to deploy the Kubernetes configuration as part of a CI/CD pipeline
- OWASP's vulnerable application JuiceShop, as the App container
- NGINX Plus with the App Protect module, as a container processing ingress traffic
- F5 Container Ingress Services controller (k8s-bigip-ctlr), to listen for configuration changes and reconfigure the F5 BIG-IP via AS3 declarations
- F5 BIG-IP as an Ingress Controller, adding better reporting capabilities and allowing traffic to be sent directly to Kubernetes pods using Calico + BGP

F5 BIG-IP Configuration

To integrate BIG-IP as an Ingress Controller using Calico and BGP, the BIG-IP device needs to be configured as a BGP neighbour to the Kubernetes nodes. For more information on the BIG-IP configuration to integrate with Kubernetes, you can consult CIS and Kubernetes - Part 1: Install Kubernetes and Calico.

F5 Container Ingress Services controller configuration

To configure the F5 CIS controller to load-balance traffic directly to the Pods, the `--pool-member-type=cluster` argument needs to be passed to the controller:

For a complete list of configuration options for CIS, consult F5 BIG-IP Controller for Kubernetes.

CI/CD pipeline configuration

On running the CI/CD pipeline in Gitlab, the following code gets executed:

The main configuration has been split into multiple files:

- staging.j2.vars
- ConfigMapJS.yaml
- ConfigMapNginx.yaml
- ConfigMapWaf.yaml
- serviceJSplusAppProtect.yaml
- deploymentJSplusAppProtect.yaml
- ConfigMapLTM.yaml

ConfigMapJS.yaml contains the JuiceShop configuration, which is out of the scope of the current article.
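The Gitlab pipeline script itself is not reproduced in the article. The helper below is a hypothetical sketch of one way to apply the files listed above. It orders them so that the ConfigMaps exist before the Service and the Deployment that mounts them, because a pod cannot start until its ConfigMap volumes can be mounted. It then builds the matching `kubectl apply` commands without running them. The sketch rests on these assumptions:

- the ranking uses the file-name prefixes;
- `staging.j2.vars` is skipped because it holds template variables rather than a manifest;
- the manifests have already been rendered;
- the target namespace is passed in by the caller.

```python
from typing import List

# Rank by file-name prefix (an assumption based on the file names in this
# article): ConfigMaps first, then the Service, then the Deployment.
_PREFIX_RANK = (("configmap", 0), ("service", 1), ("deployment", 2))


def apply_order(files: List[str]) -> List[str]:
    """Return the YAML manifests in dependency order; non-YAML files are skipped."""
    def rank(filename: str) -> int:
        lower = filename.lower()
        for prefix, value in _PREFIX_RANK:
            if lower.startswith(prefix):
                return value
        return len(_PREFIX_RANK)  # unknown kinds go last

    manifests = [f for f in files if f.endswith(".yaml")]
    # sorted() is stable, so files with the same rank keep their listed order.
    return sorted(manifests, key=rank)


def kubectl_commands(files: List[str], namespace: str) -> List[List[str]]:
    """Build (but do not run) one `kubectl apply` command per manifest."""
    return [["kubectl", "apply", "-n", namespace, "-f", f] for f in apply_order(files)]


if __name__ == "__main__":
    pipeline_files = [
        "staging.j2.vars", "ConfigMapJS.yaml", "ConfigMapNginx.yaml", "ConfigMapWaf.yaml",
        "serviceJSplusAppProtect.yaml", "deploymentJSplusAppProtect.yaml", "ConfigMapLTM.yaml",
    ]
    for cmd in kubectl_commands(pipeline_files, "staging"):  # namespace is hypothetical
        print(" ".join(cmd))
```

Each command could then be executed with `subprocess.run(cmd, check=True)`, so that the job fails on the first manifest that does not apply.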
The deploymentJSplusAppProtect.yaml file describes the JuiceShop application container (port 3000) and the NGINX App Protect container (ports 80 and 443; only port 80 is used in this demo):

ConfigMapNginx.yaml creates the NGINX Plus configuration:

- A server listening on port 80
- The NGINX App Protect module pointing to the waf-policy.json file
- A "backend" server pointing to the same pod (127.0.0.1) on port 3000, which is the JuiceShop application container

The ConfigMapWaf.yaml file contains the NGINX App Protect configuration:

For the purpose of this demo, a very simple configuration was used: the base template with enforcementMode set to "transparent". A more complete NGINX App Protect policy could be defined as follows:

```yaml
apiVersion: v1
kind: ConfigMap
metadata:
  name: nginx-waf
  namespace: production
data:
  waf-policy.json: |
    {
      "policy": {
        "name": "nginx-policy",
        "template": { "name": "POLICY_TEMPLATE_NGINX_BASE" },
        "applicationLanguage": "utf-8",
        "enforcementMode": "blocking",
        "signature-sets": [
          { "name": "All Signatures", "block": false, "alarm": true },
          { "name": "High Accuracy Signatures", "block": true, "alarm": true }
        ],
        "blocking-settings": {
          "violations": [
            { "name": "VIOL_RATING_NEED_EXAMINATION", "alarm": true, "block": true },
            { "name": "VIOL_HTTP_PROTOCOL", "alarm": true, "block": true },
            { "name": "VIOL_FILETYPE", "alarm": true, "block": true },
            { "name": "VIOL_COOKIE_MALFORMED", "alarm": true, "block": false }
          ],
          "http-protocols": [
            { "description": "Body in GET or HEAD requests", "enabled": true },
            { "description": "Check maximum number of headers", "enabled": true, "maxHeaders": 20 },
            { "description": "Check maximum number of parameters", "enabled": true, "maxParams": 500 }
          ],
          "evasions": [
            { "description": "%u decoding", "enabled": true },
            { "description": "Multiple decoding", "enabled": true, "maxDecodingPasses": 2 }
          ]
        },
        "filetypes": [
          { "name": "*", "type": "wildcard", "allowed": true, "responseCheck": true }
        ],
        "data-guard": {
          "enabled": true,
          "maskData": true,
          "creditCardNumbers": true,
          "usSocialSecurityNumbers": true
        },
        "cookies": [
          {
            "name": "*",
            "type": "wildcard",
            "accessibleOnlyThroughTheHttpProtocol": true,
            "attackSignaturesCheck": true,
            "insertSameSiteAttribute": "strict"
          }
        ]
      }
    }
```

The serviceJSplusAppProtect.yaml file contains two things that the BIG-IP side relies on:

- the k8s-bigip-ctlr labels, which let F5 Container Ingress Services track the application address;
- the targetPort, which the BIG-IP Ingress Controller uses to load-balance traffic directly to the Pods.

For more information on Container Ingress Services labels, please consult CIS and AS3 Extension Integration (https://clouddocs.f5.com/containers/v2/kubernetes/kctlr-k8s-as3.html).

The ConfigMapLTM.yaml file defines the AS3 template that k8s-bigip-ctlr fills in by parsing the environment variables and Kubernetes services. The controller then deploys the result on the BIG-IP:

Here VS_IP is sourced from the staging.j2.vars file, and the serverAddresses are discovered by querying Kubernetes:

Running the pipeline results in a Virtual Server deployed in the "staging" administrative partition. Its pool has two members, each being one replica of the NGINX App Protect container (port 80) deployed in front of its own application container. The pool members are the Kubernetes pods themselves, so traffic is load-balanced directly between them rather than being sent to a Kubernetes service. The routes to reach the pool members are learned via BGP.
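The Python sketch below shows the shape of the AS3 declaration that ends up on the BIG-IP once the pod addresses are known. It describes a Virtual Server in the "staging" tenant whose pool members are the pod IPs on port 80. The application, Virtual Server and pool names are hypothetical, as are the sample addresses. In the real setup, the template lives in ConfigMapLTM.yaml and CIS fills in serverAddresses itself.

```python
import json
from typing import Any, Dict, List


def as3_declaration(vs_ip: str, pod_ips: List[str], tenant: str = "staging",
                    app: str = "juiceshop", pool: str = "nap_pool",
                    service_port: int = 80) -> Dict[str, Any]:
    """Build an AS3 declaration whose pool members are pod IPs (cluster mode)."""
    return {
        "class": "AS3",
        "action": "deploy",
        "persist": True,
        "declaration": {
            "class": "ADC",
            "schemaVersion": "3.2.0",
            tenant: {  # an AS3 Tenant maps to a BIG-IP administrative partition
                "class": "Tenant",
                app: {
                    "class": "Application",
                    "template": "generic",
                    "VS_JuiceShop": {  # hypothetical Virtual Server name
                        "class": "Service_HTTP",
                        "virtualAddresses": [vs_ip],
                        "pool": pool,
                    },
                    pool: {
                        "class": "Pool",
                        "monitors": ["http"],
                        # Each member address is a pod running the NGINX App
                        # Protect container; BGP provides the route to it.
                        "members": [{
                            "servicePort": service_port,
                            # Lexicographic sort, so repeated runs produce the same declaration.
                            "serverAddresses": sorted(pod_ips),
                        }],
                    },
                },
            },
        },
    }


if __name__ == "__main__":
    # Illustrative addresses only.
    print(json.dumps(as3_declaration("192.0.2.10", ["10.244.2.7", "10.244.1.5"]), indent=2))
```

Because the members are pod IPs rather than node ports, scaling the Deployment only changes `serverAddresses`. The rest of the declaration stays the same.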